Hung-yi Lee Machine Learning Homework 8: Anomaly Detection

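Contents

Objective and dataset

Dataset

Methodology

Imports

Dataset module

autoencoder

Training

Loading data

Training function

Training

Inference

Answers and discussion

fcn

Shallow model

Deep network

cnn

Residual network

Auxiliary network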
Objective and dataset

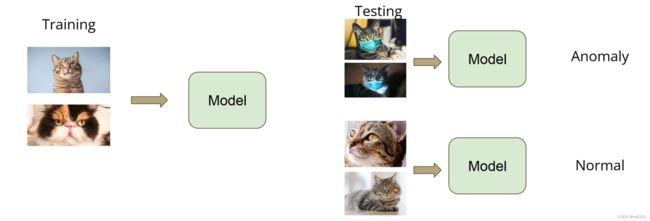

Use an unsupervised model for anomaly detection: decide whether a given image resembles the training images.

Dataset

Training data

- 100000 human faces

- data/trainingset.npy: 100000 images in a NumPy array with shape (100000, 64, 64, 3)

Testing data

- About 10000 from the same distribution as the training data (label 0)

- About 10000 from another distribution (anomalies, label 1)

- data/testingset.npy: 19636 images in a NumPy array with shape (19636, 64, 64, 3)

Methodology

See iwill323's CSDN blog post of Hung-yi Lee machine learning notes on Anomaly Detection (异常侦测).

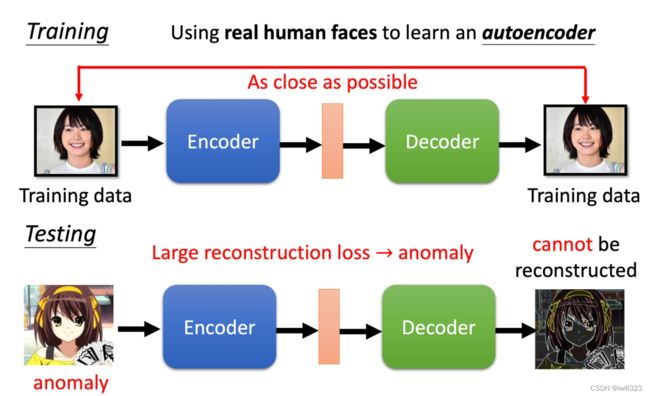

● Train an autoencoder with small reconstruction error.

● During inference, we can use reconstruction error as anomaly score.

○ Anomaly score can be seen as the degree of abnormality of an image.

○ An image from unseen distribution should have higher reconstruction error.

● Anomaly scores are used as our predicted values.

Finally, the model is evaluated with the ROC AUC score.
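To make the scoring pipeline concrete, here is a minimal pure-Python sketch of the two steps: turning a reconstruction into an anomaly score, and computing ROC AUC from scores and labels. The function names and the tiny data are hypothetical illustrations; the score mirrors the root of summed squared error used in the inference code of this post, and the AUC uses its pairwise definition instead of a library call.

```python
import math

def anomaly_score(image, reconstruction):
    """Root of the summed squared error between an image and its reconstruction."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(image, reconstruction)))

def roc_auc(labels, scores):
    """Probability that a random anomaly (label 1) scores higher than a
    random normal sample (label 0); ties count as 0.5."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

# Hypothetical flattened images (values in [-1, 1]) and their reconstructions.
images = [[0.0, 0.5], [0.2, -0.2], [1.0, -1.0], [0.9, 0.8]]
recons = [[0.1, 0.5], [0.2, -0.1], [0.2, -0.3], [0.1, 0.2]]
labels = [0, 0, 1, 1]

scores = [anomaly_score(x, r) for x, r in zip(images, recons)]
auc = roc_auc(labels, scores)
print(scores, auc)
```

Only the ordering of the scores matters for AUC, so any monotonic rescaling of the anomaly score gives the same result.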

Imports

import random

import numpy as np

import torch

from torch import nn

from torch.utils.data import DataLoader, RandomSampler, SequentialSampler, TensorDataset

import torchvision.transforms as transforms

import torch.nn.functional as F

from torch.autograd import Variable

import torchvision.models as models

from torch.optim import Adam, AdamW

import pandas as pd

import os

from d2l import torch as d2l

def same_seeds(seed):
    random.seed(seed)
    np.random.seed(seed)
    torch.manual_seed(seed)
    if torch.cuda.is_available():
        torch.cuda.manual_seed(seed)
        torch.cuda.manual_seed_all(seed)
    torch.backends.cudnn.benchmark = False
    torch.backends.cudnn.deterministic = True

same_seeds(48763)

Dataset module

The transform function here maps image pixel values from [0, 255] to [-1.0, 1.0].
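As a quick check of that mapping, the sketch below applies the same formula to single pixel values and adds an inverse. The inverse helper is my own hypothetical addition, handy for viewing a reconstruction, since every decoder in this post ends in Tanh and therefore outputs values in [-1.0, 1.0].

```python
def to_model_range(pixel):
    """Map a pixel value in [0, 255] to [-1.0, 1.0] (same formula as the transform)."""
    return 2.0 * pixel / 255.0 - 1.0

def to_pixel_range(value):
    """Hypothetical inverse: map a value in [-1.0, 1.0] back to an integer pixel."""
    return round((value + 1.0) * 255.0 / 2.0)

print(to_model_range(0), to_model_range(255), to_pixel_range(to_model_range(128)))
```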

class CustomTensorDataset(TensorDataset):
    """TensorDataset with support of transforms."""

    def __init__(self, tensors):
        self.tensors = tensors
        if tensors.shape[-1] == 3:
            # NHWC -> NCHW
            self.tensors = tensors.permute(0, 3, 1, 2)

        self.transform = transforms.Compose([
            transforms.Lambda(lambda x: x.to(torch.float32)),
            transforms.Lambda(lambda x: 2. * x / 255. - 1.),
        ])

    def __getitem__(self, index):
        x = self.tensors[index]
        if self.transform:
            # mapping images to [-1.0, 1.0]
            x = self.transform(x)
        return x

    def __len__(self):
        return len(self.tensors)

autoencoder

The original code: a fully connected network, a convolutional network, and a VAE.

class fcn_autoencoder(nn.Module):

def __init__(self):

super(fcn_autoencoder, self).__init__()

self.encoder = nn.Sequential(

nn.Linear(64 * 64 * 3, 128),

nn.ReLU(),

nn.Linear(128, 64),

nn.ReLU(),

nn.Linear(64, 12),

nn.ReLU(),

nn.Linear(12, 3)

)

self.decoder = nn.Sequential(

nn.Linear(3, 12),

nn.ReLU(),

nn.Linear(12, 64),

nn.ReLU(),

nn.Linear(64, 128),

nn.ReLU(),

nn.Linear(128, 64 * 64 * 3),

nn.Tanh()

)

def forward(self, x):

x = self.encoder(x)

x = self.decoder(x)

return x

class conv_autoencoder(nn.Module):

def __init__(self):

super(conv_autoencoder, self).__init__()

self.encoder = nn.Sequential(

nn.Conv2d(3, 12, 4, stride=2, padding=1),

nn.ReLU(),

nn.Conv2d(12, 24, 4, stride=2, padding=1),

nn.ReLU(),

nn.Conv2d(24, 48, 4, stride=2, padding=1),

nn.ReLU(),

)

self.decoder = nn.Sequential(

nn.ConvTranspose2d(48, 24, 4, stride=2, padding=1),

nn.ReLU(),

nn.ConvTranspose2d(24, 12, 4, stride=2, padding=1),

nn.ReLU(),

nn.ConvTranspose2d(12, 3, 4, stride=2, padding=1),

nn.Tanh(),

)

def forward(self, x):

x = self.encoder(x)

x = self.decoder(x)

return x

class VAE(nn.Module):

def __init__(self):

super(VAE, self).__init__()

self.encoder = nn.Sequential(

nn.Conv2d(3, 12, 4, stride=2, padding=1),

nn.ReLU(),

nn.Conv2d(12, 24, 4, stride=2, padding=1),

nn.ReLU(),

)

self.enc_out_1 = nn.Sequential(

nn.Conv2d(24, 48, 4, stride=2, padding=1),

nn.ReLU(),

)

self.enc_out_2 = nn.Sequential(

nn.Conv2d(24, 48, 4, stride=2, padding=1),

nn.ReLU(),

)

self.decoder = nn.Sequential(

nn.ConvTranspose2d(48, 24, 4, stride=2, padding=1),

nn.ReLU(),

nn.ConvTranspose2d(24, 12, 4, stride=2, padding=1),

nn.ReLU(),

nn.ConvTranspose2d(12, 3, 4, stride=2, padding=1),

nn.Tanh(),

)

def encode(self, x):

h1 = self.encoder(x)

return self.enc_out_1(h1), self.enc_out_2(h1)

def reparametrize(self, mu, logvar):

std = torch.exp(0.5 * logvar)

# sample the noise on the same device and with the same dtype as std

eps = torch.randn_like(std)

return mu + eps * std

def decode(self, z):

return self.decoder(z)

def forward(self, x):

mu, logvar = self.encode(x)

z = self.reparametrize(mu, logvar)

return self.decode(z), mu, logvar

def loss_vae(recon_x, x, mu, logvar, criterion):
    """
    recon_x: generated images
    x: original images
    mu: latent mean
    logvar: latent log variance
    """
    mse = criterion(recon_x, x)
    # KL divergence to N(0, I): -0.5 * sum(1 + logvar - mu^2 - exp(logvar))
    KLD_element = mu.pow(2).add_(logvar.exp()).mul_(-1).add_(1).add_(logvar)
    KLD = torch.sum(KLD_element).mul_(-0.5)
    return mse + KLD

Training

Loading data

# load data

train = np.load('../input/ml2022spring-hw8/data/trainingset.npy', allow_pickle=True)

test = np.load('../input/ml2022spring-hw8/data/testingset.npy', allow_pickle=True)

print(train.shape)

print(test.shape)

# Build training dataloader

batch_size = 256

num_workers = 2

x = torch.from_numpy(train)

train_dataset = CustomTensorDataset(x)

train_dataloader = DataLoader(train_dataset, shuffle=True, batch_size=batch_size, num_workers=num_workers, pin_memory=True, drop_last=True)

print('Training set size: {:d}, number of batches: {:.2f}'.format(len(train_dataset), len(train_dataset) / batch_size))

Training function

def trainer(model, config, train_dataloader, devices):
    best_loss = np.inf
    num_epochs = config['num_epochs']
    model_type = config['model_type']

    # Loss and optimizer
    criterion = nn.MSELoss()
    optimizer = torch.optim.Adam(model.parameters(), lr=config['lr'])
    scheduler = torch.optim.lr_scheduler.CosineAnnealingWarmRestarts(
        optimizer, T_0=config['T_0'], T_mult=config['T_2'],
        eta_min=config['lr'] / config['ratio_min'])

    if not os.path.isdir('./' + config['model_path'].split('/')[1]):
        os.mkdir('./' + config['model_path'].split('/')[1])  # Create the directory for saved models.

    model.train()
    legend = ['train loss']
    animator = d2l.Animator(xlabel='epoch', xlim=[0, num_epochs], legend=legend)
    for epoch in range(num_epochs):
        tot_loss = 0.0
        for data in train_dataloader:
            img = data.float().to(devices[0])
            if model_type in ['fcn']:
                img = img.view(img.shape[0], -1)

            output = model(img)
            if model_type in ['vae']:
                loss = loss_vae(output[0], img, output[1], output[2], criterion)
            else:
                loss = criterion(output, img)

            tot_loss += loss.item()
            optimizer.zero_grad()
            loss.backward()
            optimizer.step()

        scheduler.step()
        mean_loss = tot_loss / len(train_dataloader)
        print(epoch, mean_loss)
        animator.add(epoch, (mean_loss))
        if mean_loss < best_loss:
            best_loss = mean_loss
            torch.save(model.state_dict(), config['model_path'])

Training

devices = d2l.try_all_gpus()

print(f'DEVICE: {devices}')

config = {
    "num_epochs": 240,
    "lr": 8e-4,
    "model_type": 'cnn',  # selecting a model type from {'cnn', 'fcn', 'vae', 'resnet'}
    "T_0": 2,
    "T_2": 2,
    'ratio_min': 20
}

config['model_path'] = './models/' + config['model_type']

# The convolutional class defined above is named conv_autoencoder
model_classes = {'fcn': fcn_autoencoder(), 'cnn': conv_autoencoder(), 'vae': VAE()}
model = model_classes[config['model_type']].to(devices[0])
trainer(model, config, train_dataloader, devices)

Inference

train = np.load("../data/ml2022spring-hw8/data/trainingset.npy", allow_pickle=True)

test = np.load('../data/ml2022spring-hw8/data/testingset.npy', allow_pickle=True)

# build testing dataloader

eval_batch_size = 1024

data = torch.tensor(test, dtype=torch.float32)

test_dataset = CustomTensorDataset(data)

test_dataloader = DataLoader(test_dataset, shuffle=False, batch_size=eval_batch_size, num_workers=0, pin_memory=True)

print('Test set size: {:d}, number of batches: {:.2f}'.format(len(test_dataset), len(test_dataset) / eval_batch_size))

eval_loss = nn.MSELoss(reduction='none')

# load trained model

model= model_classes[config['model_type']].to(devices[0])

model.load_state_dict(torch.load(config['model_path']))  # path written by trainer()

model.eval()

# prediction file

out_file = 'prediction-cnn_res.csv'

anomality = []
with torch.no_grad():
    for i, data in enumerate(test_dataloader):
        img = data.float().to(devices[0])
        if config['model_type'] in ['fcn']:
            img = img.view(img.shape[0], -1)
        output = model(img)
        if config['model_type'] in ['vae']:
            output = output[0]
        # The VAE must also fall through to the loss computation below
        if config['model_type'] in ['fcn']:
            loss = eval_loss(output, img).sum(-1)
        else:
            loss = eval_loss(output, img).sum([1, 2, 3])
        anomality.append(loss)

anomality = torch.cat(anomality, axis=0)
anomality = torch.sqrt(anomality).reshape(len(test), 1).cpu().numpy()

df = pd.DataFrame(anomality, columns=['score'])
df.to_csv(out_file, index_label = 'ID')

Answers and discussion

fcn

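Shallow model

Following the CSDN blog post "李宏毅2022机器学习HW8解析" by 机器学习手艺人, I use a shallow network whose first hidden layer is very wide, which should help it extract useful features from the samples before the width is gradually reduced. The original encoder outputs a 3-dimensional vector; here it is changed to 10 dimensions, which should represent the image data distribution better.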

class fcn_autoencoder(nn.Module):

def __init__(self):

super(fcn_autoencoder, self).__init__()

self.encoder = nn.Sequential(

nn.Linear(64 * 64 * 3, 1024),

nn.ReLU(),

nn.Linear(1024, 256),

nn.ReLU(),

nn.Linear(256, 64),

nn.ReLU(),

nn.Linear(64, 10)

)

self.decoder = nn.Sequential(

nn.Linear(10, 64),

nn.ReLU(),

nn.Linear(64, 256),

nn.ReLU(),

nn.Linear(256, 1024),

nn.ReLU(),

nn.Linear(1024, 64 * 64 * 3),

nn.Tanh()

)

def forward(self, x):

x = self.encoder(x)

x = self.decoder(x)

return x

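The model has 25,739,146 parameters and a size of 98.46MB. That looks large, yet training feels slightly faster than the deep network and the CNN below. I trained it with several sets of hyperparameters; with more careful training the score could go higher.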

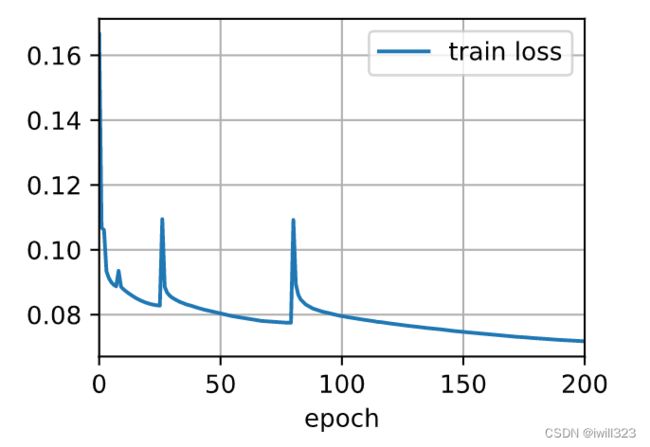

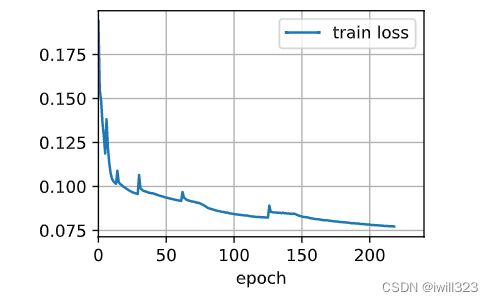

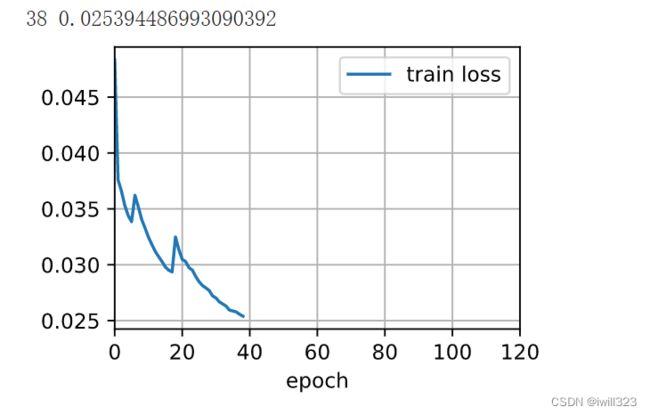

1. My most commonly used settings: training is smooth and the result is fairly good.

config = {

"num_epochs": 200,

"lr": 1e-3,

"model_type": 'fcn',

"T_0": 2,

"T_2": 3,

'ratio_min': 50

}

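2. The loss seemed to fall too slowly at the end of each cycle, and I suspected the learning rate in the second half of each cycle was too small, so I changed ratio_min from 50 to 10 (which raises eta_min). The loss curve does look somewhat steeper, but the final result is worse than the previous one. A possible reason: with ratio_min=50 the learning rate is high in the first half of a cycle, so the loss drops quickly, and the second half can keep learning at a low rate. The slow drop at the end of a cycle is only an impression created by the fast drop at its start. With ratio_min=10 the learning rate stays fairly high throughout the cycle, so the drop is spread over the whole cycle instead of being concentrated at its start.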

config = {

"num_epochs": 10,

"lr": 8e-4,

"model_type": 'fcn',

"T_0": 2,

"T_2": 3,

'ratio_min': 10

}

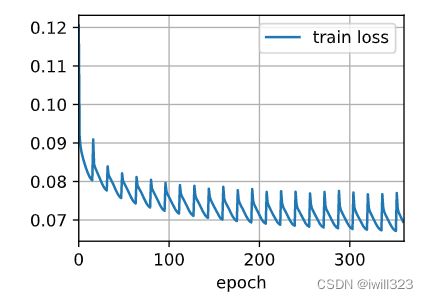

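3. Fixed cycle length. Early on the loss falls faster than in the previous two runs, possibly because several learning-rate cycles are tried within a small number of epochs, so a suitable learning rate is reached sooner. Later the loss falls very slowly: it fluctuates strongly, and much of the computation is spent pulling the loss back down from the peaks it climbs to.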

config = {

"num_epochs": 360,

"lr": 1e-3,

"model_type": 'fcn',

"T_0": 16,

"T_2": 1,

'ratio_min': 50

}

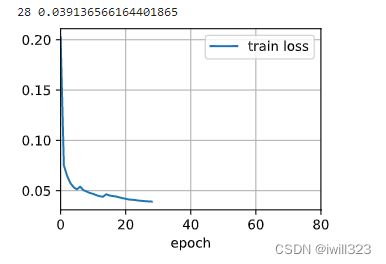
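To see what the three settings above do to the learning rate, here is a small pure-Python sketch of the cosine annealing with warm restarts formula. It assumes the scheduler is stepped once per epoch, and the function name is hypothetical; the parameters map to the config keys lr, T_0, T_2 (passed as T_mult) and ratio_min.

```python
import math

def cosine_warm_restart_lr(epoch, base_lr, T_0, T_mult, ratio_min):
    """Learning rate used in `epoch`, assuming one scheduler step per epoch."""
    eta_min = base_lr / ratio_min
    T_i, T_cur = T_0, epoch
    while T_cur >= T_i:  # skip the cycles that are already complete
        T_cur -= T_i
        T_i *= T_mult
    return eta_min + 0.5 * (base_lr - eta_min) * (1 + math.cos(math.pi * T_cur / T_i))

# Setting 1: cycle lengths grow as 2, 6, 18, ... epochs
lrs_growing = [cosine_warm_restart_lr(e, 1e-3, 2, 3, 50) for e in range(10)]
# Setting 3: every cycle lasts 16 epochs
lrs_fixed = [cosine_warm_restart_lr(e, 1e-3, 16, 1, 50) for e in range(40)]

for e, lr in enumerate(lrs_growing):
    print(e, f"{lr:.6f}")
```

With growing cycles the restarts become rare, so later epochs spend long stretches at a low learning rate. With a fixed T_0 the rate jumps back to its maximum every 16 epochs, which is consistent with the repeated loss spikes described in item 3.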

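Deep network

It is not actually very deep. Deeper networks are generally preferred over wider ones, so I deepened the network. It has 13,078,314 parameters and a size of 50.16MB, about half of the previous model, and it performs slightly worse: the shallow network easily exceeds a score of 0.76, while the deep network at best slightly exceeds 0.75. Given the large difference in parameter count, this seems acceptable.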
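The two parameter counts can be reproduced by hand from the layer widths. The sketch below is a small hypothetical helper (not part of the original code) that counts weights and biases of the Linear layers, using the widths of the shallow model above and of the deep model defined next.

```python
def linear_param_count(widths):
    """Weights plus biases of a stack of fully connected layers with these widths."""
    return sum(n_in * n_out + n_out for n_in, n_out in zip(widths, widths[1:]))

def autoencoder_param_count(encoder_widths):
    """The decoder mirrors the encoder, so its widths are the encoder's reversed."""
    return linear_param_count(encoder_widths) + linear_param_count(encoder_widths[::-1])

shallow = autoencoder_param_count([64 * 64 * 3, 1024, 256, 64, 10])
deep = autoencoder_param_count([64 * 64 * 3, 512, 256, 256, 128, 64, 32, 16, 10])
ratio = deep / shallow
print(shallow, deep, round(ratio, 3))
```

Almost all parameters sit in the first encoder layer and the last decoder layer, which is why halving the first hidden width (1024 to 512) roughly halves the model even though it has twice as many layers.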

class fcn_autoencoder(nn.Module):

def __init__(self):

super(fcn_autoencoder, self).__init__()

self.encoder = nn.Sequential(

nn.Linear(64 * 64 * 3, 512),

nn.ReLU(),

nn.Linear(512, 256),

nn.ReLU(),

nn.Linear(256, 256),

nn.ReLU(),

nn.Linear(256, 128),

nn.ReLU(),

nn.Linear(128, 64),

nn.ReLU(),

nn.Linear(64, 32),

nn.ReLU(),

nn.Linear(32, 16),

nn.ReLU(),

nn.Linear(16, 10)

)

self.decoder = nn.Sequential(

nn.Linear(10, 16),

nn.ReLU(),

nn.Linear(16, 32),

nn.ReLU(),

nn.Linear(32, 64),

nn.ReLU(),

nn.Linear(64, 128),

nn.ReLU(),

nn.Linear(128, 256),

nn.ReLU(),

nn.Linear(256, 256),

nn.ReLU(),

nn.Linear(256, 512),

nn.ReLU(),

nn.Linear(512, 64 * 64 * 3),

nn.Tanh()

)

def forward(self, x):

x = self.encoder(x)

x = self.decoder(x)

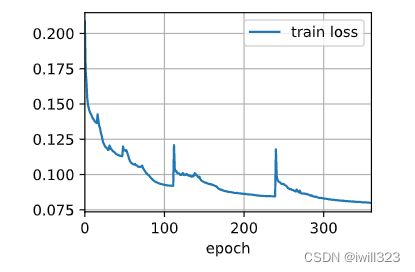

return x采用了几种超参数,优化结果差不多。

config = {

"num_epochs": 240,

"lr": 8e-4,

"model_type": 'fcn',

"T_0": 16,

"T_2": 1,

'ratio_min': 50

}

config = {

"num_epochs": 240,

"lr": 1e-3,

"model_type": 'fcn',

"T_0": 2,

"T_2": 3,

'ratio_min': 50

}

config = {

"num_epochs": 240,

"lr": 8e-4,

"model_type": 'fcn',

"T_0": 2,

"T_2": 2,

'ratio_min': 5

}

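The configuration below essentially emulates a fixed learning rate. In the initial phase the loss drops quickly, whereas the scheduler used in the previous examples actually slowed it down. So one option is to start with a relatively large learning rate and switch to a scheduler once the loss stops dropping quickly.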

config = {

"num_epochs": 240,

"lr": 8e-4,

"model_type": 'fcn',

"T_0": 1,

"T_2": 1,

'ratio_min': 5

}

Deepening the network further leaves the performance essentially unchanged.

class fcn_autoencoder(nn.Module):

def __init__(self):

super(fcn_autoencoder, self).__init__()

self.encoder = nn.Sequential(

nn.Linear(64 * 64 * 3, 512),

nn.ReLU(),

nn.Linear(512, 256),

nn.ReLU(),

nn.Linear(256, 256),

nn.ReLU(),

nn.Linear(256, 256),

nn.ReLU(),

nn.Linear(256, 256),

nn.ReLU(),

nn.Linear(256, 128),

nn.ReLU(),

nn.Linear(128, 64),

nn.ReLU(),

nn.Linear(64, 32),

nn.ReLU(),

nn.Linear(32, 16),

nn.ReLU(),

nn.Linear(16, 10)

)

self.decoder = nn.Sequential(

nn.Linear(10, 16),

nn.ReLU(),

nn.Linear(16, 32),

nn.ReLU(),

nn.Linear(32, 64),

nn.ReLU(),

nn.Linear(64, 128),

nn.ReLU(),

nn.Linear(128, 256),

nn.ReLU(),

nn.Linear(256, 256),

nn.ReLU(),

nn.Linear(256, 256),

nn.ReLU(),

nn.Linear(256, 256),

nn.ReLU(),

nn.Linear(256, 512),

nn.ReLU(),

nn.Linear(512, 64 * 64 * 3),

nn.Tanh()

)

def forward(self, x):

x = self.encoder(x)

x = self.decoder(x)

return xcnn

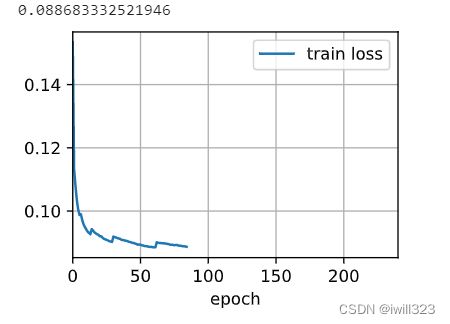

采用李宏毅2022机器学习HW8解析_机器学习手艺人的博客-CSDN博客设计。loss能降到0.88左右,得分约为0.75。值得一提的是,参数个数是601,885个,大小为3.91MB,和前面13,078,314个参数参数的全连接网络表现几乎一样,但是参数个数仅为后者的20分之一,可见cnn网络在图像任务上的威力。
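The 96*4*4 input size of the first Linear layer in the encoder below follows from the convolution arithmetic. Here is a minimal sketch of that calculation, assuming dilation 1 and output_padding 0 (the PyTorch defaults); the helper names are hypothetical.

```python
def conv_out(size, kernel=4, stride=2, padding=1):
    """Output side length of nn.Conv2d with dilation 1."""
    return (size + 2 * padding - kernel) // stride + 1

def conv_transpose_out(size, kernel=4, stride=2, padding=1):
    """Output side length of nn.ConvTranspose2d with dilation 1, output_padding 0."""
    return (size - 1) * stride - 2 * padding + kernel

# Encoder: four stride-2 convolutions on a 64x64 image
sizes = [64]
for _ in range(4):
    sizes.append(conv_out(sizes[-1]))
flat_features = 96 * sizes[-1] ** 2  # channels * height * width after the last conv

# Decoder: four stride-2 transposed convolutions starting from 4x4
up = [sizes[-1]]
for _ in range(4):
    up.append(conv_transpose_out(up[-1]))

print(sizes, flat_features, up)
```

Each (kernel 4, stride 2, padding 1) layer halves the side length in the encoder and doubles it in the decoder, so the reconstruction has the same 64x64 size as the input.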

class conv_autoencoder(nn.Module):

def __init__(self):

super(conv_autoencoder, self).__init__()

self.encoder = nn.Sequential(

nn.Conv2d(3, 24, 4, stride=2, padding=1),

nn.BatchNorm2d(24),

nn.ReLU(),

nn.Conv2d(24, 24, 4, stride=2, padding=1),

nn.BatchNorm2d(24),

nn.ReLU(),

nn.Conv2d(24, 48, 4, stride=2, padding=1),

nn.BatchNorm2d(48),

nn.ReLU(),

nn.Conv2d(48, 96, 4, stride=2, padding=1),

nn.BatchNorm2d(96),

nn.ReLU(),

nn.Flatten(),

nn.Dropout(0.2),

nn.Linear(96*4*4, 128),

nn.BatchNorm1d(128),

nn.Dropout(0.2),

nn.Linear(128, 10),

nn.BatchNorm1d(10),

nn.ReLU()

)

self.decoder = nn.Sequential(

nn.Linear(10, 128),

nn.BatchNorm1d(128),

nn.ReLU(),

nn.Linear(128, 96*4*4),

nn.BatchNorm1d(96*4*4),

nn.ReLU(),

nn.Unflatten(1, (96, 4, 4)),

nn.ConvTranspose2d(96, 48, 4, stride=2, padding=1),

nn.BatchNorm2d(48),

nn.ReLU(),

nn.ConvTranspose2d(48, 24, 4, stride=2, padding=1),

nn.BatchNorm2d(24),

nn.ReLU(),

nn.ConvTranspose2d(24, 12, 4, stride=2, padding=1),

nn.BatchNorm2d(12),

nn.ReLU(),

nn.ConvTranspose2d(12, 3, 4, stride=2, padding=1),

nn.Tanh(),

)

def forward(self, x):

x = self.encoder(x)

x = self.decoder(x)

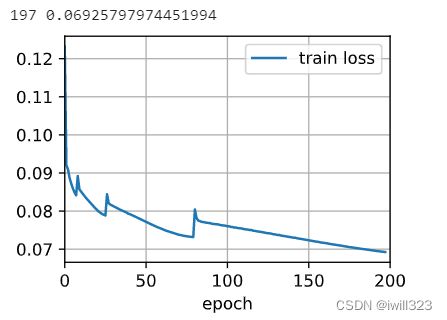

return x 下图是不使用scheduler,采用固定学习率。

config = {

"num_epochs": 240,

"lr": 8e-4,

"model_type": 'cnn', # selecting a model type from {'cnn', 'fcn', 'vae', 'resnet'}

"T_0": 2,

"T_2": 2,

'ratio_min': 10

}

Using StepLR to adjust the learning rate, with lr=5e-3 and scheduler = torch.optim.lr_scheduler.StepLR(optimizer, step_size=6, gamma=0.8)
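For reference, the learning rate this StepLR setting produces can be written in closed form. The sketch below assumes the scheduler is stepped once per epoch and that training runs for 240 epochs as in the config above; the function name is hypothetical.

```python
def step_lr(epoch, base_lr=5e-3, step_size=6, gamma=0.8):
    """Learning rate used in `epoch` when StepLR is stepped once per epoch."""
    return base_lr * gamma ** (epoch // step_size)

for e in (0, 6, 60, 120, 239):
    print(e, f"{step_lr(e):.2e}")
```

Under these assumptions the rate is multiplied by 0.8 every 6 epochs, so by the last epochs it is below 1e-6 and the model is barely updated any more.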

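Residual network

The encoder is exactly the resnet18 network, and the decoder is roughly a mirror image of the encoder. The model has 14,053,571 parameters, which does not look like many, but training is rather slow.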

class Residual_Block(nn.Module):

def __init__(self, ic, oc, stride=1):

super().__init__()

self.conv1 = nn.Sequential(

nn.Conv2d(ic, oc, kernel_size=3, padding=1, stride=stride),

nn.BatchNorm2d(oc),

nn.ReLU(inplace=True)

)

self.conv2 = nn.Sequential(

nn.Conv2d(oc, oc, kernel_size=3, padding=1),

nn.BatchNorm2d(oc)

)

self.relu = nn.ReLU(inplace=True)

if stride != 1 or (ic != oc): # for resnet18 the stride != 1 condition is not strictly needed

self.conv3 = nn.Sequential(

nn.Conv2d(ic, oc, kernel_size=1, stride=stride),

nn.BatchNorm2d(oc)

)

else:

self.conv3 = None

def forward(self, X):

Y = self.conv1(X)

Y = self.conv2(Y)

if self.conv3:

X = self.conv3(X)

Y += X

return self.relu(Y)

class ResNet(nn.Module):

def __init__(self, block=Residual_Block, num_layers = [2,2,2,2], num_classes=32):

super().__init__()

self.preconv = nn.Sequential(

nn.Conv2d(3, 64, kernel_size=7, stride=2, padding=3),

nn.BatchNorm2d(64),

nn.ReLU(),

nn.MaxPool2d(kernel_size=3, stride=2, padding=1)

)

self.layer0 = self.make_residual(block, 64, 64, num_layers[0]) # [1, 64, 16, 16]

self.layer1 = self.make_residual(block, 64, 128, num_layers[1], stride=2) # [1, 128, 8, 8]

self.layer2 = self.make_residual(block, 128, 256, num_layers[2], stride=2) # [1, 256, 4, 4]

self.layer3 = self.make_residual(block, 256, 512, num_layers[3], stride=2) # [1, 512, 2, 2]

self.postliner = nn.Sequential(

nn.AdaptiveAvgPool2d((1,1)),

nn.Flatten(),

nn.Linear(512, num_classes) # [1, num_classes]

)

self.decoder = nn.Sequential(

nn.Linear(num_classes, 512*2*2), # [1, 512*2*2]

# nn.BatchNorm1d(512*2*2),

nn.ReLU(),

nn.Unflatten(1, (512, 2, 2)), # [1, 512, 2, 2]

nn.ConvTranspose2d(512, 256, 4, stride=2, padding=1), # [1, 256, 4, 4]

nn.BatchNorm2d(256),

nn.ReLU(),

nn.ConvTranspose2d(256, 128, 4, stride=2, padding=1), # [1, 128, 8, 8]

nn.BatchNorm2d(128),

nn.ReLU(),

nn.ConvTranspose2d(128, 64, 4, stride=2, padding=1), # [1, 64, 16, 16]

nn.BatchNorm2d(64),

nn.ReLU(),

nn.ConvTranspose2d(64, 32, 4, stride=2, padding=1), # [1, 32, 32, 32]

nn.BatchNorm2d(32),

nn.ReLU(),

nn.ConvTranspose2d(32, 3, 4, stride=2, padding=1), # [1, 3, 64, 64]

nn.Tanh(),

)

def make_residual(self, block, ic, oc, num_layer, stride=1):

layers = []

layers.append(block(ic, oc, stride))

for i in range(1, num_layer):

layers.append(block(oc, oc))

return nn.Sequential(*layers)

def encoder(self, x):

x = self.preconv(x)

x = self.layer0(x) # 16*16 --> 16*16 (preconv already reduced 64*64 to 16*16)

x = self.layer1(x) # 16*16 --> 8*8

x = self.layer2(x) # 8*8 --> 4*4

x = self.layer3(x) # 4*4 --> 2*2

x = self.postliner(x)

return x

def forward(self, x):

x = self.encoder(x)

x = self.decoder(x)

return x

config = {

"num_epochs": 240,

"lr": 8e-4,

"model_type": 'cnn', # selecting a model type from {'cnn', 'fcn', 'vae', 'resnet'}

"T_0": 2,

"T_2": 2,

'ratio_min': 20

}

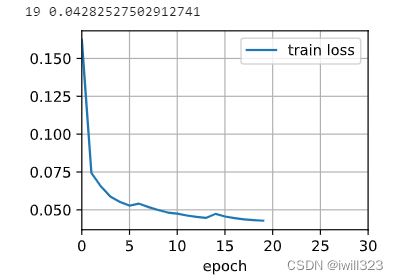

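The loss drops to slightly above 0.02, but the score is only 0.744. I suspected the training loss was computed incorrectly, so I checked it on some data, and the loss really is that low.

Below is the approach from the CSDN blog post "李宏毅2022机器学习HW8解析" by 机器学习手艺人, which makes the residual network shallower and narrower. Its loss is about 0.04 and its score is about 0.76. This is puzzling: the fcn network has a loss of 0.07 and a score of 0.76, so why does the score stay flat, or even drop, when the residual network reaches a lower loss? Does this mean that better image reconstruction by the autoencoder does not imply better anomaly detection?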
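One possible explanation is that ROC AUC depends only on how anomalies rank relative to normal images, not on how small the errors are. The toy sketch below uses made-up per-image errors (hypothetical numbers, not measurements from these models) to show a model with a lower mean error on normal images and a lower AUC, because it also reconstructs the anomalies well.

```python
def roc_auc(normal_scores, anomaly_scores):
    """Fraction of (anomaly, normal) pairs ranked correctly; ties count as 0.5."""
    wins = sum((a > n) + 0.5 * (a == n) for a in anomaly_scores for n in normal_scores)
    return wins / (len(normal_scores) * len(anomaly_scores))

def mean(xs):
    return sum(xs) / len(xs)

# Hypothetical reconstruction errors per image.
weak_model = {"normal": [0.06, 0.07, 0.08, 0.09], "anomaly": [0.08, 0.10, 0.11, 0.12]}
strong_model = {"normal": [0.01, 0.02, 0.03, 0.04], "anomaly": [0.02, 0.03, 0.03, 0.05]}

auc_weak = roc_auc(weak_model["normal"], weak_model["anomaly"])
auc_strong = roc_auc(strong_model["normal"], strong_model["anomaly"])
print(mean(weak_model["normal"]), auc_weak)
print(mean(strong_model["normal"]), auc_strong)
```

If this is what happens here, a more powerful autoencoder generalizes well enough to reconstruct unseen faces too, which shrinks the gap between the two error distributions even as the training loss falls.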

class Residual_Block(nn.Module):

def __init__(self, ic, oc, stride=1):

super().__init__()

self.conv1 = nn.Sequential(

nn.Conv2d(ic, oc, kernel_size=3, stride=stride, padding=1),

nn.BatchNorm2d(oc),

nn.ReLU(inplace=True)

)

self.conv2 = nn.Sequential(

nn.Conv2d(oc, oc, kernel_size=3, stride=1, padding=1),

nn.BatchNorm2d(oc),

)

self.relu = nn.ReLU(inplace=True)

self.downsample = None

if stride != 1 or (ic != oc):

self.downsample = nn.Sequential(

nn.Conv2d(ic, oc, kernel_size=1, stride=stride),

nn.BatchNorm2d(oc),

)

def forward(self, x):

residual = x

out = self.conv1(x)

out = self.conv2(out)

if self.downsample:

residual = self.downsample(x)

out += residual

return self.relu(out)

class ResNet(nn.Module):

def __init__(self, block=Residual_Block, num_layers=[2, 1, 1, 1]):

super().__init__()

self.preconv = nn.Sequential(

nn.Conv2d(3, 32, kernel_size=3, stride=1, padding=1, bias=False),

nn.BatchNorm2d(32),

nn.ReLU(inplace=True),

)

self.layer0 = self.make_residual(block, 32, 64, num_layers[0], stride=2)

self.layer1 = self.make_residual(block, 64, 128, num_layers[1], stride=2)

self.layer2 = self.make_residual(block, 128, 128, num_layers[2], stride=2)

self.layer3 = self.make_residual(block, 128, 64, num_layers[3], stride=2)

self.fc = nn.Sequential(

nn.Flatten(),

nn.Dropout(0.2),

nn.Linear(64*4*4, 64),

nn.BatchNorm1d(64),

nn.ReLU(inplace=True),

)

self.decoder = nn.Sequential(

nn.Linear(64, 64*4*4),

nn.BatchNorm1d(64*4*4),

nn.ReLU(),

nn.Unflatten(1, (64, 4, 4)),

nn.ConvTranspose2d(64, 128, 4, stride=2, padding=1),

nn.BatchNorm2d(128),

nn.ReLU(),

nn.ConvTranspose2d(128, 128, 4, stride=2, padding=1),

nn.BatchNorm2d(128),

nn.ReLU(),

nn.ConvTranspose2d(128, 128, 4, stride=2, padding=1),

nn.BatchNorm2d(128),

nn.ReLU(),

nn.ConvTranspose2d(128, 3, 4, stride=2, padding=1),

nn.Tanh(),

)

def make_residual(self, block, ic, oc, num_layer, stride=1):

layers = []

layers.append(block(ic, oc, stride))

for i in range(1, num_layer):

layers.append(block(oc, oc))

return nn.Sequential(*layers)

def encoder(self, x):

x = self.preconv(x)

x = self.layer0(x) #64*64 --> 32*32

x = self.layer1(x) #32*32 --> 16*16

x = self.layer2(x) #16*16 --> 8*8

x = self.layer3(x) #8*8 --> 4*4

x = self.fc(x)

return x

def forward(self, x):

x = self.encoder(x)

x = self.decoder(x)

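return x

Auxiliary network

Following the CSDN blog post "李宏毅2022机器学习HW8解析" by 机器学习手艺人, an extra decoder is used as an auxiliary network. The ResNet is trained exactly as before, and the auxiliary network has the same structure as the decoder of the ResNet. I do not fully understand this approach yet, so I record it here for now.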
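As a reading aid, the auxiliary loss used in the training function below can be reduced to scalars. This sketch is only my reading of that code, with a hypothetical function name: the reconstruction error of the auxiliary decoder against the input is weighted by an exponential of its distance to the main decoder's output, and the weight sharpens as the temperature grows with the epoch.

```python
import math

def aux_loss(mse_aux_vs_recon, mse_aux_vs_img, epoch):
    """Scalar version of loss_a: exp(temperature * MSE(aux, recon)) * MSE(aux, img)."""
    temperature = epoch // 2 + 1
    return math.exp(temperature * mse_aux_vs_recon) * mse_aux_vs_img

# Same errors, later epoch: the penalty for disagreeing with the main decoder grows.
for epoch in (0, 10, 28):
    print(epoch, aux_loss(0.1, 0.05, epoch))
```

When the auxiliary output matches the main decoder exactly, the weight is 1 and the loss is the plain reconstruction error. Otherwise the mismatch is penalized more and more strongly in later epochs.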

Training function

def trainer(model, config, train_dataloader, devices):
    best_loss = np.inf
    num_epochs = config['num_epochs']
    model_type = config['model_type']

    # Loss and optimizer (aux is the global auxiliary network created in the configuration step)
    criterion = nn.MSELoss()
    optimizer = torch.optim.Adam(model.parameters(), lr=config['lr'])
    optimizer_a = torch.optim.AdamW(aux.parameters(), lr=config['lr'])
    scheduler = torch.optim.lr_scheduler.CosineAnnealingWarmRestarts(
        optimizer, T_0=config['T_0'], T_mult=config['T_2'],
        eta_min=config['lr'] / config['ratio_min'])

    if not os.path.isdir('./' + config['model_path'].split('/')[1]):
        os.mkdir('./' + config['model_path'].split('/')[1])  # Create the directory for saved models.

    aux.train()
    legend = ['train loss']
    animator = d2l.Animator(xlabel='epoch', xlim=[0, num_epochs], legend=legend)
    for epoch in range(num_epochs):
        tot_loss, tot_loss_a = 0.0, 0.0
        temperature = epoch // 2 + 1
        for data in train_dataloader:
            img = data.float().to(devices[0])
            if model_type in ['fcn']:
                img = img.view(img.shape[0], -1)

            # ===================train autoencoder=====================
            model.train()
            output = model(img)
            if model_type in ['vae']:
                loss = loss_vae(output[0], img, output[1], output[2], criterion)
            else:
                loss = criterion(output, img)

            tot_loss += loss.item()
            optimizer.zero_grad()
            loss.backward()
            optimizer.step()

            # ===================train aux=====================
            # Everything above this line is the same as in the earlier trainer
            model.eval()
            z = model.encoder(img).detach_()
            output = output.detach_()
            output_a = aux(z)
            loss_a = (criterion(output_a, output).mul(temperature).exp()) * criterion(output_a, img)
            # loss_a = loss_a.mean()
            tot_loss_a += loss_a.item()
            optimizer_a.zero_grad()
            loss_a.backward()
            optimizer_a.step()

        scheduler.step()
        # ===================save_best====================
        mean_loss = tot_loss / len(train_dataloader)
        print(epoch, mean_loss)
        animator.add(epoch, (mean_loss))
        if mean_loss < best_loss:
            best_loss = mean_loss
            torch.save(model.state_dict(), config['model_path'] + 'res')
            torch.save(aux.state_dict(), config['model_path'] + 'aux')

Residual network and auxiliary network

class Residual_Block(nn.Module):

def __init__(self, ic, oc, stride=1):

super().__init__()

self.conv1 = nn.Sequential(

nn.Conv2d(ic, oc, kernel_size=3, stride=stride, padding=1),

nn.BatchNorm2d(oc),

nn.ReLU(inplace=True)

)

self.conv2 = nn.Sequential(

nn.Conv2d(oc, oc, kernel_size=3, stride=1, padding=1),

nn.BatchNorm2d(oc),

)

self.relu = nn.ReLU(inplace=True)

self.downsample = None

if stride != 1 or (ic != oc):

self.downsample = nn.Sequential(

nn.Conv2d(ic, oc, kernel_size=1, stride=stride),

nn.BatchNorm2d(oc),

)

def forward(self, x):

residual = x

out = self.conv1(x)

out = self.conv2(out)

if self.downsample:

residual = self.downsample(x)

out += residual

return self.relu(out)

class ResNet(nn.Module):

def __init__(self, block=Residual_Block, num_layers=[2, 1, 1, 1]):

super().__init__()

self.preconv = nn.Sequential(

nn.Conv2d(3, 32, kernel_size=3, stride=1, padding=1, bias=False),

nn.BatchNorm2d(32),

nn.ReLU(inplace=True),

)

self.layer0 = self.make_residual(block, 32, 64, num_layers[0], stride=2)

self.layer1 = self.make_residual(block, 64, 128, num_layers[1], stride=2)

self.layer2 = self.make_residual(block, 128, 128, num_layers[2], stride=2)

self.layer3 = self.make_residual(block, 128, 64, num_layers[3], stride=2)

self.fc = nn.Sequential(

nn.Flatten(),

nn.Dropout(0.2),

nn.Linear(64*4*4, 64),

nn.BatchNorm1d(64),

nn.ReLU(inplace=True),

)

self.decoder = nn.Sequential(

nn.Linear(64, 64*4*4),

nn.BatchNorm1d(64*4*4),

nn.ReLU(),

nn.Unflatten(1, (64, 4, 4)),

nn.ConvTranspose2d(64, 128, 4, stride=2, padding=1),

nn.BatchNorm2d(128),

nn.ReLU(),

nn.ConvTranspose2d(128, 128, 4, stride=2, padding=1),

nn.BatchNorm2d(128),

nn.ReLU(),

nn.ConvTranspose2d(128, 128, 4, stride=2, padding=1),

nn.BatchNorm2d(128),

nn.ReLU(),

nn.ConvTranspose2d(128, 3, 4, stride=2, padding=1),

nn.Tanh(),

)

def make_residual(self, block, ic, oc, num_layer, stride=1):

layers = []

layers.append(block(ic, oc, stride))

for i in range(1, num_layer):

layers.append(block(oc, oc))

return nn.Sequential(*layers)

def encoder(self, x):

x = self.preconv(x)

x = self.layer0(x) #64*64 --> 32*32

x = self.layer1(x) #32*32 --> 16*16

x = self.layer2(x) #16*16 --> 8*8

x = self.layer3(x) #8*8 --> 4*4

x = self.fc(x)

return x

def forward(self, x):

x = self.encoder(x)

x = self.decoder(x)

return x

# The auxiliary network is simply a copy of the ResNet decoder

class Auxiliary(nn.Module):

def __init__(self):

super().__init__()

self.decoder = nn.Sequential(

nn.Linear(64, 64*4*4),

nn.BatchNorm1d(64*4*4),

nn.ReLU(),

nn.Unflatten(1, (64, 4, 4)),

nn.ConvTranspose2d(64, 128, 4, stride=2, padding=1),

nn.BatchNorm2d(128),

nn.ReLU(),

nn.ConvTranspose2d(128, 128, 4, stride=2, padding=1),

nn.BatchNorm2d(128),

nn.ReLU(),

nn.ConvTranspose2d(128, 128, 4, stride=2, padding=1),

nn.BatchNorm2d(128),

nn.ReLU(),

nn.ConvTranspose2d(128, 3, 4, stride=2, padding=1),

nn.Tanh(),

)

def forward(self, x):

return self.decoder(x)

Configuration

devices = d2l.try_all_gpus()

print(f'DEVICE: {devices}')

config = {

"num_epochs": 30,

"lr": 8e-4,

"model_type": 'res', # selecting a model type from {'cnn', 'fcn', 'vae', 'resnet'}

"T_0": 2,

"T_2": 2,

'ratio_min': 20

}

config['model_path'] = './models/' + config['model_type']

model_classes = {'fcn': fcn_autoencoder(), 'cnn': conv_autoencoder(), 'vae': VAE(), 'res':ResNet()}

model= model_classes[config['model_type']].to(devices[0])

# model.load_state_dict(torch.load('./best_model_fcn.pt'))

aux = Auxiliary().to(devices[0])

trainer(model, config, train_dataloader, devices)

Inference

eval_batch_size = 1024

# build testing dataloader

data = torch.tensor(test, dtype=torch.float32)

test_dataset = CustomTensorDataset(data)

test_dataloader = DataLoader(test_dataset, shuffle=False, batch_size=eval_batch_size, num_workers=num_workers, pin_memory=True)

print('Test set size: {:d}, number of batches: {:.2f}'.format(len(test_dataset), len(test_dataset) / eval_batch_size))

eval_loss = nn.MSELoss(reduction='none')

# load trained model

model= ResNet().to(devices[0])

model.load_state_dict(torch.load(config['model_path'] + 'res'))

aux = Auxiliary().to(devices[0])

aux.load_state_dict(torch.load(config['model_path'] + 'aux'))

model.eval()

aux.eval()  # the auxiliary decoder also contains BatchNorm layers

# prediction file

out_file = 'prediction.csv'

out_file_a = 'prediction_a.csv'

anomality = []

auxs = []

with torch.no_grad():
    for i, data in enumerate(test_dataloader):
        img = data.float().to(devices[0])
        # Encode once, then decode with both the main decoder and the auxiliary decoder
        z = model.encoder(img)
        output, output_a = model.decoder(z), aux(z)
        loss = eval_loss(output, img).mean([1, 2, 3])
        loss_a = eval_loss(output_a, img).mean([1, 2, 3])
        anomality.append(loss)
        auxs.append(loss_a)

anomality = torch.cat(anomality, axis=0)
anomality = torch.sqrt(anomality).reshape(len(test), 1).cpu().numpy()
auxs = torch.cat(auxs, axis=0)
auxs = torch.sqrt(auxs).reshape(len(test), 1).cpu().numpy()

df = pd.DataFrame(anomality, columns=['score'])
df.to_csv(out_file, index_label = 'ID')
df_a = pd.DataFrame(auxs, columns=['score'])
df_a.to_csv(out_file_a, index_label = 'ID')

import matplotlib.pyplot as plt

plt.figure(figsize=(12, 4))

plt.subplot(121)

plt.title('reconstruction error')

plt.hist(df.score)

plt.subplot(122)

plt.title('aux error')

plt.hist(df_a.score)

plt.show()