图像分割之最大熵阈值分割

最大熵阈值分割法和OTSU算法类似,假设将图像分为背景和前景两个部分。熵代表信息量,图像信息量越大,熵就越大,最大熵算法就是找出一个最佳阈值使得背景与前景两个部分熵之和最大。

基本原理

频率和概率

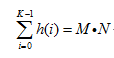

直方图每个矩形框的数值描述的是图像中相应灰度值的频率。因此,可以说直方图是一种离散的频率分布。给定一个大小为M*N的图像I,直方图中所有矩形框所代表的数值之和,即为图像中的像素数量,即:

相对应的归一化直方图表示为:

相对应的归一化直方图表示为:

![]()

0<=i![]()

与累积概率所所对应的累积直方图H是一个离散的分布函数P(),(通常也称为累积分布函数或cdf):

最大熵阈值分割

熵是信息论中一个重要的概念,这种方法常用于数据压缩领域。熵是一种统计测量方法,用以确定随机数据源中所包含的信息数量。例如,包含有N个像素的图像I,可以解释为包含有N个符号的信息,每一个符号的值都独立获取于有限范围K(e.g.,256)中的不同灰度值。

将数字图像建模为一种随机信号处理,意味着必须知道图像灰度中每一个灰度g所发生的概率,即:

![]()

因为所有概率应该事先知道,所以这些概率也称为先验概率。对于K个不同灰度值g=0,…,K-1的概率向量可以表示为:

![]()

上述概率向量也称为概率分布或称为概率密度函数(pdf)。实际数字图像处理应用当中,先验的概率通常是不知道的,但是这些概率可以通过在一幅图像或多幅图像中观察对应灰度值所发生的频率,从而估算出其概率。图像概率密度函数p(g)可以通过归一化其对应的直方图获得其概率,即:

最大熵

数字图像中给定一个估算的概率密度函数p(g),数字图像中的熵定义为:

用图像熵进行图像分割

利用图像熵为准则进行图像分割有一定历史了,学者们提出了许多以图像熵为基础进行图像分割的方法。我们介绍一种由Kapuret al提出来,现在仍然使用较广的一种图像熵分割方法。

给定一个特定的阈值q(0<=q

为了计算效率,对上面公式进行优化

示例演示

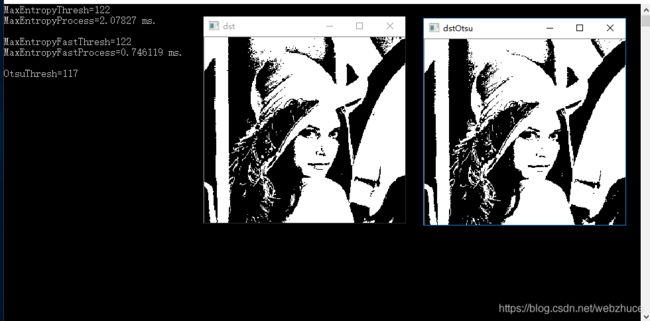

用OpenCV实现了两个实现方式,MaxEntropyFast是提升了效率的函数。完整代码

#include 运行结果

参考资料

- 最大熵阈值分割法