cv1.2 Image Noise Removal

Author QQ group: C Language Exchange Center (C语言交流中心) 240137450; WeChat: 15013593099

Image Denoising

Adding Gaussian Noise
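The program below draws its noise with the Box-Muller transform in `generateGaussianNoise`. For comparison, here is a minimal, library-free sketch that relies on the standard `<random>` header (`std::normal_distribution`) instead and works on a plain 8-bit buffer rather than a `cv::Mat`. The function name `addGaussianNoiseToBuffer` and its `sigma` and `seed` parameters are illustrative assumptions; it needs C++17 for `std::clamp`.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <random>
#include <vector>

// Adds zero-mean Gaussian noise with standard deviation sigma (> 0) to an
// 8-bit grayscale buffer and clamps each result to [0, 255].
std::vector<std::uint8_t> addGaussianNoiseToBuffer(const std::vector<std::uint8_t>& img,
                                                   double sigma, unsigned seed) {
    std::mt19937 rng(seed);                              // fixed seed: reproducible noise
    std::normal_distribution<double> noise(0.0, sigma);  // samples from N(0, sigma^2)
    std::vector<std::uint8_t> out(img.size());
    for (std::size_t k = 0; k < img.size(); ++k) {
        double v = img[k] + noise(rng);
        // clamp, then truncate to 8 bits, as the OpenCV version does with (uchar)val
        out[k] = static_cast<std::uint8_t>(std::clamp(v, 0.0, 255.0));
    }
    return out;
}
```

Fixing the seed makes the noise reproducible, which helps when several filters must be compared on the same noisy input.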

// cv2.cpp : Defines the entry point for the console application.

//

#include <opencv2/opencv.hpp>

#include <iostream>

#include <cmath>

#include <cstdlib>

using namespace cv;

using namespace std;

#define TWO_PI 6.2831853071795864769252866

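// Box-Muller transform: returns one standard normal N(0,1) sample per call; each pair of uniform samples yields two normal samples, and the second one is cached for the next call

double generateGaussianNoise()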

{

static bool hasSpare = false;

static double rand1, rand2;

if(hasSpare)

{

hasSpare = false;

return sqrt(rand1) * sin(rand2);

}

hasSpare = true;

rand1 = rand() / ((double) RAND_MAX);

if(rand1 < 1e-100) rand1 = 1e-100;

rand1 = -2 * log(rand1);

rand2 = (rand() / ((double) RAND_MAX)) * TWO_PI;

return sqrt(rand1) * cos(rand2);

}

void AddGaussianNoise(Mat& I)

{

// accept only 8-bit unsigned (uchar) matrices

CV_Assert(I.depth() == CV_8U);

int channels = I.channels();

int nRows = I.rows;

int nCols = I.cols * channels;

if(I.isContinuous()){

nCols *= nRows;

nRows = 1;

}

int i,j;

uchar* p;

for(i = 0; i < nRows; ++i){

p = I.ptr<uchar>(i);

for(j = 0; j < nCols; ++j){

// add N(0,1) noise scaled to a standard deviation of 128, then clamp to [0, 255]

double val = p[j] + generateGaussianNoise() * 128;

if(val < 0)

val = 0;

if(val > 255)

val = 255;

p[j] = (uchar)val;

}

}

}

int main( int argc, char** argv )

{

Mat image;

image = imread("f:\\img\\lena.jpg", 1); // Read the file

if(! image.data ) // Check for invalid input

{

cout << "Could not open or find the image" << std::endl ;

return -1;

}

namedWindow( "Display window", WINDOW_AUTOSIZE ); // Create a window for display.

imshow( "Display window", image ); // Show our image inside it.

// Add Gaussian noise to a copy, so the original image stays intact

Mat noisy = image.clone();

AddGaussianNoise(noisy);

namedWindow( "Noisy image", WINDOW_AUTOSIZE ); // Create a window for display.

imshow( "Noisy image", noisy ); // Show the noisy image inside it.

waitKey(0); // Wait for a keystroke in the window

return 0;

}

Salt-and-Pepper Noise

// cv2.cpp : Defines the entry point for the console application.

//

#include <opencv2/opencv.hpp>

#include <cstdlib>

void salt(cv::Mat&, int n=3000);

int main(){

cv::Mat image = cv::imread("f:\\img\\Lena.jpg");

if (image.empty()) return -1; // the image could not be loaded

salt(image, 10000);

cv::namedWindow("image");

cv::imshow("image",image);

cv::waitKey(0);

}

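// Sets n randomly chosen pixels to white. Note: this adds salt (white) noise only; pepper noise would set the pixels to 0.

void salt(cv::Mat& image, int n){

for(int k=0; k<n; k++){

int i = rand() % image.cols; // random column

int j = rand() % image.rows; // random row

if(image.channels() == 1){

image.at<uchar>(j,i) = 255;

}else{

image.at<cv::Vec3b>(j,i)[0] = 255;

image.at<cv::Vec3b>(j,i)[1] = 255;

image.at<cv::Vec3b>(j,i)[2] = 255;

}

}

}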
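The `salt` function above only whitens pixels. A minimal sketch of true salt-and-pepper noise on a plain 8-bit grayscale buffer, assuming each of the `n` random picks becomes white or black with equal probability (the name `addSaltPepper` and the 50/50 split are illustrative choices, not taken from the original):

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <random>
#include <vector>

// Sets n randomly chosen pixels of an 8-bit grayscale buffer to 255 (salt) or
// 0 (pepper) with equal probability; a pixel may be picked more than once.
void addSaltPepper(std::vector<std::uint8_t>& img, int n, unsigned seed) {
    std::mt19937 rng(seed);
    std::uniform_int_distribution<std::size_t> pick(0, img.size() - 1);
    std::bernoulli_distribution white(0.5);  // true -> salt, false -> pepper
    for (int k = 0; k < n; ++k) {
        std::size_t idx = pick(rng);
        img[idx] = white(rng) ? 255 : 0;
    }
}
```

Changing the probability passed to `std::bernoulli_distribution` to 1.0 or 0.0 gives pure salt or pure pepper noise.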

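Noise Removal

1. Master image denoising with the arithmetic mean, geometric mean, harmonic mean and contraharmonic mean filters.

2. Master image denoising with the median filter.

3. Master the adaptive median filtering algorithm.

4. Master the adaptive local noise-reduction filter.

5. Master the steps for denoising color images.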
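For reference, the standard textbook definitions of these filters, written for an $m\times n$ window $S_{xy}$ centred on $(x,y)$ of the noisy image $g$ (this notation is assumed here, not taken from the original):

$$
\begin{aligned}
\text{arithmetic mean:}\quad & \hat f(x,y) = \frac{1}{mn}\sum_{(s,t)\in S_{xy}} g(s,t)\\
\text{geometric mean:}\quad & \hat f(x,y) = \Big[\prod_{(s,t)\in S_{xy}} g(s,t)\Big]^{\frac{1}{mn}}\\
\text{harmonic mean:}\quad & \hat f(x,y) = \frac{mn}{\sum_{(s,t)\in S_{xy}} \frac{1}{g(s,t)}}\\
\text{contraharmonic mean of order } Q:\quad & \hat f(x,y) = \frac{\sum_{(s,t)\in S_{xy}} g(s,t)^{Q+1}}{\sum_{(s,t)\in S_{xy}} g(s,t)^{Q}}\\
\text{adaptive local noise reduction:}\quad & \hat f(x,y) = g(x,y) - \frac{\sigma_\eta^2}{\sigma_L^2}\big[g(x,y) - m_L\big]
\end{aligned}
$$

For the contraharmonic filter, $Q>0$ suppresses pepper noise and $Q<0$ suppresses salt noise; $Q=0$ reduces it to the arithmetic mean and $Q=-1$ to the harmonic mean. In the last line, $\sigma_\eta^2$ is the noise variance and $m_L$, $\sigma_L^2$ are the mean and variance over $S_{xy}$; the ratio $\sigma_\eta^2/\sigma_L^2$ is normally clamped to 1 when $\sigma_\eta^2>\sigma_L^2$.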

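1. Mean filtering

Task: using OpenCV, operate directly on the pixels of a grayscale image and denoise it with the arithmetic mean, geometric mean, harmonic mean and contraharmonic mean filters, using a 5×5 template. (Note: add Gaussian noise, pepper noise, salt noise and salt-and-pepper noise to the image in turn, and observe the effect of each filter.)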
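The per-window arithmetic of the four mean filters can be checked without OpenCV. A minimal sketch, assuming each function receives the values of one window as a `std::vector<double>` (the function names are hypothetical). Returning 0 for windows that contain a zero pixel is an assumption that matches the limiting value of the geometric, harmonic and negative-order contraharmonic formulas.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Each function takes the pixel values of one window S_xy and returns the
// filtered value for the window's centre pixel.
double arithmeticMean(const std::vector<double>& w) {
    double sum = 0;
    for (double g : w) sum += g;
    return sum / w.size();
}

double geometricMean(const std::vector<double>& w) {
    // Average the logarithms instead of multiplying the values directly;
    // a single zero pixel makes the product, and hence the result, zero.
    double logSum = 0;
    for (double g : w) {
        if (g == 0) return 0.0;
        logSum += std::log(g);
    }
    return std::exp(logSum / w.size());
}

double harmonicMean(const std::vector<double>& w) {
    double inv = 0;
    for (double g : w) {
        if (g == 0) return 0.0;  // 1/0 makes the sum infinite, so the result tends to 0
        inv += 1.0 / g;
    }
    return w.size() / inv;
}

double contraharmonicMean(const std::vector<double>& w, double Q) {
    double num = 0, den = 0;
    for (double g : w) {
        if (g == 0 && Q < 0) return 0.0;  // g^Q is infinite: the ratio tends to 0
        num += std::pow(g, Q + 1);        // numerator: sum of g^(Q+1)
        den += std::pow(g, Q);            // denominator: sum of g^Q
    }
    return den == 0 ? 0.0 : num / den;
}
```

On a window of 24 pixels at 100 plus one pepper pixel at 0, the geometric and harmonic means collapse to 0, while the contraharmonic mean with Q = 1.5 returns exactly 100, which is why a positive Q is used against pepper noise.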

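2. Median filtering

Task: using OpenCV, operate on the pixels of a grayscale image and apply median filtering with 5×5 and 9×9 templates. (Note: add pepper noise, salt noise and salt-and-pepper noise to the image in turn, and observe the filtering effect.)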
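A library-free sketch of the k×k median filter on a `std::vector<std::vector<int>>` image (the `Image` alias and `medianFilter` are hypothetical names). Windows at the border are clipped to the image, like the hand-written filters later in this article; `cvSmooth` with `CV_MEDIAN` may treat borders differently.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

using Image = std::vector<std::vector<int>>;  // grayscale image, img[row][col]

// k x k median filter (k odd). Border pixels use only the part of the window
// that lies inside the image.
Image medianFilter(const Image& img, int k) {
    int h = static_cast<int>(img.size()), w = static_cast<int>(img[0].size());
    int r = k / 2;
    Image out(h, std::vector<int>(w));
    std::vector<int> win;
    for (int i = 0; i < h; ++i)
        for (int j = 0; j < w; ++j) {
            win.clear();
            for (int m = -r; m <= r; ++m)
                for (int n = -r; n <= r; ++n) {
                    int y = i + m, x = j + n;
                    if (y >= 0 && y < h && x >= 0 && x < w) win.push_back(img[y][x]);
                }
            // partial sort is enough to place the median at the middle index
            std::nth_element(win.begin(), win.begin() + win.size() / 2, win.end());
            out[i][j] = win[win.size() / 2];
        }
    return out;
}
```

Isolated salt (255) and pepper (0) pixels are minorities in every window, so the median discards them; the larger 9×9 window tolerates denser noise at the cost of more blurring of fine detail.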

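3. Adaptive mean filtering

Task: using OpenCV, operate on the pixels of a grayscale image and design an adaptive local noise-reduction filter with a 7×7 template. (Compare its results with those of the mean filter.)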
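The per-pixel rule of the adaptive local noise-reduction filter, f = g − (σ_η²/σ_L²)(g − m_L), as a minimal sketch. The function name and the clamping of the ratio to 1 (used when the assumed noise variance exceeds the local variance, including flat windows) are illustrative assumptions following the usual textbook formulation.

```cpp
#include <cassert>
#include <vector>

// Adaptive local noise-reduction filter for one pixel.
// g: noisy value at (x, y); window: values of S_xy; noiseVar: sigma_eta^2.
double adaptiveLocalNoiseReduction(double g, const std::vector<double>& window,
                                   double noiseVar) {
    double mean = 0;
    for (double v : window) mean += v;
    mean /= window.size();                       // local mean m_L
    double var = 0;
    for (double v : window) var += (v - mean) * (v - mean);
    var /= window.size();                        // local variance sigma_L^2
    // Clamp the ratio to 1: if the noise variance is at least the local
    // variance (or the window is flat), the output falls back to the local mean.
    double ratio = (var > noiseVar) ? noiseVar / var : 1.0;
    return g - ratio * (g - mean);
}
```

With zero noise variance the pixel is returned unchanged; where the local variance is much larger than the noise variance (an edge), the pixel is mostly kept, which is how this filter preserves edges better than a plain mean filter.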

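4. Adaptive median filtering

Task: using OpenCV, operate on the pixels of a grayscale image and design an adaptive median filter to denoise an image corrupted by salt-and-pepper noise, with a maximum template size of 7×7. (Compare its results with those of the median filter.)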
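A minimal sketch of the adaptive median decision for one pixel: stage A grows the window until its median lies strictly between the window minimum and maximum, and stage B then keeps the original pixel unless it is itself an extreme value. The names `adaptiveMedianAt` and `Image` are hypothetical; the maximum window size (7 in the task) is a parameter.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

using Image = std::vector<std::vector<int>>;  // img[row][col]

// Adaptive median filter for pixel (i, j): the window grows from 3x3 up to
// maxSize x maxSize (maxSize odd) until its median is not an impulse.
int adaptiveMedianAt(const Image& img, int i, int j, int maxSize) {
    int h = static_cast<int>(img.size()), w = static_cast<int>(img[0].size());
    int zxy = img[i][j], zmed = zxy;
    for (int r = 1; 2 * r + 1 <= maxSize; ++r) {
        std::vector<int> win;
        for (int m = -r; m <= r; ++m)
            for (int n = -r; n <= r; ++n) {
                int y = i + m, x = j + n;
                if (y >= 0 && y < h && x >= 0 && x < w) win.push_back(img[y][x]);
            }
        std::sort(win.begin(), win.end());
        int zmin = win.front(), zmax = win.back();
        zmed = win[win.size() / 2];
        if (zmin < zmed && zmed < zmax)                      // stage A passed
            return (zmin < zxy && zxy < zmax) ? zxy : zmed;  // stage B
    }
    return zmed;  // window limit reached: output the last median
}
```

Unlike a fixed median filter, uncorrupted pixels pass through unchanged whenever stage B accepts them, so detail is preserved while impulses are still replaced.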

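5. Mean filtering of color images

Task: using OpenCV, operate on the pixels of all three RGB channels of a color image and denoise it with the arithmetic mean and geometric mean filters, using a 5×5 template.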
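Color mean filtering repeats the grayscale filter on each channel independently. A minimal sketch over an interleaved 3-channel buffer; the `ColorImage` struct and `colorMeanFilter` are hypothetical, and the geometric branch averages logarithms and returns 0 for windows containing a zero value.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Interleaved 3-channel (B, G, R) image of size h x w: pixel (y, x) channel c
// is stored at data[(y * w + x) * 3 + c].
struct ColorImage {
    int h, w;
    std::vector<double> data;
};

// Applies a k x k arithmetic (geometric == false) or geometric mean filter to
// each channel independently; border windows are clipped to the image.
ColorImage colorMeanFilter(const ColorImage& src, int k, bool geometric) {
    ColorImage dst{src.h, src.w, std::vector<double>(src.data.size())};
    int r = k / 2;
    for (int y = 0; y < src.h; ++y)
        for (int x = 0; x < src.w; ++x)
            for (int c = 0; c < 3; ++c) {
                double acc = 0;  // sum of values, or sum of logarithms
                int count = 0;
                bool hasZero = false;
                for (int m = -r; m <= r; ++m)
                    for (int n = -r; n <= r; ++n) {
                        int yy = y + m, xx = x + n;
                        if (yy < 0 || yy >= src.h || xx < 0 || xx >= src.w) continue;
                        double v = src.data[(yy * src.w + xx) * 3 + c];
                        if (geometric) {
                            if (v == 0) hasZero = true; else acc += std::log(v);
                        } else {
                            acc += v;
                        }
                        ++count;
                    }
                double out = geometric ? (hasZero ? 0.0 : std::exp(acc / count))
                                       : acc / count;
                dst.data[(y * src.w + x) * 3 + c] = out;
            }
    return dst;
}
```

Because the channels never mix, noise in one channel (here, blue) does not leak into the other two.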

// cv2.cpp : Defines the entry point for the console application.

//

#include <opencv/cv.h>

#include <opencv/highgui.h>

#include <cmath>

#include <cstdlib>

#include <vector>

#include <algorithm>

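//1. Procedure: first add Gaussian, pepper, salt and salt-and-pepper noise to a grayscale image, then denoise it with the arithmetic mean, geometric mean, harmonic mean and contraharmonic mean filters, using a 5×5 template.

//Core code:

//Adding the various kinds of noise: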

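IplImage* AddGuassianNoise(IplImage* src) //add Gaussian noise

{

IplImage* dst = cvCreateImage(cvGetSize(src),src->depth,src->nChannels);

//the noise is generated in a signed 32-bit float image: an 8-bit unsigned image would clip every negative sample to 0

IplImage* noise = cvCreateImage(cvGetSize(src),IPL_DEPTH_32F,src->nChannels);

IplImage* tmp = cvCreateImage(cvGetSize(src),IPL_DEPTH_32F,src->nChannels);

CvRNG rng = cvRNG(-1);

cvRandArr(&rng,noise,CV_RAND_NORMAL,cvScalarAll(0),cvScalarAll(15)); //mean 0, standard deviation 15

cvConvert(src,tmp);

cvAdd(tmp,noise,tmp);

cvConvert(tmp,dst); //convert back to 8 bits, saturating to [0,255]

cvReleaseImage(&noise);

cvReleaseImage(&tmp);

return dst;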

}

IplImage* AddPepperNoise(IplImage* src) //add pepper noise: random black dots

{

IplImage* dst = cvCreateImage(cvGetSize(src),src->depth,src->nChannels);

cvCopy(src, dst);

for(int k=0; k<8000; k++)

{

int i = rand()%src->height;

int j = rand()%src->width;

CvScalar s = cvGet2D(src, i, j);

if(src->nChannels == 1)

{

s.val[0] = 0;

}

else if(src->nChannels==3)

{

s.val[0]=0;

s.val[1]=0;

s.val[2]=0;

}

cvSet2D(dst, i, j, s);

}

return dst;

}

IplImage* AddSaltNoise(IplImage* src) //add salt noise: random white dots

{

IplImage* dst = cvCreateImage(cvGetSize(src),src->depth,src->nChannels);

cvCopy(src, dst);

for(int k=0; k<8000; k++)

{

int i = rand()%src->height;

int j = rand()%src->width;

CvScalar s = cvGet2D(src, i, j);

if(src->nChannels == 1)

{

s.val[0] = 255;

}

else if(src->nChannels==3)

{

s.val[0]=255;

s.val[1]=255;

s.val[2]=255;

}

cvSet2D(dst, i, j, s);

}

return dst;

}

IplImage* AddPepperSaltNoise(IplImage* src) //add salt-and-pepper noise: random black and white dots

{

IplImage* dst = cvCreateImage(cvGetSize(src),src->depth,src->nChannels);

cvCopy(src, dst);

for(int k=0; k<8000; k++)

{

int i = rand()%src->height;

int j = rand()%src->width;

int m = rand()%2;

CvScalar s = cvGet2D(src, i, j);

if(src->nChannels == 1)

{

if(m==0)

{

s.val[0] = 255;

}

else

{

s.val[0] = 0;

}

}

else if(src->nChannels==3)

{

if(m==0)

{

s.val[0]=255;

s.val[1]=255;

s.val[2]=255;

}

else

{

s.val[0]=0;

s.val[1]=0;

s.val[2]=0;

}

}

cvSet2D(dst, i, j, s);

}

return dst;

}

//Filter implementations:

//Arithmetic mean filter, 5×5 template

IplImage* ArithmeticMeanFilter(IplImage* src)

{

IplImage* dst = cvCreateImage(cvGetSize(src),src->depth,src->nChannels);

cvSmooth(src,dst,CV_BLUR,5);

return dst;

}

//Geometric mean filter, 5×5 template

IplImage* GeometryMeanFilter(IplImage* src)

{

IplImage* dst = cvCreateImage(cvGetSize(src),src->depth,src->nChannels);

int row, col;

int h=src->height;

int w=src->width;

double mul[3];

double dc[3];

int mn;

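//compute the denoised value of each pixel

for(int i=0;i<src->height;i++){

for(int j=0;j<src->width;j++){

mul[0]=1.0;

mn=0;

//product of the pixels in the 5×5 neighborhood

for(int m=-2;m<=2;m++){

row = i+m;

for(int n=-2;n<=2;n++){

col = j+n;

if(row>=0&&row<h && col>=0 && col<w){

CvScalar s = cvGet2D(src, row, col);

mul[0] = mul[0]*s.val[0];

mn++;

}

}

}

//geometric mean: the mn-th root of the product (a single 0 pixel makes the result 0)

CvScalar d = cvScalarAll(0);

dc[0] = pow(mul[0], 1.0/mn);

d.val[0] = dc[0];

cvSet2D(dst, i, j, d);

}

}

return dst;

}

//Harmonic mean filter, 5×5 template

IplImage* HarmonicMeanFilter(IplImage* src)

{

IplImage* dst = cvCreateImage(cvGetSize(src),src->depth,src->nChannels);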

int row, col;

int h=src->height;

int w=src->width;

double sum[3];

double dc[3];

int mn;

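//compute the denoised value of each pixel

for(int i=0;i<src->height;i++){

for(int j=0;j<src->width;j++){

sum[0]=0.0;

mn=0;

//scan the 5×5 neighborhood

for(int m=-2;m<=2;m++){

row = i+m;

for(int n=-2;n<=2;n++){

col = j+n;

if(row>=0&&row<h && col>=0 && col<w){

CvScalar s = cvGet2D(src, row, col);

//accumulate 1/g; a 0 pixel is treated as 1 to avoid dividing by zero

sum[0] = sum[0] + 1.0/(s.val[0]==0 ? 1.0 : s.val[0]);

mn++;

}

}

}

//harmonic mean: mn / sum(1/g)

CvScalar d = cvScalarAll(0);

dc[0] = mn/sum[0];

d.val[0] = dc[0];

cvSet2D(dst, i, j, d);

}

}

return dst;

}

//Contraharmonic mean filter of order Q, 5×5 template

IplImage* InverseHarmonicMeanFilter(IplImage* src){

IplImage* dst = cvCreateImage(cvGetSize(src),src->depth,src->nChannels);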

//cvSmooth(src,dst,CV_BLUR,5);

int row, col;

int h=src->height;

int w=src->width;

double sum[3];

double sum1[3];

double dc[3];

double Q=2;

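//compute the denoised value of each pixel

for(int i=0;i<src->height;i++){

for(int j=0;j<src->width;j++){

sum[0]=0.0;

sum1[0]=0.0;

//scan the 5×5 neighborhood

for(int m=-2;m<=2;m++){

row = i+m;

for(int n=-2;n<=2;n++){

col = j+n;

if(row>=0&&row<h && col>=0 && col<w){

CvScalar s = cvGet2D(src, row, col);

sum[0] = sum[0] + pow(s.val[0], Q+1); //numerator: sum of g^(Q+1)

sum1[0] = sum1[0] + pow(s.val[0], Q); //denominator: sum of g^Q

}

}

}

//contraharmonic mean; Q>0 removes pepper noise, Q<0 removes salt noise

CvScalar d = cvScalarAll(0);

dc[0] = (sum1[0]==0 ? 0 : sum[0]/sum1[0]);

d.val[0] = dc[0];

cvSet2D(dst, i, j, d);

}

}

return dst;

}

//Median filters, 5×5 and 9×9 templates

IplImage* MedianFilter_5_5(IplImage* src){

IplImage* dst = cvCreateImage(cvGetSize(src),src->depth,src->nChannels);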

cvSmooth(src,dst,CV_MEDIAN,5);

return dst;

}

IplImage* MedianFilter_9_9(IplImage* src){

IplImage* dst = cvCreateImage(cvGetSize(src),src->depth,src->nChannels);

cvSmooth(src,dst,CV_MEDIAN,9);

return dst;

}

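//3. Procedure: adaptive mean filtering (using Gaussian noise as the example): first add Gaussian noise to a grayscale image, then apply adaptive mean filtering with a 7×7 template.

//Core code: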

IplImage* SelfAdaptMeanFilter(IplImage* src){

IplImage* dst = cvCreateImage(cvGetSize(src),src->depth,src->nChannels);

cvSmooth(src,dst,CV_BLUR,7);

int row, col;

int h=src->height;

int w=src->width;

int mn;

double Zxy;

double Zmed;

double Sxy;

double Sl;

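double Sn=100; //assumed noise variance (sigma_eta^2)

for(int i=0;i<src->height;i++){

for(int j=0;j<src->width;j++){

CvScalar xy = cvGet2D(src, i, j);

Zxy = xy.val[0]; //noisy pixel g(x,y)

CvScalar dxy = cvGet2D(dst, i, j);

Zmed = dxy.val[0]; //local mean m_L from the 7×7 box blur

Sl=0;

mn=0;

for(int m=-3;m<=3;m++){

row = i+m;

for(int n=-3;n<=3;n++){

col = j+n;

if(row>=0&&row<h && col>=0 && col<w){

CvScalar s = cvGet2D(src, row, col);

Sxy = s.val[0];

Sl = Sl + (Sxy-Zmed)*(Sxy-Zmed); //squared deviation from the local mean

mn++;

}

}

}

Sl = Sl/mn; //local variance sigma_L^2

//f = g - (sigma_eta^2/sigma_L^2)(g - m_L), with the ratio clamped to 1 (this also covers sigma_L^2 = 0)

double ratio = (Sl > Sn) ? Sn/Sl : 1.0;

CvScalar d = cvScalarAll(0);

d.val[0] = Zxy - ratio*(Zxy-Zmed);

cvSet2D(dst, i, j, d);

}

}

return dst;

}

//4. Adaptive median filter, maximum window 7×7

IplImage* SelfAdaptMedianFilter(IplImage* src){

IplImage* dst = cvCreateImage(cvGetSize(src),src->depth,src->nChannels);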

int row, col;

int h=src->height;

int w=src->width;

double Zmin,Zmax,Zmed,Zxy,Smax=7;

int wsize;

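//compute the denoised value of each pixel

for(int i=0;i<src->height;i++){

for(int j=0;j<src->width;j++){

//start with a 3×3 window (wsize=1) and grow it up to 7×7 (wsize=3)

wsize=1;

CvScalar xy = cvGet2D(src, i, j);

Zxy=xy.val[0];

while(wsize<=3){

std::vector<double> win; //pixel values in the current window

for(int m=-wsize;m<=wsize;m++){

row = i+m;

for(int n=-wsize;n<=wsize;n++){

col = j+n;

if(row>=0&&row<h && col>=0 && col<w){

win.push_back(cvGet2D(src, row, col).val[0]);

}

}

}

std::sort(win.begin(), win.end());

Zmin = win.front();

Zmax = win.back();

Zmed = win[win.size()/2]; //true median of the window

CvScalar d = cvScalarAll(0);

if(Zmin < Zmed && Zmed < Zmax){ //stage A: the median is not an impulse

//stage B: keep the pixel unless it is itself an impulse

d.val[0] = (Zmin < Zxy && Zxy < Zmax) ? Zxy : Zmed;

cvSet2D(dst, i, j, d);

break;

}

wsize++; //enlarge the window and try again

if(wsize>3){ //maximum window reached: output the median

d.val[0]=Zmed;

cvSet2D(dst, i, j, d);

}

}

}

}

return dst;

}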

int main()

{

IplImage*img=cvLoadImage("F:\\img\\lena.jpg",0); //load as grayscale: the hand-written filters above process channel 0 only

if(NULL==img) exit(1);

IplImage * noise=AddPepperNoise(img);

IplImage * imgfil=MedianFilter_5_5(noise);

cvNamedWindow("src",1);

cvShowImage("src",img);

cvNamedWindow("noise",1);

cvShowImage("noise",noise);

cvNamedWindow("filter",1);

cvShowImage("filter",imgfil);

cvWaitKey(0);

cvReleaseImage(&img);

cvReleaseImage(&noise);

cvReleaseImage(&imgfil);

cvDestroyWindow("src");

cvDestroyWindow("noise");

cvDestroyWindow("filter");

return 0;

} 图像倾斜扶正

识别倾斜图像运动物体跟踪

去除背景

图像拼接

图像去抖

画轮廓包络

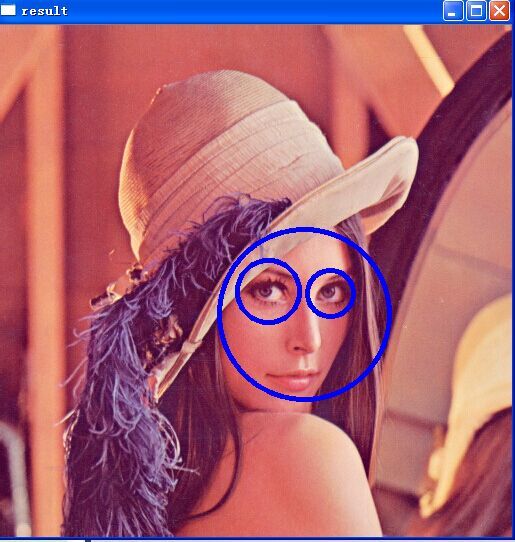

人脸检测

// cv2.cpp : Defines the entry point for the console application.

//

#include "opencv2/objdetect/objdetect.hpp"

#include "opencv2/highgui/highgui.hpp"

#include "opencv2/imgproc/imgproc.hpp"

#include "opencv2/ml/ml.hpp"

#include <iostream>

#include <cstdio>

using namespace std;

using namespace cv;

void detectAndDraw( Mat& img,

CascadeClassifier& cascade, CascadeClassifier& nestedCascade,

double scale);

String cascadeName = "F:\\opencv\\sources\\data\\haarcascades\\haarcascade_frontalface_alt2.xml";//face training data (Haar cascade)

//String nestedCascadeName = "./haarcascade_eye_tree_eyeglasses.xml";//eye training data

String nestedCascadeName = "F:\\opencv\\sources\\data\\haarcascades\\haarcascade_eye.xml";//eye training data

int main( int argc, const char** argv )

{

Mat image;

CascadeClassifier cascade, nestedCascade;//create the cascade classifier objects

double scale = 1.3;

//image = imread( "lena.jpg", 1 );//read the lena image

image = imread("f:\\img\\lena.jpg",1);

namedWindow( "result", 1 );//since OpenCV 2.0, windows created with namedWindow are destroyed automatically

if( !cascade.load( cascadeName ) )//load the cascade classifier from the given file

{

cerr << "ERROR: Could not load classifier cascade" << endl;

return -1;

}

if( !nestedCascade.load( nestedCascadeName ) )

{

//not fatal: detectAndDraw skips eye detection when nestedCascade is empty

cerr << "WARNING: Could not load classifier cascade for nested objects" << endl;

}

if( !image.empty() )//proceed only if the image was loaded

{

detectAndDraw( image, cascade, nestedCascade, scale );

waitKey(0);

}

return 0;

}

void detectAndDraw( Mat& img,

CascadeClassifier& cascade, CascadeClassifier& nestedCascade,

double scale)

{

int i = 0;

double t = 0;

vector<Rect> faces;

const static Scalar colors[] = { CV_RGB(0,0,255),

CV_RGB(0,128,255),

CV_RGB(0,255,255),

CV_RGB(0,255,0),

CV_RGB(255,128,0),

CV_RGB(255,255,0),

CV_RGB(255,0,0),

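CV_RGB(255,0,255)} ;//a different color for each face

Mat gray, smallImg( cvRound (img.rows/scale), cvRound(img.cols/scale), CV_8UC1 );//shrink the image to speed up detection

cvtColor( img, gray, CV_BGR2GRAY );//Haar-like features operate on grayscale images, so convert to gray first

resize( gray, smallImg, smallImg.size(), 0, 0, INTER_LINEAR );//shrink to 1/scale of the original size with linear interpolation

equalizeHist( smallImg, smallImg );//histogram equalization

t = (double)cvGetTickCount();//start timing the detection

//Detect faces.

//In detectMultiScale, smallImg is the input image and faces receives the detected face rectangles; 1.1 is the factor

//by which the image is shrunk at each scale step; 2 (minNeighbors) means a candidate must have at least 2 neighboring

//detections to be kept (nearby positions and different window sizes all respond to the same face);

//CV_HAAR_SCALE_IMAGE scales the image rather than the classifier; Size(30, 30) is the minimum object size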

cascade.detectMultiScale( smallImg, faces,

1.1, 2, 0

//|CV_HAAR_FIND_BIGGEST_OBJECT

//|CV_HAAR_DO_ROUGH_SEARCH

|CV_HAAR_SCALE_IMAGE

,

Size(30, 30) );

t = (double)cvGetTickCount() - t;//elapsed ticks

printf( "detection time = %g ms\n", t/((double)cvGetTickFrequency()*1000.) );

for( vector<Rect>::const_iterator r = faces.begin(); r != faces.end(); r++, i++ )

{

Mat smallImgROI;

vector<Rect> nestedObjects;

Point center;

Scalar color = colors[i%8];

int radius;

center.x = cvRound((r->x + r->width*0.5)*scale);//scale back to the original image size

center.y = cvRound((r->y + r->height*0.5)*scale);

radius = cvRound((r->width + r->height)*0.25*scale);

circle( img, center, radius, color, 3, 8, 0 );

//detect the eyes within each face and draw them

if( nestedCascade.empty() )

continue;

smallImgROI = smallImg(*r);

//same call as the face detection above

nestedCascade.detectMultiScale( smallImgROI, nestedObjects,

1.1, 2, 0

//|CV_HAAR_FIND_BIGGEST_OBJECT

//|CV_HAAR_DO_ROUGH_SEARCH

//|CV_HAAR_DO_CANNY_PRUNING

|CV_HAAR_SCALE_IMAGE

,

Size(30, 30) );

for( vector<Rect>::const_iterator nr = nestedObjects.begin(); nr != nestedObjects.end(); nr++ )

{

center.x = cvRound((r->x + nr->x + nr->width*0.5)*scale);

center.y = cvRound((r->y + nr->y + nr->height*0.5)*scale);

radius = cvRound((nr->width + nr->height)*0.25*scale);

circle( img, center, radius, color, 3, 8, 0 );//draw the eyes too, in the same color as their face

}

}

cv::imshow( "result", img );

}

Face Mosaicking (Pixelation)

// cv2.cpp : Defines the entry point for the console application.

//

#include <opencv/cv.h>

#include <opencv/highgui.h>

#include <cstdio>

#include <iostream>

using namespace std;

using namespace cv;

static CvHaarClassifierCascade* cascade = 0;

static CvMemStorage* storage = 0;

void detect_and_draw( IplImage* image );

const char* cascade_name ="F:\\opencv\\sources\\data\\haarcascades\\haarcascade_frontalface_alt.xml";

void detect_and_draw( IplImage* img )

{

//static CvScalar colors[] =

//{

// {{0,0,255}},

// {{0,128,255}},

// {{0,255,255}},

// {{0,255,0}},

// {{255,128,0}},

// {{255,255,0}},

// {{255,0,0}},

// {{255,0,255}}

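//}; //circles of different colors distinguish different people

double scale = 1.3; //detect on a shrunken copy (small_img), then scale the results back up; optional, it only speeds things up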

IplImage* gray = cvCreateImage( cvSize(img->width,img->height), 8, 1 );

IplImage* small_img = cvCreateImage( cvSize( cvRound (img->width/scale), cvRound (img->height/scale)), 8, 1 );

int i;

cvCvtColor( img, gray, CV_BGR2GRAY ); //convert to grayscale

cvResize( gray, small_img, CV_INTER_LINEAR ); //shrink

cvEqualizeHist( small_img, small_img ); //histogram equalization of the shrunken grayscale image

cvClearMemStorage( storage ); //reset the dynamic memory storage

if( cascade ) //cascade points to the classifier that decides whether a region is a face

{

double t = (double)cvGetTickCount(); //start timing

CvSeq* faces = cvHaarDetectObjects( small_img, cascade, storage,

1.1, 2, 0 /*CV_HAAR_DO_CANNY_PRUNING*/,

cvSize(30, 30) ); //the core detection call; note the cascade argument

t = (double)cvGetTickCount() - t; //elapsed ticks

printf( "detection time = %gms\n", t/((double)cvGetTickFrequency()*1000.) );

for( i = 0; i < (faces ? faces->total : 0); i++ ) //faces->total faces were found; pixelate them one by one

{

CvRect* r = (CvRect*)cvGetSeqElem( faces, i ); //bounding rectangle of the i-th face

CvPoint center;

int radius;

center.x = cvRound((r->x + r->width*0.5)*scale); //center x, scaled back up by scale

center.y = cvRound((r->y + r->height*0.5)*scale); //center y

radius = cvRound((r->width + r->height)*0.25*scale); //radius

//cvCircle( img, center, radius, colors[i%8], -1, 8, 0 ); //draw a circle around the face

//mosaic: restrict processing to the face's bounding square

cvSetImageROI(img,cvRect(center.x-radius,center.y-radius,radius*2,radius*2));

int W=8; //mosaic block width

int H=8; //mosaic block height

for(int mi=W;mi<img->roi->width;mi+=W)

for(int mj=H;mj<img->roi->height;mj+=H)

{

CvScalar tmp=cvGet2D(img,mj-H/2,mi-W/2); //color near the center of the block

for(int mx=mi-W;mx<=mi;mx++)

for(int my=mj-H;my<=mj;my++)

cvSet2D(img,my,mx,tmp);

}

cvResetImageROI(img);

}

}

cvShowImage( "result", img );

cvShowImage( "gray", gray );

cvShowImage( "face", small_img );

cvReleaseImage( &gray );

cvReleaseImage( &small_img );

}

int main(int argc, char* argv[])

{

cascade = (CvHaarClassifierCascade*)cvLoad( cascade_name, 0, 0, 0 ); //load the face detection classifier

if( !cascade )

{

fprintf( stderr, "ERROR: Could not load classifier cascade\n" );

return -1;

}

storage = cvCreateMemStorage(0); //dynamic memory storage for the detected face positions

cvNamedWindow( "result", 1 );

cvNamedWindow( "gray", 1);

cvNamedWindow("face",1);

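const char* filename = "f:\\img\\lena.jpg"; //image to process (absolute path)

//const char* filename = "景甜.jpg";

IplImage* image = cvLoadImage( filename, 1 ); //load the image

if( !image )

{

fprintf( stderr, "ERROR: Could not load image %s\n", filename );

return -1;

}

detect_and_draw( image ); //detect the faces in the loaded image and pixelate them

cvWaitKey(0);

cvReleaseImage( &image );

cvDestroyWindow("result");

cvDestroyWindow("gray");

cvDestroyWindow("face");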

return 0;

}
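The mosaic loop in `detect_and_draw` fills each 8×8 block of the face ROI with the color of one pixel near the block's center. A library-free sketch of the same idea on a grayscale `std::vector<std::vector<int>>`, assuming the rectangle is clipped to the image and that partial blocks at the right and bottom edges are filled too (`mosaic` and `Image` are hypothetical names):

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

using Image = std::vector<std::vector<int>>;  // grayscale, img[row][col]

// Pixelates the rectangle [x0, x0+rw) x [y0, y0+rh) of img in place: every
// cell x cell block takes the value of the pixel at the block's centre.
void mosaic(Image& img, int x0, int y0, int rw, int rh, int cell) {
    int h = static_cast<int>(img.size()), w = static_cast<int>(img[0].size());
    int x1 = std::min(x0 + rw, w), y1 = std::min(y0 + rh, h);  // clip to the image
    x0 = std::max(x0, 0);
    y0 = std::max(y0, 0);
    for (int by = y0; by < y1; by += cell)
        for (int bx = x0; bx < x1; bx += cell) {
            int ey = std::min(by + cell, y1), ex = std::min(bx + cell, x1);
            int v = img[(by + ey) / 2][(bx + ex) / 2];  // sample the block centre
            for (int y = by; y < ey; ++y)
                for (int x = bx; x < ex; ++x) img[y][x] = v;
        }
}
```

Larger `cell` values give coarser blocks and hide more detail; each block only reads a pixel inside itself, so the in-place update is safe.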

Tilted Face Detection

Face Recognition

Mosaic Removal

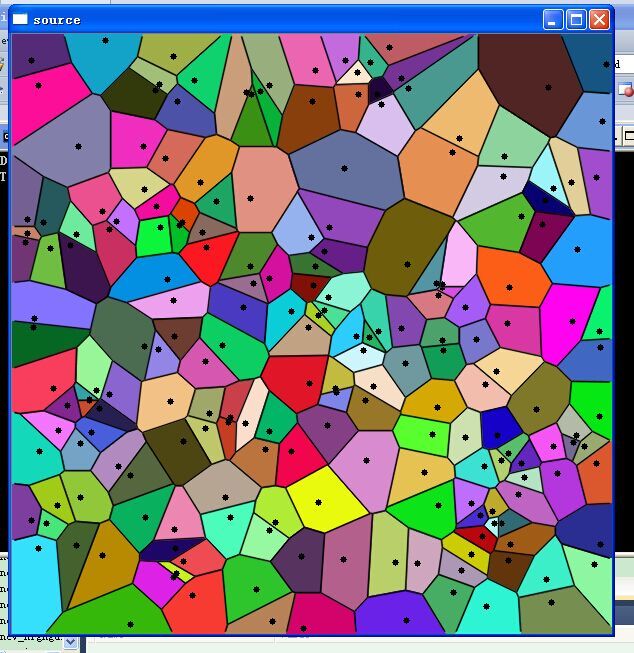

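Delaunay Triangulation and Voronoi Diagram

(In the figure, the solid-line polygons form the Delaunay triangulation; the dashed-line polygons form the Voronoi diagram.)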

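The Voronoi diagram, also called Thiessen polygons or the Dirichlet tessellation, is made up of a set of contiguous polygons whose edges lie on the perpendicular bisectors of the segments joining neighboring points. Given N distinct points in the plane, the plane is partitioned by the nearest-neighbor rule: each point is associated with the region of the plane that is closer to it than to any other point. A Delaunay triangle is formed by connecting the points whose Voronoi polygons share an edge. The circumcenter of a Delaunay triangle is a vertex of the Voronoi diagram, namely the vertex shared by the Voronoi polygons of the triangle's three points. The Voronoi diagram is the dual graph of the Delaunay triangulation.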
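Two statements in the paragraph above can be made concrete with small functions: the Voronoi cell containing a point is found by a nearest-site search, and the circumcenter of a triangle is the point equidistant from its three vertices, i.e. a Voronoi vertex. A minimal brute-force sketch; the names `Pt`, `nearestSite` and `circumcenter` are hypothetical, and practical implementations such as the OpenCV code below build the structure incrementally instead.

```cpp
#include <cassert>
#include <cmath>
#include <limits>
#include <vector>

struct Pt { double x, y; };

// Index of the site nearest to p, i.e. the Voronoi cell that contains p.
int nearestSite(const std::vector<Pt>& sites, Pt p) {
    int best = 0;
    double bestD = std::numeric_limits<double>::infinity();
    for (int k = 0; k < static_cast<int>(sites.size()); ++k) {
        double dx = sites[k].x - p.x, dy = sites[k].y - p.y;
        double d = dx * dx + dy * dy;  // squared distance is enough for comparison
        if (d < bestD) { bestD = d; best = k; }
    }
    return best;
}

// Circumcenter of triangle abc (the points must not be collinear): the point
// equidistant from a, b and c, where their three Voronoi cells meet.
Pt circumcenter(Pt a, Pt b, Pt c) {
    double d = 2 * (a.x * (b.y - c.y) + b.x * (c.y - a.y) + c.x * (a.y - b.y));
    double a2 = a.x * a.x + a.y * a.y;
    double b2 = b.x * b.x + b.y * b.y;
    double c2 = c.x * c.x + c.y * c.y;
    return { (a2 * (b.y - c.y) + b2 * (c.y - a.y) + c2 * (a.y - b.y)) / d,
             (a2 * (c.x - b.x) + b2 * (a.x - c.x) + c2 * (b.x - a.x)) / d };
}
```

For the triangle (0,0), (4,0), (0,4) the circumcenter is (2,2), which is equally far from all three sites and therefore lies on the boundary of all three Voronoi cells.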

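For a given point set P there are many possible triangulations; among them, the Delaunay triangulation has the following properties:

1. The Delaunay triangulation is unique (provided no four points of P lie on a common circle);

2. The outer boundary of the triangulation is the convex hull of P;

3. No point of P lies inside the circumcircle of any triangle; conversely, any triangulation that satisfies this condition is the Delaunay triangulation;

4. If the angles of all triangles are sorted in ascending order, the Delaunay triangulation gives the lexicographically largest sequence of any triangulation; in particular, it maximizes the smallest angle. In this sense it is the "most regular" triangulation.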
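Property 3 is usually checked with the in-circle determinant. A minimal sketch, assuming plain double-precision coordinates (robust implementations use exact or adaptive-precision arithmetic); `orient`, `inCircle` and `emptyCircumcircle` are hypothetical names.

```cpp
#include <cassert>
#include <utility>
#include <vector>

struct Pt { double x, y; };

// Twice the signed area of triangle abc: > 0 if a, b, c are counter-clockwise.
double orient(Pt a, Pt b, Pt c) {
    return (b.x - a.x) * (c.y - a.y) - (b.y - a.y) * (c.x - a.x);
}

// In-circle determinant. For a counter-clockwise triangle abc it is > 0 when
// d lies strictly inside the circumcircle, < 0 outside, and 0 on the circle.
double inCircle(Pt a, Pt b, Pt c, Pt d) {
    double adx = a.x - d.x, ady = a.y - d.y;
    double bdx = b.x - d.x, bdy = b.y - d.y;
    double cdx = c.x - d.x, cdy = c.y - d.y;
    double ad = adx * adx + ady * ady;
    double bd = bdx * bdx + bdy * bdy;
    double cd = cdx * cdx + cdy * cdy;
    return adx * (bdy * cd - bd * cdy) - ady * (bdx * cd - bd * cdx)
         + ad * (bdx * cdy - bdy * cdx);
}

// Empty-circle test of one triangle against a point set: true if no point
// of pts lies strictly inside the circumcircle of abc.
bool emptyCircumcircle(Pt a, Pt b, Pt c, const std::vector<Pt>& pts) {
    if (orient(a, b, c) < 0) std::swap(b, c);  // make abc counter-clockwise
    for (Pt p : pts)
        if (inCircle(a, b, c, p) > 0) return false;
    return true;
}
```

The triangle's own vertices lie on its circumcircle (determinant 0), so they do not violate the test.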

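These characteristics can also be stated as the following properties:

1. Empty-circle property: no other point that is visible from a triangle lies inside that triangle's circumcircle;

2. During construction, the nearest points are always chosen to form triangles, and no triangle edge crosses a constraint segment;

3. The triangulation always has the optimal shape: if the diagonal of the convex quadrilateral formed by any two adjacent triangles can be swapped, swapping it does not increase the smallest of the six interior angles;

4. Wherever in the region construction starts, the final result is the same; that is, the triangulation is unique.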

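The basic rule for generating Delaunay triangles is that the interior of the circumcircle of any Delaunay triangle contains no other point [Delaunay 1934]. Lawson [1972] proposed the max-min angle criterion: for two adjacent triangles forming a convex quadrilateral, swapping the diagonal does not increase the smallest of the six interior angles. Lawson [1977] proposed a local optimization procedure (LOP).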
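A minimal sketch of Lawson's max-min angle criterion for a single convex quadrilateral, deciding whether its diagonal should be swapped. The names `angleAt`, `minAngle` and `shouldFlip` are hypothetical, and the quadrilateral is assumed convex with vertices given in order. Applying this flip repeatedly to interior edges until none changes is the idea behind the local optimization procedure; the sketch only shows the decision for one edge.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

struct Pt { double x, y; };

// Interior angle at vertex p of triangle (p, q, r), in radians.
double angleAt(Pt p, Pt q, Pt r) {
    double ux = q.x - p.x, uy = q.y - p.y, vx = r.x - p.x, vy = r.y - p.y;
    return std::atan2(std::fabs(ux * vy - uy * vx), ux * vx + uy * vy);
}

double minAngle(Pt p, Pt q, Pt r) {
    return std::min({angleAt(p, q, r), angleAt(q, r, p), angleAt(r, p, q)});
}

// Convex quadrilateral a-b-c-d (in order), currently split by diagonal ac into
// triangles abc and acd. Swap to diagonal bd only if that strictly increases
// the smallest of the six interior angles.
bool shouldFlip(Pt a, Pt b, Pt c, Pt d) {
    double current = std::min(minAngle(a, b, c), minAngle(a, c, d));
    double flipped = std::min(minAngle(a, b, d), minAngle(b, c, d));
    return flipped > current;
}
```

For the kite (-2,0), (0,-1), (2,0), (0,1), the long diagonal produces triangles with a smallest angle of about 26.6°, while the short diagonal raises it to about 53.1°, so the flip is taken; for a square both diagonals give 45° and no flip is needed.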

#include <opencv/cv.h>

#include <opencv/highgui.h>

#include <cstdio>

#include <cstdlib>

/* this program demonstrates iterative construction of

the Delaunay triangulation and Voronoi tessellation */

CvSubdiv2D* init_delaunay( CvMemStorage* storage,

CvRect rect )

{

CvSubdiv2D* subdiv;

subdiv = cvCreateSubdiv2D( CV_SEQ_KIND_SUBDIV2D, sizeof(*subdiv),

sizeof(CvSubdiv2DPoint),

sizeof(CvQuadEdge2D),

storage );

cvInitSubdivDelaunay2D( subdiv, rect );

return subdiv;

}

void draw_subdiv_point( IplImage* img, CvPoint2D32f fp, CvScalar color )

{

cvCircle( img, cvPoint(cvRound(fp.x), cvRound(fp.y)), 3, color, CV_FILLED, 8, 0 );

}

void draw_subdiv_edge( IplImage* img, CvSubdiv2DEdge edge, CvScalar color )

{

CvSubdiv2DPoint* org_pt;

CvSubdiv2DPoint* dst_pt;

CvPoint2D32f org;

CvPoint2D32f dst;

CvPoint iorg, idst;

org_pt = cvSubdiv2DEdgeOrg(edge);

dst_pt = cvSubdiv2DEdgeDst(edge);

if( org_pt && dst_pt )

{

org = org_pt->pt;

dst = dst_pt->pt;

iorg = cvPoint( cvRound( org.x ), cvRound( org.y ));

idst = cvPoint( cvRound( dst.x ), cvRound( dst.y ));

cvLine( img, iorg, idst, color, 1, CV_AA, 0 );

}

}

void draw_subdiv( IplImage* img, CvSubdiv2D* subdiv,

CvScalar delaunay_color, CvScalar voronoi_color )

{

CvSeqReader reader;

int i, total = subdiv->edges->total;

int elem_size = subdiv->edges->elem_size;

cvStartReadSeq( (CvSeq*)(subdiv->edges), &reader, 0 );

for( i = 0; i < total; i++ )

{

CvQuadEdge2D* edge = (CvQuadEdge2D*)(reader.ptr);

if( CV_IS_SET_ELEM( edge ))

{

draw_subdiv_edge( img, (CvSubdiv2DEdge)edge + 1, voronoi_color );

draw_subdiv_edge( img, (CvSubdiv2DEdge)edge, delaunay_color );

}

CV_NEXT_SEQ_ELEM( elem_size, reader );

}

}

void locate_point( CvSubdiv2D* subdiv, CvPoint2D32f fp, IplImage* img,

CvScalar active_color )

{

CvSubdiv2DEdge e;

CvSubdiv2DEdge e0 = 0;

CvSubdiv2DPoint* p = 0;

cvSubdiv2DLocate( subdiv, fp, &e0, &p );

if( e0 )

{

e = e0;

do

{

draw_subdiv_edge( img, e, active_color );

e = cvSubdiv2DGetEdge(e,CV_NEXT_AROUND_LEFT);

}

while( e != e0 );

}

draw_subdiv_point( img, fp, active_color );

}

void draw_subdiv_facet( IplImage* img, CvSubdiv2DEdge edge )

{

CvSubdiv2DEdge t = edge;

int i, count = 0;

CvPoint* buf = 0;

// count number of edges in facet

do

{

count++;

t = cvSubdiv2DGetEdge( t, CV_NEXT_AROUND_LEFT );

} while (t != edge );

buf = (CvPoint*)malloc( count * sizeof(buf[0]));

// gather points

t = edge;

for( i = 0; i < count; i++ )

{

CvSubdiv2DPoint* pt = cvSubdiv2DEdgeOrg( t );

if( !pt ) break;

buf[i] = cvPoint( cvRound(pt->pt.x), cvRound(pt->pt.y));

t = cvSubdiv2DGetEdge( t, CV_NEXT_AROUND_LEFT );

}

if( i == count )

{

CvSubdiv2DPoint* pt = cvSubdiv2DEdgeDst( cvSubdiv2DRotateEdge( edge, 1 ));

cvFillConvexPoly( img, buf, count, CV_RGB(rand()&255,rand()&255,rand()&255), CV_AA, 0 );

cvPolyLine( img, &buf, &count, 1, 1, CV_RGB(0,0,0), 1, CV_AA, 0);

draw_subdiv_point( img, pt->pt, CV_RGB(0,0,0));

}

free( buf );

}

void paint_voronoi( CvSubdiv2D* subdiv, IplImage* img )

{

CvSeqReader reader;

int i, total = subdiv->edges->total;

int elem_size = subdiv->edges->elem_size;

cvCalcSubdivVoronoi2D( subdiv );

cvStartReadSeq( (CvSeq*)(subdiv->edges), &reader, 0 );

for( i = 0; i < total; i++ )

{

CvQuadEdge2D* edge = (CvQuadEdge2D*)(reader.ptr);

if( CV_IS_SET_ELEM( edge ))

{

CvSubdiv2DEdge e = (CvSubdiv2DEdge)edge;

// left

draw_subdiv_facet( img, cvSubdiv2DRotateEdge( e, 1 ));

// right

draw_subdiv_facet( img, cvSubdiv2DRotateEdge( e, 3 ));

}

CV_NEXT_SEQ_ELEM( elem_size, reader );

}

}

void run(void)

{

char win[] = "source";

int i;

CvRect rect = { 0, 0, 600, 600 };

CvMemStorage* storage;

CvSubdiv2D* subdiv;

IplImage* img;

CvScalar active_facet_color, delaunay_color, voronoi_color, bkgnd_color;

active_facet_color = CV_RGB( 255, 0, 0 );

delaunay_color = CV_RGB( 0,0,0);

voronoi_color = CV_RGB(0, 180, 0);

bkgnd_color = CV_RGB(255,255,255);

img = cvCreateImage( cvSize(rect.width,rect.height), 8, 3 );

cvSet( img, bkgnd_color, 0 );

cvNamedWindow( win, 1 );

storage = cvCreateMemStorage(0);

subdiv = init_delaunay( storage, rect );

printf("Delaunay triangulation will be built now interactively.\n"

"To stop the process, press any key\n\n");

for( i = 0; i < 200; i++ )

{

CvPoint2D32f fp = cvPoint2D32f( (float)(rand()%(rect.width-10)+5),

(float)(rand()%(rect.height-10)+5));

locate_point( subdiv, fp, img, active_facet_color );

cvShowImage( win, img );

if( cvWaitKey( 100 ) >= 0 )

break;

cvSubdivDelaunay2DInsert( subdiv, fp );

cvCalcSubdivVoronoi2D( subdiv );

cvSet( img, bkgnd_color, 0 );

draw_subdiv( img, subdiv, delaunay_color, voronoi_color );

cvShowImage( win, img );

if( cvWaitKey( 100 ) >= 0 )

break;

}

cvSet( img, bkgnd_color, 0 );

paint_voronoi( subdiv, img );

cvShowImage( win, img );

cvWaitKey(0);

cvReleaseMemStorage( &storage );

cvReleaseImage(&img);

cvDestroyWindow( win );

}

int main( int argc, char** argv )

{

run();

return 0;

}