PyTorch Basics (Chapter 6: Regularization in PyTorch)

Course overview:


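Contents

I. Regularization: weight_decay

1. Regularization and the concepts of variance, bias, and noise

2. Regularization strategies (L1, L2)

3. The L2 regularization term in PyTorch: weight decay

II. Batch Normalization

1. The concept of Batch Normalization

2. PyTorch's Batch Normalization 1d/2d/3d implementations

III. Normalization layers

1. Why Normalization?

2. Common Normalization methods: BN, LN, IN and GN

3. Normalization summary

IV. Regularization: dropout

1. The concept of Dropout

2. Notes on Dropout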

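I. Regularization: weight_decay

1. Regularization and the concepts of variance, bias, and noise

Regularization: a set of strategies for reducing variance.

For squared loss, the expected generalization error decomposes into the sum of (squared) bias, variance, and noise: error = bias² + variance + noise.

Bias measures how far the learning algorithm's expected prediction deviates from the true result; it characterizes the fitting ability of the algorithm itself.

Variance measures how much the learning performance changes when the training set (of the same size) changes; it characterizes the effect of perturbations in the data.

Noise expresses the lower bound on the expected generalization error that any learning algorithm can achieve on the current task.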
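
The decomposition can be checked numerically. The sketch below is a toy simulation under assumed settings (true slope 1.5 as in the later code, label noise std 0.5, a least-squares line through the origin as the model, and a single test point X0 = 0.8). It trains the model on many independent training sets and splits the average squared error at X0 into bias², variance and noise. Because this model is correctly specified, bias² comes out close to 0 and the error is dominated by noise plus variance.

```python
import random
import statistics

random.seed(0)
W_TRUE, NOISE_STD, X0 = 1.5, 0.5, 0.8  # assumed toy task: y = 1.5 * x + Gaussian noise


def sample_dataset(n=10):
    """Draw one small training set from the toy task."""
    xs = [random.uniform(-1, 1) for _ in range(n)]
    ys = [W_TRUE * x + random.gauss(0, NOISE_STD) for x in xs]
    return xs, ys


def fit_slope(xs, ys):
    """Least-squares slope of a line through the origin: w = sum(x*y) / sum(x*x)."""
    return sum(x * y for x, y in zip(xs, ys)) / sum(x * x for x in xs)


# predictions at X0 from models trained on many independent training sets
preds = [fit_slope(*sample_dataset()) * X0 for _ in range(500)]
# independent noisy labels observed at X0
labels = [W_TRUE * X0 + random.gauss(0, NOISE_STD) for _ in range(500)]

p_bar, y_bar = statistics.fmean(preds), statistics.fmean(labels)
bias_sq = (p_bar - y_bar) ** 2          # squared bias w.r.t. the mean label (close to the true value 1.2)
variance = statistics.pvariance(preds)  # spread of the predictions across training sets
noise = statistics.pvariance(labels)    # label noise, close to NOISE_STD ** 2 = 0.25
# expected squared error averaged over every (model, label) pair
error = statistics.fmean((p - y) ** 2 for p in preds for y in labels)

print(f"error={error:.4f}  bias^2 + variance + noise={bias_sq + variance + noise:.4f}")
```

Averaging over every (model, label) pair makes the identity exact rather than approximate, which is why the two printed numbers agree.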

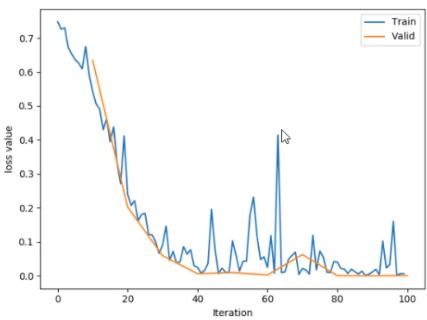

如上图所示,红色的线代表验证集的loss,橙色的线代表训练集的loss

偏差:训练集和真实值之间的误差

方差:训练集与验证集之间的差异

正则化就是用来解决方差过大的问题

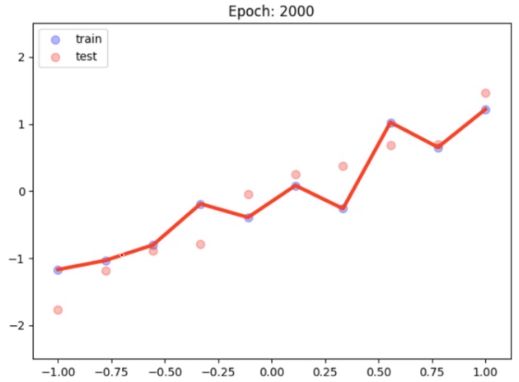

例:以一元线性回归的例子来进一步理解方差的概念,如图可以看曲线很好的拟合了训练集的样本,而该模型在测试集上的表现就会很差,这就典型的高方差,也就是过拟合现象

2.正则化策略(L1、L2)

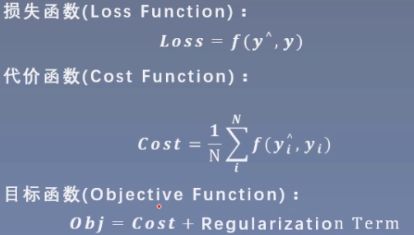

正则化策略:在目标函数中加一项正则项

损失函数:衡量模型输出与真实标签的差异

可以看到,目标函数由两部分组成,cost(模型输出与真实标签的差异)和正则项(用来约束模型的复杂度)

正则化策略L1和L2

L1 Regularization Term (权值的绝对值求和):

L2 Regularization Term(权值的平方求和):![]()

加上正则化后,要求目标函数的cost要小,权值的绝对值或者平方也要小

如图:左边为L1,右边为L2

图中的圆圈是loss,是一个等高线,w1,w2不管取什么值,它落在同一个等高线上,就说明他们的产生的cost是一样的。

途中黑色的矩形和圆形分别代表L1和L2的等高线

矩形:![]()

圆形:![]()

怎样才能让![]() 的值最小呢,图上可以看到,在相同loss下,loss等高线与矩阵的交点最小,此时w1为0,w1为0 代表参数解有稀疏项,也就是有0 的项,这就是为什么说加上L1就可以产生一个稀疏的解,加上L1后参数的解往往会发生在坐标轴上,所以某一些参数就是0,就产生 了稀疏解。


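How can $Obj = Cost + \lambda(w_1^2 + w_2^2)$ be minimized? By the same reasoning, the minimum is where the loss contour touches the circle. Because the circle has no corners, this point usually does not lie on an axis, so L2 shrinks the weights toward 0 without making them exactly 0.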


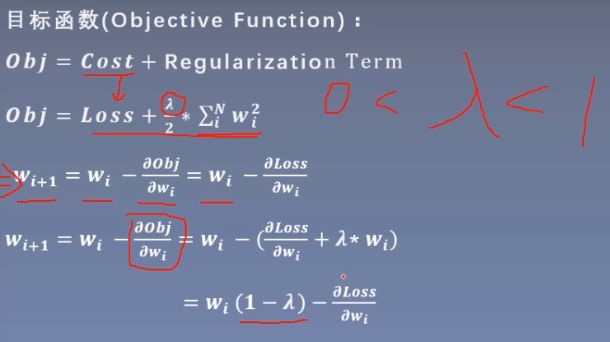
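
The same contrast can be seen algebraically in one dimension. This is a minimal sketch assuming the cost is a simple quadratic 0.5*(w - a)², where a is the weight that minimizes the cost alone (a simplification that corresponds to circular loss contours). The L1 solution is soft-thresholding, so any weight with |a| ≤ λ becomes exactly 0. The L2 penalty (written here as (λ/2)w²) only rescales the weight by 1/(1+λ), so it shrinks but stays non-zero.

```python
import math


def l1_solution(a, lam):
    """argmin_w 0.5*(w - a)**2 + lam*|w|  ->  soft-thresholding."""
    return math.copysign(max(abs(a) - lam, 0.0), a)


def l2_solution(a, lam):
    """argmin_w 0.5*(w - a)**2 + 0.5*lam*w**2  ->  uniform shrinkage a / (1 + lam)."""
    return a / (1 + lam)


lam = 0.5
for a in [0.3, -0.8, 1.5, 0.05]:  # a = unregularized optimum of each weight
    print(f"a={a:+.2f}  L1 -> {l1_solution(a, lam):+.4f}  L2 -> {l2_solution(a, lam):+.4f}")
```

Small weights (0.3 and 0.05 here) are set exactly to 0 by L1, which is the sparsity seen in the figure, while L2 keeps every weight non-zero.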

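3. The L2 regularization term in PyTorch: weight decay

L2 Regularization = weight decay

With the L2 term, the objective becomes $Obj = Loss + \frac{\lambda}{2}\sum_{i}^{N} w_i^2$.

The first $w_{i+1}$ is the weight update without the regularization term:

$$w_{i+1} = w_i - \eta \frac{\partial Loss}{\partial w_i}$$

The second $w_{i+1}$ is the update with the regularization term. After simplification, the coefficient of $w_i$ is $1 - \eta\lambda < 1$, so the weight decays at every step:

$$w_{i+1} = w_i - \eta\left(\frac{\partial Loss}{\partial w_i} + \lambda w_i\right) = w_i(1 - \eta\lambda) - \eta \frac{\partial Loss}{\partial w_i}$$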
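
To connect the formula with the optimizer, the sketch below (toy random data, an assumption for illustration) takes one `torch.optim.SGD` step with `weight_decay` and no momentum, then checks that the result equals the hand-computed $w_i(1 - \eta\lambda) - \eta\,\partial Loss/\partial w_i$.

```python
import torch

torch.manual_seed(0)
lr, wd = 0.1, 1e-2                        # eta and lambda in the formula above
w = torch.randn(3, requires_grad=True)    # toy weight vector
x, y = torch.randn(5, 3), torch.randn(5)  # toy regression data

# one SGD step with weight_decay (momentum=0, so the update can be checked by hand)
opt = torch.optim.SGD([w], lr=lr, weight_decay=wd)
w_before = w.detach().clone()
loss = ((x @ w - y) ** 2).mean()
loss.backward()
grad = w.grad.detach().clone()            # dLoss/dw, without the decay term
opt.step()

# manual update: w_{i+1} = w_i * (1 - eta * lambda) - eta * dLoss/dw_i
w_manual = w_before * (1 - lr * wd) - lr * grad
print(torch.allclose(w.detach(), w_manual, atol=1e-6))
```

So `weight_decay=λ` corresponds to adding (λ/2)·Σw² to the loss. With `momentum > 0`, as in the training script, the decayed gradient is additionally accumulated in the momentum buffer, so the one-step formula no longer holds exactly.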

Code: a one-variable regression model (an MLP)

In PyTorch, weight decay is set through the optimizer's `weight_decay` parameter.

```python
import torch
import torch.nn as nn
import matplotlib.pyplot as plt
from torch.utils.tensorboard import SummaryWriter
from tools.common_tools import set_seed  # helper from the course repo: fixes the random seeds

set_seed(1)  # set the random seed

n_hidden = 200
max_iter = 2000
disp_interval = 200
lr_init = 0.01

# ============================ step 1/5 data ============================
def gen_data(num_data=10, x_range=(-1, 1)):
    w = 1.5
    train_x = torch.linspace(*x_range, num_data).unsqueeze_(1)
    train_y = w*train_x + torch.normal(0, 0.5, size=train_x.size())
    test_x = torch.linspace(*x_range, num_data).unsqueeze_(1)
    test_y = w*test_x + torch.normal(0, 0.3, size=test_x.size())
    return train_x, train_y, test_x, test_y

train_x, train_y, test_x, test_y = gen_data(x_range=(-1, 1))

# ============================ step 2/5 model ============================
class MLP(nn.Module):
    def __init__(self, neural_num):
        super(MLP, self).__init__()
        self.linears = nn.Sequential(
            nn.Linear(1, neural_num),
            nn.ReLU(inplace=True),
            nn.Linear(neural_num, neural_num),
            nn.ReLU(inplace=True),
            nn.Linear(neural_num, neural_num),
            nn.ReLU(inplace=True),
            nn.Linear(neural_num, 1),
        )

    def forward(self, x):
        return self.linears(x)

# build two models: one trained with weight_decay, one without
net_normal = MLP(neural_num=n_hidden)
net_weight_decay = MLP(neural_num=n_hidden)

# ============================ step 3/5 optimizers ============================
optim_normal = torch.optim.SGD(net_normal.parameters(), lr=lr_init, momentum=0.9)
# weight_decay is applied by the optimizer
optim_wdecay = torch.optim.SGD(net_weight_decay.parameters(), lr=lr_init, momentum=0.9, weight_decay=1e-2)

# ============================ step 4/5 loss function ============================
loss_func = torch.nn.MSELoss()

# ============================ step 5/5 training loop ============================
writer = SummaryWriter(comment='_test_tensorboard', filename_suffix="12345678")

for epoch in range(max_iter):
    # forward
    pred_normal, pred_wdecay = net_normal(train_x), net_weight_decay(train_x)
    loss_normal, loss_wdecay = loss_func(pred_normal, train_y), loss_func(pred_wdecay, train_y)

    optim_normal.zero_grad()
    optim_wdecay.zero_grad()

    loss_normal.backward()
    loss_wdecay.backward()

    optim_normal.step()
    optim_wdecay.step()

    if (epoch+1) % disp_interval == 0:
        # visualize weights and gradients in TensorBoard
        for name, layer in net_normal.named_parameters():
            writer.add_histogram(name + '_grad_normal', layer.grad, epoch)
            writer.add_histogram(name + '_data_normal', layer, epoch)

        for name, layer in net_weight_decay.named_parameters():
            writer.add_histogram(name + '_grad_weight_decay', layer.grad, epoch)
            writer.add_histogram(name + '_data_weight_decay', layer, epoch)

        test_pred_normal, test_pred_wdecay = net_normal(test_x), net_weight_decay(test_x)

        # plot
        plt.scatter(train_x.data.numpy(), train_y.data.numpy(), c='blue', s=50, alpha=0.3, label='train')
        plt.scatter(test_x.data.numpy(), test_y.data.numpy(), c='red', s=50, alpha=0.3, label='test')
        plt.plot(test_x.data.numpy(), test_pred_normal.data.numpy(), 'r-', lw=3, label='no weight decay')
        plt.plot(test_x.data.numpy(), test_pred_wdecay.data.numpy(), 'b--', lw=3, label='weight decay')
        plt.text(-0.25, -1.5, 'no weight decay loss={:.6f}'.format(loss_normal.item()), fontdict={'size': 15, 'color': 'red'})
        plt.text(-0.25, -2, 'weight decay loss={:.6f}'.format(loss_wdecay.item()), fontdict={'size': 15, 'color': 'red'})

        plt.ylim((-2.5, 2.5))
        plt.legend(loc='upper left')
        plt.title("Epoch: {}".format(epoch+1))
        plt.show()
        plt.close()
```

Output:

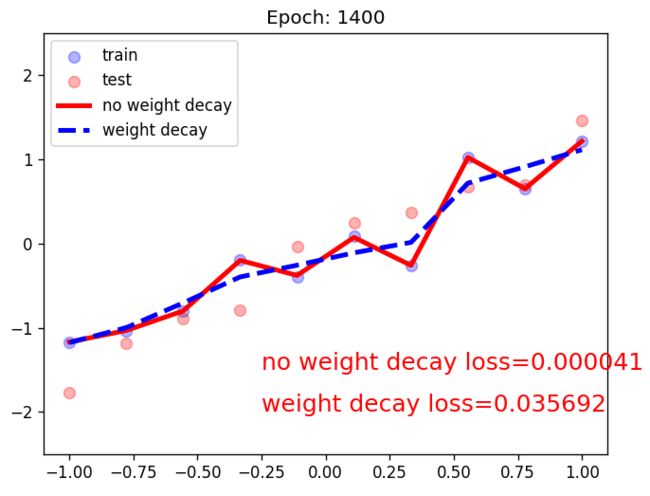

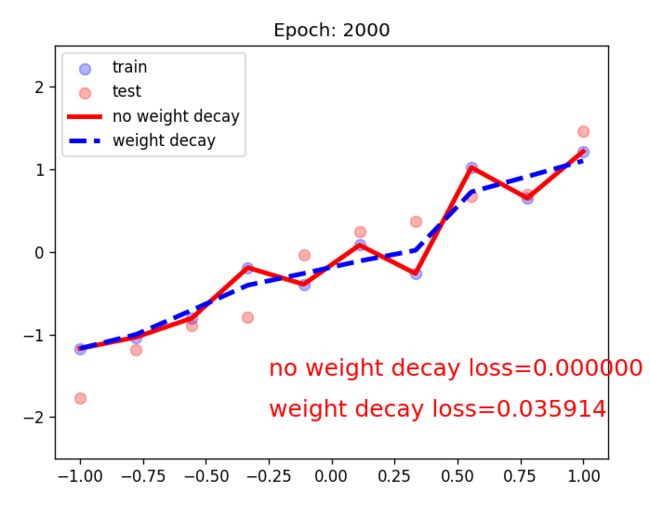

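After 1000 iterations, the training loss of the red line (no weight decay) is almost 0, while that of the blue line (weight decay) is about 0.035. Although the red line's training loss is very low, it overfits.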

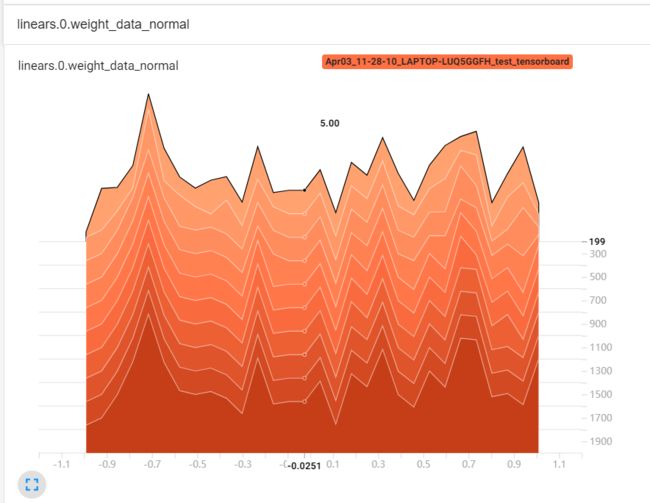

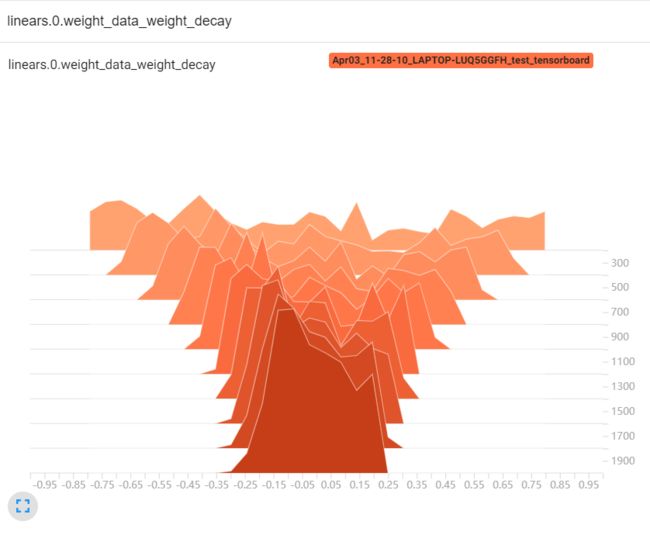

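Let's visualize how the weights change.

Look at the weights of the first fully connected layer:

Without weight_decay, the weights stay between -1 and 1 and barely change.

With weight_decay, the weights become smaller and smaller.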

二、Bacth Normalization

1.Batch Normalization概念

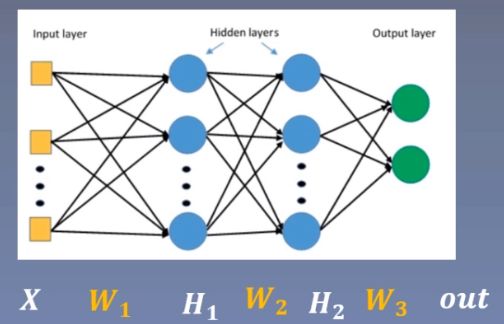

Batch Normalization:批标准化,不同样本在同一特征上的标准化

批:一批数据,通常为mini-batch

标准化:0均值,1标准差

优点:

- 可以用更大学习率,加速模型收敛

- 可以不用精心设计权值初始化

- 可以不用dropout或较小的dropout

- 可以不用L2或者较小的weight_decay

- 可以不用LRN(Local response normalization)

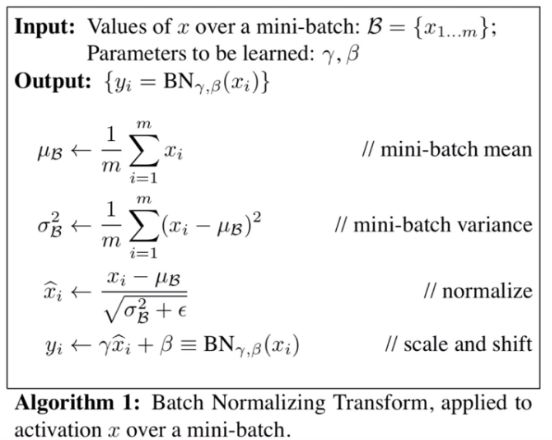

Computation:

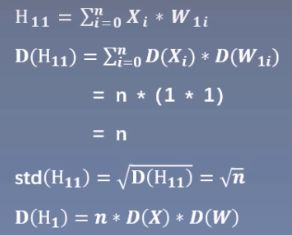
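
The computation can be reproduced by hand. The sketch below uses a toy random mini-batch (an assumption) and compares `nn.BatchNorm1d` in training mode with the per-feature steps: batch mean, biased batch variance, normalization with `eps`, then the affine transform with gamma (`weight`) and beta (`bias`).

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
x = torch.randn(16, 4) * 3 + 5          # mini-batch: 16 samples, 4 features
bn = nn.BatchNorm1d(num_features=4)      # training mode by default; gamma=1, beta=0 at init
y = bn(x)

mu = x.mean(dim=0)                       # per-feature mean over the mini-batch
var = ((x - mu) ** 2).mean(dim=0)        # biased variance is used for normalization
x_hat = (x - mu) / torch.sqrt(var + bn.eps)
y_manual = bn.weight * x_hat + bn.bias   # scale and shift: gamma * x_hat + beta

print(torch.allclose(y, y_manual, atol=1e-5))
print(y.mean(dim=0))                     # close to 0 for every feature
```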

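BN was proposed to solve ICS (Internal Covariate Shift). So what is ICS?

When we discussed weight initialization, we saw that as the network gets deeper, the scale of the layer outputs (and hence of the gradients) can grow layer by layer until the gradients explode, making the network hard to train.

ICS is the same kind of problem: if the scale of the data distribution shifts from layer to layer, the network becomes hard to train.

Why, then, does adding BN remove the need to carefully design the weight initialization?

Let's observe it in code: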

from tools.common_tools import set_seed

set_seed(1) # 设置随机种子

class MLP(nn.Module):

def __init__(self, neural_num, layers=100):

super(MLP, self).__init__()

self.linears = nn.ModuleList([nn.Linear(neural_num, neural_num, bias=False) for i in range(layers)])

self.bns = nn.ModuleList([nn.BatchNorm1d(neural_num) for i in range(layers)])

self.neural_num = neural_num

def forward(self, x):

for (i, linear), bn in zip(enumerate(self.linears), self.bns):

x = linear(x)

# x = bn(x)

x = torch.relu(x)

if torch.isnan(x.std()):

print("output is nan in {} layers".format(i))

break

print("layers:{}, std:{}".format(i, x.std().item()))

return x

def initialize(self):

for m in self.modules():

if isinstance(m, nn.Linear):

# method 1

nn.init.normal_(m.weight.data, std=1) # normal: mean=0, std=1

# method 2 kaiming

# nn.init.kaiming_normal_(m.weight.data)

neural_nums = 256

layer_nums = 100

batch_size = 16

net = MLP(neural_nums, layer_nums)

# net.initialize()

inputs = torch.randn((batch_size, neural_nums)) # normal: mean=0, std=1

output = net(inputs)

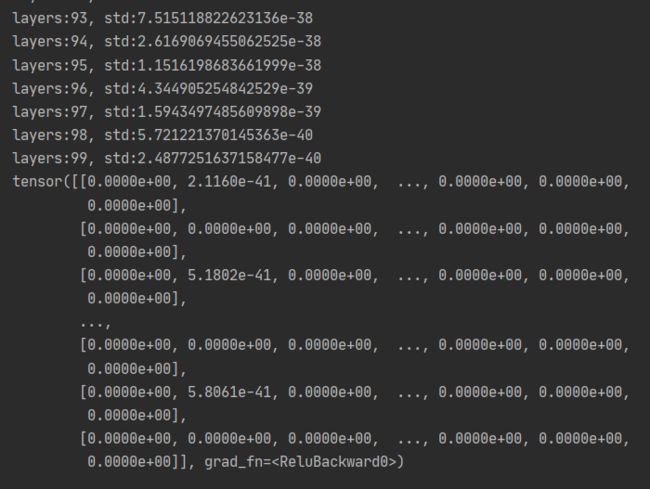

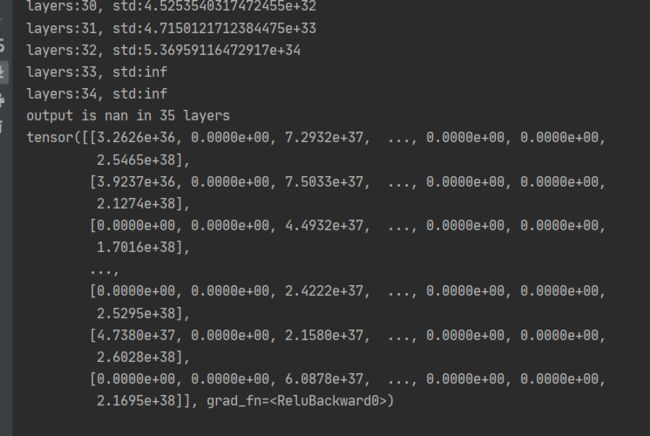

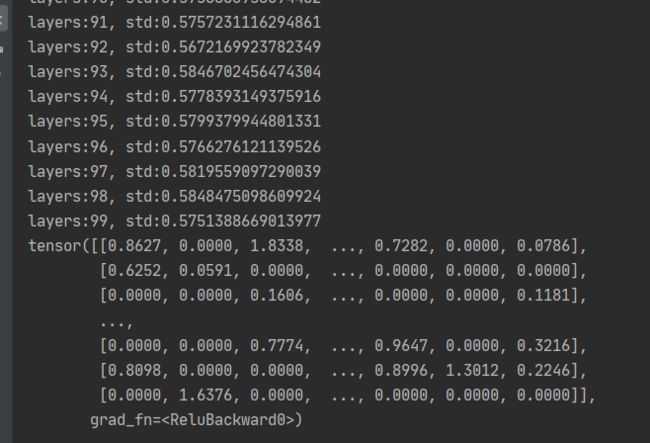

print(output)不进行权值初始化,导致梯度消失

我们进行权值初始化来观察一下梯度的变化情况

net.initialize()输出结果:

采用标准正态分布的初始化方法,可以发现发生了梯度爆炸

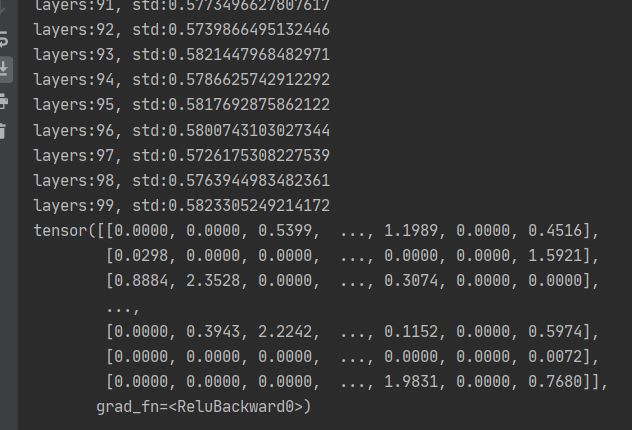

因为此时用到的激活函数是RELU,所以使用kaiming初始化方法

# method 2 kaiming

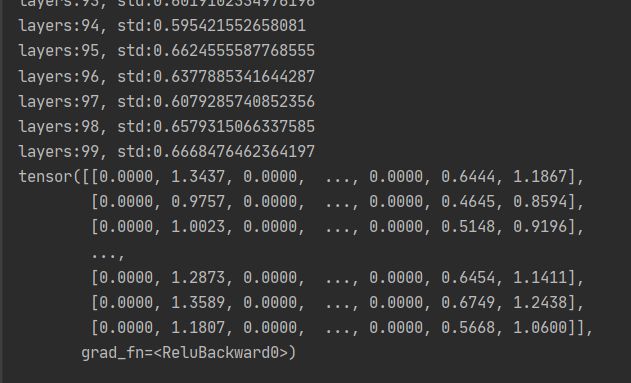

nn.init.kaiming_normal_(m.weight.data)输出结果:

可以看到梯度在0.6左右

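What does the scale of the network's outputs look like if we add BN layers? Uncomment the BN line in `forward`:

```python
x = bn(x)
```

With the BN layers added, the scale of the data is preserved better.

Now suppose we skip weight initialization entirely and use only BN:

The data scale is still well preserved. So with BN layers, the weight initialization no longer needs careful design, or even any design at all.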

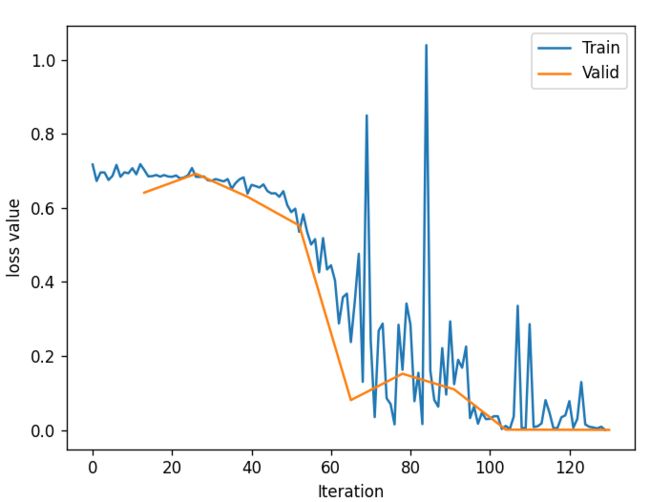

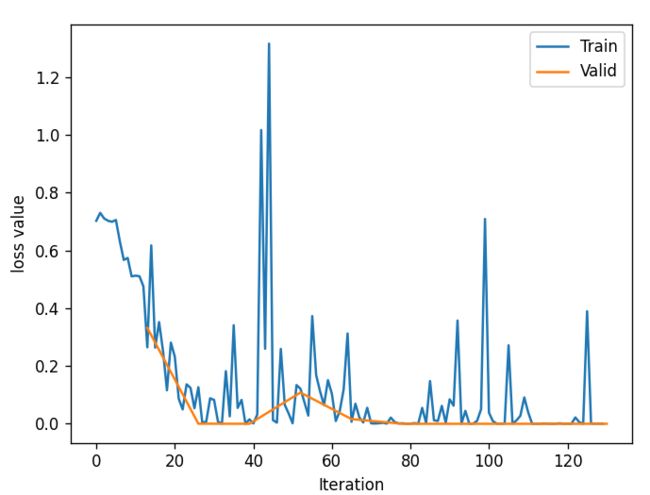

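Application: using BN in the RMB (Chinese banknote) binary classification task.

Loss curve without BN and without weight initialization

Loss curve without BN but with weight initialization

Loss curve with BN

In summary: adding BN reduces the sudden loss spikes.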

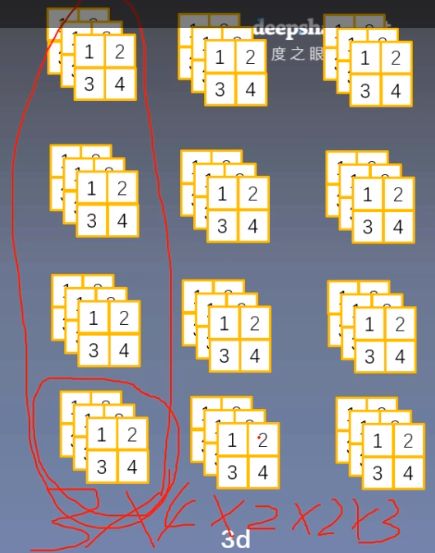

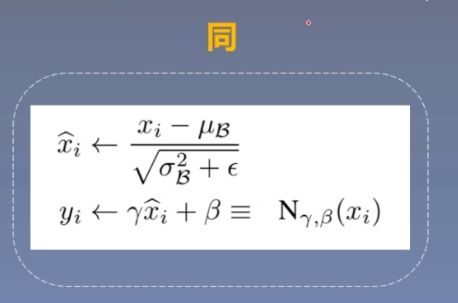

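2. PyTorch's Batch Normalization 1d/2d/3d implementations

The `_BatchNorm` base class

Parameters:

- num_features: the number of features of one sample (the most important parameter)

- eps: a correction term added to the denominator, usually a small number, to avoid division by zero

- momentum: the coefficient of the exponentially weighted average used to estimate the running mean/var

- affine: whether to apply a learnable affine transform (gamma, beta)

- track_running_stats: whether to track running estimates of the mean/var; if True they are used in eval (test) mode, if False batch statistics are always used

Subclasses:

- nn.BatchNorm1d

- nn.BatchNorm2d

- nn.BatchNorm3d

Main attributes:

- running_mean: running estimate of the mean

- running_var: running estimate of the variance

- weight: gamma in the affine transform

- bias: beta in the affine transform

Training: each mini-batch is normalized with its own mean and variance, while running_mean/running_var are updated with an exponentially weighted average: running = (1 - momentum) * running + momentum * batch statistic.

Testing: the stored running_mean/running_var are used as the current statistics.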
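
The difference between the two modes can be verified directly. In this sketch (toy random data, an assumption) one training-mode forward pass updates the running statistics with the exponentially weighted rule, starting from running_mean = 0 and running_var = 1. Note that PyTorch uses the unbiased batch variance for `running_var`. In eval mode the output is then computed from the stored running statistics instead of the batch statistics.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
momentum = 0.3
bn = nn.BatchNorm1d(num_features=3, momentum=momentum)
x = torch.randn(8, 3) * 2 + 1

bn.train()
bn(x)  # training step: normalizes with batch stats and updates the running stats

# running = (1 - momentum) * running + momentum * batch_stat, from mean=0, var=1
expected_mean = (1 - momentum) * 0 + momentum * x.mean(dim=0)
expected_var = (1 - momentum) * 1 + momentum * x.var(dim=0)  # unbiased variance
print(torch.allclose(bn.running_mean, expected_mean, atol=1e-6))
print(torch.allclose(bn.running_var, expected_var, atol=1e-5))

bn.eval()
y_eval = bn(x)  # eval mode: uses running stats, not the batch stats
y_manual = (x - bn.running_mean) / torch.sqrt(bn.running_var + bn.eps) * bn.weight + bn.bias
print(torch.allclose(y_eval, y_manual, atol=1e-5))
```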

(1)nn.BatchNorm1d input = B * 特征数 * 1d特征

四个主要参数在特征维度上进行计算

代码:

# ======================================== nn.BatchNorm1d

flag = 1

# flag = 0

if flag:

batch_size = 3

num_features = 5

momentum = 0.3

#图中一个黄色方框的大小

features_shape = (1)

feature_map = torch.ones(features_shape) # 1D

feature_maps = torch.stack([feature_map*(i+1) for i in range(num_features)], dim=0) # 2D

feature_maps_bs = torch.stack([feature_maps for i in range(batch_size)], dim=0) # 3D

print("input data:\n{} shape is {}".format(feature_maps_bs, feature_maps_bs.shape))

bn = nn.BatchNorm1d(num_features=num_features, momentum=momentum)

running_mean, running_var = 0, 1

for i in range(2):

outputs = bn(feature_maps_bs)

print("\niteration:{}, running mean: {} ".format(i, bn.running_mean))

print("iteration:{}, running var:{} ".format(i, bn.running_var))

mean_t, var_t = 2, 0

running_mean = (1 - momentum) * running_mean + momentum * mean_t

running_var = (1 - momentum) * running_var + momentum * var_t

print("iteration:{}, 第二个特征的running mean: {} ".format(i, running_mean))

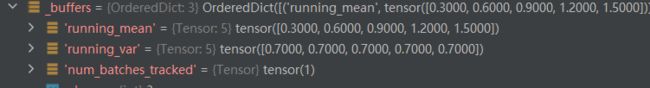

print("iteration:{}, 第二个特征的running var:{}".format(i, running_var))调试结果:

在每个特征维度计算均值、方差

(2)nn.BatchNorm1d input = B * 特征数 * 2d特征

代码:

# ======================================== nn.BatchNorm2d

flag = 1

# flag = 0

if flag:

batch_size = 3

num_features = 6

momentum = 0.3

features_shape = (2, 2)

feature_map = torch.ones(features_shape) # 2D

feature_maps = torch.stack([feature_map*(i+1) for i in range(num_features)], dim=0) # 3D

feature_maps_bs = torch.stack([feature_maps for i in range(batch_size)], dim=0) # 4D

print("input data:\n{} shape is {}".format(feature_maps_bs, feature_maps_bs.shape))

bn = nn.BatchNorm2d(num_features=num_features, momentum=momentum)

running_mean, running_var = 0, 1

for i in range(2):

outputs = bn(feature_maps_bs)

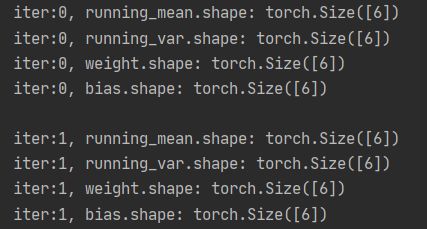

print("\niter:{}, running_mean.shape: {}".format(i, bn.running_mean.shape))

print("iter:{}, running_var.shape: {}".format(i, bn.running_var.shape))

print("iter:{}, weight.shape: {}".format(i, bn.weight.shape))

print("iter:{}, bias.shape: {}".format(i, bn.bias.shape))输出结果:

(3) nn.BatchNorm3d: input = B * num_features * 3d feature

Code:

```python
import torch
import torch.nn as nn

# ======================================== nn.BatchNorm3d
flag = 1
# flag = 0

if flag:
    batch_size = 3
    num_features = 4
    momentum = 0.3

    features_shape = (2, 2, 3)

    feature = torch.ones(features_shape)  # 3D
    feature_map = torch.stack([feature * (i + 1) for i in range(num_features)], dim=0)  # 4D
    feature_maps = torch.stack([feature_map for i in range(batch_size)], dim=0)  # 5D
    print("input data:\n{} shape is {}".format(feature_maps, feature_maps.shape))

    bn = nn.BatchNorm3d(num_features=num_features, momentum=momentum)

    running_mean, running_var = 0, 1

    for i in range(2):
        outputs = bn(feature_maps)

        print("\niter:{}, running_mean.shape: {}".format(i, bn.running_mean.shape))
        print("iter:{}, running_var.shape: {}".format(i, bn.running_var.shape))
        print("iter:{}, weight.shape: {}".format(i, bn.weight.shape))
        print("iter:{}, bias.shape: {}".format(i, bn.bias.shape))
```

Output:

III. Normalization layers

1. Why Normalization?

Internal Covariate Shift (ICS): an abnormal data scale or distribution makes training difficult.

Normalization constrains the scale and the distribution of the data.

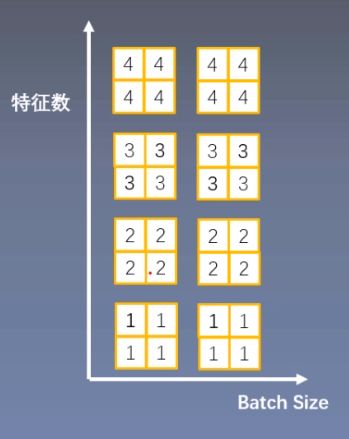

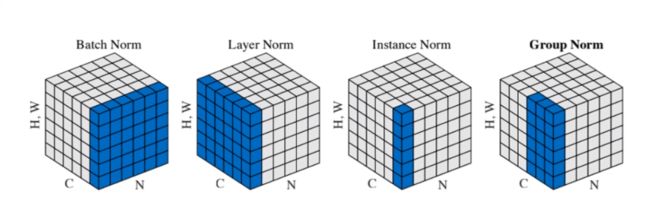

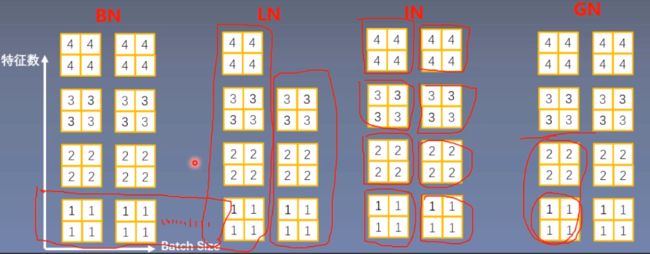

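2. Common Normalization methods: BN, LN, IN and GN

Common Normalization methods:

- Batch Normalization (BN)

- Layer Normalization (LN)

- Instance Normalization (IN)

- Group Normalization (GN)

(1) Layer Normalization

Motivation: BN is not suitable for variable-length networks such as RNNs (where the number of neurons differs from sample to sample).

Idea: compute the mean and variance layer by layer, i.e., over all features within the same sample.

Notes:

- there are no running_mean and running_var any more

- gamma and beta are element-wise

For such a variable-length network, BN could only compute the mean and variance of the first three positions; beyond that, the missing neurons would bias the statistics.

nn.LayerNorm

Main parameters:

- normalized_shape: the shape of the layer's features

- eps: correction term in the denominator

- elementwise_affine: whether to apply an affine transform (element-wise)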
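
To make "statistics within one sample" concrete, this sketch (toy random input of assumed shape B * C * H * W) reproduces `nn.LayerNorm` by hand: one mean and one variance per sample over (C, H, W), followed by the element-wise gamma and beta.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
x = torch.randn(2, 3, 4, 4)              # B * C * H * W
ln = nn.LayerNorm(x.shape[1:])           # normalized_shape = (C, H, W)
y = ln(x)

dims = (1, 2, 3)                         # statistics over all features of one sample
mu = x.mean(dim=dims, keepdim=True)      # one mean per sample: shape [2, 1, 1, 1]
var = ((x - mu) ** 2).mean(dim=dims, keepdim=True)
y_manual = (x - mu) / torch.sqrt(var + ln.eps) * ln.weight + ln.bias

print(torch.allclose(y, y_manual, atol=1e-5))
print(ln.weight.shape)                   # element-wise gamma: same shape as normalized_shape
print(hasattr(ln, "running_mean"))       # no running statistics are kept
```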

代码:

# ======================================== nn.layer norm

flag = 1

# flag = 0

if flag:

batch_size = 2

num_features = 3

features_shape = (2, 2)

feature_map = torch.ones(features_shape) # 2D

feature_maps = torch.stack([feature_map * (i + 1) for i in range(num_features)], dim=0) # 3D

feature_maps_bs = torch.stack([feature_maps for i in range(batch_size)], dim=0) # 4D

# feature_maps_bs shape is [8, 6, 3, 4], B * C * H * W

ln = nn.LayerNorm(feature_maps_bs.size()[1:], elementwise_affine=True)

# ln = nn.LayerNorm(feature_maps_bs.size()[1:], elementwise_affine=False)

# ln = nn.LayerNorm([6, 3, 4])

# ln = nn.LayerNorm([6, 3])

output = ln(feature_maps_bs)

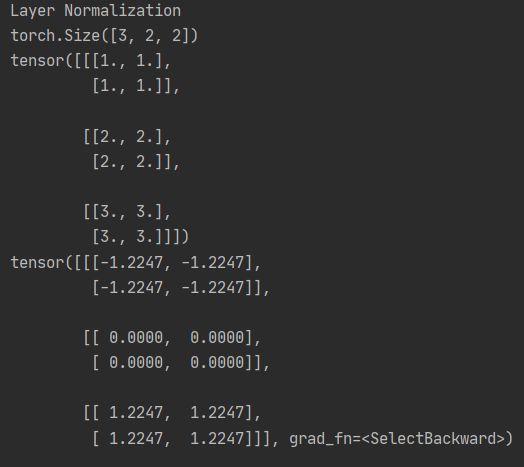

print("Layer Normalization")

print(ln.weight.shape)

print(feature_maps_bs[0, ...])

print(output[0, ...])输出结果:

(2)Instance Normalization

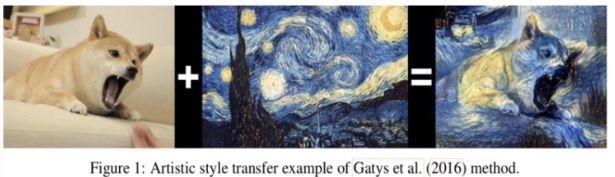

起因:BN在图像生成(Image Generation)中不适用

思路:逐Instance(channel)计算均值和方差

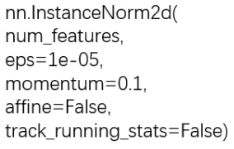

nn.InstanceNorm

主要参数:

- num_features:一个样本特征数量(最重要)

- eps:分母修正项

- momentum:指数加权平均估计当前mean/var

- affine:是否需要affine transform

- track_running_states:是训练状态,还是测试状态
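
The per-instance statistics can be checked the same way. This sketch (toy random input, assumed shape) reproduces `nn.InstanceNorm2d` with its defaults: one mean and variance per (sample, channel) pair over H and W, and no affine transform.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
x = torch.randn(2, 3, 4, 4)                # B * C * H * W
inorm = nn.InstanceNorm2d(num_features=3)  # defaults: affine=False, track_running_stats=False
y = inorm(x)

dims = (2, 3)                              # statistics over H, W only
mu = x.mean(dim=dims, keepdim=True)        # one mean per (sample, channel): shape [2, 3, 1, 1]
var = ((x - mu) ** 2).mean(dim=dims, keepdim=True)
y_manual = (x - mu) / torch.sqrt(var + inorm.eps)

print(torch.allclose(y, y_manual, atol=1e-5))
print(inorm.weight)                        # None: no gamma/beta when affine=False
```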

代码:

# ======================================== nn.instance norm 2d

# flag = 1

flag = 0

if flag:

batch_size = 3

num_features = 3

momentum = 0.3

features_shape = (2, 2)

feature_map = torch.ones(features_shape) # 2D

feature_maps = torch.stack([feature_map * (i + 1) for i in range(num_features)], dim=0) # 3D

feature_maps_bs = torch.stack([feature_maps for i in range(batch_size)], dim=0) # 4D

print("Instance Normalization")

print("input data:\n{} shape is {}".format(feature_maps_bs, feature_maps_bs.shape))

instance_n = nn.InstanceNorm2d(num_features=num_features, momentum=momentum)

for i in range(1):

outputs = instance_n(feature_maps_bs)

print(outputs)

# print("\niter:{}, running_mean.shape: {}".format(i, bn.running_mean.shape))

# print("iter:{}, running_var.shape: {}".format(i, bn.running_var.shape))

# print("iter:{}, weight.shape: {}".format(i, bn.weight.shape))

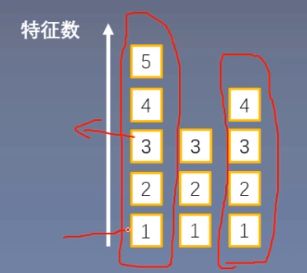

# print("iter:{}, bias.shape: {}".format(i, bn.bias.shape))(3)Group Normalization

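Motivation: with small batches, the statistics estimated by BN are inaccurate.

Idea: when there is not enough data, make up for it with channels (compute the statistics over groups of channels).

Notes:

- there are no running_mean and running_var any more

- gamma and beta are per channel

Use case: large-model tasks (small batch size)

nn.GroupNorm

Main parameters:

- num_groups: the number of groups

- num_channels: the number of channels (features)

- eps: correction term in the denominator

- affine: whether to apply an affine transform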
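
"Make up for it with channels" can be spelled out as a reshape. In this sketch (toy random input, assumed sizes B=2, C=4, G=2) the channels of each sample are split into G groups, statistics are computed per (sample, group), and the per-channel gamma and beta are applied afterwards.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
B, C, H, W, G = 2, 4, 3, 3, 2
x = torch.randn(B, C, H, W)
gn = nn.GroupNorm(num_groups=G, num_channels=C)
y = gn(x)

xg = x.reshape(B, G, C // G, H, W)         # split the channels of each sample into G groups
mu = xg.mean(dim=(2, 3, 4), keepdim=True)  # one mean per (sample, group)
var = ((xg - mu) ** 2).mean(dim=(2, 3, 4), keepdim=True)
x_hat = ((xg - mu) / torch.sqrt(var + gn.eps)).reshape(B, C, H, W)
y_manual = x_hat * gn.weight.view(1, C, 1, 1) + gn.bias.view(1, C, 1, 1)  # per-channel gamma, beta

print(torch.allclose(y, y_manual, atol=1e-5))
print(gn.weight.shape)
```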

Code:

```python
import torch
import torch.nn as nn

# ======================================== nn.GroupNorm
flag = 1
# flag = 0

if flag:
    batch_size = 2
    num_features = 4
    num_groups = 4  # try 3: raises an error because the number of channels must be divisible by num_groups

    features_shape = (2, 2)

    feature_map = torch.ones(features_shape)  # 2D
    feature_maps = torch.stack([feature_map * (i + 1) for i in range(num_features)], dim=0)  # 3D
    feature_maps_bs = torch.stack([feature_maps * (i + 1) for i in range(batch_size)], dim=0)  # 4D

    gn = nn.GroupNorm(num_groups, num_features)
    outputs = gn(feature_maps_bs)

    print("Group Normalization")
    print(gn.weight.shape)
    print(outputs[0])
```

3. Normalization summary

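Summary: BN, LN, IN and GN were all proposed to overcome Internal Covariate Shift (ICS). For a B * C * H * W input they differ only in which dimensions the mean and variance are computed over: BN over (B, H, W) for each channel, LN over (C, H, W) for each sample, IN over (H, W) for each sample and channel, and GN over (C/G, H, W) for each sample and each group of C/G channels.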
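
One way to see how the four methods relate is that GN sits between LN and IN. The sketch below (toy random input, assumed shapes) checks two special cases at initialization, when every affine transform is still the identity: GroupNorm with a single group matches LayerNorm over (C, H, W), and GroupNorm with one channel per group matches InstanceNorm2d.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
x = torch.randn(2, 6, 5, 5)  # B * C * H * W
C = x.shape[1]

# at initialization gamma=1 and beta=0 everywhere, so the outputs are directly comparable
gn_one_group = nn.GroupNorm(num_groups=1, num_channels=C)    # one group = all channels of a sample
ln = nn.LayerNorm(list(x.shape[1:]))                         # normalize over (C, H, W)
gn_per_channel = nn.GroupNorm(num_groups=C, num_channels=C)  # one channel per group
inorm = nn.InstanceNorm2d(C)                                 # normalize over (H, W)

same_as_ln = torch.allclose(gn_one_group(x), ln(x), atol=1e-5)
same_as_in = torch.allclose(gn_per_channel(x), inorm(x), atol=1e-5)
print(same_as_ln, same_as_in)
```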

四、正则化之dropout

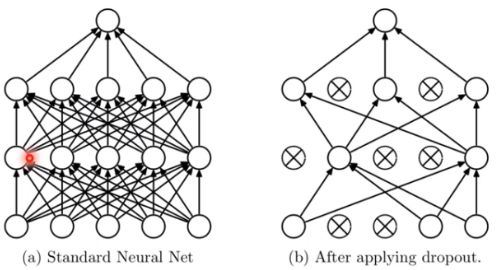

1.Dropout概念

Dropout:随机失活

随机:dropout probability,按一定的概率失去活性

失活:weight = 0

比如:某一个神经元特别依赖上一层的一个神经元,权重为0.5,其他对该神经元的依赖仅0.1,只要被依赖的神经元出现,该过于依赖它的神经元就会被激活。我们加了dropout之后,因为上层的神经元会随机失活,下层神经元就不会过于依赖某一个上层神经元。

nn.Dropout

Function: a Dropout layer

Parameters:

- p: the drop probability, i.e., the probability that an element is zeroed
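
The sketch below (an all-ones toy input, assumed for clarity) shows how `nn.Dropout` behaves in the two modes. In training mode roughly a fraction p of the elements are zeroed and every survivor is rescaled to 1/(1-p). In eval mode the layer passes its input through unchanged, which is why the training script below calls `.eval()` before testing.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
p = 0.3
drop = nn.Dropout(p=p)
x = torch.ones(10000)

drop.train()  # training mode: elements are zeroed at random
y_train = drop(x)
drop_rate = (y_train == 0).float().mean().item()
kept = y_train[y_train != 0]
print(f"fraction zeroed: {drop_rate:.3f}")  # close to p
print("surviving values:", kept.unique())   # all equal to 1 / (1 - p)

drop.eval()   # eval mode: dropout is the identity
y_eval = drop(x)
print(torch.equal(y_eval, x))
```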


Code:

```python
import torch
import torch.nn as nn
import matplotlib.pyplot as plt
from torch.utils.tensorboard import SummaryWriter

# same hyperparameters as in the weight decay example
n_hidden = 200
max_iter = 2000
disp_interval = 200
lr_init = 0.01

# ============================ step 1/5 data ============================
def gen_data(num_data=10, x_range=(-1, 1)):
    w = 1.5
    train_x = torch.linspace(*x_range, num_data).unsqueeze_(1)
    train_y = w*train_x + torch.normal(0, 0.5, size=train_x.size())
    test_x = torch.linspace(*x_range, num_data).unsqueeze_(1)
    test_y = w*test_x + torch.normal(0, 0.3, size=test_x.size())
    return train_x, train_y, test_x, test_y

train_x, train_y, test_x, test_y = gen_data(x_range=(-1, 1))

# ============================ step 2/5 model ============================
class MLP(nn.Module):
    def __init__(self, neural_num, d_prob=0.5):
        super(MLP, self).__init__()
        self.linears = nn.Sequential(
            nn.Linear(1, neural_num),
            nn.ReLU(inplace=True),
            nn.Dropout(d_prob),
            nn.Linear(neural_num, neural_num),
            nn.ReLU(inplace=True),
            nn.Dropout(d_prob),
            nn.Linear(neural_num, neural_num),
            nn.ReLU(inplace=True),
            nn.Dropout(d_prob),
            nn.Linear(neural_num, 1),
        )

    def forward(self, x):
        return self.linears(x)

net_prob_0 = MLP(neural_num=n_hidden, d_prob=0.)    # no dropout
net_prob_05 = MLP(neural_num=n_hidden, d_prob=0.5)  # dropout with p = 0.5

# ============================ step 3/5 optimizers ============================
optim_normal = torch.optim.SGD(net_prob_0.parameters(), lr=lr_init, momentum=0.9)
optim_reglar = torch.optim.SGD(net_prob_05.parameters(), lr=lr_init, momentum=0.9)

# ============================ step 4/5 loss function ============================
loss_func = torch.nn.MSELoss()

# ============================ step 5/5 training loop ============================
writer = SummaryWriter(comment='_test_tensorboard', filename_suffix="12345678")

for epoch in range(max_iter):
    pred_normal, pred_wdecay = net_prob_0(train_x), net_prob_05(train_x)
    loss_normal, loss_wdecay = loss_func(pred_normal, train_y), loss_func(pred_wdecay, train_y)

    optim_normal.zero_grad()
    optim_reglar.zero_grad()

    loss_normal.backward()
    loss_wdecay.backward()

    optim_normal.step()
    optim_reglar.step()

    if (epoch+1) % disp_interval == 0:
        # eval mode: dropout is turned off when testing
        net_prob_0.eval()
        net_prob_05.eval()

        # visualize weights and gradients in TensorBoard
        for name, layer in net_prob_0.named_parameters():
            writer.add_histogram(name + '_grad_normal', layer.grad, epoch)
            writer.add_histogram(name + '_data_normal', layer, epoch)

        for name, layer in net_prob_05.named_parameters():
            writer.add_histogram(name + '_grad_regularization', layer.grad, epoch)
            writer.add_histogram(name + '_data_regularization', layer, epoch)

        test_pred_prob_0, test_pred_prob_05 = net_prob_0(test_x), net_prob_05(test_x)

        # plot
        plt.clf()
        plt.scatter(train_x.data.numpy(), train_y.data.numpy(), c='blue', s=50, alpha=0.3, label='train')
        plt.scatter(test_x.data.numpy(), test_y.data.numpy(), c='red', s=50, alpha=0.3, label='test')
        plt.plot(test_x.data.numpy(), test_pred_prob_0.data.numpy(), 'r-', lw=3, label='d_prob_0')
        plt.plot(test_x.data.numpy(), test_pred_prob_05.data.numpy(), 'b--', lw=3, label='d_prob_05')
        plt.text(-0.25, -1.5, 'd_prob_0 loss={:.8f}'.format(loss_normal.item()), fontdict={'size': 15, 'color': 'red'})
        plt.text(-0.25, -2, 'd_prob_05 loss={:.6f}'.format(loss_wdecay.item()), fontdict={'size': 15, 'color': 'red'})

        plt.ylim((-2.5, 2.5))
        plt.legend(loc='upper left')
        plt.title("Epoch: {}".format(epoch+1))
        plt.show()
        plt.close()

        # back to training mode so dropout is active again
        net_prob_0.train()
        net_prob_05.train()
```

Output:

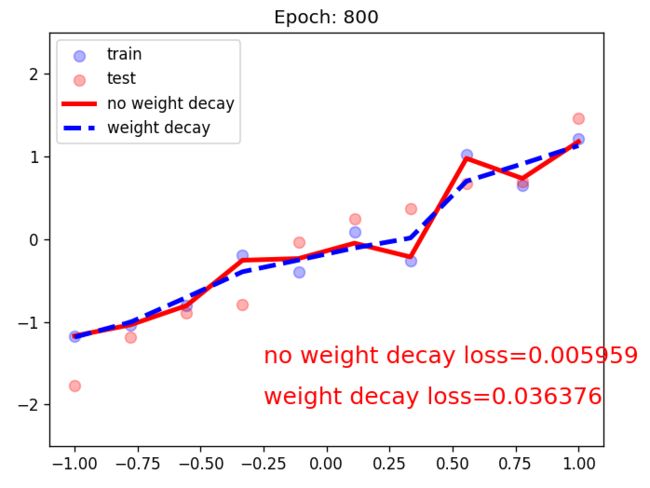

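The red curve (no dropout) overfits,

while the blue curve (dropout with p = 0.5) does not.

Dropout achieves a regularizing effect similar to L2 weight decay.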

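2. Notes on Dropout

Data scale change: in the original formulation, all weights are multiplied by 1 - drop_prob at test time; for example, with drop_prob = 0.3 they are multiplied by 1 - drop_prob = 0.7.

Implementation detail: PyTorch instead multiplies the retained outputs by 1/(1-p) during training (i.e., divides them by 1-p), so nothing needs to be rescaled at test time.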
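
The two conventions can be compared with a from-scratch sketch. The helpers `vanilla_dropout` and `inverted_dropout` below are hypothetical illustrations, not PyTorch functions. Both keep the expected training-time output equal to the test-time output: the vanilla form scales by (1 - p) at test time, while the inverted form (the one described above for PyTorch) scales by 1/(1 - p) during training.

```python
import torch


def vanilla_dropout(x, p, training=True):
    """Hypothetical helper: no scaling in training, multiply by (1 - p) at test time."""
    if not training:
        return x * (1 - p)
    mask = (torch.rand_like(x) >= p).to(x.dtype)  # keep each element with probability 1 - p
    return x * mask


def inverted_dropout(x, p, training=True):
    """Hypothetical helper: scale the survivors by 1 / (1 - p) in training, identity at test time."""
    if not training or p == 0:
        return x
    mask = (torch.rand_like(x) >= p).to(x.dtype)
    return x * mask / (1 - p)


torch.manual_seed(0)
x = torch.ones(100000)
p = 0.3
m_vanilla_train = vanilla_dropout(x, p).mean().item()                  # about 0.7
m_vanilla_test = vanilla_dropout(x, p, training=False).mean().item()   # exactly 0.7
m_inverted_train = inverted_dropout(x, p).mean().item()                # about 1.0
m_inverted_test = inverted_dropout(x, p, training=False).mean().item() # exactly 1.0
print(m_vanilla_train, m_vanilla_test, m_inverted_train, m_inverted_test)
```

In both cases the average training output matches the test output; the inverted form simply moves the rescaling into training so inference needs no extra multiplication.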