特征工程tf-idf

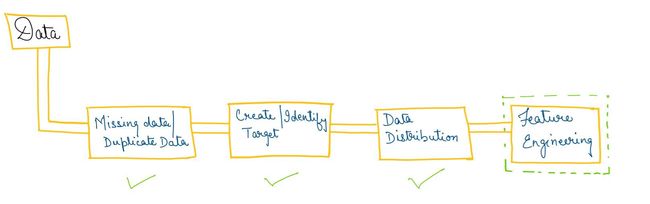

The next step after exploring the patterns in data is feature engineering. Any operation performed on the features/columns which could help us in making a prediction from the data could be termed as Feature Engineering. This would include the following at high-level:

探索数据模式之后的下一步是要素工程。 对特征/列执行的任何可帮助我们根据数据进行预测的操作都可以称为特征工程。 这将在高层包括以下内容:

- adding new features 添加新功能

- eliminating some of the features which tell the same story 消除了讲述同一故事的某些功能

- combining several features together 结合几个功能

- breaking down a feature into multiple features 将一个功能分解为多个功能

新增功能 (Adding new features)

Suppose you want to predict sales of ice-cream or gloves, or umbrella. What is common in these items? The sales of all these items are dependent on “weather” and “location”. Ice-creams sell more during summer or hotter areas, gloves are sold more in colder weather (winter) or colder regions, and we definitely need an umbrella when there’s rain. So if you have the historical sales data for all these items, what would help your model to learn the patterns more would be to add the weather and the selling areas at each data level.

假设您要预测冰淇淋或手套或雨伞的销量。 这些项目有什么共同点? 所有这些项目的销售都取决于“天气”和“位置”。 在夏季或更热的地区,冰淇淋的销售量更大,在寒冷的天气(冬季)或寒冷的地区,手套的销售量也更多,而下雨天我们肯定需要一把雨伞。 因此,如果您具有所有这些项目的历史销售数据,那么可以帮助您的模型学习更多模式的方法是在每个数据级别添加天气和销售区域。

消除讲述同一故事的某些功能 (Eliminating some of the features which tell the same story)

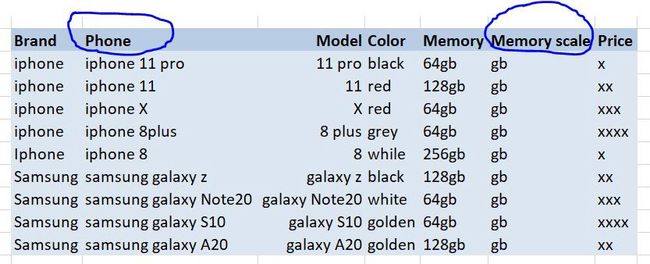

For explanation purpose, I made up a sample dataset which has data of different phone brands, something like the one below. Let us analyze this data and figure out why we should remove/eliminate some columns-

为了说明起见,我组成了一个样本数据集,其中包含不同手机品牌的数据,如下图所示。 让我们分析这些数据并弄清楚为什么要删除/消除某些列-

Image by Author 图片作者Now in this dataset, if we look carefully, there is a column for the brand name, a column for the model name, and there’s another column which says Phone (which basically contains both brand and model name). So if we see this situation, we don’t need the column Phone because the data in this column is already present in other columns, and split data is better than the aggregated data in this case.

现在,在此数据集中,如果我们仔细看,会出现一列品牌名称,一列型号名称以及另一列显示“ 电话”的信息 (基本上包含品牌名称和型号名称)。 因此,如果遇到这种情况,则不需要“电话”列,因为此列中的数据已经存在于其他列中,在这种情况下,拆分数据要好于汇总数据。

There is another column that is not adding any value to the dataset — Memory scale. All the memory values are in terms of “GB”, hence there is no need to keep an additional column that fails to show any variation in the dataset, because it’s not going to help our model learn different patterns.

另一列未向数据集添加任何值- 内存比例。 所有内存值均以“ GB”为单位,因此无需保留额外的列,该列无法显示数据集中的任何变化,因为这不会帮助我们的模型学习不同的模式。

组合多个功能以创建新功能 (Combining several features to create new features)

This means we can use 2–3 features or rows and create a new feature that explains the data better. For example, in the above dataset, some of the features which we can create could be — count of phones in each brand, % share of each phone in respective brand, count of phones available in different memory size, price per unit memory, etc. This will help the model understand the data at a granular level.

这意味着我们可以使用2–3个要素或行,并创建一个可以更好地解释数据的新要素。 例如,在上述数据集中,我们可以创建的某些功能可能是-每个品牌的手机数量,每个品牌在每个品牌手机中的百分比份额,具有不同内存大小的可用手机数量,单位内存价格等这将帮助模型更深入地了解数据。

将功能分解为多个功能 (Breaking down a feature into multiple features)

The most common example in this segment is Date and Address. A date mostly consists of Year, Month, and Day, let’s say in the form of ‘07/28/2019’. So if we break down the Date column into 2019, 7 or July, and 28, it’ll help us join the tables to various other tables in an easier way, and also will be easy to manipulate the data, because now instead of a date format, we have to deal with numbers which are a lot easier.

此段中最常见的示例是日期和地址。 日期主要由年,月和日组成,以“ 07/28/2019”的形式表示。 因此,如果我们将“日期”列细分为2019年,7月,7月和28日,这将有助于我们以一种更简单的方式将这些表连接到其他各种表,并且也将易于操作数据,因为现在不再使用日期格式,我们必须处理容易得多的数字。

For the same easier data manipulation and easier data joins reason, we break down the Address data (721 Main St., Apt 24, Dallas, TX-75432) into — Street name (721 Main St.), Apartment number/ House number (Apt 24), City (Dallas), State (TX/Texas), zip code (75432).

为了简化数据处理和简化数据合并的原因,我们将地址数据(721 Main St.,Apt 24,Dallas,TX-75432)分解为—街道名称(721 Main St.),公寓号/门牌号( Apt 24),城市(达拉斯),州(TX / Texas),邮政编码(75432)。

Now that we know what feature engineering is, let’s go through some of the techniques by which we can do feature engineering. There are various methods out there for feature engineering, but I will discuss some of the most common techniques & practices that I use in my regular problems.

既然我们知道了特征工程是什么,那么让我们看一下可以进行特征工程的一些技术。 有许多用于特征工程的方法,但是我将讨论一些我经常遇到的最常见的技术和实践。

Lags — this means creating columns for previous timestamp records (sales 1-day back, sales 1-month back, etc. based on the use-case). This feature will help us understand, for example, what was the iPhone sale 1 day back, 2 days back, etc. This is important because most of the machine learning algorithms look at the data row-wise, and unless we don’t have the previous days' records in the same row, the model will not be able to create patterns between current and previous date records efficiently.

滞后 -这意味着为以前的时间戳记录创建列(根据用例,返回1天的销售额,返回1个月的销售额等)。 例如,此功能将帮助我们了解1天后,2天后iPhone的销售情况。这很重要,因为大多数机器学习算法都是按行查看数据,除非我们没有同一行中的前几天记录,该模型将无法有效地在当前日期记录和以前的日期记录之间创建模式。

Count of categories — this could be anything as simple as count of phones in each brand, count of people buying iPhone 11pro, count of the different age groups of people buying Samsung Galaxy vs iPhone.

类别计数 -这可能很简单,例如每个品牌的手机计数,购买iPhone 11pro的人数,购买三星Galaxy与iPhone的不同年龄段的人数。

Sum/ Mean/ Median/Cumulative sum/ Aggregate sum — of any numeric features like salary, sales, profit, age, weight, etc.

总和/平均值/中位数/累计总和/总和 -任何数字特征,如薪水,销售额,利润,年龄,体重等。

Categorical Transformation Techniques (replacing values, one-hot encoding, label encoding, etc) — These techniques are used to convert the categorical features to respective numerical encoded values, because some of the algorithms (like xgboost) do not identify categorical features. The correct technique depends on the number of categories in each column, the number of categorical columns, etc. To learn more about different techniques, check this blog and this blog.

分类转换技术 (替换值,单次编码,标签编码等)-这些技术用于将分类特征转换为各自的数字编码值,因为某些算法(例如xgboost)无法识别分类特征。 正确的技术取决于每列中类别的数量,分类列的数量等。要了解有关不同技术的更多信息, 请访问此博客和此博客 。

Standardization/ Normalization techniques (min-max, standard scaler, etc) — There could be some datasets where you have numerical features but they’re present at different scales (kg, $, inch, sq.ft., etc.). So for some of the machine learning methods like clustering, it is important that we have all the numbers at one scale (we will discuss about clustering more in later blogs, but for now understand it as creating groups of data points in space based on the similarity). To know more about this section, check out these blogs — Feature Scaling Analytics Vidhya, Handling Numerical Data O'Reilly, Standard Scaler/MinMax Scaler.

标准化/标准化技术 (最小-最大,标准缩放器等)—可能有一些数据集具有数字功能,但它们以不同的比例(公斤,美元,英寸,平方英尺等)显示。 因此,对于某些诸如聚类的机器学习方法,重要的是使所有数字都在一个尺度上(我们将在以后的博客中讨论有关聚类的更多信息,但就目前而言,它理解为基于空间的数据点组)相似)。 要了解有关此部分的更多信息,请查看这些博客-Feature Scaling Analytics Vidhya , 处理数值数据O'Reilly , Standard Scaler / MinMax Scaler。

These are some of the very general methods of creating new features, but most of the feature engineering largely depends on brainstorming on the dataset in the picture. For example, if we have a dataset for employees, vs if we have the dataset of the general transactions, feature engineering will be done in different ways.

这些是创建新要素的一些非常通用的方法,但是大多数要素工程很大程度上取决于对图片数据集进行头脑风暴。 例如,如果我们有一个雇员数据集,而我们有一个一般交易数据集,那么要素工程将以不同的方式完成。

We can create these columns using various pandas functions manually. Besides these, there is a package called FeatureTools, which can also be explored to create new columns by combining the datasets at different levels.

我们可以使用各种熊猫函数手动创建这些列。 除此之外,还有一个名为FeatureTools的软件包,也可以通过组合不同级别的数据集来探索该软件包以创建新列。

This brings us to a (somewhat) end of Data Preprocessing Stages. Once we have the data preprocessed, we need to start looking into different ML techniques for our problem statement. We will be discussing those in the upcoming blogs. Hope y’all found this blog interesting and useful! :)

这使我们进入了(某种程度上) 数据预处理阶段的结尾。 对数据进行预处理后,我们需要针对问题陈述开始研究不同的ML技术。 我们将在即将发布的博客中讨论这些内容。 希望大家都觉得这个博客有趣和有用! :)

翻译自: https://medium.com/swlh/what-to-keep-and-what-to-remove-74ba1b3cb04

特征工程tf-idf