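Recognizing MNIST Handwritten Digits with KNN on Deep Autoencoder Features (PyTorch + scikit-learn)

Goal: perform unsupervised dimensionality reduction, then classify with KNN on the reduced features.

Contents:

1. Dimensionality reduction with an autoencoder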

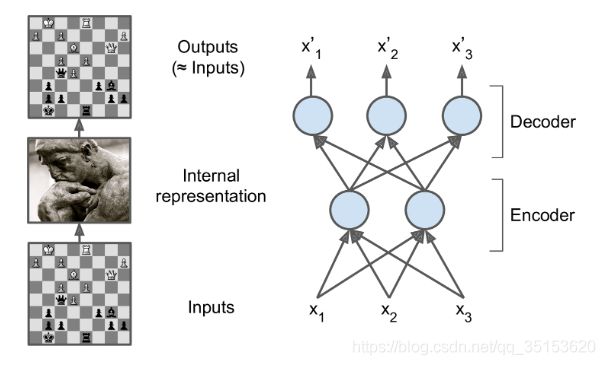

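An autoencoder is a neural network trained to capture the intrinsic features of the data by representing high-dimensional features with low-dimensional ones. It is an unsupervised representation learning method.

It consists of an encoder and a decoder. The encoder learns a low-dimensional embedding of the data, and the decoder reconstructs the original features from that embedding, much like the encoding and decoding steps in data communication.

Autoencoders are usually trained with an MSE loss, which pushes the reconstructed features toward the original ones.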

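When the inputs are binary or scaled to [0, 1], so that each value can be read as a probability, a cross-entropy loss can be used instead.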
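To make the two loss choices concrete, here is a minimal sketch that evaluates both on a made-up reconstruction. The tensors `x` and `logits` are illustrative values, not outputs of the model in this post, and the cross-entropy variant assumes targets in [0, 1].

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Hypothetical batch: 4 flattened "images" with pixel values in [0, 1].
x = torch.rand(4, 28 * 28)
# Hypothetical raw decoder outputs (no final activation applied).
logits = torch.randn(4, 28 * 28)

# MSE compares the (squashed) reconstruction with the target directly.
mse = nn.MSELoss()(torch.sigmoid(logits), x)

# BCEWithLogitsLoss applies the sigmoid internally and expects
# targets in [0, 1]; it does not suit inputs normalized to [-1, 1].
bce = nn.BCEWithLogitsLoss()(logits, x)

print("MSE: %.4f | BCE: %.4f" % (mse.item(), bce.item()))
```

Both losses return a scalar tensor, so either one can be passed to `backward()` in the same training loop.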

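A deep autoencoder uses several hidden layers in both the encoder and the decoder, which lets it learn more complex semantic features at a lower dimensionality. Traditional autoencoders use fully connected networks, while image features can also be learned with convolutional neural networks.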
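The convolutional variant mentioned above is not what this post implements, but a rough sketch may help show the idea. The class name `ConvAutoEncoder`, the channel counts, and the `code_dim` parameter are all assumptions made for illustration.

```python
import torch
import torch.nn as nn


class ConvAutoEncoder(nn.Module):
    """Hypothetical convolutional autoencoder for 1x28x28 images."""

    def __init__(self, code_dim=30):
        super().__init__()
        self.encoder = nn.Sequential(
            nn.Conv2d(1, 16, kernel_size=3, stride=2, padding=1),   # -> 16x14x14
            nn.ReLU(),
            nn.Conv2d(16, 32, kernel_size=3, stride=2, padding=1),  # -> 32x7x7
            nn.ReLU(),
            nn.Flatten(),
            nn.Linear(32 * 7 * 7, code_dim),                        # -> code_dim
        )
        self.decoder = nn.Sequential(
            nn.Linear(code_dim, 32 * 7 * 7),
            nn.ReLU(),
            nn.Unflatten(1, (32, 7, 7)),
            nn.ConvTranspose2d(32, 16, kernel_size=3, stride=2,
                               padding=1, output_padding=1),        # -> 16x14x14
            nn.ReLU(),
            nn.ConvTranspose2d(16, 1, kernel_size=3, stride=2,
                               padding=1, output_padding=1),        # -> 1x28x28
        )

    def forward(self, x):
        encoded = self.encoder(x)
        decoded = self.decoder(encoded)
        return encoded, decoded


model = ConvAutoEncoder()
images = torch.randn(8, 1, 28, 28)  # random stand-in for a batch of MNIST images
code, recon = model(images)
print(code.shape, recon.shape)
```

Unlike the fully connected model, this one takes unflattened images, so the `view(-1, 28 * 28)` step would not be needed.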

2. Building the deep autoencoder

import torch
import torch.nn as nn
import torch.utils.data as Data
import torchvision
from torch.autograd import Variable
from torchvision import datasets, transforms
from torch.utils.data import DataLoader
import os

# Forward network of the autoencoder.
# forward() returns both the encoded features and the reconstruction.
class GeneralizedAutoEncoder(nn.Module):
    """
    GeneralizedAutoEncoder:
    __init__(self): builds the encoder and decoder networks.
    forward(self, x): runs the forward pass on input data x of shape
        (batch, 784) and returns (encoded, decoded).
    """

    def __init__(self):
        super(GeneralizedAutoEncoder, self).__init__()
        # The encoder and decoder are each 3-layer fully connected networks
        # with sigmoid activations.
        self.encoder = nn.Sequential(
            nn.Linear(28 * 28, 500),
            nn.Sigmoid(),
            nn.Linear(500, 200),
            nn.Sigmoid(),
            nn.Linear(200, 30),
            nn.Sigmoid()
        )
        self.decoder = nn.Sequential(
            nn.Linear(30, 200),
            nn.Sigmoid(),
            nn.Linear(200, 500),
            nn.Sigmoid(),
            nn.Linear(500, 784),
        )

    def forward(self, x):
        encoded = self.encoder(x)
        decoded = self.decoder(encoded)
        return encoded, decoded

3. Loading the data

batch_size = 500

# Preprocessing: convert to a tensor and normalize pixel values to [-1, 1].
# MNIST images have a single channel, so mean and std take one value each.
data_tf = transforms.Compose([transforms.ToTensor(), transforms.Normalize([0.5], [0.5])])

# Load the data in batches
train_dataset = datasets.MNIST(root="./data", train=True, transform=data_tf, download=True)
test_dataset = datasets.MNIST(root="./data", train=False, transform=data_tf)
train_loader = DataLoader(train_dataset, batch_size=batch_size, shuffle=True)
test_loader = DataLoader(test_dataset, batch_size=batch_size, shuffle=False)

4. Training

Training runs on the GPU. To train on the CPU instead, remove the cuda() calls.
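Instead of deleting each `cuda()` call by hand, one common PyTorch pattern is to pick a device once and move the model and tensors to it with `.to(device)`. The following is only a sketch of that pattern: `model` is a small stand-in layer, not the autoencoder from this post.

```python
import torch
import torch.nn as nn

# Use the GPU when one is available, otherwise fall back to the CPU.
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

model = nn.Linear(28 * 28, 30).to(device)    # stand-in for the autoencoder
batch = torch.randn(5, 28 * 28).to(device)   # stand-in for a flattened image batch

out = model(batch)
print(out.shape, out.device.type)
```

With this pattern the same script runs unchanged on machines with or without a GPU.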

from sklearn.neighbors import KNeighborsClassifier

EPOCH = 2000
LR = 0.001

# Fit a KNN classifier on the training features and report its accuracy on the test features
def KNNforTest(train_fea_arr, train_label_arr, test_fea_arr, test_label_arr, K=1):
    knn = KNeighborsClassifier(n_neighbors=K)
    knn.fit(train_fea_arr, train_label_arr)
    score = knn.score(test_fea_arr, test_label_arr, sample_weight=None)
    print("knn for k={}, score is : {}".format(K, score))
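As a quick sanity check of the KNN step on its own, the same scikit-learn calls can be tried on synthetic data. The two Gaussian clusters below are made up for illustration and only stand in for 30-dimensional encoded features.

```python
import numpy as np
from sklearn.neighbors import KNeighborsClassifier

rng = np.random.default_rng(0)

# Synthetic stand-in for 30-dim encoded features: two well-separated clusters.
train_x = np.vstack([rng.normal(0.0, 0.1, (50, 30)),
                     rng.normal(1.0, 0.1, (50, 30))])
train_y = np.array([0] * 50 + [1] * 50)
test_x = np.vstack([rng.normal(0.0, 0.1, (10, 30)),
                    rng.normal(1.0, 0.1, (10, 30))])
test_y = np.array([0] * 10 + [1] * 10)

# 1-nearest-neighbor: each test point takes the label of its closest training point.
knn = KNeighborsClassifier(n_neighbors=1)
knn.fit(train_x, train_y)
score = knn.score(test_x, test_y)  # mean accuracy on the test points
print("accuracy:", score)
```

Because the clusters are far apart relative to their spread, the classifier should separate them almost perfectly. Real encoded MNIST features will be harder.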

# Initialize the weights; skipping this step may lead to a poor fit
def weights_init(m):
    classname = m.__class__.__name__
    if classname.find('Conv') != -1:
        nn.init.xavier_normal_(m.weight.data)
        nn.init.constant_(m.bias.data, 0.0)
    elif classname.find('Linear') != -1:
        nn.init.xavier_normal_(m.weight.data)
        nn.init.constant_(m.bias.data, 0.0)
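To see what this initialization does, the sketch below applies the same Xavier-normal scheme to a small stand-in network and compares the resulting weight spread with the theoretical value. The helper `init_linear` and the network `net` are hypothetical names used only here.

```python
import torch.nn as nn


def init_linear(m):
    # Same idea as weights_init above, restricted to nn.Linear for brevity.
    if isinstance(m, nn.Linear):
        nn.init.xavier_normal_(m.weight)
        nn.init.constant_(m.bias, 0.0)


net = nn.Sequential(nn.Linear(784, 500), nn.Sigmoid(), nn.Linear(500, 30))
# Module.apply calls the function on every submodule, then on the module itself.
net.apply(init_linear)

stds = []
for layer in net:
    if isinstance(layer, nn.Linear):
        # With gain 1, Xavier-normal draws from N(0, 2 / (fan_in + fan_out)).
        expected_std = (2.0 / (layer.in_features + layer.out_features)) ** 0.5
        actual_std = layer.weight.std().item()
        stds.append((actual_std, expected_std))
        print("weight std %.4f (expected about %.4f)" % (actual_std, expected_std))
```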

if __name__ == "__main__":
    # Build the autoencoder
    autoencoder = GeneralizedAutoEncoder()
    autoencoder.apply(weights_init)
    autoencoder = autoencoder.cuda()

    # Optimizer
    optimizer = torch.optim.Adam(autoencoder.parameters(), lr=LR)

    # MSE reconstruction loss
    loss_func = nn.MSELoss()
    loss_func = loss_func.cuda()

    for epoch in range(EPOCH):
        for step, (x, y) in enumerate(train_loader):
            # The flattened image is both the input and the reconstruction target
            _x = x.view(-1, 28 * 28).cuda()
            _y = x.view(-1, 28 * 28).cuda()

            encoded, decoded = autoencoder(_x)
            loss = loss_func(decoded, _y)
            optimizer.zero_grad()
            loss.backward()
            optimizer.step()

        if epoch % 20 == 0:
            # Every 20 epochs, print the loss and evaluate with KNN
            print('Epoch: ', epoch, '| train loss: %.4f' % loss.item())
            with torch.no_grad():
                for (x_test, y_test) in test_loader:
                    _x_test = x_test.view(-1, 28 * 28).cuda()
                    encoded_test, decoded_test = autoencoder(_x_test)
                    knn_test_x = encoded_test.cpu().numpy()
                    knn_test_y = y_test.numpy()
                    for (x_train, y_train) in train_loader:
                        _x_train = x_train.view(-1, 28 * 28).cuda()
                        encoded_train, decoded_train = autoencoder(_x_train)
                        knn_train_x = encoded_train.cpu().numpy()
                        knn_train_y = y_train.numpy()
                        # KNN classification on this pair of train/test batches
                        KNNforTest(knn_train_x, knn_train_y, knn_test_x, knn_test_y)

        # Save the weights every 200 epochs
        if epoch % 200 == 0:
            state = {
                'state': autoencoder.state_dict(),
                'epoch': epoch}
            if not os.path.isdir('checkpoint'):
                os.mkdir('checkpoint')
            torch.save(state, "./checkpoint/autoencoder_pcainithighlr_{}.pth".format(epoch))
            print("weights saved, path = ./checkpoint/autoencoder_pcainithighlr_{}.pth".format(epoch))

    # Save the final weights after training
    state = {
        'state': autoencoder.state_dict(),
        'epoch': epoch}
    if not os.path.isdir('checkpoint'):
        os.mkdir('checkpoint')
    torch.save(state, "./checkpoint/autoencoder_pcainithighlr_{}.pth".format(epoch))
    print("weights saved, path = ./checkpoint/autoencoder_pcainithighlr_{}.pth".format(epoch))
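The evaluation in the script above fits a separate KNN classifier for every pair of training and test batches, so each score is based on only 500 training samples. One possible alternative, which is not the code used in this post, is to encode the whole training and test sets once and fit a single classifier. The sketch below uses random tensors and a tiny stand-in encoder so that it runs without MNIST or a trained checkpoint; `encode_dataset` is a hypothetical helper. With the model from this post, `autoencoder.encoder` could be passed as the encoder.

```python
import numpy as np
import torch
import torch.nn as nn
from torch.utils.data import DataLoader, TensorDataset
from sklearn.neighbors import KNeighborsClassifier


def encode_dataset(encoder, loader, device):
    """Run the encoder over a whole loader; return (features, labels) as NumPy arrays."""
    encoder.eval()
    feats, labels = [], []
    with torch.no_grad():
        for x, y in loader:
            z = encoder(x.view(x.size(0), -1).to(device))  # flatten, then encode
            feats.append(z.cpu().numpy())
            labels.append(y.numpy())
    return np.concatenate(feats), np.concatenate(labels)


# Stand-ins (assumptions): random "images", random labels, untrained encoder.
torch.manual_seed(0)
device = torch.device("cpu")
encoder = nn.Sequential(nn.Linear(28 * 28, 30), nn.Sigmoid()).to(device)
train_set = TensorDataset(torch.randn(200, 1, 28, 28), torch.randint(0, 10, (200,)))
test_set = TensorDataset(torch.randn(50, 1, 28, 28), torch.randint(0, 10, (50,)))
train_loader = DataLoader(train_set, batch_size=64, shuffle=False)
test_loader = DataLoader(test_set, batch_size=64, shuffle=False)

train_x, train_y = encode_dataset(encoder, train_loader, device)
test_x, test_y = encode_dataset(encoder, test_loader, device)

# One classifier fitted on all encoded training samples.
knn = KNeighborsClassifier(n_neighbors=1).fit(train_x, train_y)
accuracy = knn.score(test_x, test_y)
print("features:", train_x.shape, test_x.shape, "| accuracy: %.3f" % accuracy)
```

On random data the accuracy is meaningless; the point is only the shape of the pipeline, which yields one accuracy figure per evaluation instead of one per batch pair.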