DataWhale Data Analysis Training Camp, Task 5 (Chapter 3: Model Building and Evaluation)

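Contents

- Chapter 3: Model Building and Evaluation
  - 3.1 Model Building and Evaluation: Modeling
    - Model building
      - Task 1: Split the training and test sets
      - Task 2: Create the models
      - Task 3: Output the model predictions
  - 3.2 Model Building and Evaluation: Evaluation
    - Model evaluation
      - Task 1: Cross-validation
      - Task 2: Confusion matrix
      - Task 3: ROC curve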

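Chapter 3: Model Building and Evaluation

3.1 Model Building and Evaluation: Modeling

After the previous two chapters, we can work with the data itself: adding, deleting, querying and filling in values, plus whatever cleaning is needed. Now we put that prepared data to use. The purpose of data analysis is to combine the data with our business context to obtain the answers we need. The first step is modeling: building a predictive model or some other kind of model. Once the model produces results, we have to check whether it is reliable enough, which means evaluating it. This section covers modeling; the next one covers evaluation.

We have the Titanic dataset, so the goal this time is to complete the Titanic survival prediction task.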

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

import seaborn as sns

from IPython.display import Image

%matplotlib inline

plt.rcParams['font.sans-serif'] = ['SimHei'] # render Chinese labels correctly

plt.rcParams['axes.unicode_minus'] = False # render the minus sign correctly

plt.rcParams['figure.figsize'] = (10, 6) # set the output figure size

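Load these libraries; install any that are missing.

[Think] What does each of these libraries do? Look them up.

# Answer

# pandas: data analysis tools built on top of NumPy

# NumPy: array and matrix computation

# matplotlib: plotting

# seaborn: plotting, built on top of matplotlib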

%matplotlib inline

Load the cleaned data we provide (clear_data.csv) and also the raw data (train.csv), then describe how they differ.

# Write your code here

train = pd.read_csv('train.csv')

train.head()

|  | PassengerId | Survived | Pclass | Name | Sex | Age | SibSp | Parch | Ticket | Fare | Cabin | Embarked |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 1 | 0 | 3 | Braund, Mr. Owen Harris | male | 22.0 | 1 | 0 | A/5 21171 | 7.2500 | NaN | S |
| 1 | 2 | 1 | 1 | Cumings, Mrs. John Bradley (Florence Briggs Th... | female | 38.0 | 1 | 0 | PC 17599 | 71.2833 | C85 | C |
| 2 | 3 | 1 | 3 | Heikkinen, Miss. Laina | female | 26.0 | 0 | 0 | STON/O2. 3101282 | 7.9250 | NaN | S |
| 3 | 4 | 1 | 1 | Futrelle, Mrs. Jacques Heath (Lily May Peel) | female | 35.0 | 1 | 0 | 113803 | 53.1000 | C123 | S |
| 4 | 5 | 0 | 3 | Allen, Mr. William Henry | male | 35.0 | 0 | 0 | 373450 | 8.0500 | NaN | S |

# Write your code here

text = pd.read_csv('clear_data.csv')

text.head()

|  | PassengerId | Pclass | Age | SibSp | Parch | Fare | Sex_female | Sex_male | Embarked_C | Embarked_Q | Embarked_S |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 0 | 3 | 22.0 | 1 | 0 | 7.2500 | 0 | 1 | 0 | 0 | 1 |
| 1 | 1 | 1 | 38.0 | 1 | 0 | 71.2833 | 1 | 0 | 1 | 0 | 0 |
| 2 | 2 | 3 | 26.0 | 0 | 0 | 7.9250 | 1 | 0 | 0 | 0 | 1 |
| 3 | 3 | 1 | 35.0 | 1 | 0 | 53.1000 | 1 | 0 | 0 | 0 | 1 |
| 4 | 4 | 3 | 35.0 | 0 | 0 | 8.0500 | 0 | 1 | 0 | 0 | 1 |

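# Write your code here

# The cleaned data drops several columns (Survived, Name, Ticket, Cabin) and one-hot encodes the categorical columns Sex and Embarked, so each category value becomes a column of its own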
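The way a categorical column such as Sex turns into Sex_female and Sex_male columns can be illustrated with a small pure-Python sketch. The helper name `one_hot` is made up for this illustration and is not how pandas implements `get_dummies`; the sample values are the Sex entries of the five rows shown above.

```python
def one_hot(values):
    """Return (categories, rows) with one 0/1 indicator column per distinct value.

    Illustrative helper only; pandas.get_dummies is the real tool used in this post.
    """
    categories = sorted(set(values))
    rows = [[1 if v == c else 0 for c in categories] for v in values]
    return categories, rows


# Sex values of the first five passengers in train.csv (from the table above)
sex = ["male", "female", "female", "female", "male"]
columns, encoded = one_hot(sex)

print(["Sex_" + c for c in columns])  # ['Sex_female', 'Sex_male']
for row in encoded:
    print(row)
```

Each row contains exactly one 1, which marks the category that the original value belonged to.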

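Model building

- After the preprocessing above we have data that is ready for modeling; the next step is to choose a suitable model
- Before choosing a model, we need to know whether the task is supervised or unsupervised learning
- The choice of model is determined partly by the task
- Beyond the task, the sample size and the sparsity of the features also guide the choice
- We usually start with a simple model as a baseline, then train other models for comparison, and finally pick the one with the best generalization or performance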
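One very simple reference point, sketched here as an assumption rather than something the course prescribes, is a baseline that always predicts the most frequent class. The helper names are hypothetical; the class counts (412 non-survivors, 256 survivors) are the training-split supports reported in the classification report later in this post.

```python
from collections import Counter


def majority_baseline(train_labels):
    """Return the most frequent label in the training data."""
    label, _count = Counter(train_labels).most_common(1)[0]
    return label


def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    return correct / len(y_true)


# Labels rebuilt from the class counts of the training split (412 zeros, 256 ones)
y_train_demo = [0] * 412 + [1] * 256

majority = majority_baseline(y_train_demo)
baseline_acc = accuracy(y_train_demo, [majority] * len(y_train_demo))
print(majority, round(baseline_acc, 3))  # 0 0.617
```

Any trained model should beat this number on the same data before it is worth comparing further.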

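We are not building the model from scratch and writing all of the code ourselves. Instead we use sklearn, one of the most widely used machine learning libraries, to build our models.

The sklearn algorithm selection chart is given below for reference.

# sklearn algorithm selection chart

Image('sklearn.png')

[Think] Which differences between datasets change how a model fits the data?

# Answer

# 1. The number of samples

# 2. Whether the samples are labeled

# 3. Whether the sample data is accurate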

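Task 1: Split the training and test sets

Here we use the hold-out method to split the dataset.

- Separate the dataset into independent variables (features) and the dependent variable (target)
- Split into training and test sets by proportion (common test-set fractions are 30%, 25%, 20%, 15% and 10%)
- Use stratified sampling
- Set a random seed so that the results are reproducible

[Think]

- What methods are there for splitting a dataset?
- Why use stratified sampling, and what are its benefits?

Hint 1

- Splitting the dataset lets us evaluate the model's generalization ability later
- The sklearn function for splitting a dataset is train_test_split
- To view the function's documentation in Jupyter Notebook, type train_test_split? and press Enter
- Look for the stratification and random-seed options among its parameters

Extract the arguments that train_test_split() needs from clear_data.csv and train.csv.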

train['Cabin'] = train['Cabin'].fillna('NA')

train['Embarked']= train['Embarked'].fillna('S')

train['Age'] = train['Age'].fillna(train['Age'].mean())

train.isnull().mean().sort_values(ascending=False)

Embarked 0.0

Cabin 0.0

Fare 0.0

Ticket 0.0

Parch 0.0

SibSp 0.0

Age 0.0

Sex 0.0

Name 0.0

Pclass 0.0

Survived 0.0

PassengerId 0.0

dtype: float64

data = train[['Pclass','Sex','Age','SibSp','Parch','Fare','Embarked']]

data = pd.get_dummies(data)

data.head()

|  | Pclass | Age | SibSp | Parch | Fare | Sex_female | Sex_male | Embarked_C | Embarked_Q | Embarked_S |
|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 3 | 22.0 | 1 | 0 | 7.2500 | 0 | 1 | 0 | 0 | 1 |
| 1 | 1 | 38.0 | 1 | 0 | 71.2833 | 1 | 0 | 1 | 0 | 0 |
| 2 | 3 | 26.0 | 0 | 0 | 7.9250 | 1 | 0 | 0 | 0 | 1 |
| 3 | 1 | 35.0 | 1 | 0 | 53.1000 | 1 | 0 | 0 | 0 | 1 |
| 4 | 3 | 35.0 | 0 | 0 | 8.0500 | 0 | 1 | 0 | 0 | 1 |

# Write your code here

from sklearn.model_selection import train_test_split

X = data

y = train['Survived']

X_train, X_test, y_train, y_test = train_test_split(X,y, stratify=y, random_state=0)

X_train.shape, X_test.shape

((668, 10), (223, 10))
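To make the effect of stratify=y more concrete, here is a minimal pure-Python sketch of a stratified hold-out split. It is an illustration of the idea only, not sklearn's implementation: the function name `stratified_split`, the 60/40 toy labels and the rounding rule are all assumptions made for this example. The test fraction of 0.25 mirrors the default used by train_test_split in the call above.

```python
import random
from collections import defaultdict


def stratified_split(labels, test_ratio=0.25, seed=0):
    """Return (train_idx, test_idx), sampling the same fraction from every class.

    Illustrative sketch; sklearn.model_selection.train_test_split is the real tool.
    """
    rng = random.Random(seed)  # fixed seed so the split is reproducible
    by_class = defaultdict(list)
    for i, label in enumerate(labels):
        by_class[label].append(i)

    train_idx, test_idx = [], []
    for indices in by_class.values():
        rng.shuffle(indices)  # random selection inside each class
        n_test = round(len(indices) * test_ratio)
        test_idx.extend(indices[:n_test])
        train_idx.extend(indices[n_test:])
    return sorted(train_idx), sorted(test_idx)


# Toy labels: 60 negatives and 40 positives (hypothetical data)
labels = [0] * 60 + [1] * 40
train_idx, test_idx = stratified_split(labels, test_ratio=0.25, seed=0)

train_pos = sum(labels[i] for i in train_idx) / len(train_idx)
test_pos = sum(labels[i] for i in test_idx) / len(test_idx)
print(len(train_idx), len(test_idx), train_pos, test_pos)  # 75 25 0.4 0.4
```

Both parts keep the 40% positive rate of the full data, which is the property that makes scores on the test set comparable to scores on the training set when classes are imbalanced.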

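[Think]

- When is random selection unnecessary when splitting a dataset?

# Answer

# If the dataset has already been shuffled, no further random selection is needed.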

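Task 2: Create the models

- Create a classifier based on a linear model (logistic regression)
- Create classifiers based on trees (decision tree, random forest)
- Train each model and obtain its score on the training set and on the test set
- Inspect the model parameters, change their values, and observe how the model changes

Hints

- Logistic regression is a classification model, not a regression model; do not confuse it with LinearRegression
- A random forest is an ensemble of decision trees, built to reduce the overfitting of a single decision tree
- Linear models live in the sklearn.linear_model module
- Random forests live in the sklearn.ensemble module (single decision trees are in sklearn.tree)

# Write your code here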

from sklearn.linear_model import LogisticRegression

from sklearn.ensemble import RandomForestClassifier

# Write your code here

lr = LogisticRegression()

lr.fit(X_train,y_train)

LogisticRegression(C=1.0, class_weight=None, dual=False, fit_intercept=True,

intercept_scaling=1, max_iter=100, multi_class='warn',

n_jobs=None, penalty='l2', random_state=None, solver='warn',

tol=0.0001, verbose=0, warm_start=False)

# Write your code here

print("Training set score: {:.2f}".format(lr.score(X_train, y_train)))

print("Testing set score: {:.2f}".format(lr.score(X_test, y_test)))

Training set score: 0.80

Testing set score: 0.78

# Write your code here

lr2 = LogisticRegression(C=100)

lr2.fit(X_train, y_train)

LogisticRegression(C=100, class_weight=None, dual=False, fit_intercept=True,

intercept_scaling=1, max_iter=100, multi_class='warn',

n_jobs=None, penalty='l2', random_state=None, solver='warn',

tol=0.0001, verbose=0, warm_start=False)

print("Training set score: {:.2f}".format(lr2.score(X_train, y_train)))

print("Testing set score: {:.2f}".format(lr2.score(X_test, y_test)))

Training set score: 0.80

Testing set score: 0.79

rfc = RandomForestClassifier()

rfc.fit(X_train, y_train)

RandomForestClassifier(bootstrap=True, class_weight=None, criterion='gini',

max_depth=None, max_features='auto', max_leaf_nodes=None,

min_impurity_decrease=0.0, min_impurity_split=None,

min_samples_leaf=1, min_samples_split=2,

min_weight_fraction_leaf=0.0, n_estimators=10, n_jobs=None,

oob_score=False, random_state=None, verbose=0,

warm_start=False)

print("Training set score: {:.2f}".format(rfc.score(X_train, y_train)))

print("Testing set score: {:.2f}".format(rfc.score(X_test, y_test)))

Training set score: 0.97

Testing set score: 0.80

rfc2 = RandomForestClassifier(n_estimators=100, max_depth=5)

rfc2.fit(X_train, y_train)

RandomForestClassifier(bootstrap=True, class_weight=None, criterion='gini',

max_depth=5, max_features='auto', max_leaf_nodes=None,

min_impurity_decrease=0.0, min_impurity_split=None,

min_samples_leaf=1, min_samples_split=2,

min_weight_fraction_leaf=0.0, n_estimators=100, n_jobs=None,

oob_score=False, random_state=None, verbose=0,

warm_start=False)

print("Training set score: {:.2f}".format(rfc2.score(X_train, y_train)))

print("Testing set score: {:.2f}".format(rfc2.score(X_test, y_test)))

Training set score: 0.86

Testing set score: 0.81

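[Think]

- Why can a linear model handle classification tasks, and what is the mathematical relationship behind it?
- For multi-class problems, how does a linear model classify?

# Answer

# A linear model can classify by partitioning its output values into regions; an output that falls in a given region is assigned the corresponding class.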
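For binary logistic regression, the partitioning works by passing the linear score w·x + b through the sigmoid function and comparing the result with a threshold. The sketch below shows that mechanism in plain Python; the weights, bias and inputs are hypothetical values chosen for illustration, not coefficients learned from the Titanic data.

```python
import math


def sigmoid(z):
    """Map a real-valued score to a value in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def predict_proba_binary(weights, bias, x):
    """Return [P(class 0), P(class 1)] for one sample."""
    z = sum(w * xi for w, xi in zip(weights, x)) + bias  # linear score
    p1 = sigmoid(z)
    return [1.0 - p1, p1]


def predict_binary(weights, bias, x, threshold=0.5):
    """Assign class 1 when P(class 1) reaches the threshold, else class 0."""
    return 1 if predict_proba_binary(weights, bias, x)[1] >= threshold else 0


# Hypothetical coefficients, for illustration only
weights = [1.5, -2.0]
bias = 0.25

for x in ([1.0, 0.0], [0.0, 1.0]):
    print(x, predict_proba_binary(weights, bias, x), predict_binary(weights, bias, x))
```

A probability of 0.5 corresponds to a linear score of exactly 0, so the decision boundary is the hyperplane w·x + b = 0. For more than two classes, common extensions are one-vs-rest (one binary model per class) and the softmax (multinomial) formulation.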

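Task 3: Output the model predictions

- Output the predicted class labels
- Output the predicted probability of each class label

Hint 3

- Supervised models in sklearn generally have a predict method that outputs predicted labels, while predict_proba outputs the label probabilities

# Write your code here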

pred = lr.predict(X_train)

pred[:10]

array([0, 1, 1, 1, 0, 0, 1, 0, 1, 1], dtype=int64)

# Write your code here

pred_proba = lr.predict_proba(X_train)

pred_proba[:10]

# The first column is the probability of label 0; the second column is the probability of label 1

array([[0.62887228, 0.37112772],

[0.14897191, 0.85102809],

[0.47161963, 0.52838037],

[0.20365643, 0.79634357],

[0.86428117, 0.13571883],

[0.90338863, 0.09661137],

[0.13829319, 0.86170681],

[0.89516113, 0.10483887],

[0.05735121, 0.94264879],

[0.13593244, 0.86406756]])

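[Think]

- How do the predicted label probabilities help us?

# Answer

# Check whether the probabilities of the different labels are close to each other to judge how trustworthy a prediction is.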
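Probabilities also let us choose our own decision threshold instead of the implicit 0.5. The sketch below reuses the ten class-1 probabilities printed by predict_proba above; the helper name `labels_at_threshold` and the stricter threshold of 0.8 are assumptions made for illustration.

```python
# Class-1 probabilities of the first ten training samples (second column of pred_proba above)
positive_proba = [0.37112772, 0.85102809, 0.52838037, 0.79634357, 0.13571883,
                  0.09661137, 0.86170681, 0.10483887, 0.94264879, 0.86406756]


def labels_at_threshold(probabilities, threshold):
    """Predict 1 when the class-1 probability reaches the threshold, else 0."""
    return [1 if p >= threshold else 0 for p in probabilities]


default_labels = labels_at_threshold(positive_proba, 0.5)
strict_labels = labels_at_threshold(positive_proba, 0.8)

print(default_labels)  # [0, 1, 1, 1, 0, 0, 1, 0, 1, 1]
print(strict_labels)   # [0, 1, 0, 0, 0, 0, 1, 0, 1, 1]
```

With a threshold of 0.5 the labels match the output of lr.predict shown earlier. Raising the threshold to 0.8 flips the two least confident positive predictions (0.528 and 0.796) to 0, trading recall for precision.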

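3.2 Model Building and Evaluation: Evaluation

From the previous modeling section we know how to build models with sklearn and how to split a dataset. But how do we know whether a model is any good, and whether we can trust the results it gives us? That is what today's topic, evaluation, helps with.

Load the libraries below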

import pandas as pd

import numpy as np

import seaborn as sns

import matplotlib.pyplot as plt

from IPython.display import Image

from sklearn.linear_model import LogisticRegression

from sklearn.ensemble import RandomForestClassifier

%matplotlib inline

plt.rcParams['font.sans-serif'] = ['SimHei'] # render Chinese labels correctly

plt.rcParams['axes.unicode_minus'] = False # render the minus sign correctly

plt.rcParams['figure.figsize'] = (10, 6) # set the output figure size

Task: Load the data and split it into training and test sets

# Write your code here

train = pd.read_csv('train.csv')

train['Cabin'] = train['Cabin'].fillna('NA')

train['Embarked']= train['Embarked'].fillna('S')

train['Age'] = train['Age'].fillna(train['Age'].mean())

train.isnull().mean().sort_values(ascending=False)

data = train[['Pclass','Sex','Age','SibSp','Parch','Fare','Embarked']]

data = pd.get_dummies(data)

data.head()

|  | Pclass | Age | SibSp | Parch | Fare | Sex_female | Sex_male | Embarked_C | Embarked_Q | Embarked_S |
|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 3 | 22.0 | 1 | 0 | 7.2500 | 0 | 1 | 0 | 0 | 1 |
| 1 | 1 | 38.0 | 1 | 0 | 71.2833 | 1 | 0 | 1 | 0 | 0 |
| 2 | 3 | 26.0 | 0 | 0 | 7.9250 | 1 | 0 | 0 | 0 | 1 |
| 3 | 1 | 35.0 | 1 | 0 | 53.1000 | 1 | 0 | 0 | 0 | 1 |
| 4 | 3 | 35.0 | 0 | 0 | 8.0500 | 0 | 1 | 0 | 0 | 1 |

from sklearn.model_selection import train_test_split

X = data

y = train['Survived']

X_train, X_test, y_train, y_test = train_test_split(X,y, stratify=y, random_state=0)

X_train.shape, X_test.shape

((668, 10), (223, 10))

模型评估

- 模型评估是为了知道模型的泛化能力。

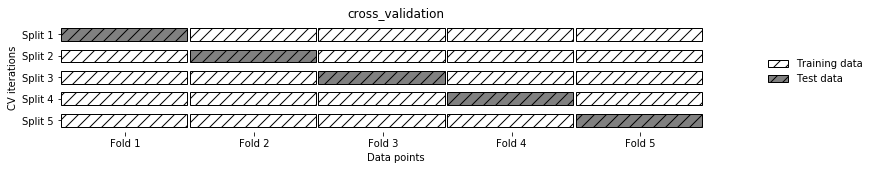

- 交叉验证(cross-validation)是一种评估泛化性能的统计学方法,它比单次划分训练集和测试集的方法更加稳定、全面。

- 在交叉验证中,数据被多次划分,并且需要训练多个模型。

- 最常用的交叉验证是 k 折交叉验证(k-fold cross-validation),其中 k 是由用户指定的数字,通常取 5 或 10。

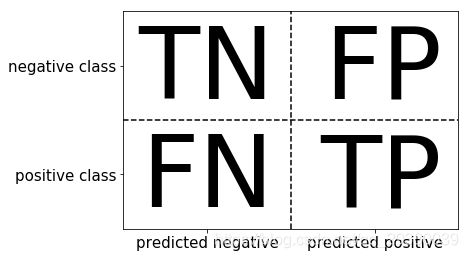

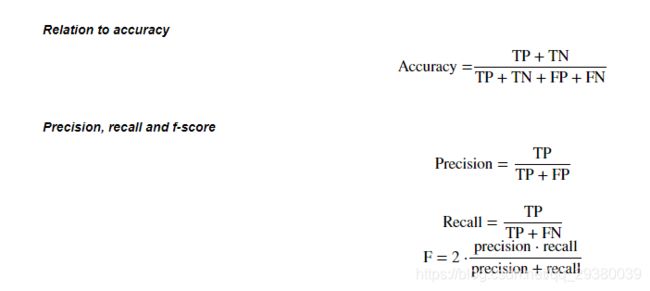

- 准确率(precision)度量的是被预测为正例的样本中有多少是真正的正例

- 召回率(recall)度量的是正类样本中有多少被预测为正类

- f-分数是准确率与召回率的调和平均

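Task 1: Cross-validation

- Evaluate the earlier logistic regression model with 10-fold cross-validation
- Compute the mean cross-validation accuracy

# Hint: cross-validation

Image('Snipaste_2020-01-05_16-37-56.png')

Hint

- Cross-validation lives in the sklearn.model_selection module

# Write your code here

from sklearn.model_selection import cross_val_score

# Write your code here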

lr = LogisticRegression(C=100)

scores = cross_val_score(lr, X_train,y_train,cv=10)

G:\anaconda1\lib\site-packages\sklearn\linear_model\logistic.py:433: FutureWarning: Default solver will be changed to 'lbfgs' in 0.22. Specify a solver to silence this warning.

FutureWarning)


# Write your code here

scores

array([0.82352941, 0.79411765, 0.80597015, 0.80597015, 0.8358209 ,

0.88059701, 0.72727273, 0.86363636, 0.75757576, 0.71212121])

# Write your code here

print("Average cross-validation score: {:.2f}".format(scores.mean()))

Average cross-validation score: 0.80
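The splitting that happens inside k-fold cross-validation can be sketched in a few lines of plain Python. This is a simplified illustration with a hypothetical helper `k_fold_indices`: it produces consecutive, unshuffled folds, whereas cross_val_score with an integer cv and a classifier uses stratified folds, so the real fold boundaries differ. The second part recomputes the mean from the ten scores printed above.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_idx, val_idx) for k consecutive, unshuffled folds.

    Simplified sketch: no shuffling and no stratification.
    """
    base, extra = divmod(n_samples, k)
    start = 0
    for fold in range(k):
        size = base + (1 if fold < extra else 0)  # first `extra` folds get one more sample
        val_idx = list(range(start, start + size))
        train_idx = list(range(0, start)) + list(range(start + size, n_samples))
        yield train_idx, val_idx
        start += size


# 668 training samples split into 10 folds, as in the task above
fold_sizes = [len(val) for _, val in k_fold_indices(668, 10)]
print(fold_sizes)  # [67, 67, 67, 67, 67, 67, 67, 67, 66, 66]

# Fold scores copied from the cross_val_score output above
fold_scores = [0.82352941, 0.79411765, 0.80597015, 0.80597015, 0.8358209,
               0.88059701, 0.72727273, 0.86363636, 0.75757576, 0.71212121]
mean_score = sum(fold_scores) / len(fold_scores)
print("Average cross-validation score: {:.2f}".format(mean_score))  # 0.80
```

Every sample is used for validation exactly once and for training k - 1 times, which is why the averaged score is less sensitive to one lucky or unlucky split. The spread of the fold scores (0.71 to 0.88 here) gives a rough idea of that sensitivity.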

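Task 2: Confusion matrix

- Compute the confusion matrix for a binary classification problem
- Compute precision, recall and the f-score

[Think] What is the confusion matrix of a binary classification problem? Understand the concept and know which tasks it is mainly applied to.

# Answer

# Hint: confusion matrix

Image('Snipaste_2020-01-05_16-38-26.png')

# Hint: how to compute accuracy, precision, recall and the f-score

Image('Snipaste_2020-01-05_16-39-27.png')

Hint 5

- The confusion matrix function is in the sklearn.metrics module
- The confusion matrix takes the true labels and the predicted labels as input
- Precision, recall and the f-score can be obtained with the classification_report function

# Write your code here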

from sklearn.metrics import confusion_matrix

lr = LogisticRegression(C=100)

lr.fit(X_train,y_train)


LogisticRegression(C=100, class_weight=None, dual=False, fit_intercept=True,

intercept_scaling=1, max_iter=100, multi_class='warn',

n_jobs=None, penalty='l2', random_state=None, solver='warn',

tol=0.0001, verbose=0, warm_start=False)

# Write your code here

pred = lr.predict(X_train)

confusion_matrix(y_train, pred)

array([[350, 62],

[ 71, 185]], dtype=int64)

# Write your code here

from sklearn.metrics import classification_report

print(classification_report(y_train,pred))

precision recall f1-score support

0 0.83 0.85 0.84 412

1 0.75 0.72 0.74 256

micro avg 0.80 0.80 0.80 668

macro avg 0.79 0.79 0.79 668

weighted avg 0.80 0.80 0.80 668
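The class-1 row of the report can be reproduced by hand from the confusion matrix printed above. The sketch below assumes sklearn's layout for binary labels 0 and 1, where rows are true labels and columns are predicted labels, that is [[TN, FP], [FN, TP]]; the function name `binary_metrics` is made up for this illustration.

```python
def binary_metrics(cm):
    """Compute metrics for the positive class from a 2x2 confusion matrix.

    Expects cm = [[TN, FP], [FN, TP]] (rows: true labels, columns: predictions).
    """
    (tn, fp), (fn, tp) = cm
    precision = tp / (tp + fp)  # of all predicted positives, how many are correct
    recall = tp / (tp + fn)     # of all actual positives, how many were found
    f1 = 2 * precision * recall / (precision + recall)  # harmonic mean
    accuracy = (tp + tn) / (tn + fp + fn + tp)
    return {"precision": precision, "recall": recall, "f1": f1, "accuracy": accuracy}


# Confusion matrix of the logistic regression model on the training set (from above)
cm = [[350, 62], [71, 185]]
metrics = binary_metrics(cm)
for name, value in metrics.items():
    print("{}: {:.2f}".format(name, value))
```

The printed values are 0.75, 0.72, 0.74 and 0.80, which agree with the precision, recall and f1-score of class 1 and with the overall average in the report.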

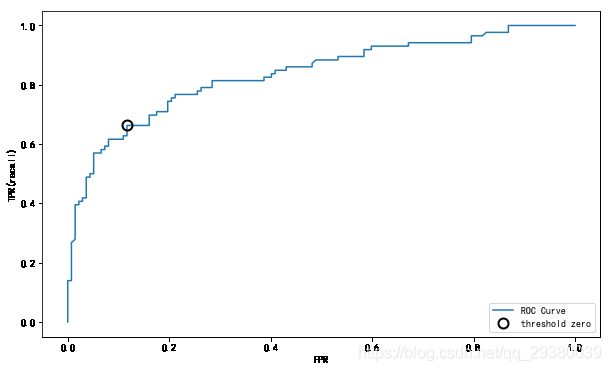

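Task 3: ROC curve

- Plot the ROC curve

[Think] What is an ROC curve, and what problem does it exist to solve?

# Answer

# The ROC curve shows the performance of a classifier across all decision thresholds

# The x-axis is the false positive rate (FPR); the y-axis is the true positive rate (TPR)

# The area under the ROC curve (AUC) lies between 0 and 1 and gives a single number for judging a classifier; larger is better, and 0.5 corresponds to random guessing.

Hint 6

- The ROC curve function is in the sklearn.metrics module
- The larger the area under the ROC curve, the better

# Write your code here

from sklearn.metrics import roc_curve

# Write your code here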

fpr, tpr, thresholds = roc_curve(y_test,lr.decision_function(X_test))

plt.plot(fpr, tpr, label='ROC Curve')

plt.xlabel("FPR")

plt.ylabel("TPR(recall)")

close_zero = np.argmin(np.abs(thresholds))

plt.plot(fpr[close_zero], tpr[close_zero], 'o', markersize=10, label="threshold zero",

fillstyle="none", c='k',mew=2)

plt.legend(loc=4)
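To see where the points on the curve come from, the following pure-Python sketch builds ROC points by sweeping the threshold over the sorted scores and then integrates the area with the trapezoid rule. It is a simplified illustration rather than sklearn's implementation; the helper names and the four-sample toy data are assumptions for this example.

```python
def roc_points(y_true, scores):
    """Return (fpr, tpr) lists, one point per distinct score threshold.

    Assumes binary labels 0/1 with at least one sample of each class.
    """
    n_pos = sum(y_true)
    n_neg = len(y_true) - n_pos
    # Lowering the threshold admits samples in order of decreasing score
    ranked = sorted(zip(scores, y_true), key=lambda pair: pair[0], reverse=True)

    fpr, tpr = [0.0], [0.0]
    tp = fp = 0
    for i, (score, label) in enumerate(ranked):
        if label == 1:
            tp += 1
        else:
            fp += 1
        # Emit a point only after the last sample that shares this score (handles ties)
        if i == len(ranked) - 1 or ranked[i + 1][0] != score:
            fpr.append(fp / n_neg)
            tpr.append(tp / n_pos)
    return fpr, tpr


def auc_trapezoid(fpr, tpr):
    """Area under the curve by the trapezoid rule."""
    area = 0.0
    for i in range(1, len(fpr)):
        area += (fpr[i] - fpr[i - 1]) * (tpr[i] + tpr[i - 1]) / 2.0
    return area


# Toy data (hypothetical): two negatives and two positives
y_demo = [0, 0, 1, 1]
score_demo = [0.1, 0.4, 0.35, 0.8]

fpr_demo, tpr_demo = roc_points(y_demo, score_demo)
print(list(zip(fpr_demo, tpr_demo)))
print(auc_trapezoid(fpr_demo, tpr_demo))  # 0.75
```

Each step to the right is a false positive admitted by lowering the threshold, and each step up is a true positive. An AUC of 0.75 here means that a randomly chosen positive sample outranks a randomly chosen negative one in three of the four possible pairs.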