tqdm Explained

Table of Contents

- 1. Introduction

- 2. Usage

- 3. Example: Handwritten Digit Recognition

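1. Introduction

tqdm is a Python progress bar library that adds a progress display to long-running loops. You only need to wrap an iterable with it, and the library is fast and extensible.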

2. Usage

- Passing an iterable

    import time
    from tqdm import tqdm, trange

    for i in tqdm(range(100)):
        time.sleep(0.01)
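Because tqdm wraps any iterable, it also works with generators. A generator has no length, though, so the bar can only show a percentage and a time estimate if `total` is passed. The following is a minimal sketch; `squares` is a hypothetical helper introduced only for illustration.

```python
from tqdm import tqdm


def squares(n):
    """Hypothetical generator used only for illustration."""
    for k in range(n):
        yield k * k


n = 50
results = []
# A generator has no len(), so pass total= to get a percentage and an ETA.
for value in tqdm(squares(n), total=n, desc="squares"):
    results.append(value)
```

Without `total`, tqdm would still count iterations, but it could not draw a filled bar.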

- trange(i): shorthand for tqdm(range(i))

    for t in trange(100):
        time.sleep(0.01)
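As a quick check of that equivalence, the sketch below runs both forms with the same start, stop, and step. `trange` passes its positional arguments to `range()` and its keyword arguments (here `desc`) to `tqdm()`. The concrete numbers are arbitrary.

```python
from tqdm import tqdm, trange

# Positional arguments go to range(); keyword arguments go to tqdm().
a = [i for i in trange(2, 20, 3, desc="trange")]
b = [i for i in tqdm(range(2, 20, 3), desc="tqdm(range)")]
```

Both lists contain the same values, so the choice between the two forms is purely one of convenience.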

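- update(): manually control how far the progress bar advances

    with tqdm(total=200) as pbar:
        for i in range(20):  # 20 updates in total
            pbar.update(10)  # advance by 10 on each update
            time.sleep(1)

Alternatively, without a context manager:

    pbar = tqdm(total=200)
    for i in range(20):
        pbar.update(10)
        time.sleep(1)
    pbar.close()  # close the bar explicitly when done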
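Manual updates are most useful when the amount of work per step varies. The sketch below processes a byte buffer in chunks and advances the bar by the size of each chunk, so the final chunk can be smaller than the rest. The payload and chunk size are made-up values for illustration.

```python
from tqdm import tqdm

data = bytes(1000)   # stand-in payload: 1000 zero bytes
chunk_size = 128     # illustrative chunk size
processed = 0

with tqdm(total=len(data), unit="B", unit_scale=True) as pbar:
    for start in range(0, len(data), chunk_size):
        chunk = data[start:start + chunk_size]
        processed += len(chunk)
        pbar.update(len(chunk))  # advance by the amount of work actually done
    final_count = pbar.n         # tqdm's own running counter
```

The last chunk holds only 104 bytes, and the bar still ends exactly at its total because each update uses the real chunk length.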

- write(): print a message without disrupting the progress bar

    pbar = trange(10)
    for i in pbar:
        time.sleep(1)
        if not (i % 3):
            tqdm.write('Done task %i' % i)
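By default `tqdm.write()` prints to `sys.stdout`; its `file` argument sends the message elsewhere. The sketch below collects messages in an in-memory buffer so they can be inspected afterwards. The `log` buffer is an illustrative choice, not something the original example uses.

```python
import io

from tqdm import tqdm, trange

log = io.StringIO()  # illustrative sink; omit file= to write to stdout
for i in trange(10):
    if i % 3 == 0:
        tqdm.write(f"Done task {i}", file=log)

messages = log.getvalue().splitlines()
```

Each call appends the message and a newline to the buffer, so `messages` holds one entry per completed task.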

- set_description() and set_postfix(): set the text shown around the progress bar

    from random import random, randint

    with trange(10) as t:
        for i in t:
            t.set_description("GEN %i" % i)  # text shown to the left of the bar
            t.set_postfix(loss=random(), gen=randint(1, 999), str="h", lst=[1, 2])  # text shown to the right of the bar
            time.sleep(0.1)
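A common use of these two methods is to show a current value next to a running average. The sketch below does this with a synthetic loss; the loss formula and the variable names are assumptions made for illustration, not part of any training code.

```python
import random

from tqdm import trange

random.seed(0)
running = 0.0
with trange(1, 21) as t:
    for step in t:
        t.set_description(f"step {step}")
        loss = 1.0 / step + random.random() * 0.01  # synthetic loss value
        running += (loss - running) / step          # incremental mean of all losses so far
        # Pre-formatted strings keep the postfix at a fixed width.
        t.set_postfix(loss=f"{loss:.3f}", avg=f"{running:.3f}")
```

Formatting the numbers before passing them in keeps the right-hand side of the bar from jittering as the digit count changes.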

3. Example: Handwritten Digit Recognition

    import torch
    import torch.nn as nn
    import torch.optim as optim
    import torch.nn.functional as F
    from torch.utils.data import DataLoader
    import torchvision.datasets as datasets
    import torchvision.transforms as transforms
    from tqdm import tqdm


    class CNN(nn.Module):
        def __init__(self, in_channels=1, num_classes=10):
            super().__init__()
            # Use the in_channels argument rather than a hard-coded 1
            self.conv1 = nn.Conv2d(in_channels=in_channels, out_channels=8, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
            self.pool = nn.MaxPool2d(kernel_size=(2, 2), stride=(2, 2))
            self.conv2 = nn.Conv2d(in_channels=8, out_channels=16, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
            self.fc1 = nn.Linear(16 * 7 * 7, num_classes)

        def forward(self, x):
            x = F.relu(self.conv1(x))
            x = self.pool(x)
            x = F.relu(self.conv2(x))
            x = self.pool(x)
            x = x.reshape(x.shape[0], -1)  # flatten to (batch, features)
            x = self.fc1(x)
            return x


    device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

    # Hyperparameters
    in_channels = 1
    num_classes = 10
    learning_rate = 0.001
    batch_size = 64
    num_epochs = 5

    # Data
    train_dataset = datasets.MNIST(root="dataset/", train=True, transform=transforms.ToTensor(), download=True)
    train_loader = DataLoader(dataset=train_dataset, batch_size=batch_size, shuffle=True)
    test_dataset = datasets.MNIST(root="dataset/", train=False, transform=transforms.ToTensor(), download=True)
    # The test loader must read from test_dataset, and it does not need shuffling
    test_loader = DataLoader(dataset=test_dataset, batch_size=batch_size, shuffle=False)

    # Model, loss, and optimizer
    model = CNN(in_channels=in_channels, num_classes=num_classes).to(device)
    criterion = nn.CrossEntropyLoss()
    optimizer = optim.Adam(model.parameters(), lr=learning_rate)
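The `16*7*7` input size of `fc1` follows from the layer settings: each 3x3 convolution with stride 1 and padding 1 keeps the spatial size, and each 2x2 pooling with stride 2 halves it. The sketch below checks that arithmetic with the standard output-size formula; `conv_out` is a hypothetical helper, and the 28x28 input size is the standard MNIST image size.

```python
def conv_out(size, kernel, stride, padding):
    """Output length of one spatial dimension for a conv or pooling layer."""
    return (size + 2 * padding - kernel) // stride + 1


size = 28                                              # MNIST images are 28x28
size = conv_out(size, kernel=3, stride=1, padding=1)   # conv1: size unchanged
size = conv_out(size, kernel=2, stride=2, padding=0)   # pool: size halved
size = conv_out(size, kernel=3, stride=1, padding=1)   # conv2: size unchanged
size = conv_out(size, kernel=2, stride=2, padding=0)   # pool: size halved
flat_features = 16 * size * size                       # 16 output channels from conv2
```

If the input resolution or the pooling settings change, `fc1` has to be resized to match the new `flat_features`.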

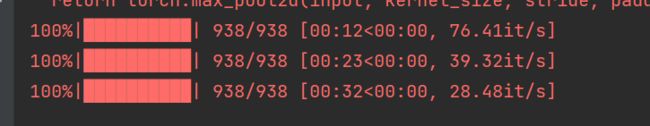

    for epoch in range(num_epochs):
        # enumerate() has no len(), so total is passed explicitly.
        # If the batch index is not needed, use instead:
        #     for data, targets in tqdm(train_loader):
        for index, (data, targets) in tqdm(enumerate(train_loader), total=len(train_loader), leave=True):
            # Move the batch to the GPU if one is available
            data = data.to(device=device)
            targets = targets.to(device=device)

            # forward
            scores = model(data)
            loss = criterion(scores, targets)

            # backward
            optimizer.zero_grad()
            loss.backward()

            # gradient descent or Adam step
            optimizer.step()
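The same loop structure can be tried without PyTorch. The sketch below substitutes a plain list of fake batches for `train_loader`, keeps a handle on the bar so that the epoch number and running counters can be shown, and uses `leave=False` so each finished epoch's bar is cleared. The names `batches`, `loop`, and `seen` are assumptions for illustration, as is the reduced epoch count.

```python
from tqdm import tqdm

batches = [list(range(8)) for _ in range(30)]  # stand-in for train_loader: 30 batches of 8 items
num_epochs = 3
seen = 0

for epoch in range(num_epochs):
    # enumerate() has no len(), so total= is passed explicitly.
    loop = tqdm(enumerate(batches), total=len(batches), leave=False)
    for index, batch in loop:
        seen += len(batch)  # placeholder for the forward/backward pass
        loop.set_description(f"Epoch [{epoch + 1}/{num_epochs}]")
        loop.set_postfix(batch=index, samples=seen)
```

In a real training loop the postfix would typically carry the current loss or accuracy instead of these counters.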

[Reposted from]

- [PyTorch Summary] Using tqdm

- Python's Super Convenient Iteration Progress Bar (tqdm)