VMware Environment Configuration

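Table of Contents

- I. Environment Configuration
  - 1. Change the hostname, then switch to the root user
  - 2. Confirm the host gateway
    - a. Confirm the Windows host gateway
    - b. Confirm the virtual machine gateway
  - 3. Modify the network configuration
  - 4. Set up resolv.conf, the DNS resolution configuration file
  - 5. Modify the hosts file
  - 6. Restart the network service
  - 7. Verify the network
    - a. Ping Baidu from the virtual machine
    - b. Ping the virtual machine from the host
- II. Hadoop Pseudo-Distributed Installation
  - 1. Create the hadoop user
    - a. Create the user
    - b. Add the user to the group
    - c. Grant root privileges
  - 2. Switch to the hadoop user and create directories for the uploaded archives and for the installation
  - 3. Upload the archives
  - 4. Extract the JDK and Hadoop
  - 5. Configure the JDK and Hadoop environment variables
  - 6. Modify the Hadoop configuration files
    - core-site.xml
    - hdfs-site.xml
    - hadoop-env.sh
  - 7. Format the NameNode
  - 8. Configure passwordless login
  - 9. Start the Hadoop cluster
- III. Hive Installation
  - 1. Upload the installation package
  - 2. Upload and extract the installation package
  - 3. Add Hive to the environment variables
  - 4. Modify the Hive configuration files
    - hive-env.sh
    - hive-site.xml
    - hive-log4j2.properties
  - 5. Install MySQL
  - 6. Configure the Hive-related files
  - 7. Initialize Hive
  - 8. Start Hive
- IV. Redis Installation
  - !!! Problems encountered !!!
  - !!! Solutions !!!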

I. Environment Configuration

1. Change the hostname, then switch to the root user

sudo hostnamectl set-hostname Master001

su -l root

2. Confirm the host gateway

a. Confirm the Windows host gateway

b. Confirm the virtual machine gateway
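On the virtual machine, the default gateway can be read from the routing table. The snippet below is only a sketch: `parse_default_gateway` is a hypothetical helper name, and it assumes the `ip` command from iproute2 is installed. On the Windows side, `ipconfig` lists the gateway of each adapter.

```shell
# Hypothetical helper: print the gateway found in "ip route" style text on stdin.
parse_default_gateway() {
    awk '$1 == "default" && $2 == "via" { print $3; exit }'
}

# Usage on the VM (requires the iproute2 "ip" command):
#   ip route show default | parse_default_gateway
```

The address it prints is the value that belongs in the GATEWAY field of the network configuration.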

3. Modify the network configuration

vi /etc/sysconfig/network-scripts/ifcfg-ens33

ONBOOT=yes

IPADDR=192.168.241.101

NETMASK=255.255.255.0

PREFIX=24

GATEWAY=192.168.241.2

BOOTPROTO=static
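With PREFIX=24, the static address and the gateway must share their first three octets, otherwise the gateway is unreachable. A quick sanity check could look like the sketch below; `same_subnet24` is a hypothetical helper name and it only handles the /24 case used here.

```shell
# Hypothetical helper: succeed if two IPv4 addresses share the same /24 network.
same_subnet24() {
    # ${1%.*} strips the last octet, e.g. 192.168.241.101 -> 192.168.241
    [ "${1%.*}" = "${2%.*}" ]
}

# Values taken from the ifcfg-ens33 settings above.
same_subnet24 192.168.241.101 192.168.241.2 && echo "IPADDR and GATEWAY are on the same /24 network"
```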

4. Set up resolv.conf, the DNS resolution configuration file

vi /etc/resolv.conf
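The original does not show what goes into resolv.conf. As an illustration only, the sketch below writes one `nameserver` line per address. `write_resolv_conf` is a hypothetical helper, and using the gateway 192.168.241.2 as the DNS server is an assumption that holds only if the VM sits behind VMware NAT; substitute whatever DNS server your network actually uses.

```shell
# Hypothetical helper: write one "nameserver" line per argument to a resolv.conf-style file.
write_resolv_conf() {
    file=$1; shift
    : > "$file"                      # truncate or create the file
    for ns in "$@"; do
        printf 'nameserver %s\n' "$ns" >> "$file"
    done
}

# Usage as root (assumes the VMware NAT gateway also answers DNS queries):
#   write_resolv_conf /etc/resolv.conf 192.168.241.2
```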

5. Modify the hosts file

vi /etc/hosts
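The entry to add maps the static IP to the hostname set earlier. The sketch below does this without opening an editor and avoids duplicate lines; `add_host_entry` is a hypothetical helper name, and the IP and hostname are the ones used in this article.

```shell
# Hypothetical helper: append "IP HOSTNAME" to a hosts file unless the hostname is already mapped.
add_host_entry() {
    ip=$1; name=$2; file=${3:-/etc/hosts}
    if ! grep -qw -- "$name" "$file" 2>/dev/null; then
        printf '%s %s\n' "$ip" "$name" >> "$file"
    fi
}

# Usage as root on the VM:
#   add_host_entry 192.168.241.101 Master001
```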

6. Restart the network service

nmcli connection reload

nmcli connection up ens33

nmcli d connect ens33

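7. Verify the network

a. Ping Baidu from the virtual machine

b. Ping the virtual machine from the host

II. Hadoop Pseudo-Distributed Installation

1. Create the hadoop user

a. Create the user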

adduser hadoop

passwd hadoop

b. Add the user to the group

usermod -a -G hadoop hadoop

c. Grant root privileges

vi /etc/sudoers

hadoop ALL=(ALL) ALL

2. Switch to the hadoop user and create directories for the uploaded archives and for the installation
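The commands for this step are not shown in the original. A minimal sketch follows: the `module` directory matches the install paths used later in this article, while `software` as the upload directory and the helper name `make_hadoop_dirs` are assumptions.

```shell
# Hypothetical helper: create the upload and install directories under a base directory.
make_hadoop_dirs() {
    base=${1:-/home/hadoop}
    mkdir -p "$base/software" "$base/module"   # "software" is an assumed name for the upload directory
}

# Usage:
#   su - hadoop
#   make_hadoop_dirs
```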

3. Upload the archives

4. Extract the JDK and Hadoop

tar -zxf jdk-8u221-linux-x64.tar.gz -C /home/hadoop/module/

tar -zxf hadoop-3.3.1.tar.gz -C /home/hadoop/module/

5. Configure the JDK and Hadoop environment variables

vi /etc/profile

#JAVA

export JAVA_HOME=/home/hadoop/module/jdk1.8.0_221

export PATH=$PATH:$JAVA_HOME/bin

#HADOOP

export HADOOP_HOME=/home/hadoop/module/hadoop-3.3.1

export PATH=$PATH:$HADOOP_HOME/bin

export PATH=$PATH:$HADOOP_HOME/sbin

export HADOOP_MAPRED_HOME=$HADOOP_HOME

export HADOOP_COMMON_HOME=$HADOOP_HOME

export HADOOP_HDFS_HOME=$HADOOP_HOME

export YARN_HOME=$HADOOP_HOME

export HADOOP_COMMON_LIB_NATIVE_DIR=$HADOOP_HOME/lib/native

export HADOOP_OPTS="-Djava.library.path=$HADOOP_HOME/lib/native"

export JAVA_LIBRARY_PATH=$HADOOP_HOME/lib/native:$JAVA_LIBRARY_PATH
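After saving /etc/profile, the settings only take effect once the file is sourced or you log in again. The sketch below is one way to confirm that the new directories really ended up on PATH; `path_contains` is a hypothetical helper name.

```shell
# Hypothetical helper: succeed if the given directory is an entry of $PATH.
path_contains() {
    case ":$PATH:" in
        *":$1:"*) return 0 ;;
        *)        return 1 ;;
    esac
}

# Usage after editing /etc/profile:
#   source /etc/profile
#   path_contains "$JAVA_HOME/bin"   && java -version
#   path_contains "$HADOOP_HOME/bin" && hadoop version
```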

6. Modify the Hadoop configuration files

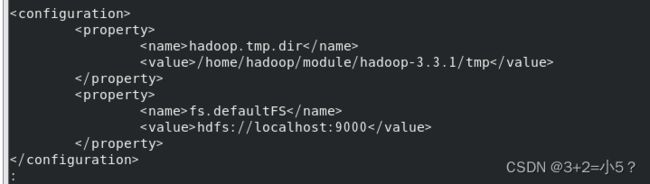

core-site.xml

<property>

<name>hadoop.tmp.dir</name>

<value>/home/hadoop/module/hadoop-3.3.1/tmp</value>

</property>

<property>

<name>fs.defaultFS</name>

<value>hdfs://localhost:9000</value>

</property>

hdfs-site.xml

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

<property>

<name>dfs.namenode.name.dir</name>

<value>/home/hadoop/module/hadoop-3.3.1/tmp/dfs/name</value>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>/home/hadoop/module/hadoop-3.3.1/tmp/dfs/data</value>

</property>

hadoop-env.sh
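The original gives no content for this file. Hadoop's environment script is `hadoop-env.sh` under `$HADOOP_HOME/etc/hadoop`, and the usual change there is to set JAVA_HOME explicitly. The sketch below assumes that is what was intended; `set_hadoop_java_home` is a hypothetical helper name.

```shell
# Hypothetical helper: append a JAVA_HOME export to hadoop-env.sh unless one is already active.
set_hadoop_java_home() {
    env_file=$1; java_home=$2
    if ! grep -q '^export JAVA_HOME=' "$env_file" 2>/dev/null; then
        printf 'export JAVA_HOME=%s\n' "$java_home" >> "$env_file"
    fi
}

# Usage with the paths from this article:
#   set_hadoop_java_home /home/hadoop/module/hadoop-3.3.1/etc/hadoop/hadoop-env.sh /home/hadoop/module/jdk1.8.0_221
```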

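7. Format the NameNode

If you format more than once, delete the data directory first; otherwise the DataNode process will not start, because the clusterID stored in its data directory no longer matches the newly formatted NameNode.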

hdfs namenode -format
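For a repeated format, the cleanup described above could be sketched as follows. `clear_datanode_storage` is a hypothetical helper name; the path in the usage comment is the dfs.datanode.data.dir parent configured in hdfs-site.xml. Note that this destroys all data stored in HDFS.

```shell
# Hypothetical helper: remove the DataNode storage under a Hadoop tmp directory.
clear_datanode_storage() {
    tmp_dir=$1
    [ -n "$tmp_dir" ] || return 1      # refuse to run with an empty path
    rm -rf "$tmp_dir/dfs/data"
}

# Usage (stop HDFS first):
#   stop-dfs.sh
#   clear_datanode_storage /home/hadoop/module/hadoop-3.3.1/tmp
#   hdfs namenode -format
```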

8. Configure passwordless login

ssh-keygen -t rsa -P ''

ssh-copy-id Master001

9. Start the Hadoop cluster
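The start commands are not listed in the original. Hadoop ships `start-dfs.sh` and `start-yarn.sh` in its sbin directory, and `jps` from the JDK lists the running Java processes. The sketch below assumes a pseudo-distributed setup with the five usual daemons; `missing_daemons` is a hypothetical helper that reports which of them did not come up.

```shell
# Hypothetical helper: read "jps" output on stdin and print each expected daemon that is missing.
missing_daemons() {
    jps_out=$(cat)
    for d in NameNode DataNode SecondaryNameNode ResourceManager NodeManager; do
        # Compare the second column exactly, so NameNode does not match SecondaryNameNode.
        printf '%s\n' "$jps_out" | awk -v d="$d" '$2 == d { found = 1 } END { exit !found }' \
            || printf '%s\n' "$d"
    done
}

# Usage as the hadoop user:
#   start-dfs.sh
#   start-yarn.sh
#   jps | missing_daemons     # prints nothing when all five daemons are running
```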

III. Hive Installation

1. Upload the installation package

Download the package: https://hive.apache.org/downloads.html


2. Upload and extract the installation package

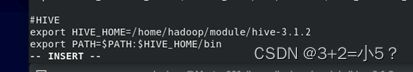
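The extraction step appears only as a screenshot. As a sketch, assuming the archive is the official `apache-hive-3.1.2-bin.tar.gz` and that the extracted directory is renamed to match the HIVE_HOME path used in this article, it might look like this (`install_hive` is a hypothetical helper name):

```shell
# Hypothetical helper: extract the Hive archive into a target directory and rename it to hive-3.1.2.
install_hive() {
    tarball=$1; dest=$2
    tar -zxf "$tarball" -C "$dest" &&
        mv "$dest/apache-hive-3.1.2-bin" "$dest/hive-3.1.2"
}

# Usage as the hadoop user (the archive location is an assumption):
#   install_hive apache-hive-3.1.2-bin.tar.gz /home/hadoop/module
```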

3. Add Hive to the environment variables

export HIVE_HOME=/home/hadoop/module/hive-3.1.2

export PATH=$PATH:$HIVE_HOME/bin

4. Modify the Hive configuration files

hive-env.sh

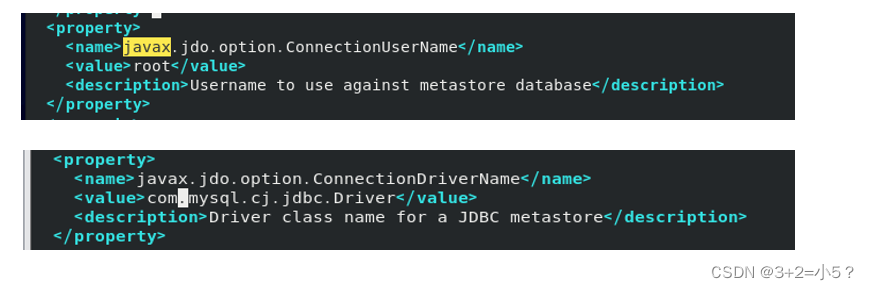

hive-site.xml
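The actual file contents are only available as screenshots. For orientation, here is a minimal sketch of a hive-site.xml that points the metastore at MySQL. The four `javax.jdo.option.*` properties are standard Hive settings and `com.mysql.cj.jdbc.Driver` is the driver class of the 8.x connector; the database name `hive`, the `root` account, the placeholder password, and the helper name `write_hive_site` are all assumptions.

```shell
# Hypothetical helper: write a minimal hive-site.xml that points the metastore at MySQL.
write_hive_site() {
    cat > "$1" <<'EOF'
<?xml version="1.0"?>
<configuration>
  <property>
    <name>javax.jdo.option.ConnectionURL</name>
    <!-- Host, port, and database name are assumptions; adjust them to your MySQL setup. -->
    <value>jdbc:mysql://Master001:3306/hive?createDatabaseIfNotExist=true</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionDriverName</name>
    <value>com.mysql.cj.jdbc.Driver</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionUserName</name>
    <value>root</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionPassword</name>
    <value>CHANGE_ME</value>
  </property>
</configuration>
EOF
}

# Usage:
#   write_hive_site /home/hadoop/module/hive-3.1.2/conf/hive-site.xml
```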

hive-log4j2.properties


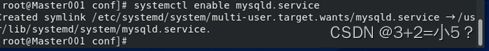

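5. Install MySQL

Check whether the Linux system already has a database installed:

rpm -qa | grep mysql

Install the MySQL database:

yum install -y mysql-server mysql mysql-devel

service mysqld start

service mysqld status

mysql -u root -p

6. Configure the Hive-related files

Download mysql-connector-java-8.0.26.jar and upload it to the lib directory under the Hive installation directory: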

cp mysql-connector-java-8.0.26.jar /home/hadoop/module/hive-3.1.2/lib/

7. Initialize Hive

schematool -dbType mysql -initSchema

8. Start Hive

IV. Redis Installation

!!! Problems encountered !!!

1. Master001: ERROR: Unable to write in /home/hadoop/module/hadoop-3.3.1/logs. Aborting.

2. Warning: Permanently added 'localhost' (ECDSA) to the list of known hosts

3. Starting Hadoop as the root user fails with: Attempting to operate on hdfs namenode as root

4. Running yum install -y mysql-server mysql mysql-devel fails with:

Failed to download metadata for repo 'appstream': Cannot prepare internal mirrorlist: No URLs in mirrorlist

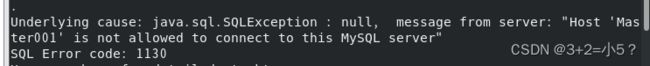

5. Underlying cause: java.sql.SQLException : null, message from server: "Host 'Master001' is not allowed to connect to this MySQL server"

!!! Solutions !!!

1. The current user lacks write permission on the logs directory; grant it:

sudo chmod 777 /home/hadoop/module/hadoop-3.3.1/logs/

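2. This is only an informational SSH message shown on the first connection; no action is needed.

3. When starting Hadoop as root, declare the user for each daemon in /etc/profile:

vi /etc/profile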

export HDFS_NAMENODE_USER=root

export HDFS_DATANODE_USER=root

export HDFS_SECONDARYNAMENODE_USER=root

export YARN_RESOURCEMANAGER_USER=root

export YARN_NODEMANAGER_USER=root

Apply the environment variables:

source /etc/profile

4. Update the repository files under /etc/yum.repos.d so that they use vault.centos.org instead of mirror.centos.org.

Run the following two commands to make the change:

sudo sed -i -e "s|mirrorlist=|#mirrorlist=|g" /etc/yum.repos.d/CentOS-*

sudo sed -i -e "s|#baseurl=http://mirror.centos.org|baseurl=http://vault.centos.org|g" /etc/yum.repos.d/CentOS-*
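To see what the two substitutions do before touching the real repo files, they can be tried on a copy first. The sketch below wraps the same two expressions in a hypothetical helper, `use_vault_repo`, that works on a single file; it assumes GNU sed, which is what CentOS provides.

```shell
# Hypothetical helper: apply the same two substitutions to one repo file (GNU sed assumed).
use_vault_repo() {
    sed -i -e "s|mirrorlist=|#mirrorlist=|g" \
           -e "s|#baseurl=http://mirror.centos.org|baseurl=http://vault.centos.org|g" "$1"
}

# Usage on a scratch copy:
#   cp /etc/yum.repos.d/CentOS-Linux-AppStream.repo /tmp/test.repo   # file name varies by release
#   use_vault_repo /tmp/test.repo && cat /tmp/test.repo
```

Running the substitutions a second time would comment the mirrorlist line twice, so apply them only once per file.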

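5. Allow the MySQL root account to connect from any host. In the mysql client, run:

use mysql;

update user set host ='%' where user ='root';

Restart MySQL: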

service mysqld stop;

service mysqld start;