Offline hybrid deployment: tf-operator, TensorFlow deployment, and Prometheus + Grafana monitoring

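Table of contents

- Deployment environment and requirements
- Deployment steps
- 1. Kubernetes is already set up (Docker, etc.)
- 2. Kubeflow deployment, method 1
- 2.1 Download the kfctl package and the source archive
- Possible error 1): kfctl fails with Segmentation fault
- Transfer with WinSCP
- 2.2 Apply the YAML file
- 2.3 Create the PV and PVC
- 2.4 Build and pull the required images through Alibaba Cloud
- 2.5 Change the image pull policy of each Deployment and StatefulSet
- 3. Kubeflow deployment, method 2
- 3.1 kustomize
- 3.2 Replace the images in the kustomize output
- 3.3 Modify the PVCs to use dynamic storage
- Possible error 2)
- Possible error 3)
- 3.4 Apply the YAML files
- Possible error 4)
- Success
- TensorFlow deployment
- Offline
- Online
- Monitoring resources with Prometheus + Grafana
- 1 Deploy the node-exporter component
- 2 rbac.yaml
- 3 configmap.yaml
- 4 Prometheus deployment
- 5 apply
- 6 Check the related pods
- 7 View the metrics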

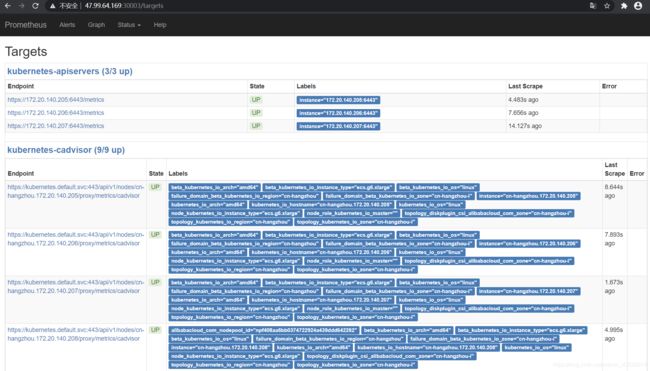

- 8 View the targets
- Grafana
- 1 Grafana deployment
- 2 apply
- 3 Check the Grafana pod and service
- 4 Access

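Deployment environment and requirements

Alibaba Cloud Kubernetes cluster

Kubernetes version: 1.14.6

Worker node configuration:

4 CPU cores, 16 GB memory, 50 GB disk

Because the resource requirements are fairly high, I recommend using Alibaba Cloud ECS or a ready-made cluster directly, to avoid running short of resources.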

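Deployment steps

1. Kubernetes is already set up (Docker, etc.)

2. Kubeflow deployment, method 1

2.1 Download the kfctl package and the source archive

Download the source archives:

// either one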

wget https://github.com/kubeflow/manifests/archive/v1.0.2.tar.gz

wget https://github.com/kubeflow/kfctl/archive/v1.0.2.tar.gz

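I spent a lot of time on this step, because the kfctl package downloads very slowly from GitHub.

You can try running wget directly on the cloud server to pull the archives from GitHub, but the archives I fetched that way turned out to be incomplete.

Possible error 1): an incomplete archive makes the kfctl command fail with: Segmentation fault

This error can also occur when the disk does not meet the stated size requirement.

The approach I finally settled on was to download the files from GitHub to my local machine first (better, and the download is faster), and then upload them from the local machine to the corresponding directory on the Alibaba Cloud server.

Transfer with WinSCP

WinSCP played a major role throughout the Kubeflow installation.

Download the YAML file and modify it: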

wget https://raw.githubusercontent.com/kubeflow/manifests/v1.0-branch/kfdef/kfctl_k8s_istio.v1.0.2.yaml

// edit the contents of kfctl_k8s_istio.v1.0.2.yaml

Change https://github.com/kubeflow/manifests/archive/v1.0.2.tar.gz to file:///root/kubeflow/v1.0.2.tar.gz
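The edit above can also be scripted instead of done by hand. The following is a minimal sketch, assuming the KfDef file has already been read into a string; the function name `point_to_local_archive` is hypothetical, and the sample text is a cut-down stand-in for the real file rather than its actual contents.

```python
REMOTE_URI = "https://github.com/kubeflow/manifests/archive/v1.0.2.tar.gz"
LOCAL_URI = "file:///root/kubeflow/v1.0.2.tar.gz"


def point_to_local_archive(kfdef_text: str) -> str:
    """Return the KfDef text with the manifests archive URI switched to the local file."""
    if REMOTE_URI not in kfdef_text:
        # Fail loudly rather than silently leaving the remote URI in place.
        raise ValueError("remote manifests URI not found; check the file version")
    return kfdef_text.replace(REMOTE_URI, LOCAL_URI)


# Cut-down stand-in for the relevant part of the file (illustrative only).
sample = "repos:\n- name: manifests\n  uri: " + REMOTE_URI + "\n"
patched = point_to_local_archive(sample)
print(patched)
```

In practice you would read kfctl_k8s_istio.v1.0.2.yaml, pass its text through the function, and write the result back before running kfctl.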

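2.2 Apply the YAML file

tar -xvf kfctl_v1.0.2_linux.tar.gz

# Put the directory that contains the extracted kfctl binary between the quotes.
export PATH=$PATH:""

# Name of the Kubeflow deployment (fill in your own).
export KF_NAME=

# Base directory that will hold the deployment (fill in your own).
export BASE_DIR=

export KF_DIR=${BASE_DIR}/${KF_NAME}

# For an offline install, point CONFIG_URI at the locally modified kfctl_k8s_istio.v1.0.2.yaml instead of this URL.
export CONFIG_URI="https://raw.githubusercontent.com/kubeflow/manifests/v1.0-branch/kfdef/kfctl_k8s_istio.v1.0.2.yaml"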

mkdir -p ${KF_DIR}

cd ${KF_DIR}

kfctl apply -V -f ${CONFIG_URI}

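2.3 Create the PV and PVC

Covered in detail under method 2.

2.4 Build and pull the required images through Alibaba Cloud

GitHub link:

link.

After cloning, build the images in the Alibaba Cloud image registry and then pull them from Alibaba Cloud. This avoids the problem of gcr.io being blocked.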

gcr.io/kubeflow-images-public/ingress-setup:latest

gcr.io/kubeflow-images-public/admission-webhook:v1.0.0-gaf96e4e3

gcr.io/kubeflow-images-public/kubernetes-sigs/application:1.0-beta

argoproj/argoui:v2.3.0

gcr.io/kubeflow-images-public/centraldashboard:v1.0.0-g3ec0de71

gcr.io/kubeflow-images-public/jupyter-web-app:v1.0.0-g2bd63238

gcr.io/kubeflow-images-public/katib/v1alpha3/katib-controller:v0.8.0

gcr.io/kubeflow-images-public/katib/v1alpha3/katib-db-manager:v0.8.0

mysql:8

gcr.io/kubeflow-images-public/katib/v1alpha3/katib-ui:v0.8.0

gcr.io/kubebuilder/kube-rbac-proxy:v0.4.0

gcr.io/kfserving/kfserving-controller:0.2.2

metacontroller/metacontroller:v0.3.0

mysql:8.0.3

gcr.io/kubeflow-images-public/metadata:v0.1.11

gcr.io/ml-pipeline/envoy:metadata-grpc

gcr.io/tfx-oss-public/ml_metadata_store_server:v0.21.1

gcr.io/kubeflow-images-public/metadata-frontend:v0.1.8

minio/minio:RELEASE.2018-02-09T22-40-05Z

gcr.io/ml-pipeline/api-server:0.2.5

gcr.io/ml-pipeline/visualization-server:0.2.5

gcr.io/ml-pipeline/persistenceagent:0.2.5

gcr.io/ml-pipeline/scheduledworkflow:0.2.5

gcr.io/ml-pipeline/frontend:0.2.5

gcr.io/ml-pipeline/viewer-crd-controller:0.2.5

mysql:5.6

gcr.io/kubeflow-images-public/notebook-controller:v1.0.0-gcd65ce25

gcr.io/kubeflow-images-public/profile-controller:v1.0.0-ge50a8531

gcr.io/kubeflow-images-public/kfam:v1.0.0-gf3e09203

gcr.io/kubeflow-images-public/pytorch-operator:v1.0.0-g047cf0f

docker.io/seldonio/seldon-core-operator:1.0.1

gcr.io/spark-operator/spark-operator:v1beta2-1.0.0-2.4.4


gcr.io/google_containers/spartakus-amd64:v1.1.0

tensorflow/tensorflow:1.8.0

gcr.io/kubeflow-images-public/tf_operator:v1.0.0-g92389064

argoproj/workflow-controller:v2.3.0

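2.5 Change the image pull policy of each Deployment and StatefulSet

The pull policy in these manifests is Always; change the value after imagePullPolicy to IfNotPresent so that the locally loaded images are used.

For example:

kubectl edit deploy <deployment-name> -n kubeflow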
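Editing every Deployment and StatefulSet by hand is tedious. As an alternative, the manifest text can be patched in one pass before it is applied. This is a minimal sketch, assuming the manifests are available as text (for example from `kubectl get deploy -n kubeflow -o yaml`); the function name `use_local_images` and the sample manifest are hypothetical.

```python
import re


def use_local_images(manifest_text: str) -> str:
    """Rewrite every 'imagePullPolicy: Always' to 'imagePullPolicy: IfNotPresent'."""
    return re.sub(r"(imagePullPolicy:\s*)Always\b", r"\1IfNotPresent", manifest_text)


# Tiny illustrative fragment, not taken from the real Kubeflow manifests.
sample = (
    "containers:\n"
    "- name: demo\n"
    "  image: mysql:5.6\n"
    "  imagePullPolicy: Always\n"
)
patched = use_local_images(sample)
print(patched)
```

Other policy values are left untouched, so running the function twice gives the same result as running it once.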

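Deployment succeeded.

I ran into many problems when deploying with the official method above, so I recommend the following method instead: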

3. Kubeflow deployment, method 2

3.1 kustomize

Method 1 installs with kfctl, but under the hood kfctl installs with kustomize.

git clone https://github.com/kubeflow/manifests

cd manifests

git checkout v0.6-branch

cd /base

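kubectl kustomize . | tee <output-file>

3.2 Replace the images in the kustomize output

grc_image lists the original images referenced by the manifests, and doc_image lists the corresponding mirror images on Alibaba Cloud, in the same order.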

grc_image = [

"gcr.io/kubeflow-images-public/ingress-setup:latest",

"gcr.io/kubeflow-images-public/admission-webhook:v20190520-v0-139-gcee39dbc-dirty-0d8f4c",

"gcr.io/kubeflow-images-public/kubernetes-sigs/application:1.0-beta",

"gcr.io/kubeflow-images-public/centraldashboard:v20190823-v0.6.0-rc.0-69-gcb7dab59",

"gcr.io/kubeflow-images-public/jupyter-web-app:9419d4d",

"gcr.io/kubeflow-images-public/katib/v1alpha2/katib-controller:v0.6.0-rc.0",

"gcr.io/kubeflow-images-public/katib/v1alpha2/katib-manager:v0.6.0-rc.0",

"gcr.io/kubeflow-images-public/katib/v1alpha2/katib-manager-rest:v0.6.0-rc.0",

"gcr.io/kubeflow-images-public/katib/v1alpha2/suggestion-bayesianoptimization:v0.6.0-rc.0",

"gcr.io/kubeflow-images-public/katib/v1alpha2/suggestion-grid:v0.6.0-rc.0",

"gcr.io/kubeflow-images-public/katib/v1alpha2/suggestion-hyperband:v0.6.0-rc.0",

"gcr.io/kubeflow-images-public/katib/v1alpha2/suggestion-nasrl:v0.6.0-rc.0",

"gcr.io/kubeflow-images-public/katib/v1alpha2/suggestion-random:v0.6.0-rc.0",

"gcr.io/kubeflow-images-public/katib/v1alpha2/katib-ui:v0.6.0-rc.0",

"gcr.io/kubeflow-images-public/metadata:v0.1.8",

"gcr.io/kubeflow-images-public/metadata-frontend:v0.1.8",

"gcr.io/ml-pipeline/api-server:0.1.23",

"gcr.io/ml-pipeline/persistenceagent:0.1.23",

"gcr.io/ml-pipeline/scheduledworkflow:0.1.23",

"gcr.io/ml-pipeline/frontend:0.1.23",

"gcr.io/ml-pipeline/viewer-crd-controller:0.1.23",

"gcr.io/kubeflow-images-public/notebook-controller:v20190603-v0-175-geeca4530-e3b0c4",

"gcr.io/kubeflow-images-public/profile-controller:v20190619-v0-219-gbd3daa8c-dirty-1ced0e",

"gcr.io/kubeflow-images-public/kfam:v20190612-v0-170-ga06cdb79-dirty-a33ee4",

"gcr.io/kubeflow-images-public/pytorch-operator:v1.0.0-rc.0",

"gcr.io/google_containers/spartakus-amd64:v1.1.0",

"gcr.io/kubeflow-images-public/tf_operator:v0.6.0.rc0",

"gcr.io/arrikto/kubeflow/oidc-authservice:v0.2"

]

doc_image = [

"registry.cn-shenzhen.aliyuncs.com/shikanon/kubeflow-images-public.ingress-setup:latest",

"registry.cn-shenzhen.aliyuncs.com/shikanon/kubeflow-images-public.admission-webhook:v20190520-v0-139-gcee39dbc-dirty-0d8f4c",

"registry.cn-shenzhen.aliyuncs.com/shikanon/kubeflow-images-public.kubernetes-sigs.application:1.0-beta",

"registry.cn-shenzhen.aliyuncs.com/shikanon/kubeflow-images-public.centraldashboard:v20190823-v0.6.0-rc.0-69-gcb7dab59",

"registry.cn-shenzhen.aliyuncs.com/shikanon/kubeflow-images-public.jupyter-web-app:9419d4d",

"registry.cn-shenzhen.aliyuncs.com/shikanon/kubeflow-images-public.katib.v1alpha2.katib-controller:v0.6.0-rc.0",

"registry.cn-shenzhen.aliyuncs.com/shikanon/kubeflow-images-public.katib.v1alpha2.katib-manager:v0.6.0-rc.0",

"registry.cn-shenzhen.aliyuncs.com/shikanon/kubeflow-images-public.katib.v1alpha2.katib-manager-rest:v0.6.0-rc.0",

"registry.cn-shenzhen.aliyuncs.com/shikanon/kubeflow-images-public.katib.v1alpha2.suggestion-bayesianoptimization:v0.6.0-rc.0",

"registry.cn-shenzhen.aliyuncs.com/shikanon/kubeflow-images-public.katib.v1alpha2.suggestion-grid:v0.6.0-rc.0",

"registry.cn-shenzhen.aliyuncs.com/shikanon/kubeflow-images-public.katib.v1alpha2.suggestion-hyperband:v0.6.0-rc.0",

"registry.cn-shenzhen.aliyuncs.com/shikanon/kubeflow-images-public.katib.v1alpha2.suggestion-nasrl:v0.6.0-rc.0",

"registry.cn-shenzhen.aliyuncs.com/shikanon/kubeflow-images-public.katib.v1alpha2.suggestion-random:v0.6.0-rc.0",

"registry.cn-shenzhen.aliyuncs.com/shikanon/kubeflow-images-public.katib.v1alpha2.katib-ui:v0.6.0-rc.0",

"registry.cn-shenzhen.aliyuncs.com/shikanon/kubeflow-images-public.metadata:v0.1.8",

"registry.cn-shenzhen.aliyuncs.com/shikanon/kubeflow-images-public.metadata-frontend:v0.1.8",

"registry.cn-shenzhen.aliyuncs.com/shikanon/ml-pipeline.api-server:0.1.23",

"registry.cn-shenzhen.aliyuncs.com/shikanon/ml-pipeline.persistenceagent:0.1.23",

"registry.cn-shenzhen.aliyuncs.com/shikanon/ml-pipeline.scheduledworkflow:0.1.23",

"registry.cn-shenzhen.aliyuncs.com/shikanon/ml-pipeline.frontend:0.1.23",

"registry.cn-shenzhen.aliyuncs.com/shikanon/ml-pipeline.viewer-crd-controller:0.1.23",

"registry.cn-shenzhen.aliyuncs.com/shikanon/kubeflow-images-public.notebook-controller:v20190603-v0-175-geeca4530-e3b0c4",

"registry.cn-shenzhen.aliyuncs.com/shikanon/kubeflow-images-public.profile-controller:v20190619-v0-219-gbd3daa8c-dirty-1ced0e",

"registry.cn-shenzhen.aliyuncs.com/shikanon/kubeflow-images-public.kfam:v20190612-v0-170-ga06cdb79-dirty-a33ee4",

"registry.cn-shenzhen.aliyuncs.com/shikanon/kubeflow-images-public.pytorch-operator:v1.0.0-rc.0",

"registry.cn-shenzhen.aliyuncs.com/shikanon/google_containers.spartakus-amd64:v1.1.0",

"registry.cn-shenzhen.aliyuncs.com/shikanon/kubeflow-images-public.tf_operator:v0.6.0.rc0",

"registry.cn-shenzhen.aliyuncs.com/shikanon/arrikto.kubeflow.oidc-authservice:v0.2"

]
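The two lists follow a regular pattern: the `gcr.io/` prefix is dropped, the remaining slashes become dots, and the mirror registry prefix is prepended. The sketch below derives the mirror name from that pattern and substitutes it into manifest text. It is only an illustration of the mapping, not the script from the linked repository; the function names `mirror_name` and `replace_images` are hypothetical.

```python
MIRROR_PREFIX = "registry.cn-shenzhen.aliyuncs.com/shikanon/"


def mirror_name(image: str) -> str:
    """Derive the mirror name for a gcr.io image, following the pattern in the two lists."""
    if not image.startswith("gcr.io/"):
        raise ValueError(f"not a gcr.io image: {image}")
    path = image[len("gcr.io/"):]
    # Slashes in the repository path become dots; the tag is kept unchanged.
    return MIRROR_PREFIX + path.replace("/", ".")


def replace_images(manifest_text: str, images: list) -> str:
    """Replace each listed gcr.io image in the manifest text with its mirror name."""
    for image in images:
        manifest_text = manifest_text.replace(image, mirror_name(image))
    return manifest_text


sample = "image: gcr.io/ml-pipeline/api-server:0.1.23\n"
print(replace_images(sample, ["gcr.io/ml-pipeline/api-server:0.1.23"]))
```

Passing `grc_image` as the `images` argument applies the substitution to the whole kustomize output.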

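3.3 Modify the PVCs to use dynamic storage

Use local-path-provisioner to provision PVs dynamically.

Otherwise:

Possible error 2)

Pods that rely on a StorageClass, such as mysql, stay in the Pending state and never run.

Solution:

Install local-path-provisioner:

kubectl apply -f https://raw.githubusercontent.com/rancher/local-path-provisioner/master/deploy/local-path-storage.yaml

# or, from a local copy of the manifest shown below:
kubectl apply -f local-path-storage.yaml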

apiVersion: v1
kind: Namespace
metadata:
  name: local-path-storage
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: local-path-provisioner-service-account
  namespace: local-path-storage
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: local-path-provisioner-role
rules:
- apiGroups: [""]
  resources: ["nodes", "persistentvolumeclaims"]
  verbs: ["get", "list", "watch"]
- apiGroups: [""]
  resources: ["endpoints", "persistentvolumes", "pods"]
  verbs: ["*"]
- apiGroups: [""]
  resources: ["events"]
  verbs: ["create", "patch"]
- apiGroups: ["storage.k8s.io"]
  resources: ["storageclasses"]
  verbs: ["get", "list", "watch"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: local-path-provisioner-bind
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: local-path-provisioner-role
subjects:
- kind: ServiceAccount
  name: local-path-provisioner-service-account
  namespace: local-path-storage
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: local-path-provisioner
  namespace: local-path-storage
spec:
  replicas: 1
  selector:
    matchLabels:
      app: local-path-provisioner
  template:
    metadata:
      labels:
        app: local-path-provisioner
    spec:
      serviceAccountName: local-path-provisioner-service-account
      containers:
      - name: local-path-provisioner
        image: rancher/local-path-provisioner:v0.0.11
        imagePullPolicy: IfNotPresent
        command:
        - local-path-provisioner
        - --debug
        - start
        - --config
        - /etc/config/config.json
        volumeMounts:
        - name: config-volume
          mountPath: /etc/config/
        env:
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
      volumes:
      - name: config-volume
        configMap:
          name: local-path-config
---
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: local-path
  annotations: # mark this as the default StorageClass
    storageclass.beta.kubernetes.io/is-default-class: "true"
provisioner: rancher.io/local-path
volumeBindingMode: WaitForFirstConsumer
reclaimPolicy: Delete
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: local-path-config
  namespace: local-path-storage
data:
  config.json: |-
    {
      "nodePathMap":[
        {
          "node":"DEFAULT_PATH_FOR_NON_LISTED_NODES",
          "paths":["/opt/local-path-provisioner"]
        }
      ]
    }

Possible error 3)

Crashes may occur at this point. Cause: the firewall (iptables) rules are in a disordered state, or stale rules are cached.

Solution:

iptables --flush

iptables -t nat --flush

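3.4 Apply the YAML files

Create the namespace first.

namespace.yaml

apiVersion: v1
kind: Namespace
metadata:
  name: kubeflow

The remaining manifests are long, so I will not go through them here. At this point you can use an installer that someone else has already put together.

One-click install: link.

Note: the firewall and dynamic-storage fixes described above are still required, and they must be done before the YAML is applied, that is, before running the Python file from the link.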

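Possible error 4)

A few pods may stay in the Pending state. Inspecting them (for example with kubectl describe pod) shows that the cause is insufficient resources.

Solution: add more worker nodes.

This is another reason to recommend Alibaba Cloud ECS or a ready-made cluster.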
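To spot stuck pods quickly, the output of `kubectl get pods -n kubeflow` can be filtered for anything that is not running. This is a minimal sketch that parses the default tabular output; the function name `pods_not_running` is hypothetical, and the sample output is made up for illustration.

```python
def pods_not_running(kubectl_output: str) -> list:
    """Return (name, status) pairs for pods whose STATUS is neither Running nor Completed."""
    lines = kubectl_output.strip().splitlines()
    header = lines[0].split()
    name_col = header.index("NAME")
    status_col = header.index("STATUS")
    result = []
    for line in lines[1:]:
        fields = line.split()
        if not fields:
            continue
        status = fields[status_col]
        if status not in ("Running", "Completed"):
            result.append((fields[name_col], status))
    return result


# Made-up sample in the default `kubectl get pods` layout.
sample = """\
NAME                     READY   STATUS    RESTARTS   AGE
mysql-demo-1             0/1     Pending   0          5m
tf-job-operator-demo-2   1/1     Running   0          5m
"""
print(pods_not_running(sample))
```

Each pod reported here is a candidate for `kubectl describe pod`, which shows whether the scheduler rejected it for lack of CPU, memory, or a bound volume.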

Success:

PVs and PVCs after a successful deployment:

kubectl get pv

kubectl get pvc

Kubeflow pods after a successful deployment (excerpt):

kubectl get pods -n kubeflow

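TensorFlow deployment

TensorFlow is a powerful open-source software library developed by the Google Brain team for deep neural networks (DNNs).

It lets you deploy deep neural network computation to servers, PCs, or mobile devices with any number of CPUs or GPUs, all through a single TensorFlow API.

TensorFlow also has the following features:

1. Supports popular languages such as Python, C++, Java, R, and Go
2. Runs on many platforms, including mobile and distributed platforms
3. Supported by the major cloud services (AWS, Google, and Azure)
4. Keras, a high-level neural network API, is already integrated with TensorFlow
5. Compared with Torch/Theano, TensorFlow has better computational graph visualization
6. Allows models to be deployed to production and is easy to use
7. Has good community support
8. TensorFlow is more than a software library; it is a suite that includes TensorFlow, TensorBoard, and TensorFlow Serving

Kubeflow simplifies TensorFlow deployment through several core components:

1. TF operator

tf-operator is Kubeflow's first CRD implementation. It addresses TensorFlow model training, offers broad flexibility and configurability, integrates with NAS and OSS on Alibaba Cloud, and provides a simple UI for viewing the training history.

2. TF job

A new resource type. You do not need to write complex configuration; you only need to care about the data input, the code to run, and the log output.

The TFJob object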

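| Field | Type | Description |
|---|---|---|
| apiVersion | string | API version; kubeflow.org/v1alpha1 in the description this table comes from (newer tf-operator releases serve kubeflow.org/v1) |
| kind | string | REST resource type; here, TFJob |
| metadata | ObjectMeta | Standard metadata definition |
| spec | TFJobSpec | Definition of the TensorFlow job |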
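To make the four fields concrete, the sketch below builds a minimal TFJob as a Python dictionary and prints it as JSON, which `kubectl apply -f` accepts as well as YAML. It assumes the `kubeflow.org/v1` API version rather than the `v1alpha1` named in the table, and the job name, command, and the helper `make_tfjob` are hypothetical; the image is one that appears in the image list earlier in this post.

```python
import json


def make_tfjob(name: str, image: str, workers: int, command: list) -> dict:
    """Build a minimal TFJob manifest (assumes the kubeflow.org/v1 schema)."""
    return {
        "apiVersion": "kubeflow.org/v1",  # assumption: v1 API, not the v1alpha1 from the table
        "kind": "TFJob",
        "metadata": {"name": name, "namespace": "kubeflow"},
        "spec": {
            "tfReplicaSpecs": {
                "Worker": {
                    "replicas": workers,
                    "restartPolicy": "OnFailure",
                    "template": {
                        "spec": {
                            "containers": [
                                # In the v1 schema the training container is named "tensorflow".
                                {"name": "tensorflow", "image": image, "command": command}
                            ]
                        }
                    },
                }
            }
        },
    }


tfjob = make_tfjob(
    "demo-tfjob",
    "tensorflow/tensorflow:1.8.0",
    1,
    ["python", "-c", "import tensorflow as tf; print(tf.__version__)"],
)
print(json.dumps(tfjob, indent=2))
```

With one worker this corresponds to the single-pod offline case; raising `workers` (and adding a `PS` replica spec alongside `Worker`) is how the same object describes a distributed job.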

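3. TF hub

A library for publishing, discovering, and reusing parts of machine learning models. Its main use is to reuse pieces of pretrained TensorFlow models in new tasks.

Offline:

Offline tasks do not need fast responses, but they are compute-heavy and consume a lot of resources. For learning purposes, we can simply treat an offline task as running a single pod.

Online:

Use distributed TensorFlow (multiple GPUs).

References:

Link 1: link.

Link 2: link.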

Monitoring resources with Prometheus + Grafana

1 Deploy the node-exporter component

# extensions/v1beta1 works on Kubernetes 1.14; from 1.16 on, use apps/v1 and add spec.selector.
apiVersion: extensions/v1beta1
kind: DaemonSet
metadata:
  name: node-exporter
  namespace: kube-system
  labels:
    k8s-app: node-exporter
spec:
  template:
    metadata:
      labels:
        k8s-app: node-exporter
    spec:
      containers:
      - image: prom/node-exporter
        name: node-exporter
        ports:
        - containerPort: 9100
          protocol: TCP
          name: http
---
apiVersion: v1
kind: Service
metadata:
  labels:
    k8s-app: node-exporter
  name: node-exporter
  namespace: kube-system
spec:
  ports:
  - name: http
    port: 9100
    nodePort: 31672
    protocol: TCP
  type: NodePort
  selector:
    k8s-app: node-exporter

2 rbac.yaml

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: prometheus
rules:
- apiGroups: [""]
  resources:
  - nodes
  - nodes/proxy
  - services
  - endpoints
  - pods
  verbs: ["get", "list", "watch"]
- apiGroups:
  - extensions
  resources:
  - ingresses
  verbs: ["get", "list", "watch"]
- nonResourceURLs: ["/metrics"]
  verbs: ["get"]
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: prometheus
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: prometheus
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: prometheus
subjects:
- kind: ServiceAccount
  name: prometheus
  namespace: kube-system

3 configmap.yaml

apiVersion: v1
kind: ConfigMap
metadata:
  name: prometheus-config
  namespace: kube-system
data:
  prometheus.yml: |
    global:
      scrape_interval: 15s
      evaluation_interval: 15s
    scrape_configs:

    - job_name: 'kubernetes-apiservers'
      kubernetes_sd_configs:
      - role: endpoints
      scheme: https
      tls_config:
        ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
      bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
      relabel_configs:
      - source_labels: [__meta_kubernetes_namespace, __meta_kubernetes_service_name, __meta_kubernetes_endpoint_port_name]
        action: keep
        regex: default;kubernetes;https

    - job_name: 'kubernetes-nodes'
      kubernetes_sd_configs:
      - role: node
      scheme: https
      tls_config:
        ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
      bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
      relabel_configs:
      - action: labelmap
        regex: __meta_kubernetes_node_label_(.+)
      - target_label: __address__
        replacement: kubernetes.default.svc:443
      - source_labels: [__meta_kubernetes_node_name]
        regex: (.+)
        target_label: __metrics_path__
        replacement: /api/v1/nodes/${1}/proxy/metrics

    - job_name: 'kubernetes-cadvisor'
      kubernetes_sd_configs:
      - role: node
      scheme: https
      tls_config:
        ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
      bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
      relabel_configs:
      - action: labelmap
        regex: __meta_kubernetes_node_label_(.+)
      - target_label: __address__
        replacement: kubernetes.default.svc:443
      - source_labels: [__meta_kubernetes_node_name]
        regex: (.+)
        target_label: __metrics_path__
        replacement: /api/v1/nodes/${1}/proxy/metrics/cadvisor

    - job_name: 'kubernetes-service-endpoints'
      kubernetes_sd_configs:
      - role: endpoints
      relabel_configs:
      - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scrape]
        action: keep
        regex: true
      - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scheme]
        action: replace
        target_label: __scheme__
        regex: (https?)
      - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_path]
        action: replace
        target_label: __metrics_path__
        regex: (.+)
      - source_labels: [__address__, __meta_kubernetes_service_annotation_prometheus_io_port]
        action: replace
        target_label: __address__
        regex: ([^:]+)(?::\d+)?;(\d+)
        replacement: $1:$2
      - action: labelmap
        regex: __meta_kubernetes_service_label_(.+)
      - source_labels: [__meta_kubernetes_namespace]
        action: replace
        target_label: kubernetes_namespace
      - source_labels: [__meta_kubernetes_service_name]
        action: replace
        target_label: kubernetes_name

    - job_name: 'kubernetes-services'
      kubernetes_sd_configs:
      - role: service
      metrics_path: /probe
      params:
        module: [http_2xx]
      relabel_configs:
      - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_probe]
        action: keep
        regex: true
      - source_labels: [__address__]
        target_label: __param_target
      - target_label: __address__
        replacement: blackbox-exporter.example.com:9115
      - source_labels: [__param_target]
        target_label: instance
      - action: labelmap
        regex: __meta_kubernetes_service_label_(.+)
      - source_labels: [__meta_kubernetes_namespace]
        target_label: kubernetes_namespace
      - source_labels: [__meta_kubernetes_service_name]
        target_label: kubernetes_name

    - job_name: 'kubernetes-ingresses'
      kubernetes_sd_configs:
      - role: ingress
      relabel_configs:
      - source_labels: [__meta_kubernetes_ingress_annotation_prometheus_io_probe]
        action: keep
        regex: true
      - source_labels: [__meta_kubernetes_ingress_scheme,__address__,__meta_kubernetes_ingress_path]
        regex: (.+);(.+);(.+)
        replacement: ${1}://${2}${3}
        target_label: __param_target
      - target_label: __address__
        replacement: blackbox-exporter.example.com:9115
      - source_labels: [__param_target]
        target_label: instance
      - action: labelmap
        regex: __meta_kubernetes_ingress_label_(.+)
      - source_labels: [__meta_kubernetes_namespace]
        target_label: kubernetes_namespace
      - source_labels: [__meta_kubernetes_ingress_name]
        target_label: kubernetes_name

    - job_name: 'kubernetes-pods'
      kubernetes_sd_configs:
      - role: pod
      relabel_configs:
      - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_scrape]
        action: keep
        regex: true
      - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_path]
        action: replace
        target_label: __metrics_path__
        regex: (.+)
      - source_labels: [__address__, __meta_kubernetes_pod_annotation_prometheus_io_port]
        action: replace
        regex: ([^:]+)(?::\d+)?;(\d+)
        replacement: $1:$2
        target_label: __address__
      - action: labelmap
        regex: __meta_kubernetes_pod_label_(.+)
      - source_labels: [__meta_kubernetes_namespace]
        action: replace
        target_label: kubernetes_namespace
      - source_labels: [__meta_kubernetes_pod_name]
        action: replace
        target_label: kubernetes_pod_name
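The `__address__` rewrite rule in the `kubernetes-service-endpoints` and `kubernetes-pods` jobs is the least obvious part of this config: it swaps the discovered port for the one given in the `prometheus.io/port` annotation. The sketch below imitates that single rule in Python to show what the regex does. It is an approximation for illustration, not Prometheus code, and the function name `relabel_address` and the sample addresses are hypothetical.

```python
import re


def relabel_address(address: str, port_annotation: str) -> str:
    """Imitate the __address__ replace rule for one target."""
    # Prometheus joins the source_labels values with ';' before matching.
    joined = f"{address};{port_annotation}"
    # Prometheus regexes are fully anchored, hence fullmatch.
    match = re.fullmatch(r"([^:]+)(?::\d+)?;(\d+)", joined)
    if match is None:
        # When the regex does not match, a replace action leaves the label unchanged.
        return address
    return f"{match.group(1)}:{match.group(2)}"


print(relabel_address("10.0.0.5:8080", "9100"))
print(relabel_address("10.0.0.5", "9100"))
print(relabel_address("10.0.0.5:8080", ""))
```

The first two calls end up on port 9100, while the third keeps the discovered address because the annotation is missing.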

4 Prometheus deployment

YAML file:

# apps/v1beta2 works on Kubernetes 1.14; from 1.16 on, use apps/v1.
apiVersion: apps/v1beta2
kind: Deployment
metadata:
  labels:
    name: prometheus-deployment
  name: prometheus
  namespace: kube-system
spec:
  replicas: 1
  selector:
    matchLabels:
      app: prometheus
  template:
    metadata:
      labels:
        app: prometheus
    spec:
      containers:
      - image: prom/prometheus:v2.0.0
        name: prometheus
        command:
        - "/bin/prometheus"
        args:
        - "--config.file=/etc/prometheus/prometheus.yml"
        - "--storage.tsdb.path=/prometheus"
        - "--storage.tsdb.retention=24h"
        ports:
        - containerPort: 9090
          protocol: TCP
        volumeMounts:
        - mountPath: "/prometheus"
          name: data
        - mountPath: "/etc/prometheus"
          name: config-volume
        resources:
          requests:
            cpu: 100m
            memory: 100Mi
          limits:
            cpu: 500m
            memory: 2500Mi
      serviceAccountName: prometheus
      volumes:
      - name: data
        emptyDir: {}
      - name: config-volume
        configMap:
          name: prometheus-config
---
kind: Service
apiVersion: v1
metadata:
  labels:
    app: prometheus
  name: prometheus
  namespace: kube-system
spec:
  type: NodePort
  ports:
  - port: 9090
    targetPort: 9090
    nodePort: 30003
  selector:
    app: prometheus

5 apply

kubectl create -f node-exporter.yaml

kubectl create -f rbac.yaml

kubectl create -f configmap.yaml

kubectl create -f prometheus.yaml

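6 Check the related pods

kubectl get pods -n kube-system

kubectl get svc -n kube-system

7 View the metrics

http://localhost:31672/metrics

On Alibaba Cloud, use the node's public IP instead of localhost.

8 View the targets

http://localhost:30003/targets

If you deploy on Alibaba Cloud ECS, you will find that this page is the same as the Prometheus monitoring page that comes with ECS.

You can use features such as Graph in the top bar for more concrete monitoring.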
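The information on the targets page is also available as JSON from the Prometheus HTTP API at `/api/v1/targets`. The sketch below summarizes such a response by job and health. It works on an already decoded response body, so it runs without a cluster; the function name `summarize_targets` is hypothetical and the sample response is made up and reduced to the fields the function reads.

```python
from collections import Counter


def summarize_targets(response: dict) -> dict:
    """Count active targets per (job, health) from a /api/v1/targets response body."""
    counts = Counter()
    for target in response["data"]["activeTargets"]:
        job = target["labels"].get("job", "unknown")
        counts[(job, target["health"])] += 1
    return dict(counts)


# Made-up response, trimmed to the fields used above.
sample_response = {
    "status": "success",
    "data": {
        "activeTargets": [
            {"labels": {"job": "kubernetes-nodes"}, "health": "up"},
            {"labels": {"job": "kubernetes-nodes"}, "health": "up"},
            {"labels": {"job": "kubernetes-pods"}, "health": "down"},
        ]
    },
}
print(summarize_targets(sample_response))
```

Against a live deployment, the body could be fetched with `urllib.request.urlopen("http://localhost:30003/api/v1/targets")` and decoded with `json.load` before being passed to the function.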

Grafana

1 Grafana deployment

# extensions/v1beta1 works on Kubernetes 1.14; from 1.16 on, use apps/v1 and add spec.selector.
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: grafana-core
  namespace: kube-system
  labels:
    app: grafana
    component: core
spec:
  replicas: 1
  template:
    metadata:
      labels:
        app: grafana
        component: core
    spec:
      containers:
      - image: grafana/grafana:5.0.0
        name: grafana-core
        imagePullPolicy: IfNotPresent
        resources:
          limits:
            cpu: 100m
            memory: 100Mi
          requests:
            cpu: 100m
            memory: 100Mi
        env:
        - name: GF_AUTH_BASIC_ENABLED
          value: "true"
        - name: GF_AUTH_ANONYMOUS_ENABLED
          value: "false"
        readinessProbe:
          httpGet:
            path: /login
            port: 3000
        volumeMounts:
        - name: grafana-persistent-storage
          mountPath: /var
      volumes:
      - name: grafana-persistent-storage
        emptyDir: {}
---
apiVersion: v1
kind: Service
metadata:
  name: grafana
  namespace: kube-system
  labels:
    app: grafana
    component: core
spec:
  type: NodePort
  ports:
  - port: 3000
    nodePort: 31000
  selector:
    app: grafana

2 apply

kubectl create -f grafana.yaml

3 Check the Grafana pod and service

kubectl get pod -n kube-system

kubectl get svc -n kube-system

4 Access

http://localhost:31000

// initial username: admin

// initial password: admin

In the data source settings, choose Prometheus as the type and set the corresponding URL.
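The same data source can be described as a JSON payload for Grafana's HTTP API (`POST /api/datasources`) instead of being entered in the UI. The sketch below only builds the payload. The URL is an assumption derived from the Service named `prometheus` in the `kube-system` namespace on port 9090 defined earlier, and the helper name `prometheus_datasource` is hypothetical.

```python
import json


def prometheus_datasource(url: str, name: str = "Prometheus") -> dict:
    """Build a Grafana data source definition that points at a Prometheus server."""
    return {
        "name": name,
        "type": "prometheus",
        "url": url,
        "access": "proxy",  # Grafana's backend, not the browser, queries Prometheus
        "isDefault": True,
    }


# Assumed in-cluster address of the Prometheus Service created above.
payload = prometheus_datasource("http://prometheus.kube-system.svc:9090")
print(json.dumps(payload, indent=2))
```

When filling in the form by hand, the same values go into the Name, Type, URL, and Access fields.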

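Grafana offers more varied visualizations than Prometheus and has many dashboard templates. While learning, I found that the template with ID 315 is fairly general; you can import it directly under Import to get a comprehensive overview.

For example, the CPU usage of the different pods is shown here: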