Deploying Harbor on k8s with Domain-Name Access through Nginx-Ingress

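Contents

1. k8s cluster environment (installed and deployed with KubeSphere)

1.1 Basic cluster information

1.2 Cluster node information

2. Install Harbor

2.1 Add the Harbor repository with Helm

2.2 Generate a certificate with openssl

2.3 Create the secret

2.4 Create the NFS storage directories

2.5 Create the PVs

2.6 Create the PVCs

2.7 The values.yaml configuration file

2.8 Deployment commands

2.9 Edit the ingress (editing works like vim)

2.9.1 Deploy nginx-ingress-controller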

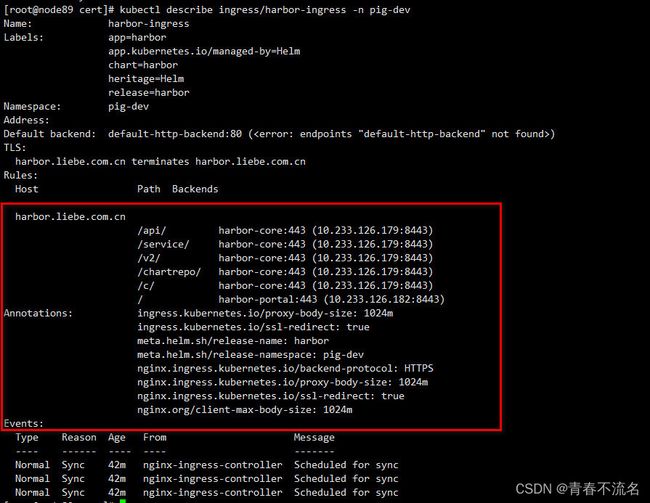

2.9.2 Check the resulting Ingress configuration

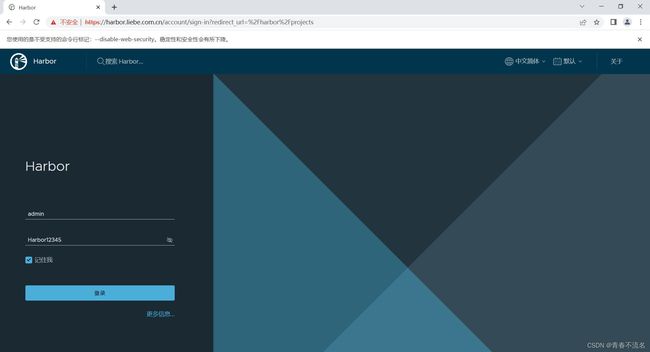

3. Access

3.1 Configure hosts on Windows

3.2 Access URL

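1. k8s cluster environment (installed and deployed with KubeSphere)

1.1 Basic cluster information

1.2 Cluster node information

2. Install Harbor

2.1 Add the Harbor repository with Helm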

helm repo add harbor https://helm.goharbor.io

helm pull harbor/harbor

Running the commands above downloads the chart archive harbor-1.10.2.tgz. Extract the archive and rename the extracted directory to harbor.
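You can pin the pull to the chart version named above so the tutorial produces the same chart every time it is re-run. The sketch below illustrates one way to do this and is not the author's procedure. The function names `run` and `fetch_harbor_chart` and the `DRY_RUN` switch are hypothetical. `helm repo add`, `helm repo update` and `helm pull --version ... --untar` are standard Helm 3 commands.

```shell
#!/usr/bin/env bash
# Sketch (assumption): fetch the Harbor chart at a pinned version.
# 1.10.2 is the chart version of the archive mentioned in the text.
# Set DRY_RUN=1 to print the commands instead of executing them.

run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

fetch_harbor_chart() {
  local version="${1:-1.10.2}"
  run helm repo add harbor https://helm.goharbor.io || return 1
  run helm repo update || return 1
  # --untar unpacks the archive; the chart's top-level directory is "harbor".
  run helm pull harbor/harbor --version "$version" --untar
}
```

For example, `DRY_RUN=1 fetch_harbor_chart 1.10.2` only prints the three commands. `fetch_harbor_chart 1.10.2` runs them, which requires Helm 3 and network access to helm.goharbor.io.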

2.2 Generate a certificate with openssl

The harbor directory contains a cert directory. Back up the default certificate files first with cp -r cert bak.

cd cert

openssl genrsa -des3 -passout pass:over4chars -out tls.pass.key 2048

...

openssl rsa -passin pass:over4chars -in tls.pass.key -out tls.key

# Writing RSA key

rm -rf tls.pass.key

openssl req -new -key tls.key -out tls.csr

...

If you enter '.', the field will be left blank.

-----

Country Name (2 letter code) [XX]:CN

State or Province Name (full name) []:Beijing

Locality Name (eg, city) [Default City]:Beijing

Organization Name (eg, company) [Default Company Ltd]:liebe

Organizational Unit Name (eg, section) []:liebe

Common Name (eg, your name or your server's hostname) []:harbor.liebe.com.cn

Email Address []:<your email address>

Please enter the following 'extra' attributes

to be sent with your certificate request

A challenge password []:talent

An optional company name []:liebe
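You can also answer the interactive prompts above on the command line. Doing so makes it easy to add a subjectAltName. Recent Docker clients (built with Go 1.15 or later) no longer fall back to the Common Name when they check the hostname, so they may reject a certificate that carries the hostname only in the CN.

The sketch below assumes OpenSSL 1.1.1 or later, which `-addext` requires. The function name `gen_self_signed_cert` is hypothetical, and the subject fields repeat the answers given above. It produces a tls.key/tls.crt pair in one command, instead of the key/CSR/x509 sequence used in this section.

```shell
#!/usr/bin/env bash
# Sketch (assumption): non-interactive self-signed certificate with SANs.
# Usage: gen_self_signed_cert OUTDIR HOSTNAME [HOSTNAME...]
# The first hostname becomes the CN; every hostname is listed as a DNS SAN.

gen_self_signed_cert() {
  if [ "$#" -lt 2 ]; then
    echo "usage: gen_self_signed_cert OUTDIR HOSTNAME [HOSTNAME...]" >&2
    return 2
  fi
  local outdir="$1"; shift
  local cn="$1" san="" host
  # Build "DNS:a,DNS:b,..." for the subjectAltName extension.
  for host in "$@"; do
    san="${san:+$san,}DNS:$host"
  done
  mkdir -p "$outdir" || return 1
  # -nodes writes an unencrypted key, like the "openssl rsa" step above.
  openssl req -x509 -newkey rsa:2048 -sha256 -days 365 -nodes \
    -keyout "$outdir/tls.key" -out "$outdir/tls.crt" \
    -subj "/C=CN/ST=Beijing/L=Beijing/O=liebe/OU=liebe/CN=$cn" \
    -addext "subjectAltName=$san"
}
```

Run `gen_self_signed_cert . harbor.liebe.com.cn notary-harbor.liebe.com.cn` inside the cert directory. The second name covers the notary host that values.yaml uses later.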

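Generate the SSL certificate

Generate the self-signed SSL certificate from the private key tls.key and the certificate signing request tls.csr:

openssl x509 -req -sha256 -days 365 -in tls.csr -signkey tls.key -out tls.crt

2.3 Create the secret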

Run the command:

kubectl create secret tls harbor.liebe.com.cn --key tls.key --cert tls.crt -n pig-dev

Check the result:

kubectl get secret -n pig-dev
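The certificate in this secret is self-signed with a fixed lifetime (`-days 365`), so you have to recreate the secret before the certificate expires. The small check below could run from cron or a CI job. It relies on `openssl x509 -checkend SECONDS`, which exits with 0 if the certificate does not expire within that many seconds. The function name `cert_valid_for_days` is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: succeed only if the certificate stays valid for at least DAYS more days.
# openssl x509 -checkend exits non-zero if the certificate expires within
# the given number of seconds (or has already expired).

cert_valid_for_days() {
  local crt="$1" days="$2"
  openssl x509 -in "$crt" -noout -checkend $(( days * 86400 )) >/dev/null
}
```

For example, `cert_valid_for_days tls.crt 30 || echo "renew the Harbor certificate"`. When you renew, `kubectl create secret tls` fails because the secret already exists. Piping `kubectl create secret tls harbor.liebe.com.cn --key tls.key --cert tls.crt -n pig-dev --dry-run=client -o yaml | kubectl apply -f -` updates the secret in place instead.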

2.4 Create the NFS storage directories
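The mkdir commands and the chmod in this step all follow one pattern, so you can also write them as a loop. The sketch below makes two assumptions: it uses the same base path as the tutorial, and the NFS server (10.10.10.89 in the PV manifests) already exports /home/data/nfs-share. The array and function names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: create the Harbor NFS sub-directories in one loop.

HARBOR_NFS_DIRS=(registry chartmuseum jobservice database redis trivy jobservicedata jobservicelog)

make_harbor_nfs_dirs() {
  local base="${1:-/home/data/nfs-share/harbor}" d
  for d in "${HARBOR_NFS_DIRS[@]}"; do
    mkdir -p "$base/$d" || return 1
    # 777 mirrors the tutorial; a tighter mode would need the directory
    # owners to match the UIDs the Harbor containers run as.
    chmod 777 "$base/$d" || return 1
  done
}
```

Calling `make_harbor_nfs_dirs` with no argument uses the tutorial's path. Passing another base directory lets you try the loop in a scratch location first. The step creates a jobservice directory, but none of the PVs in 2.5 points at it.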

mkdir -p /home/data/nfs-share/harbor/registry

mkdir -p /home/data/nfs-share/harbor/chartmuseum

mkdir -p /home/data/nfs-share/harbor/jobservice

mkdir -p /home/data/nfs-share/harbor/database

mkdir -p /home/data/nfs-share/harbor/redis

mkdir -p /home/data/nfs-share/harbor/trivy

mkdir -p /home/data/nfs-share/harbor/jobservicedata

mkdir -p /home/data/nfs-share/harbor/jobservicelog

chmod 777 /home/data/nfs-share/harbor/*

2.5 Create the PVs
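The PV manifests below differ only in name, size and NFS sub-directory, so you can also generate them from one template. The sketch below makes several assumptions:

- The function names and the `NFS_SERVER`/`NFS_BASE` variables are hypothetical; the defaults come from the manifests.
- Every size uses binary units. A PV declared as 10G holds 10^10 bytes, slightly less than a 10Gi claim (10 × 2^30 bytes), so it cannot satisfy that claim.
- `metadata.namespace` is left out because PersistentVolumes are cluster-scoped.

```shell
#!/usr/bin/env bash
# Sketch: render the Harbor NFS PersistentVolumes from a single template.

NFS_SERVER="${NFS_SERVER:-10.10.10.89}"
NFS_BASE="${NFS_BASE:-/home/data/nfs-share/harbor}"

render_pv() {
  local name="$1" size="$2"
  cat <<EOF
apiVersion: v1
kind: PersistentVolume
metadata:
  name: harbor-$name
  labels:
    app: harbor-$name
spec:
  capacity:
    storage: $size
  accessModes:
    - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: "managed-nfs-storage"
  mountOptions:
    - hard
  nfs:
    path: $NFS_BASE/$name
    server: $NFS_SERVER
---
EOF
}

render_all_pvs() {
  local c
  render_pv registry 150Gi
  for c in chartmuseum jobservicelog jobservicedata database redis trivy; do
    render_pv "$c" 10Gi
  done
}
```

`render_all_pvs | kubectl apply -f -` creates all seven volumes. `kubectl get pv -l app=harbor-redis` should then list exactly one of them.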

# PV 1
apiVersion: v1
kind: PersistentVolume
metadata:
  name: harbor-registry
  namespace: pig-dev
  labels:
    app: harbor-registry
spec:
  capacity:
    storage: 150Gi
  accessModes:
    - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: "managed-nfs-storage"
  mountOptions:
    - hard
  nfs:
    path: /home/data/nfs-share/harbor/registry
    server: 10.10.10.89
---
# PV 2
apiVersion: v1
kind: PersistentVolume
metadata:
  name: harbor-chartmuseum
  namespace: pig-dev
  labels:
    app: harbor-chartmuseum
spec:
  capacity:
    storage: 10Gi
  accessModes:
    - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: "managed-nfs-storage"
  mountOptions:
    - hard
  nfs:
    path: /home/data/nfs-share/harbor/chartmuseum
    server: 10.10.10.89
---
# PV 3
apiVersion: v1
kind: PersistentVolume
metadata:
  name: harbor-jobservicelog
  namespace: pig-dev
  labels:
    app: harbor-jobservicelog
spec:
  capacity:
    storage: 10Gi
  accessModes:
    - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: "managed-nfs-storage"
  mountOptions:
    - hard
  nfs:
    path: /home/data/nfs-share/harbor/jobservicelog
    server: 10.10.10.89
---
# PV 4
apiVersion: v1
kind: PersistentVolume
metadata:
  name: harbor-jobservicedata
  namespace: pig-dev
  labels:
    app: harbor-jobservicedata
spec:
  capacity:
    storage: 10Gi
  accessModes:
    - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: "managed-nfs-storage"
  mountOptions:
    - hard
  nfs:
    path: /home/data/nfs-share/harbor/jobservicedata
    server: 10.10.10.89
---
# PV 5
apiVersion: v1
kind: PersistentVolume
metadata:
  name: harbor-database
  namespace: pig-dev
  labels:
    app: harbor-database
spec:
  capacity:
    storage: 10Gi
  accessModes:
    - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: "managed-nfs-storage"
  mountOptions:
    - hard
  nfs:
    path: /home/data/nfs-share/harbor/database
    server: 10.10.10.89
---
# PV 6
apiVersion: v1
kind: PersistentVolume
metadata:
  name: harbor-redis
  namespace: pig-dev
  labels:
    app: harbor-redis
spec:
  capacity:
    storage: 10Gi
  accessModes:
    - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: "managed-nfs-storage"
  mountOptions:
    - hard
  nfs:
    path: /home/data/nfs-share/harbor/redis
    server: 10.10.10.89
---
# PV 7
apiVersion: v1
kind: PersistentVolume
metadata:
  name: harbor-trivy
  namespace: pig-dev
  labels:
    app: harbor-trivy
spec:
  capacity:
    storage: 10Gi
  accessModes:
    - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: "managed-nfs-storage"
  mountOptions:
    - hard
  nfs:
    path: /home/data/nfs-share/harbor/trivy
    server: 10.10.10.89

2.6 Create the PVCs
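You can render the claims the same way. This sketch adds one field that the original manifests do not have: `spec.volumeName`, which names the PV a claim should bind to. The claims also name the managed-nfs-storage class, so pinning them is intended to stop the dynamic provisioner from satisfying a claim with a newly provisioned volume instead of the prepared NFS directory. `HARBOR_NS` and the function names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: render the Harbor PVCs, each pinned to the PV of the same name.

HARBOR_NS="${HARBOR_NS:-pig-dev}"

render_pvc() {
  local name="$1" size="$2"
  cat <<EOF
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: harbor-$name
  namespace: $HARBOR_NS
spec:
  accessModes:
    - ReadWriteOnce
  storageClassName: "managed-nfs-storage"
  volumeName: harbor-$name
  resources:
    requests:
      storage: $size
---
EOF
}

render_all_pvcs() {
  # Sizes follow the claims in this section; each must be <= its PV capacity.
  render_pvc registry 150Gi
  render_pvc chartmuseum 10Gi
  render_pvc jobservicelog 5Gi
  render_pvc jobservicedata 5Gi
  render_pvc database 10Gi
  render_pvc redis 10Gi
  render_pvc trivy 10Gi
}
```

After `render_all_pvcs | kubectl apply -f -`, `kubectl get pvc -n pig-dev` should show every claim as Bound to the PV of the same name.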

# PVC 1
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: harbor-registry
  namespace: pig-dev
spec:
  accessModes:
    - ReadWriteOnce
  storageClassName: "managed-nfs-storage"
  resources:
    requests:
      storage: 150Gi
---
# PVC 2
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: harbor-chartmuseum
  namespace: pig-dev
spec:
  accessModes:
    - ReadWriteOnce
  storageClassName: "managed-nfs-storage"
  resources:
    requests:
      storage: 10Gi
---
# PVC 3
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: harbor-jobservicelog
  namespace: pig-dev
spec:
  accessModes:
    - ReadWriteOnce
  storageClassName: "managed-nfs-storage"
  resources:
    requests:
      storage: 5Gi
---
# PVC 4
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: harbor-jobservicedata
  namespace: pig-dev
spec:
  accessModes:
    - ReadWriteOnce
  storageClassName: "managed-nfs-storage"
  resources:
    requests:
      storage: 5Gi
---
# PVC 5
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: harbor-database
  namespace: pig-dev
spec:
  accessModes:
    - ReadWriteOnce
  storageClassName: "managed-nfs-storage"
  resources:
    requests:
      storage: 10Gi
---
# PVC 6
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: harbor-redis
  namespace: pig-dev
spec:
  accessModes:
    - ReadWriteOnce
  storageClassName: "managed-nfs-storage"
  resources:
    requests:
      storage: 10Gi
---
# PVC 7
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: harbor-trivy
  namespace: pig-dev
spec:
  accessModes:
    - ReadWriteOnce
  storageClassName: "managed-nfs-storage"
  resources:
    requests:
      storage: 10Gi

2.7 The values.yaml configuration file
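Almost everything in the values.yaml below is the chart's default. Only a few keys change:

- the TLS secret
- the ingress hosts and annotations
- externalURL
- internalTLS
- the existing claims

One option is to keep just those keys in a small override file and let the chart supply the rest. The sketch below assumes the chart directory ./harbor from step 2.1. The file name harbor-overrides.yaml and the function name are hypothetical, and the key paths are copied from the values.yaml below.

```shell
#!/usr/bin/env bash
# Sketch: write a minimal Helm override file containing only the changed keys.
# Usage: write_harbor_overrides [OUTFILE] [DOMAIN]

write_harbor_overrides() {
  local out="${1:-harbor-overrides.yaml}" domain="${2:-harbor.liebe.com.cn}"
  cat >"$out" <<EOF
expose:
  type: ingress
  tls:
    enabled: true
    certSource: secret
    secret:
      secretName: "$domain"
      notarySecretName: "$domain"
  ingress:
    hosts:
      core: $domain
      notary: notary-$domain
    annotations:
      ingress.kubernetes.io/ssl-redirect: "true"
      ingress.kubernetes.io/proxy-body-size: "1024m"
      nginx.ingress.kubernetes.io/ssl-redirect: "true"
      nginx.ingress.kubernetes.io/proxy-body-size: "1024m"
      nginx.org/client-max-body-size: "1024m"
externalURL: https://$domain
internalTLS:
  enabled: true
persistence:
  persistentVolumeClaim:
    registry:
      existingClaim: harbor-registry
      size: 150Gi
    chartmuseum:
      existingClaim: harbor-chartmuseum
    jobservice:
      jobLog:
        existingClaim: harbor-jobservicelog
      scanDataExports:
        existingClaim: harbor-jobservicedata
    database:
      existingClaim: harbor-database
    redis:
      existingClaim: harbor-redis
    trivy:
      existingClaim: harbor-trivy
EOF
}
```

You could then install with `helm upgrade --install harbor ./harbor -n pig-dev -f harbor-overrides.yaml`; the release name harbor is an assumption. Helm merges this file into the chart's own values.yaml, so any default that is not overridden, including other annotations, stays in place.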

expose:
  # Set how to expose the service. Set the type as "ingress", "clusterIP", "nodePort" or "loadBalancer"
  # and fill the information in the corresponding section
  type: ingress
  tls:
    # Enable TLS or not.
    # Delete the "ssl-redirect" annotations in "expose.ingress.annotations" when TLS is disabled and "expose.type" is "ingress"
    # Note: if the "expose.type" is "ingress" and TLS is disabled,
    # the port must be included in the command when pulling/pushing images.
    # Refer to https://github.com/goharbor/harbor/issues/5291 for details.
    enabled: true
    # The source of the tls certificate. Set as "auto", "secret"
    # or "none" and fill the information in the corresponding section
    # 1) auto: generate the tls certificate automatically
    # 2) secret: read the tls certificate from the specified secret.
    # The tls certificate can be generated manually or by cert manager
    # 3) none: configure no tls certificate for the ingress. If the default
    # tls certificate is configured in the ingress controller, choose this option
    certSource: "secret"
    auto:
      # The common name used to generate the certificate, it's necessary
      # when the type isn't "ingress"
      commonName: ""
    secret:
      # The name of secret which contains keys named:
      # "tls.crt" - the certificate
      # "tls.key" - the private key
      secretName: "harbor.liebe.com.cn"
      # The name of secret which contains keys named:
      # "tls.crt" - the certificate
      # "tls.key" - the private key
      # Only needed when the "expose.type" is "ingress".
      notarySecretName: "harbor.liebe.com.cn"
  ingress:
    hosts:
      core: harbor.liebe.com.cn
      notary: notary-harbor.liebe.com.cn
    # set to the type of ingress controller if it has specific requirements.
    # leave as `default` for most ingress controllers.
    # set to `gce` if using the GCE ingress controller
    # set to `ncp` if using the NCP (NSX-T Container Plugin) ingress controller
    # set to `alb` if using the ALB ingress controller
    controller: default
    ## Allow .Capabilities.KubeVersion.Version to be overridden while creating ingress
    kubeVersionOverride: ""
    className: ""
    annotations:
      # note different ingress controllers may require a different ssl-redirect annotation
      # for Envoy, use ingress.kubernetes.io/force-ssl-redirect: "true" and remove the nginx lines below
      ingress.kubernetes.io/ssl-redirect: "true"
      ingress.kubernetes.io/proxy-body-size: "1024m"
      #### For a traefik ingress, use the following instead:
      # kubernetes.io/ingress.class: "traefik"
      # traefik.ingress.kubernetes.io/router.tls: 'true'
      # traefik.ingress.kubernetes.io/router.entrypoints: websecure
      #### For an nginx ingress, use the following:
      nginx.ingress.kubernetes.io/ssl-redirect: "true"
      nginx.ingress.kubernetes.io/proxy-body-size: "1024m"
      nginx.org/client-max-body-size: "1024m"
    notary:
      # notary ingress-specific annotations
      annotations: {}
      # notary ingress-specific labels
      labels: {}
    harbor:
      # harbor ingress-specific annotations
      annotations: {}
      # harbor ingress-specific labels
      labels: {}
  clusterIP:
    # The name of ClusterIP service
    name: harbor
    # Annotations on the ClusterIP service
    annotations: {}
    ports:
      # The service port Harbor listens on when serving HTTP
      httpPort: 80
      # The service port Harbor listens on when serving HTTPS
      httpsPort: 443
      # The service port Notary listens on. Only needed when notary.enabled
      # is set to true
      notaryPort: 4443
  nodePort:
    # The name of NodePort service
    name: harbor
    ports:
      http:
        # The service port Harbor listens on when serving HTTP
        port: 80
        # The node port Harbor listens on when serving HTTP
        nodePort: 30102
      https:
        # The service port Harbor listens on when serving HTTPS
        port: 443
        # The node port Harbor listens on when serving HTTPS
        nodePort: 30103
      # Only needed when notary.enabled is set to true
      notary:
        # The service port Notary listens on
        port: 4443
        # The node port Notary listens on
        nodePort: 30104
  loadBalancer:
    # The name of LoadBalancer service
    name: harbor
    # Set the IP if the LoadBalancer supports assigning IP
    IP: ""
    ports:
      # The service port Harbor listens on when serving HTTP
      httpPort: 80
      # The service port Harbor listens on when serving HTTPS
      httpsPort: 443
      # The service port Notary listens on. Only needed when notary.enabled
      # is set to true
      notaryPort: 4443
    annotations: {}
    sourceRanges: []

# The external URL for Harbor core service. It is used to
# 1) populate the docker/helm commands showed on portal
# 2) populate the token service URL returned to docker/notary client
#
# Format: protocol://domain[:port]. Usually:
# 1) if "expose.type" is "ingress", the "domain" should be
# the value of "expose.ingress.hosts.core"
# 2) if "expose.type" is "clusterIP", the "domain" should be
# the value of "expose.clusterIP.name"
# 3) if "expose.type" is "nodePort", the "domain" should be
# the IP address of k8s node
#
# If Harbor is deployed behind the proxy, set it as the URL of proxy
externalURL: https://harbor.liebe.com.cn

# The internal TLS used for harbor components secure communicating. In order to enable https
# in each components tls cert files need to provided in advance.
internalTLS:
  # If internal TLS enabled
  enabled: true
  # There are three ways to provide tls
  # 1) "auto" will generate cert automatically
  # 2) "manual" need provide cert file manually in following value
  # 3) "secret" internal certificates from secret
  certSource: "auto"
  # The content of trust ca, only available when `certSource` is "manual"
  trustCa: ""
  # core related cert configuration
  core:
    # secret name for core's tls certs
    secretName: ""
    # Content of core's TLS cert file, only available when `certSource` is "manual"
    crt: ""
    # Content of core's TLS key file, only available when `certSource` is "manual"
    key: ""
  # jobservice related cert configuration
  jobservice:
    # secret name for jobservice's tls certs
    secretName: ""
    # Content of jobservice's TLS key file, only available when `certSource` is "manual"
    crt: ""
    # Content of jobservice's TLS key file, only available when `certSource` is "manual"
    key: ""
  # registry related cert configuration
  registry:
    # secret name for registry's tls certs
    secretName: ""
    # Content of registry's TLS key file, only available when `certSource` is "manual"
    crt: ""
    # Content of registry's TLS key file, only available when `certSource` is "manual"
    key: ""
  # portal related cert configuration
  portal:
    # secret name for portal's tls certs
    secretName: ""
    # Content of portal's TLS key file, only available when `certSource` is "manual"
    crt: ""
    # Content of portal's TLS key file, only available when `certSource` is "manual"
    key: ""
  # chartmuseum related cert configuration
  chartmuseum:
    # secret name for chartmuseum's tls certs
    secretName: ""
    # Content of chartmuseum's TLS key file, only available when `certSource` is "manual"
    crt: ""
    # Content of chartmuseum's TLS key file, only available when `certSource` is "manual"
    key: ""
  # trivy related cert configuration
  trivy:
    # secret name for trivy's tls certs
    secretName: ""
    # Content of trivy's TLS key file, only available when `certSource` is "manual"
    crt: ""
    # Content of trivy's TLS key file, only available when `certSource` is "manual"
    key: ""

ipFamily:
  # ipv6Enabled set to true if ipv6 is enabled in cluster, currently it affected the nginx related component
  ipv6:
    enabled: true
  # ipv4Enabled set to true if ipv4 is enabled in cluster, currently it affected the nginx related component
  ipv4:
    enabled: true

# The persistence is enabled by default and a default StorageClass
# is needed in the k8s cluster to provision volumes dynamically.
# Specify another StorageClass in the "storageClass" or set "existingClaim"
# if you already have existing persistent volumes to use
#
# For storing images and charts, you can also use "azure", "gcs", "s3",
# "swift" or "oss". Set it in the "imageChartStorage" section
persistence:
  enabled: true
  # Setting it to "keep" to avoid removing PVCs during a helm delete
  # operation. Leaving it empty will delete PVCs after the chart deleted
  # (this does not apply for PVCs that are created for internal database
  # and redis components, i.e. they are never deleted automatically)
  resourcePolicy: "keep"
  persistentVolumeClaim:
    registry:
      # Use the existing PVC which must be created manually before bound,
      # and specify the "subPath" if the PVC is shared with other components
      existingClaim: "harbor-registry"
      # Specify the "storageClass" used to provision the volume. Or the default
      # StorageClass will be used (the default).
      # Set it to "-" to disable dynamic provisioning
      storageClass: "managed-nfs-storage"
      subPath: ""
      accessMode: ReadWriteOnce
      size: 150Gi
      annotations: {}
    chartmuseum:
      existingClaim: "harbor-chartmuseum"
      storageClass: "managed-nfs-storage"
      subPath: ""
      accessMode: ReadWriteOnce
      size: 10Gi
      annotations: {}
    jobservice:
      jobLog:
        existingClaim: "harbor-jobservicelog"
        storageClass: "managed-nfs-storage"
        subPath: ""
        accessMode: ReadWriteOnce
        size: 5Gi
        annotations: {}
      scanDataExports:
        existingClaim: "harbor-jobservicedata"
        storageClass: "managed-nfs-storage"
        subPath: ""
        accessMode: ReadWriteOnce
        size: 5Gi
        annotations: {}
    # If external database is used, the following settings for database will
    # be ignored
    database:
      existingClaim: "harbor-database"
      storageClass: "managed-nfs-storage"
      subPath: ""
      accessMode: ReadWriteOnce
      size: 10Gi
      annotations: {}
    # If external Redis is used, the following settings for Redis will
    # be ignored
    redis:
      existingClaim: "harbor-redis"
      storageClass: "managed-nfs-storage"
      subPath: ""
      accessMode: ReadWriteOnce
      size: 10Gi
      annotations: {}
    trivy:
      existingClaim: "harbor-trivy"
      storageClass: "managed-nfs-storage"
      subPath: ""
      accessMode: ReadWriteOnce
      size: 10Gi
      annotations: {}
  # Define which storage backend is used for registry and chartmuseum to store
  # images and charts. Refer to
  # https://github.com/docker/distribution/blob/master/docs/configuration.md#storage
  # for the detail.
  imageChartStorage:
    # Specify whether to disable `redirect` for images and chart storage, for
    # backends which not supported it (such as using minio for `s3` storage type), please disable
    # it. To disable redirects, simply set `disableredirect` to `true` instead.
    # Refer to
    # https://github.com/docker/distribution/blob/master/docs/configuration.md#redirect
    # for the detail.
    disableredirect: false
    # Specify the "caBundleSecretName" if the storage service uses a self-signed certificate.
    # The secret must contain keys named "ca.crt" which will be injected into the trust store
    # of registry's and chartmuseum's containers.
    # caBundleSecretName:
    # Specify the type of storage: "filesystem", "azure", "gcs", "s3", "swift",
    # "oss" and fill the information needed in the corresponding section. The type
    # must be "filesystem" if you want to use persistent volumes for registry
    # and chartmuseum
    type: filesystem
    filesystem:
      rootdirectory: /storage
      #maxthreads: 100
    azure:
      accountname: accountname
      accountkey: base64encodedaccountkey
      container: containername
      #realm: core.windows.net
      # To use existing secret, the key must be AZURE_STORAGE_ACCESS_KEY
      existingSecret: ""
    gcs:
      bucket: bucketname
      # The base64 encoded json file which contains the key
      encodedkey: base64-encoded-json-key-file
      #rootdirectory: /gcs/object/name/prefix
      #chunksize: "5242880"
      # To use existing secret, the key must be gcs-key.json
      existingSecret: ""
      useWorkloadIdentity: false
    s3:
      # Set an existing secret for S3 accesskey and secretkey
      # keys in the secret should be AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY for chartmuseum
      # keys in the secret should be REGISTRY_STORAGE_S3_ACCESSKEY and REGISTRY_STORAGE_S3_SECRETKEY for registry
      #existingSecret: ""
      region: us-west-1
      bucket: bucketname
      #accesskey: awsaccesskey
      #secretkey: awssecretkey
      #regionendpoint: http://myobjects.local
      #encrypt: false
      #keyid: mykeyid
      #secure: true
      #skipverify: false
      #v4auth: true
      #chunksize: "5242880"
      #rootdirectory: /s3/object/name/prefix
      #storageclass: STANDARD
      #multipartcopychunksize: "33554432"
      #multipartcopymaxconcurrency: 100
      #multipartcopythresholdsize: "33554432"
    swift:
      authurl: https://storage.myprovider.com/v3/auth
      username: username
      password: password
      container: containername
      #region: fr
      #tenant: tenantname
      #tenantid: tenantid
      #domain: domainname
      #domainid: domainid
      #trustid: trustid
      #insecureskipverify: false
      #chunksize: 5M
      #prefix:
      #secretkey: secretkey
      #accesskey: accesskey
      #authversion: 3
      #endpointtype: public
      #tempurlcontainerkey: false
      #tempurlmethods:
    oss:
      accesskeyid: accesskeyid
      accesskeysecret: accesskeysecret
      region: regionname
      bucket: bucketname
      #endpoint: endpoint
      #internal: false
      #encrypt: false
      #secure: true
      #chunksize: 10M
      #rootdirectory: rootdirectory

imagePullPolicy: IfNotPresent

# Use this set to assign a list of default pullSecrets
imagePullSecrets:
#  - name: docker-registry-secret
#  - name: internal-registry-secret

# The update strategy for deployments with persistent volumes(jobservice, registry
# and chartmuseum): "RollingUpdate" or "Recreate"
# Set it as "Recreate" when "RWM" for volumes isn't supported
updateStrategy:
  type: RollingUpdate

# debug, info, warning, error or fatal
logLevel: info

# The initial password of Harbor admin. Change it from portal after launching Harbor
harborAdminPassword: "Harbor12345"

# The name of the secret which contains key named "ca.crt". Setting this enables the
# download link on portal to download the CA certificate when the certificate isn't
# generated automatically
caSecretName: ""

# The secret key used for encryption. Must be a string of 16 chars.
secretKey: "not-a-secure-key"
# If using existingSecretSecretKey, the key must be sercretKey
existingSecretSecretKey: ""

# The proxy settings for updating trivy vulnerabilities from the Internet and replicating
# artifacts from/to the registries that cannot be reached directly
proxy:
  httpProxy:
  httpsProxy:
  noProxy: 127.0.0.1,localhost,.local,.internal
  components:
    - core
    - jobservice
    - trivy

# Run the migration job via helm hook
enableMigrateHelmHook: false

# The custom ca bundle secret, the secret must contain key named "ca.crt"
# which will be injected into the trust store for chartmuseum, core, jobservice, registry, trivy components
# caBundleSecretName: ""

## UAA Authentication Options
# If you're using UAA for authentication behind a self-signed
# certificate you will need to provide the CA Cert.
# Set uaaSecretName below to provide a pre-created secret that
# contains a base64 encoded CA Certificate named `ca.crt`.
# uaaSecretName:

# If service exposed via "ingress", the Nginx will not be used
nginx:
  image:
    repository: goharbor/nginx-photon
    tag: v2.6.2
  # set the service account to be used, default if left empty
  serviceAccountName: ""
  # mount the service account token
  automountServiceAccountToken: false
  replicas: 1
  revisionHistoryLimit: 10
  # resources:
  #  requests:
  #    memory: 256Mi
  #    cpu: 100m
  nodeSelector: {}
  tolerations: []
  affinity: {}
  ## Additional deployment annotations
  podAnnotations: {}
  ## The priority class to run the pod as
  priorityClassName:

portal:
  image:
    repository: goharbor/harbor-portal
    tag: v2.6.2
  # set the service account to be used, default if left empty
  serviceAccountName: ""
  # mount the service account token
  automountServiceAccountToken: false
  replicas: 1
  revisionHistoryLimit: 10
  # resources:
  #  requests:
  #    memory: 256Mi
  #    cpu: 100m
  nodeSelector: {}
  tolerations: []
  affinity: {}
  ## Additional deployment annotations
  podAnnotations: {}
  ## The priority class to run the pod as
  priorityClassName:

core:
  image:
    repository: goharbor/harbor-core
    tag: v2.6.2
  # set the service account to be used, default if left empty
  serviceAccountName: ""
  # mount the service account token
  automountServiceAccountToken: false
  replicas: 1
  revisionHistoryLimit: 10
  ## Startup probe values
  startupProbe:
    enabled: true
    initialDelaySeconds: 10
  # resources:
  #  requests:
  #    memory: 256Mi
  #    cpu: 100m
  nodeSelector: {}
  tolerations: []
  affinity: {}
  ## Additional deployment annotations
  podAnnotations: {}
  # Secret is used when core server communicates with other components.
  # If a secret key is not specified, Helm will generate one.
  # Must be a string of 16 chars.
  secret: ""
  # Fill the name of a kubernetes secret if you want to use your own
  # TLS certificate and private key for token encryption/decryption.
  # The secret must contain keys named:
  # "tls.crt" - the certificate
  # "tls.key" - the private key
  # The default key pair will be used if it isn't set
  secretName: ""
  # The XSRF key. Will be generated automatically if it isn't specified
  xsrfKey: ""
  ## The priority class to run the pod as
  priorityClassName:
  # The time duration for async update artifact pull_time and repository
  # pull_count, the unit is second. Will be 10 seconds if it isn't set.
  # eg. artifactPullAsyncFlushDuration: 10
  artifactPullAsyncFlushDuration:
  gdpr:
    deleteUser: false

jobservice:
  image:
    repository: goharbor/harbor-jobservice
    tag: v2.6.2
  replicas: 1
  revisionHistoryLimit: 10
  # set the service account to be used, default if left empty
  serviceAccountName: ""
  # mount the service account token
  automountServiceAccountToken: false
  maxJobWorkers: 10
  # The logger for jobs: "file", "database" or "stdout"
  jobLoggers:
    - file
    # - database
    # - stdout
  # The jobLogger sweeper duration (ignored if `jobLogger` is `stdout`)
  loggerSweeperDuration: 14 #days
  # resources:
  #  requests:
  #    memory: 256Mi
  #    cpu: 100m
  nodeSelector: {}
  tolerations: []
  affinity: {}
  ## Additional deployment annotations
  podAnnotations: {}
  # Secret is used when job service communicates with other components.
  # If a secret key is not specified, Helm will generate one.
  # Must be a string of 16 chars.
  secret: ""
  ## The priority class to run the pod as
  priorityClassName:

registry:
  # set the service account to be used, default if left empty
  serviceAccountName: ""
  # mount the service account token
  automountServiceAccountToken: false
  registry:
    image:
      repository: goharbor/registry-photon
      tag: v2.6.2
    # resources:
    #  requests:
    #    memory: 256Mi
    #    cpu: 100m
  controller:
    image:
      repository: goharbor/harbor-registryctl
      tag: v2.6.2
    # resources:
    #  requests:
    #    memory: 256Mi
    #    cpu: 100m
  replicas: 1
  revisionHistoryLimit: 10
  nodeSelector: {}
  tolerations: []
  affinity: {}
  ## Additional deployment annotations
  podAnnotations: {}
  ## The priority class to run the pod as
  priorityClassName:
  # Secret is used to secure the upload state from client
  # and registry storage backend.
  # See: https://github.com/docker/distribution/blob/master/docs/configuration.md#http
  # If a secret key is not specified, Helm will generate one.
  # Must be a string of 16 chars.
  secret: ""
  # If true, the registry returns relative URLs in Location headers. The client is responsible for resolving the correct URL.
  relativeurls: false
  credentials:
    username: "harbor_registry_user"
    password: "harbor_registry_password"
    # If using existingSecret, the key must be REGISTRY_PASSWD and REGISTRY_HTPASSWD
    existingSecret: ""
    # Login and password in htpasswd string format. Excludes `registry.credentials.username` and `registry.credentials.password`. May come in handy when integrating with tools like argocd or flux. This allows the same line to be generated each time the template is rendered, instead of the `htpasswd` function from helm, which generates different lines each time because of the salt.
    # htpasswdString: $apr1$XLefHzeG$Xl4.s00sMSCCcMyJljSZb0 # example string
  middleware:
    enabled: false
    type: cloudFront
    cloudFront:
      baseurl: example.cloudfront.net
      keypairid: KEYPAIRID
      duration: 3000s
      ipfilteredby: none
      # The secret key that should be present is CLOUDFRONT_KEY_DATA, which should be the encoded private key
      # that allows access to CloudFront
      privateKeySecret: "my-secret"
  # enable purge _upload directories
  upload_purging:
    enabled: true
    # remove files in _upload directories which exist for a period of time, default is one week.
    age: 168h
    # the interval of the purge operations
    interval: 24h
    dryrun: false

chartmuseum:
  enabled: true
  # set the service account to be used, default if left empty
  serviceAccountName: ""
  # mount the service account token
  automountServiceAccountToken: false
  # Harbor defaults ChartMuseum to returning relative urls, if you want using absolute url you should enable it by change the following value to 'true'
  absoluteUrl: false
  image:
    repository: goharbor/chartmuseum-photon
    tag: v2.6.2
  replicas: 1
  revisionHistoryLimit: 10
  # resources:
  #  requests:
  #    memory: 256Mi
  #    cpu: 100m
  nodeSelector: {}
  tolerations: []
  affinity: {}
  ## Additional deployment annotations
  podAnnotations: {}
  ## The priority class to run the pod as
  priorityClassName:
  ## limit the number of parallel indexers
  indexLimit: 0

trivy:

# enabled the flag to enable Trivy scanner

enabled: true

image:

# repository the repository for Trivy adapter image

repository: goharbor/trivy-adapter-photon

# tag the tag for Trivy adapter image

tag: v2.6.2

# set the service account to be used, default if left empty

serviceAccountName: ""

# mount the service account token

automountServiceAccountToken: false

# replicas the number of Pod replicas

replicas: 1

# debugMode the flag to enable Trivy debug mode with more verbose scanning log

debugMode: false

# vulnType a comma-separated list of vulnerability types. Possible values are `os` and `library`.

vulnType: "os,library"

# severity a comma-separated list of severities to be checked

severity: "UNKNOWN,LOW,MEDIUM,HIGH,CRITICAL"

# ignoreUnfixed the flag to display only fixed vulnerabilities

ignoreUnfixed: false

# insecure the flag to skip verifying registry certificate

insecure: false

# gitHubToken the GitHub access token to download Trivy DB

#

# Trivy DB contains vulnerability information from NVD, Red Hat, and many other upstream vulnerability databases.

# It is downloaded by Trivy from the GitHub release page https://github.com/aquasecurity/trivy-db/releases and cached

# in the local file system (`/home/scanner/.cache/trivy/db/trivy.db`). In addition, the database contains the update

# timestamp so Trivy can detect whether it should download a newer version from the Internet or use the cached one.

# Currently, the database is updated every 12 hours and published as a new release to GitHub.

#

# Anonymous downloads from GitHub are subject to the limit of 60 requests per hour. Normally such rate limit is enough

# for production operations. If, for any reason, it's not enough, you could increase the rate limit to 5000

# requests per hour by specifying the GitHub access token. For more details on GitHub rate limiting please consult

# https://developer.github.com/v3/#rate-limiting

#

# You can create a GitHub token by following the instructions in

# https://help.github.com/en/github/authenticating-to-github/creating-a-personal-access-token-for-the-command-line

gitHubToken: ""

# skipUpdate the flag to disable Trivy DB downloads from GitHub

#

# You might want to set the value of this flag to `true` in test or CI/CD environments to avoid GitHub rate limiting issues.

# If the value is set to `true` you have to manually download the `trivy.db` file and mount it in the

# `/home/scanner/.cache/trivy/db/trivy.db` path.

skipUpdate: false

# The offlineScan option prevents Trivy from sending API requests to identify dependencies.

#

# Scanning JAR files and pom.xml may require Internet access for better detection, but this option tries to avoid it.

# For example, the offline mode will not try to resolve transitive dependencies in pom.xml when the dependency doesn't

# exist in the local repositories. It means a number of detected vulnerabilities might be fewer in offline mode.

# It would work if all the dependencies are in local.

# This option doesn’t affect DB download. You need to specify skipUpdate as well as offlineScan in an air-gapped environment.

offlineScan: false

# Comma-separated list of what security issues to detect. Possible values are `vuln`, `config` and `secret`. Defaults to `vuln`.

securityCheck: "vuln"

# The duration to wait for scan completion

timeout: 5m0s

resources:

requests:

cpu: 200m

memory: 512Mi

limits:

cpu: 1

memory: 1Gi

nodeSelector: {}

tolerations: []

affinity: {}

## Additional deployment annotations

podAnnotations: {}

## The priority class to run the pod as

priorityClassName:

notary:
  enabled: true
  server:
    # set the service account to be used, default if left empty
    serviceAccountName: ""
    # mount the service account token
    automountServiceAccountToken: false
    image:
      repository: goharbor/notary-server-photon
      tag: v2.6.2
    replicas: 1
    # resources:
    #  requests:
    #    memory: 256Mi
    #    cpu: 100m
    nodeSelector: {}
    tolerations: []
    affinity: {}
    ## Additional deployment annotations
    podAnnotations: {}
    ## The priority class to run the pod as
    priorityClassName:
  signer:
    # set the service account to be used, default if left empty
    serviceAccountName: ""
    # mount the service account token
    automountServiceAccountToken: false
    image:
      repository: goharbor/notary-signer-photon
      tag: v2.6.2
    replicas: 1
    # resources:
    #  requests:
    #    memory: 256Mi
    #    cpu: 100m
    nodeSelector: {}
    tolerations: []
    affinity: {}
    ## Additional deployment annotations
    podAnnotations: {}
    ## The priority class to run the pod as
    priorityClassName:
  # Fill the name of a kubernetes secret if you want to use your own
  # TLS certificate authority, certificate and private key for notary
  # communications.
  # The secret must contain keys named ca.crt, tls.crt and tls.key that
  # contain the CA, certificate and private key.
  # They will be generated if not set.
  secretName: ""

database:
  # if external database is used, set "type" to "external"
  # and fill the connection informations in "external" section
  type: internal
  internal:
    # set the service account to be used, default if left empty
    serviceAccountName: ""
    # mount the service account token
    automountServiceAccountToken: false
    image:
      repository: goharbor/harbor-db
      tag: v2.6.2
    # The initial superuser password for internal database
    password: "changeit"
    # The size limit for Shared memory, pgSQL use it for shared_buffer
    # More details see:
    # https://github.com/goharbor/harbor/issues/15034
    shmSizeLimit: 512Mi
    # resources:
    #  requests:
    #    memory: 256Mi
    #    cpu: 100m
    nodeSelector: {}
    tolerations: []
    affinity: {}
    ## The priority class to run the pod as
    priorityClassName:
    initContainer:
      migrator: {}
      # resources:
      #  requests:
      #    memory: 128Mi
      #    cpu: 100m
      permissions: {}
      # resources:
      #  requests:
      #    memory: 128Mi
      #    cpu: 100m
  external:
    host: "postgresql"
    port: "5432"
    username: "gitlab"
    password: "passw0rd"
    coreDatabase: "registry"
    notaryServerDatabase: "notary_server"
    notarySignerDatabase: "notary_signer"
    # if using existing secret, the key must be "password"
    existingSecret: ""
    # "disable" - No SSL
    # "require" - Always SSL (skip verification)
    # "verify-ca" - Always SSL (verify that the certificate presented by the
    # server was signed by a trusted CA)
    # "verify-full" - Always SSL (verify that the certification presented by the
    # server was signed by a trusted CA and the server host name matches the one
    # in the certificate)
    sslmode: "disable"
  # The maximum number of connections in the idle connection pool per pod (core+exporter).
  # If it <=0, no idle connections are retained.
  maxIdleConns: 100
  # The maximum number of open connections to the database per pod (core+exporter).
  # If it <= 0, then there is no limit on the number of open connections.
  # Note: the default number of connections is 1024 for postgre of harbor.
  maxOpenConns: 900
  ## Additional deployment annotations
  podAnnotations: {}

redis:
  # if external Redis is used, set "type" to "external"
  # and fill the connection informations in "external" section
  type: internal
  internal:
    # set the service account to be used, default if left empty
    serviceAccountName: ""
    # mount the service account token
    automountServiceAccountToken: false
    image:
      repository: goharbor/redis-photon
      tag: v2.6.2
    # resources:
    #  requests:
    #    memory: 256Mi
    #    cpu: 100m
    nodeSelector: {}
    tolerations: []
    affinity: {}
    ## The priority class to run the pod as
    priorityClassName:
  external:
    # support redis, redis+sentinel
    # addr for redis: <host_redis>:<port_redis>
    # addr for redis+sentinel: <host_sentinel1>:<port_sentinel1>,<host_sentinel2>:<port_sentinel2>,<host_sentinel3>:<port_sentinel3>
    addr: "192.168.0.2:6379"
    # The name of the set of Redis instances to monitor, it must be set to support redis+sentinel
    sentinelMasterSet: ""
    # The "coreDatabaseIndex" must be "0" as the library Harbor
    # used doesn't support configuring it
    coreDatabaseIndex: "0"
    jobserviceDatabaseIndex: "1"
    registryDatabaseIndex: "2"
    chartmuseumDatabaseIndex: "3"
    trivyAdapterIndex: "5"
    password: ""
    # If using existingSecret, the key must be REDIS_PASSWORD
    existingSecret: ""
  ## Additional deployment annotations
  podAnnotations: {}

exporter:
  replicas: 1
  revisionHistoryLimit: 10
  # resources:
  #  requests:
  #    memory: 256Mi
  #    cpu: 100m
  podAnnotations: {}
  serviceAccountName: ""
  # mount the service account token
  automountServiceAccountToken: false
  image:
    repository: goharbor/harbor-exporter
    tag: v2.6.2
  nodeSelector: {}
  tolerations: []
  affinity: {}
  cacheDuration: 23
  cacheCleanInterval: 14400
  ## The priority class to run the pod as
  priorityClassName:

metrics:
  enabled: false
  core:
    path: /metrics
    port: 8001
  registry:
    path: /metrics
    port: 8001
  jobservice:
    path: /metrics
    port: 8001
  exporter:
    path: /metrics
    port: 8001
  ## Create prometheus serviceMonitor to scrape harbor metrics.
  ## This requires the monitoring.coreos.com/v1 CRD. Please see
  ## https://github.com/prometheus-operator/prometheus-operator/blob/master/Documentation/user-guides/getting-started.md
  ##
  serviceMonitor:
    enabled: false
    additionalLabels: {}
    # Scrape interval. If not set, the Prometheus default scrape interval is used.
    interval: ""
    # Metric relabel configs to apply to samples before ingestion.
    metricRelabelings:
      []
      # - action: keep
      #   regex: 'kube_(daemonset|deployment|pod|namespace|node|statefulset).+'
      #   sourceLabels: [__name__]
    # Relabel configs to apply to samples before ingestion.
    relabelings:
      []
      # - sourceLabels: [__meta_kubernetes_pod_node_name]
      #   separator: ;
      #   regex: ^(.*)$
      #   targetLabel: nodename
      #   replacement: $1
      #   action: replace

trace:
  enabled: false
  # trace provider: jaeger or otel
  # jaeger should be 1.26+
  provider: jaeger
  # set sample_rate to 1 if you wanna sampling 100% of trace data; set 0.5 if you wanna sampling 50% of trace data, and so forth
  sample_rate: 1
  # namespace used to differentiate different harbor services
  # namespace:
  # attributes is a key value dict contains user defined attributes used to initialize trace provider
  # attributes:
  #   application: harbor
  jaeger:
    # jaeger supports two modes:
    #   collector mode(uncomment endpoint and uncomment username, password if needed)
    #   agent mode(uncomment agent_host and agent_port)
    endpoint: http://hostname:14268/api/traces
    # username:
    # password:
    # agent_host: hostname
    # export trace data by jaeger.thrift in compact mode
    # agent_port: 6831
  otel:
    endpoint: hostname:4318
    url_path: /v1/traces
    compression: false
    insecure: true
    timeout: 10s

# cache layer configurations
# if this feature enabled, harbor will cache the resource
# `project/project_metadata/repository/artifact/manifest` in the redis
# which help to improve the performance of high concurrent pulling manifest.
cache:
  # default is not enabled.
  enabled: false
  # default keep cache for one day.
  expireHours: 24
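The comments in values.yaml state several rules that Helm does not check for you:

- An air-gapped cluster needs both trivy.skipUpdate and trivy.offlineScan.
- An external Redis must keep coreDatabaseIndex at "0".
- database.maxOpenConns applies per pod (core plus exporter), while the bundled PostgreSQL allows 1024 connections by default.

Below is a minimal sketch of a checker for these rules. It is not part of Harbor or its chart, and the function names are hypothetical. It expects the values to be parsed into a dict already. It also assumes the exporter pod only exists when metrics.enabled is true.

```python
"""Hypothetical sanity checks for a Harbor Helm values dict (chart 1.10.x layout)."""
from typing import Any, Dict, List

# Default max connections of the bundled harbor-db, per the values.yaml comment.
HARBOR_DB_MAX_CONNECTIONS = 1024
# Allowed trivy.securityCheck entries, per the values.yaml comment.
VALID_SECURITY_CHECKS = {"vuln", "config", "secret"}


def db_client_pods(values: Dict[str, Any]) -> int:
    """Count pods that open DB connections: core, plus the exporter if metrics are on.

    Assumption: the chart deploys the exporter only when metrics.enabled is true.
    """
    pods = int(values.get("core", {}).get("replicas", 1))
    if values.get("metrics", {}).get("enabled", False):
        pods += int(values.get("exporter", {}).get("replicas", 1))
    return pods


def check_values(values: Dict[str, Any], air_gapped: bool = False) -> List[str]:
    """Return a list of problems; an empty list means no rule fired."""
    problems: List[str] = []

    # Trivy: an air-gapped cluster needs both skipUpdate and offlineScan.
    trivy = values.get("trivy", {})
    if air_gapped:
        for flag in ("skipUpdate", "offlineScan"):
            if not trivy.get(flag, False):
                problems.append(f"trivy.{flag} should be true in an air-gapped cluster")
    for check in str(trivy.get("securityCheck", "vuln")).split(","):
        if check.strip() not in VALID_SECURITY_CHECKS:
            problems.append(f"unknown trivy.securityCheck value: {check.strip()!r}")

    # External Redis: the core component only works with database index "0".
    redis = values.get("redis", {})
    if redis.get("type") == "external":
        core_index = str(redis.get("external", {}).get("coreDatabaseIndex", "0"))
        if core_index != "0":
            problems.append('redis.external.coreDatabaseIndex must be "0"')

    # Internal PostgreSQL: maxOpenConns is a per-pod limit, so scale by pod count.
    database = values.get("database", {})
    if database.get("type", "internal") == "internal":
        max_open = int(database.get("maxOpenConns", 900))
        if max_open <= 0:
            problems.append("database.maxOpenConns <= 0 means no per-pod limit")
        else:
            worst_case = max_open * db_client_pods(values)
            if worst_case > HARBOR_DB_MAX_CONNECTIONS:
                problems.append(
                    f"worst-case DB connections {worst_case} exceed {HARBOR_DB_MAX_CONNECTIONS}"
                )
    return problems
```

To use it, load values.yaml with PyYAML, which is assumed to be installed since it is not in the standard library. For example: check_values(yaml.safe_load(open("values.yaml"))).

With metrics.enabled: false, only the core pods count toward the limit. At 900 connections per pod and one core replica, the total stays under 1024. Enabling metrics with one exporter raises the worst case to 1800, and the checker reports it.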

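2.8. Deployment commands

Create the PVs and PVCs first. Then install the chart from inside the unpacked harbor chart directory:

kubectl apply -f harbor-pv.yaml

kubectl apply -f harbor-pvc.yaml

helm install harbor ./ -f values.yaml -n pig-dev

Check that the PVs and PVCs are Bound:

kubectl get pv,pvc -A

Commands for removing the deployment:

helm list -A

helm uninstall harbor -n pig-dev

kubectl delete -f harbor-pvc.yaml

kubectl delete -f harbor-pv.yaml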
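Harbor's pods stay Pending until every PVC they mount is Bound. The sketch below reads the JSON printed by `kubectl get pvc -n pig-dev -o json` and lists the claims that are not Bound. It relies only on the standard status.phase field of a PersistentVolumeClaim. The helper names are hypothetical, and fetch_pvc_json assumes kubectl is on PATH and pointed at this cluster.

```python
"""Report PVCs that are not Bound, using `kubectl get pvc -o json` output."""
import json
import subprocess
from typing import List, Tuple


def unbound_pvcs(kubectl_json: str) -> List[Tuple[str, str]]:
    """Return (name, phase) for every PVC whose status.phase is not "Bound"."""
    result: List[Tuple[str, str]] = []
    for pvc in json.loads(kubectl_json).get("items", []):
        phase = pvc.get("status", {}).get("phase", "Unknown")
        if phase != "Bound":
            result.append((pvc["metadata"]["name"], phase))
    return result


def fetch_pvc_json(namespace: str) -> str:
    """Run kubectl (assumed to be on PATH) and return its JSON output."""
    return subprocess.run(
        ["kubectl", "get", "pvc", "-n", namespace, "-o", "json"],
        check=True, capture_output=True, text=True,
    ).stdout
```

unbound_pvcs(fetch_pvc_json("pig-dev")) should return an empty list once the claims from harbor-pvc.yaml are bound. A claim stuck in Pending often means no PV matches its storage class, access mode or requested size.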

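2.9. Edit the Ingress resources (kubectl edit opens the object in vi by default, so edit it as you would in vim)

kubectl edit ingress -n pig-dev harbor-ingress

kubectl edit ingress -n pig-dev harbor-ingress-notary

Add the following field under spec: ingressClassName: nginx

Both Ingresses need ingressClassName: nginx. Without it, the nginx ingress controller does not serve them.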
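The same change can also be made without an interactive editor. Use `kubectl patch` with a JSON merge patch on spec.ingressClassName.

The sketch below only builds the commands for both Harbor Ingresses; the separate apply() helper runs them. The helper names are hypothetical. Running the commands assumes kubectl is on PATH and has access to the pig-dev namespace.

```python
"""Set spec.ingressClassName on Harbor's Ingresses via `kubectl patch`."""
import json
import subprocess
from typing import List

HARBOR_INGRESSES = ["harbor-ingress", "harbor-ingress-notary"]


def ingress_class_patch(class_name: str) -> str:
    """JSON merge patch that sets spec.ingressClassName."""
    return json.dumps({"spec": {"ingressClassName": class_name}})


def patch_commands(namespace: str, names: List[str], class_name: str = "nginx") -> List[List[str]]:
    """Build one `kubectl patch` argv per Ingress; nothing is executed here."""
    patch = ingress_class_patch(class_name)
    return [
        ["kubectl", "patch", "ingress", name, "-n", namespace,
         "--type", "merge", "-p", patch]
        for name in names
    ]


def apply(commands: List[List[str]]) -> None:
    """Run each command, stopping at the first failure."""
    for argv in commands:
        subprocess.run(argv, check=True)
```

apply(patch_commands("pig-dev", HARBOR_INGRESSES)) is equivalent to the two kubectl edit sessions above. Keeping the argv as a list avoids shell quoting problems with the JSON patch.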

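2.9.1. Deploy nginx-ingress-controller

The manifest below is based on the ingress-nginx v1.2.1 deployment manifest. The namespaced resources are placed in pig-dev, and the controller runs as a DaemonSet with hostNetwork: true. Save it to a file and apply it with kubectl apply -f.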

apiVersion: v1
kind: Namespace
metadata:
  labels:
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
  name: ingress-nginx

---

apiVersion: v1
automountServiceAccountToken: true
kind: ServiceAccount
metadata:
  labels:
    app.kubernetes.io/component: controller
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.2.1
  name: ingress-nginx
  namespace: pig-dev

---

apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    app.kubernetes.io/component: admission-webhook
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.2.1
  name: ingress-nginx-admission
  namespace: pig-dev

---

apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  labels:
    app.kubernetes.io/component: controller
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.2.1
  name: ingress-nginx
  namespace: pig-dev
rules:
- apiGroups:
  - ""
  resources:
  - namespaces
  verbs:
  - get
- apiGroups:
  - ""
  resources:
  - configmaps
  - pods
  - secrets
  - endpoints
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - services
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - networking.k8s.io
  resources:
  - ingresses
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - networking.k8s.io
  resources:
  - ingresses/status
  verbs:
  - update
- apiGroups:
  - networking.k8s.io
  resources:
  - ingressclasses
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - ""
  resourceNames:
  - ingress-controller-leader
  resources:
  - configmaps
  verbs:
  - get
  - update
- apiGroups:
  - ""
  resources:
  - configmaps
  verbs:
  - create
- apiGroups:
  - ""
  resources:
  - events
  verbs:
  - create
  - patch

---

apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  labels:
    app.kubernetes.io/component: admission-webhook
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.2.1
  name: ingress-nginx-admission
  namespace: pig-dev
rules:
- apiGroups:
  - ""
  resources:
  - secrets
  verbs:
  - get
  - create

---

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.2.1
  name: ingress-nginx
rules:
- apiGroups:
  - ""
  resources:
  - configmaps
  - endpoints
  - nodes
  - pods
  - secrets
  - namespaces
  verbs:
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - nodes
  verbs:
  - get
- apiGroups:
  - ""
  resources:
  - services
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - networking.k8s.io
  resources:
  - ingresses
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - events
  verbs:
  - create
  - patch
- apiGroups:
  - networking.k8s.io
  resources:
  - ingresses/status
  verbs:
  - update
- apiGroups:
  - networking.k8s.io
  resources:
  - ingressclasses
  verbs:
  - get
  - list
  - watch

---

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    app.kubernetes.io/component: admission-webhook
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.2.1
  name: ingress-nginx-admission
rules:
- apiGroups:
  - admissionregistration.k8s.io
  resources:
  - validatingwebhookconfigurations
  verbs:
  - get
  - update

---

apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  labels:
    app.kubernetes.io/component: controller
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.2.1
  name: ingress-nginx
  namespace: pig-dev
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: ingress-nginx
subjects:
- kind: ServiceAccount
  name: ingress-nginx
  namespace: pig-dev

---

apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  labels:
    app.kubernetes.io/component: admission-webhook
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.2.1
  name: ingress-nginx-admission
  namespace: pig-dev
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: ingress-nginx-admission
subjects:
- kind: ServiceAccount
  name: ingress-nginx-admission
  namespace: pig-dev

---

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.2.1
  name: ingress-nginx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: ingress-nginx
subjects:
- kind: ServiceAccount
  name: ingress-nginx
  namespace: pig-dev

---

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    app.kubernetes.io/component: admission-webhook
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.2.1
  name: ingress-nginx-admission
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: ingress-nginx-admission
subjects:
- kind: ServiceAccount
  name: ingress-nginx-admission
  namespace: pig-dev

---

apiVersion: v1
data:
  allow-snippet-annotations: "true"
kind: ConfigMap
metadata:
  labels:
    app.kubernetes.io/component: controller
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.2.1
  name: ingress-nginx-controller
  namespace: pig-dev

---

apiVersion: v1
kind: Service
metadata:
  labels:
    app.kubernetes.io/component: controller
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.2.1
  name: ingress-nginx-controller
  namespace: pig-dev
spec:
  externalTrafficPolicy: Local
  ports:
  - appProtocol: http
    name: http
    port: 80
    protocol: TCP
    targetPort: http
  - appProtocol: https
    name: https
    port: 443
    protocol: TCP
    targetPort: https
  selector:
    app.kubernetes.io/component: controller
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
  type: LoadBalancer

---

apiVersion: v1
kind: Service
metadata:
  labels:
    app.kubernetes.io/component: controller
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.2.1
  name: ingress-nginx-controller-admission
  namespace: pig-dev
spec:
  ports:
  - appProtocol: https
    name: https-webhook
    port: 443
    targetPort: webhook
  selector:
    app.kubernetes.io/component: controller
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
  type: ClusterIP

---

apiVersion: apps/v1
#kind: Deployment
kind: DaemonSet
metadata:
  labels:
    app.kubernetes.io/component: controller
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.2.1
  name: ingress-nginx-controller
  namespace: pig-dev
spec:
  minReadySeconds: 0
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      app.kubernetes.io/component: controller
      app.kubernetes.io/instance: ingress-nginx
      app.kubernetes.io/name: ingress-nginx
  template:
    metadata:
      labels:
        app.kubernetes.io/component: controller
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/name: ingress-nginx
    spec:
      hostNetwork: true
      containers:
      - args:
        - /nginx-ingress-controller
        - --publish-service=$(POD_NAMESPACE)/ingress-nginx-controller
        - --election-id=ingress-controller-leader
        - --controller-class=k8s.io/ingress-nginx
        - --ingress-class=nginx
        - --configmap=$(POD_NAMESPACE)/ingress-nginx-controller
        - --validating-webhook=:8443
        - --validating-webhook-certificate=/usr/local/certificates/cert
        - --validating-webhook-key=/usr/local/certificates/key
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        - name: LD_PRELOAD
          value: /usr/local/lib/libmimalloc.so
        image: zhxl1989/ingress-nginx-controller:v1.2.1
        imagePullPolicy: IfNotPresent
        lifecycle:
          preStop:
            exec:
              command:
              - /wait-shutdown
        livenessProbe:
          failureThreshold: 5
          httpGet:
            path: /healthz
            port: 10254
            scheme: HTTP
          initialDelaySeconds: 10
          periodSeconds: 10
          successThreshold: 1
          timeoutSeconds: 1
        name: controller
        ports:
        - containerPort: 80
          name: http
          protocol: TCP
        - containerPort: 443
          name: https
          protocol: TCP
        - containerPort: 8443
          name: webhook
          protocol: TCP
        readinessProbe:
          failureThreshold: 3
          httpGet:
            path: /healthz
            port: 10254
            scheme: HTTP
          initialDelaySeconds: 10
          periodSeconds: 10
          successThreshold: 1
          timeoutSeconds: 1
        resources:
          requests:
            cpu: 100m
            memory: 90Mi
        securityContext:
          allowPrivilegeEscalation: true
          capabilities:
            add:
            - NET_BIND_SERVICE
            drop:
            - ALL
          runAsUser: 101
        volumeMounts:
        - mountPath: /usr/local/certificates/
          name: webhook-cert
          readOnly: true
      dnsPolicy: ClusterFirstWithHostNet
      nodeSelector:
        kubernetes.io/os: linux
      serviceAccountName: ingress-nginx
      terminationGracePeriodSeconds: 300
      volumes:
      - name: webhook-cert
        secret:
          secretName: ingress-nginx-admission

---

apiVersion: batch/v1
kind: Job
metadata:
  labels:
    app.kubernetes.io/component: admission-webhook
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.2.1
  name: ingress-nginx-admission-create
  namespace: pig-dev
spec:
  template:
    metadata:
      labels:
        app.kubernetes.io/component: admission-webhook
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
        app.kubernetes.io/version: 1.2.1
      name: ingress-nginx-admission-create
    spec:
      containers:
      - args:
        - create
        - --host=ingress-nginx-controller-admission,ingress-nginx-controller-admission.$(POD_NAMESPACE).svc
        - --namespace=$(POD_NAMESPACE)
        - --secret-name=ingress-nginx-admission
        env:
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        image: zhxl1989/ingress-nginx-kube-webhook-certgen:v1.1.1
        imagePullPolicy: IfNotPresent
        name: create
        securityContext:
          allowPrivilegeEscalation: false
      nodeSelector:
        kubernetes.io/os: linux
      restartPolicy: OnFailure
      securityContext:
        fsGroup: 2000
        runAsNonRoot: true
        runAsUser: 2000
      serviceAccountName: ingress-nginx-admission

---

apiVersion: batch/v1
kind: Job
metadata:
  labels:
    app.kubernetes.io/component: admission-webhook
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.2.1
  name: ingress-nginx-admission-patch
  namespace: pig-dev
spec:
  template:
    metadata:
      labels:
        app.kubernetes.io/component: admission-webhook
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
        app.kubernetes.io/version: 1.2.1
      name: ingress-nginx-admission-patch
    spec:
      containers:
      - args:
        - patch
        - --webhook-name=ingress-nginx-admission
        - --namespace=$(POD_NAMESPACE)
        - --patch-mutating=false
        - --secret-name=ingress-nginx-admission
        - --patch-failure-policy=Fail
        env:
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        image: zhxl1989/ingress-nginx-kube-webhook-certgen:v1.1.1
        imagePullPolicy: IfNotPresent
        name: patch
        securityContext:
          allowPrivilegeEscalation: false
      nodeSelector:
        kubernetes.io/os: linux
      restartPolicy: OnFailure
      securityContext:
        fsGroup: 2000
        runAsNonRoot: true
        runAsUser: 2000
      serviceAccountName: ingress-nginx-admission

---

apiVersion: networking.k8s.io/v1
kind: IngressClass
metadata:
  labels:
    app.kubernetes.io/component: controller
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.2.1
  name: nginx
spec:
  controller: k8s.io/ingress-nginx

---

apiVersion: admissionregistration.k8s.io/v1
kind: ValidatingWebhookConfiguration
metadata:
  labels:
    app.kubernetes.io/component: admission-webhook
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.2.1
  name: ingress-nginx-admission
webhooks:
- admissionReviewVersions:
  - v1
  clientConfig:
    service:
      name: ingress-nginx-controller-admission
      namespace: pig-dev
      path: /networking/v1/ingresses
  failurePolicy: Fail
  matchPolicy: Equivalent
  name: validate.nginx.ingress.kubernetes.io
  rules:
  - apiGroups:
    - networking.k8s.io
    apiVersions:
    - v1
    operations:
    - CREATE
    - UPDATE
    resources:
    - ingresses
  sideEffects: None

2.9.2. Check the Ingress configuration

kubectl describe ingress/harbor-ingress -n pig-dev
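The controller in 2.9.1 is configured so that it only serves Ingresses that name its class:

- It is started with --controller-class=k8s.io/ingress-nginx and --ingress-class=nginx.
- The IngressClass nginx points at that controller.
- The manifest neither passes --watch-ingress-without-class nor marks nginx as the default class.

That is why both Harbor Ingresses need ingressClassName: nginx.

The sketch below is a simplified model of this matching, not ingress-nginx's own logic. It reads the output of `kubectl get ingressclass -o json` and `kubectl get ingress -A -o json`. It lists Ingresses whose spec.ingressClassName does not name a class owned by this controller. It ignores the deprecated kubernetes.io/ingress.class annotation, and the function names are hypothetical.

```python
"""Simplified model: which Ingresses would this ingress-nginx controller ignore?"""
import json
from typing import List, Set

CONTROLLER = "k8s.io/ingress-nginx"  # value of --controller-class in the manifest


def classes_for_controller(ingressclass_json: str, controller: str = CONTROLLER) -> Set[str]:
    """Names of IngressClasses whose spec.controller matches this controller."""
    items = json.loads(ingressclass_json).get("items", [])
    return {ic["metadata"]["name"] for ic in items
            if ic.get("spec", {}).get("controller") == controller}


def ignored_ingresses(ingress_json: str, handled_classes: Set[str]) -> List[str]:
    """namespace/name of Ingresses whose spec.ingressClassName is missing or foreign.

    Simplification: the deprecated kubernetes.io/ingress.class annotation and
    the --watch-ingress-without-class flag (not set above) are not modelled.
    """
    ignored = []
    for ing in json.loads(ingress_json).get("items", []):
        class_name = ing.get("spec", {}).get("ingressClassName")
        if class_name not in handled_classes:  # None (field missing) is never handled
            meta = ing["metadata"]
            ignored.append(f"{meta.get('namespace', 'default')}/{meta['name']}")
    return ignored
```

Feed it the two kubectl outputs, for example captured with subprocess.run(..., capture_output=True, text=True).stdout. After step 2.9, neither pig-dev/harbor-ingress nor pig-dev/harbor-ingress-notary should appear in the result.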

3. Access

3.1. Configure hosts on Windows
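The controller runs as a DaemonSet with hostNetwork: true, so ports 80 and 443 are served directly on each node running a controller pod. The DaemonSet has no tolerations, so tainted control-plane nodes usually do not run one.

The Windows hosts file (C:\Windows\System32\drivers\etc\hosts, edited as Administrator) therefore only needs harbor.liebe.com.cn mapped to one of those node IPs. The sketch below adds that mapping to hosts-file text unless it already exists. The function names and the IP 192.168.0.10 used below are placeholders, not values from this cluster.

```python
"""Add an `IP hostname` line to hosts-file text unless the mapping already exists."""


def has_mapping(hosts_text: str, ip: str, hostname: str) -> bool:
    """True if a non-comment line maps hostname (case-insensitively) to ip."""
    for line in hosts_text.splitlines():
        fields = line.split("#", 1)[0].split()  # strip comments, then split on whitespace
        if len(fields) >= 2 and fields[0] == ip:
            if hostname.lower() in (name.lower() for name in fields[1:]):
                return True
    return False


def add_hosts_entry(hosts_text: str, ip: str, hostname: str) -> str:
    """Return hosts_text with the mapping appended; older mappings are left alone."""
    if has_mapping(hosts_text, ip, hostname):
        return hosts_text
    if hosts_text and not hosts_text.endswith("\n"):
        hosts_text += "\n"
    return hosts_text + f"{ip}\t{hostname}\n"


def update_hosts_file(path: str, ip: str, hostname: str) -> None:
    """Rewrite the file in place; on Windows this needs Administrator rights."""
    with open(path, encoding="utf-8") as f:
        text = f.read()
    new_text = add_hosts_entry(text, ip, hostname)
    if new_text != text:
        with open(path, "w", encoding="utf-8") as f:
            f.write(new_text)
```

Example call: update_hosts_file(r"C:\Windows\System32\drivers\etc\hosts", "192.168.0.10", "harbor.liebe.com.cn"), replacing the placeholder with a real node IP. If you also use the notary Ingress, map its host the same way.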

3.2. Access URL

https://harbor.liebe.com.cn/harbor/projects

Username: admin

Password: Harbor12345 (the harborAdminPassword value; Harbor12345 is the chart's default)
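To check the admin credentials from a script instead of the browser, call Harbor's REST API with HTTP Basic authentication. This is a minimal sketch under two assumptions:

- The v2.0 API path /api/v2.0/users/current returns the logged-in user.
- The self-signed tls.crt from section 2.2 is available locally, to be trusted as the CA.

Python verifies the hostname, so the certificate's Common Name (or a subject alternative name) must be exactly harbor.liebe.com.cn. Depending on the Python and OpenSSL versions, a bare self-signed certificate may still be rejected. The function names are hypothetical.

```python
"""Check Harbor admin credentials via the REST API (sketch)."""
import base64
import json
import ssl
import urllib.request

HARBOR_URL = "https://harbor.liebe.com.cn"


def build_current_user_request(base_url: str, user: str, password: str) -> urllib.request.Request:
    """GET {base_url}/api/v2.0/users/current with an HTTP Basic Authorization header."""
    token = base64.b64encode(f"{user}:{password}".encode()).decode("ascii")
    return urllib.request.Request(
        base_url.rstrip("/") + "/api/v2.0/users/current",
        headers={"Authorization": f"Basic {token}", "Accept": "application/json"},
    )


def current_user(base_url: str, user: str, password: str, cafile: str) -> dict:
    """Send the request, trusting only the given CA / self-signed certificate file."""
    context = ssl.create_default_context(cafile=cafile)
    request = build_current_user_request(base_url, user, password)
    with urllib.request.urlopen(request, context=context, timeout=10) as resp:
        return json.load(resp)
```

current_user(HARBOR_URL, "admin", "Harbor12345", "tls.crt") returns the user record as a dict. Wrong credentials raise urllib.error.HTTPError with code 401.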