DensePose and SMPL: Converting IUV Coordinates to XYZ Coordinates

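Workflow

I. The SMPL Model

The SMPL model has 6,890 3D body vertices, each with XYZ coordinates. The first step is to analyze these 6,890 vertices and group them by body part: head, chest, waist, left arm, right arm, left leg, and right leg. The vertices are not stored contiguously by region (the left half of the body is described first, then the right half), so this classification takes considerable time.

The purpose of this grouping is to make it easy to find the XYZ coordinate range of a specific landmark, which can then be matched to the corresponding IUV coordinates.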
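The bookkeeping behind this grouping can be sketched as follows, assuming each body part has already been identified as one or more vertex index ranges. The `PART_RANGES` table, the `part_bbox` helper, and the random stand-in vertices are hypothetical placeholders, not the real SMPL partition. In practice the vertex array would be the SMPL template loaded from the model file.

```python
import numpy as np

# Stand-in for the SMPL template: 6890 vertices with XYZ coordinates.
# Random values are used here only so the sketch runs on its own.
rng = np.random.default_rng(0)
vertices = rng.uniform(-1.0, 1.0, size=(6890, 3))

# Hypothetical index ranges per body part, half-open [start, end).
PART_RANGES = {
    "left_eye": [(2778, 2800)],
    "example_part": [(0, 100), (3500, 3600)],
}

def part_bbox(vertices, ranges):
    """Return (min_xyz, max_xyz) over all vertices in the given index ranges."""
    idx = np.concatenate([np.arange(start, end) for start, end in ranges])
    pts = vertices[idx]
    return pts.min(axis=0), pts.max(axis=0)

# One axis-aligned XYZ bounding box per body part.
bboxes = {name: part_bbox(vertices, r) for name, r in PART_RANGES.items()}
```

Keeping the ranges in one table means a newly identified region only needs a new entry, and the XYZ range follows automatically.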

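II. Workflow

Implementation workflow

Once the IUV coordinate range of each landmark has been found, later inference can skip the step of converting IUV coordinates to XYZ and matching them against the landmark's XYZ coordinates, so the pipeline simplifies to the following:

The SMPL XYZ vertices serve only as an intermediate variable here; once the IUV coordinates of the body landmarks have been obtained, they are no longer needed.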
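One possible shape for this simplified inference step is sketched below. It assumes the IUV map is a `uint8` array of shape `(3, H, W)` and that the landmark's IUV values were collected beforehand as integer triples. The function names `encode_iuv` and `landmark_mask` are illustrative only, and the triple `(23, 120, 200)` is an arbitrary value, not a real left-eye coordinate.

```python
import numpy as np

def encode_iuv(i, u, v):
    """Pack 8-bit (I, U, V) values into one integer key per element."""
    return (i.astype(np.int64) << 16) | (u.astype(np.int64) << 8) | v.astype(np.int64)

def landmark_mask(iuv_arr, landmark_triples):
    """Boolean (H, W) mask of pixels whose (I, U, V) is a stored landmark triple.

    iuv_arr: uint8 array of shape (3, H, W) holding the I, U, V planes.
    landmark_triples: integer array of shape (K, 3) of known (I, U, V) values.
    """
    keys = encode_iuv(iuv_arr[0], iuv_arr[1], iuv_arr[2])
    triples = np.asarray(landmark_triples)
    wanted = encode_iuv(triples[:, 0], triples[:, 1], triples[:, 2])
    return np.isin(keys, wanted)

# Tiny synthetic example: a 2x2 IUV map containing one stored triple.
iuv_arr = np.zeros((3, 2, 2), dtype=np.uint8)
iuv_arr[:, 0, 1] = (23, 120, 200)
mask = landmark_mask(iuv_arr, np.array([[23, 120, 200]]))
```

Because the lookup works on the raw 8-bit values, it avoids floating-point equality tests and needs no SMPL model at inference time.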

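III. Current Implementation: Locating the Left Eye

The overall code follows the DensePose notebook example, together with a notebook example that converts DensePose IUV coordinates to XYZ for texture mapping.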

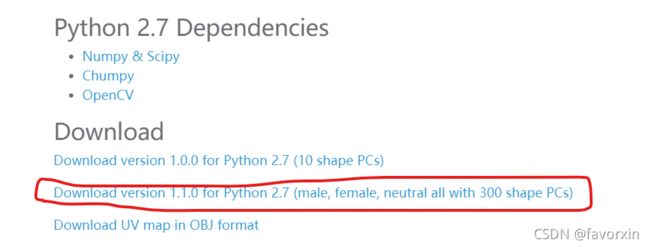

1. Download the SMPL body model:

2. Install the dependencies:

pip install chumpy

pip install tqdm

3. Code

First, load the SMPL model and plot the body model:

# Imports
import pickle
import numpy as np
import matplotlib.pyplot as plt
from tqdm import tqdm
from PIL import Image
from mpl_toolkits.mplot3d import axes3d, Axes3D

# Read the SMPL model (unpickling it requires chumpy to be installed).
with open('./models/basicmodel_m_lbs_10_207_0_v1.1.0.pkl', 'rb') as f:
    data = pickle.load(f, encoding='iso-8859-1')

Vertices = data['v_template']  # loaded vertices, shape (6890, 3)
X, Y, Z = [Vertices[:, 0], Vertices[:, 1], Vertices[:, 2]]

# smpl_view_set_axis_full_body is the axis helper defined in the DensePose
# notebook referenced above; it must be defined before this code runs.
fig = plt.figure(figsize=[150, 30])

# View 1: azimuth 0 (default)
ax = fig.add_subplot(141, projection='3d')
ax.scatter(Z, X, Y, s=0.02, c='k')
smpl_view_set_axis_full_body(ax)

# View 2: azimuth 45
ax = fig.add_subplot(142, projection='3d')
ax.scatter(Z, X, Y, s=0.02, c='k')
smpl_view_set_axis_full_body(ax, 45)

# View 3: azimuth 90
ax = fig.add_subplot(143, projection='3d')
ax.scatter(Z, X, Y, s=0.02, c='k')
smpl_view_set_axis_full_body(ax, 90)

# View 4: azimuth 0 with larger markers
ax = fig.add_subplot(144, projection='3d')
ax.scatter(Z, X, Y, s=1, c='k')
smpl_view_set_axis_full_body(ax, 0)

plt.show()
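The plotting code calls `smpl_view_set_axis_full_body`, and the later face plot calls `smpl_view_set_axis_face`, but neither is defined in this article. The sketch below is modeled on the helpers in the DensePose notebook. The axis limits (`0.55`, `0.1`) and the vertical offsets (`-0.2`, `0.45`) should be treated as assumptions and tuned to your own view. Both functions need to be defined before the plotting code runs.

```python
import matplotlib
matplotlib.use("Agg")  # headless backend so the sketch runs without a display
import matplotlib.pyplot as plt
from mpl_toolkits.mplot3d import Axes3D  # noqa: F401 (registers the 3D projection on old versions)

def smpl_view_set_axis_full_body(ax, azimuth=0):
    """Fix the camera angle and axis limits for a full-body view."""
    ax.view_init(0, azimuth)
    max_range = 0.55  # assumed half-width of the visible cube
    ax.set_xlim(-max_range, max_range)
    ax.set_ylim(-max_range, max_range)
    ax.set_zlim(-0.2 - max_range, -0.2 + max_range)
    ax.axis("off")

def smpl_view_set_axis_face(ax, azimuth=0):
    """Fix the camera angle and axis limits for a close-up of the head."""
    ax.view_init(0, azimuth)
    max_range = 0.1  # assumed half-width of the visible cube
    ax.set_xlim(-max_range, max_range)
    ax.set_ylim(-max_range, max_range)
    ax.set_zlim(0.45 - max_range, 0.45 + max_range)
    ax.axis("off")
```

In an interactive notebook, drop the `matplotlib.use("Agg")` line so that figures are displayed inline.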

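Next, plot a few consecutive vertex indices at a time to tell the body regions apart: head, chest, waist, left arm, right arm, left leg, and right leg. So far, part of the left eye has been found in the vertex index range 2778 to 2800.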

def plot_points(begin, end, b, e, body=False, face=True):
    # Plot vertices [begin:end] in black and highlight the sub-range [b:e] in red.
    Z_ft, X_ft, Y_ft = Z[begin:end], X[begin:end], Y[begin:end]
    fig = plt.figure(figsize=[40, 10])
    if body:
        ax = fig.add_subplot(141, projection='3d')
        ax.scatter(Z_ft, X_ft, Y_ft, s=0.02, c='k')
        ax.scatter(Z_ft[b:e], X_ft[b:e], Y_ft[b:e], s=15, c='r')
        smpl_view_set_axis_full_body(ax)
        plt.show()
    if face:
        ax = fig.add_subplot(144, projection='3d')
        ax.scatter(Z_ft, X_ft, Y_ft, s=1, c='k')
        ax.scatter(Z_ft[b:e], X_ft[b:e], Y_ft[b:e], s=15, c='r')
        smpl_view_set_axis_face(ax, 0)
        plt.show()

# Plot the left-eye vertices
plot_points(0, -1, 2778, 2800, False, True)

# Get the XYZ coordinate range of the left eye
Z_ft, X_ft, Y_ft = Z[0:6800], X[0:6800], Y[0:6800]
Z_part, X_part, Y_part = Z_ft[2780:2800], X_ft[2780:2800], Y_ft[2780:2800]

# Bounding box of the seed vertices, computed once rather than on every iteration
z_min, z_max = min(Z_part), max(Z_part)
x_min, x_max = min(X_part), max(X_part)
y_min, y_max = min(Y_part), max(Y_part)

# Collect every vertex that falls inside the bounding box
Z_leye = []
X_leye = []
Y_leye = []
for i in range(len(Z_ft)):
    if (z_min <= Z_ft[i] <= z_max
            and x_min <= X_ft[i] <= x_max
            and y_min <= Y_ft[i] <= y_max):
        Z_leye.append(Z_ft[i])
        X_leye.append(X_ft[i])
        Y_leye.append(Y_ft[i])
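The same bounding-box selection can be written without a Python loop. The following is a minimal sketch on random stand-in points. `select_in_bbox` is a hypothetical helper name, and in practice `points` would be the SMPL vertex array.

```python
import numpy as np

def select_in_bbox(points, seed_idx):
    """Indices of all points inside the axis-aligned box spanned by points[seed_idx].

    Returns (indices, lower_corner, upper_corner).
    """
    seed = points[seed_idx]
    lo, hi = seed.min(axis=0), seed.max(axis=0)
    inside = np.all((points >= lo) & (points <= hi), axis=1)
    return np.flatnonzero(inside), lo, hi

# Random stand-in for the (6890, 3) vertex array.
rng = np.random.default_rng(0)
points = rng.uniform(-1.0, 1.0, size=(6890, 3))

# Seed the box with the same index range used for the left eye above.
idx, lo, hi = select_in_bbox(points, np.arange(2780, 2800))
```

Returning indices rather than coordinates keeps the link back to the SMPL vertex numbering, which is convenient when checking the selected region visually.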

Define the code that converts DensePose IUV coordinates to XYZ:

import numpy as np
import copy
import cv2
from scipy.io import loadmat
import scipy.spatial.distance
import os

class DensePoseMethods:
    def __init__(self):
        ALP_UV = loadmat('./UV_Processed.mat')  # Use your own path
        self.FaceIndices = np.array(ALP_UV['All_FaceIndices']).squeeze()
        self.FacesDensePose = ALP_UV['All_Faces'] - 1
        self.U_norm = ALP_UV['All_U_norm'].squeeze()
        self.V_norm = ALP_UV['All_V_norm'].squeeze()
        self.All_vertices = ALP_UV['All_vertices'][0]

    def barycentric_coordinates_fast(self, P0, P1, P2, P):
        # This is a merge of barycentric_coordinates_exists & barycentric_coordinates.
        # Inputs are (n, 3) shaped.
        u = P1 - P0   # u is (n,3)
        v = P2 - P0   # v is (n,3)
        w = P.T - P0  # w is (n,3)

        vCrossW = np.cross(v, w)  # result is (n,3)
        vCrossU = np.cross(v, u)  # result is (n,3)
        A = np.einsum('nd,nd->n', vCrossW, vCrossU)  # vector-wise dot product. Result shape is (n,)

        uCrossW = np.cross(u, w)
        uCrossV = -vCrossU
        B = np.einsum('nd,nd->n', uCrossW, uCrossV)

        sq_denoms = np.einsum('nd,nd->n', uCrossV, uCrossV)  # result shape is (n,)
        sq_rs = np.einsum('nd,nd->n', vCrossW, vCrossW)
        sq_ts = np.einsum('nd,nd->n', uCrossW, uCrossW)
        rs = np.sqrt(sq_rs / sq_denoms)  # result shape is (n,)
        ts = np.sqrt(sq_ts / sq_denoms)

        results = [None] * P0.shape[0]
        for i in range(len(results)):
            if not (A[i] < 0 or B[i] < 0):
                if (rs[i] <= 1) and (ts[i] <= 1) and (rs[i] + ts[i] <= 1):
                    results[i] = (1 - (rs[i] + ts[i]), rs[i], ts[i])
        return results

    def IUV2FBC_fast(self, I_point, U_point, V_point):
        P = np.array([U_point, V_point, 0])
        FaceIndicesNow = np.where(self.FaceIndices == I_point)
        FacesNow = self.FacesDensePose[FaceIndicesNow]

        P_0 = np.vstack((self.U_norm[FacesNow][:, 0], self.V_norm[FacesNow][:, 0], np.zeros(self.U_norm[FacesNow][:, 0].shape))).transpose()
        P_1 = np.vstack((self.U_norm[FacesNow][:, 1], self.V_norm[FacesNow][:, 1], np.zeros(self.U_norm[FacesNow][:, 1].shape))).transpose()
        P_2 = np.vstack((self.U_norm[FacesNow][:, 2], self.V_norm[FacesNow][:, 2], np.zeros(self.U_norm[FacesNow][:, 2].shape))).transpose()

        bcs = self.barycentric_coordinates_fast(P_0, P_1, P_2, P)
        for i, bc in enumerate(bcs):
            if bc is not None:
                bc1, bc2, bc3 = bc
                return (FaceIndicesNow[0][i], bc1, bc2, bc3)

        # If the found UV is not inside any faces, select the vertex that is closest!
        D1 = scipy.spatial.distance.cdist(np.array([U_point, V_point])[np.newaxis, :], P_0[:, 0:2]).squeeze()
        D2 = scipy.spatial.distance.cdist(np.array([U_point, V_point])[np.newaxis, :], P_1[:, 0:2]).squeeze()
        D3 = scipy.spatial.distance.cdist(np.array([U_point, V_point])[np.newaxis, :], P_2[:, 0:2]).squeeze()

        minD1 = D1.min()
        minD2 = D2.min()
        minD3 = D3.min()

        if (minD1 < minD2) & (minD1 < minD3):
            return (FaceIndicesNow[0][np.argmin(D1)], 1., 0., 0.)
        elif (minD2 < minD1) & (minD2 < minD3):
            return (FaceIndicesNow[0][np.argmin(D2)], 0., 1., 0.)
        else:
            return (FaceIndicesNow[0][np.argmin(D3)], 0., 0., 1.)

    def FBC2PointOnSurface(self, FaceIndex, bc1, bc2, bc3, Vertices):
        Vert_indices = self.All_vertices[self.FacesDensePose[FaceIndex]] - 1
        p = Vertices[Vert_indices[0], :] * bc1 + \
            Vertices[Vert_indices[1], :] * bc2 + \
            Vertices[Vert_indices[2], :] * bc3
        return (p)
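The class above relies on barycentric coordinates: a UV point is expressed as a weighted combination of the three corners of a UV triangle, and the same weights are then applied to the corresponding 3D vertices. The single-triangle sketch below illustrates the idea with a standard dot-product formulation, not the cross-product variant used in the class. The function name and the triangle values are made up for the example.

```python
import numpy as np

def barycentric_2d(p, a, b, c):
    """Barycentric weights (wa, wb, wc) of 2D point p in triangle (a, b, c)."""
    v0, v1, v2 = b - a, c - a, p - a
    d00, d01, d11 = v0 @ v0, v0 @ v1, v1 @ v1
    d20, d21 = v2 @ v0, v2 @ v1
    denom = d00 * d11 - d01 * d01  # zero only for a degenerate triangle
    wb = (d11 * d20 - d01 * d21) / denom
    wc = (d00 * d21 - d01 * d20) / denom
    return 1.0 - wb - wc, wb, wc

# A triangle in UV space and the 3D positions of its three corners.
uv_a, uv_b, uv_c = np.array([0.0, 0.0]), np.array([1.0, 0.0]), np.array([0.0, 1.0])
xyz_a = np.array([0.0, 0.0, 0.0])
xyz_b = np.array([1.0, 0.0, 0.0])
xyz_c = np.array([0.0, 1.0, 1.0])

# Weights of a UV point inside the triangle.
wa, wb, wc = barycentric_2d(np.array([0.25, 0.25]), uv_a, uv_b, uv_c)

# Apply the same weights to the 3D corners to get the surface point.
xyz = wa * xyz_a + wb * xyz_b + wc * xyz_c
```

A point lies inside the triangle exactly when all three weights are non-negative, which is the condition the class checks before accepting a face.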

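Load the saved IUV result for the photo face1.png, convert the IUV coordinates to XYZ coordinates, and save every IUV coordinate that falls within the left-eye region.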

iuv_arr = np.load('./saved/iuv_gao1.npy')
print('iuv_arr.shape: ', iuv_arr.shape)

INDS = iuv_arr[0, :, :]
pick_idx = 1  # PICK PERSON INDEX!
C = np.where(INDS >= pick_idx)

# Stack the I, U, V planes into an (H, W, 3) array
iuv0 = iuv_arr[0, :, :]
iuv1 = iuv_arr[1, :, :]
iuv2 = iuv_arr[2, :, :]
IUV = np.concatenate((iuv0[:, :, np.newaxis], iuv1[:, :, np.newaxis], iuv2[:, :, np.newaxis]), axis=2)
print('IUV shape:', IUV.shape)
print('num pts on picked person:', C[0].shape)

IUV_pick = IUV[C[0], C[1], :]  # integer-array indexing with the coordinates from np.where
IUV_pick = IUV_pick.astype(float)  # np.float was removed in NumPy 1.24; use the builtin float
IUV_pick[:, 1:3] = IUV_pick[:, 1:3] / 255.0  # normalize U and V to [0, 1]

collected_x = np.zeros(C[0].shape)
collected_y = np.zeros(C[0].shape)
collected_z = np.zeros(C[0].shape)

# XYZ bounding box of the left eye, computed once outside the loop
x_lo, x_hi = min(X_leye), max(X_leye)
y_lo, y_hi = min(Y_leye), max(Y_leye)
z_lo, z_hi = min(Z_leye), max(Z_leye)

# Convert IUV coordinates to XYZ and save the IUV coordinates that land in the left-eye box as iuv_list.
DP = DensePoseMethods()
pbar = tqdm(total=IUV_pick.shape[0])
iuv_list = []
for i in range(IUV_pick.shape[0]):
    pbar.update(1)  # Use tqdm to visualize the converting process
    # Convert IUV to FBC (faceIndex and barycentric coordinates.)
    FaceIndex, bc1, bc2, bc3 = DP.IUV2FBC_fast(IUV_pick[i, 0], IUV_pick[i, 1], IUV_pick[i, 2])
    # Use FBC to get 3D coordinates on the surface.
    p = DP.FBC2PointOnSurface(FaceIndex, bc1, bc2, bc3, Vertices)
    collected_x[i] = p[0]
    collected_y[i] = p[1]
    collected_z[i] = p[2]
    if x_lo <= p[0] <= x_hi and y_lo <= p[1] <= y_hi and z_lo <= p[2] <= z_hi:
        save_iuv = [IUV_pick[i, 0], IUV_pick[i, 1], IUV_pick[i, 2]]
        iuv_list.append(save_iuv)
pbar.close()
print('IUV to PointOnSurface done')

# Split iuv_list into separate I, U, and V lists
print(len(iuv_list))
i_leye_list = []
u_leye_list = []
v_leye_list = []
for cur_iuv_arr in iuv_list:
    i_leye_list.append(cur_iuv_arr[0])
    u_leye_list.append(cur_iuv_arr[1])
    v_leye_list.append(cur_iuv_arr[2])
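To turn the collected IUV triples into the per-landmark IUV range described in the workflow, one option is to summarize them per part index. The sketch below assumes `iuv_list` holds `[I, U, V]` rows with U and V already normalized to [0, 1]. `iuv_ranges` is a hypothetical helper, and the example values (including the part indices 23 and 24) are arbitrary.

```python
import numpy as np

def iuv_ranges(iuv_list):
    """Map each part index I to its (u_min, u_max, v_min, v_max)."""
    arr = np.asarray(iuv_list, dtype=float).reshape(-1, 3)
    ranges = {}
    for part in np.unique(arr[:, 0]):
        rows = arr[arr[:, 0] == part]
        ranges[int(part)] = (rows[:, 1].min(), rows[:, 1].max(),
                             rows[:, 2].min(), rows[:, 2].max())
    return ranges

# Arbitrary example rows: two points on part 23, one on part 24.
example = [[23, 0.40, 0.60], [23, 0.45, 0.55], [24, 0.10, 0.20]]
ranges = iuv_ranges(example)
```

A rectangular U/V range is a superset of the exact pixel set, so it may include a few neighboring pixels; storing the exact triples is the stricter alternative.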

Draw the left-eye region on the original image:

img = cv2.imread('./saved/face1.png')
bbox_xywh = [4.8355975, 0., 462.35657, 667.8104]  # person bounding box from DensePose inference
x, y, w, h = int(bbox_xywh[0]), int(bbox_xywh[1]), int(bbox_xywh[2]), int(bbox_xywh[3])
crop_img = img[y:y+h, x:x+w]  # image cropped to the person region

i_face1 = iuv_arr[0, :, :]
u_face1 = iuv_arr[1, :, :]
v_face1 = iuv_arr[2, :, :]
U = u_face1.astype(float) / 255.0
V = v_face1.astype(float) / 255.0

# Display images stored in cv2 (BGR) format side by side
def show_pic_hor(pic_list):
    nums = len(pic_list)
    for i in range(nums):
        plt.subplot(1, nums, i + 1)
        if pic_list[i].ndim == 3:
            try:
                cur_pic_rgb = cv2.cvtColor(pic_list[i], cv2.COLOR_BGR2RGB)
            except cv2.error:
                # Fall back to the raw array if it is not a 3-channel BGR image
                cur_pic_rgb = pic_list[i]
            plt.imshow(cur_pic_rgb)
        elif pic_list[i].ndim == 2:
            plt.imshow(pic_list[i], 'gray')
    plt.show()

# Draw the left eye
def plot_left_eye(crop_img, iuv_list, i_face1, U, V):
    img_cut = crop_img.copy()
    if len(iuv_list) == 0:
        return img_cut  # nothing to draw
    k = 0
    # Scan pixels in row-major order, the same order in which iuv_list was built
    for i in range(img_cut.shape[0]):
        for j in range(img_cut.shape[1]):
            if i_face1[i, j] == iuv_list[k][0] and U[i, j] == iuv_list[k][1] and V[i, j] == iuv_list[k][2]:
                cv2.circle(img_cut, (j, i), 1, (0, 0, 255), 0)
                k += 1
                if k == len(iuv_list):
                    return img_cut
    return img_cut

img_cut = plot_left_eye(crop_img, iuv_list, i_face1, U, V)
show_pic_hor([img_cut])

Reference projects:

(1) DensePose: https://github.com/facebookresearch/DensePose/blob/main/notebooks/DensePose-COCO-on-SMPL.ipynb

(2) Detectron2: https://github.com/facebookresearch/detectron2

(3) DensePose IUV-XYZ: https://github.com/linjunyu/Detectron2-Densepose-IUV2XYZ