天池小布助手对话短文本语义匹配-文本二分类实践(pytorch)

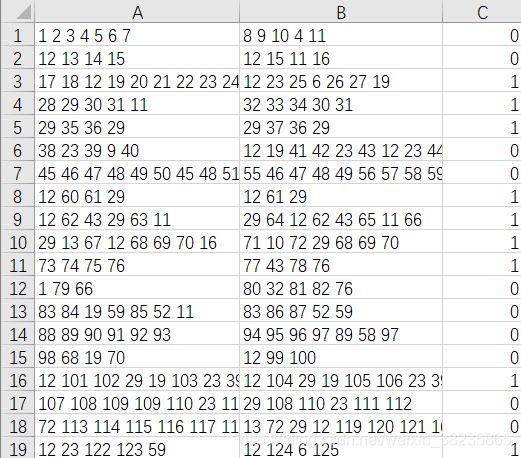

目标:对句子二分类,检测两个句子是否表达是同一个意思,模型数据来自天池全球人工智能技术创新大赛【赛道三】详情

模型:textcnn,lstm,lstm+attention最后选择用最后一种方法

步骤:

1.如果想利用词向量模型训练的结果做embeeding,则准备有标签语料,利用无标签的语料训练字向量模型,得到字向量权重矩阵和字典,向量训练过程可参考词向量模型训练实践,该数据已经脱敏了,拿不到字典,暂时不做词向量训练

加载训练好的字向量模型

model = word2vec.word2VecKeyedVectors.load_word2vec_format('字向量.vector',binary=False)

words_list = list(model.wv.vacab.keys)

word2idx_dic = {word:index for index,word in enumerate(words_list)}

embedding_matrix = model.wv.vectors

2.处理数据,遍历语料中每个句子的每一个字,如果该字不在字典中,设置为[UNK]对应的索引,在字典中,设置为字典的索引,长度不够N(此处设置为512)的句子,在句子后面用[PAD]对应的索引补全,这样处理后的每个句子长度都一样。

class Token(object):

def __init__(self, vocab_file_path, max_len=512):

self.vocab_file_path = vocab_file_path

self.max_len = max_len

self.word2id, self.id2word = self._load_vovab_file() # 得到词典

# 进行参数验证

if self.max_len > 512: # 表示超过了bert限定长度

raise Exception(print('设置序列最大长度超过bert限制长度,建议设置max_len<=510'))

# 加载词表生成word2id和id2word列表

def _load_vovab_file(self):

with open(self.vocab_file_path, 'r', encoding='utf-8') as fp:

vocab_list = [i.replace('\n', '') for i in fp.readlines()]

word2id = {}

id2word = {}

for index, i in enumerate(vocab_list):

word2id[i] = index

id2word[index] = i

return word2id, id2word

# 定义数据编码encode并生成pytorch所需的数据格式

def encode_str(self, txt_list: list):

# 针对所有的输入数据进行编码

return_txt_id_list = []

for txt in txt_list:

inner_str = txt

inner_str_list = list(inner_str)

# 开始构建各种索引

inner_seq_list = []

inner_seq_list.append('[CLS]')#句子开始标记

for char in inner_str_list:

char_index = self.word2id.get(char, False)

if char_index == False: # 表示该字符串不认识

inner_seq_list.append('[UNK]')

else:

inner_seq_list.append(char)

inner_seq_list.append('[SEP]') # 跟上结尾token

inner_seq_list.append('[PAD]') # 跟上PAD

return_txt_id_list.append(inner_seq_list)

return return_txt_id_list

3.编写模型,评价函数,加载数据等代码

需要加载的包:

import sklearn.metrics as metrics

import time

import torch

import torch.autograd as autograd

import torch.nn as nn

import torch.optim as optim

import torch.utils.data as data

import torch.nn.functional as F

import argparse

import pickle

from torch.utils.data import Dataset, DataLoader

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

import matplotlib as mpl

模型:

class LSTM_model(nn.Module):

def __init__(self, config):

super(LSTM_model, self).__init__()

self.config = config

hidden_size = config.word_embedding_dimension

self.rnn = nn.LSTM(batch_first=True,bidirectional=True,input_size=hidden_size,hidden_size=hidden_size,num_layers=2)

self.f1 = nn.Sequential(nn.Linear(hidden_size*2,100),nn.Dropout(0.8),nn.ReLU())

self.f2 = nn.Sequential(nn.Linear(100,2),nn.Softmax())

def forward(self, x):

x1,_ = self.rnn(x)#[256, 61, 100]

x2 = x1[:,-1,:] #torch.Size([256, 200])

x3 = self.f1(x2) #torch.Size([256, 100])

x4 = self.f2(x3) #torch.Size([100, 2])

return x4

模型配置,此处使用类,实际发现创建字典更简洁

# 参数 类/字典

class Config(object):

def __init__(self,

word_embedding_dimension=100, #向量维度

word_num=20000, #字典数量

epoch=5,

sentence_max_size=61, # 句子长度

cuda=False,

label_num=2,#标签个数

learning_rate=0.03,

batch_size=256,

out_channel=200

):

self.word_embedding_dimension = word_embedding_dimension # 词向量的维度

self.word_num = word_num

self.epoch = epoch # 遍历样本次数

self.sentence_max_size = sentence_max_size # 句子长度

self.label_num = label_num # 分类标签个数

self.lr = learning_rate

self.batch_size = batch_size

self.out_channel = out_channel

self.cuda = cuda

#创建对象

config = Config()

评价函数,如果是多分类

def eval_score(preds_prob, label):

row_index,col_index = np.where(preds_prob==np.max(preds_prob,axis=1).reshape(-1,1))

total_precision= metrics.precision_score(col_index,label)

total_recall= metrics.recall_score(col_index,label)

total_f1= metrics.f1_score(col_index,label)

total_accuracy = metrics.accuracy_score(col_index, label)

return (total_precision, total_recall, total_f1, total_accuracy), ['精准度', '召回率', 'f1值', '正确率']

加载数据并处理为批量大小

#dataset

class TextDataset(data.Dataset):

def __init__(self, data_list, label_list):

self.data = torch.tensor(data_list)

self.label = torch.tensor(label_list)

def __getitem__(self, index):

return (self.data[index], self.label[index])

def __len__(self):

return len(self.data)

def load_data():

path = './oppo_breeno_round1_data/'

df_train = pd.read_table(path + "gaiic_track3_round1_train_20210228.tsv",names=['q1', 'q2', 'label']).fillna("0") # (100000, 3)

df_test = pd.read_table(path + 'gaiic_track3_round1_testB_20210317.tsv',names=['q1', 'q2']).fillna("0") # (25000, 2)

train_num = int(0.7 * df_train.shape[0])

label_list = df_train['label'].values

label_list = [[line] for line in label_list]

train_data_list = (df_train['q1'] + " 19999 " + df_train['q2']).values # ndarray

train_data_list = [line.split(' ') for line in train_data_list] # list

train_data_list = [list(map(int, line)) for line in train_data_list]

test_data_list = (df_test['q1'] + " 19999 " + df_test['q2']).values # ndarray

test_data_list = [line.split(' ') for line in test_data_list] # list

test_data_list = [list(map(int, line)) for line in test_data_list]

sentence_max_size = 61

def add_padding(data_list):

for line_list in data_list:

if len(line_list) <= sentence_max_size:

line_list += [0] * (sentence_max_size - len(line_list))

return data_list

train_data_list = add_padding(train_data_list)

test_data_list = add_padding(test_data_list)

train_set = TextDataset(train_data_list[:train_num], label_list[:train_num])

dev_set = TextDataset(train_data_list[train_num:], label_list[train_num:])

training_iter = DataLoader(dataset=train_set,batch_size=config.batch_size,num_workers=2)

valid_iter = DataLoader(dataset=dev_set,batch_size=config.batch_size,num_workers=2)

return training_iter, valid_iter, test_data_list

训练和验证

def train_model(training_iter, valid_iter,test_data_list):

#model = TextCNN(config)

model = LSTM_model(config)

embeds = nn.Embedding(config.word_num, config.word_embedding_dimension)

if torch.cuda.is_available():

model.cuda()

embeds = embeds.cuda()

criterion = nn.CrossEntropyLoss()

optimizer = optim.SGD(model.parameters(), lr=config.lr)

# Train the model

loss_list = []

for epoch in range(config.epoch): #

start_time = time.time()

loss_sum = 0

count = 1

sum_out = np.array([])

sum_label = np.array([])

model.train() # 防止过拟合

for i, batch_data in enumerate(training_iter):

data = batch_data[0] # torch.Size([256, 61]) #batchsize_250

label = batch_data[1] # torch.Size([256, 1])

if torch.cuda.is_available(): # config.cuda and

data = data.cuda()

label = label.cuda()

input_data = embeds(autograd.Variable(data))

#input_data = input_data.unsqueeze(1) # [256, 202, 100]-->[256, 1, 202, 100]

optimizer.zero_grad()

out = model(input_data) # Expected 4-dimensional input for 4-dimensional weight [1, 1, 3, 100]

loss = criterion(out, label.squeeze()) # out:torch.Size([256, 2]),lable:torch.Size([256, 1])--> torch.Size([256])#autograd.Variable(label.float()))

loss_sum += loss

loss.backward()

optimizer.step()

count = count + 1

model.eval()

for i, batch_data in enumerate(valid_iter):

data = batch_data[0] # torch.Size([256, 60]) #batchsize_250

label = batch_data[1] # torch.Size([256, 1])

if torch.cuda.is_available(): # config.cuda and

data = data.cuda()

label = label.cuda()

input_data = embeds(autograd.Variable(data))

#input_data = input_data.unsqueeze(1) # (256,1,60,100)

optimizer.zero_grad()

out = model(input_data) # Expected 4-dimensional input for 4-dimensional weight [1, 1, 3, 100]

sum_out = out.cpu().detach().numpy() if i == 0 else np.append(sum_out, out.cpu().detach().numpy(), axis=0)

sum_label = label.cpu().detach().numpy() if i == 0 else np.append(sum_label, label.cpu().detach().numpy(),

axis=0)

print("epoch", epoch + 1, 'time:',time.time()-start_time)

print("train The average loss is: %.5f" % (loss_sum / count))

loss_list.append((loss_sum / count).cpu().detach().numpy())

total_res, total_label = eval_score(sum_out, sum_label)

total_precision, total_recall, total_f1, total_acc = total_res

print(f'valid的 precision:{total_precision} recall:{total_recall}, f1:{total_f1} acc: {total_acc}')

print('very_loss_list', loss_list)

4.加载模型并预测结果

input_data = embeds(autograd.Variable(torch.tensor(test_data_list)))

out = model(input_data)

result = out.cpu().detach().numpy()

pd.DataFrame(result).to_csv("result.csv", index=False, header=False)

torch.save(model.state_dict(), './model/params.pkl')

模型效果低于fasttext分类验证集test方法99多%,线上测试75%(可能过拟合)

改进:

- nn.embedding只有放进模型里面才会迭代改变

- 再加上attention算子,

- 评价函数AUC做指标

大概100多轮后,loss平缓,结果为precision:0.7288917597120974 recall:0.6538366029302211, f1:0.6893271664194083 acc: 0.7626666666666667,auc:0.805741948606774,时间原因,没有线上测试,但线上使用auc作为评价指标,近似线上测试80%超过baseline,fastext.

改进后全部代码如下:

import os

import sklearn.metrics as metrics

import time

import torch

import torch.autograd as autograd

import torch.autograd.variable as variable

import torch.nn as nn

import torch.optim as optim

import torch.utils.data as data

import torch.nn.functional as F

import argparse

import pickle

from torch.utils.data import Dataset, DataLoader

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

import matplotlib as mpl

# 参数 类/字典

class Config(object):

def __init__(self,

word_embedding_dimension=100, # 向量维度

word_num=22000, # 字典数量 21961

epoch=25,

sentence_max_size=61, # 句子长度

cuda=False,

label_num=2, # 标签个数

learning_rate=0.1,

batch_size=256,

out_channel=200

):

self.word_embedding_dimension = word_embedding_dimension # 词向量的维度

self.word_num = word_num

self.epoch = epoch # 遍历样本次数

self.sentence_max_size = sentence_max_size # 句子长度

self.label_num = label_num # 分类标签个数

self.lr = learning_rate

self.batch_size = batch_size

self.out_channel = out_channel

self.cuda = cuda

config = Config()

class TextDataset(data.Dataset):

def __init__(self, train_data_list, label_list):

self.data = torch.tensor(train_data_list)

self.label = torch.tensor(label_list)

def __getitem__(self, index):

return (self.data[index], self.label[index])

def __len__(self):

return len(self.data)

class TestTextDataset(data.Dataset):

def __init__(self, test_data_list):

self.data = torch.tensor(test_data_list)

def __getitem__(self, index):

return self.data[index]

def __len__(self):

return len(self.data)

class TextCNN(nn.Module):

def __init__(self, config):

super(TextCNN, self).__init__()

self.config = config

out_channel = config.out_channel

# in_channels=1,out_channels=1, kernel_size=(2,词向量维度大小100)

# 输入为(1个通道),输出为 1张特征图, 卷积核为2x100

self.conv3 = nn.Conv2d(1, out_channel, (2, config.word_embedding_dimension))

self.conv4 = nn.Conv2d(1, out_channel, (3, config.word_embedding_dimension))

self.conv5 = nn.Conv2d(1, out_channel, (4, config.word_embedding_dimension))

# 池化为(n,m)那么输出变为(w/self.config.sentence_max_size-1,h/1)

self.Max3_pool = nn.MaxPool2d((self.config.sentence_max_size - 2 + 1, 1))

self.Max4_pool = nn.MaxPool2d((self.config.sentence_max_size - 3 + 1, 1))

self.Max5_pool = nn.MaxPool2d((self.config.sentence_max_size - 4 + 1, 1))

self.linear1 = nn.Linear(out_channel * 6, config.label_num)

self.dropout = nn.Dropout(p=0.8) # dropout训练 0.6387666666666667

self.softmax = nn.Softmax()

def forward(self, x):

batch = x.shape[0]

# Convolution x:torch.Size([256, 1, 61, 100])

x1 = F.relu(self.conv3(x)) # torch.Size([256, 200, 60, 1])

x2 = F.relu(self.conv4(x)) # torch.Size([256, 200, 59, 1])

x3 = F.relu(self.conv5(x))

# Pooling

x1 = self.Max3_pool(x1) # torch.Size([256, 200, 1, 1])

x2 = self.Max4_pool(x2)

x3 = self.Max5_pool(x3)

# capture and concatenate the features

x = torch.cat((x1, x2, x3), -1) # torch.Size([256, 200, 1, 3])

x = x.view(batch, 1, -1) # torch.Size([256, 1, 600])200*1*3

x = self.dropout(x)

# project the features to the labels

x = self.linear1(x) # torch.Size([256, 1, 600])-->torch.Size([256, 1, 2])

x = x.view(-1, self.config.label_num)

return F.softmax(x, dim=1) # F.softmax(self.softmax(x), dim=1) #

class LSTM_model(nn.Module):

def __init__(self, config):

super(LSTM_model, self).__init__()

self.config = config

embedding_dim = config.word_embedding_dimension

self.embedding = nn.Embedding(config.word_num, config.word_embedding_dimension)

self.rnn = nn.LSTM(batch_first=True, bidirectional=True, input_size=embedding_dim, hidden_size=embedding_dim,

num_layers=2)

self.f1 = nn.Sequential(nn.Linear(embedding_dim * 2, 100), nn.Dropout(0.8), nn.ReLU())

self.f2 = nn.Sequential(nn.Linear(100, 2), nn.Softmax())

def forward(self, x):

x = self.embedding(x)

x1, _ = self.rnn(x) # [256, 61, 100]

x2 = x1[:, -1, :] # torch.Size([256, 200])

x3 = self.f1(x2) # torch.Size([256, 100])

x4 = self.f2(x3) # torch.Size([100, 2])

return x4

class BiLSTM_Attention(nn.Module):

def __init__(self, config):

super(BiLSTM_Attention, self).__init__()

self.embedding_dim = config.word_embedding_dimension

self.n_hidden = config.word_embedding_dimension

self.embedding = nn.Embedding(config.word_num, config.word_embedding_dimension)

self.lstm = nn.LSTM(self.embedding_dim, self.n_hidden, bidirectional=True, batch_first=True)

self.out = nn.Linear(self.n_hidden * 2, 2)

self.softmax = nn.Softmax()

# lstm_output : [batch_size, n_step, n_hidden * num_directions(=2)], F matrix

def attention_net(self, lstm_output, final_state):

hidden = final_state.view(-1, self.n_hidden * 2,

1) # hidden : [batch_size, n_hidden * num_directions(=2), 1(=n_layer)] torch.Size([256, 200, 1])

attn_weights = torch.bmm(lstm_output, hidden).squeeze(2) # attn_weights : [batch_size, n_step]

soft_attn_weights = F.softmax(attn_weights, 1) # torch.Size([256, 61])

# [batch_size, n_hidden * num_directions(=2), n_step] * [batch_size, n_step, 1] = [batch_size, n_hidden * num_directions(=2), 1]

context = torch.bmm(lstm_output.transpose(1, 2), soft_attn_weights.unsqueeze(2)).squeeze(2) # torch.Size([256, 200])

return context, soft_attn_weights.data # .numpy() # context : [batch_size, n_hidden * num_directions(=2)]

def forward(self, X):

input = self.embedding(X) # input : [batch_size, len_seq, embedding_dim]

# input = input.permute(1, 0, 2) # input : [len_seq, batch_size, embedding_dim]

hidden_state = variable(torch.zeros(1 * 2, len(X), self.n_hidden).to('cuda')) # [num_layers(=1) * num_directions(=2), batch_size, n_hidden]

cell_state = variable(torch.zeros(1 * 2, len(X), self.n_hidden).to('cuda')) # [num_layers(=1) * num_directions(=2), batch_size, n_hidden]

# final_hidden_state, final_cell_state : [num_layers(=1) * num_directions(=2), batch_size, n_hidden]

output, (final_hidden_state, final_cell_state) = self.lstm(input, (hidden_state, cell_state)) # torch.Size([256, 61, 100])

# output = output.permute(1, 0, 2) # output : [batch_size, len_seq, n_hidden] torch.Size([256, 61, 200])

attn_output, attention = self.attention_net(output, final_hidden_state)

output = self.out(attn_output)

return F.softmax(output, dim=1) # model , attention : [batch_size, num_classes], attention : [batch_size, n_step]

# 自定义token

class Token(object):

def __init__(self, vocab_file_path, max_len=202):

self.vocab_file_path = vocab_file_path

self.max_len = max_len

self.word2id, self.id2word = self._load_vovab_file() # 得到词典

# 进行参数验证

if self.max_len > 510: # 表示超过了bert限定长度

raise Exception(print('设置序列最大长度超过bert限制长度,建议设置max_len<=510'))

# 加载词表生成word2id和id2word列表

def _load_vovab_file(self):

with open(self.vocab_file_path, 'r', encoding='utf-8') as fp:

vocab_list = [i.replace('\n', '') for i in fp.readlines()]

word2id = {}

id2word = {}

for index, i in enumerate(vocab_list):

word2id[i] = index

id2word[index] = i

return word2id, id2word

# 定义数据编码encode并生成pytorch所需的数据格式

def encode_str(self, txt_list: list):

# 针对所有的输入数据进行编码

return_txt_id_list = []

for txt in txt_list:

inner_str = txt

inner_str_list = list(inner_str)

inner_seq_list = []

for char in inner_str_list:

char_index = self.word2id.get(char, False)

if char_index == False: # 表示该字符串不认识

inner_seq_list.append(self.word2id.get('[UNK]'))

else:

inner_seq_list.append(char_index)

# inner_seq_list.append(self.word2id.get('[SEP]')) # 跟上结尾token

# 执行padding操作

inner_seq_list += [self.word2id.get('[PAD]')] * (self.max_len - len(inner_str_list))

return_txt_id_list.append(inner_seq_list)

return return_txt_id_list # return_data

# 定义解码操作

def decode_str(self, index_list):

return ''.join([self.id2word.get(i) for i in index_list])

def eval_score(preds_prob, label):

row_index, col_index = np.where(preds_prob == np.max(preds_prob, axis=1).reshape(-1, 1))

total_precision = metrics.precision_score(col_index, label)

total_recall = metrics.recall_score(col_index, label)

total_f1 = metrics.f1_score(col_index, label)

total_accuracy = metrics.accuracy_score(col_index, label)

#fpr, tpr, thresholds = metrics.roc_curve(label, np.max(preds_prob, axis=1), pos_label=2)#实际值 预测值

print("-----sklearn:",metrics.roc_auc_score(label, preds_prob[:,1]))

# preds = [0 if row[0] > 0.5 else 1 for row in preds_prob]#ndarray

# pd_data = pd.concat([pd.DataFrame(preds, columns=['预测值']), pd.DataFrame(label, columns=['真实值'])], axis=1)

# 计算总体的召回率 精准度 f1值

# loc index,Single label,List of labels.

# total_TP = len(pd_data.loc[(pd_data.真实值 == 1) & (pd_data.预测值 == 1)]) # 真正例

# total_TN = len(pd_data.loc[(pd_data.真实值 == 0) & (pd_data.预测值 == 0)]) # 真反例

# total_FN = len(pd_data.loc[(pd_data.真实值 == 1) & (pd_data.预测值 == 0)]) # 假反例

# total_FP = len(pd_data.loc[(pd_data.真实值 == 0) & (pd_data.预测值 == 1)]) # 假正例

# # if total_TP + total_TP == 0: # 分母可能为0修改错误

# if total_TP + total_FP == 0: # 分母可能为0

# total_precision = 0

# else:

# total_precision = total_TP / (total_TP + total_FP)

# if total_TP + total_FN == 0:

# total_recall = 0

# else:

# total_recall = total_TP / (total_TP + total_FN)

# if total_recall + total_precision == 0:

# total_f1 = 0

# else:

# total_f1 = total_recall * total_precision / 2*(total_recall + total_precision)

# # 计算正确率

# acc = len(pd_data.loc[pd_data.真实值 == pd_data.预测值]) / len(pd_data)

return (total_precision, total_recall, total_f1, total_accuracy), ['精准度', '召回率', 'f1值', '正确率']

def load_data():

path = './oppo_breeno_round1_data/'

df_train = pd.read_table(path + "gaiic_track3_round1_train_20210228.tsv", names=['q1', 'q2', 'label']).fillna(

"0") # (100000, 3)

df_test = pd.read_table(path + 'gaiic_track3_round1_testB_20210317.tsv', names=['q1', 'q2']).fillna(

"0") # (25000, 2)

train_num = int(0.7 * df_train.shape[0])

label_list = df_train['label'].values

label_list = [[line] for line in label_list]

train_data_list = (df_train['q1'] + " 19999 " + df_train['q2']).values # ndarray

train_data_list = [line.split(' ') for line in train_data_list] # list

train_data_list = [list(map(int, line)) for line in train_data_list]

test_data_list = (df_test['q1'] + " 19999 " + df_test['q2']).values # ndarray

test_data_list = [line.split(' ') for line in test_data_list] # list

test_data_list = [list(map(int, line)) for line in test_data_list]

sentence_max_size = 61

def add_padding(data_list):

for line_list in data_list:

if len(line_list) <= sentence_max_size:

line_list += [0] * (sentence_max_size - len(line_list))

return data_list

train_data_list = add_padding(train_data_list)

test_data_list = add_padding(test_data_list)

train_set = TextDataset(train_data_list[:train_num], label_list[:train_num])

dev_set = TextDataset(train_data_list[train_num:], label_list[train_num:])

test_set = TestTextDataset(test_data_list)

training_iter = DataLoader(dataset=train_set, batch_size=config.batch_size, num_workers=2)

valid_iter = DataLoader(dataset=dev_set, batch_size=config.batch_size, num_workers=2)

test_iter = DataLoader(dataset=test_set, batch_size=config.batch_size, num_workers=2)

return training_iter, valid_iter, test_iter

def draw_loss(y):

plt.rcParams['font.sans-serif'] = ['SimHei']

x = np.arange(0, len(y))

plt.xlabel('iters')

plt.ylabel('loss')

plt.title('gensim_字向量训练loss曲线')

plt.plot(x, y, color='red')

plt.savefig('loss曲线.png', dpi=120)

plt.show()

def train_model(training_iter, valid_iter, test_iter):

# model = TextCNN(config)

# model = LSTM_model(config)

model = BiLSTM_Attention(config)

# embeds = nn.Embedding(config.word_num, config.word_embedding_dimension)

if torch.cuda.is_available():

model.cuda()

# embeds = embeds.cuda()

criterion = nn.CrossEntropyLoss()

optimizer = optim.SGD(model.parameters(), lr=config.lr)

# Train the model

loss_list = []

for epoch in range(config.epoch): #

start_time = time.time()

loss_sum = 0

count = 1

sum_out = np.array([])

sum_label = np.array([])

model.train() # 防止过拟合

for i, batch_data in enumerate(training_iter):

data = batch_data[0] # torch.Size([256, 61]) #batchsize_250

label = batch_data[1] # torch.Size([256, 1])

if torch.cuda.is_available(): # config.cuda and

data = data.cuda()

label = label.cuda()

input_data = autograd.Variable(data)

# input_data = input_data.unsqueeze(1) # [256, 202, 100]-->[256, 1, 202, 100]

optimizer.zero_grad()

out = model(input_data) # Expected 4-dimensional input for 4-dimensional weight [1, 1, 3, 100]

loss = criterion(out,

label.squeeze()) # out:torch.Size([256, 2]),lable:torch.Size([256, 1])--> torch.Size([256])#autograd.Variable(label.float()))

loss_sum += loss

loss.backward()

optimizer.step()

count = count + 1

model.eval()

for i, batch_data in enumerate(valid_iter):

data = batch_data[0] # torch.Size([256, 60]) #batchsize_250

label = batch_data[1] # torch.Size([256, 1])

if torch.cuda.is_available(): # config.cuda and

data = data.cuda()

label = label.cuda()

input_data = autograd.Variable(data)

# input_data = embeds(autograd.Variable(data))

# input_data = input_data.unsqueeze(1) # (256,1,60,100)

optimizer.zero_grad()

out = model(input_data) # Expected 4-dimensional input for 4-dimensional weight [1, 1, 3, 100]

sum_out = out.cpu().detach().numpy() if i == 0 else np.append(sum_out, out.cpu().detach().numpy(), axis=0)

sum_label = label.cpu().detach().numpy() if i == 0 else np.append(sum_label, label.cpu().detach().numpy(),

axis=0)

print("epoch", epoch + 1, 'time:', time.time() - start_time)

print("train The average loss is: %.5f" % (loss_sum / count))

loss_list.append((loss_sum / count).cpu().detach().numpy())

total_res, total_label = eval_score(sum_out, sum_label)

total_precision, total_recall, total_f1, total_acc = total_res

print(f'valid的 precision:{total_precision} recall:{total_recall}, f1:{total_f1} acc: {total_acc}')

print('every_loss_list', loss_list)

draw_loss(loss_list)

torch.save(model.state_dict(), 'params.pkl')

# # torch.save(model, './model/model.pkl')

# print('Model saved successfully')

torch.save(model.state_dict(), 'params.pkl')

# # torch.save(model, './model/model.pkl')

# print('Model saved successfully')

def predict(test_iter, model):

if torch.cuda.is_available():

model.cuda()

# 预测

result = np.array([0, 0])

for i, data in enumerate(test_iter):

if torch.cuda.is_available(): # config.cuda and

data = data.cuda()

input_data = data

out, attention = model(input_data) # device='cuda:0', grad_fn=)

# result.append(out.cpu().detach().numpy()) #'tuple' object has no attribute 'numpy' #把gpu的模型转到cpu来.cpu()

result = np.vstack((result, out.cpu().detach().numpy()))

pd.DataFrame(result[1:, :]).to_csv("./prediction_result/result.csv", index=False, header=False)

def train_model2(model,training_iter, valid_iter):

if torch.cuda.is_available():

model.cuda()

# embeds = embeds.cuda()

criterion = nn.CrossEntropyLoss()

optimizer = optim.SGD(model.parameters(), lr=config.lr)

# Train the model

loss_list = []

for epoch in range(config.epoch): #

start_time = time.time()

loss_sum = 0

count = 1

sum_out = np.array([])

sum_label = np.array([])

model.train() # 防止过拟合

for i, batch_data in enumerate(training_iter):

data = batch_data[0] # torch.Size([256, 61]) #batchsize_250

label = batch_data[1] # torch.Size([256, 1])

if torch.cuda.is_available(): # config.cuda and

data = data.cuda()

label = label.cuda()

input_data = autograd.Variable(data)

# input_data = input_data.unsqueeze(1) # [256, 202, 100]-->[256, 1, 202, 100]

optimizer.zero_grad()

out = model(input_data) # Expected 4-dimensional input for 4-dimensional weight [1, 1, 3, 100]

loss = criterion(out,

label.squeeze()) # out:torch.Size([256, 2]),lable:torch.Size([256, 1])--> torch.Size([256])#autograd.Variable(label.float()))

loss_sum += loss

loss.backward()

optimizer.step()

count = count + 1

model.eval()

for i, batch_data in enumerate(valid_iter):

data = batch_data[0] # torch.Size([256, 60]) #batchsize_250

label = batch_data[1] # torch.Size([256, 1])

if torch.cuda.is_available(): # config.cuda and

data = data.cuda()

label = label.cuda()

input_data = autograd.Variable(data)

# input_data = embeds(autograd.Variable(data))

# input_data = input_data.unsqueeze(1) # (256,1,60,100)

optimizer.zero_grad()

out = model(input_data) # Expected 4-dimensional input for 4-dimensional weight [1, 1, 3, 100]

sum_out = out.cpu().detach().numpy() if i == 0 else np.append(sum_out, out.cpu().detach().numpy(),

axis=0)

sum_label = label.cpu().detach().numpy() if i == 0 else np.append(sum_label,

label.cpu().detach().numpy(),

axis=0)

print("epoch", epoch + 1, 'time:', time.time() - start_time)

print("train The average loss is: %.5f" % (loss_sum / count))

loss_list.append((loss_sum / count).cpu().detach().numpy())

total_res, total_label = eval_score(sum_out, sum_label)

total_precision, total_recall, total_f1, total_acc = total_res

print(f'valid的 precision:{total_precision} recall:{total_recall}, f1:{total_f1} acc: {total_acc}')

print('every_loss_list', loss_list)

draw_loss(loss_list)

torch.save(model.state_dict(), 'params.pkl')

# # torch.save(model, './model/model.pkl')

print('Model saved successfully')

if __name__ == '__main__':

training_iter, valid_iter, test_iter = load_data() # 加载数据 该数据已经脱敏过了,类似句子1:1 23 34 454 232 句子二:1 34 43 11 标签: 0

model_path = 'params.pkl'

if os.path.exists(model_path)==False:

train_model(training_iter, valid_iter, test_iter)

else:

# 加载

model = BiLSTM_Attention(config)

model.load_state_dict(torch.load(model_path)) # 使用反序列化的 state_dict 加载模型的参数字典。

train_model2(model, training_iter, valid_iter)

# predict(test_iter, model)