2020 Domain Adaptation 最新论文:插图速览(二)

2020 Domain Adaptation 最新论文:插图速览(二)

目录

In-Domain GAN Inversion for Real Image Editing

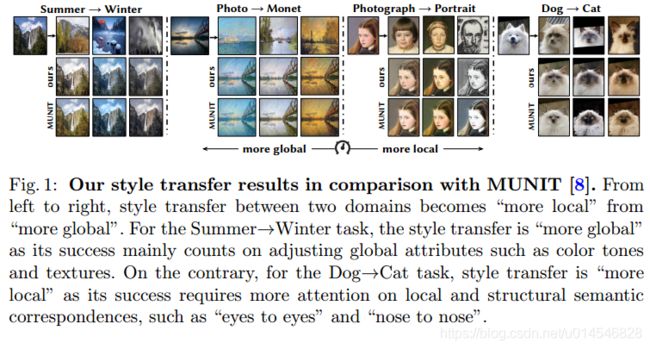

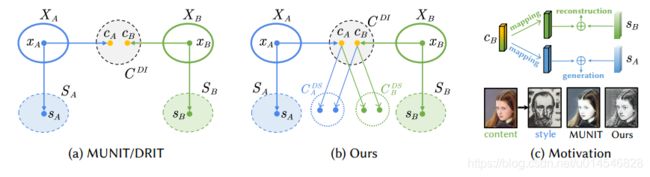

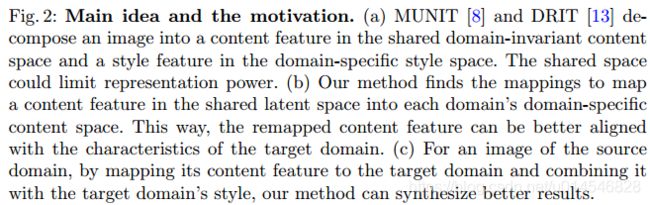

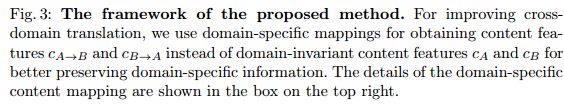

Domain-Specific Mappings for Generative Adversarial Style Transfer

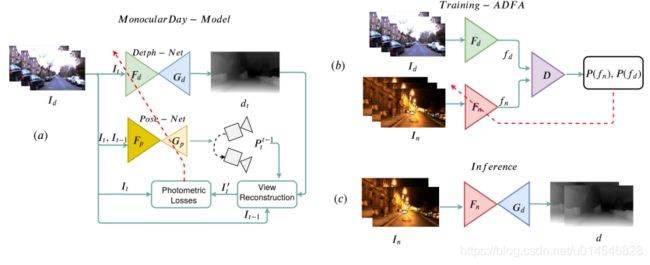

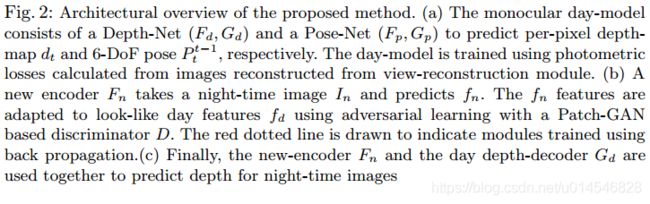

Unsupervised Monocular Depth Estimation for Night-time Images using Adversarial Domain Feature Adaptation

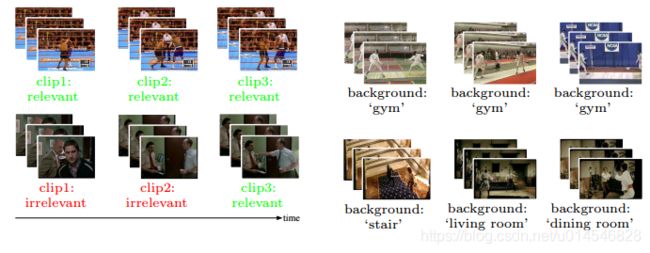

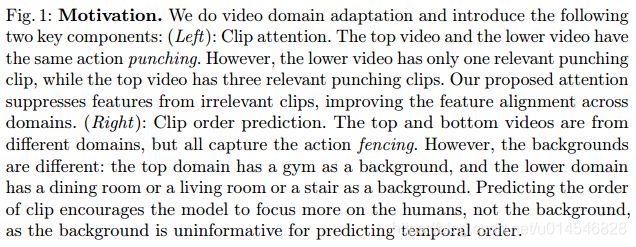

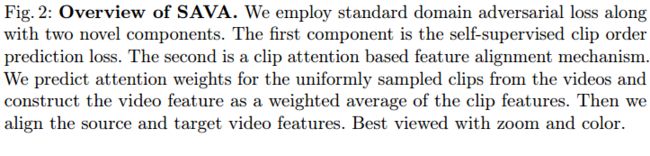

Shuffle and Attend: Video Domain Adaptation

Prior-based Domain Adaptive Object Detection for Hazy and Rainy Conditions

Self-Supervised CycleGAN for Object-Preserving Image-to-Image Domain Adaptation

Domain Adaptive Image-to-image Translation

Cross-domain Correspondence Learning for Exemplar-based Image Translation

One-Shot Domain Adaptation For Face Generation

Multi-Domain Learning for Accurate and Few-Shot Color Constancy

Unsupervised Adaptation Learning for Hyperspectral Imagery Super-resolution

DoveNet: Deep Image Harmonization via Domain Verification

Gradually Vanishing Bridge for Adversarial Domain Adaptation

Adversarial Learning for Zero-shot Domain Adaptation

Mind the Discriminability: Asymmetric Adversarial Domain Adaptation

Instance Adaptive Self-Training for Unsupervised Domain Adaptation

Cross-Domain Document Object Detection: Benchmark Suite and Method

Domain Balancing: Face Recognition on Long-Tailed Domains

Generalizing Hand Segmentation in Egocentric Videos with Uncertainty-Guided Model Adaptation

Exploring Categorical Regularization for Domain Adaptive Object Detection

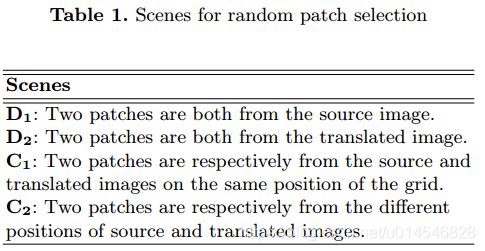

[2020 ECCV]

In-Domain GAN Inversion for Real Image Editing

[paper]

![]()

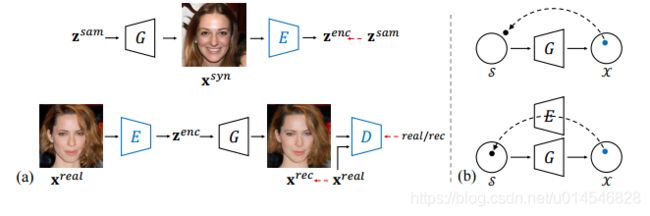

Fig. 1. Real image editing using the proposed in-domain GAN inversion with a fixed GAN generator. (a) Semantic manipulation with respect to various facial attributes. (b) Image interpolation by linearly interpolating two inverted codes. (c) Semantic diffusion which diffuses the target face to the context and makes them compatible.

Fig. 4. Qualitative comparison on image reconstruction with different GAN inversion methods. (a) Input image. (b) Conventional encoder [36]. (c) Image2StyleGAN [29]. (d) Our proposed domain-guided encoder. (e) Our proposed in-domain inversion.

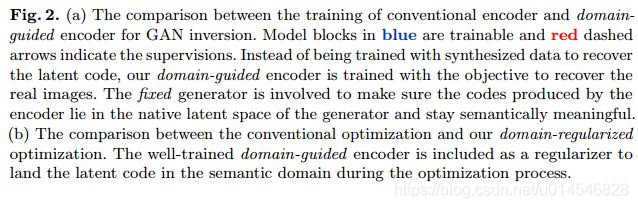

Fig. 5. Qualitative comparison on image interpolation between Image2StyleGAN [29] (odd rows) and our in-domain inversion (even rows).

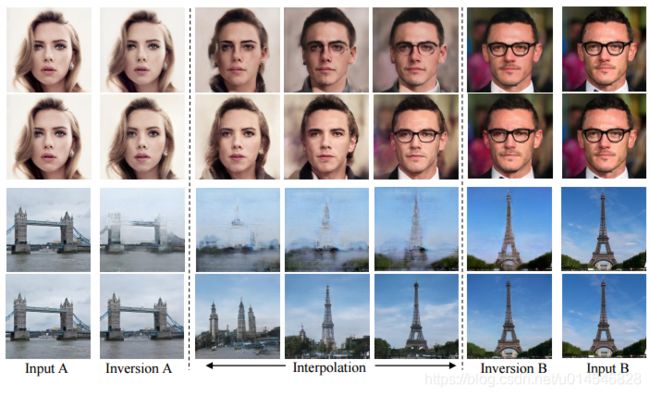

Fig. 6. Comparison of Image2StyleGAN [29] (top row) and our in-domain inversion (bottom row) on facial attribute manipulation.

Domain-Specific Mappings for Generative Adversarial Style Transfer

[paper]

Unsupervised Monocular Depth Estimation for Night-time Images using Adversarial Domain Feature Adaptation

[paper]

Fig. 1: The depth predictions of the proposed method on Oxford Night driving images. Top to bottom: (a) Input RGB night-time image. (b) Corresponding ground truth depth map generated from the LIDAR points. (c) The depth predictions using the proposed method

Fig. 3: A qualitative comparison of predicted depth-maps with different experiments. The first column shows the night-time images which are provided as input to different networks. The second column shows the output depth images obtained using photometric losses. As one can observe, these methods fail to maintain the structural layout of the scene. The third column shows the output of an image-translation network (CycleGAN) which are then applied to a day-depth estimation network to obtain depth-maps as shown in the fourth column. These are slightly better compared to the previous case but it introduces several artifacts which degrade the depth estimation in several cases. The last column shows the predictions using the proposed ADFA approach. As one can see, the proposed method provides better predictions compared to these methods and is capable of preserving structural attributes of the scene to a greater extent

Shuffle and Attend: Video Domain Adaptation

[paper]

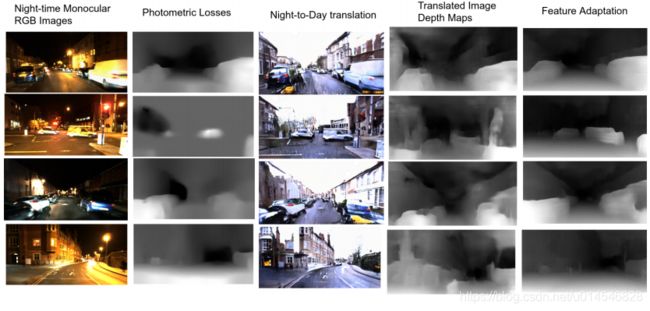

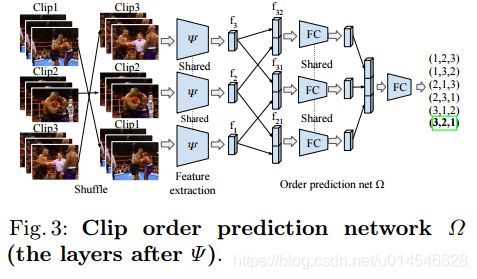

Prior-based Domain Adaptive Object Detection for Hazy and Rainy Conditions

[paper]

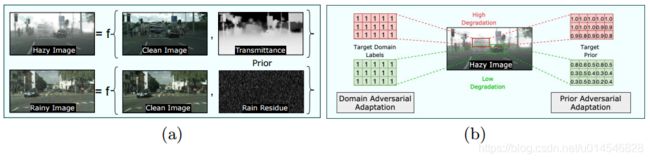

Fig. 1. (a) Weather conditions can be modeled as function of clean image and the weather-specific prior, which we use to define a novel prior-adversarial loss. (b) Existing adaptation approaches use constant domain label for the entire. Our method uses spatially-varying priors that are directly correlated to the amount of degradations.

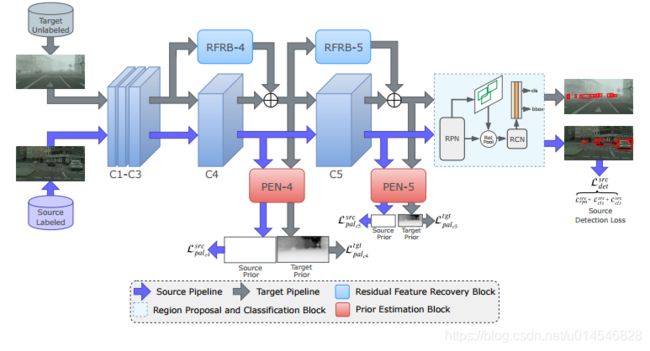

Fig. 2. Overview of the proposed adaptation method. We use prior adversarial loss to supervise the domain discriminators. For the source pipeline, additional supervision is provided by detection loss. For target pipeline, feed-forward through the detection network is modified by the residual feature recovery blocks.

Partially-Shared Variational Auto-encoders for Unsupervised Domain Adaptation with Target Shift

[paper]

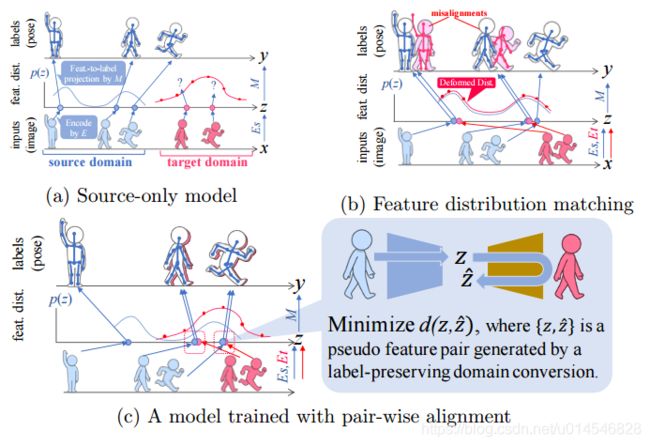

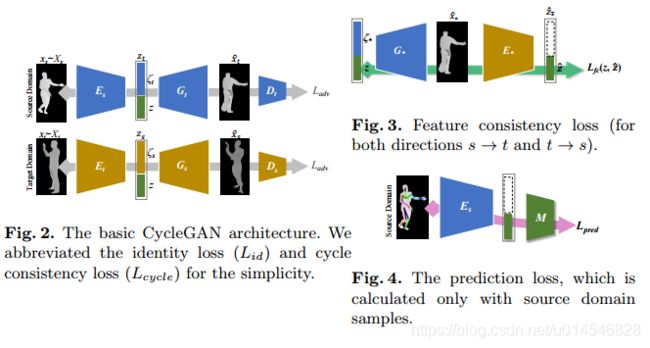

Fig. 1. (best viewed in color) Overview of the proposed approach on the problem of (2D) human pose estimation. Note that the feature-to-label projection M is trained only with source domain dataset, where Es and Et are domain specific image-to-feature encoders. (a) The naive approach fails in the target domain due to the differences in the feature distributions between the two domains: location difference that illustrates the affection by domain shift and shape difference caused by target shift (non-identical label distributions of the two domains). (b) While feature distribution matching attempts to adjust the shape of two feature distributions, it suffers from misalignment in label estimation due to the deformed target domain feature distribution. (c) The proposed method avoid this deformation problem by sample-wise feature distance minimization, where pseudo sample pairs with an identical label are generated via a CycleGAN-based architecture.

![]()

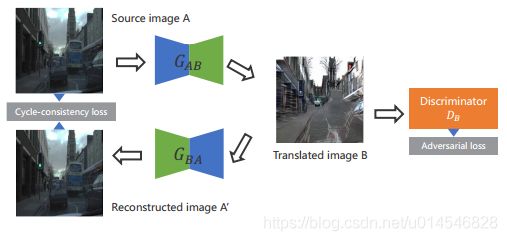

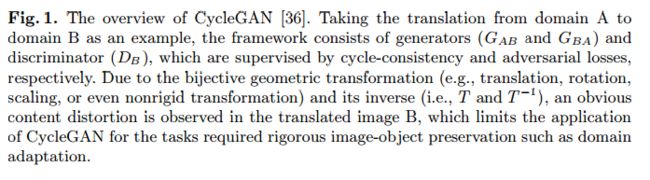

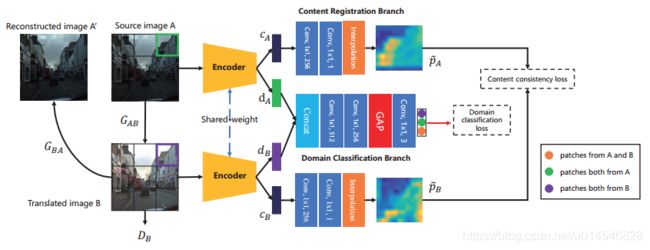

Self-Supervised CycleGAN for Object-Preserving Image-to-Image Domain Adaptation

[paper]

[2020 CVPR]

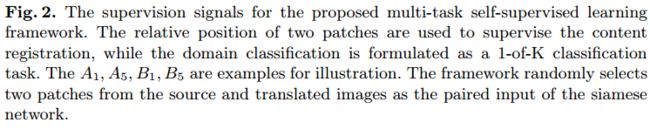

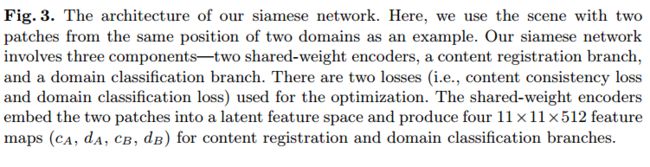

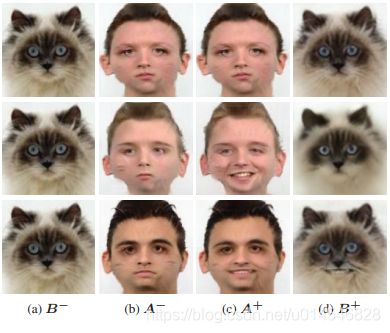

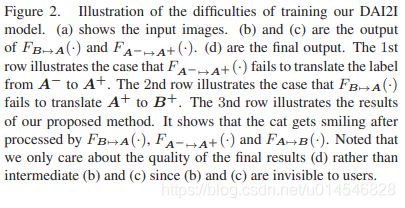

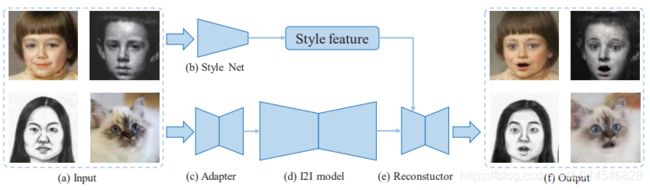

Domain Adaptive Image-to-image Translation

[paper]

Figure 1. Applying a neutral → smile I2I model on human and cat faces. The I2I model is trained on human faces. The 1st and 2nd rows are input and output respectively. (a) Result on a human face. (b) Directly applying the model on a cat face. (c) Our result.

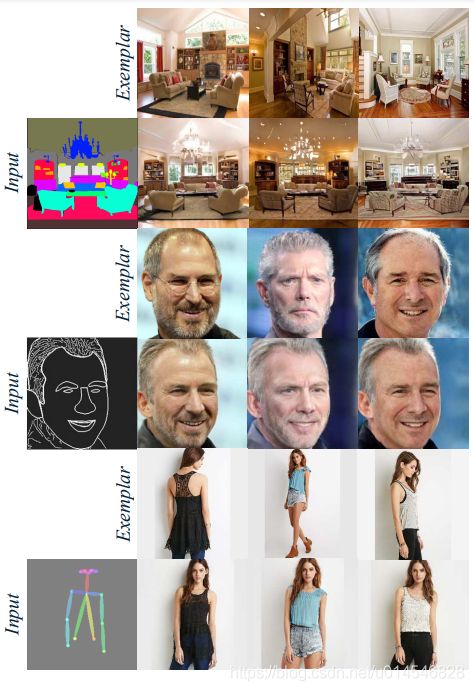

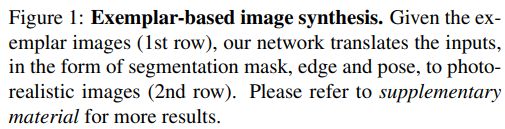

Cross-domain Correspondence Learning for Exemplar-based Image Translation

[paper]

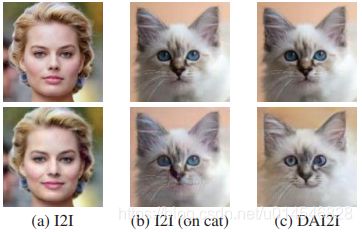

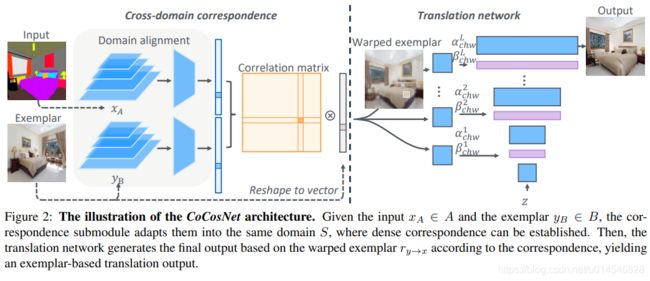

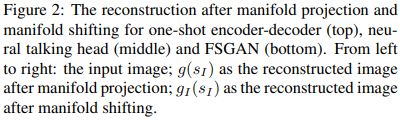

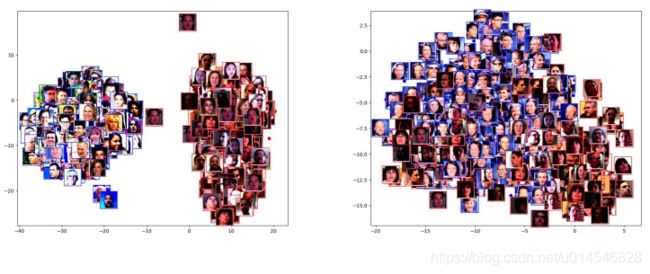

One-Shot Domain Adaptation For Face Generation

[paper]

Figure 3: Visual examples of one-shot DeepFake domain adaptation using StyleGAN. From top to bottom: encoder-decoder, neural talking head and FSGAN.

Figure 4: t-SNE embedding visualizations. Left: Embeddings of original StyleGAN generated images and encoderdecoder Deepfake images. Right: Embeddings of one-shot domain-adapted StyleGAN generated images and Deepfake images.

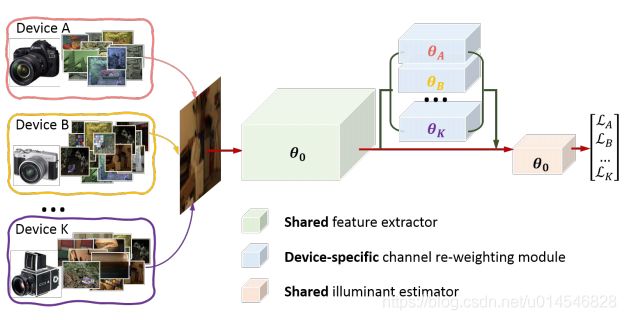

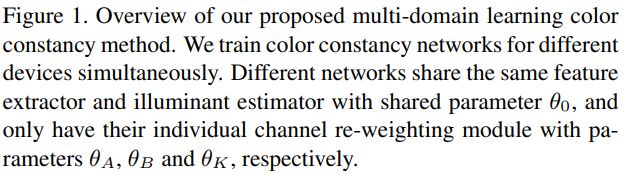

Multi-Domain Learning for Accurate and Few-Shot Color Constancy

[paper]

![]()

Figure 2. The proposed multi-domain color constancy network architecture. We used shared layers among multiple devices for feature extraction. A camera-specific channel re-weighting module was then used to adapt to each device. The illuminant estimation stage finally predicted the scene illuminant.

Unsupervised Adaptation Learning for Hyperspectral Imagery Super-resolution

[paper]

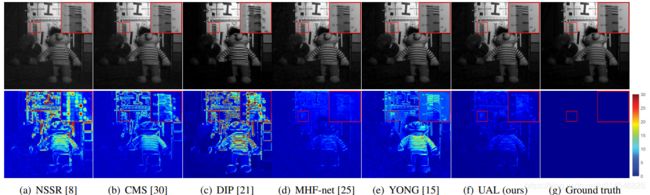

Figure 1. Visual SR results and reconstruction error maps on an image from the CAVE dataset [28] with unknown degeneration (e.g., no-Gaussian blur and random noise) when SR scale s is 8. Most existing methods shows obvious artifacts and reconstruction error.

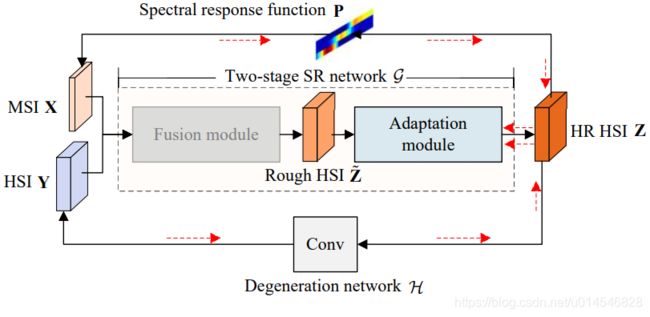

(a) The proposed UAL framework

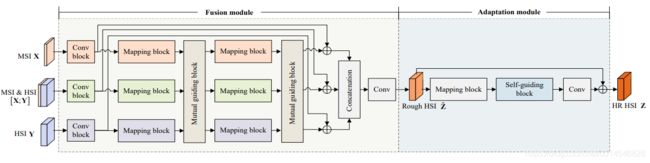

(b) The proposed two-stage SR network

Figure 2. Flow chart of the proposed UAL and the proposed two-stage SR network. In figure (a), red arrows indicate the back-propagated gradient in unsupervised learning, and we denote the fusion module in gray to indicate that its weights are fixed in unsupervised learning.

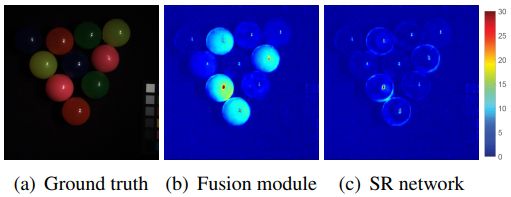

Figure 3. Reconstruction error maps generated by the pre-trained fusion module and the proposed two-stage SR network.

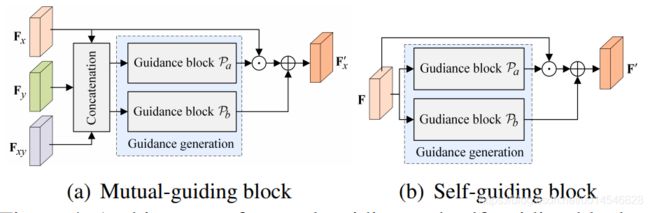

Figure 4. Architecture of mutual-guiding and self-guiding blocks.

DoveNet: Deep Image Harmonization via Domain Verification

[paper]

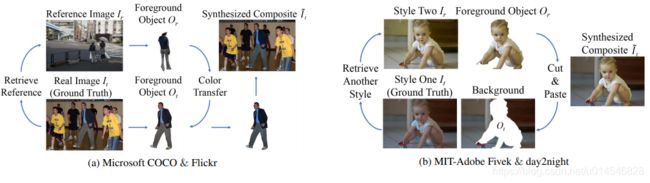

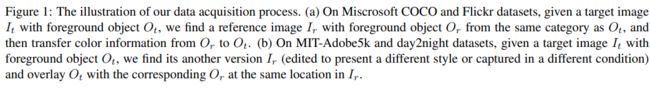

Figure 2: Illustration of DoveNet architecture, which consists of (a) attention enhanced U-Net generator, (b) global discriminator, and (c) our proposed domain verification discriminator.

Gradually Vanishing Bridge for Adversarial Domain Adaptation

[paper]

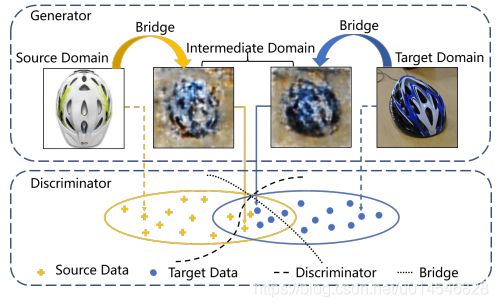

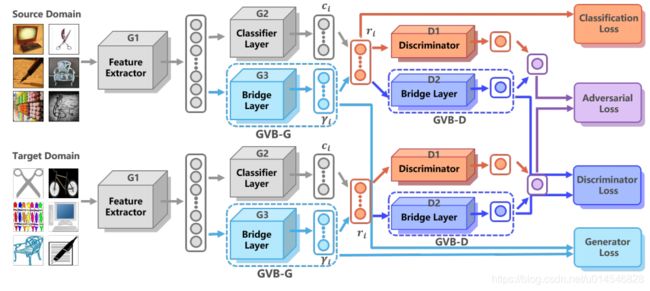

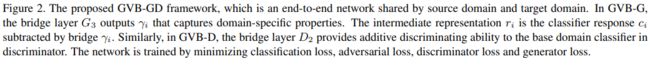

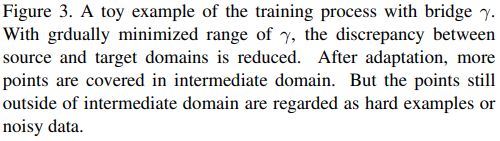

Figure 1. Illustration of the bridge for adversarial domain adaptation. On the generator, the bridge models domain-specific representations and connects either source or target domain to intermediate domain. On the discriminator, the bridge balances the adversarial game by providing additive adjustable discriminating ability. In these processes, the method to construct bridge is the key issue in our study.

[2020 ECCV]

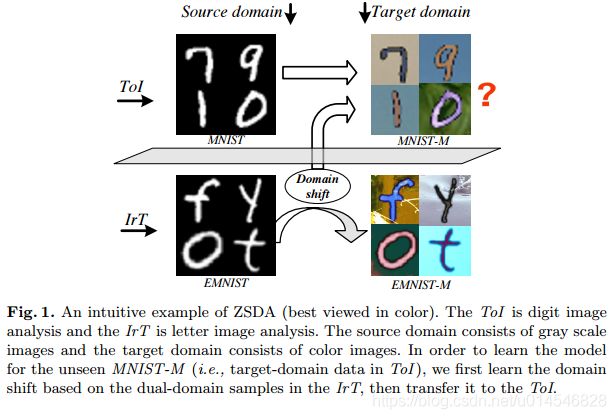

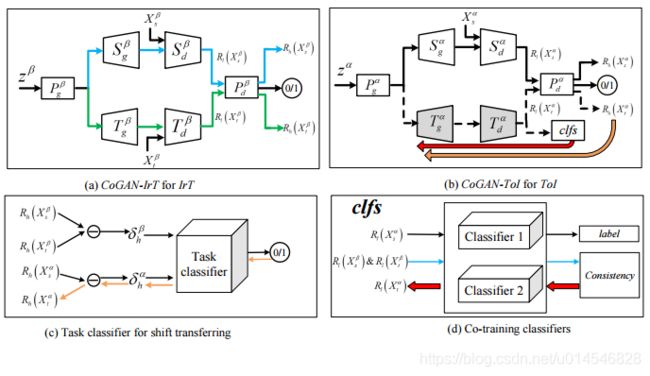

Adversarial Learning for Zero-shot Domain Adaptation

[paper]

Mind the Discriminability: Asymmetric Adversarial Domain Adaptation

[paper]

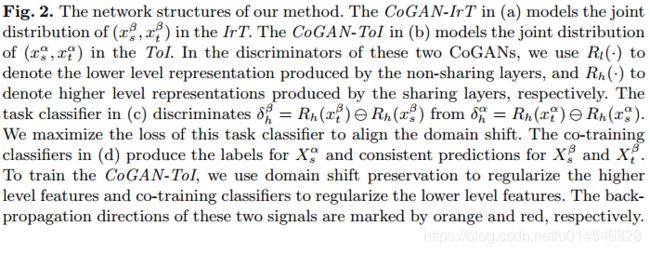

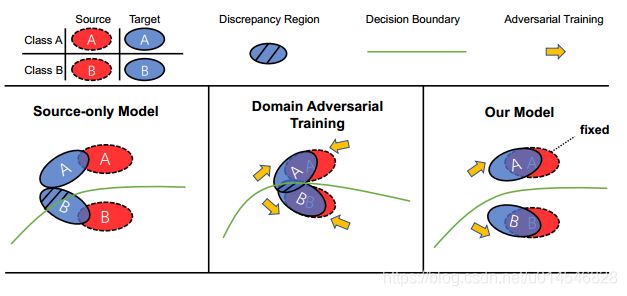

Fig. 1: Comparison between previous Domain Adversarial Training (DAT) method and ours. Left: The discrepancy region is large before adaptation. Middle: DAT based on a domain classifier aligns two domains together and pushes them as close as possible, which hurts the feature discriminability in the target domain. Right: AADA fixes the source domain that is regarded as the lowenergy space, and pushes the target domain to approach the source one, which makes use of the well-trained classifier in the labeled source domain.

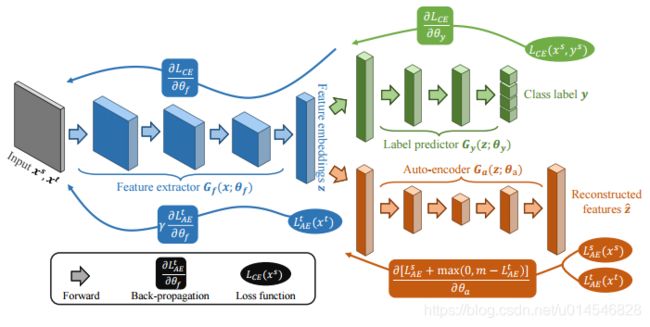

Fig. 2: AADA model constitutes a shared feature extract Gf , a classifier Gy and an autoencoder Ga. Except for supervised learning on the source domain, the autoencoder plays a domain discriminator role that learns to embed the source features and push the target features away, while the feature extractor learns to generate target features that can deceive the autoencoder. Such process is an asymmetric adversarial game that pushes the target domain to the source domain in the feature space.

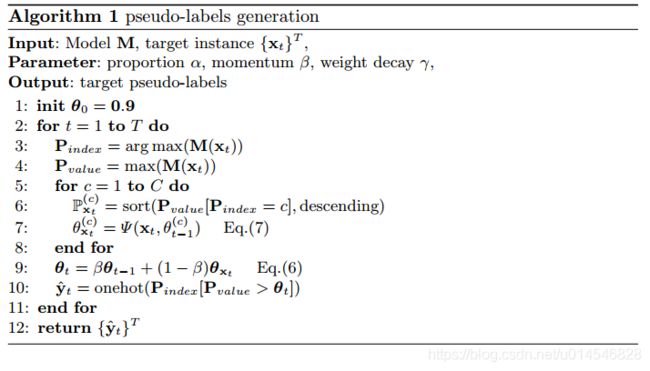

Instance Adaptive Self-Training for Unsupervised Domain Adaptation

[paper]

Fig. 1. Pseudo-label results. Columns correspond to original images with ground truth labels, class-balanced method, and our method

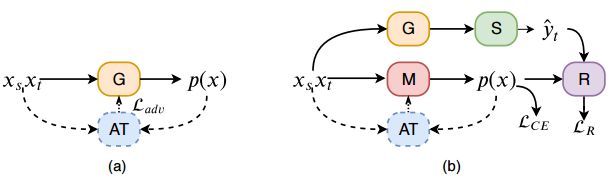

Fig. 2. IAST framework. (a) Warm-up phase, an initial model G is trained using any existing non-self-training method (eg. AT). (b) Self-training phase, the selector S filters the pseudo-labels generated by G, and R is the regularization

Fig. 3. Proposed IAST framework overview

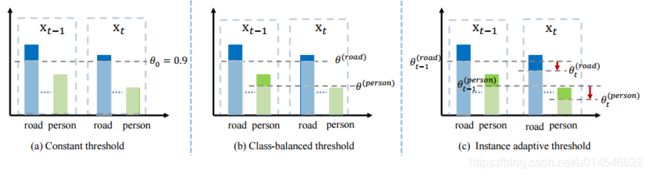

Fig. 4. Illustration of three different thresholding methods. xt−1 and xt represent two consecutive instances, the bars approximately represent the probabilities of each class. (a) A constant threshold is used for all instances. (b) class-balanced thresholds are used for all instances. (c) Our method adaptively adjusts the threshold of each class based on the instance

[2020 CVPR]

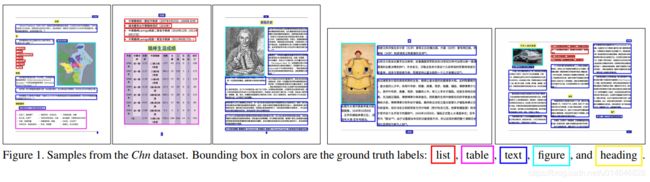

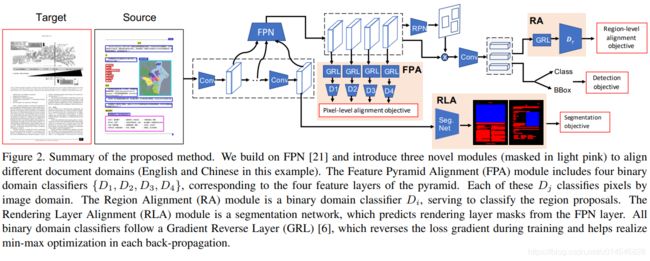

Cross-Domain Document Object Detection: Benchmark Suite and Method

[paper]

![]()

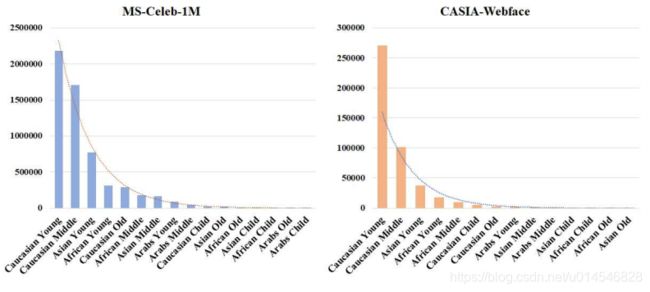

Domain Balancing: Face Recognition on Long-Tailed Domains

[paper]

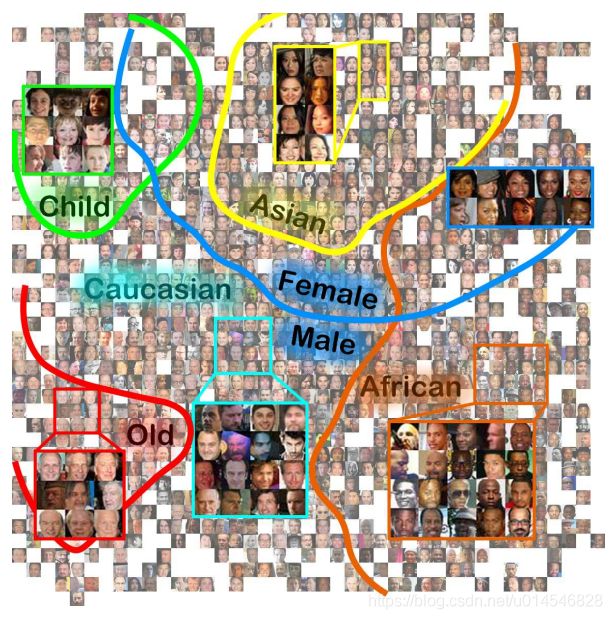

Figure 1. The long-tailed domain distribution demarcated by the mixed attributions (e.g., race and age) in the MS-Celeb-1M [8] and CASIA-Webface [36]. Number of classes per domain falls drastically, and only few domains have abundant classes. (Baidu API [1] is used to estimate the race and age)

Figure 2. The face features are trivially grouped together according to different attributions, visualized by t-SNE [20]

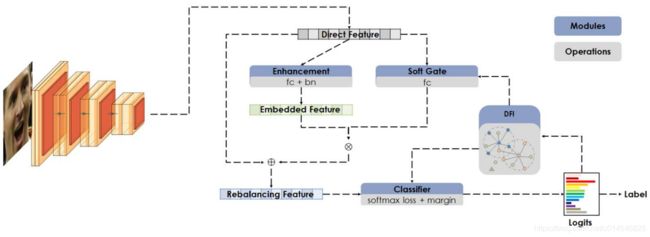

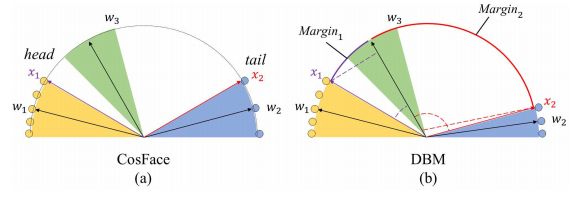

Figure 3. There are three main modules: DFI, RBM and DBM. The DFI indicates the local distances within a local region. The RBM harmonizes the representation ability in the network architecture, while the DBM balances the contribution in the loss.

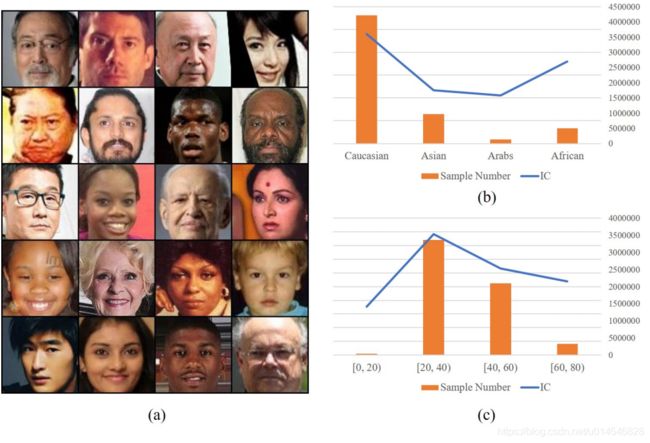

Figure 4. (a) Identities with small inter-class compactness value in the MS-Celeb-1M. (b) The inter-class compactness vs. race distribution. (c) The inter-class compactness vs. age distribution.

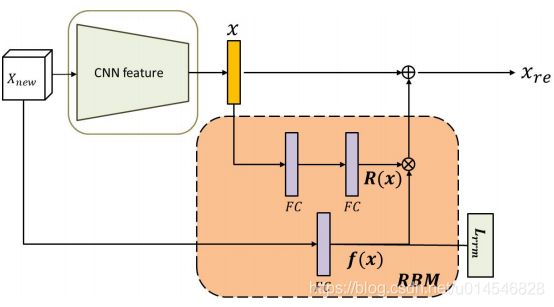

Figure 5. The Residual Balancing Module is designed with lightweighted structure and it can be easily attached to existing network architecture. The block dynamically enhances the feature according to DFI.

Figure 6. Geometrical interpretation of DBM from the feature perspective. Different color areas indicate feature space from distinct classes. Yellow area represents the head-domain class C1 and blue area represents the tailed-domain class C2. (a) CosFace assigns an uniform margin for all the classes. The sparse inter-class distribution in the tail domains makes the decision boundary easy to satisfy. (b) DBM assigns margin according to the inter-class compactness adaptively.

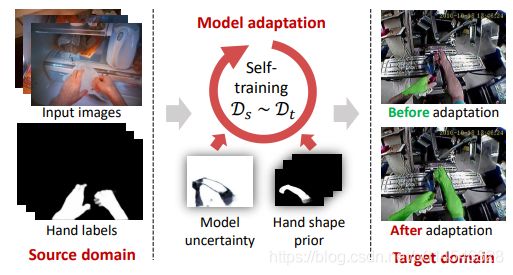

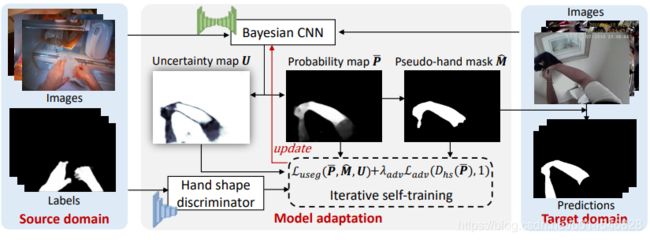

Generalizing Hand Segmentation in Egocentric Videos with Uncertainty-Guided Model Adaptation

[paper]

Figure 1. Illustration of the proposed model adaptation framework for hand segmentation in a new domain.

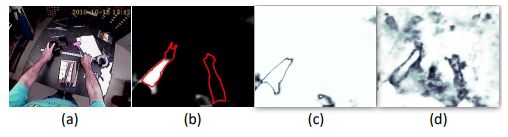

Figure 2. Comparison of different uncertainty maps: (a) input image (b) prediction probability (softmax output) from a standard CNN and ground-truth hand region (in red boundary) (c) uncertainty map obtained based on softmax output (d) uncertainty map obtained with a Bayesian CNN. The darker means less certain.

Figure 3. Overview of the proposed uncertainty-guided model adaptation.

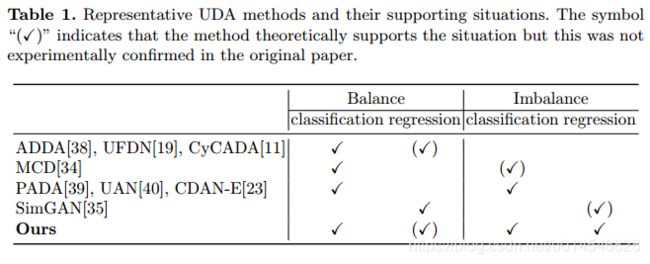

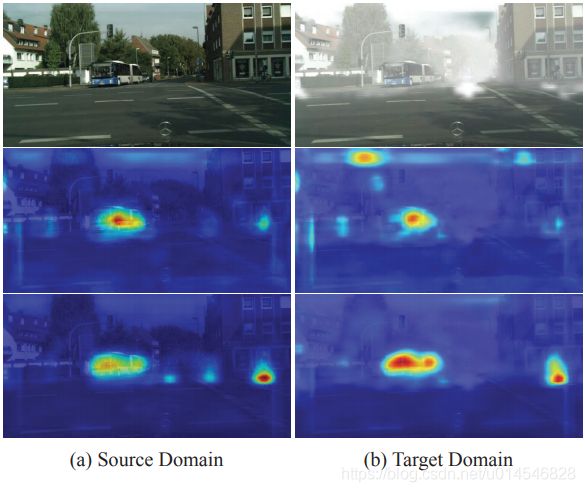

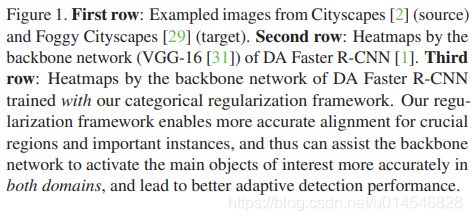

Exploring Categorical Regularization for Domain Adaptive Object Detection

[paper]

Figure 2. Overview of our categorical regularization framework: a plug-and-play component for the Domain Adaptive Faster R-CNN series [1, 28]. Our framework consists of two modules, i.e., image-level categorical regularization (ICR) and categorical consistency regularization (CCR). The ICR module is an image-level multi-label classifier upon the detection backbone, which exploits the weakly localization ability of classification CNNs to obtain crucial regions corresponding to categorical information. The CCR module considers the consistency between the image-level and instance-level predictions as a novel regularization factor, which can be used to automatically hunt for hard aligned instances in the target domain during instance-level alignment.