GluonCV: Training YOLO v3 on Pascal VOC (Part 2): Training

Continued from the previous post, GluonCV: Training YOLO v3 on Pascal VOC (Part 1).

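This tutorial walks through the basic steps of training the YOLOv3 object detection model provided by GluonCV.

Specifically, it shows how to build a state-of-the-art YOLOv3 model by stacking GluonCV components.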

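First, three notes on training:

(1) The default initial learning rate is 0.001. With it my training loss became NaN; lowering it to 0.0001 fixed the problem.

(2) On eight 1080 Ti GPUs the default batch size of 64 works; on four GPUs use 32, and scale proportionally for other GPU counts.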

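(3) If you see the following message:

```text
[07:40:55] src/operator/nn/./cudnn/./cudnn_algoreg-inl.h:97: Running performance tests to find the best convolution algorithm, this can take a while... (setting env variable MXNET_CUDNN_AUTOTUNE_DEFAULT to 0 to disable)
```

it only means cuDNN is benchmarking convolution algorithms; it is not an error. To skip the benchmark, run the following before starting training (note that a shell assignment must not have spaces around `=`):

```shell
export MXNET_CUDNN_AUTOTUNE_DEFAULT=0
```

1. Dataset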

First read the previous tutorial and prepare the Pascal VOC dataset on disk.

Then we load the training and validation images.

```python
import gluoncv as gcv
from gluoncv.data import VOCDetection

# typically we use 2007+2012 trainval splits for training data
train_dataset = VOCDetection(splits=[(2007, 'trainval'), (2012, 'trainval')])
# and use 2007 test as validation data
val_dataset = VOCDetection(splits=[(2007, 'test')])

print('Training images:', len(train_dataset))
print('Validation images:', len(val_dataset))
```

Out:

```text
Training images: 16551
Validation images: 4952
```

2. Data transform

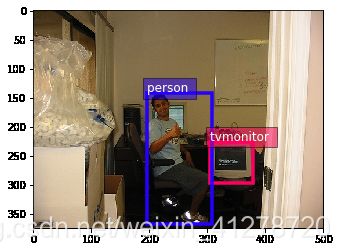

We can read an image-label pair from the training dataset:

```python
train_image, train_label = train_dataset[80]
bboxes = train_label[:, :4]  # columns 0-3: xmin, ymin, xmax, ymax
cids = train_label[:, 4:5]   # column 4: class id
print('image:', train_image.shape)
print('bboxes:', bboxes.shape, 'class ids:', cids.shape)
```

Out:

```text
image: (375, 500, 3)
bboxes: (2, 4) class ids: (2, 1)
```

Plot the image together with its bounding-box labels using matplotlib:

from matplotlib import pyplot as plt

from gluoncv.utils import viz

ax = viz.plot_bbox(train_image.asnumpy(), bboxes, labels=cids, class_names=train_dataset.classes)

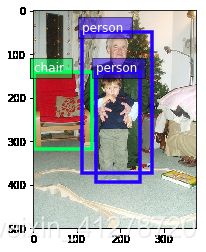

plt.show()验证图像与训练非常相似,因为它们基本上是随机分成不同的集合

```python
val_image, val_label = val_dataset[120]
bboxes = val_label[:, :4]
cids = val_label[:, 4:5]
ax = viz.plot_bbox(val_image.asnumpy(), bboxes, labels=cids, class_names=train_dataset.classes)
plt.show()
```

Transform

```python
from gluoncv.data.transforms import presets
from gluoncv import utils
from mxnet import nd

width, height = 416, 416  # resize image to 416x416 after all data augmentation
train_transform = presets.yolo.YOLO3DefaultTrainTransform(width, height)
val_transform = presets.yolo.YOLO3DefaultValTransform(width, height)
utils.random.seed(123)  # fix seed in this tutorial
```

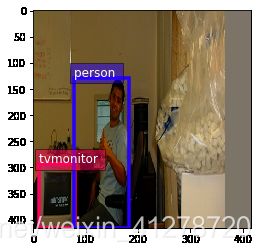
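One detail of the resize step (the `width, height` comment above): when the image is resized to 416x416, each bounding box has to be rescaled by the same per-axis factors, otherwise it no longer lines up with the object. Below is a minimal pure-Python sketch of that arithmetic. `resize_boxes` is a hypothetical helper written for illustration, not GluonCV's own transform code, and the example box is made up.

```python
def resize_boxes(boxes, in_size, out_size):
    """Scale [xmin, ymin, xmax, ymax, ...] rows from in_size=(w, h) to out_size=(w, h).

    Extra columns (e.g. the class id in column 4) are copied unchanged.
    """
    x_scale = out_size[0] / in_size[0]
    y_scale = out_size[1] / in_size[1]
    resized = []
    for row in boxes:
        xmin, ymin, xmax, ymax = row[:4]
        resized.append([xmin * x_scale, ymin * y_scale,
                        xmax * x_scale, ymax * y_scale] + list(row[4:]))
    return resized


# A 500x375 (width x height) image resized to 416x416; the box is illustrative only.
boxes = [[100.0, 75.0, 300.0, 300.0, 14.0]]
print(resize_boxes(boxes, in_size=(500, 375), out_size=(416, 416)))
```

For the 500x375 training image shown earlier, the x factor is 416/500 = 0.832 and the y factor is 416/375 ≈ 1.109, so boxes become slightly narrower and taller.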

Apply the transform to the training image:

```python
train_image2, train_label2 = train_transform(train_image, train_label)
print('tensor shape:', train_image2.shape)
```

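Out:

```text
tensor shape: (3, 416, 416)
```

The image in the tensor looks distorted because its values are no longer in the (0, 255) range: the transform has normalized them and moved the channel axis first. Let's convert it back so we can see it clearly.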

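```python
# CHW -> HWC, then undo the normalization: multiply by std and add mean per channel
train_image2 = train_image2.transpose((1, 2, 0)) * nd.array((0.229, 0.224, 0.225)) + nd.array((0.485, 0.456, 0.406))
# rescale from [0, 1] back to [0, 255]
train_image2 = (train_image2 * 255).clip(0, 255)
ax = viz.plot_bbox(train_image2.asnumpy(), train_label2[:, :4],
                   labels=train_label2[:, 4:5],
                   class_names=train_dataset.classes)
plt.show()
```

The transforms used in training include random color distortion, random expansion/cropping, random flipping, resizing and fixed color normalization. Validation, by contrast, only involves resizing and color normalization.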
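The color normalization step and the inverse used in the plotting code above reduce to simple per-channel arithmetic. Here is a pure-Python sketch for a single RGB pixel, assuming the preset first scales pixel values to [0, 1] (consistent with the `* 255` in the inverse code) and then applies the mean/std values shown there. It illustrates the math only; it is not the GluonCV transform itself.

```python
# Per-channel mean and std, taken from the plotting code above (ImageNet statistics).
MEAN = (0.485, 0.456, 0.406)
STD = (0.229, 0.224, 0.225)


def normalize_pixel(rgb):
    """Scale a 0-255 RGB pixel to [0, 1], then subtract the mean and divide by the std."""
    return [(v / 255.0 - m) / s for v, m, s in zip(rgb, MEAN, STD)]


def denormalize_pixel(values):
    """Invert normalize_pixel: multiply by std, add mean, rescale to 0-255 and clip."""
    return [min(max((v * s + m) * 255.0, 0.0), 255.0)
            for v, m, s in zip(values, MEAN, STD)]


pixel = [124, 116, 104]
normed = normalize_pixel(pixel)
print(normed)                     # all close to 0, since this pixel is near the mean color
print(denormalize_pixel(normed))  # approximately [124.0, 116.0, 104.0]
```

The clipping in `denormalize_pixel` plays the same role as `.clip(0, 255)` in the plotting code: augmented pixels can fall slightly outside the valid range.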

3. Data Loader

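We will iterate through the entire dataset many times during training. Keep in mind that raw images must be converted to tensors (MXNet uses the BCHW layout) before they are fed into the neural network.

A DataLoader makes it very convenient to apply the transforms and aggregate the data into mini-batches.

Because the number of objects varies a lot from image to image, the labels also have different sizes, so we need to pad them to a common size. GluonCV provides gluoncv.data.batchify.Pad, which handles the padding automatically. gluoncv.data.batchify.Stack stacks NDArrays that have consistent shapes, and gluoncv.data.batchify.Tuple applies a separate batchify function to each of the outputs of the transform function.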
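The batching behavior described above can be sketched in pure Python. The helpers below are hypothetical stand-ins written for illustration: they mimic what Stack, Pad(pad_val=-1) and Tuple do, using nested lists instead of NDArrays, and are not GluonCV's implementation.

```python
def stack(items):
    """Batch items that all have the same length (stands in for same-shaped NDArrays)."""
    if any(len(item) != len(items[0]) for item in items):
        raise ValueError("stack needs items with identical shapes")
    return [list(item) for item in items]


def pad(labels, pad_val=-1):
    """Append pad_val rows so every image has the same number of label rows."""
    max_rows = max(len(rows) for rows in labels)
    width = max(len(row) for rows in labels for row in rows)
    batch = []
    for rows in labels:
        filler = [[pad_val] * width for _ in range(max_rows - len(rows))]
        batch.append([list(row) for row in rows] + filler)
    return batch


def tuple_batchify(samples, fns):
    """Apply fns[i] to the i-th field collected from every sample, like Tuple(...)."""
    return tuple(fn([sample[i] for sample in samples]) for i, fn in enumerate(fns))


# Two toy samples: a flattened "image" of 3 values and its [xmin, ymin, xmax, ymax, cls] rows.
samples = [
    ([0.1, 0.2, 0.3], [[10, 20, 50, 60, 0]]),
    ([0.4, 0.5, 0.6], [[5, 5, 30, 30, 1], [40, 40, 90, 80, 2]]),
]
images, labels = tuple_batchify(samples, [stack, pad])
print(labels[0])  # [[10, 20, 50, 60, 0], [-1, -1, -1, -1, -1]]
```

Padding with -1 rather than 0 keeps padded rows distinguishable from real boxes, since a real class id or coordinate is never negative.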

```python
from gluoncv.data.batchify import Tuple, Stack, Pad
from mxnet.gluon.data import DataLoader

batch_size = 2  # for tutorial, we use smaller batch-size
num_workers = 0  # you can make it larger (if your CPU has more cores) to accelerate data loading

# behavior of batchify_fn: stack images, and pad labels
batchify_fn = Tuple(Stack(), Pad(pad_val=-1))
train_loader = DataLoader(train_dataset.transform(train_transform), batch_size, shuffle=True,
                          batchify_fn=batchify_fn, last_batch='rollover', num_workers=num_workers)
val_loader = DataLoader(val_dataset.transform(val_transform), batch_size, shuffle=False,
                        batchify_fn=batchify_fn, last_batch='keep', num_workers=num_workers)

# print the shapes of the first four batches
for ib, batch in enumerate(train_loader):
    if ib > 3:
        break
    print('data 0:', batch[0][0].shape, 'label 0:', batch[1][0].shape)
    print('data 1:', batch[0][1].shape, 'label 1:', batch[1][1].shape)
```

Out:

```text
data 0: (3, 416, 416) label 0: (6, 6)
data 1: (3, 416, 416) label 1: (6, 6)
data 0: (3, 416, 416) label 0: (3, 6)
data 1: (3, 416, 416) label 1: (3, 6)
data 0: (3, 416, 416) label 0: (2, 6)
data 1: (3, 416, 416) label 1: (2, 6)
data 0: (3, 416, 416) label 0: (2, 6)
data 1: (3, 416, 416) label 1: (2, 6)
```

4. YOLOv3 Network

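GluonCV's YOLOv3 implementation is a composite Gluon HybridBlock. Structurally, a YOLOv3 network consists of a base feature-extraction network, convolutional transition layers, upsampling layers, and specially designed YOLOv3 output layers.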
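As a rough sanity check of this structure, the sizes of the three output layers can be worked out by hand. The sketch below assumes 3 anchors per scale and output strides of 32, 16 and 8 (the backbone in the network printout below halves the resolution five times with stride-2 convolutions). With the 20 Pascal VOC classes it reproduces the 75 output channels of the `yolo_outputs` layers in that printout. `yolo_output_layout` is a hypothetical helper for illustration.

```python
NUM_CLASSES = 20        # Pascal VOC
ANCHORS_PER_SCALE = 3   # assumption: 3 anchors per output scale, as in YOLOv3
STRIDES = (32, 16, 8)   # assumption: downsampling factor of each output scale


def yolo_output_layout(input_size=416, num_classes=NUM_CLASSES):
    """Return per-scale grid sizes, channels per output layer, and total box count."""
    # each anchor predicts 4 box values + 1 objectness score + one score per class
    channels = ANCHORS_PER_SCALE * (4 + 1 + num_classes)
    grids = [input_size // s for s in STRIDES]
    total_boxes = sum(ANCHORS_PER_SCALE * g * g for g in grids)
    return grids, channels, total_boxes


grids, channels, total = yolo_output_layout()
print(grids)     # [13, 26, 52]
print(channels)  # 75
print(total)     # 10647
```

The coarse 13x13 grid is responsible for large objects and the fine 52x52 grid for small ones, which is why YOLOv3 predicts at three scales.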

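The Gluon Model Zoo has several built-in YOLO networks, which can be loaded with a single line of code.

(To avoid downloading a model in this tutorial, we set pretrained_base=False. In practice we usually want to load the ImageNet-pretrained base network by setting pretrained_base=True.)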

```python
from gluoncv import model_zoo

net = model_zoo.get_model('yolo3_darknet53_voc', pretrained_base=False)
print(net)
```

Out:

YOLOV3(

(_target_generator): YOLOV3TargetMerger(

(_dynamic_target): YOLOV3DynamicTargetGeneratorSimple(

(_batch_iou): BBoxBatchIOU(

(_pre): BBoxSplit(

)

)

)

)

(_loss): YOLOV3Loss(batch_axis=0, w=None)

(stages): HybridSequential(

(0): HybridSequential(

(0): HybridSequential(

(0): Conv2D(None -> 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

(1): HybridSequential(

(0): Conv2D(None -> 64, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

(2): DarknetBasicBlockV3(

(body): HybridSequential(

(0): HybridSequential(

(0): Conv2D(None -> 32, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

(1): HybridSequential(

(0): Conv2D(None -> 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

)

)

(3): HybridSequential(

(0): Conv2D(None -> 128, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

(4): DarknetBasicBlockV3(

(body): HybridSequential(

(0): HybridSequential(

(0): Conv2D(None -> 64, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

(1): HybridSequential(

(0): Conv2D(None -> 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

)

)

(5): DarknetBasicBlockV3(

(body): HybridSequential(

(0): HybridSequential(

(0): Conv2D(None -> 64, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

(1): HybridSequential(

(0): Conv2D(None -> 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

)

)

(6): HybridSequential(

(0): Conv2D(None -> 256, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

(7): DarknetBasicBlockV3(

(body): HybridSequential(

(0): HybridSequential(

(0): Conv2D(None -> 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

(1): HybridSequential(

(0): Conv2D(None -> 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

)

)

(8): DarknetBasicBlockV3(

(body): HybridSequential(

(0): HybridSequential(

(0): Conv2D(None -> 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

(1): HybridSequential(

(0): Conv2D(None -> 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

)

)

(9): DarknetBasicBlockV3(

(body): HybridSequential(

(0): HybridSequential(

(0): Conv2D(None -> 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

(1): HybridSequential(

(0): Conv2D(None -> 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

)

)

(10): DarknetBasicBlockV3(

(body): HybridSequential(

(0): HybridSequential(

(0): Conv2D(None -> 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

(1): HybridSequential(

(0): Conv2D(None -> 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

)

)

(11): DarknetBasicBlockV3(

(body): HybridSequential(

(0): HybridSequential(

(0): Conv2D(None -> 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

(1): HybridSequential(

(0): Conv2D(None -> 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

)

)

(12): DarknetBasicBlockV3(

(body): HybridSequential(

(0): HybridSequential(

(0): Conv2D(None -> 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

(1): HybridSequential(

(0): Conv2D(None -> 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

)

)

(13): DarknetBasicBlockV3(

(body): HybridSequential(

(0): HybridSequential(

(0): Conv2D(None -> 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

(1): HybridSequential(

(0): Conv2D(None -> 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

)

)

(14): DarknetBasicBlockV3(

(body): HybridSequential(

(0): HybridSequential(

(0): Conv2D(None -> 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

(1): HybridSequential(

(0): Conv2D(None -> 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

)

)

)

(1): HybridSequential(

(0): HybridSequential(

(0): Conv2D(None -> 512, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

(1): DarknetBasicBlockV3(

(body): HybridSequential(

(0): HybridSequential(

(0): Conv2D(None -> 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

(1): HybridSequential(

(0): Conv2D(None -> 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

)

)

(2): DarknetBasicBlockV3(

(body): HybridSequential(

(0): HybridSequential(

(0): Conv2D(None -> 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

(1): HybridSequential(

(0): Conv2D(None -> 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

)

)

(3): DarknetBasicBlockV3(

(body): HybridSequential(

(0): HybridSequential(

(0): Conv2D(None -> 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

(1): HybridSequential(

(0): Conv2D(None -> 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

)

)

(4): DarknetBasicBlockV3(

(body): HybridSequential(

(0): HybridSequential(

(0): Conv2D(None -> 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

(1): HybridSequential(

(0): Conv2D(None -> 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

)

)

(5): DarknetBasicBlockV3(

(body): HybridSequential(

(0): HybridSequential(

(0): Conv2D(None -> 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

(1): HybridSequential(

(0): Conv2D(None -> 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

)

)

(6): DarknetBasicBlockV3(

(body): HybridSequential(

(0): HybridSequential(

(0): Conv2D(None -> 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

(1): HybridSequential(

(0): Conv2D(None -> 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

)

)

(7): DarknetBasicBlockV3(

(body): HybridSequential(

(0): HybridSequential(

(0): Conv2D(None -> 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

(1): HybridSequential(

(0): Conv2D(None -> 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

)

)

(8): DarknetBasicBlockV3(

(body): HybridSequential(

(0): HybridSequential(

(0): Conv2D(None -> 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

(1): HybridSequential(

(0): Conv2D(None -> 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

)

)

)

(2): HybridSequential(

(0): HybridSequential(

(0): Conv2D(None -> 1024, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

(1): DarknetBasicBlockV3(

(body): HybridSequential(

(0): HybridSequential(

(0): Conv2D(None -> 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

(1): HybridSequential(

(0): Conv2D(None -> 1024, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

)

)

(2): DarknetBasicBlockV3(

(body): HybridSequential(

(0): HybridSequential(

(0): Conv2D(None -> 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

(1): HybridSequential(

(0): Conv2D(None -> 1024, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

)

)

(3): DarknetBasicBlockV3(

(body): HybridSequential(

(0): HybridSequential(

(0): Conv2D(None -> 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

(1): HybridSequential(

(0): Conv2D(None -> 1024, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

)

)

(4): DarknetBasicBlockV3(

(body): HybridSequential(

(0): HybridSequential(

(0): Conv2D(None -> 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

(1): HybridSequential(

(0): Conv2D(None -> 1024, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

)

)

)

)

(transitions): HybridSequential(

(0): HybridSequential(

(0): Conv2D(None -> 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

(1): HybridSequential(

(0): Conv2D(None -> 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

)

(yolo_blocks): HybridSequential(

(0): YOLODetectionBlockV3(

(body): HybridSequential(

(0): HybridSequential(

(0): Conv2D(None -> 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

(1): HybridSequential(

(0): Conv2D(None -> 1024, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

(2): HybridSequential(

(0): Conv2D(None -> 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

(3): HybridSequential(

(0): Conv2D(None -> 1024, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

(4): HybridSequential(

(0): Conv2D(None -> 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

)

(tip): HybridSequential(

(0): Conv2D(None -> 1024, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

)

(1): YOLODetectionBlockV3(

(body): HybridSequential(

(0): HybridSequential(

(0): Conv2D(None -> 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

(1): HybridSequential(

(0): Conv2D(None -> 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

(2): HybridSequential(

(0): Conv2D(None -> 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

(3): HybridSequential(

(0): Conv2D(None -> 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

(4): HybridSequential(

(0): Conv2D(None -> 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

)

(tip): HybridSequential(

(0): Conv2D(None -> 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

)

(2): YOLODetectionBlockV3(

(body): HybridSequential(

(0): HybridSequential(

(0): Conv2D(None -> 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

(1): HybridSequential(

(0): Conv2D(None -> 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

(2): HybridSequential(

(0): Conv2D(None -> 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

(3): HybridSequential(

(0): Conv2D(None -> 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

(4): HybridSequential(

(0): Conv2D(None -> 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

)

(tip): HybridSequential(

(0): Conv2D(None -> 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

)

)

(yolo_outputs): HybridSequential(

(0): YOLOOutputV3(

(prediction): Conv2D(None -> 75, kernel_size=(1, 1), stride=(1, 1))

)

(1): YOLOOutputV3(

(prediction): Conv2D(None -> 75, kernel_size=(1, 1), stride=(1, 1))

)

(2): YOLOOutputV3(

(prediction): Conv2D(None -> 75, kernel_size=(1, 1), stride=(1, 1))

)

)

)

A YOLOv3 network can be called with an image tensor:

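```python
import mxnet as mx

x = mx.nd.zeros(shape=(1, 3, 416, 416))
net.initialize()
cids, scores, bboxes = net(x)
```

YOLOv3 returns three values: cids are the class labels, scores are the confidence scores of each prediction, and bboxes are the absolute coordinates of the corresponding bounding boxes.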
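In practice you usually keep only detections that are valid and above a confidence threshold. The sketch below shows that filtering on plain Python lists for a single image (for example after converting one image's outputs with `.asnumpy()` and flattening the class ids and scores). It assumes, as an illustration, that unused output slots carry a class id of -1; `keep_confident` and the 0.5 threshold are hypothetical choices, not a GluonCV API, and the numbers are made up.

```python
def keep_confident(cids, scores, bboxes, thresh=0.5):
    """Return (class_id, score, box) triples for one image's valid, confident detections.

    Assumes padded or suppressed detections are marked with a negative class id.
    """
    kept = []
    for cid, score, box in zip(cids, scores, bboxes):
        if cid < 0 or score < thresh:
            continue  # skip padding slots and low-confidence predictions
        kept.append((int(cid), float(score), list(box)))
    return kept


cids = [14, 11, -1]
scores = [0.92, 0.30, -1]
bboxes = [[48, 20, 210, 400], [5, 100, 90, 180], [-1, -1, -1, -1]]
print(keep_confident(cids, scores, bboxes))  # [(14, 0.92, [48, 20, 210, 400])]
```

With the all-zero input and randomly initialized weights above, the real outputs are meaningless; this filtering only becomes useful once the network is trained.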

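5. Training targets

End-to-end YOLOv3 training involves four losses (objectness, box center, box scale and class), which penalize incorrect class and box predictions. They are defined in gluoncv.loss.YOLOV3Loss: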

```python
loss = gcv.loss.YOLOV3Loss()
# which is already included in YOLOv3 network
print(net._loss)
```

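Out:

```text
YOLOV3Loss(batch_axis=0, w=None)
```

To speed up training, we let the CPU precompute some of the training targets. This pays off especially when the CPU is powerful and you can use `-j num_workers` to take advantage of multiple cores.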
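Part of what gets precomputed is deciding which anchor, and which grid cell, is responsible for each ground-truth box. The following is a simplified pure-Python sketch of the usual YOLOv3 assignment rule, assuming the nine anchor sizes listed in the YOLOv3 paper and output strides of 8, 16 and 32. `assign_anchor` and `shape_iou` are hypothetical helpers; GluonCV's actual target generator additionally turns this kind of assignment into the center, scale, weight, objectness and class target arrays.

```python
# Assumption: YOLOv3 paper anchors (width, height) in pixels for a 416x416 input,
# grouped by the stride of the output scale that uses them.
ANCHORS = {
    8: [(10, 13), (16, 30), (33, 23)],
    16: [(30, 61), (62, 45), (59, 119)],
    32: [(116, 90), (156, 198), (373, 326)],
}


def shape_iou(w1, h1, w2, h2):
    """IoU of two boxes sharing the same center, so only width and height matter."""
    inter = min(w1, w2) * min(h1, h2)
    return inter / (w1 * h1 + w2 * h2 - inter)


def assign_anchor(box):
    """Pick the best-matching anchor for one [xmin, ymin, xmax, ymax] box.

    Returns (stride, anchor_index, grid_x, grid_y): the output scale, which of its
    three anchors, and the grid cell that contains the box center.
    """
    xmin, ymin, xmax, ymax = box
    w, h = xmax - xmin, ymax - ymin
    stride, index, _ = max(
        ((s, i, shape_iou(w, h, aw, ah))
         for s, anchors in ANCHORS.items()
         for i, (aw, ah) in enumerate(anchors)),
        key=lambda item: item[2],
    )
    cx, cy = (xmin + xmax) / 2, (ymin + ymax) / 2
    return stride, index, int(cx // stride), int(cy // stride)


print(assign_anchor([100, 100, 220, 200]))  # (32, 0, 5, 4)
print(assign_anchor([0, 0, 12, 14]))        # (8, 0, 0, 0)
```

A 120x100 box matches the 116x90 anchor on the stride-32 scale, while a tiny 12x14 box matches the 10x13 anchor on the stride-8 scale. This bookkeeping depends only on the labels, which is why it can run in the CPU data-loading workers.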

If we pass the network to the training transform, it computes part of the training targets:

```python
from mxnet import autograd

train_transform = presets.yolo.YOLO3DefaultTrainTransform(width, height, net)
# return stacked images, center_targets, scale_targets, gradient weights, objectness_targets, class_targets
# additionally, return padded ground truth bboxes, so there are 7 components returned by dataloader
batchify_fn = Tuple(*([Stack() for _ in range(6)] + [Pad(axis=0, pad_val=-1) for _ in range(1)]))
train_loader = DataLoader(train_dataset.transform(train_transform), batch_size, shuffle=True,
                          batchify_fn=batchify_fn, last_batch='rollover', num_workers=num_workers)

for ib, batch in enumerate(train_loader):
    if ib > 0:
        break
    print('data:', batch[0][0].shape)
    print('label:', batch[6][0].shape)
    with autograd.record():
        # move the padded ground-truth boxes (index 6) right after the images
        input_order = [0, 6, 1, 2, 3, 4, 5]
        obj_loss, center_loss, scale_loss, cls_loss = net(*[batch[o] for o in input_order])
        # sum up the losses
        sum_loss = obj_loss + center_loss + scale_loss + cls_loss
        # some standard gluon training steps:
        # autograd.backward(sum_loss)
        # trainer.step(batch_size)
```

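Out:

```text
data: (3, 416, 416)
label: (4, 4)
```

We can see that the data loader is now returning the training targets for us. From here it is natural to write a Gluon training loop that loads the data and lets the Trainer update the weights.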

References

- GluonCV GitHub
- Preparing the Pascal VOC dataset
- Training YOLO v3
- How to plot with matplotlib in a Linux terminal without a GUI
- Redmon, Joseph, and Ali Farhadi. "YOLOv3: An incremental improvement." arXiv preprint arXiv:1804.02767 (2018).