Anime Image Generation in Practice (GAN, WGAN)

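The data is a set of anime-style avatar images: 51,223 images in total, with no labels. The subject of each image has been cropped, aligned, and scaled to a uniform 96 × 96. Here we use a GAN to generate images of this kind.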

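I. Dataset loading and preprocessing

A custom dataset has to be loaded and preprocessed by hand. The code is listed at the end; the make_anime_dataset function returns the ready-to-use dataset object.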

# Collect the image paths
img_path = glob.glob(r'E:\Tensorflow\tensorflowstudy\GAN\anime-faces\*.jpg') + \
    glob.glob(r'E:\Tensorflow\tensorflowstudy\GAN\anime-faces\*.png')
print('images num:', len(img_path))
# Build the dataset object
dataset, img_shape, _ = make_anime_dataset(img_path, batch_size, resize=64)
print(dataset, img_shape)
sample = next(iter(dataset))  # draw one batch
print(sample.shape, tf.reduce_max(sample).numpy(), tf.reduce_min(sample).numpy())
dataset = dataset.repeat(100)  # repeat 100 times
db_iter = iter(dataset)

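dataset is a tf.data.Dataset instance that has already been shuffled, preprocessed, and batched, so iterating over it directly yields batches of samples. img_shape is the image size after preprocessing.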
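As a small illustration of the preprocessing mentioned above: `_map_fn` in dataset.py (listed at the end) scales pixel values with `img / 127.5 - 1`, and `save_result` in gan_train.py inverts this with `(img + 1.0) * 127.5`. The sketch below reproduces both mappings on plain Python numbers. The helper names `to_unit_range` and `to_pixel` are made up for this example and do not appear in the original code.

```python
def to_unit_range(pixel):
    """Map a pixel value in [0, 255] to [-1, 1], as `img / 127.5 - 1` does."""
    return pixel / 127.5 - 1.0


def to_pixel(value):
    """Map a value in [-1, 1] back to an integer pixel in [0, 255].

    int() truncates toward zero, which mirrors `.astype(np.uint8)`.
    """
    return int((value + 1.0) * 127.5)


for p in (0, 128, 255):
    v = to_unit_range(p)
    print(p, "->", round(v, 4), "->", to_pixel(v))
```

Because the conversion back truncates rather than rounds, a round trip can in principle land one level below the starting pixel value; for visualizing generated samples this is harmless.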

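II. Building the network models

1. Generator

The generator G stacks 5 transposed-convolution units that enlarge the feature map's height and width layer by layer while reducing the number of channels. The latent vector of length 100 is first reshaped into a 4-D tensor of shape [b, 1, 1, 100], then passed through the transposed-convolution layers in turn, which enlarge the spatial dimensions and shrink the channel dimension until the output is a color image with height and width 64 and 3 channels. A BN layer is inserted between the convolution layers to improve training stability, and the convolution layers do not use a bias vector.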

# Generator network
class Generator(keras.Model):
    def __init__(self):
        super(Generator, self).__init__()
        filter = 64
        # Transposed conv layer 1: filter*8 output channels, 4x4 kernel, stride 1, no padding, no bias
        self.conv1 = layers.Conv2DTranspose(filter * 8, (4, 4), strides=1, padding='valid', use_bias=False)
        self.bn1 = layers.BatchNormalization()
        # Transposed conv layer 2
        self.conv2 = layers.Conv2DTranspose(filter * 4, (4, 4), strides=2, padding='same', use_bias=False)
        self.bn2 = layers.BatchNormalization()
        # Transposed conv layer 3
        self.conv3 = layers.Conv2DTranspose(filter * 2, (4, 4), strides=2, padding='same', use_bias=False)
        self.bn3 = layers.BatchNormalization()
        # Transposed conv layer 4
        self.conv4 = layers.Conv2DTranspose(filter * 1, (4, 4), strides=2, padding='same', use_bias=False)
        self.bn4 = layers.BatchNormalization()
        # Transposed conv layer 5
        self.conv5 = layers.Conv2DTranspose(3, (4, 4), strides=2, padding='same', use_bias=False)

    def call(self, inputs, training=None):
        x = inputs  # [b, 100]
        # Reshape to a 4-D tensor for the transposed convolutions: (b, 1, 1, 100)
        x = tf.reshape(x, (x.shape[0], 1, 1, x.shape[1]))
        x = tf.nn.relu(x)
        # Transposed conv - BN - activation: (b, 4, 4, 512); o = (i-1)s+k = (1-1)*1 + 4 = 4
        x = tf.nn.relu(self.bn1(self.conv1(x), training=training))  # BN layers need the training flag
        # Transposed conv - BN - activation: (b, 8, 8, 256); o = i*s = 4*2 = 8
        x = tf.nn.relu(self.bn2(self.conv2(x), training=training))
        # Transposed conv - BN - activation: (b, 16, 16, 128)
        x = tf.nn.relu(self.bn3(self.conv3(x), training=training))
        # Transposed conv - BN - activation: (b, 32, 32, 64)
        x = tf.nn.relu(self.bn4(self.conv4(x), training=training))
        # Transposed conv - activation: (b, 64, 64, 3)
        x = self.conv5(x)
        x = tf.tanh(x)  # output in [-1, 1], matching the preprocessing
        return x

How the output size of a transposed convolution is computed:

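With padding='VALID', the output size is $o = (i - 1)s + k$.

With padding='SAME', the output size is $o = i \cdot s$.

Here i is the input size of the transposed convolution, s is the stride (strides), and k is the kernel size.

The generator outputs an image tensor of shape [b, 64, 64, 3] with values in the range -1 to 1.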
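The two output-size formulas can be checked against the generator's five layers with a few lines of plain Python. This is only a sketch: `deconv_out` and `layers_cfg` are names invented for this example, and the kernel sizes, strides, and padding modes are copied from the Generator class above.

```python
def deconv_out(i, k, s, padding):
    """Output size of a transposed convolution along one spatial axis."""
    if padding == "valid":
        return (i - 1) * s + k
    if padding == "same":
        return i * s
    raise ValueError("padding must be 'valid' or 'same'")


# (kernel, stride, padding) for conv1..conv5 of the Generator
layers_cfg = [
    (4, 1, "valid"),
    (4, 2, "same"),
    (4, 2, "same"),
    (4, 2, "same"),
    (4, 2, "same"),
]

size = 1  # the latent vector is reshaped to a 1x1 feature map
sizes = [size]
for k, s, padding in layers_cfg:
    size = deconv_out(size, k, s, padding)
    sizes.append(size)

print(sizes)  # [1, 4, 8, 16, 32, 64]
```

The sequence matches the shapes noted in the comments of `call`: 4, 8, 16, 32, and finally 64.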

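2. Discriminator

The discriminator D is built like an ordinary classification network. It accepts an image tensor of shape [b, 64, 64, 3] and passes it through 5 consecutive convolution layers that extract features layer by layer; the final convolution output has shape [b, 2, 2, 1024]. A GlobalAveragePooling2D layer then reduces the features to shape [b, 1024], and a fully connected layer produces the output for the binary classification task.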

# Discriminator
class Discriminator(keras.Model):
    def __init__(self):
        super(Discriminator, self).__init__()
        filter = 64
        # Conv layer 1
        self.conv1 = layers.Conv2D(filter, (4, 4), strides=2, padding='valid', use_bias=False)
        self.bn1 = layers.BatchNormalization()
        # Conv layer 2
        self.conv2 = layers.Conv2D(filter * 2, (4, 4), strides=2, padding='valid', use_bias=False)
        self.bn2 = layers.BatchNormalization()
        # Conv layer 3
        self.conv3 = layers.Conv2D(filter * 4, (4, 4), strides=2, padding='valid', use_bias=False)
        self.bn3 = layers.BatchNormalization()
        # Conv layer 4
        self.conv4 = layers.Conv2D(filter * 8, (3, 3), strides=1, padding='valid', use_bias=False)
        self.bn4 = layers.BatchNormalization()
        # Conv layer 5
        self.conv5 = layers.Conv2D(filter * 16, (3, 3), strides=1, padding='valid', use_bias=False)
        self.bn5 = layers.BatchNormalization()
        # Global pooling layer: collapses the two spatial dimensions
        self.pool = layers.GlobalAveragePooling2D()
        # Flatten the features
        self.flatten = layers.Flatten()
        # Fully connected layer for binary classification
        self.fc = layers.Dense(1)

    def call(self, inputs, training=None):
        # Conv - BN - activation: (b, 31, 31, 64)
        x = tf.nn.leaky_relu(self.bn1(self.conv1(inputs), training=training))
        # Conv - BN - activation: (b, 14, 14, 128)
        x = tf.nn.leaky_relu(self.bn2(self.conv2(x), training=training))
        # Conv - BN - activation: (b, 6, 6, 256)
        x = tf.nn.leaky_relu(self.bn3(self.conv3(x), training=training))
        # Conv - BN - activation: (b, 4, 4, 512)
        x = tf.nn.leaky_relu(self.bn4(self.conv4(x), training=training))
        # Conv - BN - activation: (b, 2, 2, 1024)
        x = tf.nn.leaky_relu(self.bn5(self.conv5(x), training=training))
        # Global average pooling: (b, 1024)
        x = self.pool(x)
        # Flatten
        x = self.flatten(x)
        # Output: [b, 1024] => [b, 1]
        logits = self.fc(x)
        return logits

The discriminator output has shape [b, 1]. The class does not apply a Sigmoid activation internally; passing the output through Sigmoid gives the probability that each of the b samples is real.
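The feature-map sizes noted in the discriminator's comments follow from the standard output-size rule for a convolution without padding, $o = \lfloor (i - k) / s \rfloor + 1$. The sketch below checks them and also shows how a logit becomes a probability. The names `conv_out_valid` and `sigmoid` are helpers written for this example, and the sample logits are arbitrary.

```python
import math


def conv_out_valid(i, k, s):
    """Output size of a 'valid' (unpadded) convolution along one spatial axis."""
    return (i - k) // s + 1


def sigmoid(x):
    """Map a logit to a probability in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


# (kernel, stride) for conv1..conv5 of the Discriminator
layers_cfg = [(4, 2), (4, 2), (4, 2), (3, 1), (3, 1)]

size = 64
sizes = [size]
for k, s in layers_cfg:
    size = conv_out_valid(size, k, s)
    sizes.append(size)

print(sizes)  # [64, 31, 14, 6, 4, 2]

# A logit of 0 means "undecided"; larger logits mean "more likely real".
for logit in (-2.0, 0.0, 2.0):
    print(logit, "->", round(sigmoid(logit), 4))
```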

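III. Network assembly and training

The discriminator's training goal is to maximize $\mathcal{L}(D, G)$, so that real samples are predicted as real with probability close to 1 and generated samples with probability close to 0. The discriminator's loss is implemented in d_loss_fn: all real samples are labeled 1 and all generated samples are labeled 0, and maximizing $\mathcal{L}(D, G)$ is achieved by minimizing the corresponding cross-entropy loss.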

def celoss_ones(logits):
    # Cross-entropy against label 1
    y = tf.ones_like(logits)
    loss = keras.losses.binary_crossentropy(y, logits, from_logits=True)
    return tf.reduce_mean(loss)

def celoss_zeros(logits):
    # Cross-entropy against label 0
    y = tf.zeros_like(logits)
    loss = keras.losses.binary_crossentropy(y, logits, from_logits=True)
    # Reduce to a scalar, as celoss_ones does, so the two terms can be added and printed
    return tf.reduce_mean(loss)

def d_loss_fn(generator, discriminator, batch_z, batch_x, training):
    # Loss function of the discriminator
    # Generate images from the sampled latent vectors
    fake_image = generator(batch_z, training)
    # Score the generated images
    d_fake_logits = discriminator(fake_image, training)
    # Score the real images
    d_real_logits = discriminator(batch_x, training)
    # Error between real images and label 1
    d_loss_real = celoss_ones(d_real_logits)
    # Error between generated images and label 0
    d_loss_fake = celoss_zeros(d_fake_logits)
    # Combine the two errors
    loss = d_loss_fake + d_loss_real
    return loss

def g_loss_fn(generator, discriminator, batch_z, training):
    # Generate images from the sampled latent vectors
    fake_image = generator(batch_z, training)
    # When training the generator, the generated images must be pushed toward being judged real
    d_fake_logits = discriminator(fake_image, training)
    # Error between generated images and label 1
    loss = celoss_ones(d_fake_logits)
    return loss

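The celoss_ones function computes the cross-entropy loss between the current prediction and label 1; the celoss_zeros function computes the cross-entropy loss between the current prediction and label 0.

The generator's training goal is to minimize the objective $\mathcal{L}(D, G)$. Because the real samples do not depend on the generator, its loss only needs to minimize the term $\mathbb{E}_{z \sim p_z(\cdot)} \log(1 - D(G(z)))$. In practice this is done by labeling the generated samples as 1 and minimizing the resulting cross-entropy, that is, minimizing $-\log D(G(z))$, which pushes $D(G(z))$ toward 1 just as the original term does. Note that during backpropagation the discriminator also takes part in building the computation graph, but at this stage only the generator's parameters are updated, not the discriminator's.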
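To make the two loss functions concrete, here is a minimal pure-Python sketch of binary cross-entropy computed directly from logits, applied the same way d_loss_fn and g_loss_fn apply it. It uses a standard numerically stable formulation and is not a copy of what Keras does internally; `bce_from_logit`, `mean`, and the sample logits are all made up for illustration.

```python
import math


def bce_from_logit(logit, label):
    """Binary cross-entropy of one logit against a 0/1 label (numerically stable form)."""
    return max(logit, 0.0) - logit * label + math.log1p(math.exp(-abs(logit)))


def mean(values):
    return sum(values) / len(values)


d_real_logits = [2.0, 0.5]   # hypothetical discriminator outputs on real images
d_fake_logits = [-1.0, 0.3]  # hypothetical discriminator outputs on generated images

# Discriminator: real images against label 1, generated images against label 0
d_loss = (mean([bce_from_logit(x, 1.0) for x in d_real_logits])
          + mean([bce_from_logit(x, 0.0) for x in d_fake_logits]))

# Generator: generated images against label 1
g_loss = mean([bce_from_logit(x, 1.0) for x in d_fake_logits])

print("d_loss:", d_loss)
print("g_loss:", g_loss)
```

With label 1 the function reduces to $-\log \sigma(x)$ and with label 0 to $-\log(1 - \sigma(x))$, so a logit of 0 costs $\log 2$ under either label.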

Network assembly: create the generator and the discriminator, and create an optimizer with its learning rate for each.

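generator = Generator()  # create the generator
generator.build(input_shape=(4, z_dim))
discriminator = Discriminator()  # create the discriminator
discriminator.build(input_shape=(4, 64, 64, 3))
# Create one optimizer each for the generator and the discriminator
g_optimizer = keras.optimizers.Adam(learning_rate=learning_rate, beta_1=0.5)
d_optimizer = keras.optimizers.Adam(learning_rate=learning_rate, beta_1=0.5)

In each epoch, latent vectors are first sampled at random from the prior distribution $p_z(\cdot)$ and real images are sampled at random from the real dataset; the discriminator's loss is computed through the generator and the discriminator, and the discriminator's parameters are optimized. When training the generator, the discriminator is needed to compute the loss, but only the generator's gradients are computed and applied.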

for epoch in range(epochs):  # train for `epochs` iterations
    # Train the discriminator
    for _ in range(5):
        # Sample latent vectors
        batch_z = tf.random.normal([batch_size, z_dim])
        batch_x = next(db_iter)  # sample real images
        # Discriminator forward pass
        with tf.GradientTape() as tape:
            d_loss = d_loss_fn(generator, discriminator, batch_z, batch_x, training)
        grads = tape.gradient(d_loss, discriminator.trainable_variables)
        d_optimizer.apply_gradients(zip(grads, discriminator.trainable_variables))
    # Sample latent vectors
    batch_z = tf.random.normal([batch_size, z_dim])
    batch_x = next(db_iter)  # sample real images
    # Generator forward pass
    with tf.GradientTape() as tape:
        g_loss = g_loss_fn(generator, discriminator, batch_z, training)
    grads = tape.gradient(g_loss, generator.trainable_variables)
    g_optimizer.apply_gradients(zip(grads, generator.trainable_variables))

    if epoch % 100 == 0:
        print(epoch, 'd-loss:', float(d_loss), 'g-loss:', float(g_loss))
        # Visualization
        z = tf.random.normal([100, z_dim])
        fake_image = generator(z, training=False)
        img_path = os.path.join('gan_images', 'gan-%d.png' % epoch)
        save_result(fake_image.numpy(), 10, img_path, color_mode='P')

Every 100 epochs, an image-generation test is run: latent vectors are sampled at random from the prior distribution, fed to the generator to obtain generated images, and the images are saved to a file.
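One practical point about the loop is how many batches it draws from db_iter. The arithmetic below is a back-of-the-envelope sketch: it assumes glob finds all 51,223 images mentioned at the start, that make_anime_dataset is called with its defaults (drop_remainder=True, repeat=1), and it uses the epochs and batch_size values from gan_train.py in the listing at the end. The variable names are chosen for this example only.

```python
num_images = 51223        # dataset size stated at the start of the article
batch_size = 64           # value used in gan_train.py
repeats = 100             # dataset.repeat(100)
epochs = 300000           # value used in gan_train.py
batches_per_iter = 5 + 1  # 5 discriminator steps + 1 next(db_iter) in the generator step

# drop_remainder=True discards the final partial batch of each pass
batches_per_pass = num_images // batch_size
total_batches = batches_per_pass * repeats
max_iters = total_batches // batches_per_iter

print("batches per pass:", batches_per_pass)
print("total batches available:", total_batches)
print("outer iterations before the iterator runs out:", max_iters)
print("enough data for all epochs:", max_iters >= epochs)
```

Under these assumptions the iterator would be exhausted well before the configured number of iterations, at which point next(db_iter) raises StopIteration. If that matters for a long run, calling dataset.repeat() with no argument repeats the dataset indefinitely.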

Result:

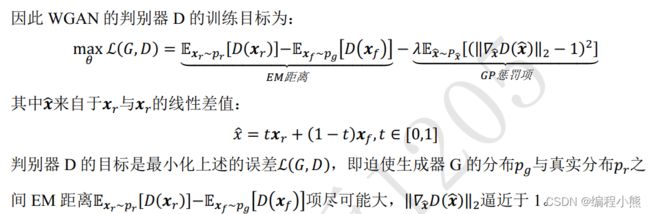

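IV. WGAN-GP

The WGAN-GP model can be obtained by modifying the GAN code above. In WGAN-GP the output of the discriminator D is no longer the probability of a sample's class, so no Sigmoid activation is applied to the output, and a gradient penalty term is added to the loss.

Computing the gradient penalty term: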

def gradient_penalty(discriminator, batch_x, fake_image):
    # Compute the gradient penalty term
    batchsz = batch_x.shape[0]
    # [b, h, w, c]
    # Sample one interpolation coefficient t per sample
    t = tf.random.uniform([batchsz, 1, 1, 1])
    # Broadcast to the shape of x: [b, 1, 1, 1] => [b, h, w, c]
    t = tf.broadcast_to(t, batch_x.shape)
    # Linear interpolation between real and generated images
    interpolate = t * batch_x + (1 - t) * fake_image
    # Compute the gradient of D with respect to the interpolated samples
    with tf.GradientTape() as tape:
        tape.watch([interpolate])  # add to the list of watched tensors
        d_interpolate_logits = discriminator(interpolate, training=True)
    grads = tape.gradient(d_interpolate_logits, interpolate)
    # Norm of each sample's gradient: [b, h, w, c] => [b, -1]
    grads = tf.reshape(grads, [grads.shape[0], -1])
    gp = tf.norm(grads, axis=1)  # [b]
    # Gradient penalty term
    gp = tf.reduce_mean((gp - 1) ** 2)
    return gp
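The same computation can be followed step by step on a toy problem in plain Python. The sketch below swaps the discriminator for a hypothetical linear critic D(x) = w · x and replaces automatic differentiation with a central-difference gradient, so it illustrates the idea rather than the TensorFlow code above. For a linear critic the gradient is w at every point, which makes the expected penalty easy to verify by hand.

```python
import math
import random


def critic(x, w):
    """Toy linear critic D(x) = w . x (a stand-in for the discriminator network)."""
    return sum(wi * xi for wi, xi in zip(w, x))


def numeric_grad(f, x, eps=1e-6):
    """Central-difference gradient of a scalar function f at point x."""
    grad = []
    for i in range(len(x)):
        hi = x[:i] + [x[i] + eps] + x[i + 1:]
        lo = x[:i] + [x[i] - eps] + x[i + 1:]
        grad.append((f(hi) - f(lo)) / (2 * eps))
    return grad


def gradient_penalty(w, real_batch, fake_batch, rng):
    """Mean of (||grad D(x_hat)|| - 1)^2 over interpolated samples x_hat."""
    penalties = []
    for real, fake in zip(real_batch, fake_batch):
        t = rng.random()  # one interpolation coefficient per sample
        x_hat = [t * r + (1 - t) * f for r, f in zip(real, fake)]
        grad = numeric_grad(lambda x: critic(x, w), x_hat)
        norm = math.sqrt(sum(g * g for g in grad))
        penalties.append((norm - 1.0) ** 2)
    return sum(penalties) / len(penalties)


rng = random.Random(0)
w = [3.0, 4.0]  # ||w|| = 5, so each per-sample penalty is (5 - 1)^2 = 16
real_batch = [[1.0, 2.0], [0.5, -1.0]]
fake_batch = [[0.0, 0.0], [2.0, 1.0]]
print(gradient_penalty(w, real_batch, fake_batch, rng))  # approximately 16
```

The penalty is zero only when the gradient norm equals 1 at the interpolated points, which is how the term nudges the critic toward the 1-Lipschitz constraint.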

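The WGAN discriminator's loss differs from the GAN's: WGAN directly maximizes the discriminator's output on real samples and minimizes its output on generated samples, with no cross-entropy computation.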

def d_loss_fn(generator, discriminator, batch_z, batch_x, training):

# 计算判别器的误差函数

# 采样生成图片

fake_image = generator(batch_z, training)

# 判定生成图片,假样本的输出

d_fake_logits = discriminator(fake_image, training)

# 判断真实图片,真样本的输出

d_real_logits = discriminator(batch_x, training)

# 计算梯度惩罚项

gp = gradient_penalty(discriminator, batch_x, fake_image)

# WGAN-GP d损失函数的定义,这里并不是计算交叉熵,而是直接最大化正样本的输出

# 最小化假样本的输出和梯度惩罚项

loss = tf.reduce_mean(d_fake_logits) - tf.reduce_mean(d_real_logits) + 10. * gp

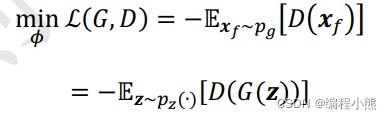

return loss, gpWGAN 生成器 G 的损失函数是只需要最大化生成样本在判别器 D 的输出值即可,同样没有交叉熵的计算步骤。生成器G的训练目标:

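def g_loss_fn(generator, discriminator, batch_z, training):
    # Loss function of the generator
    # Generate images from the sampled latent vectors
    fake_image = generator(batch_z, training)
    # When training the generator, the generated images must be pushed toward being judged real
    d_fake_logits = discriminator(fake_image, training)
    # WGAN-GP generator loss: maximize the output on fake samples
    loss = -tf.reduce_mean(d_fake_logits)
    return loss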

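The main training loop of WGAN is essentially the same. Compared with the original GAN, the discriminator D here acts as a gauge of the EM distance, so the more accurate the discriminator, the better for the generator. Within one training step, D can therefore be trained several times and G once, which gives a more accurate estimate of the EM distance. Because this version of d_loss_fn returns both loss and gp, the call in the training loop has to unpack two values.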
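The two WGAN-GP losses reduce to simple averages, which the following pure-Python sketch reproduces on made-up numbers. The function names `wgan_d_loss` and `wgan_g_loss`, the sample scores, and the penalty value are illustrative assumptions; the penalty weight of 10 is the one used in d_loss_fn above.

```python
def mean(values):
    return sum(values) / len(values)


def wgan_d_loss(real_scores, fake_scores, gp, gp_weight=10.0):
    """Critic loss: raise real scores, lower fake scores, add the weighted penalty."""
    return mean(fake_scores) - mean(real_scores) + gp_weight * gp


def wgan_g_loss(fake_scores):
    """Generator loss: raise the critic's score on generated samples."""
    return -mean(fake_scores)


real_scores = [1.5, 0.5]   # hypothetical critic outputs on real images
fake_scores = [-1.0, 0.5]  # hypothetical critic outputs on generated images
gp = 0.25                  # hypothetical gradient penalty value

d_loss = wgan_d_loss(real_scores, fake_scores, gp)
g_loss = wgan_g_loss(fake_scores)
print("d_loss:", d_loss)  # 1.25
print("g_loss:", g_loss)  # 0.25
```

Unlike the cross-entropy losses of the original GAN, these values are unbounded and can be negative; apart from the penalty term, the critic loss is the negative of the estimated score gap between real and generated samples.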

V. Program

gan.py

# -*- coding: utf-8 -*-
# @Time : 23:25
# @Author:Paranipd
# @File : gan.py
# @Software:PyCharm

import tensorflow as tf
from tensorflow import keras
from tensorflow.keras import layers, Sequential

# Generator network
class Generator(keras.Model):
    def __init__(self):
        super(Generator, self).__init__()
        filter = 64
        # Transposed conv layer 1: filter*8 output channels, 4x4 kernel, stride 1, no padding, no bias
        self.conv1 = layers.Conv2DTranspose(filter * 8, (4, 4), strides=1, padding='valid', use_bias=False)
        self.bn1 = layers.BatchNormalization()
        # Transposed conv layer 2
        self.conv2 = layers.Conv2DTranspose(filter * 4, (4, 4), strides=2, padding='same', use_bias=False)
        self.bn2 = layers.BatchNormalization()
        # Transposed conv layer 3
        self.conv3 = layers.Conv2DTranspose(filter * 2, (4, 4), strides=2, padding='same', use_bias=False)
        self.bn3 = layers.BatchNormalization()
        # Transposed conv layer 4
        self.conv4 = layers.Conv2DTranspose(filter * 1, (4, 4), strides=2, padding='same', use_bias=False)
        self.bn4 = layers.BatchNormalization()
        # Transposed conv layer 5
        self.conv5 = layers.Conv2DTranspose(3, (4, 4), strides=2, padding='same', use_bias=False)

    def call(self, inputs, training=None):
        x = inputs  # [b, 100]
        # Reshape to a 4-D tensor for the transposed convolutions: (b, 1, 1, 100)
        x = tf.reshape(x, (x.shape[0], 1, 1, x.shape[1]))
        x = tf.nn.relu(x)
        # Transposed conv - BN - activation: (b, 4, 4, 512); o = (i-1)s+k = (1-1)*1 + 4 = 4
        x = tf.nn.relu(self.bn1(self.conv1(x), training=training))  # BN layers need the training flag
        # Transposed conv - BN - activation: (b, 8, 8, 256); o = i*s = 4*2 = 8
        x = tf.nn.relu(self.bn2(self.conv2(x), training=training))
        # Transposed conv - BN - activation: (b, 16, 16, 128)
        x = tf.nn.relu(self.bn3(self.conv3(x), training=training))
        # Transposed conv - BN - activation: (b, 32, 32, 64)
        x = tf.nn.relu(self.bn4(self.conv4(x), training=training))
        # Transposed conv - activation: (b, 64, 64, 3)
        x = self.conv5(x)
        x = tf.tanh(x)  # output in [-1, 1], matching the preprocessing
        return x

# Discriminator
class Discriminator(keras.Model):
    def __init__(self):
        super(Discriminator, self).__init__()
        filter = 64
        # Conv layer 1
        self.conv1 = layers.Conv2D(filter, (4, 4), strides=2, padding='valid', use_bias=False)
        self.bn1 = layers.BatchNormalization()
        # Conv layer 2
        self.conv2 = layers.Conv2D(filter * 2, (4, 4), strides=2, padding='valid', use_bias=False)
        self.bn2 = layers.BatchNormalization()
        # Conv layer 3
        self.conv3 = layers.Conv2D(filter * 4, (4, 4), strides=2, padding='valid', use_bias=False)
        self.bn3 = layers.BatchNormalization()
        # Conv layer 4
        self.conv4 = layers.Conv2D(filter * 8, (3, 3), strides=1, padding='valid', use_bias=False)
        self.bn4 = layers.BatchNormalization()
        # Conv layer 5
        self.conv5 = layers.Conv2D(filter * 16, (3, 3), strides=1, padding='valid', use_bias=False)
        self.bn5 = layers.BatchNormalization()
        # Global pooling layer: collapses the two spatial dimensions
        self.pool = layers.GlobalAveragePooling2D()
        # Flatten the features
        self.flatten = layers.Flatten()
        # Fully connected layer for binary classification
        self.fc = layers.Dense(1)

    def call(self, inputs, training=None):
        # Conv - BN - activation: (b, 31, 31, 64)
        x = tf.nn.leaky_relu(self.bn1(self.conv1(inputs), training=training))
        # Conv - BN - activation: (b, 14, 14, 128)
        x = tf.nn.leaky_relu(self.bn2(self.conv2(x), training=training))
        # Conv - BN - activation: (b, 6, 6, 256)
        x = tf.nn.leaky_relu(self.bn3(self.conv3(x), training=training))
        # Conv - BN - activation: (b, 4, 4, 512)
        x = tf.nn.leaky_relu(self.bn4(self.conv4(x), training=training))
        # Conv - BN - activation: (b, 2, 2, 1024)
        x = tf.nn.leaky_relu(self.bn5(self.conv5(x), training=training))
        # Global average pooling: (b, 1024)
        x = self.pool(x)
        # Flatten
        x = self.flatten(x)
        # Output: [b, 1024] => [b, 1]
        logits = self.fc(x)
        return logits

def main():
    d = Discriminator()
    g = Generator()
    x = tf.random.normal([2, 64, 64, 3])
    z = tf.random.normal([2, 100])
    prob = d(x)
    print(prob)
    x_hat = g(z)
    print(x_hat.shape)


if __name__ == '__main__':
    main()

dataset.py, which loads the dataset

# -*- coding: utf-8 -*-
# @Time : 10:09
# @Author:Paranipd
# @File : dataset.py
# @Software:PyCharm

import multiprocessing
import tensorflow as tf

def make_anime_dataset(img_paths, batch_size, resize=64, drop_remainder=True, shuffle=True, repeat=1):
    # @tf.function
    def _map_fn(img):
        img = tf.image.resize(img, [resize, resize])
        # img = tf.image.random_crop(img,[resize, resize])
        # img = tf.image.random_flip_left_right(img)
        # img = tf.image.random_flip_up_down(img)
        img = tf.clip_by_value(img, 0, 255)
        img = img / 127.5 - 1  # scale to [-1, 1]
        return img

    dataset = disk_image_batch_dataset(img_paths,
                                       batch_size,
                                       drop_remainder=drop_remainder,
                                       map_fn=_map_fn,
                                       shuffle=shuffle,
                                       repeat=repeat)
    img_shape = (resize, resize, 3)
    len_dataset = len(img_paths) // batch_size
    return dataset, img_shape, len_dataset

def batch_dataset(dataset,
                  batch_size,
                  drop_remainder=True,
                  n_prefetch_batch=1,
                  filter_fn=None,
                  map_fn=None,
                  n_map_threads=None,
                  filter_after_map=False,
                  shuffle=True,
                  shuffle_buffer_size=None,
                  repeat=None):
    # set defaults
    if n_map_threads is None:
        n_map_threads = multiprocessing.cpu_count()
    if shuffle and shuffle_buffer_size is None:
        shuffle_buffer_size = max(batch_size * 128, 2048)  # set the minimum buffer size as 2048

    # [*] it is efficient to conduct `shuffle` before `map`/`filter` because `map`/`filter` is sometimes costly
    if shuffle:
        dataset = dataset.shuffle(shuffle_buffer_size)

    if not filter_after_map:
        if filter_fn:
            dataset = dataset.filter(filter_fn)
        if map_fn:
            dataset = dataset.map(map_fn, num_parallel_calls=n_map_threads)
    else:  # [*] this is slower
        if map_fn:
            dataset = dataset.map(map_fn, num_parallel_calls=n_map_threads)
        if filter_fn:
            dataset = dataset.filter(filter_fn)

    dataset = dataset.batch(batch_size, drop_remainder=drop_remainder)
    dataset = dataset.repeat(repeat).prefetch(n_prefetch_batch)
    return dataset

def memory_data_batch_dataset(memory_data,
                              batch_size,
                              drop_remainder=True,
                              n_prefetch_batch=1,
                              filter_fn=None,
                              map_fn=None,
                              n_map_threads=None,
                              filter_after_map=False,
                              shuffle=True,
                              shuffle_buffer_size=None,
                              repeat=None):
    """Batch dataset of memory data.

    Parameters
    ----------
    memory_data : nested structure of tensors/ndarrays/lists
    """
    dataset = tf.data.Dataset.from_tensor_slices(memory_data)
    dataset = batch_dataset(dataset,
                            batch_size,
                            drop_remainder=drop_remainder,
                            n_prefetch_batch=n_prefetch_batch,
                            filter_fn=filter_fn,
                            map_fn=map_fn,
                            n_map_threads=n_map_threads,
                            filter_after_map=filter_after_map,
                            shuffle=shuffle,
                            shuffle_buffer_size=shuffle_buffer_size,
                            repeat=repeat)
    return dataset

def disk_image_batch_dataset(img_paths,
                             batch_size,
                             labels=None,
                             drop_remainder=True,
                             n_prefetch_batch=1,
                             filter_fn=None,
                             map_fn=None,
                             n_map_threads=None,
                             filter_after_map=False,
                             shuffle=True,
                             shuffle_buffer_size=None,
                             repeat=None):
    """Batch dataset of disk image for PNG and JPEG.

    Parameters
    ----------
    img_paths : 1d-tensor/ndarray/list of str
    labels : nested structure of tensors/ndarrays/lists
    """
    if labels is None:
        memory_data = img_paths
    else:
        memory_data = (img_paths, labels)

    def parse_fn(path, *label):
        img = tf.io.read_file(path)
        img = tf.image.decode_jpeg(img, channels=3)  # fix channels to 3
        return (img,) + label

    if map_fn:  # fuse `map_fn` and `parse_fn`
        def map_fn_(*args):
            return map_fn(*parse_fn(*args))
    else:
        map_fn_ = parse_fn

    dataset = memory_data_batch_dataset(memory_data,
                                        batch_size,
                                        drop_remainder=drop_remainder,
                                        n_prefetch_batch=n_prefetch_batch,
                                        filter_fn=filter_fn,
                                        map_fn=map_fn_,
                                        n_map_threads=n_map_threads,
                                        filter_after_map=filter_after_map,
                                        shuffle=shuffle,
                                        shuffle_buffer_size=shuffle_buffer_size,
                                        repeat=repeat)
    return dataset

gan_train.py

# -*- coding: utf-8 -*-
# @Time : 10:10
# @Author:Paranipd
# @File : gan_train.py
# @Software:PyCharm

import os
import numpy as np
import tensorflow as tf
from tensorflow import keras
# from scipy.misc import toimage
from PIL import Image
import glob
from gan import Generator, Discriminator
from dataset import make_anime_dataset

def save_result(val_out, val_block_size, image_path, color_mode):
    def preprocess(img):
        img = ((img + 1.0) * 127.5).astype(np.uint8)
        # img = img.astype(np.uint8)
        return img

    preprocessed = preprocess(val_out)
    final_image = np.array([])
    single_row = np.array([])
    for b in range(val_out.shape[0]):
        # concat image into a row
        if single_row.size == 0:
            single_row = preprocessed[b, :, :, :]
        else:
            single_row = np.concatenate((single_row, preprocessed[b, :, :, :]), axis=1)

        # concat image row to final_image
        if (b+1) % val_block_size == 0:
            if final_image.size == 0:
                final_image = single_row
            else:
                final_image = np.concatenate((final_image, single_row), axis=0)

            # reset single row
            single_row = np.array([])

    if final_image.shape[2] == 1:
        final_image = np.squeeze(final_image, axis=2)
    Image.fromarray(final_image).save(image_path)

def celoss_ones(logits):
    # Cross-entropy against label 1
    y = tf.ones_like(logits)
    loss = keras.losses.binary_crossentropy(y, logits, from_logits=True)
    return tf.reduce_mean(loss)


def celoss_zeros(logits):
    # Cross-entropy against label 0
    y = tf.zeros_like(logits)
    loss = keras.losses.binary_crossentropy(y, logits, from_logits=True)
    # Reduce to a scalar, as celoss_ones does, so the two terms can be added and printed
    return tf.reduce_mean(loss)


def d_loss_fn(generator, discriminator, batch_z, batch_x, training):
    # Loss function of the discriminator
    # Generate images from the sampled latent vectors
    fake_image = generator(batch_z, training)
    # Score the generated images
    d_fake_logits = discriminator(fake_image, training)
    # Score the real images
    d_real_logits = discriminator(batch_x, training)
    # Error between real images and label 1
    d_loss_real = celoss_ones(d_real_logits)
    # Error between generated images and label 0
    d_loss_fake = celoss_zeros(d_fake_logits)
    # Combine the two errors
    loss = d_loss_fake + d_loss_real
    return loss


def g_loss_fn(generator, discriminator, batch_z, training):
    # Generate images from the sampled latent vectors
    fake_image = generator(batch_z, training)
    # When training the generator, the generated images must be pushed toward being judged real
    d_fake_logits = discriminator(fake_image, training)
    # Error between generated images and label 1
    loss = celoss_ones(d_fake_logits)
    return loss

def main():
    tf.random.set_seed(100)
    np.random.seed(100)
    os.environ['TF_CPP_MIN_LOG_LEVEL'] = '2'
    assert tf.__version__.startswith('2.')

    z_dim = 100  # length of the latent vector
    epochs = 300000  # number of training iterations
    batch_size = 64
    learning_rate = 0.0002
    training = True

    # Collect the image paths
    img_path = glob.glob(r'E:\Tensorflow\tensorflowstudy\GAN\anime-faces\*.jpg') + \
        glob.glob(r'E:\Tensorflow\tensorflowstudy\GAN\anime-faces\*.png')
    print('images num:', len(img_path))
    # Build the dataset object
    dataset, img_shape, _ = make_anime_dataset(img_path, batch_size, resize=64)
    print(dataset, img_shape)
    sample = next(iter(dataset))  # draw one batch
    print(sample.shape, tf.reduce_max(sample).numpy(), tf.reduce_min(sample).numpy())
    dataset = dataset.repeat(100)  # repeat 100 times
    db_iter = iter(dataset)

    generator = Generator()  # create the generator
    generator.build(input_shape=(4, z_dim))
    discriminator = Discriminator()  # create the discriminator
    discriminator.build(input_shape=(4, 64, 64, 3))
    # Create one optimizer each for the generator and the discriminator
    g_optimizer = keras.optimizers.Adam(learning_rate=learning_rate, beta_1=0.5)
    d_optimizer = keras.optimizers.Adam(learning_rate=learning_rate, beta_1=0.5)

    # generator.load_weights('generator.ckpt')
    # discriminator.load_weights('discriminator')
    # print('Loaded chpt!')

    # Make sure the output directory exists before images are saved into it
    os.makedirs('gan_images', exist_ok=True)

    d_losses, g_losses = [], []
    for epoch in range(epochs):  # train for `epochs` iterations
        # Train the discriminator
        for _ in range(5):
            # Sample latent vectors
            batch_z = tf.random.normal([batch_size, z_dim])
            batch_x = next(db_iter)  # sample real images
            # Discriminator forward pass
            with tf.GradientTape() as tape:
                d_loss = d_loss_fn(generator, discriminator, batch_z, batch_x, training)
            grads = tape.gradient(d_loss, discriminator.trainable_variables)
            d_optimizer.apply_gradients(zip(grads, discriminator.trainable_variables))
        # Sample latent vectors
        batch_z = tf.random.normal([batch_size, z_dim])
        batch_x = next(db_iter)  # sample real images
        # Generator forward pass
        with tf.GradientTape() as tape:
            g_loss = g_loss_fn(generator, discriminator, batch_z, training)
        grads = tape.gradient(g_loss, generator.trainable_variables)
        g_optimizer.apply_gradients(zip(grads, generator.trainable_variables))

        if epoch % 100 == 0:
            print(epoch, 'd-loss:', float(d_loss), 'g-loss:', float(g_loss))
            # Visualization
            z = tf.random.normal([100, z_dim])
            fake_image = generator(z, training=False)
            img_path = os.path.join('gan_images', 'gan-%d.png' % epoch)
            save_result(fake_image.numpy(), 10, img_path, color_mode='P')

            # d_losses.append(float(d_loss))
            # g_losses.append(float(g_loss))

        # if epoch % 10000 == 1:
        #     # print(d_losses)
        #     # print(g_losses)
        #     generator.save_weights('generator.ckpt')
        #     discriminator.save_weights('discriminator.ckpt')


if __name__ == '__main__':
    main()