NCC-Based Multi-Angle Template Matching with OpenCV

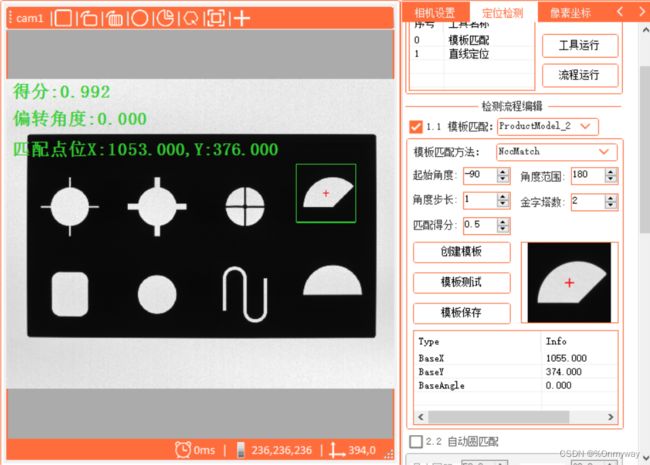

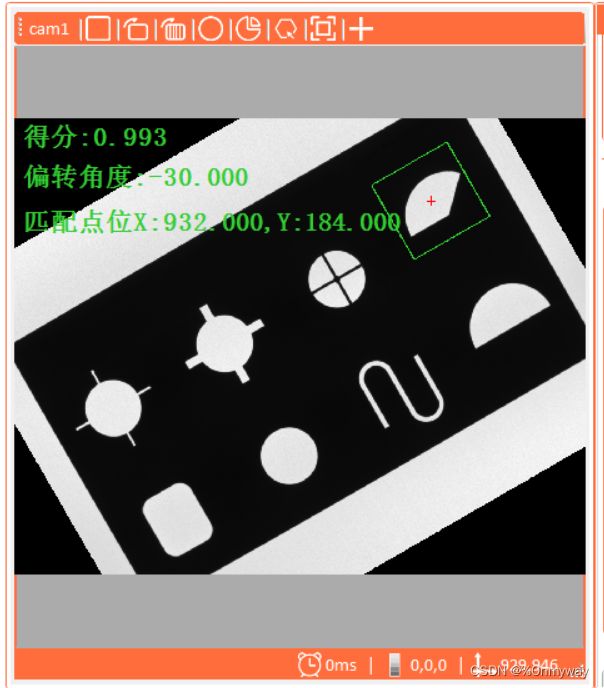

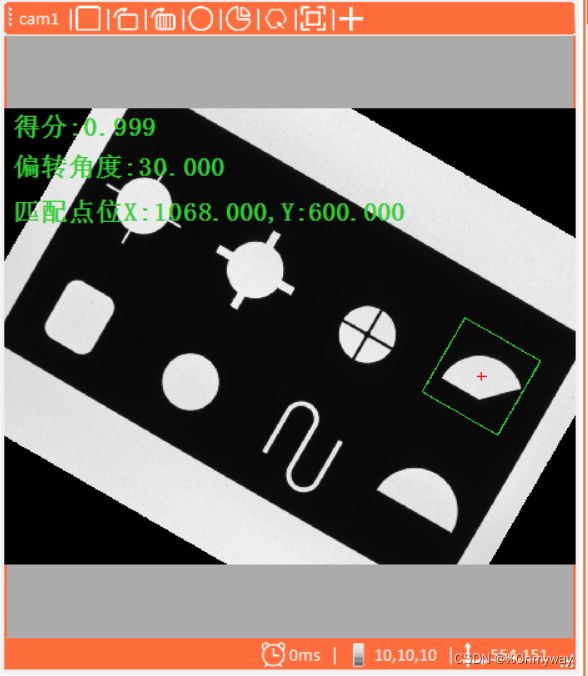

1. 废话不多说,先看测试效果图

a)模板原图:

b) 逆时针旋转30°:

c)顺时针旋转30°:

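2. Development walkthrough

a) To speed up the search, build an image pyramid. Keep the number of levels small, typically 2 to 3, depending on the actual image quality. Implementation: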

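    // Downsample both the template and the search image through the image pyramid
    for (int i = 0; i < numLevels; i++)
    {
        Cv2.PyrDown(src, src, new Size(src.Cols / 2, src.Rows / 2));
        Cv2.PyrDown(model, model, new Size(model.Cols / 2, model.Rows / 2));
    }

    // For speed, upsample straight back to the bottom (full-resolution) level.
    // Note: PyrUp only interpolates; detail removed by PyrDown is not restored.
    for (int i = 0; i < numLevels; i++)
    {
        Cv2.PyrUp(src, src, new Size(src.Cols * 2, src.Rows * 2));         // next level, 2x larger
        Cv2.PyrUp(model, model, new Size(model.Cols * 2, model.Rows * 2)); // next level, 2x larger
    }

b) Multi-angle matching runs the search in a loop, rotating the image by a fixed angle step each time. Either the live image or the template can be rotated. The template is rotated here for speed, and because the template image never changes. Implementation: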

    /// <summary>
    /// Rotates an image and computes the rotated rectangle that bounds the rotated image.
    /// </summary>
    // The signature and the statements up to mIndex are missing from the source listing.
    // They are reconstructed here (an assumption) from the call
    // ImageRotate(model, angle, ref rotatedRect, ref mask) and from the variables used below.
    // CVSize, CVRect and CVPoint are presumably aliases for OpenCvSharp.Size, Rect and Point.
    public static Mat ImageRotate(Mat image, double angle, ref RotatedRect imgBounding, ref Mat maskMat)
    {
        Mat newImg = new Mat();
        Point2f pt = new Point2f(image.Width / 2f, image.Height / 2f);
        Mat M = Cv2.GetRotationMatrix2D(pt, angle, 1.0);
        var mIndex = M.GetGenericIndexer<double>();

        // Size of the upright canvas that holds the whole rotated image
        double cos = Math.Abs(mIndex[0, 0]);
        double sin = Math.Abs(mIndex[0, 1]);
        int nW = (int)((image.Height * sin) + (image.Width * cos));
        int nH = (int)((image.Height * cos) + (image.Width * sin));

        // Shift the transform so the rotated image is centered on the new canvas
        mIndex[0, 2] += (nW / 2) - pt.X;
        mIndex[1, 2] += (nH / 2) - pt.Y;
        Cv2.WarpAffine(image, newImg, M, new CVSize(nW, nH));

        // Compute the rotated rectangle that bounds the image
        Rect rect = new CVRect(0, 0, image.Width, image.Height);
        Point2f[] srcPoint2Fs = new Point2f[4]
        {
            new Point2f(rect.Left, rect.Top),
            new Point2f(rect.Right, rect.Top),
            new Point2f(rect.Right, rect.Bottom),
            new Point2f(rect.Left, rect.Bottom)
        };
        Point2f[] boundaryPoints = new Point2f[4];
        var A = M.Get<double>(0, 0);
        var B = M.Get<double>(0, 1);
        var C = M.Get<double>(0, 2); // Tx
        var D = M.Get<double>(1, 0);
        var E = M.Get<double>(1, 1);
        var F = M.Get<double>(1, 2); // Ty

        // Transform the four corners and clamp them to the new canvas
        for (int i = 0; i < 4; i++)
        {
            boundaryPoints[i].X = (float)((A * srcPoint2Fs[i].X) + (B * srcPoint2Fs[i].Y) + C);
            boundaryPoints[i].Y = (float)((D * srcPoint2Fs[i].X) + (E * srcPoint2Fs[i].Y) + F);
            if (boundaryPoints[i].X < 0)
                boundaryPoints[i].X = 0;
            else if (boundaryPoints[i].X > nW)
                boundaryPoints[i].X = nW;
            if (boundaryPoints[i].Y < 0)
                boundaryPoints[i].Y = 0;
            else if (boundaryPoints[i].Y > nH)
                boundaryPoints[i].Y = nH;
        }

        // Center is the midpoint of one diagonal; side lengths come from adjacent corners
        Point2f cenP = new Point2f((boundaryPoints[0].X + boundaryPoints[2].X) / 2,
                                   (boundaryPoints[0].Y + boundaryPoints[2].Y) / 2);
        double ang = angle;
        double width1 = Math.Sqrt(Math.Pow(boundaryPoints[0].X - boundaryPoints[1].X, 2) +
                                  Math.Pow(boundaryPoints[0].Y - boundaryPoints[1].Y, 2));
        double width2 = Math.Sqrt(Math.Pow(boundaryPoints[0].X - boundaryPoints[3].X, 2) +
                                  Math.Pow(boundaryPoints[0].Y - boundaryPoints[3].Y, 2));
        // Note: RotatedRect measures its angle clockwise, while GetRotationMatrix2D treats
        // positive angles as counter-clockwise; check the sign if the mask looks mirrored.
        imgBounding = new RotatedRect(cenP, new Size2f(width1, width2), (float)ang);

        // Build the mask: draw the rotated rectangle outline, then flood-fill its interior
        Mat mask = new Mat(newImg.Size(), MatType.CV_8UC3, Scalar.Black);
        mask.DrawRotatedRect(imgBounding, Scalar.White, 1);
        Cv2.FloodFill(mask, new CVPoint(imgBounding.Center.X, imgBounding.Center.Y), Scalar.White);

        // Copy the mask to maskMat as a single-channel image
        Cv2.CvtColor(mask, maskMat, ColorConversionCodes.BGR2GRAY);

        // Keep only the pixels of the rotated image that lie inside the mask
        Mat dst = new Mat();
        Cv2.BitwiseAnd(newImg, newImg, dst, maskMat);
        return dst;
    }

c) Rotating the template produces invalid regions. If they take part in the match computation, the similarity score drops and matching suffers, so a mask image is added to exclude them. The code above also works out the invalid region left by the rotation and outputs the mask that excludes it.

    newtemplate = ImageRotate(model, start + step * i, ref rotatedRect, ref mask);
    // Skip rotations whose template no longer fits inside the search image
    if (newtemplate.Width > src.Width || newtemplate.Height > src.Height)
        continue;
    // Match using the mask so the invalid corners are ignored
    Cv2.MatchTemplate(src, newtemplate, result, matchMode, mask);
    Cv2.MinMaxLoc(result, out double minval, out double maxval, out CVPoint minloc, out CVPoint maxloc, new Mat());
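To see why the mask matters, here is a minimal sketch of a masked normalized correlation coefficient computed by hand on small arrays. The names `MaskedNcc` and `Score` are made up for illustration, and this is not how `Cv2.MatchTemplate` works internally; it only shows that pixels where the mask is zero are left out of the means and the sums.

```csharp
using System;

public static class MaskedNcc
{
    // Normalized correlation coefficient between an image patch and a template of the
    // same size, using only the pixels whose mask value is non-zero.
    // (Hypothetical helper for illustration, not part of OpenCvSharp.)
    public static double Score(double[] patch, double[] templ, byte[] mask)
    {
        if (patch.Length != templ.Length || patch.Length != mask.Length)
            throw new ArgumentException("patch, templ and mask must have the same length");

        // Means over the valid (masked-in) pixels only
        int n = 0;
        double meanP = 0, meanT = 0;
        for (int i = 0; i < mask.Length; i++)
        {
            if (mask[i] == 0) continue;
            meanP += patch[i];
            meanT += templ[i];
            n++;
        }
        if (n == 0) return 0;
        meanP /= n;
        meanT /= n;

        // Cross term and energies of the mean-subtracted values
        double cross = 0, energyP = 0, energyT = 0;
        for (int i = 0; i < mask.Length; i++)
        {
            if (mask[i] == 0) continue;
            double dp = patch[i] - meanP;
            double dt = templ[i] - meanT;
            cross += dp * dt;
            energyP += dp * dp;
            energyT += dt * dt;
        }

        double denom = Math.Sqrt(energyP * energyT);
        return denom > 0 ? cross / denom : 0; // flat regions have no defined correlation
    }
}
```

For example, take a template `{10, 20, 30, 0, 0}` whose last two pixels are black filler from the rotation, and an image patch `{10, 20, 30, 200, 180}`. With the mask `{1, 1, 1, 0, 0}` the score is 1, because the valid pixels agree exactly. With an all-ones mask the filler pixels are compared against real image content and the score turns negative.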

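d) The angle step follows from the required precision and becomes finer as the search moves back up to full resolution. If the bottom (full-resolution) level needs a step of 1°, the step can grow exponentially with each downsampling level, since in practice the whole search still has to finish quickly. The upsampling pass then refines the result level by level, in both position and angle. The new search region is centered on the point found at the previous level and extends one template width to the left and right and one template height up and down.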
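The two rules in this step can be sketched as small helpers. This is only an illustration under stated assumptions: the names `CoarseToFine`, `AngleStepAtLevel` and `RefineWindow` are hypothetical, level 0 is taken to be the full-resolution image, and the step is assumed to double at each coarser level.

```csharp
using System;

public static class CoarseToFine
{
    // Angle step at a pyramid level, where level 0 is full resolution.
    // Assumption: the step doubles with every coarser level.
    public static double AngleStepAtLevel(double finestStep, int level)
    {
        if (level < 0) throw new ArgumentOutOfRangeException(nameof(level));
        return finestStep * Math.Pow(2, level);
    }

    // Search window at the finer level: centered on the projected match center,
    // one template width to each side and one template height up and down,
    // clipped to the image bounds.
    public static (int X, int Y, int Width, int Height) RefineWindow(
        int centerX, int centerY, int templWidth, int templHeight,
        int imageWidth, int imageHeight)
    {
        int x0 = Math.Max(0, centerX - templWidth);
        int y0 = Math.Max(0, centerY - templHeight);
        int x1 = Math.Min(imageWidth, centerX + templWidth);
        int y1 = Math.Min(imageHeight, centerY + templHeight);
        return (x0, y0, Math.Max(0, x1 - x0), Math.Max(0, y1 - y0));
    }
}
```

With a 1° step at the bottom level, `AngleStepAtLevel(1, 2)` gives a 4° step two levels up. For a 30 x 20 template matched around (50, 40) in a 640 x 480 image, `RefineWindow(50, 40, 30, 20, 640, 480)` returns the window (20, 20, 60, 40); near the image border the window is simply clipped.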

    // Start upsampling
    Rect cropRegion = new CVRect(0, 0, 0, 0);
    //for (int j = numLevels - 1; j >= 0; j--)
    {
        // For speed, upsample straight to the bottom level
        for (int i = 0; i < numLevels; i++)
        {
            Cv2.PyrUp(src, src, new Size(src.Cols * 2, src.Rows * 2));         // next level, 2x larger
            Cv2.PyrUp(model, model, new Size(model.Cols * 2, model.Rows * 2)); // next level, 2x larger
        }

        // Scale the coarse match position and the template rectangle by 2^numLevels
        location.X *= (int)Math.Pow(2, numLevels);
        location.Y *= (int)Math.Pow(2, numLevels);
        modelrrect = new RotatedRect(
            new Point2f((float)(modelrrect.Center.X * Math.Pow(2, numLevels)),
                        (float)(modelrrect.Center.Y * Math.Pow(2, numLevels))),
            new Size2f(modelrrect.Size.Width * Math.Pow(2, numLevels),
                       modelrrect.Size.Height * Math.Pow(2, numLevels)), 0);

        // Center of the match, projected onto the bottom level
        CVPoint cenP = new CVPoint(location.X + modelrrect.Center.X,
                                   location.Y + modelrrect.Center.Y);

        // Search region: one template width to each side, one template height up and down
        int startX = cenP.X - model.Width;
        int startY = cenP.Y - model.Height;
        int endX = cenP.X + model.Width;
        int endY = cenP.Y + model.Height;
        cropRegion = new CVRect(startX, startY, endX - startX, endY - startY);
        cropRegion = cropRegion.Intersect(new CVRect(0, 0, src.Width, src.Height));
        Mat newSrc = MatExtension.Crop_Mask_Mat(src, cropRegion);

        // Each level down the pyramid halves the angle step; at the bottom level the step is 1
        step = 1;
        // Start angle and range: search 10 degrees either side of the coarse angle
        range = 20;
        start = angle - 10;
        bool testFlag = false;
        for (int k = 0; k <= (int)range / step; k++)
        {
            newtemplate = ImageRotate(model, start + step * k, ref rotatedRect, ref mask);
            if (newtemplate.Width > newSrc.Width || newtemplate.Height > newSrc.Height)
                continue;
            // Note: unlike the coarse search, no mask is passed here
            Cv2.MatchTemplate(newSrc, newtemplate, result, TemplateMatchModes.CCoeffNormed);
            Cv2.MinMaxLoc(result, out double minval, out double maxval,
                out CVPoint minloc, out CVPoint maxloc, new Mat());
            if (maxval > temp)
            {
                // Local coordinates (relative to the cropped region)
                location.X = maxloc.X;
                location.Y = maxloc.Y;
                temp = maxval;
                angle = start + step * k;
                // Local coordinates
                modelrrect = rotatedRect;
                testFlag = true;
            }
        }
        if (testFlag)
        {
            // Local coordinates -> global coordinates
            location.X += cropRegion.X;
            location.Y += cropRegion.Y;
        }
    }

e) Finally, draw the results as an overlay on the image.

Reference code:

    if (result.Score <= 0)
    {
        currvisiontool.DrawText(new TextEx("Template matching failed!") { x = 1000, y = 10, brush = new SolidBrush(Color.Red) });
        currvisiontool.AddTextBuffer(new TextEx("Template matching failed!") { x = 1000, y = 10, brush = new SolidBrush(Color.Red) });
        stuModelMatchData.runFlag = false;
        return;
    }

    // Score, rotation angle and match position
    currvisiontool.DrawText(new TextEx("Score: " + result.Score.ToString("f3")));
    currvisiontool.AddTextBuffer(new TextEx("Score: " + result.Score.ToString("f3")));
    currvisiontool.DrawText(new TextEx("Rotation angle: " + result.T.ToString("f3")) { x = 10, y = 100 });
    currvisiontool.AddTextBuffer(new TextEx("Rotation angle: " + result.T.ToString("f3")) { x = 10, y = 100 });
    currvisiontool.DrawText(new TextEx(string.Format("Match point X:{0},Y:{1}", result.X.ToString("f3"),
        result.Y.ToString("f3")))
        { x = 10, y = 200 });
    currvisiontool.AddTextBuffer(new TextEx(string.Format("Match point X:{0},Y:{1}", result.X.ToString("f3"),
        result.Y.ToString("f3")))
        { x = 10, y = 200 });

3. Step-by-step result images

a) Create the template: choose an image region with stable features and clear contrast against the background.

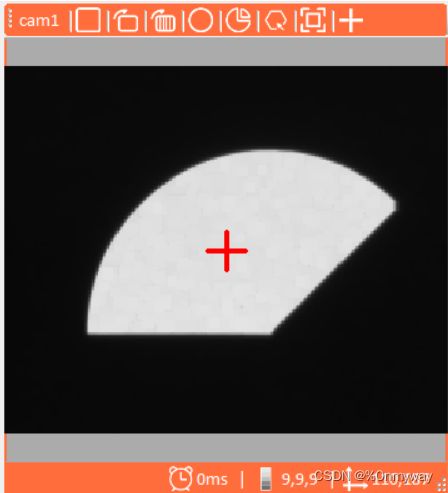

b) The template image after rotation

c) The mask image corresponding to the rotation
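The size of the rotated template image in b) follows directly from the rotation angle. As a rough sketch, the helper below repeats the `nW`/`nH` arithmetic from `ImageRotate` without any OpenCV dependency; the names `RotatedCanvas` and `Size` are hypothetical, and the result is truncated to an integer in the same way as the code in step 2 b).

```csharp
using System;

public static class RotatedCanvas
{
    // Width and height of the upright canvas that holds a width x height image
    // rotated by angleDegrees about its center. Truncates to int, mirroring the
    // nW/nH computation in ImageRotate. (Hypothetical helper for illustration.)
    public static (int Width, int Height) Size(int width, int height, double angleDegrees)
    {
        double rad = angleDegrees * Math.PI / 180.0;
        double cos = Math.Abs(Math.Cos(rad));
        double sin = Math.Abs(Math.Sin(rad));
        int newWidth = (int)(height * sin + width * cos);
        int newHeight = (int)(height * cos + width * sin);
        return (newWidth, newHeight);
    }
}
```

A 200 x 100 template rotated by 30° needs a 223 x 186 canvas. The extra area around the rotated content is the invalid region that the mask in c) blanks out.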

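4. The core code for NCC multi-angle template matching is as follows:

    /// Creates the NCC template.

    /// Multi-angle template matching (NCC: normalized correlation coefficient).
    /// Parameters, in order:
    ///   image to search
    ///   template image
    ///   start angle
    ///   angle range
    ///   angle step
    ///   number of pyramid levels
    ///   score threshold

5. Summary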

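That basically completes the whole process. There is still room for optimization, for example handling overlapping matches, sub-pixel accuracy, and speeding up the search further on top of the current pyramid. Anyone interested is welcome to discuss these later. C# material on this topic is scarce online, and this work also drew on C++ and Python material from more experienced developers. I hope to end up with a solid C# solution.

P.S. Learning is like rowing upstream: if you do not advance, you fall back.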