Layer Norm

Reference: "ConvNeXt中的Layer Normalization(LN)" (Layer Normalization in ConvNeXt), an article by 海斌 on Zhihu

https://zhuanlan.zhihu.com/p/481901798

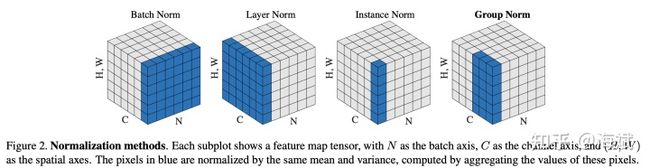

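Layer Norm originally normalizes each sample against its own statistics, as shown in the figure:

That is, normalization is performed over the [C, H, W] dimensions.

ConvNeXt does it like this instead:

That is, normalization is performed over the C dimension: all channels of a single pixel are normalized together.

The two differ in how they are implemented.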
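To make the difference concrete, here is a minimal sketch that computes both sets of statistics by hand. The tensor sizes and variable names are assumptions chosen only for illustration; the point is the shape of the mean in each case.

```python
import torch

torch.manual_seed(0)
x = torch.randn(2, 4, 3, 3)  # (N, C, H, W); sizes are arbitrary illustration values
eps = 1e-5

# Classic layer norm: one mean/variance per sample, taken over (C, H, W).
mean_sample = x.mean(dim=(1, 2, 3), keepdim=True)  # shape (2, 1, 1, 1)
var_sample = x.var(dim=(1, 2, 3), unbiased=False, keepdim=True)
y_sample = (x - mean_sample) / torch.sqrt(var_sample + eps)

# ConvNeXt-style: one mean/variance per pixel, taken over C only.
mean_pixel = x.mean(dim=1, keepdim=True)  # shape (2, 1, 3, 3)
var_pixel = x.var(dim=1, unbiased=False, keepdim=True)
y_pixel = (x - mean_pixel) / torch.sqrt(var_pixel + eps)

print(mean_sample.shape, mean_pixel.shape)
```

The classic variant keeps one statistic per sample, while the ConvNeXt variant keeps one per spatial position.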

This can be implemented with either F.layer_norm or nn.LayerNorm; F.layer_norm is used here.

Let's look at the ConvNeXt implementation:

    import torch.nn as nn
    import torch.nn.functional as F


    class LayerNorm2d(nn.LayerNorm):
        """LayerNorm on channels for 2d images.

        Args:
            num_channels (int): The number of channels of the input tensor.
            eps (float): a value added to the denominator for numerical stability.
                Defaults to 1e-5.
            elementwise_affine (bool): a boolean value that when set to ``True``,
                this module has learnable per-element affine parameters initialized
                to ones (for weights) and zeros (for biases). Defaults to True.
        """

        def __init__(self, num_channels: int, **kwargs) -> None:
            super().__init__(num_channels, **kwargs)
            self.num_channels = self.normalized_shape[0]

        def forward(self, x):
            assert x.dim() == 4, 'LayerNorm2d only supports inputs with shape ' \
                f'(N, C, H, W), but got tensor with shape {x.shape}'
            # Move channels last, normalize over them, then restore (N, C, H, W).
            return F.layer_norm(
                x.permute(0, 2, 3, 1), self.normalized_shape, self.weight,
                self.bias, self.eps).permute(0, 3, 1, 2)

LayerNorm2d inherits from nn.LayerNorm and calls the parent's __init__ during initialization. So what does the parent class do?

    def __init__(self, normalized_shape: _shape_t, eps: float = 1e-5, elementwise_affine: bool = True,
                 device=None, dtype=None) -> None:
        ***
        self.normalized_shape = tuple(normalized_shape)

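For our purposes, what matters here is that num_channels, the channel count, is stored in self.normalized_shape as a tuple. An integer argument is first wrapped into a one-element tuple, so normalized_shape becomes (num_channels,). When elementwise_affine is True, the parent also creates the weight and bias parameters with that same shape.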
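A quick way to confirm what the parent constructor stores is to instantiate nn.LayerNorm with an integer and inspect its attributes. This is a small sketch; the value 96 is an arbitrary example channel count.

```python
import torch
import torch.nn as nn

ln = nn.LayerNorm(96)  # 96 is an arbitrary example channel count

# The integer argument ends up stored as a one-element tuple.
print(ln.normalized_shape)

# With the default elementwise_affine=True, weight and bias have the same shape.
print(ln.weight.shape, ln.bias.shape)
```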

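So the key part is the F.layer_norm call in forward.

Let's look at the arguments passed in.

The first argument is the permuted x: (N, C, H, W) is permuted to (N, H, W, C), which puts the channels in the last dimension before F.layer_norm is applied. normalized_shape is the num_channels passed to __init__ earlier, stored as the one-element tuple (num_channels,). The result is then permuted back to (N, C, H, W).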
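As a sanity check on the permute-based forward, the sketch below compares it with a permute-free formulation that computes the per-pixel statistics directly over dim 1. The helper name `channel_norm_reference` is hypothetical, and the affine parameters are randomized only so that the comparison is not trivially satisfied.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class LayerNorm2d(nn.LayerNorm):
    """Same permute-based forward as above, repeated so the snippet runs alone."""

    def forward(self, x):
        return F.layer_norm(
            x.permute(0, 2, 3, 1), self.normalized_shape, self.weight,
            self.bias, self.eps).permute(0, 3, 1, 2)


def channel_norm_reference(x, weight, bias, eps):
    """Hypothetical helper: per-pixel normalization over C without permuting."""
    mean = x.mean(dim=1, keepdim=True)
    var = (x - mean).pow(2).mean(dim=1, keepdim=True)  # biased variance
    x_hat = (x - mean) / torch.sqrt(var + eps)
    # Broadcast the (C,)-shaped parameters over H and W.
    return weight[:, None, None] * x_hat + bias[:, None, None]


torch.manual_seed(0)
norm = LayerNorm2d(16)
# Give the affine parameters non-trivial values so the check is meaningful.
with torch.no_grad():
    norm.weight.uniform_(0.5, 1.5)
    norm.bias.uniform_(-0.5, 0.5)

x = torch.randn(2, 16, 5, 5)
y = norm(x)
y_ref = channel_norm_reference(x, norm.weight, norm.bias, norm.eps)
print(torch.allclose(y, y_ref, atol=1e-5))
```

The two permutes are only there to present the channel dimension last; the arithmetic is the same as normalizing over dim 1 directly.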

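Next, let's look at how F.layer_norm is used:

It is essentially the same as nn.LayerNorm, except that nothing is instantiated beforehand, so all the parameters have to be passed in together at call time.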
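The correspondence between the two forms can be sketched as follows. The input shape is an assumption for illustration, with the channels already in the last dimension.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

torch.manual_seed(0)
x = torch.randn(2, 8, 8, 96)  # channels already in the last dimension

# Module form: shape, eps and parameters are stored at construction time.
ln = nn.LayerNorm(96, eps=1e-5)
y_module = ln(x)

# Functional form: the same pieces are passed explicitly on every call.
y_functional = F.layer_norm(x, (96,), ln.weight, ln.bias, 1e-5)

print(torch.allclose(y_module, y_functional))
```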

For nn.LayerNorm, the documentation says:

    args:
        normalized_shape (int or list or torch.Size): input shape from an expected input of size
            If a single integer is used, it is treated as a singleton list, and this module will
            normalize over the last dimension which is expected to be of that specific size.
        eps: a value added to the denominator for numerical stability. Default: 1e-5

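In other words, normalized_shape can be a single number or a list. If it is a single number, normalization is done over the last dimension, and the number must equal the size of that dimension. If it is a list, it must match the sizes of the trailing dimensions of the input, in order.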

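e.g.

a.shape = (100, 96, 8, 8)  # i.e. 100 feature maps, each with 96 channels of size 8x8

For an ordinary layer norm, normalized_shape=[96, 8, 8].

If this argument is 8, normalization is done over the last dimension only (W).

To normalize over the channels at every spatial position, as ConvNeXt does,

first permute so that the channels are in the last dimension, then set normalized_shape=num_channels.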
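The three cases above can be tried out directly. This is a sketch using random data of the stated shape; the variable names are made up for illustration, and the default affine parameters (ones and zeros) leave the normalized values unchanged.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
a = torch.randn(100, 96, 8, 8)  # (N, C, H, W)

# Ordinary layer norm: statistics over the last three dims (C, H, W).
y_sample = nn.LayerNorm([96, 8, 8])(a)

# A single integer 8 matches only the last dim, so each row of 8 values
# along W is normalized on its own.
y_width = nn.LayerNorm(8)(a)

# ConvNeXt style: move C last, normalize over it, then move it back.
y_channel = nn.LayerNorm(96)(a.permute(0, 2, 3, 1)).permute(0, 3, 1, 2)

# Each output should have (near) zero mean along the axes it was normalized over.
print(y_sample.mean(dim=(1, 2, 3)).abs().max())
print(y_width.mean(dim=-1).abs().max())
print(y_channel.mean(dim=1).abs().max())
```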

So how can ConvNeXt's layer norm be turned into an ordinary layer norm?

Start from the ConvNeXt version:

    class LayerNorm2d(nn.LayerNorm):

        def __init__(self, num_channels: int, **kwargs) -> None:
            super().__init__(num_channels, **kwargs)
            self.num_channels = self.normalized_shape[0]

        def forward(self, x):
            return F.layer_norm(
                x.permute(0, 2, 3, 1), self.normalized_shape, self.weight,
                self.bias, self.eps).permute(0, 3, 1, 2)

In fact only forward needs to change: skip the permute, and pass the last three dimensions of x as normalized_shape.

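    class LayerNorm2d(nn.LayerNorm):

        def __init__(self, num_channels: int, **kwargs) -> None:
            super().__init__(num_channels, **kwargs)
            self.num_channels = self.normalized_shape[0]

        def forward(self, x):
            # Normalize each sample over (C, H, W), without permuting.
            # F.layer_norm requires weight/bias to have the same shape as
            # normalized_shape, so the (C,)-shaped parameters cannot be passed
            # here; they are applied afterwards, broadcast over H and W.
            x = F.layer_norm(x, x.shape[1:], None, None, self.eps)
            if self.weight is not None:
                x = x * self.weight[:, None, None]
            if self.bias is not None:
                x = x + self.bias[:, None, None]
            return x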
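One way to check a whole-sample layer norm over (C, H, W) is to compare it with nn.GroupNorm using a single group, which normalizes over the same axes. The sketch below also illustrates that F.layer_norm rejects a weight whose shape differs from normalized_shape. The tensor sizes and the flag name `mismatch_rejected` are assumptions for illustration.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

torch.manual_seed(0)
N, C, H, W = 2, 6, 4, 4
x = torch.randn(N, C, H, W)

# Whole-sample normalization over (C, H, W) with no affine parameters.
y_ln = F.layer_norm(x, x.shape[1:], eps=1e-5)

# GroupNorm with a single group also normalizes over (C, H, W); its default
# affine parameters are weight=1 and bias=0, so the outputs should agree.
gn = nn.GroupNorm(num_groups=1, num_channels=C, eps=1e-5)
y_gn = gn(x)
print(torch.allclose(y_ln, y_gn, atol=1e-5))

# A (C,)-shaped weight does not match normalized_shape=(C, H, W).
try:
    F.layer_norm(x, x.shape[1:], torch.ones(C), torch.zeros(C))
    mismatch_rejected = False
except RuntimeError:
    mismatch_rejected = True
print(mismatch_rejected)
```

If a learnable scale and shift are wanted in this setting, they need either the full (C, H, W) shape or a per-channel form applied separately after normalization.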