[Paper Reading] Revisiting Self-Supervised Visual Representation Learning

0. Preface

Unlike other papers that design a novel SSL pretext task, this paper is mainly an experimental study of how the network architecture affects the quality of the representation learned through an SSL pretext task.

1. Conclusions

- Architecture choices that negligibly affect performance in the fully labeled setting may significantly affect performance in the self-supervised setting.

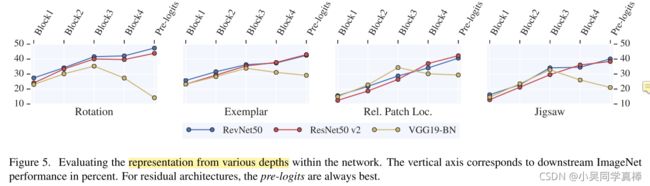

- In contrast to previous observations with the AlexNet architecture [11, 48, 31], the quality of learned representations in CNN architectures with skip-connections does not degrade towards the end of the model.

- Increasing the number of filters in a CNN model and, consequently, the size of the representation significantly and consistently increases the quality of the learned visual representations.

- The evaluation procedure, where a linear model is trained on a fixed visual representation using stochastic gradient descent, is sensitive to the learning rate schedule and may take many epochs to converge.

2. Experiments

CNN architectures: ResNet, RevNet, VGG

SSL pretext tasks: Rotation [11], Exemplar [9], Jigsaw [31], Relative Patch Location [7]

Datasets: ImageNet, Places205
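Of the pretext tasks listed for this study, Rotation [11] is the simplest to state. Each image is rotated by 0°, 90°, 180° or 270°, and the network is trained to classify which rotation was applied. The following is a minimal NumPy sketch of the data side only. It assumes a batch of square images in (N, H, W, C) layout. The function name `make_rotation_batch` is hypothetical and does not come from the paper's code.

```python
import numpy as np

def make_rotation_batch(images: np.ndarray) -> tuple[np.ndarray, np.ndarray]:
    """Build the Rotation pretext-task batch (hypothetical helper).

    images: array of shape (N, H, W, C) with H == W, so all rotations share one shape.
    Returns (4N, H, W, C) rotated images and (4N,) labels in {0, 1, 2, 3},
    where label k means the image was rotated by k * 90 degrees.
    """
    rotated, labels = [], []
    for k in range(4):
        # Rotate every image in the batch over the spatial axes (H, W).
        rotated.append(np.rot90(images, k=k, axes=(1, 2)))
        labels.append(np.full(len(images), k, dtype=np.int64))
    return np.concatenate(rotated, axis=0), np.concatenate(labels, axis=0)
```

A classifier trained on these (image, label) pairs never sees human annotations. The representation it learns is what the linear evaluation later measures.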

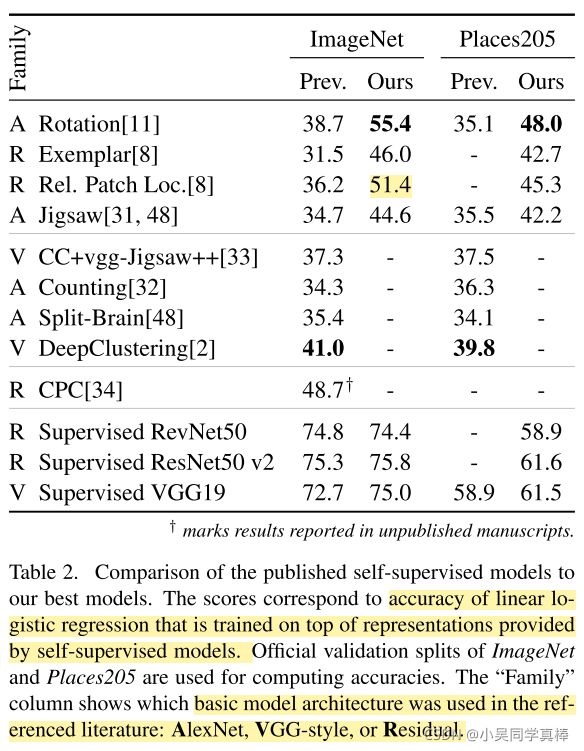

Observation 1: Similar models often yield visual representations with significantly different performance. Importantly, neither is the ranking of architectures consistent across different methods, nor is the ranking of methods consistent across architectures.

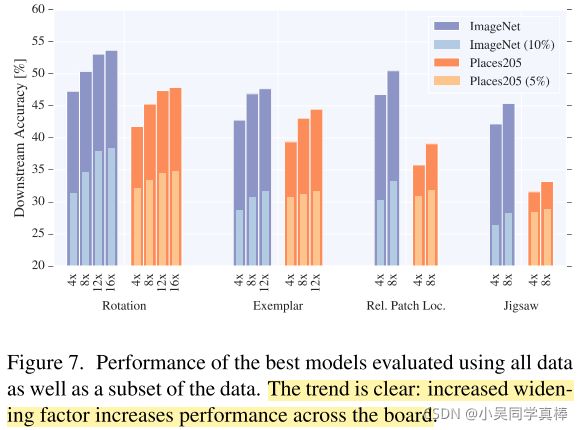

Observation 2: Increasing the number of channels in CNN models improves the performance of self-supervised models.

Observation 3: The ranking of models evaluated on Places205 is consistent with the ranking of models evaluated on ImageNet, indicating that our findings generalize to new datasets.

Observation 4: By selecting the right architecture for each self-supervision method and increasing the widening factor, our models significantly outperform previously reported results. These results reinforce our main insight: in self-supervised learning, the choice of architecture matters as much as the choice of pretext task.

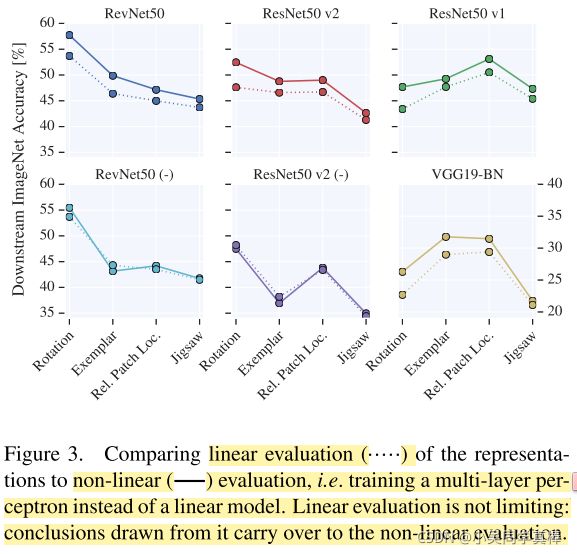

Observation 5: The MLP provides only a marginal improvement over the linear evaluation, and the relative performance of the various settings is mostly unchanged. We therefore conclude that the linear model is adequate for evaluation purposes.
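The following minimal NumPy sketch shows the two evaluation heads being compared. It assumes the representation has already been extracted from the frozen pretrained network as a feature matrix, and only the head placed on top of those fixed features differs between the two protocols. The helper names and all sizes (including the hidden width) are hypothetical illustration choices and do not reflect the paper's configuration.

```python
import numpy as np

def linear_head(feats, W, b):
    # Linear evaluation: logits are an affine map of the frozen features.
    return feats @ W + b

def mlp_head(feats, W1, b1, W2, b2):
    # MLP evaluation: one hidden ReLU layer between the frozen features and the logits.
    hidden = np.maximum(feats @ W1 + b1, 0.0)
    return hidden @ W2 + b2

rng = np.random.default_rng(0)
feat_dim, hidden_dim, num_classes = 2048, 1000, 10  # hypothetical sizes
# Random stand-in for features produced by a frozen, self-supervised network.
feats = rng.normal(size=(4, feat_dim))

lin_logits = linear_head(
    feats, rng.normal(size=(feat_dim, num_classes)) * 0.01, np.zeros(num_classes)
)
mlp_logits = mlp_head(
    feats,
    rng.normal(size=(feat_dim, hidden_dim)) * 0.01, np.zeros(hidden_dim),
    rng.normal(size=(hidden_dim, num_classes)) * 0.01, np.zeros(num_classes),
)
```

Because the backbone is never updated in either protocol, a small gap between the two heads suggests the information is already linearly accessible in the features.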

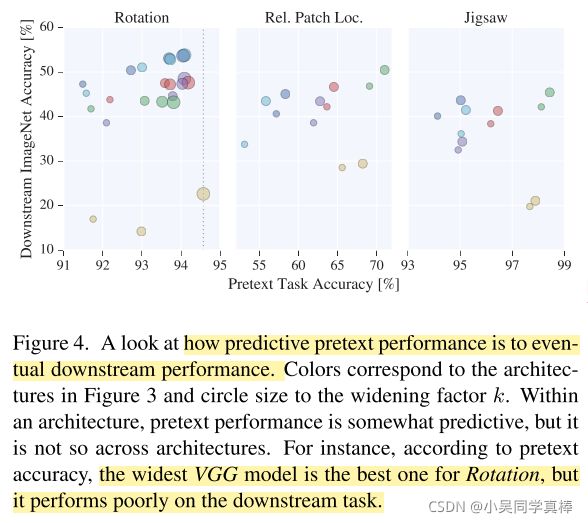

Observation 6: Better performance on the pretext task does not always translate into better representations.

Observation 7: Skip-connections prevent degradation of representation quality towards the end of CNNs. Similar to prior observations [11, 48, 31] for AlexNet [25], the quality of representations in VGG19-BN deteriorates towards the end of the network. We believe this happens because the models specialize to the pretext task in the later layers and, consequently, discard more general semantic features present in the middle layers.
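A skip connection adds a block's input back to the output of its layers, so the block computes y = x + F(x). The toy NumPy sketch below replaces convolutions with dense matrices for brevity, which is an assumption. It only illustrates the structural difference between a residual block and a plain VGG-style block and does not by itself reproduce the paper's finding. Both function names are hypothetical.

```python
import numpy as np

def residual_block(x, W1, W2):
    # Residual branch F(x): linear map, ReLU, linear map (toy stand-in for conv layers).
    f = np.maximum(x @ W1, 0.0) @ W2
    # Skip connection: the input is added back unchanged, y = x + F(x).
    return x + f

def plain_block(x, W1, W2):
    # Same layers without the shortcut, as in VGG-style networks: y = F(x).
    return np.maximum(x @ W1, 0.0) @ W2
```

If the residual branch contributes nothing (for example, all-zero weights), the residual block passes its input through unchanged, while the plain block discards it. In a deep residual network, the input of every block therefore remains directly available to later layers.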

Observation 8: In essence, performance can be increased by increasing either model capacity or representation size, but increasing both jointly helps most. In addition, increasing the widening factor consistently boosts performance in both the full-data and low-data regimes.
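The widening factor k multiplies the channel count of every layer, so it raises capacity and representation size at the same time. The following back-of-the-envelope sketch assumes a ResNet-50-style layout: stage widths 64/128/256/512 and a bottleneck expansion of 4, which gives a 2048-dimensional final representation at k = 1. Under that assumption, the representation size grows linearly in k. Each convolution's weight count, in · out · kh · kw, grows as k² because both its input and output channels are widened. The helper names are hypothetical.

```python
def widened_resnet50(k: int) -> dict:
    """Channel layout of a ResNet-50-style network widened by factor k (assumed layout)."""
    base_stage_widths = [64, 128, 256, 512]  # bottleneck widths of the four stages at k = 1
    expansion = 4  # bottleneck blocks output 4x their inner width
    stage_widths = [w * k for w in base_stage_widths]
    return {
        "stage_widths": stage_widths,
        # Final-stage output channels, i.e. the pooled representation size.
        "representation_size": stage_widths[-1] * expansion,
    }

def conv_weight_count(in_ch: int, out_ch: int, kernel: int = 3) -> int:
    # Number of weights in a kernel x kernel convolution (bias ignored).
    return in_ch * out_ch * kernel * kernel
```

For example, going from k = 1 to k = 4 quadruples the representation (2048 to 8192) but multiplies the weights of a widened 3×3 convolution by 16.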

Observation 9: The SGD optimization schedule matters when training logistic regression for downstream tasks. Our initial experiments suggest that the timing of the first learning-rate decay has a large influence on the final accuracy. Surprisingly, we observe that very long training (≈ 500 epochs) results in higher accuracy. We therefore conclude that SGD optimization hyperparameters play an important role and need to be reported.
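The following minimal sketch shows this linear evaluation setup: multinomial logistic regression on fixed features, trained with minibatch SGD under a step learning-rate schedule. In the sketch, `decay_epochs` controls when each decay happens and `epochs` sets the total training length. These are the knobs this observation says must be tuned and reported. NumPy, the function names, the 0.1 decay factor and the batch size are all assumptions for illustration, not the paper's exact setup.

```python
import numpy as np

def softmax(z):
    # Subtract the row max for numerical stability before exponentiating.
    z = z - z.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

def step_lr(epoch, base_lr, decay_epochs, factor=0.1):
    # Multiply base_lr by `factor` once for every decay epoch already reached.
    n_decays = sum(epoch >= d for d in decay_epochs)
    return base_lr * factor ** n_decays

def train_logreg_sgd(X, y, num_classes, epochs, base_lr, decay_epochs,
                     batch_size=64, seed=0):
    """Logistic regression on frozen features X (n, d) with minibatch SGD (hypothetical helper)."""
    rng = np.random.default_rng(seed)
    n, d = X.shape
    W = np.zeros((d, num_classes))
    b = np.zeros(num_classes)
    onehot = np.eye(num_classes)[y]
    for epoch in range(epochs):
        lr = step_lr(epoch, base_lr, decay_epochs)
        perm = rng.permutation(n)  # reshuffle every epoch
        for start in range(0, n, batch_size):
            idx = perm[start:start + batch_size]
            probs = softmax(X[idx] @ W + b)
            # Gradient of the mean cross-entropy loss with respect to the logits.
            grad = (probs - onehot[idx]) / len(idx)
            W -= lr * (X[idx].T @ grad)
            b -= lr * grad.sum(axis=0)
    return W, b

def accuracy(X, y, W, b):
    return float(np.mean((X @ W + b).argmax(axis=1) == y))
```

Moving the entries of `decay_epochs` or raising `epochs` changes the result without touching the representation. This is why two papers evaluating the same features can report different numbers unless they also report these settings.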