Jenkins Continuous Integration, Part 17 (Building a Jenkins CI Platform on K8S, Part 1)

目录

-

- 一、Jenkins 的 Master-Slave 分布式构建

- 1. 什么是 Master-Slave 分布式构建

- 2. 如何实现 Master-Slave 分布式构建

-

- 2.1 开启代理程序的 TCP 端口

- 2.2 新建节点

- 2.3 slave1 操作

- 2.4 自由风格项目测试

- 2.5 流水线项目测试

- 二、K8S 实现 Master-Slave 分布式构建方案

-

- 1. 传统 Jenkins 的 Master-Slave 方案的缺陷

- 2.K8S + Docker + Jenkins 持续集成架构图

- 3. K8S + Docker + Jenkins 持续集成方案好处

- 三、集群环境搭建

-

- 1. 所需虚拟机

- 2. K8S 环境安装

-

- 2.1 修改三台机器的 hostname 及 hosts 文件

- 2.2 关闭防火墙,selinux,swap

- 2.3 设置系统参数,加载 br_netfilter 模块

- 2.4 设置允许路由转发,不对 bridge 的数据进行处理

- 2.5 安装 docker

- 2.6 kube-proxy 开启 ipvs 的前置条件

- 2.7 设置 yum 安装源

- 2.8 安装 K8S(三个节点)

- 2.9 部署 Master 节点

- 2.10 node 节点加入集群

- 2.11 安装 Calico 网络插件(master 节点)

- 2.12 node 节点启动 kubelet

- 3. kubectl 常用命令

- 四,安装和配置 NFS

-

- 1. NFS 简介

- 2. NFS 安装

- 五,在 Kubernetes 安装 Jenkins-Master

-

- 1. 创建 NFS client provisioner

- 2. 安装 Jenkins-Master

- 3. 设置插件下载地址

- 4. 安装基本的插件

- 六,Jenkins 与 Kubernetes 整合

-

- 1. 安装 Kubernetes 插件

- 2. 实现 Jenkins 与 Kubernetes 整合

- 3. 构建 Jenkins-Slave 自定义镜像

- 4. 将镜像上传仓库

- 5. 测试 Jenkins-Slave 是否可以创建

-

- 5.1 创建凭据

- 5.2 创建一个 Jenkins 流水线项目

- 5.3 构建查看结果

- 七,Jenkins + K8S + Docker 微服务持续集成测试

-

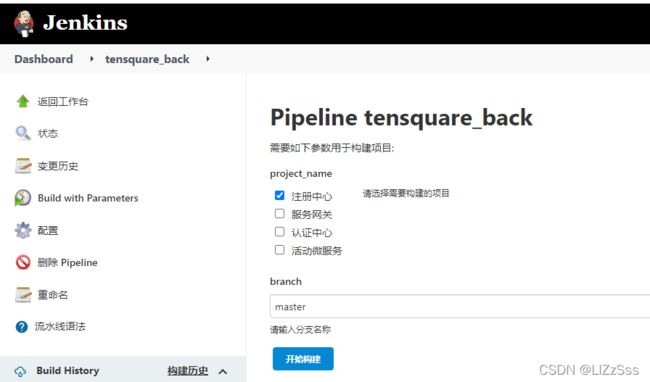

- 1. Jenkins 创建项目

- 2. 拉取代码,构建镜像

- 八,基于 K8S 平台微服务完整部署过程

-

- 1. 安装插件

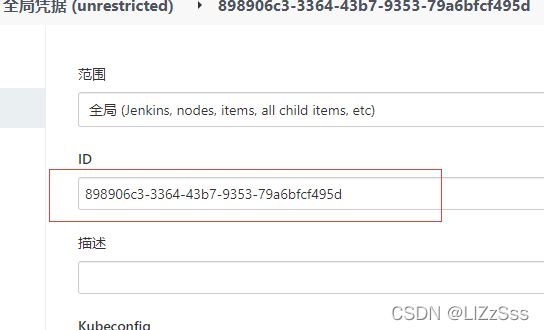

- 2. 创建 K8S 凭证

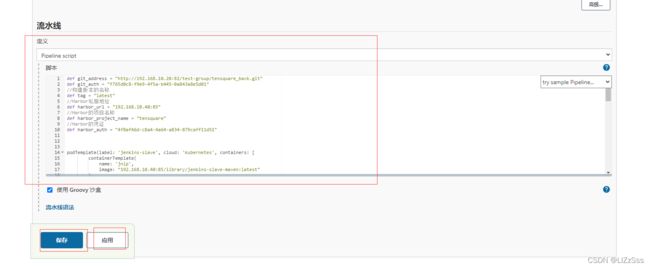

- 3. 修改 Pipeline script

- 4. IDEA 项目添加配置文件

-

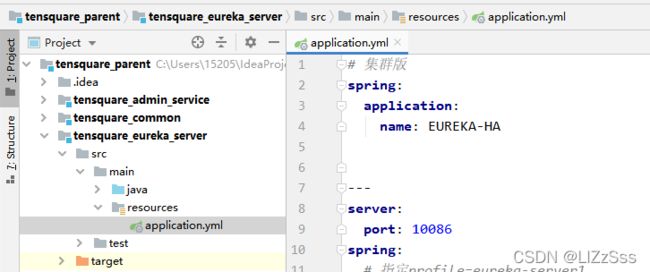

- 4.1 eureka

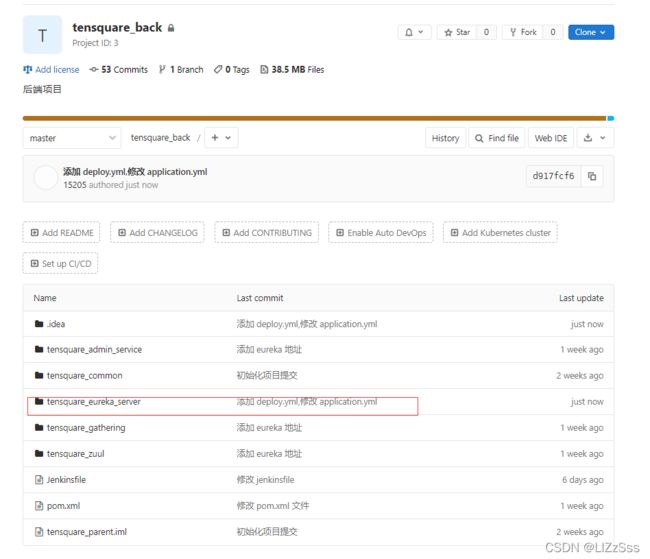

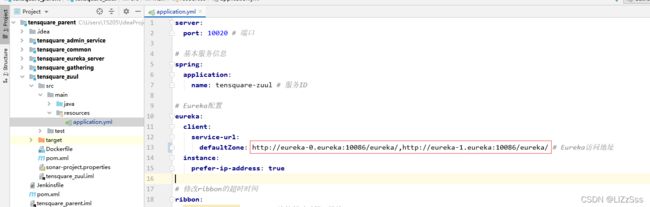

- 4.2 zuul

- 4.3 admin

- 4.4 gathering

- 5. K8S 集群 设置 harbor 访问权限

- 6. 构建微服务

-

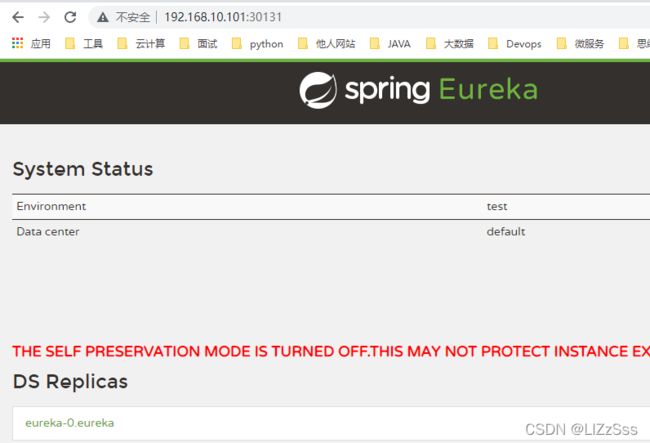

- 6.1 eureka

- 6.2 zuul

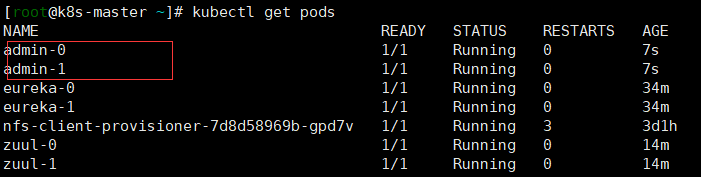

- 6.3 admin

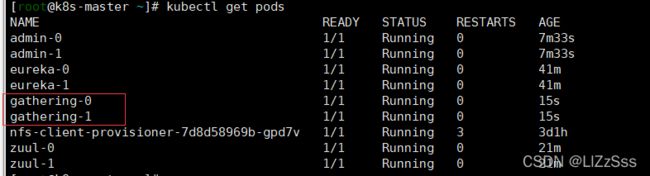

- 6.4 gathering

- 7. postman 测试数据库

- 8. 前端与后端对接

一、Jenkins 的 Master-Slave 分布式构建

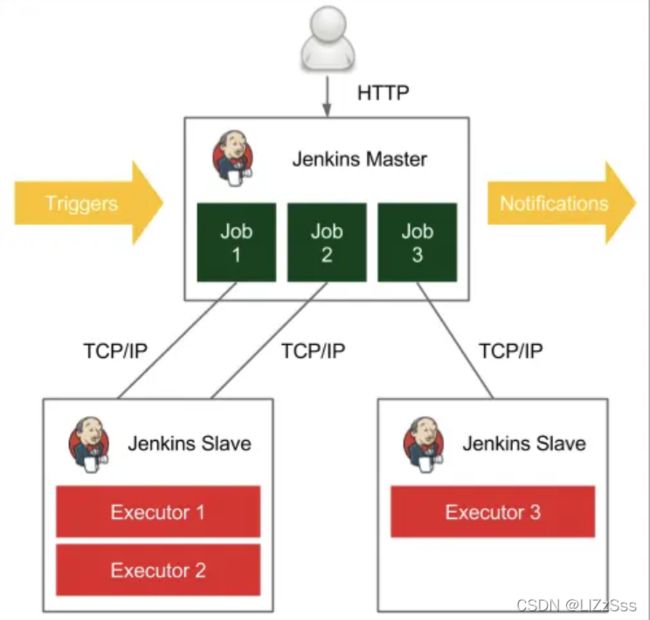

1. 什么是 Master-Slave 分布式构建

Jenkins 的 Master-Slave 分布式构建,就是通过将构建过程分配到从属 Slave 节点上,从而减轻 Master 节点的压力,而且可以同时构建多个,有点类似负载均衡的概念。

Jenkins 集群不同于 redis 集群(redis 集群是去中心化的,失效一个节点其他的还能用),Jenkins 集群是 master-slave 的形式,Jenkinsmaster 负责中心调度,如果 master 是单节点,其发生故障后集群就失去了功能。所以我们用 K8S 部署 Jenkins 集群,Jenkins 以 Pod 的形式存在,这样 master 就具有了自愈功能。

2. 如何实现 Master-Slave 分布式构建

先不使用 K8S 集群方式,使用裸金属形式集群

2.1 Enable the TCP Port for Agents

Manage Jenkins -> Configure Global Security

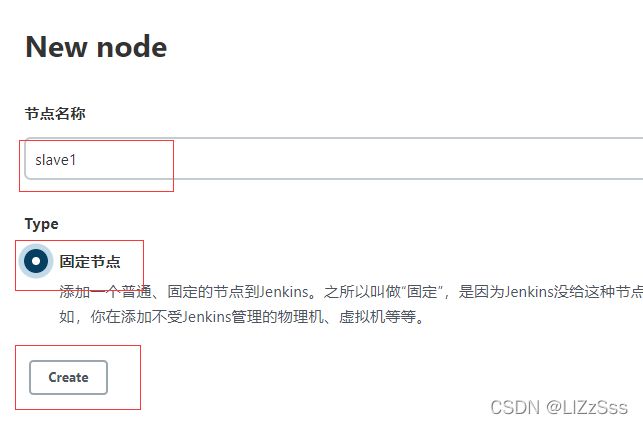

2.2 Create a New Node

Manage Jenkins -> Manage Nodes -> New Node

Click slave1

The downloaded agent.jar must be uploaded to slave1

2.3 Operations on slave1

Host: 192.168.10.116

[root@c7-6 ~]#mkdir /root/jenkins

[root@c7-6 ~]#yum -y install git &> /dev/null

[root@c7-6 ~]#rz -E

rz waiting to receive.

[root@c7-6 ~]#ll

总用量 1488

-rw-r--r-- 1 root root 1522173 3月 7 15:56 agent.jar

drwxr-xr-x 2 root root 6 3月 7 15:10 jenkins

[root@c7-6 ~]#yum install java-1.8.0-openjdk* -y

......

[root@c7-6 ~]#java -version

......

[root@c7-6 ~]#java -jar agent.jar -jnlpUrl http://192.168.10.30:8888/computer/slave1/jenkins-agent.jnlp -secret 84da3507a13a1c6b65a83fff1352dc14c85579dad83c3feaea4b1fc802039a11 -workDir "/root/jenkins"

......

三月 07, 2022 4:09:01 下午 hudson.remoting.jnlp.Main$CuiListener status

信息: Remote identity confirmed: 12:9d:a7:ef:1b:c6:ea:20:4f:44:c3:e4:84:4a:fb:c0

三月 07, 2022 4:09:01 下午 hudson.remoting.jnlp.Main$CuiListener status

信息: Connected

To start the agent without blocking the terminal: nohup … &
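The `nohup … &` form can be sketched as a small helper. This is a minimal illustration rather than the author's exact command: `run_detached`, `DETACHED_PID`, and the log path in the example are hypothetical names, and the `<url>` and `<secret>` placeholders stand for the values shown in the foreground command above.

```shell
# Run a command in the background so it keeps running after the terminal closes.
# Usage: run_detached LOG_FILE COMMAND [ARGS...]
# The PID of the background process is stored in DETACHED_PID.
run_detached() {
  log_file=$1
  shift
  # nohup makes the command ignore SIGHUP; output goes to the log file.
  nohup "$@" >"$log_file" 2>&1 &
  DETACHED_PID=$!
}

# Example for the agent (placeholders instead of the real URL and secret):
# run_detached /root/jenkins/agent.log java -jar agent.jar -jnlpUrl <url> -secret <secret> -workDir "/root/jenkins"
```

Keeping the PID makes it possible to stop the agent later with `kill "$DETACHED_PID"`.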

slave1 has now joined the cluster

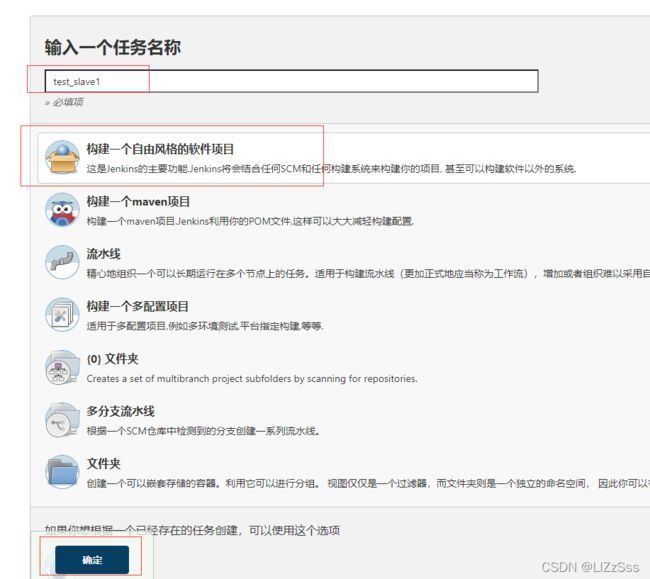

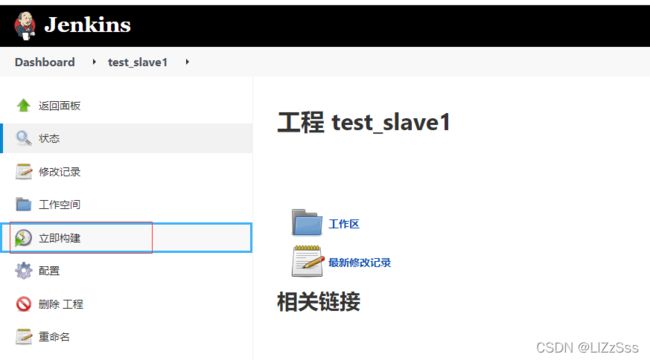

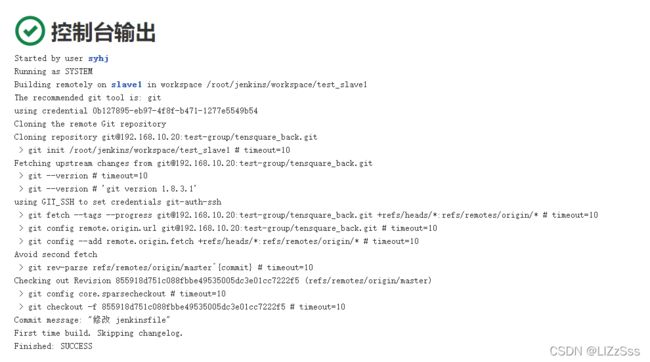

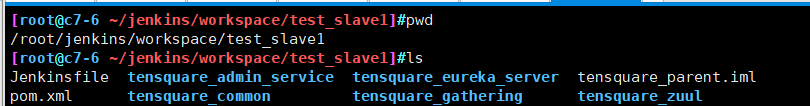

2.4 Freestyle Project Test

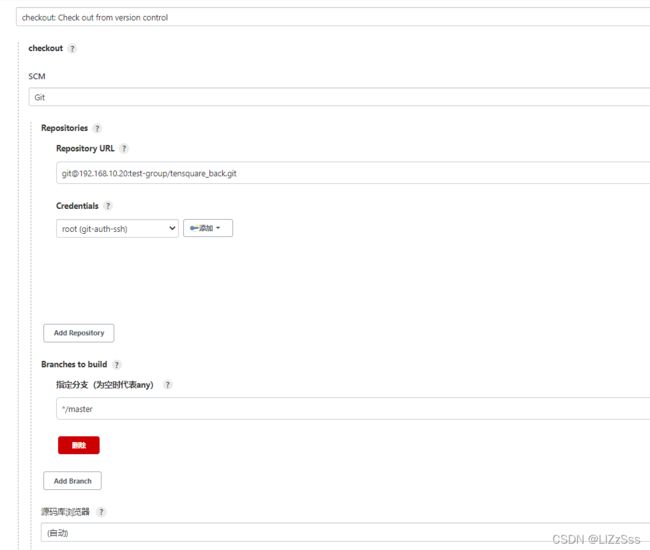

2.5 Pipeline Project Test

node('slave1') {

stage('pull code') {

checkout([$class: 'GitSCM', branches: [[name: '*/master']], extensions: [], userRemoteConfigs: [[credentialsId: '0b127895-eb97-4f8f-b471-1277e5549b54', url: '[email protected]:test-group/tensquare_back.git']]])

}

}

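II. A K8S-Based Master-Slave Distributed Build Solution

1. Drawbacks of the Traditional Jenkins Master-Slave Setup

- If the Master node suffers a single point of failure, the whole pipeline becomes unavailable.

- Each Slave node is configured differently so that it can compile and package a different language. These differences make the nodes awkward to manage and laborious to maintain.

- Resources are allocated unevenly: jobs queue up on some Slave nodes while other Slave nodes sit idle.

- Resources are wasted: each Slave node may be a physical machine or a VM, and an idle Slave node never fully releases its resources.

We can bring in Kubernetes to solve all of these problems.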

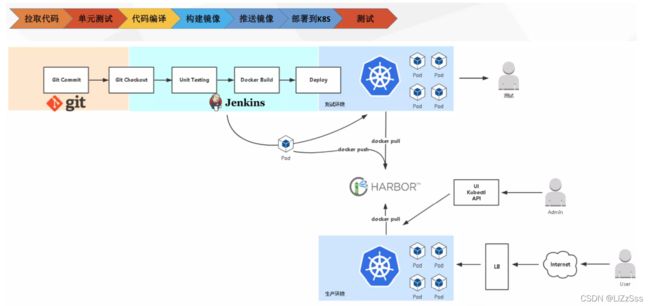

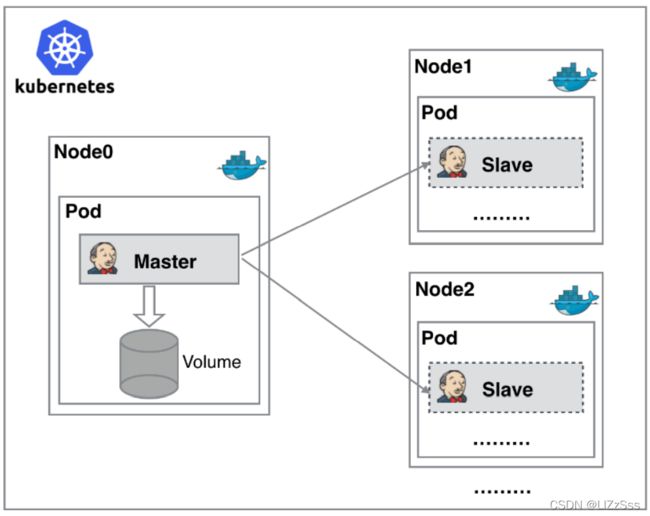

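2. K8S + Docker + Jenkins Continuous Integration Architecture

Rough workflow:

Manual or automatic build trigger -> Jenkins calls the K8S API -> a Jenkins Slave pod is created dynamically -> the Slave pod pulls the code from Git, compiles it, and builds the image -> the image is pushed to the Harbor registry -> the Slave finishes and its Pod is destroyed automatically -> deployment to the test or production Kubernetes platform. (Fully automated, with no manual intervention.)

3. Benefits of the K8S + Docker + Jenkins Solution

- High availability: when the Jenkins Master fails, Kubernetes automatically creates a new Jenkins Master container and attaches the Volume to it, so no data is lost and the cluster service stays highly available.

- Dynamic scaling and sensible resource use: each Job run automatically creates a Jenkins Slave. When the Job finishes, the Slave deregisters itself, the container is deleted, and the resources are released. Kubernetes also places each Slave on an idle node based on resource usage, which reduces the chance of jobs queuing on a node whose resources are already heavily used.

- Good scalability: when the Kubernetes cluster runs so short of resources that Jobs queue up, a new Kubernetes Node can easily be added to the cluster.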

III. Setting Up the Cluster Environment

1. Required Virtual Machines

| Host name | IP address | Installed software |
|---|---|---|
| Code hosting server | 192.168.10.20 | Gitlab-12.4.2 |
| Docker registry server | 192.168.10.40 | Harbor1.9.2 |
| k8s-master | 192.168.10.100 | kube-apiserver, kube-controller-manager, kube-scheduler, docker, etcd, calico, NFS |
| k8s-node1 | 192.168.10.101 | kubelet, kube-proxy, Docker18.06.1-ce |
| k8s-node2 | 192.168.10.102 | kubelet, kube-proxy, Docker18.06.1-ce |

Keep the GitLab and Harbor servers running and suspend the other VMs from the earlier parts.

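2. K8S Environment Installation

2.1 Set the hostname and hosts File on the Three Machines

hostname (run the matching command on each machine)

hostnamectl set-hostname k8s-master && su

hostnamectl set-hostname k8s-node1 && su

hostnamectl set-hostname k8s-node2 && su

/etc/hosts

cat >>/etc/hosts<<EOF
192.168.10.100 k8s-master
192.168.10.101 k8s-node1
192.168.10.102 k8s-node2
EOF

2.2 Disable the Firewall, SELinux, and swap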

systemctl stop firewalld && systemctl disable firewalld

setenforce 0

swapoff -a
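`setenforce 0` and `swapoff -a` only last until the next reboot. A minimal sketch of making both settings permanent follows. It assumes the standard CentOS 7 file locations (`/etc/fstab` and `/etc/selinux/config`), and the function names are illustrative. Each function takes the file path as an argument so that it can be tried on a copy first.

```shell
# Comment out every active swap entry in an fstab-style file.
# A backup of the original file is kept next to it with a .bak suffix.
disable_swap_entries() {
  fstab=$1
  # Prefix '#' to non-comment lines that contain a whitespace-delimited "swap" field.
  sed -i.bak -E '/^[^#].*[[:space:]]swap[[:space:]]/ s/^/#/' "$fstab"
}

# Switch SELinux to "disabled" in a selinux config file.
disable_selinux_config() {
  cfg=$1
  sed -i.bak -E 's/^SELINUX=(enforcing|permissive)$/SELINUX=disabled/' "$cfg"
}

# On a real node (as root):
# disable_swap_entries /etc/fstab
# disable_selinux_config /etc/selinux/config
```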

2.3 Set System Parameters and Load the br_netfilter Module

modprobe br_netfilter

2.4 Allow IP Forwarding and Do Not Process Bridged Traffic

cat >>/etc/sysctl.d/k8s.conf <<EOF
......
EOF

sysctl -p /etc/sysctl.d/k8s.conf
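The contents written to `k8s.conf` are not visible in this step. As an assumption, not necessarily the author's exact file, kubeadm setups commonly use the four kernel parameters below. The helper name `write_k8s_sysctl` is illustrative, and it takes the target path as an argument so the result can be inspected before it is applied.

```shell
# Write bridge and forwarding settings commonly used for kubeadm clusters.
# Assumption: these four keys are a typical choice, not a confirmed copy
# of the original article's file.
write_k8s_sysctl() {
  target=$1
  cat >"$target" <<'EOF'
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
vm.swappiness = 0
EOF
}

# On a real node (as root, after `modprobe br_netfilter`):
# write_k8s_sysctl /etc/sysctl.d/k8s.conf && sysctl -p /etc/sysctl.d/k8s.conf
```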

2.5 Install docker

wget https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo -O /etc/yum.repos.d/docker-ce.repo

yum -y install epel-release && yum clean all && yum makecache # run only if docker cannot be installed

yum -y install docker-ce-18.06.1.ce-3.el7 # any version works; this one is fairly stable

# yum install docker-ce-20.10.7 docker-ce-cli-20.10.7 containerd.io -y

systemctl start docker && systemctl enable docker

mkdir -p /etc/docker

cat > /etc/docker/daemon.json << EOF

{

"registry-mirrors": ["https://b9pmyelo.mirror.aliyuncs.com"]

}

EOF

systemctl restart docker

2.6 Prerequisites for Enabling ipvs in kube-proxy

cat > /etc/sysconfig/modules/ipvs.modules <<EOF
......
EOF

chmod 755 /etc/sysconfig/modules/ipvs.modules && bash /etc/sysconfig/modules/ipvs.modules && lsmod | grep -e ip_vs -e nf_conntrack_ipv4
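The body of `ipvs.modules` is not visible in this step. A sketch of a typical module-loading script follows, written as an assumption rather than a copy of the author's file. The module list matches what the `lsmod | grep` check above looks for (`ip_vs*` and `nf_conntrack_ipv4`, which exists on the CentOS 7 kernel), and `write_ipvs_modules` is an illustrative helper name.

```shell
# Generate a script that loads the kernel modules kube-proxy needs for ipvs mode.
# Assumption: this module list is the commonly used one for CentOS 7.
write_ipvs_modules() {
  target=$1
  cat >"$target" <<'EOF'
#!/bin/bash
modprobe -- ip_vs
modprobe -- ip_vs_rr
modprobe -- ip_vs_wrr
modprobe -- ip_vs_sh
modprobe -- nf_conntrack_ipv4
EOF
  chmod 755 "$target"
}

# On a real node (as root):
# write_ipvs_modules /etc/sysconfig/modules/ipvs.modules && bash /etc/sysconfig/modules/ipvs.modules
```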

2.7 Configure the yum Repository

cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

2.8 Install K8S (All Three Nodes)

yum install -y kubelet-1.17.0 kubeadm-1.17.0 kubectl-1.17.0

systemctl enable kubelet

2.9 Deploy the Master Node

Initialize with kubeadm

kubeadm init \

--kubernetes-version=1.17.0 \

--apiserver-advertise-address=192.168.10.100 \

--image-repository registry.aliyuncs.com/google_containers \

--service-cidr=10.1.0.0/16 \

--pod-network-cidr=10.244.0.0/16

systemctl restart kubelet

Configure the kubectl tool

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

2.10 Join the Worker Nodes to the Cluster
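No commands are listed for this step. With kubeadm, a worker joins by running the `kubeadm join` line that `kubeadm init` prints on the master; `kubeadm token create --print-join-command` prints a fresh one if it was lost. The sketch below only assembles that command. The token and hash in the example are placeholder values, and `build_join_command` is an illustrative helper name.

```shell
# Assemble the `kubeadm join` command for a worker node.
# Arguments: API server address, bootstrap token, CA cert hash (without "sha256:").
build_join_command() {
  api_server=$1
  token=$2
  ca_hash=$3
  # 6443 is the default kube-apiserver port used by kubeadm.
  printf 'kubeadm join %s:6443 --token %s --discovery-token-ca-cert-hash sha256:%s\n' \
    "$api_server" "$token" "$ca_hash"
}

# Placeholder values; take the real ones from the output of `kubeadm init`:
# build_join_command 192.168.10.100 abcdef.0123456789abcdef <hash>
```

Run the resulting command as root on k8s-node1 and k8s-node2.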

2.11 Install the Calico Network Plugin (Master Node)

wget --no-check-certificate https://docs.projectcalico.org/v3.10/getting-started/kubernetes/installation/hosted/kubernetes-datastore/calico-networking/1.7/calico.yaml

sed -i 's/192.168.0.0/10.244.0.0/g' calico.yaml

kubectl apply -f calico.yaml

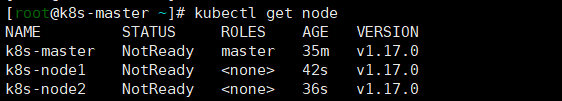

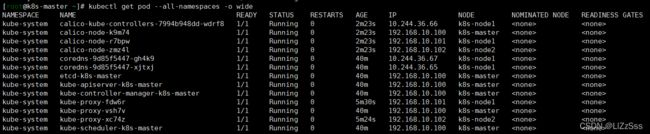

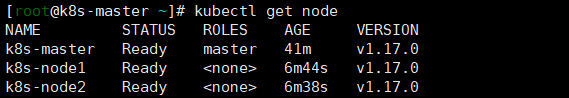

Check the status of all Pods and make sure every Pod is Running

kubectl get pod --all-namespaces -o wide

2.12 Start kubelet on the Worker Nodes

systemctl start kubelet

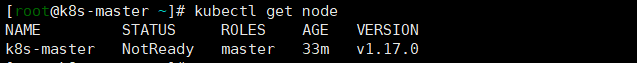

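3. Common kubectl Commands

kubectl get nodes # show the status of all master and worker nodes

kubectl get ns # list all namespaces

kubectl get pods -n {$nameSpace} # list the pods in the given namespace

kubectl describe pod [pod name] -n {$nameSpace} # show the details and events of a pod

kubectl logs --tail=1000 [pod name] | less # view the logs

kubectl apply -f xxx.yml # create a cluster resource object from a config file

kubectl delete -f xxx.yml # delete a cluster resource object using a config file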

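IV. Installing and Configuring NFS

1. NFS Overview

NFS (Network File System) lets different machines and different operating systems share files with each other over the network. We can use NFS to share the configuration files Jenkins runs with, the Maven repository dependencies, and so on.

2. NFS Installation

We install nfs-server on 192.168.10.100 (the master)

Install the NFS service (run on all k8s nodes)

yum install -y nfs-utils

Create the shared directory (master node)

mkdir -p /opt/nfs/jenkins # holds the jenkins configuration files

vim /etc/exports

# write the NFS share configuration

/opt/nfs/jenkins *(rw,no_root_squash)

# * opens this directory to all IPs; rw means read-write

Start the service (master)

systemctl enable nfs && systemctl start nfs

List the NFS shared directories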

showmount -e 192.168.10.100
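`/etc/exports` is edited again later when a second directory is shared, so it can help to add entries through a helper that is safe to run twice. This is a minimal sketch: `add_nfs_export` is an illustrative name, and the export options are the ones used above.

```shell
# Append an export line to an exports file unless the directory is already listed.
# Usage: add_nfs_export EXPORTS_FILE DIRECTORY OPTIONS
add_nfs_export() {
  exports_file=$1
  dir=$2
  options=$3
  # Skip the append when a line for this directory already exists.
  if grep -q "^${dir}[[:space:]]" "$exports_file" 2>/dev/null; then
    return 0
  fi
  printf '%s %s\n' "$dir" "$options" >>"$exports_file"
}

# On the master (as root):
# add_nfs_export /etc/exports /opt/nfs/jenkins '*(rw,no_root_squash)'
# exportfs -r   # re-read /etc/exports without restarting the NFS service
```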

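V. Installing Jenkins-Master on Kubernetes

1. Create the NFS client provisioner

nfs-client-provisioner is a simple external NFS provisioner for Kubernetes. It does not provide NFS itself; it needs an existing NFS server to supply the storage.

Upload the nfs-client-provisioner build files

Remember to edit deployment.yaml so that it uses the NFS server and directory configured earlier

deployment.yaml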

apiVersion: v1
kind: ServiceAccount
metadata:
  name: nfs-client-provisioner
---
kind: Deployment
apiVersion: apps/v1
metadata:
  name: nfs-client-provisioner
spec:
  replicas: 1
  strategy:
    type: Recreate
  selector:
    matchLabels:
      app: nfs-client-provisioner
  template:
    metadata:
      labels:
        app: nfs-client-provisioner
    spec:
      serviceAccountName: nfs-client-provisioner
      containers:
        - name: nfs-client-provisioner
          image: lizhenliang/nfs-client-provisioner:latest
          volumeMounts:
            - name: nfs-client-root
              mountPath: /persistentvolumes
          env:
            - name: PROVISIONER_NAME
              value: fuseim.pri/ifs
            - name: NFS_SERVER
              value: 192.168.10.100
            - name: NFS_PATH
              value: /opt/nfs/jenkins/
      volumes:
        - name: nfs-client-root
          nfs:
            server: 192.168.10.100
            path: /opt/nfs/jenkins/

class.yaml

apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: managed-nfs-storage
provisioner: fuseim.pri/ifs # or choose another name, must match deployment's env PROVISIONER_NAME
parameters:
  archiveOnDelete: "true"

rbac.yaml

kind: ServiceAccount
apiVersion: v1
metadata:
  name: nfs-client-provisioner
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: nfs-client-provisioner-runner
rules:
  - apiGroups: [""]
    resources: ["persistentvolumes"]
    verbs: ["get", "list", "watch", "create", "delete"]
  - apiGroups: [""]
    resources: ["persistentvolumeclaims"]
    verbs: ["get", "list", "watch", "update"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["create", "update", "patch"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: run-nfs-client-provisioner
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    namespace: default
roleRef:
  kind: ClusterRole
  name: nfs-client-provisioner-runner
  apiGroup: rbac.authorization.k8s.io
---
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
rules:
  - apiGroups: [""]
    resources: ["endpoints"]
    verbs: ["get", "list", "watch", "create", "update", "patch"]
---
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    # replace with namespace where provisioner is deployed
    namespace: default
roleRef:
  kind: Role
  name: leader-locking-nfs-client-provisioner
  apiGroup: rbac.authorization.k8s.io

Create the nfs-client-provisioner pod resources

[root@k8s-master ~]# mkdir nfs-client

[root@k8s-master ~]# cd nfs-client

# upload the three yaml files

[root@k8s-master ~/nfs-client]# vim deployment.yaml

[root@k8s-master ~/nfs-client]# ls

class.yaml deployment.yaml rbac.yaml

[root@k8s-master ~/nfs-client]# kubectl apply -f .

storageclass.storage.k8s.io/managed-nfs-storage created

serviceaccount/nfs-client-provisioner created

deployment.apps/nfs-client-provisioner created

serviceaccount/nfs-client-provisioner unchanged

clusterrole.rbac.authorization.k8s.io/nfs-client-provisioner-runner created

clusterrolebinding.rbac.authorization.k8s.io/run-nfs-client-provisioner created

role.rbac.authorization.k8s.io/leader-locking-nfs-client-provisioner created

rolebinding.rbac.authorization.k8s.io/leader-locking-nfs-client-provisioner created

[root@k8s-master ~/nfs-client]# kubectl get pods

NAME READY STATUS RESTARTS AGE

nfs-client-provisioner-7d8d58969b-gpd7v 1/1 Running 0 47s

2. Install Jenkins-Master

Upload the Jenkins-Master build files

rbac.yaml

kind: Role
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: jenkins
  namespace: kube-ops
rules:
  - apiGroups: ["extensions", "apps"]
    resources: ["deployments"]
    verbs: ["create", "delete", "get", "list", "watch", "patch", "update"]
  - apiGroups: [""]
    resources: ["services"]
    verbs: ["create", "delete", "get", "list", "watch", "patch", "update"]
  - apiGroups: [""]
    resources: ["pods"]
    verbs: ["create","delete","get","list","patch","update","watch"]
  - apiGroups: [""]
    resources: ["pods/exec"]
    verbs: ["create","delete","get","list","patch","update","watch"]
  - apiGroups: [""]
    resources: ["pods/log"]
    verbs: ["get","list","watch"]
  - apiGroups: [""]
    resources: ["secrets"]
    verbs: ["get"]
---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: RoleBinding
metadata:
  name: jenkins
  namespace: kube-ops
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: jenkins
subjects:
  - kind: ServiceAccount
    name: jenkins
    namespace: kube-ops
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: jenkinsClusterRole
  namespace: kube-ops
rules:
  - apiGroups: [""]
    resources: ["pods"]
    verbs: ["create","delete","get","list","patch","update","watch"]
  - apiGroups: [""]
    resources: ["pods/exec"]
    verbs: ["create","delete","get","list","patch","update","watch"]
  - apiGroups: [""]
    resources: ["pods/log"]
    verbs: ["get","list","watch"]
  - apiGroups: [""]
    resources: ["secrets"]
    verbs: ["get"]
---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: RoleBinding
metadata:
  name: jenkinsClusterRuleBinding
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: jenkinsClusterRole
subjects:
  - kind: ServiceAccount
    name: jenkins
    namespace: kube-ops

Service.yaml

apiVersion: v1
kind: Service
metadata:
  name: jenkins
  namespace: kube-ops
  labels:
    app: jenkins
spec:
  selector:
    app: jenkins
  type: NodePort
  ports:
    - name: web
      port: 8080
      targetPort: web
    - name: agent
      port: 50000
      targetPort: agent

ServiceaAcount.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  name: jenkins
  namespace: kube-ops

StatefulSet.yaml

apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: jenkins
  labels:
    name: jenkins
  namespace: kube-ops
spec:
  serviceName: jenkins
  selector:
    matchLabels:
      app: jenkins
  replicas: 1
  updateStrategy:
    type: RollingUpdate
  template:
    metadata:
      name: jenkins
      labels:
        app: jenkins
    spec:
      terminationGracePeriodSeconds: 10
      serviceAccountName: jenkins
      containers:
        - name: jenkins
          image: jenkins/jenkins:lts-alpine
          imagePullPolicy: IfNotPresent
          ports:
            - containerPort: 8080
              name: web
              protocol: TCP
            - containerPort: 50000
              name: agent
              protocol: TCP
          resources:
            limits:
              cpu: 1
              memory: 1Gi
            requests:
              cpu: 0.5
              memory: 500Mi
          env:
            - name: LIMITS_MEMORY
              valueFrom:
                resourceFieldRef:
                  resource: limits.memory
                  divisor: 1Mi
            - name: JAVA_OPTS
              value: -Xmx$(LIMITS_MEMORY)m -XshowSettings:vm -Dhudson.slaves.NodeProvisioner.initialDelay=0 -Dhudson.slaves.NodeProvisioner.MARGIN=50 -Dhudson.slaves.NodeProvisioner.MARGIN0=0.85
          volumeMounts:
            - name: jenkins-home
              mountPath: /var/jenkins_home
          livenessProbe:
            httpGet:
              path: /login
              port: 8080
            initialDelaySeconds: 60
            timeoutSeconds: 5
            failureThreshold: 12
          readinessProbe:
            httpGet:
              path: /login
              port: 8080
            initialDelaySeconds: 60
            timeoutSeconds: 5
            failureThreshold: 12
      securityContext:
        fsGroup: 1000
  volumeClaimTemplates:
    - metadata:
        name: jenkins-home
      spec:
        storageClassName: "managed-nfs-storage"
        accessModes: [ "ReadWriteOnce" ]
        resources:
          requests:
            storage: 1Gi

Create the kube-ops namespace

[root@k8s-master ~]# kubectl create namespace kube-ops

namespace/kube-ops created

[root@k8s-master ~]# kubectl get ns

NAME STATUS AGE

default Active 19h

kube-node-lease Active 19h

kube-ops Active 3s

kube-public Active 19h

kube-system Active 19h

Create the Jenkins-Master pod resources

[root@k8s-master ~]# mkdir jenkins-master

[root@k8s-master ~]# cd jenkins-master

# upload the yaml files

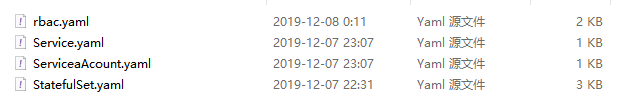

[root@k8s-master ~/jenkins-master]# ls

rbac.yaml ServiceaAcount.yaml Service.yaml StatefulSet.yaml

[root@k8s-master ~/jenkins-master]# kubectl apply -f .

service/jenkins created

serviceaccount/jenkins created

statefulset.apps/jenkins created

role.rbac.authorization.k8s.io/jenkins created

rolebinding.rbac.authorization.k8s.io/jenkins created

clusterrole.rbac.authorization.k8s.io/jenkinsClusterRole created

rolebinding.rbac.authorization.k8s.io/jenkinsClusterRuleBinding created

Check whether the pod was created successfully

[root@k8s-master ~/jenkins-master]# kubectl get pods -n kube-ops

NAME READY STATUS RESTARTS AGE

jenkins-0 1/1 Running 0 95s

Check which Node the Pod is running on

[root@k8s-master ~]# kubectl describe pods -n kube-ops

......

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Warning FailedScheduling 3m36s (x2 over 3m36s) default-scheduler error while running "VolumeBinding" filter plugin for pod "jenkins-0": pod has unbound immediate PersistentVolumeClaims

Normal Scheduled 3m35s default-scheduler Successfully assigned kube-ops/jenkins-0 to k8s-node2

Normal Pulling 3m34s kubelet, k8s-node2 Pulling image "jenkins/jenkins:lts-alpine"

Normal Pulled 3m14s kubelet, k8s-node2 Successfully pulled image "jenkins/jenkins:lts-alpine"

Normal Created 3m14s kubelet, k8s-node2 Created container jenkins

Normal Started 3m14s kubelet, k8s-node2 Started container jenkins

Check the assigned ports

[root@k8s-master ~]# kubectl get service -n kube-ops

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

jenkins NodePort 10.1.192.252 <none> 8080:30037/TCP,50000:31057/TCP 5m4s

Access Jenkins (any of the three nodes works)

http://192.168.10.100:30037

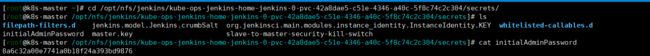

Get the initial password and log in
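Jenkins writes the initial administrator password to `secrets/initialAdminPassword` under its home directory. Because the home directory lives on the NFS share in this setup, the file can be read on the master without entering the pod. The sketch below assumes the share root is `/opt/nfs/jenkins` as configured earlier; `find_initial_password` is an illustrative helper name.

```shell
# Print the Jenkins initial admin password found under an NFS share root.
# The provisioner creates one subdirectory per PVC, so the file sits at
# SHARE_ROOT/<pvc-directory>/secrets/initialAdminPassword.
find_initial_password() {
  share_root=$1
  find "$share_root" -maxdepth 3 -type f \
    -path '*/secrets/initialAdminPassword' -exec cat {} \;
}

# On the master:
# find_initial_password /opt/nfs/jenkins
# Alternative from inside the pod:
# kubectl exec -n kube-ops jenkins-0 -- cat /var/jenkins_home/secrets/initialAdminPassword
```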

3. Set the Plugin Download Address

[root@k8s-master ~]# cd /opt/nfs/jenkins/kube-ops-jenkins-home-jenkins-0-pvc-42a8dae5-c51e-4346-a40c-5f8c74c2c304/updates/

[root@k8s-master /opt/nfs/jenkins/kube-ops-jenkins-home-jenkins-0-pvc-42a8dae5-c51e-4346-a40c-5f8c74c2c304/updates]# ls

default.json hudson.tasks.Maven.MavenInstaller

[root@k8s-master /opt/nfs/jenkins/kube-ops-jenkins-home-jenkins-0-pvc-42a8dae5-c51e-4346-a40c-5f8c74c2c304/updates]# sed -i 's/http:\/\/updates.jenkins-ci.org\/download/https:\/\/mirrors.tuna.tsinghua.edu.cn\/jenkins/g' default.json && sed -i 's/http:\/\/www.google.com/https:\/\/www.baidu.com/g' default.json
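The substitution can be checked on a scratch copy before touching `default.json`. This small sketch performs the same two replacements but uses `|` as the sed delimiter, so the slashes in the URLs need no escaping. `switch_update_mirror` is an illustrative name.

```shell
# Rewrite the plugin download URLs in a Jenkins update-center JSON file.
# A backup of the original is kept with a .bak suffix.
switch_update_mirror() {
  json_file=$1
  sed -i.bak \
    -e 's|http://updates.jenkins-ci.org/download|https://mirrors.tuna.tsinghua.edu.cn/jenkins|g' \
    -e 's|http://www.google.com|https://www.baidu.com|g' \
    "$json_file"
}

# In the updates directory on the NFS share:
# switch_update_mirror default.json
```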

In Manage Plugins, click Advanced and change the Update Site to a mirror in China

https://mirrors.tuna.tsinghua.edu.cn/jenkins/updates/update-center.json

4. Install the Basic Plugins

Chinese

Git

Pipeline

Extended Choice Parameter

Restart Jenkins

VI. Integrating Jenkins with Kubernetes

1. Install the Kubernetes Plugin

2. Integrate Jenkins with Kubernetes

Manage Jenkins -> Configure System -> Cloud -> Add a new cloud -> Kubernetes

Kubernetes URL: https://kubernetes.default.svc.cluster.local

Jenkins URL: http://jenkins.kube-ops.svc.cluster.local:8080

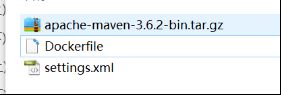

3. Build a Custom Jenkins-Slave Image

When Jenkins-Master builds a Job, Kubernetes creates a Jenkins-Slave Pod to run the build. We use the officially recommended image, jenkins/jnlp-slave:latest, to run Jenkins-Slave. This image has no Maven environment, so for convenience we build a custom image.

wget http://49.232.8.65/maven/apache-maven-3.6.2-bin.tar.gz

Dockerfile

FROM jenkins/jnlp-slave:latest

MAINTAINER syhj

# switch to the root account

USER root

# install maven

COPY apache-maven-3.6.2-bin.tar.gz .

RUN tar -zxf apache-maven-3.6.2-bin.tar.gz && \

mv apache-maven-3.6.2 /usr/local && \

rm -f apache-maven-3.6.2-bin.tar.gz && \

ln -s /usr/local/apache-maven-3.6.2/bin/mvn /usr/bin/mvn && \

ln -s /usr/local/apache-maven-3.6.2 /usr/local/apache-maven && \

mkdir -p /usr/local/apache-maven/repo

COPY settings.xml /usr/local/apache-maven/conf/settings.xml

USER jenkins

settings.xml

wget http://49.232.8.65/maven/settings.xml

Build the image

[root@k8s-master ~]# mkdir jenkins-slave

[root@k8s-master ~]# cd jenkins-slave/

# upload the files

[root@k8s-master ~/jenkins-slave]# ls

apache-maven-3.6.2-bin.tar.gz Dockerfile settings.xml

[root@k8s-master ~/jenkins-slave]# docker build -t jenkins-slave-maven:latest .

Sending build context to Docker daemon 9.157MB

......

Successfully tagged jenkins-slave-maven:latest

[root@k8s-master ~/jenkins-slave]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

jenkins-slave-maven latest 38b66b0537c7 59 seconds ago 487MB

......

This produces a new image: jenkins-slave-maven:latest

4. Push the Image to the Registry

Point docker on the k8s cluster nodes at the Harbor address

vim /etc/docker/daemon.json

{

"registry-mirrors": ["https://4iv7219l.mirror.aliyuncs.com"],

"insecure-registries": ["192.168.10.40:85"]

}

systemctl restart docker

Log in to Harbor

docker login -u admin -p Harbor12345 192.168.10.40:85

Tag the image

docker tag jenkins-slave-maven:latest 192.168.10.40:85/library/jenkins-slave-maven:latest

Push to Harbor

docker push 192.168.10.40:85/library/jenkins-slave-maven:latest

5. Test Whether a Jenkins-Slave Can Be Created

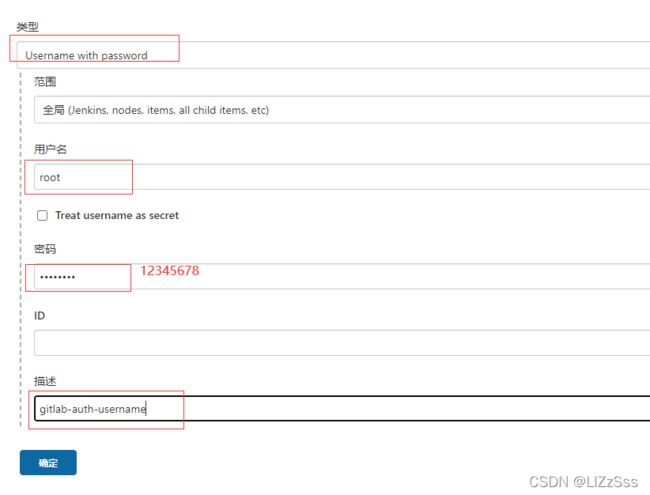

5.1 Create Credentials

Git credential ID: f765d0c8-f9e9-4f5a-b445-0a843a8e5d01

5.2 Create a Jenkins Pipeline Project

def git_address = "http://192.168.10.20:82/test-group/tensquare_back.git"

def git_auth = "f765d0c8-f9e9-4f5a-b445-0a843a8e5d01"

//create a Pod template with the label jenkins-slave

podTemplate(label: 'jenkins-slave', cloud: 'kubernetes', containers: [

containerTemplate(

name: 'jnlp',

image: "192.168.10.40:85/library/jenkins-slave-maven:latest"

)

]

)

{

//use the jenkins-slave pod template to create the Jenkins-Slave pod

node("jenkins-slave"){

stage('pull code'){

checkout([$class: 'GitSCM', branches: [[name: '*/master']], extensions: [], userRemoteConfigs: [[credentialsId: "${git_auth}", url: "${git_address}"]]])

}

}

}

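5.3 Build and Check the Result

While the build runs, you can open node management and see the temporary node. The master directs the slave node, and the resources are released once the work finishes.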

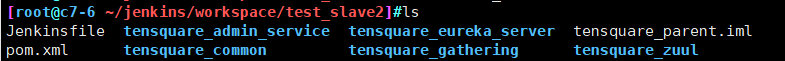

VII. Jenkins + K8S + Docker Microservice Continuous Integration Test

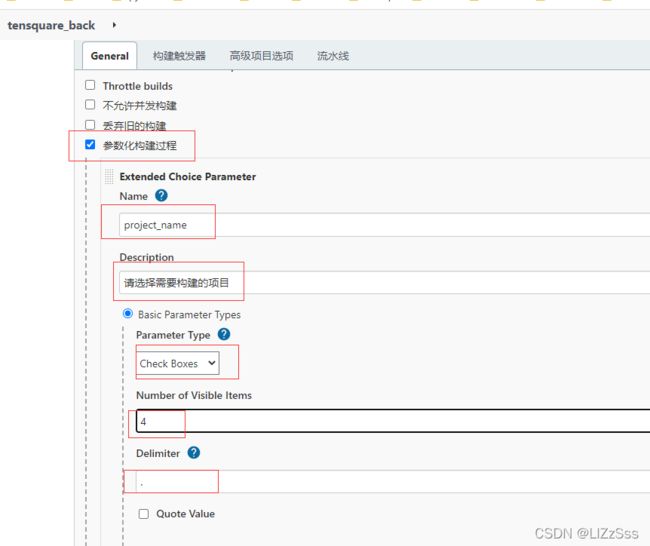

1. Create the Project in Jenkins

2. Pull the Code and Build the Images

Create the NFS shared directory

Create the Maven shared directory on k8s-master

[root@k8s-master ~]# mkdir /opt/nfs/maven

[root@k8s-master ~]# chmod -R 777 /opt/nfs/maven

Point all Jenkins-Slave builds at the shared Maven repository directory on NFS

vi /etc/exports

# add the following; run on the master

/opt/nfs/jenkins *(rw,no_root_squash)

/opt/nfs/maven *(rw,no_root_squash)

systemctl restart nfs # restart NFS

On a slave node, run showmount -e 192.168.10.100 to list the directories shared by the master.

[root@k8s-node1 ~]# showmount -e 192.168.10.100

Export list for 192.168.10.100:

/opt/nfs/maven *

/opt/nfs/jenkins *

Set Docker permissions (all three nodes)

chmod 777 /var/run/docker.sock

# do not restart docker afterwards, or the permissions are reset

Harbor credential ID: 4f8af46d-c8a4-4a64-a834-879caff11d51

Value of the project_name parameter (Extended Choice Parameter):

tensquare_eureka_server@10086,tensquare_zuul@10020,tensquare_admin_service@9001,tensquare_gathering@9002
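The pipeline splits this value on commas. Each entry appears to follow a `name@port` convention, which is an assumption drawn from the value itself because the part of the pipeline loop that consumes it is not shown in full. A shell illustration of that split, with `split_projects` as a hypothetical helper name:

```shell
# Split a comma-separated "name@port" list into one "name port" pair per line.
split_projects() {
  project_list=$1
  old_ifs=$IFS
  IFS=','
  for entry in $project_list; do
    name=${entry%@*}   # text before the last '@'
    port=${entry#*@}   # text after the first '@'
    printf '%s %s\n' "$name" "$port"
  done
  IFS=$old_ifs
}

# split_projects 'tensquare_eureka_server@10086,tensquare_zuul@10020'
```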

def git_address = "http://192.168.10.20:82/test-group/tensquare_back.git"

def git_auth = "f765d0c8-f9e9-4f5a-b445-0a843a8e5d01"

//name of the build version

def tag = "latest"

//address of the private Harbor registry

def harbor_url = "192.168.10.40:85"

//name of the Harbor project

def harbor_project_name = "tensquare"

//Harbor credential

def harbor_auth = "4f8af46d-c8a4-4a64-a834-879caff11d51"

podTemplate(label: 'jenkins-slave', cloud: 'kubernetes', containers: [

containerTemplate(

name: 'jnlp',

image: "192.168.10.40:85/library/jenkins-slave-maven:latest"

),

containerTemplate(

name: 'docker',

image: "docker:stable",

ttyEnabled: true,

command: 'cat'

),

],

volumes: [

hostPathVolume(mountPath: '/var/run/docker.sock', hostPath: '/var/run/docker.sock'),

nfsVolume(mountPath: '/usr/local/apache-maven/repo', serverAddress: '192.168.10.100' , serverPath: '/opt/nfs/maven'),

],

)

{

node("jenkins-slave"){

// step 1

stage('pull code'){

checkout([$class: 'GitSCM', branches: [[name: '*/master']], extensions: [], userRemoteConfigs: [[credentialsId: "${git_auth}", url: "${git_address}"]]])

}

// step 2

stage('make public sub project'){

//compile and install the common project

sh "mvn -f tensquare_common clean install"

}

// step 3

stage('make image'){

//turn the selected project information into an array

def selectedProjects = "${project_name}".split(',')

for(int i=0;i

......

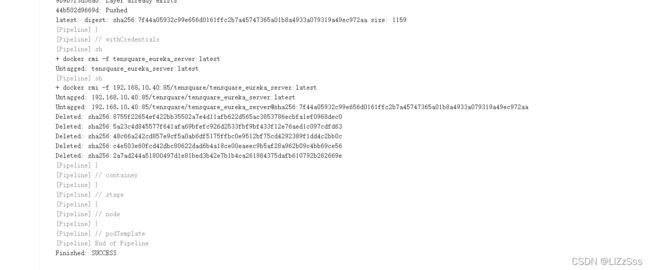

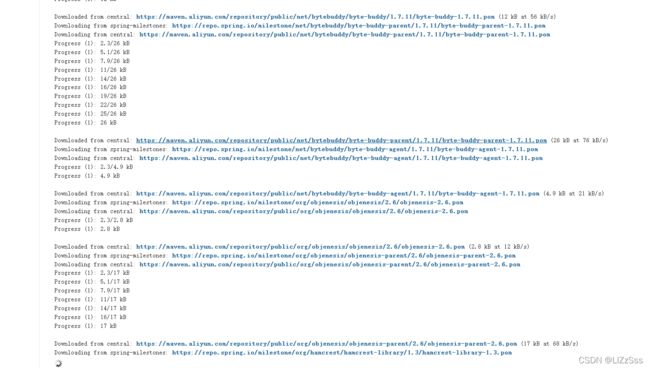

Build test

The first build has to download the Spring dependencies, so it takes a long time.

# Maven artifacts are downloaded into this directory

[root@k8s-master ~]# cd /opt/nfs/maven/

[root@k8s-master /opt/nfs/maven]# ls

antlr classworlds commons-configuration io net

aopalliance com commons-io javax org

asm commons-cli commons-jxpath joda-time stax

backport-util-concurrent commons-codec commons-lang junit xmlpull

ch commons-collections commons-logging log4j xpp3

VIII. Complete Microservice Deployment on the K8S Platform

1. Install the Plugin

2. Create the K8S Credential

[root@k8s-master ~]# ls

calico.yaml docker.sh jenkins-master jenkins-slave nfs-client

[root@k8s-master ~]# cd .kube/

[root@k8s-master ~/.kube]# ls

cache config http-cache

[root@k8s-master ~/.kube]# cat config

......

K8S credential ID: 898906c3-3364-43b7-9353-79a6bfcf495d

3. Modify the Pipeline script

def git_address = "http://192.168.10.20:82/test-group/tensquare_back.git"

def git_auth = "f765d0c8-f9e9-4f5a-b445-0a843a8e5d01"

//name of the build version

def tag = "latest"

//address of the private Harbor registry

def harbor_url = "192.168.10.40:85"

//name of the Harbor project

def harbor_project_name = "tensquare"

//Harbor credential

def harbor_auth = "4f8af46d-c8a4-4a64-a834-879caff11d51"

//k8s credential

def k8s_auth="898906c3-3364-43b7-9353-79a6bfcf495d"

//name of the secret K8S uses to pull images from Harbor

def secret_name="registry-auth-secret"

podTemplate(label: 'jenkins-slave', cloud: 'kubernetes', containers: [

containerTemplate(

name: 'jnlp',

image: "192.168.10.40:85/library/jenkins-slave-maven:latest"

),

containerTemplate(

name: 'docker',

image: "docker:stable",

ttyEnabled: true,

command: 'cat'

),

],

volumes: [

hostPathVolume(mountPath: '/var/run/docker.sock', hostPath: '/var/run/docker.sock'),

nfsVolume(mountPath: '/usr/local/apache-maven/repo', serverAddress: '192.168.10.100' , serverPath: '/opt/nfs/maven'),

],

)

{

node("jenkins-slave"){

// step 1

stage('pull code'){

checkout([$class: 'GitSCM', branches: [[name: '*/master']], extensions: [], userRemoteConfigs: [[credentialsId: "${git_auth}", url: "${git_address}"]]])

}

// step 2

stage('make public sub project'){

//compile and install the common project

sh "mvn -f tensquare_common clean install"

}

// step 3

stage('make image'){

//turn the selected project information into an array

def selectedProjects = "${project_name}".split(',')

for(int i=0;i

......

4. Add Configuration Files to the IDEA Project

4.1 eureka

deploy.yml

---
apiVersion: v1
kind: Service
metadata:
  name: eureka
  labels:
    app: eureka
spec:
  type: NodePort
  ports:
    - port: 10086
      name: eureka
      targetPort: 10086
  selector:
    app: eureka
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: eureka
spec:
  serviceName: "eureka"
  replicas: 2
  selector:
    matchLabels:
      app: eureka
  template:
    metadata:
      labels:
        app: eureka
    spec:
      imagePullSecrets:
        - name: $SECRET_NAME
      containers:
        - name: eureka
          image: $IMAGE_NAME
          ports:
            - containerPort: 10086
          env:
            - name: MY_POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: EUREKA_SERVER
              value: "http://eureka-0.eureka:10086/eureka/,http://eureka-1.eureka:10086/eureka/"
            - name: EUREKA_INSTANCE_HOSTNAME
              value: ${MY_POD_NAME}.eureka
  podManagementPolicy: "Parallel"

application.yml

server:
  port: ${PORT:10086}
spring:
  application:
    name: eureka
eureka:
  server:
    # eviction interval: how often to scan for expired services (default 60*1000 ms)
    eviction-interval-timer-in-ms: 5000
    enable-self-preservation: false
    use-read-only-response-cache: false
  client:
    # how often the eureka client fetches the service registry (default 30s)
    registry-fetch-interval-seconds: 5
    serviceUrl:
      defaultZone: ${EUREKA_SERVER:http://127.0.0.1:${server.port}/eureka/}
  instance:
    # heartbeat interval: time between one heartbeat and the next (default 30s)
    lease-renewal-interval-in-seconds: 5
    # lease expiration: how long to wait for the next heartbeat after receiving one; must exceed the heartbeat interval (default 90s)
    lease-expiration-duration-in-seconds: 10
    instance-id: ${EUREKA_INSTANCE_HOSTNAME:${spring.application.name}}:${server.port}@${random.long(1000000,9999999)}
    hostname: ${EUREKA_INSTANCE_HOSTNAME:${spring.application.name}}

4.2 zuul

Change the eureka address in application.yml

http://eureka-0.eureka:10086/eureka/,http://eureka-1.eureka:10086/eureka/

deploy.yml

---
apiVersion: v1
kind: Service
metadata:
  name: zuul
  labels:
    app: zuul
spec:
  type: NodePort
  ports:
    - port: 10020
      name: zuul
      targetPort: 10020
  selector:
    app: zuul
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: zuul
spec:
  serviceName: "zuul"
  replicas: 2
  selector:
    matchLabels:
      app: zuul
  template:
    metadata:
      labels:
        app: zuul
    spec:
      imagePullSecrets:
        - name: $SECRET_NAME
      containers:
        - name: zuul
          image: $IMAGE_NAME
          ports:
            - containerPort: 10020
  podManagementPolicy: "Parallel"

Push to GitLab

4.3 admin

application.yml

http://eureka-0.eureka:10086/eureka/,http://eureka-1.eureka:10086/eureka/

deploy.yml

---
apiVersion: v1
kind: Service
metadata:
  name: admin
  labels:
    app: admin
spec:
  type: NodePort
  ports:
    - port: 9001
      name: admin
      targetPort: 9001
  selector:
    app: admin
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: admin
spec:
  serviceName: "admin"
  replicas: 2
  selector:
    matchLabels:
      app: admin
  template:
    metadata:
      labels:
        app: admin
    spec:
      imagePullSecrets:
        - name: $SECRET_NAME
      containers:
        - name: admin
          image: $IMAGE_NAME
          ports:
            - containerPort: 9001
  podManagementPolicy: "Parallel"

Push to GitLab

4.4 gathering

application.yml

deploy.yml

---
apiVersion: v1
kind: Service
metadata:
  name: gathering
  labels:
    app: gathering
spec:
  type: NodePort
  ports:
    - port: 9002
      name: gathering
      targetPort: 9002
  selector:
    app: gathering
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: gathering
spec:
  serviceName: "gathering"
  replicas: 2
  selector:
    matchLabels:
      app: gathering
  template:
    metadata:
      labels:
        app: gathering
    spec:
      imagePullSecrets:
        - name: $SECRET_NAME
      containers:
        - name: gathering
          image: $IMAGE_NAME
          ports:
            - containerPort: 9002
  podManagementPolicy: "Parallel"

Push to GitLab
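Each deploy.yml above leaves `$IMAGE_NAME` and `$SECRET_NAME` as placeholders to be filled in at deploy time. How the pipeline does this is not shown here, so the following is only a sketch of one way to substitute them with sed. `render_deploy` is a hypothetical helper, and the image path in the usage comment is an assumed example built from the Harbor address and project name used earlier.

```shell
# Print a deploy.yml with the $IMAGE_NAME and $SECRET_NAME placeholders filled in.
# Usage: render_deploy TEMPLATE IMAGE_NAME SECRET_NAME
render_deploy() {
  template=$1
  image_name=$2
  secret_name=$3
  # \$ matches a literal dollar sign; '|' is the delimiter because image names contain '/'.
  sed -e 's|\$IMAGE_NAME|'"$image_name"'|g' \
      -e 's|\$SECRET_NAME|'"$secret_name"'|g' \
      "$template"
}

# Assumed example (image path is illustrative):
# render_deploy deploy.yml 192.168.10.40:85/tensquare/tensquare_eureka_server:latest registry-auth-secret | kubectl apply -f -
```

Writing the result to standard output keeps the template in Git unchanged, and the output can be piped straight into `kubectl apply -f -`.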

5. Grant the K8S Cluster Access to Harbor

Run on every k8s host

docker login -u tom -p Zc120604 192.168.10.40:85

Run on the master

kubectl create secret docker-registry registry-auth-secret --docker-server=192.168.10.40:85 --docker-username=tom --docker-password=Zc120604 --docker-email=[email protected]

6. Build the Microservices

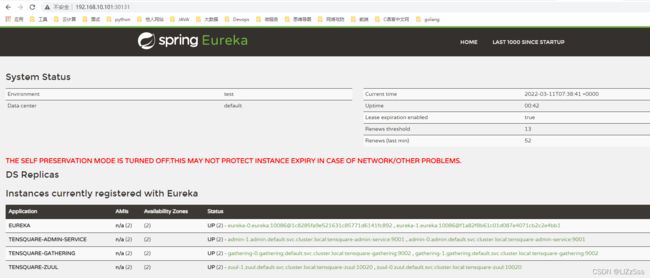

6.1 eureka

[root@k8s-master ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

eureka-0 1/1 Running 0 20s

eureka-1 1/1 Running 0 20s

nfs-client-provisioner-7d8d58969b-gpd7v 1/1 Running 3 3d

Access

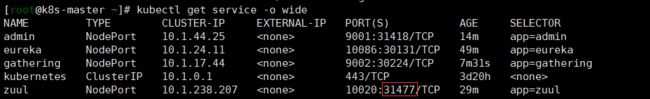

[root@k8s-master ~]# kubectl get service -o wide

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

eureka NodePort 10.1.24.11 <none> 10086:30131/TCP 2m43s app=eureka

kubernetes ClusterIP 10.1.0.1 <none> 443/TCP 3d19h <none>

6.2 zuul

Manually upload the parent project dependency to the shared Maven repository directory on NFS

[root@k8s-master /opt/nfs/maven/com/tensquare]# pwd

/opt/nfs/maven/com/tensquare

[root@k8s-master /opt/nfs/maven/com/tensquare]# ls

tensquare_common

[root@k8s-master /opt/nfs/maven/com/tensquare]# rz -E

rz waiting to receive.

[root@k8s-master /opt/nfs/maven/com/tensquare]# ls

tensquare_common tensquare_parent.zip

[root@k8s-master /opt/nfs/maven/com/tensquare]# unzip tensquare_parent.zip

Archive: tensquare_parent.zip

creating: tensquare_parent/

creating: tensquare_parent/1.0-SNAPSHOT/

inflating: tensquare_parent/1.0-SNAPSHOT/maven-metadata-local.xml

inflating: tensquare_parent/1.0-SNAPSHOT/resolver-status.properties

inflating: tensquare_parent/1.0-SNAPSHOT/tensquare_parent-1.0-SNAPSHOT.pom

inflating: tensquare_parent/1.0-SNAPSHOT/_remote.repositories

inflating: tensquare_parent/maven-metadata-local.xml

[root@k8s-master /opt/nfs/maven/com/tensquare]# ls

tensquare_common tensquare_parent tensquare_parent.zip

[root@k8s-master /opt/nfs/maven/com/tensquare]# rm -rf tensquare_parent.zip

[root@k8s-master /opt/nfs/maven/com/tensquare]# ls

tensquare_common tensquare_parent

6.3 admin

6.4 gathering

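7. Test the Database with Postman

Each microservice's application.yml sets the MySQL address to 192.168.10.30, so we keep using the database on the machine from the earlier experiments.

The production server address is now a k8s node address, and the access port is the mapped NodePort.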

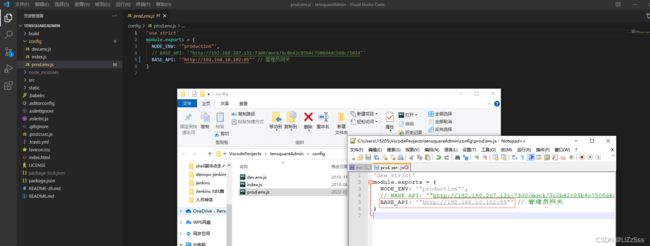

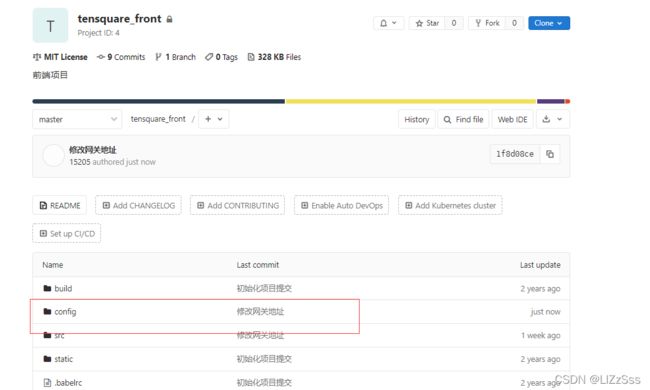

8. Connect the Frontend to the Backend

Single-node frontend: see "Single-Node Frontend"

Two-node frontend: see "Two-Node Frontend"

Install Nginx on both k8s-node machines

yum -y install epel-release

yum -y install nginx

Edit nginx.conf on node1

vi /etc/nginx/nginx.conf

#-----------------------------

server {

listen 9090;

listen [::]:9090;

server_name _;

root /usr/share/nginx/html;

Start nginx

systemctl enable nginx && systemctl start nginx

Edit nginx.conf on node2

......

include /etc/nginx/conf.d/*.conf;

upstream zuulServer{

server 192.168.10.101:31477 weight=1;

server 192.168.10.102:31477 weight=1;

}

server {

listen 85;

listen [::]:85;

server_name _;

root /usr/share/nginx/html;

# Load configuration files for the default server block.

include /etc/nginx/default.d/*.conf;

location / {

proxy_pass http://zuulServer/;

}

......

Start nginx

systemctl enable nginx && systemctl start nginx