Deploying Storage Classes in a k8s Cluster: StorageClass Essentials

What It Is

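A StorageClass connects to a storage plugin (the provisioner) and dynamically creates a PV whenever a PVC requests storage. In short, it is another way of consuming NFS storage that is set up before any Pod is created: define the class once, and from then on there is no need to declare PVs by hand, because volumes of this class are created on the NFS share and mounted automatically.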

Example

Tip: every node must also have nfs-utils installed, otherwise the volume cannot be mounted.
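A quick way to check a node is to look for the `mount.nfs` helper that nfs-utils normally installs. This is only a sketch, assuming a CentOS-style node; the function name `has_nfs_client` is made up for illustration.

```shell
# Hypothetical helper: succeeds if the NFS mount helper is present on this node.
has_nfs_client() {
  command -v mount.nfs >/dev/null 2>&1 || [ -x /sbin/mount.nfs ] || [ -x /usr/sbin/mount.nfs ]
}

if has_nfs_client; then
  nfs_msg="mount.nfs found: this node can mount NFS volumes"
else
  nfs_msg="mount.nfs missing: install nfs-utils (for example: yum install -y nfs-utils)"
fi
echo "$nfs_msg"
```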

Steps:

1. Set up NFS

vim /etc/exports

/nfsdata *(rw,sync,no_root_squash)

[root@master ~]# systemctl start rpcbind

[root@master ~]# systemctl start nfs-server

[root@master ~]# systemctl enable rpcbind

[root@master ~]# systemctl enable nfs-server

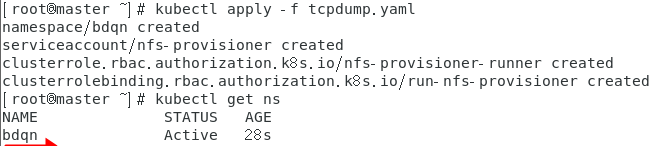

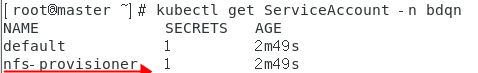
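Editing /etc/exports by hand works, but the same step could also be scripted so that it is safe to repeat. The sketch below is one possible way to do that; `add_export` is a hypothetical helper, not part of nfs-utils, and it hard-codes the export options used above.

```shell
# Hypothetical helper: append an export line to an exports file only if it is not already there.
# $1 = exports file (normally /etc/exports), $2 = directory to export
add_export() {
  line="$2 *(rw,sync,no_root_squash)"
  touch "$1" || return 1
  if grep -qxF -- "$line" "$1"; then
    echo "already exported: $2"
  else
    echo "$line" >> "$1"
    echo "added export: $2"
  fi
}
```

On the NFS server this might be used as `mkdir -p /nfsdata` followed by `add_export /etc/exports /nfsdata`, then `exportfs -r` to reload the export table and `showmount -e localhost` to list what is currently exported.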

2. Set up RBAC permissions

RBAC stands for Role-Based Access Control.

[root@master ~]# vim tcpdump.yaml

kind: Namespace
apiVersion: v1
metadata:
  name: bdqn #a custom namespace
---
apiVersion: v1
kind: ServiceAccount #a service account; besides service accounts there are also ordinary user accounts
metadata:
  name: nfs-provisioner #pick a name
  namespace: bdqn #the custom namespace defined above
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole #a cluster-wide role
metadata:
  name: nfs-provisioner-runner
  namespace: bdqn #has no effect: ClusterRole is cluster-scoped, so this line can be omitted
rules: #the rules, i.e. which permissions are granted
  - apiGroups: [""] #"" means the core API group, not all API groups
    resources: ["persistentvolumes"] #which operations are allowed on PVs
    verbs: ["get", "list", "watch", "create", "delete"] #get, create, delete and so on
  - apiGroups: [""]
    resources: ["persistentvolumeclaims"] #which operations are allowed on PVCs
    verbs: ["get", "list", "watch", "update"] #the specific permissions
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["watch", "create", "update", "patch"]
  - apiGroups: [""]
    resources: ["services", "endpoints"]
    verbs: ["get", "create", "list", "watch", "update"]
  - apiGroups: ["extensions"] #PodSecurityPolicy was removed in Kubernetes 1.25; this rule only matters on older clusters
    resources: ["podsecuritypolicies"]
    resourceNames: ["nfs-provisioner"]
    verbs: ["use"]
---
kind: ClusterRoleBinding #kinds starting with Cluster apply to the whole k8s cluster
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: run-nfs-provisioner
subjects:
  - kind: ServiceAccount
    name: nfs-provisioner
    namespace: bdqn
roleRef:
  kind: ClusterRole
  name: nfs-provisioner-runner
  apiGroup: rbac.authorization.k8s.io

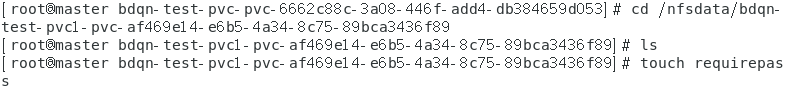

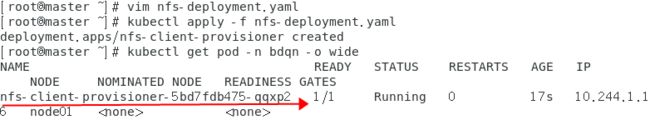
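Once the RBAC manifest has been applied, the binding can be spot-checked with `kubectl auth can-i`, impersonating the service account. This is a minimal sketch, assuming kubectl on the master runs with admin rights (impersonation via `--as` needs them); `can_i` and `check_provisioner_rbac` are hypothetical helper names.

```shell
# Assumption: kubectl is configured with admin rights, which --as requires.
SA_USER="system:serviceaccount:bdqn:nfs-provisioner"

# Ask the API server whether the service account may perform verb $1 on resource $2.
can_i() {
  printf '%s %s: ' "$1" "$2"
  kubectl auth can-i "$1" "$2" --as="$SA_USER"
}

# Hypothetical helper: spot-check a few of the rules granted by the ClusterRole.
check_provisioner_rbac() {
  can_i create persistentvolumes
  can_i delete persistentvolumes
  can_i update persistentvolumeclaims
  can_i list storageclasses
}
```

After `kubectl apply -f tcpdump.yaml`, calling `check_provisioner_rbac` should print `yes` on every line if the ClusterRoleBinding took effect.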

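3. Create nfs-deployment.yaml

Purpose: this Deployment is the middleman. It runs the provisioner that creates PVs on the NFS share and makes them available to the cluster.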

[root@master sc]# vim nfs-deployment.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nfs-client-provisioner
  namespace: bdqn
spec:
  selector:
    matchLabels:
      app: nfs-client-provisioner
  replicas: 1
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        app: nfs-client-provisioner
    spec:
      serviceAccountName: nfs-provisioner #the ServiceAccount created in step 2
      containers:
        - name: nfs-client-provisioner
          image: registry.cn-hangzhou.aliyuncs.com/open-ali/nfs-client-provisioner
          imagePullPolicy: IfNotPresent
          volumeMounts:
            - name: nfs-client-root
              mountPath: /persistentvolumes
          env:
            - name: PROVISIONER_NAME
              value: bdqn-test
            - name: NFS_SERVER
              value: 172.16.0.168
            - name: NFS_PATH
              value: /nfsdata
      volumes:
        - name: nfs-client-root
          nfs:
            server: 172.16.0.168
            path: /nfsdata

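Key parameters explained:

The nfs-client-provisioner image uses the NFS driver built into k8s to mount the remote NFS server onto a local directory (here "local" means a directory inside the container). It then registers itself as a storage provisioner, which is what the StorageClass refers to.

It is configured through environment variables:

env:
  - name: PROVISIONER_NAME #the provisioner name. Important: remember this name
    value: bdqn-test #bdqn-test is the name the StorageClass must reference
  - name: NFS_SERVER #the address of the NFS server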

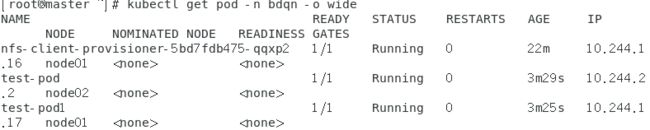

Verification: if the image is not present locally, it is pulled from the registry.
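The apply-and-verify step could be wrapped up roughly as follows. This is a sketch rather than a fixed procedure: `deploy_provisioner` is a hypothetical helper, the 180-second timeout is an arbitrary choice, and the file name and labels are the ones used in this step.

```shell
# Hypothetical helper: apply the Deployment, wait for it to become ready, then show its Pod.
# Each command runs only if the previous one succeeded.
deploy_provisioner() {
  kubectl apply -f nfs-deployment.yaml &&
  kubectl rollout status deployment/nfs-client-provisioner -n bdqn --timeout=180s &&
  kubectl get pods -n bdqn -l app=nfs-client-provisioner -o wide
}
```

If the rollout does not finish, `kubectl describe pod -n bdqn -l app=nfs-client-provisioner` usually shows whether the image pull or the NFS mount is the step that failed.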

4. Create the StorageClass resource

You can look at the built-in help for sc first:

kubectl explain sc

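[root@master ~]# vim storageclass.yaml #a minimal sc resource, 7 lines in total

apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: storageclass
  namespace: bdqn #has no effect: StorageClass is cluster-scoped, so this line can be omitted
provisioner: bdqn-test #the storage provisioner; must match the value of PROVISIONER_NAME in the Deployment's env
reclaimPolicy: Retain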
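Because a mismatch between `provisioner` and `PROVISIONER_NAME` leaves every PVC stuck in Pending, it can be worth comparing the two values straight from the cluster. The sketch below assumes both objects have already been applied; `provisioner_matches` is a hypothetical helper name.

```shell
# Hypothetical helper: succeed only if the StorageClass provisioner equals
# the PROVISIONER_NAME env value of the provisioner Deployment.
provisioner_matches() {
  sc_prov=$(kubectl get sc storageclass -o jsonpath='{.provisioner}')
  env_prov=$(kubectl get deployment nfs-client-provisioner -n bdqn \
    -o jsonpath='{.spec.template.spec.containers[0].env[?(@.name=="PROVISIONER_NAME")].value}')
  echo "StorageClass provisioner:   $sc_prov"
  echo "Deployment PROVISIONER_NAME: $env_prov"
  [ -n "$sc_prov" ] && [ "$sc_prov" = "$env_prov" ]
}
```

A typical call would be `provisioner_matches || echo "names differ, PVCs will stay Pending"`.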

5. Create a PVC to verify

[root@master sc]# vim test-pvc.yaml

kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: test-pvc #the name that Pods or other resources use to reference this claim
  namespace: bdqn
spec:
  storageClassName: storageclass
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 200Mi #requested storage size
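After `kubectl apply -f test-pvc.yaml`, the claim should move to the Bound phase once the provisioner has created a PV for it. One way to wait for that is a small polling loop; this is a sketch under the assumption that a 2-second interval is acceptable, and `wait_for_pvc_bound` is a hypothetical helper.

```shell
# Hypothetical helper: poll a PVC until its phase is Bound.
# $1 = PVC name, $2 = namespace, $3 = maximum number of attempts (default 30)
wait_for_pvc_bound() {
  tries=${3:-30}
  phase=""
  while [ "$tries" -gt 0 ]; do
    phase=$(kubectl get pvc "$1" -n "$2" -o jsonpath='{.status.phase}')
    if [ "$phase" = "Bound" ]; then
      echo "$1 is Bound"
      return 0
    fi
    tries=$((tries - 1))
    sleep 2
  done
  echo "$1 is still ${phase:-unknown}; check the provisioner logs" >&2
  return 1
}
```

For this example the call would be `wait_for_pvc_bound test-pvc bdqn`, and `kubectl get pv` should then list a PV whose CLAIM column reads bdqn/test-pvc.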

6. Create a Pod to test

vim test-pod.yaml

apiVersion: v1
kind: Pod
metadata:
  name: test-pod
  namespace: bdqn
spec:
  containers:
    - name: test-pod
      image: busybox
      args:
        - /bin/sh
        - -c
        - sleep 3000
      volumeMounts:
        - name: nfs-pv
          mountPath: /test #any directory of your choice
  volumes:
    - name: nfs-pv
      persistentVolumeClaim:
        claimName: test-pvc #references the PVC created above
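To confirm the whole chain end to end, a file written inside the Pod should show up under /nfsdata on the NFS server. The sketch below assumes the Pod is already Running and that the provisioner names its directories `<namespace>-<pvcName>-<pvName>`, which is how nfs-client-provisioner commonly behaves but is not stated in the steps above; both helper names are hypothetical.

```shell
# Hypothetical helper: write a file through the Pod's /test mount and read it back.
write_test_file() {
  kubectl exec -n bdqn test-pod -- sh -c 'echo hello > /test/hello.txt && cat /test/hello.txt'
}

# Hypothetical helper: print the directory on the NFS server expected to back the claim.
# Assumption: the provisioner uses the <namespace>-<pvcName>-<pvName> naming scheme.
backing_dir() {
  pv=$(kubectl get pvc test-pvc -n bdqn -o jsonpath='{.spec.volumeName}')
  printf '/nfsdata/%s-%s-%s\n' bdqn test-pvc "$pv"
}
```

After `write_test_file`, running `ls "$(backing_dir)"` on the NFS server should list hello.txt if the naming assumption holds; otherwise `ls /nfsdata` shows which directory was actually created.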