[The Krusty Krab Is Open Today] Paper Study Notes 10-04

Language-Based Image Editing with Recurrent Attentive Models[paper]

- Mainly about color

Abstract

We investigate the problem of Language-Based Image Editing (LBIE). Given a source image and a natural language description, we want to generate a target image by editing the source image based on the description. We propose a generic modeling framework for two subtasks of LBIE: language-based image segmentation and image colorization. The framework uses recurrent attentive models to fuse image and language features. Instead of using a fixed step size, we introduce for each region of the image a termination gate to dynamically determine after each inference step whether to continue extrapolating additional information from the textual description. The effectiveness of the framework is validated on three datasets. First, we introduce a synthetic dataset, called CoSaL, to evaluate the end-to-end performance of our LBIE system. Second, we show that the framework leads to state-of-the-art performance on image segmentation on the ReferIt dataset. Third, we present the first language-based colorization result on the Oxford-102 Flowers dataset.

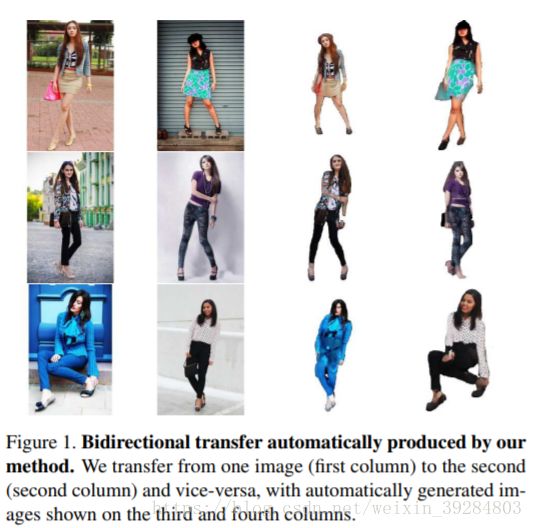

Human Appearance Transfer[paper]

Abstract

We propose an automatic person-to-person appearance transfer model based on explicit parametric 3d human representations and learned, constrained deep translation network architectures for photographic image synthesis. Given a single source image and a single target image, each corresponding to different human subjects, wearing different clothing and in different poses, our goal is to photorealistically transfer the appearance from the source image onto the target image while preserving the target shape and clothing segmentation layout. Our solution to this new problem is formulated in terms of a computational pipeline that combines (1) 3d human pose and body shape estimation from monocular images, (2) identifying 3d surface color elements (mesh triangles) visible in both images, that can be transferred directly using barycentric procedures, and (3) predicting surface appearance missing in the first image but visible in the second one using deep learning-based image synthesis techniques. Our model achieves promising results as supported by a perceptual user study where the participants rated around 65% of our results as good, very good or perfect, as well as in automated tests (Inception scores and a Faster-RCNN human detector responding very similarly to real and model generated images). We further show how the proposed architecture can be profiled to automatically generate images of a person dressed with different clothing transferred from a person in another image, opening paths for applications in entertainment and photo-editing (e.g. embodying and posing as friends or famous actors), the fashion industry, or affordable online shopping of clothing.
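The abstract only says that colors on triangles visible in both images are "transferred directly using barycentric procedures" and gives no further detail. The following is a minimal sketch of what such a step could look like in 2D, assuming each mesh triangle has already been projected into both images. The function names (`barycentric_weights`, `apply_weights`, `transfer_location`) and the toy triangles are illustrative assumptions, not the authors' code.

```python
def barycentric_weights(p, tri):
    """Barycentric weights (wa, wb, wc) of 2D point p w.r.t. triangle tri = (a, b, c)."""
    px, py = p
    (ax, ay), (bx, by), (cx, cy) = tri
    det = (by - cy) * (ax - cx) + (cx - bx) * (ay - cy)
    if det == 0:
        raise ValueError("degenerate triangle")
    wa = ((by - cy) * (px - cx) + (cx - bx) * (py - cy)) / det
    wb = ((cy - ay) * (px - cx) + (ax - cx) * (py - cy)) / det
    return wa, wb, 1.0 - wa - wb


def apply_weights(weights, tri):
    """Point that has the given barycentric weights inside triangle tri."""
    return tuple(sum(w * v[k] for w, v in zip(weights, tri)) for k in range(2))


def transfer_location(p_target, tri_target, tri_source):
    """Hypothetical helper: where to sample the source image for a target pixel.

    The pixel keeps its barycentric weights; only the triangle vertices change.
    """
    return apply_weights(barycentric_weights(p_target, tri_target), tri_source)


# Toy example: the same mesh triangle as projected into the two images.
tri_target = ((0.0, 0.0), (1.0, 0.0), (0.0, 1.0))
tri_source = ((0.0, 0.0), (2.0, 0.0), (0.0, 2.0))
print(transfer_location((0.25, 0.25), tri_target, tri_source))
```

Because the weights are defined relative to the triangle's own vertices, the same relative position is found in both projections regardless of pose, which is presumably why a direct transfer is possible for triangles visible in both images.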

Every Smile is Unique: Landmark-guided Diverse Smile Generation[paper]

Abstract

Each smile is unique: one person surely smiles in different ways (e.g. closing/opening the eyes or mouth). Given one input image of a neutral face, can we generate multiple smile videos with distinctive characteristics? To tackle this one-to-many video generation problem, we propose a novel deep learning architecture named Conditional MultiMode Network (CMM-Net). To better encode the dynamics of facial expressions, CMM-Net explicitly exploits facial landmarks for generating smile sequences. Specifically, a variational auto-encoder is used to learn a facial landmark embedding. This single embedding is then exploited by a conditional recurrent network which generates a landmark embedding sequence conditioned on a specific expression (e.g. spontaneous smile). Next, the generated landmark embeddings are fed into a multi-mode recurrent landmark generator, producing a set of landmark sequences still associated to the given smile class but clearly distinct from each other. Finally, these landmark sequences are translated into face videos. Our experimental results demonstrate the effectiveness of our CMM-Net in generating realistic videos of multiple smile expressions.

InverseFaceNet: Deep Monocular Inverse Face Rendering[paper]

Abstract

We introduce InverseFaceNet, a deep convolutional inverse rendering framework for faces that jointly estimates facial pose, shape, expression, reflectance and illumination from a single input image. By estimating all parameters from just a single image, advanced editing possibilities on a single face image, such as appearance editing and relighting, become feasible in real time. Most previous learning-based face reconstruction approaches do not jointly recover all dimensions, or are severely limited in terms of visual quality. In contrast, we propose to recover high-quality facial pose, shape, expression, reflectance and illumination using a deep neural network that is trained using a large, synthetically created training corpus. Our approach builds on a novel loss function that measures model-space similarity directly in parameter space and significantly improves reconstruction accuracy. We further propose a self-supervised bootstrapping process in the network training loop, which iteratively updates the synthetic training corpus to better reflect the distribution of real-world imagery. We demonstrate that this strategy outperforms completely synthetically trained networks. Finally, we show high-quality reconstructions and compare our approach to several state-of-the-art approaches.

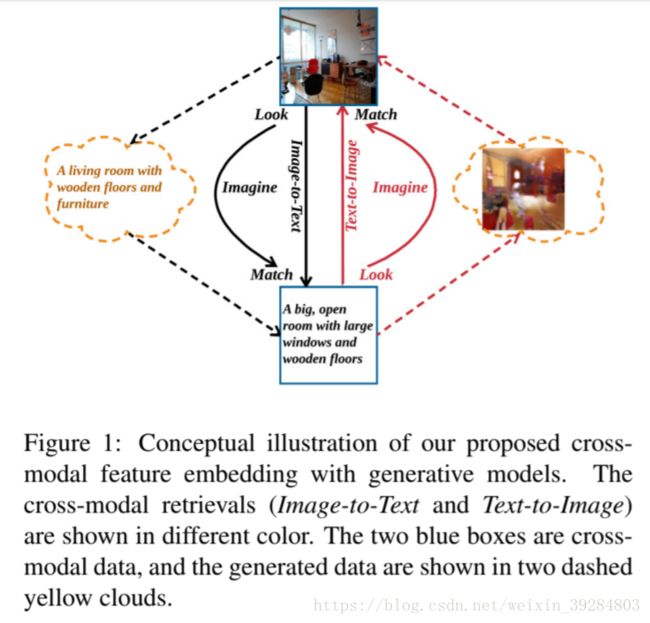

Look, Imagine and Match: Improving Textual-Visual Cross-Modal Retrieval with Generative Models[paper]

Abstract

Textual-visual cross-modal retrieval has been a hot research topic in both computer vision and natural language processing communities. Learning appropriate representations for multi-modal data is crucial for the cross-modal retrieval performance. Unlike existing image-text retrieval approaches that embed image-text pairs as single feature vectors in a common representational space, we propose to incorporate generative processes into the cross-modal feature embedding, through which we are able to learn not only the global abstract features but also the local grounded features. Extensive experiments show that our framework can match images and sentences with complex content well, and achieve state-of-the-art cross-modal retrieval results on the MSCOCO dataset.

Introduction (part)

This paper focuses on the problem in multi-modal information retrieval, which is to retrieve the images (resp. texts) that are relevant to a given textual (resp. image) query. The fundamental challenge in cross-modal retrieval lies in the heterogeneity of different modalities of data. Thus, the learning of a common representation shared by data with different modalities plays the key role in cross-modal retrieval. For textual-visual cross-modal embedding, the common way is to first encode individual modalities into their respective features, and then map them into a common semantic space, which is often optimized via a ranking loss that encourages the similarity of the mapped features of ground-truth image-text pairs to be greater than that of any other negative pair. Once the common representation is obtained, the relevance / similarity between the two modalities can be easily measured by computing the distance (e.g. l2) between their representations in the common space. Although the feature representations in the learned common representation space have been successfully used to describe high-level semantic concepts of multi-modal data, they are not sufficient to retrieve images with detailed local similarity (e.g., spatial layout) or sentences with word-level similarity. In contrast, as humans, we can relate a textual (resp. image) query to relevant images (resp. texts) more accurately, if we pay more attention to the finer details of the images (resp. texts). In other words, if we can ground the representation of one modality to the objects in the other modality, we can learn a better mapping. Inspired by this concept, in this paper we propose to incorporate generative models into textual-visual feature embedding for cross-modal retrieval.
In particular, in addition to the conventional cross-modal feature embedding at the global semantic level, we also introduce an additional cross-modal feature embedding at the local level, which is grounded by two generative models: image-to-text and text-to-image. Figure 1 illustrates the concept of our proposed cross-modal feature embedding with generative models at high level, which includes three learning steps: look, imagine, and match. Given a query in image or text, we first look at the query to extract an abstract representation. Then, we imagine what the target item (text or image) in the other modality should look like, and get a more concrete grounded representation. We accomplish this by asking the representation of one modality (to be estimated) to generate the item in the other modality, and comparing the generated items with gold standards. After that, we match the right image-text pairs using the relevance score which is calculated based on a combination of grounded and abstract representations.
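The conventional embedding step described in the introduction (a ranking loss that pushes ground-truth image-text pairs closer than any negative pair, with l2 distance in the common space) can be sketched roughly as follows. This is a generic hinge-style formulation, not necessarily the exact loss used in the paper. The `margin` value, the function names, and the toy embeddings are assumptions made for illustration.

```python
import math


def l2_distance(u, v):
    """Euclidean distance between two embedding vectors."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))


def ranking_loss(image_embs, text_embs, margin=0.2):
    """Bidirectional hinge ranking loss; image_embs[i] is paired with text_embs[i].

    A term is zero once the matching pair is closer than the negative pair
    by at least `margin` (an assumed hyperparameter).
    """
    n = len(image_embs)
    loss = 0.0
    for i in range(n):
        pos = l2_distance(image_embs[i], text_embs[i])
        for j in range(n):
            if j == i:
                continue
            # Image query i against the wrong text j.
            loss += max(0.0, margin + pos - l2_distance(image_embs[i], text_embs[j]))
            # Text query i against the wrong image j.
            loss += max(0.0, margin + pos - l2_distance(image_embs[j], text_embs[i]))
    return loss


def retrieve(query_emb, candidate_embs):
    """Indices of candidates sorted from most to least relevant (smallest distance first)."""
    return sorted(range(len(candidate_embs)),
                  key=lambda k: l2_distance(query_emb, candidate_embs[k]))


images = [[0.0, 0.0], [1.0, 1.0]]
texts_aligned = [[0.0, 0.0], [1.0, 1.0]]
texts_swapped = [[1.0, 1.0], [0.0, 0.0]]
print(ranking_loss(images, texts_aligned))   # well-separated pairs incur no loss
print(ranking_loss(images, texts_swapped))   # mismatched pairs are penalized
print(retrieve(images[1], texts_aligned))
```

A loss of this kind only constrains global distances in the common space, which is consistent with the limitation the authors point out: it does not by itself enforce local, fine-grained correspondence between the two modalities.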

The contributions of this paper are twofold.

First, we incorporate two generative models into the conventional textual-visual feature embedding, which is able to learn concrete grounded representations that capture the detailed similarity between the two modalities. Second, we conduct extensive experiments on the benchmark dataset, MSCOCO. Our empirical results demonstrate that the combination of the grounded and the abstract representations can significantly improve the state-of-the-art performance on cross-modal image-caption retrieval.

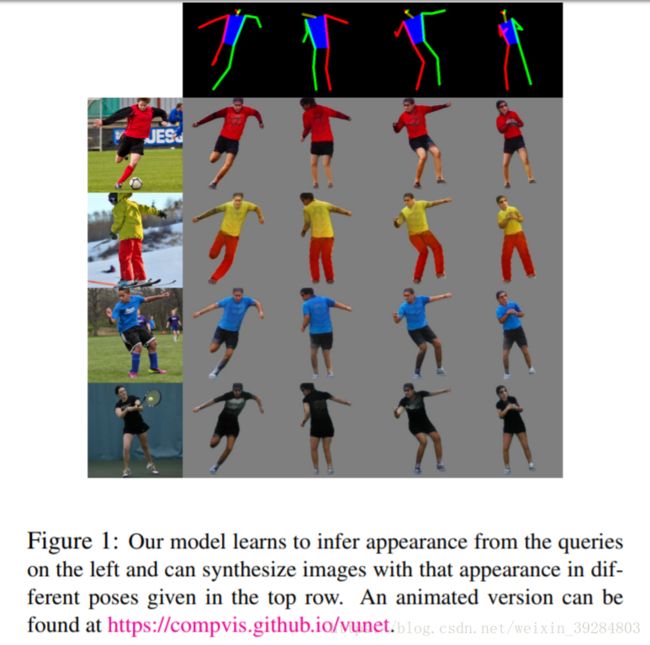

A Variational U-Net for Conditional Appearance and Shape Generation[paper]

Abstract

Deep generative models have demonstrated great performance in image synthesis. However, results deteriorate in case of spatial deformations, since they generate images of objects directly, rather than modeling the intricate interplay of their inherent shape and appearance. We present a conditional U-Net [30] for shape-guided image generation, conditioned on the output of a variational autoencoder for appearance. The approach is trained end-to-end on images, without requiring samples of the same object with varying pose or appearance. Experiments show that the model enables conditional image generation and transfer. Therefore, either shape or appearance can be retained from a query image, while freely altering the other. Moreover, appearance can be sampled due to its stochastic latent representation, while preserving shape. In quantitative and qualitative experiments on COCO [20], DeepFashion [21, 23], shoes [43], Market-1501 [47] and handbags [49] the approach demonstrates significant improvements over the state-of-the-art.

- These little figures all look the same in their details

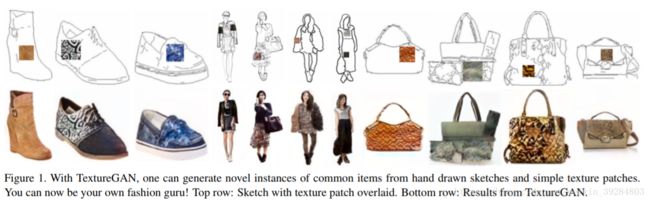

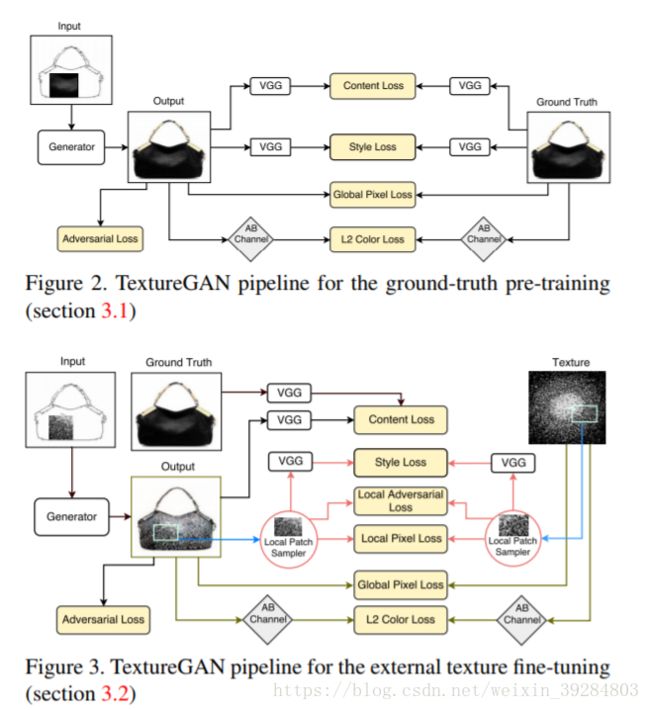

TextureGAN: Controlling Deep Image Synthesis with Texture Patches[paper]

Abstract

In this paper, we investigate deep image synthesis guided by sketch, color, and texture. Previous image synthesis methods can be controlled by sketch and color strokes but we are the first to examine texture control. We allow a user to place a texture patch on a sketch at arbitrary locations and scales to control the desired output texture. Our generative network learns to synthesize objects consistent with these texture suggestions. To achieve this, we develop a local texture loss in addition to adversarial and content loss to train the generative network. We conduct experiments using sketches generated from real images and textures sampled from a separate texture database and results show that our proposed algorithm is able to generate plausible images that are faithful to user controls. Ablation studies show that our proposed pipeline can generate more realistic images than adapting existing methods directly.

Introduction (part)

One of the “Grand Challenges” of computer graphics is to allow anyone to author realistic visual content. The traditional 3d rendering pipeline can produce astonishing and realistic imagery, but only in the hands of talented and trained artists. The idea of short-circuiting the traditional 3d modeling and rendering pipeline dates back at least 20 years to image-based rendering techniques [33]. These techniques and later “image-based” graphics approaches focus on re-using image content from a database of training images [22]. For a limited range of image synthesis and editing scenarios, these non-parametric techniques allow non-experts to author photorealistic imagery. In the last two years, the idea of direct image synthesis without using the traditional rendering pipeline has gotten significant interest because of promising results from deep network architectures such as Variational Autoencoders (VAEs) [21] and Generative Adversarial Networks (GANs) [11]. However, there has been little investigation of fine-grained texture control in deep image synthesis (as opposed to coarse texture control through “style transfer” methods [9]). In this paper we introduce TextureGAN, the first deep image synthesis method which allows users to control object texture. Users “drag” one or more example textures onto sketched objects and the network realistically applies these textures to the indicated objects. This “texture fill” operation is difficult for a deep network to learn for several reasons:

(1) Existing deep networks aren’t particularly good at synthesizing high-resolution texture details even without user constraints. Typical results from recent deep image synthesis methods are at low resolution (e.g. 64x64) where texture is not prominent or they are higher resolution but relatively flat (e.g. birds with sharp boundaries but few fine-scale details).

(2) For TextureGAN, the network must learn to propagate textures to the relevant object boundaries; it is undesirable to leave an object partially textured or to have the texture spill into the background. To accomplish this, the network must implicitly segment the sketched objects and perform texture synthesis, tasks which are individually difficult.

(3) The network should additionally learn to foreshorten textures as they wrap around 3d object shapes, to shade textures according to ambient occlusion and lighting direction, and to understand that some object parts (handbag clasps) are not to be textured but should occlude the texture.

These texture manipulation steps go beyond traditional texture synthesis in which a texture is assumed to be stationary. To accomplish these steps the network needs a rich implicit model of the visual world that involves some partial 3d understanding. Fortunately, the difficulty of this task is somewhat balanced by the availability of training data. Like recent unsupervised learning methods based on colorization [47, 23], training pairs can be generated from unannotated images. In our case, input training sketches and texture suggestions are automatically extracted from real photographs which in turn serve as the ground truth for initial training. We introduce local texture loss to further fine-tune our networks to handle diverse textures unseen on ground truth objects.

We make the following contributions:

• We are the first to demonstrate the plausibility of fine-grained texture control in deep image synthesis. In concert with sketched object boundaries, this allows non-experts to author realistic visual content. Our network is feed-forward and thus can run interactively as users modify sketch or texture suggestions.

• We propose a “drag and drop” texture interface where users place particular textures onto sparse, sketched object boundaries. The deep generative network directly operates on these localized texture patches and sketched object boundaries.

• We explore novel losses for training deep image synthesis. In particular we formulate a local texture loss which encourages the generative network to handle new textures never seen on existing objects.
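The excerpt above names a "local texture loss" but does not define it. As a rough illustration of what a patch-level texture comparison can look like, the sketch below compares Gram matrices (channel co-occurrence statistics, familiar from style transfer) of one local patch cropped from a generated image and from a reference texture. This is a stand-in chosen for illustration, computed on raw pixel channels; the loss in the paper may combine other terms and operate on learned features. All names (`crop`, `gram`, `local_gram_loss`) and the toy images are hypothetical.

```python
def crop(image, top, left, size):
    """Flatten a size x size patch; image is a list of rows of per-pixel channel lists."""
    return [px for row in image[top:top + size] for px in row[left:left + size]]


def gram(pixels):
    """Gram matrix of channel responses, averaged over the pixels of a patch."""
    channels = len(pixels[0])
    n = len(pixels)
    return [[sum(p[i] * p[j] for p in pixels) / n for j in range(channels)]
            for i in range(channels)]


def local_gram_loss(generated, reference, top, left, size):
    """Squared difference between the Gram matrices of two co-located patches."""
    g = gram(crop(generated, top, left, size))
    r = gram(crop(reference, top, left, size))
    return sum((g[i][j] - r[i][j]) ** 2
               for i in range(len(g)) for j in range(len(g)))


# Toy 2x2 images with two channels per pixel.
reference = [[[1, 0], [0, 1]],
             [[1, 1], [0, 0]]]
shuffled = [[[0, 0], [1, 1]],
            [[0, 1], [1, 0]]]   # same pixels, different spatial arrangement
flat = [[[1, 1], [1, 1]],
        [[1, 1], [1, 1]]]

print(local_gram_loss(reference, reference, 0, 0, 2))
print(local_gram_loss(shuffled, reference, 0, 0, 2))
print(local_gram_loss(flat, reference, 0, 0, 2))
```

Restricting the comparison to a local patch is what makes such a loss "local": the statistic ignores where pixels sit inside the patch (the shuffled example scores the same as the reference) but is sensitive to which patch of the image is being examined.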