Installing Hadoop + Hive + Spark

- Big data environment installation and configuration

- 1. Virtual machine setup

- 2. Installing Hadoop 3.2.3

- 3. Installing MySQL 5.7.29

- 4. Installing Hive 3.1.2

- 5. Building and installing Spark 2.4.5

Big data environment installation and configuration

Versions: Hadoop 3.2.3 + Hive 3.1.2 + Spark 2.4.5 + MySQL 5.7.29

Machine layout:

| Node | hadoop1 | hadoop2 | hadoop3 |
|---|---|---|---|
| HDFS | NameNode, SecondaryNameNode, DataNode | DataNode | DataNode |
| YARN | ResourceManager, NodeManager | NodeManager | NodeManager |
| ntpd | yes | no | no |

Note: this layout is only suitable for a test cluster; do not configure a production environment this way.

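1. Virtual machine setup

The virtual machines use a minimal OS installation.

Installation reference: https://blog.csdn.net/weixin_42283048/article/details/116702690

Configuration reference: https://www.jianshu.com/p/cb38b14ba9d9

Steps:

1.1 Network configuration

Set the following values in the network interface file (for eth0 on CentOS 7 this is normally /etc/sysconfig/network-scripts/ifcfg-eth0), using a different IPADDR on each node, then restart the interface: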

BOOTPROTO=static

IPADDR=192.168.122.135

NETMASK=255.255.255.0

DNS1=192.168.122.1

GATEWAY=192.168.122.1

ONBOOT=yes

ifdown eth0

ifup eth0

1.2 Hostname mapping

vim /etc/hosts

192.168.122.135 hadoop1

192.168.122.136 hadoop2

192.168.122.137 hadoop3
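A quick way to confirm that the mapping is present on each node is to look the three names up in the hosts file. The following is only a sketch: `lookup_host` and `check_cluster_hosts` are hypothetical helper names introduced here for illustration, not part of any tool used in this guide.

```shell
# lookup_host FILE NAME: print the IP mapped to NAME in a hosts-format file
lookup_host() {
    awk -v name="$2" '
        /^[ \t]*#/ { next }    # skip comment lines
        { for (i = 2; i <= NF; i++) if ($i == name) { print $1; exit } }
    ' "$1"
}

# check_cluster_hosts FILE: report whether hadoop1..hadoop3 are all mapped
check_cluster_hosts() {
    missing=0
    for h in hadoop1 hadoop2 hadoop3; do
        ip=$(lookup_host "$1" "$h")
        if [ -n "$ip" ]; then
            echo "$h -> $ip"
        else
            echo "$h has no entry in $1" >&2
            missing=1
        fi
    done
    return "$missing"
}
```

Running `check_cluster_hosts /etc/hosts` on every node should list all three hosts; a non-zero exit status means at least one entry is missing.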

1.3 Disable the firewall

systemctl stop firewalld

systemctl disable firewalld

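1.3 Set up the Java environment

cd /opt

mkdir module

mkdir software

rpm -qa | grep -i java | xargs -r -n1 sudo rpm -e --nodeps

ls /opt/software/

tar -zxvf /opt/software/jdk-8u212-linux-x64.tar.gz -C /opt/module/

Create the file /etc/profile.d/my_env.sh with the following content, then run source /etc/profile.d/my_env.sh to apply it: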

#JAVA_HOME

export JAVA_HOME=/opt/module/jdk1.8.0_212

export PATH=$PATH:$JAVA_HOME/bin

1.4 Passwordless SSH login

ssh-keygen -t rsa

ssh-copy-id hadoop1

ssh-copy-id hadoop2

ssh-copy-id hadoop3
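Once the keys are copied, it helps to confirm that none of the hosts still asks for a password, because the Hadoop start scripts rely on that. A minimal sketch, assuming the three host names from the hosts file; `check_ssh` is a hypothetical helper, and the `SSH_CMD` variable is only a hook for substituting another command while testing. `BatchMode=yes` makes ssh fail instead of prompting for a password.

```shell
# check_ssh HOST...: try a key-only login on each host and report the result
check_ssh() {
    for h in "$@"; do
        if ${SSH_CMD:-ssh} -o BatchMode=yes -o ConnectTimeout=5 "$h" true 2>/dev/null; then
            echo "$h: ok"
        else
            echo "$h: key login failed"
        fi
    done
}

# Example: check_ssh hadoop1 hadoop2 hadoop3
```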

1.5 Install additional packages

yum install -y epel-release

yum install -y psmisc nc net-tools rsync vim lrzsz ntp libzstd openssl-static tree iotop git

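1.6 Time server

- Time server configuration (must be done as root); hadoop1 acts as the time server

a. Stop the ntpd service and disable its autostart on all nodes

systemctl stop ntpd

systemctl disable ntpd

b. Edit the ntp configuration file

vim /etc/ntp.conf

Make the following changes:

a) Change 1: allow all machines in the 192.168.122.0-192.168.122.255 subnet to query and synchronize time from this machine. Uncomment the restrict line, that is, change

#restrict 192.168.122.0 mask 255.255.255.0 nomodify notrap

to

restrict 192.168.122.0 mask 255.255.255.0 nomodify notrap

b) Change 2: the cluster sits on a LAN and does not use time sources on the internet, so comment out these lines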

#server 0.centos.pool.ntp.org iburst

#server 1.centos.pool.ntp.org iburst

#server 2.centos.pool.ntp.org iburst

#server 3.centos.pool.ntp.org iburst

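c) Addition 3: when this node loses its network connection, it can still use its local clock to provide time synchronization for the other nodes in the cluster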

server 127.127.1.0

fudge 127.127.1.0 stratum 10

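c. Edit the /etc/sysconfig/ntpd file

vim /etc/sysconfig/ntpd

Add the following line (so that the hardware clock is synchronized together with the system time)

SYNC_HWCLOCK=yes

d. Restart the ntpd service

systemctl start ntpd

e. Enable the ntpd service at boot

systemctl enable ntpd

- Configuration on the other machines (must be done as root)

a. On the other machines, schedule a synchronization with the time server every 10 minutes

crontab -e

Add the following cron job:

*/10 * * * * /usr/sbin/ntpdate hadoop1

b. Change the time on any one of these machines

date -s "2017-9-11 11:11:11"

c. After ten minutes, check whether the machine has synchronized with the time server

date

Note: for testing, the 10-minute interval can be reduced to 1 minute to save time.

1.7 Reboot the servers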

reboot

2. Installing Hadoop 3.2.3

Installation reference: https://blog.csdn.net/qq_40421109/article/details/103563455

Run the following on the hadoop1 node.

1. Download the Hadoop package into /opt/software and extract it to /opt/module

cd /opt/software

wget --no-check-certificate https://mirrors.tuna.tsinghua.edu.cn/apache/hadoop/common/hadoop-3.2.3/hadoop-3.2.3.tar.gz

tar -zxvf hadoop-3.2.3.tar.gz -C /opt/module/

2. Append the following to /etc/profile.d/my_env.sh (on hadoop1 through hadoop3)

#JAVA_HOME

export JAVA_HOME=/opt/module/jdk1.8.0_212

#HADOOP_HOME

export HADOOP_HOME=/opt/module/hadoop-3.2.3

export PATH=$PATH:$JAVA_HOME/bin:$HADOOP_HOME/sbin:$HADOOP_HOME/bin

3. Create the storage directories (on hadoop1 through hadoop3)

mkdir /usr/local/hadoop

mkdir /usr/local/hadoop/data

mkdir /usr/local/hadoop/data/tmp

mkdir /usr/local/hadoop/dfs

mkdir /usr/local/hadoop/dfs/data

mkdir /usr/local/hadoop/dfs/name

mkdir /usr/local/hadoop/tmp

4. Add the following to /opt/module/hadoop-3.2.3/etc/hadoop/hadoop-env.sh:

export JAVA_HOME=/opt/module/jdk1.8.0_212

5. Edit /opt/module/hadoop-3.2.3/etc/hadoop/core-site.xml so that it contains

<configuration>

<property>

<name>hadoop.tmp.dir</name>

<value>/usr/local/hadoop/tmp</value>

</property>

<property>

<name>fs.defaultFS</name>

<value>hdfs://hadoop1:9000</value>

</property>

</configuration>

6. Edit /opt/module/hadoop-3.2.3/etc/hadoop/hdfs-site.xml so that it contains

<configuration>

<property>

<name>dfs.namenode.http-address</name>

<value>hadoop1:50070</value>

</property>

<property>

<name>dfs.namenode.name.dir</name>

<value>/usr/local/hadoop/dfs/name</value>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>/usr/local/hadoop/dfs/data</value>

</property>

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

</configuration>

7. Edit /opt/module/hadoop-3.2.3/etc/hadoop/yarn-site.xml so that it contains

<configuration>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.resourcemanager.webapp.address</name>

<value>hadoop1:8088</value>

</property>

<property>

<name>yarn.resourcemanager.hostname</name>

<value>hadoop1</value>

</property>

<property>

<name>yarn.application.classpath</name>

<value>/opt/module/hadoop-3.2.3/etc/hadoop:/opt/module/hadoop-3.2.3/share/hadoop/common/lib/*:/opt/module/hadoop-3.2.3/share/hadoop/common/*:/opt/module/hadoop-3.2.3/share/hadoop/hdfs:/opt/module/hadoop-3.2.3/share/hadoop/hdfs/lib/*:/opt/module/hadoop-3.2.3/share/hadoop/hdfs/*:/opt/module/hadoop-3.2.3/share/hadoop/mapreduce/lib/*:/opt/module/hadoop-3.2.3/share/hadoop/mapreduce/*:/opt/module/hadoop-3.2.3/share/hadoop/yarn:/opt/module/hadoop-3.2.3/share/hadoop/yarn/lib/*:/opt/module/hadoop-3.2.3/share/hadoop/yarn/*</value>

</property>

<property>

<name>yarn.nodemanager.pmem-check-enabled</name>

<value>false</value>

</property>

<property>

<name>yarn.nodemanager.vmem-check-enabled</name>

<value>false</value>

</property>

</configuration>

8. Edit /opt/module/hadoop-3.2.3/etc/hadoop/mapred-site.xml so that it contains

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

</configuration>
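A mistyped tag in any of these site files tends to surface only later as a confusing startup error, so it can be worth reading a value back before starting the cluster. The sketch below is a rough text-based check, not a real XML parser: `get_property` is a hypothetical helper that assumes each `<name>` and `<value>` element sits on its own line, as in the listings above. Once the Hadoop commands are on the PATH, `hdfs getconf -confKey fs.defaultFS` reports the value Hadoop itself resolves.

```shell
# get_property FILE NAME: print the <value> that follows <name>NAME</name>
get_property() {
    awk -v want="$2" '
        /<name>/  { n = $0; sub(/.*<name>/, "", n); sub(/<\/name>.*/, "", n) }
        /<value>/ { v = $0; sub(/.*<value>/, "", v); sub(/<\/value>.*/, "", v)
                    if (n == want) { print v; exit } }
    ' "$1"
}

# Example:
#   get_property /opt/module/hadoop-3.2.3/etc/hadoop/core-site.xml fs.defaultFS
```

An empty result means the property is absent or its tags are malformed.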

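9. Edit /opt/module/hadoop-3.2.3/etc/hadoop/workers so that it contains the lines below (also list hadoop1 if it should run a DataNode and NodeManager, as the machine layout table indicates)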

hadoop2

hadoop3

10. Edit /opt/module/hadoop-3.2.3/sbin/start-yarn.sh

Add the following near the top of the script:

YARN_RESOURCEMANAGER_USER=root

HADOOP_SECURE_DN_USER=yarn

YARN_NODEMANAGER_USER=root

11. Edit /opt/module/hadoop-3.2.3/sbin/stop-yarn.sh

Add the following near the top of the script:

YARN_RESOURCEMANAGER_USER=root

HADOOP_SECURE_DN_USER=yarn

YARN_NODEMANAGER_USER=root

12. Edit /opt/module/hadoop-3.2.3/sbin/start-dfs.sh

Add the following near the top of the script:

HDFS_DATANODE_USER=root

HDFS_DATANODE_SECURE_USER=hdfs

HDFS_NAMENODE_USER=root

HDFS_SECONDARYNAMENODE_USER=root

13. Edit /opt/module/hadoop-3.2.3/sbin/stop-dfs.sh

Add the following near the top of the script:

HDFS_DATANODE_USER=root

HDFS_DATANODE_SECURE_USER=hdfs

HDFS_NAMENODE_USER=root

HDFS_SECONDARYNAMENODE_USER=root

14. Copy the directory to hadoop2 and hadoop3

cd /opt/module

scp -r ./hadoop-3.2.3/ root@hadoop2:/opt/module/

scp -r ./hadoop-3.2.3/ root@hadoop3:/opt/module/
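When the configuration changes later, re-copying the whole tree with scp is slow. Since rsync was installed in step 1.5, a loop like the following could push only the changed files. This is a sketch under assumptions: `sync_to_workers` is a hypothetical helper, the target user is root as in the scp commands, and `RSYNC_CMD` exists only so the command can be replaced (for example by `echo`) for a dry look at what would run.

```shell
# sync_to_workers DIR HOST...: copy DIR to the same parent directory on each host
sync_to_workers() {
    src=${1%/}    # drop a trailing slash so rsync copies the directory itself
    shift
    for h in "$@"; do
        ${RSYNC_CMD:-rsync} -a "$src" "root@$h:$(dirname "$src")/"
    done
}

# Example: sync_to_workers /opt/module/hadoop-3.2.3 hadoop2 hadoop3
```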

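15. Format HDFS, start the cluster, and check it

hdfs namenode -format # use this command; other ways of formatting may fail

start-all.sh

hdfs dfs -ls /

stop-all.sh # stops the cluster again when needed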

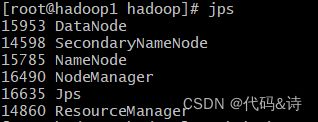

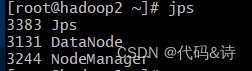

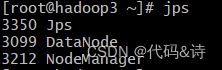

16. Check the processes on hadoop1 through hadoop3 (for example with jps)

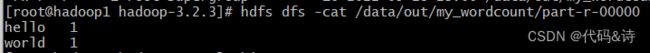

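17. Run the wordcount example with MapReduce

The input directory /data/input must already exist in HDFS and contain text files; the output directory must not exist yet. Run the job from /opt/module/hadoop-3.2.3:

cd /opt/module/hadoop-3.2.3

hadoop jar share/hadoop/mapreduce/hadoop-mapreduce-examples-3.2.3.jar wordcount /data/input/ /data/out/my_wordcount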

hdfs dfs -cat /data/out/my_wordcount/part-r-00000
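To judge whether the job output is right, the same count can be reproduced locally on a small input. The sketch below is illustrative: `local_wordcount` is a hypothetical helper that splits on whitespace and prints `word<TAB>count` sorted in byte order, which should resemble the example job's output for plain ASCII text. The file names in the usage comment are placeholders.

```shell
# local_wordcount FILE...: count whitespace-separated words, one "word<TAB>count" per line
local_wordcount() {
    cat "$@" | tr -s '[:space:]' '\n' | grep -v '^$' | LC_ALL=C sort | uniq -c |
        awk '{ print $2 "\t" $1 }'
}

# Assumed usage on the cluster (words.txt is a placeholder input file):
#   hdfs dfs -mkdir -p /data/input
#   hdfs dfs -put words.txt /data/input/
#   local_wordcount words.txt > expected.tsv
#   hdfs dfs -cat /data/out/my_wordcount/part-r-00000 | diff - expected.tsv
```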

3. Installing MySQL 5.7.29

Installation reference: https://www.linuxidc.com/Linux/2016-09/135288.htm

wget http://dev.mysql.com/get/mysql57-community-release-el7-8.noarch.rpm

yum localinstall mysql57-community-release-el7-8.noarch.rpm

yum repolist enabled | grep "mysql.*-community.*"

yum install mysql-community-server

systemctl start mysqld

systemctl status mysqld

systemctl enable mysqld

systemctl daemon-reload

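For this test environment, turn off MySQL password validation: add validate_password = off to /etc/my.cnf and run systemctl restart mysqld for it to take effect.

Look up the temporary root password with grep 'temporary password' /var/log/mysqld.log, log in with mysql -uroot -p, and change the password: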

ALTER USER 'root'@'localhost' IDENTIFIED BY 'MyNewPass4!';

GRANT ALL PRIVILEGES ON *.* TO 'root'@'%' IDENTIFIED BY 'MyNewPass4!' WITH GRANT OPTION;

To make UTF-8 the default encoding, add the following to the [mysqld] section of /etc/my.cnf:

character_set_server=utf8

init_connect='SET NAMES utf8'

Default file locations:

Configuration file: /etc/my.cnf

Log file: /var/log/mysqld.log

Service unit file: /usr/lib/systemd/system/mysqld.service

PID file: /var/run/mysqld/mysqld.pid

4. Installing Hive 3.1.2

Reference: https://www.jianshu.com/p/7f92dd0b3f13

Download and install hive-3.1.2

wget --no-check-certificate https://mirrors.tuna.tsinghua.edu.cn/apache/hive/hive-3.1.2/apache-hive-3.1.2-bin.tar.gz

tar -zxvf apache-hive-3.1.2-bin.tar.gz -C /opt/module/

Add the following to /etc/profile.d/my_env.sh

#HIVE_HOME

export HIVE_HOME=/opt/module/apache-hive-3.1.2-bin

export PATH=$PATH:$HIVE_HOME/bin

Apply the file

source /etc/profile.d/my_env.sh

Resolve the jar conflicts:

cd /opt/module/apache-hive-3.1.2-bin/lib

mv log4j-slf4j-impl-2.10.0.jar log4j-slf4j-impl-2.10.0.jar.bak

mv guava-19.0.jar guava-19.0.jar_bak

cp /opt/module/hadoop-3.2.3/share/hadoop/common/lib/guava-27.0-jre.jar /opt/module/apache-hive-3.1.2-bin/lib

Configure Hive to use MySQL (run the copy from the directory that holds the MySQL driver jar)

cp mysql-connector-java-5.1.48.jar /opt/module/apache-hive-3.1.2-bin/lib/

Edit /opt/module/apache-hive-3.1.2-bin/conf/hive-site.xml as follows (ConnectionUserName and ConnectionPassword must match the MySQL account and password set in section 3)

<configuration>

<property>

<name>javax.jdo.option.ConnectionURL</name>

<value>jdbc:mysql://hadoop1:3306/metastore?useSSL=false</value>

</property>

<property>

<name>javax.jdo.option.ConnectionDriverName</name>

<value>com.mysql.jdbc.Driver</value>

</property>

<property>

<name>javax.jdo.option.ConnectionUserName</name>

<value>root</value>

</property>

<property>

<name>javax.jdo.option.ConnectionPassword</name>

<value>123456</value>

</property>

<property>

<name>hive.metastore.warehouse.dir</name>

<value>/user/hive/warehouse</value>

</property>

<property>

<name>hive.metastore.schema.verification</name>

<value>false</value>

</property>

<property>

<name>hive.metastore.uris</name>

<value>thrift://hadoop1:9083</value>

</property>

<property>

<name>hive.server2.thrift.port</name>

<value>10000</value>

</property>

<property>

<name>hive.server2.thrift.bind.host</name>

<value>hadoop1</value>

</property>

<property>

<name>hive.metastore.event.db.notification.api.auth</name>

<value>false</value>

</property>

<property>

<name>hive.cli.print.header</name>

<value>true</value>

</property>

<property>

<name>hive.cli.print.current.db</name>

<value>true</value>

</property>

<property>

<name>hive.aux.jars.path</name>

<value>file:///opt/module/apache-hive-3.1.2-bin/lib/hive-contrib-3.1.2.jar</value>

<description>Added by tiger.zeng on 20120202. These JAR files are available to all users for all jobs</description>

</property>

</configuration>

Create the Hive metastore database in MySQL

create database metastore;

Initialize the Hive metastore schema

schematool -initSchema -dbType mysql -verbose

Create a Hive service script: add hiveservices.sh under /opt/module/apache-hive-3.1.2-bin/bin

#!/bin/bash

HIVE_LOG_DIR=$HIVE_HOME/logs

mkdir -p $HIVE_LOG_DIR

# Check whether a process is running normally; argument 1 is the process name, argument 2 is its port

function check_process()

{

pid=$(ps -ef 2>/dev/null | grep -v grep | grep -i $1 | awk '{print $2}')

ppid=$(netstat -nltp 2>/dev/null | grep $2 | awk '{print $7}' | cut -d '/' -f 1)

echo $pid

[[ "$pid" =~ "$ppid" ]] && [ "$ppid" ] && return 0 || return 1

}

function hive_start()

{

metapid=$(check_process HiveMetastore 9083)

cmd="nohup hive --service metastore >$HIVE_LOG_DIR/metastore.log 2>&1 &"

cmd=$cmd" sleep 4; hdfs dfsadmin -safemode wait >/dev/null 2>&1"

[ -z "$metapid" ] && eval $cmd || echo "Metastore service is already running"

server2pid=$(check_process HiveServer2 10000)

cmd="nohup hive --service hiveserver2 >$HIVE_LOG_DIR/hiveServer2.log 2>&1 &"

[ -z "$server2pid" ] && eval $cmd || echo "HiveServer2 service is already running"

}

function hive_stop()

{

metapid=$(check_process HiveMetastore 9083)

[ "$metapid" ] && kill $metapid || echo "Metastore service is not running"

server2pid=$(check_process HiveServer2 10000)

[ "$server2pid" ] && kill $server2pid || echo "HiveServer2 service is not running"

}

case $1 in

"start")

hive_start

;;

"stop")

hive_stop

;;

"restart")

hive_stop

sleep 2

hive_start

;;

"status")

check_process HiveMetastore 9083 >/dev/null && echo "Metastore service is running normally" || echo "Metastore service is not running normally"

check_process HiveServer2 10000 >/dev/null && echo "HiveServer2 service is running normally" || echo "HiveServer2 service is not running normally"

;;

*)

echo Invalid Args!

echo 'Usage: '$(basename $0)' start|stop|restart|status'

;;

esac

Make the script executable: chmod +x hiveservices.sh

Start, check status, and stop:

hiveservices.sh start

hiveservices.sh status

hiveservices.sh stop
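HiveServer2 can take some time after `hiveservices.sh start` before its port is open, so a status check right after starting may report a failure. The sketch below shows one way to test for a listening port with an exact match on the port number. `port_is_listening` is a hypothetical helper that reads `netstat -nlt` style output on standard input; netstat comes from the net-tools package installed in step 1.5.

```shell
# port_is_listening PORT: succeed if the netstat output on stdin shows a
# LISTEN socket whose local port is exactly PORT
port_is_listening() {
    awk -v port="$1" '
        $6 == "LISTEN" { n = split($4, parts, ":"); if (parts[n] == port) found = 1 }
        END { exit !found }
    '
}

# Example:
#   netstat -nlt | port_is_listening 10000 && echo "HiveServer2 port is open"
#   netstat -nlt | port_is_listening 9083  && echo "Metastore port is open"
```

Unlike a plain `grep 9083`, the exact comparison does not match a longer port number such as 19083.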

Create a table and insert a row to check that Hive jobs run successfully on YARN:

create table music(id int,name string);

insert into table music values(1,'hello');

5. Building and installing Spark 2.4.5

Prerequisite: a Java environment (including javac).

The Spark source tree bundles Maven (build/mvn), so Maven does not have to be installed separately.

Reference 1: https://spark.apache.org/docs/2.4.5/building-spark.html

Reference 2: https://blog.csdn.net/LLJJYY001/article/details/104018599

Reference 3: https://blog.csdn.net/qq_43591172/article/details/126575084

Source tarball: https://archive.apache.org/dist/spark/spark-2.4.5/spark-2.4.5.tgz

Edit pom.xml to add Maven repositories and speed up the build

<repositories>

<repository>

<id>central</id>

<url>http://maven.aliyun.com/nexus/content/groups/public/</url>

<name>aliyun</name>

</repository>

<repository>

<id>cloudera.repo</id>

<url>https://repository.cloudera.com/artifactory/cloudera-repos/</url>

</repository>

</repositories>

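Increase the memory available to Maven during the build

export MAVEN_OPTS="-Xmx2g -XX:ReservedCodeCacheSize=512m"

Run the build command:

Note: Spark 2.4.5 is built against Hadoop 2.7.3 here. The command selects the Scala 2.12 profile; according to the Spark 2.4 build documentation, ./dev/change-scala-version.sh 2.12 has to be run first when building for Scala 2.12.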

./dev/make-distribution.sh --name with-hadoopAndHive --tgz -Phive -Phive-thriftserver -Pyarn -Pscala-2.12 -Pparquet-provided -Porc-provided -Phadoop-2.7 -Dhadoop.version=2.7.3 -Dscala.version=2.12.12 -Dscala.binary.version=2.12 -DskipTests

# ./dev/make-distribution.sh --name without-hiveAndThriftServer --tgz -Pyarn -Phadoop-3.2 -Pscala-2.12 -Pparquet-provided -Porc-provided -Dhadoop.version=3.2.3 -Dscala.version=2.12.12 -Dscala.binary.version=2.12 -DskipTests

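Copy the generated tgz package to the target machines and extract it (the paths below assume the extracted directory is /opt/module/spark-2.4.5).

Add the SPARK_HOME variables below to /etc/profile.d/my_env.sh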

#SPARK

export SPARK_HOME=/opt/module/spark-2.4.5

export PATH=$PATH:$SPARK_HOME/bin

Run source /etc/profile.d/my_env.sh to apply it

Create the configuration file /opt/module/spark-2.4.5/conf/spark-env.sh with the following content

export JAVA_HOME=/opt/module/jdk1.8.0_212/

export HIVE_HOME=/opt/module/apache-hive-3.1.2-bin

export PATH=$PATH:$HIVE_HOME/bin

export SPARK_DIST_CLASSPATH=$(hadoop classpath):/opt/module/apache-hive-3.1.2-bin/lib/*

export HADOOP_CONF_DIR=/opt/module/hadoop-3.2.3/etc/hadoop/

export YARN_CONF_DIR=/opt/module/hadoop-3.2.3/etc/hadoop

export SPARK_HISTORY_OPTS="

-Dspark.history.ui.port=18080

-Dspark.history.fs.logDirectory=hdfs://hadoop1:9000/spark-history

-Dspark.history.retainedApplications=30"

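Add the Spark configuration file spark-defaults.conf in the same conf directory. hdfs://hadoop1:9000 is the host name and port of the HDFS service and must match the HDFS configuration (fs.defaultFS in core-site.xml).

Its content is:

spark.master yarn

spark.eventLog.enabled true

spark.eventLog.dir hdfs://hadoop1:9000/spark-history

spark.driver.memory 8g

spark.executor.memory 4g

spark.yarn.executor.memoryOverhead 3090m

Note that yarn.scheduler.maximum-allocation-mb (8 GB in this setup) is a YARN property rather than a Spark one; set it in yarn-site.xml, where its value is given in MB (8192).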
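Spark only picks up keys that start with `spark.` from spark-defaults.conf, so a YARN or Hadoop key placed there by mistake has no effect. A small check along these lines can flag such entries. It is a sketch only: `non_spark_keys` is a hypothetical helper, and it handles just the simple `key value` and `key = value` line forms used in this guide.

```shell
# non_spark_keys FILE: print every configured key that does not start with "spark."
non_spark_keys() {
    awk '
        /^[ \t]*(#|$)/ { next }             # skip comments and blank lines
        { key = $1; sub(/=.*/, "", key)     # handle "key=value" without spaces
          if (key !~ /^spark\./) print key }
    ' "$1"
}

# Example: non_spark_keys /opt/module/spark-2.4.5/conf/spark-defaults.conf
```

No output means every key in the file is a Spark property.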

Replace the conflicting jars

cd /opt/module/spark-2.4.5/jars/

cp /opt/module/apache-hive-3.1.2-bin/lib/guava-27.0-jre.jar ./

mv guava-14.0.1.jar guava-14.0.1.jar_bak

mv parquet-hadoop-bundle-1.6.0.jar parquet-hadoop-bundle-1.6.0.jar_bak

cp /opt/module/apache-hive-3.1.2-bin/lib/parquet-hadoop-bundle-1.10.0.jar ./

# cp /opt/module/scala-2.12.12/lib/jline-2.14.6.jar ./

Create the directories in HDFS and upload the jars:

hadoop fs -mkdir /spark-history

hadoop fs -mkdir /spark-jars

hadoop fs -put /opt/module/spark-2.4.5/jars/* /spark-jars

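Verify that jobs run on the YARN cluster by executing the test command below from /opt/module/spark-2.4.5 (in YARN mode there is no need to run Spark's start-all.sh first):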

./bin/run-example org.apache.spark.examples.SparkPi

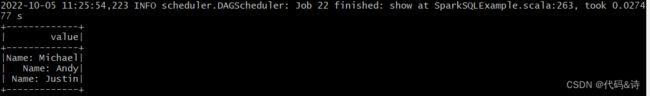

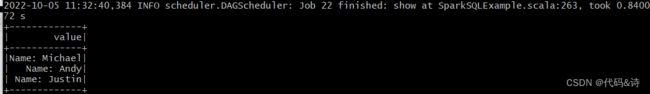

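Test Spark SQL (this does not interact with Hive yet) with the commands below. If Spark was built with the Scala 2.12 profile, the examples jar is named spark-examples_2.12-2.4.5.jar instead of spark-examples_2.11-2.4.5.jar: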

cd /opt/module/spark-2.4.5

hadoop fs -mkdir -p /user/root/examples/src/main/resources/

hadoop fs -put ./examples/src/main/resources/people.* /user/root/examples/src/main/resources/

./bin/run-example org.apache.spark.examples.sql.SparkSQLExample --jars "/opt/module/apache-hive-3.1.2-bin/lib/*"

spark-submit --master yarn --deploy-mode client --queue default --num-executors 2 --driver-memory 1g --class org.apache.spark.examples.sql.SparkSQLExample ./examples/jars/spark-examples_2.11-2.4.5.jar

spark-submit --master yarn --deploy-mode client --queue default --num-executors 2 --driver-memory 1g --class org.apache.spark.examples.SparkPi ./examples/jars/spark-examples_2.11-2.4.5.jar

Test spark-shell. If the error "java.lang.IllegalArgumentException: Unrecognized Hadoop major version number: 3.1.2" appears, add a file named common-version-info.properties to Spark's conf directory with the content below. (Builds without Hive, and builds that include both Hive and Hadoop 2.7, do not hit this problem.)

version=2.7.6

Start spark-shell again:

spark-shell --master yarn

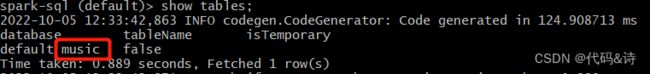

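Running spark-sql at this point shows none of the tables defined in Hive, so Hive still has to be integrated into Spark:

For the integration, Spark must be able to find Hive's metadata and the location where the data is stored. Copy Hive's hive-site.xml into Spark's conf directory and make sure it contains the metastore URI setting (hive.metastore.uris, pointing at the node where Hive is installed; hadoop1 in my case).

Copy the MySQL driver jar from Hive into Spark's jars directory.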
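The two copy steps could be scripted roughly as follows. This is a sketch under assumptions: `copy_hive_config_to_spark` is a hypothetical helper, and the example call uses the Hive and Spark installation paths from earlier in this guide.

```shell
# copy_hive_config_to_spark HIVE_HOME SPARK_HOME:
# copy hive-site.xml and the MySQL JDBC driver from Hive into Spark
copy_hive_config_to_spark() {
    hive_home=$1
    spark_home=$2
    cp "$hive_home/conf/hive-site.xml" "$spark_home/conf/" || return 1
    for jar in "$hive_home"/lib/mysql-connector-java-*.jar; do
        # an unmatched glob stays literal, so check that the file exists
        [ -e "$jar" ] || { echo "no MySQL driver jar in $hive_home/lib" >&2; return 1; }
        cp "$jar" "$spark_home/jars/" || return 1
    done
}

# Example:
#   copy_hive_config_to_spark /opt/module/apache-hive-3.1.2-bin /opt/module/spark-2.4.5
```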

Running spark-shell and spark-sql again now reads the data stored in Hive.

Start commands:

./sbin/start-all.sh

./sbin/start-history-server.sh

Web UIs:

Spark Master UI: http://hadoop1:8080/

spark-shell application UI: http://hadoop1:4040/

Hadoop (YARN) UI: http://hadoop1:8088

Spark history server: http://hadoop1:18080

Why not configure Hive on Spark? Hive 3.1.2 on Spark 2.4.5 with Hadoop 3.2.3 has many pitfalls: either Hive queries fail or spark-sql fails. In addition, spark-sql can already replace Hive, so after several failed attempts this approach was abandoned. Spark 3 reportedly resolves these problems.

How Spark runs on YARN: https://www.modb.pro/db/73920