R3live + PaddleYOLO: simultaneous mapping and object detection

1. Hardware environment

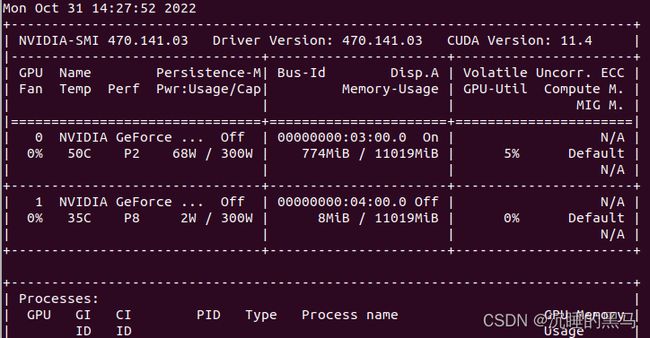

GPU: RTX 2080 Ti, NVIDIA driver 470.141.03

OS: Ubuntu 18.04, CUDA 11.1, TensorRT 7.2.1.6, OpenCV 3.4.16

Python tooling: Anaconda, PyCharm

2. PaddleYOLO

2.1 Environment setup

1. Download the source code

git clone https://github.com/PaddlePaddle/PaddleYOLO.git

2. Create the Conda environment

cd PaddleYOLO

conda create -n paddledetect python=3.7

conda activate paddledetect

pip install -r requirements.txt -i https://mirrors.aliyun.com/pypi/simple/

3. Install Paddle in PyCharm

pip install common dual tight data prox -i https://mirrors.aliyun.com/pypi/simple/

pip install paddle -i https://mirrors.aliyun.com/pypi/simple/

pip install paddlepaddle-gpu -i https://mirrors.aliyun.com/pypi/simple/

Below are the packages pip installed on my machine, for reference; the version that matters most is paddlepaddle-gpu:

astor==0.8.1
Babel==2.10.3
bce-python-sdk==0.8.74
boto3==1.24.89
botocore==1.27.89
bottle==0.12.23
certifi==2022.9.24
charset-normalizer==2.1.1
click==8.1.3
common==0.1.2
cycler==0.11.0
Cython==0.29.32
data==0.4
decorator==5.1.1
dill==0.3.5.1
dual==0.0.10
dynamo3==0.4.10
filterpy==1.4.5
Flask==2.2.2
Flask-Babel==2.0.0
flywheel==0.5.4
fonttools==4.37.4
funcsigs==1.0.2
future==0.18.2
idna==3.4
importlib-metadata==5.0.0
itsdangerous==2.1.2
Jinja2==3.1.2
jmespath==1.0.1
joblib==1.2.0
kiwisolver==1.4.4
lap==0.4.0
MarkupSafe==2.1.1
matplotlib==3.5.3
mkl-fft==1.3.1
mkl-random==1.2.2
mkl-service==2.4.0
motmetrics==1.2.5
multiprocess==0.70.13
numpy==1.21.5
opencv-python==4.6.0.66
opt-einsum==3.3.0
packaging==21.3
paddle==1.0.2
paddle-bfloat==0.1.7
paddledet==2.4.0
paddlepaddle==2.3.2
paddlepaddle-gpu==2.3.2
pandas==1.3.5
peewee==3.15.3
Pillow==9.2.0
pip==22.2.2
protobuf==3.20.0
prox==0.0.17
pyclipper==1.3.0.post3
pycocotools==2.0.5
pycryptodome==3.15.0
pyparsing==3.0.9
PySocks==1.7.1
python-dateutil==2.8.2
python-geoip-python3==1.3
pytz==2022.4
PyYAML==6.0
requests==2.28.1
s3transfer==0.6.0
scikit-learn==1.0.2
scipy==1.7.3
setuptools==63.4.1
Shapely==1.8.4
six==1.16.0
sklearn==0.0
terminaltables==3.1.10
threadpoolctl==3.1.0
tight==0.1.0
tqdm==4.64.1
typeguard==2.13.3
typing_extensions==4.4.0
urllib3==1.26.12
visualdl==2.4.1
Werkzeug==2.2.2
wheel==0.37.1
xmltodict==0.13.0
zipp==3.8.1

2.2 Model export

The steps below use YOLOv5-m as an example:

cd PaddleYOLO

1. Download the pretrained weights

wget https://paddledet.bj.bcebos.com/models/yolov5_m_300e_coco.pdparams

2. Export the model

mkdir -p model/yolov5m/out_model

python tools/export_model.py -c configs/yolov5/yolov5_m_300e_coco.yml --output_dir=./model/yolov5m/out_model -o weights=./yolov5_m_300e_coco.pdparams
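Before moving on to the C++ deployment, it can help to confirm that the export produced the files the C++ detector loads. A minimal sketch, assuming the exported directory should contain model.pdmodel, model.pdiparams and infer_cfg.yml (the file names I would expect from export_model.py; check your own output, which may sit in a subdirectory of --output_dir named after the config). The names FileReadable and MissingModelFiles are hypothetical.

```cpp
#include <cassert>
#include <fstream>
#include <string>
#include <vector>

// Returns true if `path` can be opened for reading.
bool FileReadable(const std::string& path) {
  std::ifstream in(path);
  return in.good();
}

// Hypothetical helper: lists which of the files the C++ detector is assumed
// to load (model.pdmodel, model.pdiparams, infer_cfg.yml) are missing from
// `model_dir`. An empty result means all three were found.
std::vector<std::string> MissingModelFiles(const std::string& model_dir) {
  static const std::vector<std::string> kRequired = {
      "model.pdmodel", "model.pdiparams", "infer_cfg.yml"};
  std::vector<std::string> missing;
  for (const std::string& name : kRequired) {
    if (!FileReadable(model_dir + "/" + name)) {
      missing.push_back(name);
    }
  }
  return missing;
}
```

For example, an empty result from MissingModelFiles("./model/yolov5m") means all three files were found. I use std::ifstream rather than std::filesystem so the check also builds with the GCC 7 toolchain that ships with Ubuntu 18.04.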

3. Test

python tools/infer.py -c ./configs/yolov5/yolov5_m_300e_coco.yml -o weights=./yolov5_m_300e_coco.pdparams --infer_img=demo/000000014439.jpg --draw_threshold=0.5

3. C++ deployment

3.1 Environment setup

1. Read the docs

cd PaddleYOLO/deploy/cpp

vim docs/linux_build.md

Set up the Paddle Inference environment as described in that document.

2. Edit the paths and parameters in the build script

vim scripts/build.sh

3.2 Build

bash scripts/build.sh

You may run into errors here. I only recorded some of them, for reference:

1.Could NOT find Git (missing: GIT_EXECUTABLE)

vim PaddleYOLO/deploy/cpp/cmake/yaml-cpp.cmake

Comment out the following line:

find_package(Git REQUIRED)

2. CMake Error at CMakeLists.txt:94 (find_package):
Could not find a package configuration file provided by "OpenCV" with any of the following names:
OpenCVConfig.cmake
opencv-config.cmake

vim PaddleYOLO/deploy/cpp/CMakeLists.txt

Change this line:

find_package(OpenCV REQUIRED PATHS ${OPENCV_DIR}/share/OpenCV NO_DEFAULT_PATH)

to the following, so that CMake searches its default locations instead of only ${OPENCV_DIR}/share/OpenCV:

find_package(OpenCV REQUIRED)

3.fatal error: glog/logging.h: No such file or directory

sudo apt install libgoogle-glog-dev

3.3 Test

# cpu run

./build/main --model_dir=./model/yolov5m --image_file=./data/test.jpg

# gpu run

./build/main --model_dir=./model/yolov5m --image_file=./data/test.jpg --device=GPU --run_mode=trt_fp16

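4. Integrating into R3Live

4.1 Deploy the object detection files into r3live

1. Create a directory under r3live's src directory

mkdir -p detect/include

2. Copy the files from paddle_yolov5 (shown in the figure above) into detect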

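cp paddle_yolov5/include/* r3live/src/detect/include

cp paddle_yolov5/src/object_detector.cc r3live/src/detect/object_detector.cpp

cp paddle_yolov5/src/picodet_postprocess.cc r3live/src/detect/picodet_postprocess.cpp

cp paddle_yolov5/src/preprocess_op.cc r3live/src/detect/preprocess_op.cpp

cp paddle_yolov5/src/utils.cc r3live/src/detect/utils.cpp

(The sources are renamed to .cpp while copying because the CMakeLists.txt below lists them with that extension.)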

3. Modify CMakeLists.txt to compile the detection sources

Below is my CMakeLists.txt, for reference.

cmake_minimum_required(VERSION 2.8.3)

project(r3live)

set(CMAKE_BUILD_TYPE "Release")

set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} -std=c++14")

set(CMAKE_CXX_FLAGS_RELEASE "${CMAKE_CXX_FLAGS_RELEASE} -std=c++14 -O3 -lboost_system -msse2 -msse3 -pthread -Wenum-compare") # -Wall

set(CMAKE_CXX_STANDARD 14)

set(CMAKE_CXX_STANDARD_REQUIRED ON)

set(CMAKE_CXX_EXTENSIONS OFF)

add_definitions(-DROOT_DIR=\"${CMAKE_CURRENT_SOURCE_DIR}/\")

# Add OpenCV if available

set(OpenCV_DIR "/opt/opencv-3.4.16/build")

FIND_PACKAGE(Boost REQUIRED COMPONENTS filesystem iostreams program_options system serialization)

if(Boost_FOUND)

INCLUDE_DIRECTORIES(${Boost_INCLUDE_DIRS})

LINK_DIRECTORIES(${Boost_LIBRARY_DIRS})

endif()

# image detect

option(WITH_MKL "Compile demo with MKL/OpenBlas support, default use MKL." ON)

option(WITH_GPU "Compile demo with GPU/CPU, default use CPU." ON)

option(WITH_STATIC_LIB "Compile demo with static/shared library, default use static." OFF)

option(USE_TENSORRT "Compile demo with TensorRT." ON)

option(WITH_ROCM "Compile demo with rocm." OFF)

set(PADDLE_LIB "/opt/r3live_ws/paddlepaddle/paddle_inference")

include_directories(${PADDLE_LIB})

set(PADDLE_LIB_THIRD_PARTY_PATH "${PADDLE_LIB}/third_party/install/")

include_directories("${PADDLE_LIB_THIRD_PARTY_PATH}protobuf/include")

include_directories("${PADDLE_LIB_THIRD_PARTY_PATH}glog/include")

include_directories("${PADDLE_LIB_THIRD_PARTY_PATH}gflags/include")

include_directories("${PADDLE_LIB_THIRD_PARTY_PATH}xxhash/include")

include_directories("${PADDLE_LIB_THIRD_PARTY_PATH}cryptopp/include")

link_directories("${PADDLE_LIB_THIRD_PARTY_PATH}protobuf/lib")

link_directories("${PADDLE_LIB_THIRD_PARTY_PATH}glog/lib")

link_directories("${PADDLE_LIB_THIRD_PARTY_PATH}gflags/lib")

link_directories("${PADDLE_LIB_THIRD_PARTY_PATH}xxhash/lib")

link_directories("${PADDLE_LIB_THIRD_PARTY_PATH}cryptopp/lib")

link_directories("${PADDLE_LIB}/paddle/lib")

if(WITH_MKL)

set(FLAG_OPENMP "-fopenmp")

endif()

set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} ${FLAG_OPENMP}")

if(WITH_GPU)

if(NOT WIN32)

set(CUDA_LIB "/usr/local/cuda/lib64/" CACHE STRING "CUDA Library")

else()

if(CUDA_LIB STREQUAL "")

set(CUDA_LIB "C:\\Program\ Files\\NVIDIA GPU Computing Toolkit\\CUDA\\v8.0\\lib\\x64")

endif()

endif(NOT WIN32)

endif()

if (USE_TENSORRT AND WITH_GPU)

set(TENSORRT_ROOT "/opt/TensorRT-7.2.1.6/")

if("${TENSORRT_ROOT}" STREQUAL "")

message(FATAL_ERROR "The TENSORRT_ROOT is empty, you must assign it a value with CMake command. Such as: -DTENSORRT_ROOT=TENSORRT_ROOT_PATH ")

endif()

set(TENSORRT_INCLUDE_DIR ${TENSORRT_ROOT}/include)

set(TENSORRT_LIB_DIR ${TENSORRT_ROOT}/lib)

file(READ ${TENSORRT_INCLUDE_DIR}/NvInfer.h TENSORRT_VERSION_FILE_CONTENTS)

string(REGEX MATCH "define NV_TENSORRT_MAJOR +([0-9]+)" TENSORRT_MAJOR_VERSION

"${TENSORRT_VERSION_FILE_CONTENTS}")

if("${TENSORRT_MAJOR_VERSION}" STREQUAL "")

file(READ ${TENSORRT_INCLUDE_DIR}/NvInferVersion.h TENSORRT_VERSION_FILE_CONTENTS)

string(REGEX MATCH "define NV_TENSORRT_MAJOR +([0-9]+)" TENSORRT_MAJOR_VERSION

"${TENSORRT_VERSION_FILE_CONTENTS}")

endif()

if("${TENSORRT_MAJOR_VERSION}" STREQUAL "")

message(SEND_ERROR "Failed to detect TensorRT version.")

endif()

string(REGEX REPLACE "define NV_TENSORRT_MAJOR +([0-9]+)" "\\1"

TENSORRT_MAJOR_VERSION "${TENSORRT_MAJOR_VERSION}")

message(STATUS "Current TensorRT header is ${TENSORRT_INCLUDE_DIR}/NvInfer.h. "

"Current TensorRT version is v${TENSORRT_MAJOR_VERSION}. ")

include_directories("${TENSORRT_INCLUDE_DIR}")

link_directories("${TENSORRT_LIB_DIR}")

endif()

if(WITH_MKL)

set(MATH_LIB_PATH "${PADDLE_LIB_THIRD_PARTY_PATH}mklml")

include_directories("${MATH_LIB_PATH}/include")

if(WIN32)

set(MATH_LIB ${MATH_LIB_PATH}/lib/mklml${CMAKE_STATIC_LIBRARY_SUFFIX}

${MATH_LIB_PATH}/lib/libiomp5md${CMAKE_STATIC_LIBRARY_SUFFIX})

else()

set(MATH_LIB ${MATH_LIB_PATH}/lib/libmklml_intel${CMAKE_SHARED_LIBRARY_SUFFIX}

${MATH_LIB_PATH}/lib/libiomp5${CMAKE_SHARED_LIBRARY_SUFFIX})

endif()

set(MKLDNN_PATH "${PADDLE_LIB_THIRD_PARTY_PATH}mkldnn")

if(EXISTS ${MKLDNN_PATH})

include_directories("${MKLDNN_PATH}/include")

if(WIN32)

set(MKLDNN_LIB ${MKLDNN_PATH}/lib/mkldnn.lib)

else(WIN32)

set(MKLDNN_LIB ${MKLDNN_PATH}/lib/libmkldnn.so.0)

endif(WIN32)

endif()

else()

set(OPENBLAS_LIB_PATH "${PADDLE_LIB_THIRD_PARTY_PATH}openblas")

include_directories("${OPENBLAS_LIB_PATH}/include/openblas")

if(WIN32)

set(MATH_LIB ${OPENBLAS_LIB_PATH}/lib/openblas${CMAKE_STATIC_LIBRARY_SUFFIX})

else()

set(MATH_LIB ${OPENBLAS_LIB_PATH}/lib/libopenblas${CMAKE_STATIC_LIBRARY_SUFFIX})

endif()

endif()

if(WITH_STATIC_LIB)

set(DEPS ${PADDLE_LIB}/paddle/lib/libpaddle_inference${CMAKE_STATIC_LIBRARY_SUFFIX})

else()

if(WIN32)

set(DEPS ${PADDLE_LIB}/paddle/lib/paddle_inference${CMAKE_STATIC_LIBRARY_SUFFIX})

else()

set(DEPS ${PADDLE_LIB}/paddle/lib/libpaddle_inference${CMAKE_SHARED_LIBRARY_SUFFIX})

endif()

endif()

if (NOT WIN32)

set(EXTERNAL_LIB "-lrt -ldl -lpthread")

set(DEPS ${DEPS}

${MATH_LIB} ${MKLDNN_LIB}

glog gflags protobuf xxhash cryptopp

${EXTERNAL_LIB})

else()

set(DEPS ${DEPS}

${MATH_LIB} ${MKLDNN_LIB}

glog gflags_static libprotobuf xxhash cryptopp-static ${EXTERNAL_LIB})

set(DEPS ${DEPS} shlwapi.lib)

endif(NOT WIN32)

if(WITH_GPU)

if(NOT WIN32)

if (USE_TENSORRT)

set(DEPS ${DEPS} ${TENSORRT_LIB_DIR}/libnvinfer${CMAKE_SHARED_LIBRARY_SUFFIX})

set(DEPS ${DEPS} ${TENSORRT_LIB_DIR}/libnvinfer_plugin${CMAKE_SHARED_LIBRARY_SUFFIX})

endif()

set(DEPS ${DEPS} ${CUDA_LIB}/libcudart${CMAKE_SHARED_LIBRARY_SUFFIX})

else()

if(USE_TENSORRT)

set(DEPS ${DEPS} ${TENSORRT_LIB_DIR}/nvinfer${CMAKE_STATIC_LIBRARY_SUFFIX})

set(DEPS ${DEPS} ${TENSORRT_LIB_DIR}/nvinfer_plugin${CMAKE_STATIC_LIBRARY_SUFFIX})

if(${TENSORRT_MAJOR_VERSION} GREATER_EQUAL 7)

set(DEPS ${DEPS} ${TENSORRT_LIB_DIR}/myelin64_1${CMAKE_STATIC_LIBRARY_SUFFIX})

endif()

endif()

set(DEPS ${DEPS} ${CUDA_LIB}/cudart${CMAKE_STATIC_LIBRARY_SUFFIX} )

set(DEPS ${DEPS} ${CUDA_LIB}/cublas${CMAKE_STATIC_LIBRARY_SUFFIX} )

set(DEPS ${DEPS} ${CUDA_LIB}/cudnn${CMAKE_STATIC_LIBRARY_SUFFIX} )

endif()

endif()

if(WITH_ROCM)

if(NOT WIN32)

set(DEPS ${DEPS} ${ROCM_LIB}/libamdhip64${CMAKE_SHARED_LIBRARY_SUFFIX})

endif()

endif()

find_package(yaml-cpp REQUIRED)

include_directories(${YAML_CPP_INCLUDE_DIRS})

link_directories(${YAML_CPP_LIBRARIES})

set(DEPS ${DEPS} "-lyaml-cpp")

######################################################################

find_package(catkin REQUIRED COMPONENTS

roscpp

std_msgs

geometry_msgs

nav_msgs

tf

cv_bridge

livox_ros_driver

)

# find_package(Ceres REQUIRED)

find_package(PCL REQUIRED)

find_package(OpenCV 3.4 REQUIRED)

set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} ${OpenMP_CXX_FLAGS}")

set(CMAKE_C_FLAGS "${CMAKE_C_FLAGS} ${OpenMP_C_FLAGS}")

### Find OpenMP #######

FIND_PACKAGE(OpenMP)

if(OPENMP_FOUND)

SET(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} ${OpenMP_CXX_FLAGS}")

#cmake only check for separate OpenMP library on AppleClang 7+

#https://github.com/Kitware/CMake/blob/42212f7539040139ecec092547b7d58ef12a4d72/Modules/FindOpenMP.cmake#L252

if (CMAKE_CXX_COMPILER_ID MATCHES "AppleClang" AND (NOT CMAKE_CXX_COMPILER_VERSION VERSION_LESS "7.0"))

SET(OpenMP_LIBS ${OpenMP_libomp_LIBRARY})

LIST(APPEND OpenMVS_EXTRA_LIBS ${OpenMP_LIBS})

endif()

else()

message("-- Can't find OpenMP. Continuing without it.")

endif()

# find_package(OpenMVS)

if(OpenMVS_FOUND)

include_directories(${OpenMVS_INCLUDE_DIRS})

add_definitions(${OpenMVS_DEFINITIONS})

endif()

# message(WARNING "OpenCV_VERSION: ${OpenCV_VERSION}")

include_directories(${catkin_INCLUDE_DIRS})

generate_messages(

DEPENDENCIES

geometry_msgs

)

set(CMAKE_MODULE_PATH ${PROJECT_SOURCE_DIR}/cmake)

find_package(Eigen3)

include_directories(

${catkin_INCLUDE_DIRS}

${EIGEN3_INCLUDE_DIR}

${PCL_INCLUDE_DIRS}

${livox_ros_driver_INCLUDE_DIRS}

./src

./src/loam/include

./src/tools/

./src/rgb_map

./src/meshing

./src/detect/include

)

catkin_package()

message(STATUS "===== ${PROJECT_NAME}: OpenCV library status: =====")

message(STATUS "===== OpenCV version: ${OpenCV_VERSION} =====")

message(STATUS "===== OpenCV libraries: ${OpenCV_LIBS} =====")

message(STATUS "===== OpenCV include path: ${OpenCV_INCLUDE_DIRS} =====")

add_executable(r3live_LiDAR_front_end src/loam/LiDAR_front_end.cpp)

target_link_libraries(r3live_LiDAR_front_end ${catkin_LIBRARIES} ${PCL_LIBRARIES})

if(Ceres_FOUND)

message(STATUS "===== Find ceres, Version ${Ceres_VERSION} =====")

include_directories(${CERES_INCLUDE_DIRS})

add_executable(r3live_cam_cali src/r3live_cam_cali.cpp)

target_link_libraries(r3live_cam_cali ${catkin_LIBRARIES} ${OpenCV_LIBRARIES} ${CERES_LIBRARIES})

add_executable(r3live_cam_cali_create_cali_board src/r3live_cam_cali_create_cali_board.cpp)

target_link_libraries(r3live_cam_cali_create_cali_board ${catkin_LIBRARIES} ${OpenCV_LIBRARIES})

endif()

add_executable(test_timer src/tools/test_timer.cpp)

add_executable(r3live_mapping src/r3live.cpp

src/r3live_lio.cpp

src/loam/include/kd_tree/ikd_Tree.cpp

src/loam/include/FOV_Checker/FOV_Checker.cpp

src/loam/IMU_Processing.cpp

src/rgb_map/offline_map_recorder.cpp

# From VIO

src/r3live_vio.cpp

src/optical_flow/lkpyramid.cpp

src/rgb_map/rgbmap_tracker.cpp

src/rgb_map/image_frame.cpp

src/rgb_map/pointcloud_rgbd.cpp

# Detect

src/detect/object_detector.cpp

src/detect/picodet_postprocess.cpp

src/detect/preprocess_op.cpp

src/detect/utils.cpp

)

target_link_libraries(r3live_mapping

${catkin_LIBRARIES}

${Boost_LIBRARIES}

${Boost_FILESYSTEM_LIBRARY}

${Boost_SERIALIZATION_LIBRARY} # serialization

${OpenCV_LIBRARIES}

${DEPS}

pcl_common

pcl_io)

FIND_PACKAGE(CGAL REQUIRED)

if(CGAL_FOUND)

include_directories(${CGAL_INCLUDE_DIRS})

add_definitions(${CGAL_DEFINITIONS})

link_directories(${CGAL_LIBRARY_DIRS})

ADD_DEFINITIONS(-D_USE_BOOST -D_USE_EIGEN -D_USE_OPENMP)

SET(_USE_BOOST TRUE)

SET(_USE_OPENMP TRUE)

SET(_USE_EIGEN TRUE)

add_executable(r3live_meshing src/r3live_reconstruct_mesh.cpp

src/rgb_map/image_frame.cpp

src/rgb_map/pointcloud_rgbd.cpp

# Common

src/meshing/MVS/Common/Common.cpp

src/meshing/MVS/Common/Log.cpp

src/meshing/MVS/Common/Timer.cpp

src/meshing/MVS/Common/Types.cpp

src/meshing/MVS/Common/Util.cpp

# MVS

src/meshing/MVS/Mesh.cpp

src/meshing/MVS/PointCloud.cpp

src/meshing/MVS/Camera.cpp

src/meshing/MVS/Platform.cpp

src/meshing/MVS/PLY.cpp

src/meshing/MVS/OBJ.cpp

src/meshing/MVS/IBFS.cpp

)

target_link_libraries(r3live_meshing

${catkin_LIBRARIES}

${Boost_LIBRARIES}

${Boost_FILESYSTEM_LIBRARY}

${Boost_SERIALIZATION_LIBRARY} # serialization

${CGAL_LIBS}

${OpenCV_LIBRARIES}

${JPEG_LIBRARIES}

gmp

pcl_common

pcl_io

pcl_kdtree)

endif()

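You will need to adjust the paths in it to match your own environment.

Once this is done, build the workspace first to make sure everything compiles; if it does, continue with the steps below.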
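If the build stops with "Failed to detect TensorRT version", it is the TensorRT block of the CMakeLists.txt above that failed: it regex-matches `define NV_TENSORRT_MAJOR` in NvInfer.h and falls back to NvInferVersion.h (where newer TensorRT releases, including 7.x, define it). A sketch that reproduces the same lookup in C++ so you can point it at your TENSORRT_ROOT/include; the function names are hypothetical.

```cpp
#include <cassert>
#include <fstream>
#include <regex>
#include <sstream>
#include <string>

// Same pattern as the CMake string(REGEX MATCH ...) call: returns the number
// after "define NV_TENSORRT_MAJOR", or -1 if the text does not contain it.
int ParseTensorRTMajor(const std::string& header_text) {
  static const std::regex kPattern("define NV_TENSORRT_MAJOR +([0-9]+)");
  std::smatch match;
  if (std::regex_search(header_text, match, kPattern)) {
    return std::stoi(match[1].str());
  }
  return -1;
}

// Reads a whole file into a string; returns "" if it cannot be opened.
std::string ReadWholeFile(const std::string& path) {
  std::ifstream in(path);
  if (!in) return "";
  std::ostringstream contents;
  contents << in.rdbuf();
  return contents.str();
}

// Mirrors the CMake fallback: try NvInfer.h first, then NvInferVersion.h.
int DetectTensorRTMajor(const std::string& include_dir) {
  int major = ParseTensorRTMajor(ReadWholeFile(include_dir + "/NvInfer.h"));
  if (major < 0) {
    major = ParseTensorRTMajor(ReadWholeFile(include_dir + "/NvInferVersion.h"));
  }
  return major;
}
```

With the setup from section 1, DetectTensorRTMajor("/opt/TensorRT-7.2.1.6/include") should give 7; a result of -1 corresponds to the case where CMake raises the SEND_ERROR.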

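4.2 Write the object detection code

1. Modify the yaml file

vim config/r3live_config.yaml

Add det_img_en under the r3live_common section the file already contains, and append the r3live_det section at the end of the file. Do not add a second r3live_common: key at the end: it would be a duplicate YAML key, and with most loaders the later block replaces the existing one.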

r3live_common:
   det_img_en: 0           # set to 1 to enable object detection

r3live_det:
   model_dir: "/opt/r3live_ws/paddlepaddle/paddle_yolov5/model/yolov5m" # change this path to match your environment
   model_type: "yolov5m"
   use_gpu: 1
   use_trt: 1
   trt_precision: "fp16"   # fp32, fp16, int8
   use_trt_dynamic_shape: 1
   pub_det_img: 1
   custom_scene: 0

2. Modify r3live.hpp

#include "object_detector.h"

// Member variables and functions to add to the R3LIVE class

ros::Publisher pub_det_img;

int det_img_en = 0;

std::string det_model_dir;

std::string det_model_type;

int det_use_gpu;

int det_use_trt;

std::string det_trt_precision;

int det_use_trt_dynamic_shape;

int det_custom_scene = 0;

int m_if_pub_det_img = 0;

std::shared_ptr<PaddleDetection::ObjectDetector> detector;

void publish_det_img(cv::Mat & img);

void Image_Object_Detect(cv::Mat& org_img, cv::Mat& det_img);

// In the R3LIVE constructor, next to the existing get_ros_parameter calls

get_ros_parameter( m_ros_node_handle, "r3live_common/det_img_en", det_img_en, 0);

if (det_img_en) {

get_ros_parameter( m_ros_node_handle, "r3live_det/model_dir", det_model_dir, std::string("model path"));

get_ros_parameter( m_ros_node_handle, "r3live_det/model_type", det_model_type, std::string("model type"));

get_ros_parameter( m_ros_node_handle, "r3live_det/use_gpu", det_use_gpu, 0);

get_ros_parameter( m_ros_node_handle, "r3live_det/use_trt", det_use_trt, 0);

get_ros_parameter( m_ros_node_handle, "r3live_det/trt_precision", det_trt_precision, std::string("fp16"));

get_ros_parameter( m_ros_node_handle, "r3live_det/use_trt_dynamic_shape", det_use_trt_dynamic_shape, 0);

get_ros_parameter( m_ros_node_handle, "r3live_det/pub_det_img", m_if_pub_det_img, 0);

get_ros_parameter( m_ros_node_handle, "r3live_det/custom_scene", det_custom_scene, 0);

// Advertise the detection image topic ("/det_img" is an example name; pick your own)

pub_det_img = m_ros_node_handle.advertise<sensor_msgs::Image>("/det_img", 1000);

detector.reset(new PaddleDetection::ObjectDetector(det_model_dir));

}
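As written, detector.reset(...) only passes det_model_dir, so use_gpu, use_trt and trt_precision are read from the yaml but never reach the detector, which then runs with its constructor defaults. The C++ demo in section 3.3 selects GPU and TensorRT through --device and --run_mode, so one option is to translate the yaml values into those strings. A minimal sketch; DetectorMode and ToDetectorMode are hypothetical names, and the run_mode values (paddle, trt_fp32, trt_fp16, trt_int8) are the ones I expect the demo to accept.

```cpp
#include <cassert>
#include <string>

// Hypothetical pair of strings in the form the PaddleYOLO C++ demo takes on
// its command line (--device=GPU --run_mode=trt_fp16).
struct DetectorMode {
  std::string device;    // "CPU" or "GPU"
  std::string run_mode;  // "paddle", "trt_fp32", "trt_fp16" or "trt_int8"
};

// Maps the r3live_det/use_gpu, use_trt and trt_precision yaml values to a
// DetectorMode. TensorRT is only selected on GPU; an unrecognized precision
// falls back to plain Paddle inference on the GPU.
DetectorMode ToDetectorMode(int use_gpu, int use_trt,
                            const std::string& trt_precision) {
  DetectorMode mode{"CPU", "paddle"};
  if (!use_gpu) {
    return mode;
  }
  mode.device = "GPU";
  if (use_trt && (trt_precision == "fp32" || trt_precision == "fp16" ||
                  trt_precision == "int8")) {
    mode.run_mode = "trt_" + trt_precision;
  }
  return mode;
}
```

In the deploy/cpp sources I have looked at, ObjectDetector's constructor takes device and run_mode after model_dir, with use_mkldnn and cpu_threads in between, so the call would become something like new PaddleDetection::ObjectDetector(det_model_dir, mode.device, false, 1, mode.run_mode). Check the constructor in your copy of object_detector.h before relying on that argument order.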

3. Modify r3live_vio.cpp

// New function

// Publishes the detection result so it can be displayed in RViz

void R3LIVE::publish_det_img( cv::Mat &img )

{

cv_bridge::CvImage out_msg;

out_msg.header.stamp = ros::Time::now(); // Stamped with the publish time, not the camera frame time

out_msg.encoding = sensor_msgs::image_encodings::BGR8; // The detection image is 3-channel BGR

out_msg.image = img; // Annotated image produced by Image_Object_Detect

pub_det_img.publish( out_msg );

}

// Object detection function

void R3LIVE::Image_Object_Detect(cv::Mat& org_img, cv::Mat& det_img) {

std::vector<PaddleDetection::ObjectResult> result;

std::vector<int> bbox_num;

std::vector<double> det_times;

std::vector<cv::Mat> batch_imgs;

batch_imgs.push_back(org_img);

double threshold = 0.5;

bool is_rbox = false;

//detect

detector->Predict(batch_imgs, threshold, 0, 1, &result, &bbox_num, &det_times);

//postprocess

auto labels = detector->GetLabelList();

auto colormap = PaddleDetection::GenerateColorMap(labels.size());

int item_start_idx = 0;

std::vector<PaddleDetection::ObjectResult> im_result;

int detect_num = 0;

for (int j = 0; j < bbox_num[0]; j++) {

PaddleDetection::ObjectResult item = result[item_start_idx + j];

if (item.confidence < threshold || item.class_id == -1) {

continue;

}

detect_num += 1;

im_result.push_back(item);

if (item.rect.size() > 6) {

is_rbox = true;

}

}

cv::Mat vis_img = PaddleDetection::VisualizeResult(

org_img, im_result, labels, colormap, is_rbox);

det_img = vis_img.clone();

}

void R3LIVE::process_image( cv::Mat &temp_img, double msg_time )

{

...

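// Run object detection on the frame (assumes a cv::Mat m_img_det member has been added to the Image_frame class, e.g. in src/rgb_map/image_frame.hpp)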

if (det_img_en) {

Image_Object_Detect(img_pose->m_img, img_pose->m_img_det);

}

}

void R3LIVE::service_VIO_update() {

...

// Publish the detection image

if ( m_if_pub_det_img )

{

publish_det_img( img_pose->m_img_det );

}

}
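Image_Object_Detect above sends a single image per call, so item_start_idx stays 0. If you batch several frames, Predict returns the results of all images in one flat vector and bbox_num[i] says how many belong to image i. A standalone sketch of that filtering step, using a hypothetical DetResult struct that mirrors only the rect, class_id and confidence fields used above, so the offset logic can be tested without Paddle:

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for PaddleDetection::ObjectResult, keeping only the
// fields Image_Object_Detect reads.
struct DetResult {
  std::vector<int> rect;  // more than 6 values is treated as a rotated box
  int class_id;
  float confidence;
};

// Returns the detections of image `img_idx` whose confidence is at least
// `threshold` and whose class_id is not -1. Results of all images are stored
// back to back in `all`; bbox_num[i] is how many belong to image i.
// *is_rbox is set to true if any kept box is rotated.
std::vector<DetResult> FilterImageResults(const std::vector<DetResult>& all,
                                          const std::vector<int>& bbox_num,
                                          std::size_t img_idx, double threshold,
                                          bool* is_rbox) {
  assert(img_idx < bbox_num.size());
  std::size_t start = 0;  // plays the role of item_start_idx
  for (std::size_t i = 0; i < img_idx; ++i) {
    start += static_cast<std::size_t>(bbox_num[i]);
  }
  std::vector<DetResult> kept;
  *is_rbox = false;
  for (int j = 0; j < bbox_num[img_idx]; ++j) {
    const DetResult& item = all[start + static_cast<std::size_t>(j)];
    if (item.confidence < threshold || item.class_id == -1) {
      continue;
    }
    if (item.rect.size() > 6) {
      *is_rbox = true;
    }
    kept.push_back(item);
  }
  return kept;
}
```

Accumulating the start offset from bbox_num is the same bookkeeping the PaddleDetection C++ demo does when it advances item_start_idx after each image.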

5. Test results

R3live + PaddleYOLO running mapping and object detection at the same time