Implementing ResNet50 in PyTorch (a beginner's study notes, explained in detail)

References

As a beginner I inevitably ran into many things I did not understand. These are the resources I consulted:

ResNet source code: https://github.com/pytorch/vision/blob/master/torchvision/models/resnet.py

Source code walkthrough: https://www.jianshu.com/p/ec0967460d08

ResNet paper: https://arxiv.org/pdf/1512.03385.pdf

ResNet50 reimplementation: https://note.youdao.com/ynoteshare1/index.html?id=5a7dbe1a71713c317062ddeedd97d98e&type=note

ResNet50 reimplementation video walkthrough: https://www.bilibili.com/video/BV1154y1S7WC?from=search&seid=8328821625196427671

Code implementation

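import torch
from torch import nn


class Bottleneck(nn.Module):
    # Expansion factor: a block outputs planes * extention channels
    extention = 4

    def __init__(self, inplanes, planes, stride, downsample=None):
        '''
        :param inplanes: number of channels entering the block
        :param planes: number of channels used inside the block;
                       the block outputs planes * self.extention channels
        :param stride: stride of the first 1x1 convolution
        :param downsample: optional module applied to the shortcut so that
                           its shape matches the output of the main path
        '''
        super(Bottleneck, self).__init__()
        self.conv1 = nn.Conv2d(inplanes, planes, kernel_size=1, stride=stride, bias=False)
        self.bn1 = nn.BatchNorm2d(planes)
        self.conv2 = nn.Conv2d(planes, planes, kernel_size=3, stride=1, padding=1, bias=False)
        self.bn2 = nn.BatchNorm2d(planes)
        self.conv3 = nn.Conv2d(planes, planes * self.extention, kernel_size=1, stride=1, bias=False)
        self.bn3 = nn.BatchNorm2d(planes * self.extention)
        self.relu = nn.ReLU(inplace=True)
        # None means the shortcut is a plain identity connection
        self.downsample = downsample
        self.stride = stride

    def forward(self, x):
        # Residual (shortcut) data
        residual = x

        # Main path: 1x1 conv -> 3x3 conv -> 1x1 conv
        out = self.conv1(x)
        out = self.bn1(out)
        out = self.relu(out)

        out = self.conv2(out)
        out = self.bn2(out)
        out = self.relu(out)

        out = self.conv3(out)
        out = self.bn3(out)
        # No ReLU here: the activation is applied after the residual addition

        # Shortcut: an Identity Block adds x directly; a Conv Block first applies
        # a convolution to the shortcut to change its channel count and spatial size
        if self.downsample is not None:
            residual = self.downsample(x)

        # Add the residual branch to the convolution branch
        out += residual
        out = self.relu(out)
        return out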

class ResNet(nn.Module):
    def __init__(self, block, layers, num_class):
        super(ResNet, self).__init__()
        # inplane = number of channels of the current feature map
        self.inplane = 64

        # Parameters
        self.block = block
        self.layers = layers

        # Stem layers
        self.conv1 = nn.Conv2d(3, self.inplane, kernel_size=7, stride=2, padding=3, bias=False)
        self.bn1 = nn.BatchNorm2d(self.inplane)
        self.relu = nn.ReLU()
        self.maxpool = nn.MaxPool2d(kernel_size=3, stride=2, padding=1)

        # 64, 128, 256, 512 are the channel counts before the 4x expansion,
        # i.e. the width used inside each block
        self.stage1 = self.make_layer(self.block, 64, layers[0], stride=1)
        self.stage2 = self.make_layer(self.block, 128, layers[1], stride=2)
        self.stage3 = self.make_layer(self.block, 256, layers[2], stride=2)
        self.stage4 = self.make_layer(self.block, 512, layers[3], stride=2)

        # Remaining layers
        self.avgpool = nn.AvgPool2d(7)
        self.fc = nn.Linear(512 * block.extention, num_class)

    def forward(self, x):
        # Stem: conv + bn + relu + maxpool
        out = self.conv1(x)
        out = self.bn1(out)
        out = self.relu(out)
        out = self.maxpool(out)

        # Block stages
        out = self.stage1(out)
        out = self.stage2(out)
        out = self.stage3(out)
        out = self.stage4(out)

        # Classification head
        out = self.avgpool(out)
        out = torch.flatten(out, 1)
        out = self.fc(out)
        return out

    def make_layer(self, block, plane, block_num, stride=1):
        '''
        :param block: block class used as the template
        :param plane: channel count used inside each block, normally output channels / 4
        :param block_num: number of blocks in the stage
        :param stride: stride of the first block
        :return: an nn.Sequential containing all blocks of the stage
        '''
        block_list = []

        # First decide whether the shortcut needs a downsample module
        downsample = None
        if stride != 1 or self.inplane != plane * block.extention:
            downsample = nn.Sequential(
                nn.Conv2d(self.inplane, plane * block.extention, stride=stride, kernel_size=1, bias=False),
                nn.BatchNorm2d(plane * block.extention)
            )

        # Conv Block: input and output dimensions (channels and size) differ, so it
        # cannot be chained with itself; its job is to change the network's dimensions
        # Identity Block: input and output dimensions (channels and size) are the same,
        # so it can be chained directly; it is used to deepen the network

        # Conv Block
        conv_block = block(self.inplane, plane, stride=stride, downsample=downsample)
        block_list.append(conv_block)
        self.inplane = plane * block.extention

        # Identity Blocks
        for i in range(1, block_num):
            block_list.append(block(self.inplane, plane, stride=1))

        return nn.Sequential(*block_list)

resnet = ResNet(Bottleneck, [3, 4, 6, 3], 1000)
x = torch.randn(64, 3, 224, 224)
X = resnet(x)
print(X.shape)

Output

torch.Size([64, 1000])

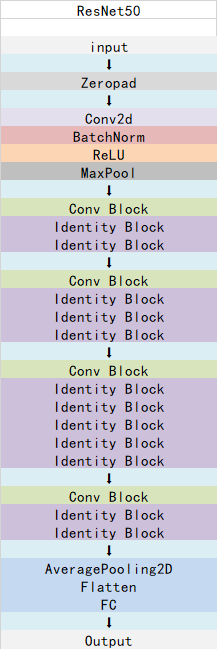

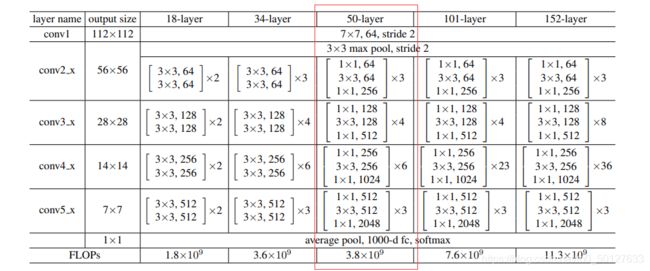

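First we need to understand how ResNet works and how ResNet50 is constructed. The references listed above are enough to work through both.

Code walkthrough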
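As a quick check on the name, the "50" can be recovered from the block counts passed to the constructor. The sketch below follows the usual counting convention (an assumption stated here, not something the code enforces): only the stem convolution, the three convolutions of every Bottleneck block, and the final fully connected layer are counted, while the shortcut convolutions are left out.

```python
# Block counts per stage, as passed to ResNet(Bottleneck, [3, 4, 6, 3], 1000)
layers = [3, 4, 6, 3]

# Each Bottleneck block has three convolutions: 1x1, 3x3, 1x1
convs_per_block = 3

# Stem convolution + all block convolutions + final fully connected layer
weighted_layers = 1 + sum(layers) * convs_per_block + 1
print(weighted_layers)  # 50
```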

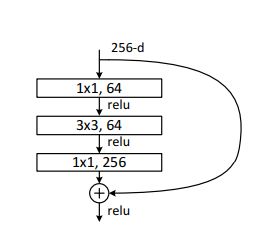

self.conv1 = nn.Conv2d(inplanes, planes, kernel_size=1, stride=stride, bias=False)
self.bn1 = nn.BatchNorm2d(planes)
self.conv2 = nn.Conv2d(planes, planes, kernel_size=3, stride=1, padding=1, bias=False)
self.bn2 = nn.BatchNorm2d(planes)
self.conv3 = nn.Conv2d(planes, planes * self.extention, kernel_size=1, stride=1, bias=False)
self.bn3 = nn.BatchNorm2d(planes * self.extention)
self.relu = nn.ReLU(inplace=True)

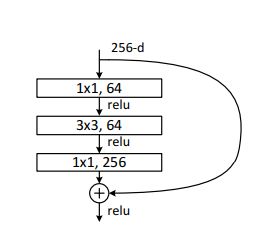

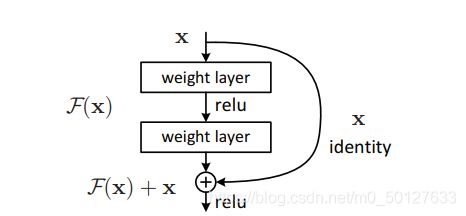

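This is the core of ResNet:

downsample makes the shape of the residual (shortcut) data match the shape of the convolution output, so that the two can be added directly.

if self.downsample is not None:
    residual = self.downsample(x)
# Add the residual branch to the convolution branch
out += residual
out = self.relu(out)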
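To make the shape mismatch concrete, here is a minimal sketch of the situation in the first block of stage 2: the input has 256 channels at 56x56, while the main path ends with 512 channels at 28x28. The tensor sizes and the names `main_path` and `shortcut` are illustrative assumptions, and the batch norm and ReLU layers of the main path are left out for brevity.

```python
import torch
from torch import nn

# Hypothetical input: the output of stage 1 (256 channels, 56x56)
x = torch.randn(2, 256, 56, 56)

# Stand-in for the main path of the first block in stage 2:
# it ends with 128 * 4 = 512 channels and halves the spatial size
main_path = nn.Sequential(
    nn.Conv2d(256, 128, kernel_size=1, stride=2, bias=False),
    nn.Conv2d(128, 128, kernel_size=3, stride=1, padding=1, bias=False),
    nn.Conv2d(128, 512, kernel_size=1, stride=1, bias=False),
)

# Shortcut built the same way as downsample in make_layer:
# a 1x1 convolution with the same stride, followed by batch norm
shortcut = nn.Sequential(
    nn.Conv2d(256, 512, kernel_size=1, stride=2, bias=False),
    nn.BatchNorm2d(512),
)

out = main_path(x)
residual = shortcut(x)
print(x.shape)         # torch.Size([2, 256, 56, 56])
print(out.shape)       # torch.Size([2, 512, 28, 28])
print(residual.shape)  # torch.Size([2, 512, 28, 28])

# The shapes now match, so the element-wise addition is valid
out = out + residual
```

Adding `x` itself to `out` would fail here, because neither the channel count nor the spatial size agrees.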

ResNet

Overall structure

class ResNet(nn.Module):
    def __init__(self, block, layers, num_class):
        super(ResNet, self).__init__()
        # inplane = number of channels of the current feature map
        self.inplane = 64

        # Parameters
        self.block = block
        self.layers = layers

        # Stem layers
        self.conv1 = nn.Conv2d(3, self.inplane, kernel_size=7, stride=2, padding=3, bias=False)
        self.bn1 = nn.BatchNorm2d(self.inplane)
        self.relu = nn.ReLU()
        self.maxpool = nn.MaxPool2d(kernel_size=3, stride=2, padding=1)

        # 64, 128, 256, 512 are the channel counts before the 4x expansion,
        # i.e. the width used inside each block
        self.stage1 = self.make_layer(self.block, 64, layers[0], stride=1)
        self.stage2 = self.make_layer(self.block, 128, layers[1], stride=2)
        self.stage3 = self.make_layer(self.block, 256, layers[2], stride=2)
        self.stage4 = self.make_layer(self.block, 512, layers[3], stride=2)

        # Remaining layers
        self.avgpool = nn.AvgPool2d(7)
        self.fc = nn.Linear(512 * block.extention, num_class)

    def forward(self, x):
        # Stem: conv + bn + relu + maxpool
        out = self.conv1(x)
        out = self.bn1(out)
        out = self.relu(out)
        out = self.maxpool(out)

        # Block stages
        out = self.stage1(out)
        out = self.stage2(out)
        out = self.stage3(out)
        out = self.stage4(out)

        # Classification head
        out = self.avgpool(out)
        out = torch.flatten(out, 1)
        out = self.fc(out)
        return out

    def make_layer(self, block, plane, block_num, stride=1):
        '''
        :param block: block class used as the template
        :param plane: channel count used inside each block, normally output channels / 4
        :param block_num: number of blocks in the stage
        :param stride: stride of the first block
        :return: an nn.Sequential containing all blocks of the stage
        '''
        block_list = []

        # First decide whether the shortcut needs a downsample module
        downsample = None
        if stride != 1 or self.inplane != plane * block.extention:
            downsample = nn.Sequential(
                nn.Conv2d(self.inplane, plane * block.extention, stride=stride, kernel_size=1, bias=False),
                nn.BatchNorm2d(plane * block.extention)
            )

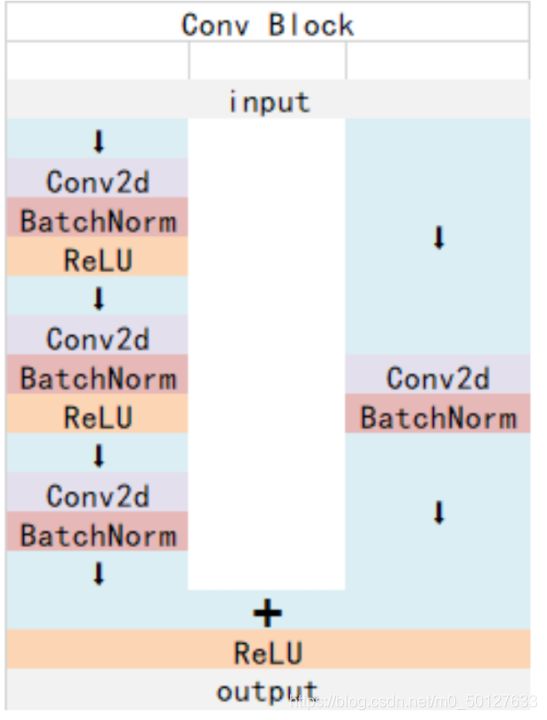

        # Conv Block: input and output dimensions (channels and size) differ, so it
        # cannot be chained with itself; its job is to change the network's dimensions

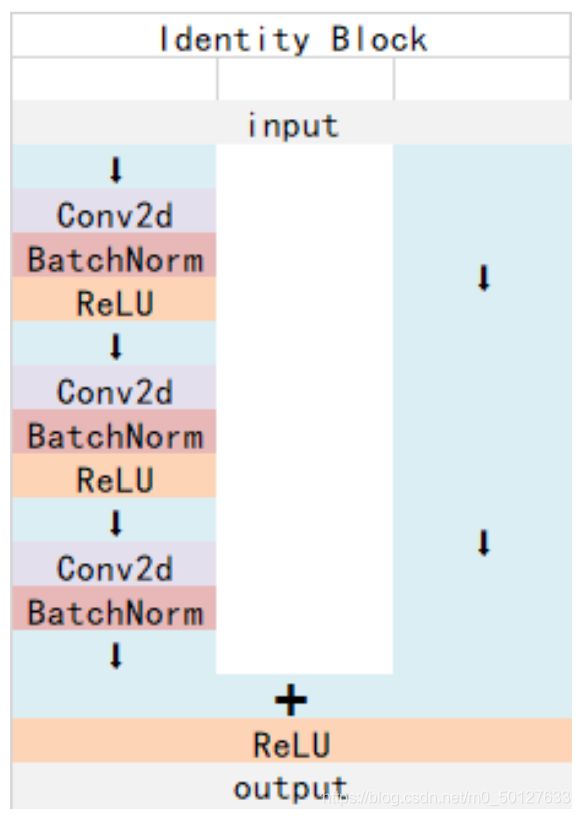

        # Identity Block: input and output dimensions (channels and size) are the same,
        # so it can be chained directly; it is used to deepen the network

        # Conv Block
        conv_block = block(self.inplane, plane, stride=stride, downsample=downsample)
        block_list.append(conv_block)
        self.inplane = plane * block.extention

        # Identity Blocks
        for i in range(1, block_num):
            block_list.append(block(self.inplane, plane, stride=1))

        return nn.Sequential(*block_list)

self.conv1 = nn.Conv2d(3, self.inplane, kernel_size=7, stride=2, padding=3, bias=False)
self.bn1 = nn.BatchNorm2d(self.inplane)
self.relu = nn.ReLU()
self.maxpool = nn.MaxPool2d(kernel_size=3, stride=2, padding=1)

# 64, 128, 256, 512 are the channel counts before the 4x expansion, i.e. the width used inside each block

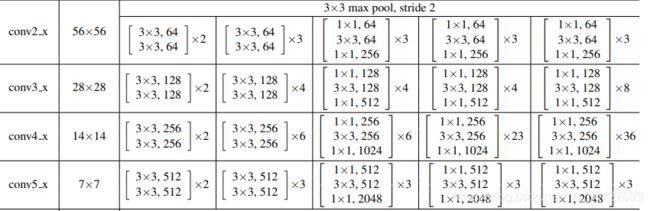

self.stage1 = self.make_layer(self.block, 64, layers[0], stride=1)
self.stage2 = self.make_layer(self.block, 128, layers[1], stride=2)
self.stage3 = self.make_layer(self.block, 256, layers[2], stride=2)
self.stage4 = self.make_layer(self.block, 512, layers[3], stride=2)

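This code builds the four stages of the network; for ResNet50 they stack 3, 4, 6, and 3 Bottleneck blocks respectively.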

self.avgpool = nn.AvgPool2d(7)
self.fc = nn.Linear(512 * block.extention, num_class)
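The spatial sizes produced by the stem and the four stages can be checked by hand with the standard output-size formula for convolution and pooling layers, floor((n + 2p - k) / s) + 1. The sketch below assumes a 224x224 input, as in the test run earlier; the helper `conv_out` and the `trace` dictionary are illustrative names, not part of the model code.

```python
def conv_out(size, kernel, stride, padding):
    """Output size of a convolution or pooling layer along one spatial axis."""
    return (size + 2 * padding - kernel) // stride + 1


size = 224
trace = {"input": size}

size = conv_out(size, kernel=7, stride=2, padding=3)  # conv1
trace["conv1"] = size
size = conv_out(size, kernel=3, stride=2, padding=1)  # maxpool
trace["maxpool"] = size

# Within a stage only the first block changes the size, through the stride
# of its 1x1 convolution (no padding); the remaining blocks keep it
for name, stride in [("stage1", 1), ("stage2", 2), ("stage3", 2), ("stage4", 2)]:
    size = conv_out(size, kernel=1, stride=stride, padding=0)
    trace[name] = size

print(trace)
# {'input': 224, 'conv1': 112, 'maxpool': 56, 'stage1': 56,
#  'stage2': 28, 'stage3': 14, 'stage4': 7}
```

The last stage yields a 7x7 feature map, which is exactly the window of `nn.AvgPool2d(7)`, so the pooled output is 1x1 with 512 * 4 = 2048 channels, matching the input size of `self.fc`. With a different input resolution the fixed 7x7 window may no longer line up with the fully connected layer.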

make_layer

downsample indicates whether the residual (shortcut) branch needs a convolution.

downsample = None
# Condition under which the residual branch needs a convolution
if stride != 1 or self.inplane != plane * block.extention:
    downsample = nn.Sequential(
        nn.Conv2d(self.inplane, plane * block.extention, stride=stride, kernel_size=1, bias=False),
        nn.BatchNorm2d(plane * block.extention)
    )

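The input and output dimensions (channels and size) of a Conv Block differ, so Conv Blocks cannot be chained one after another; their job is to change the dimensions of the network.

conv_block = block(self.inplane, plane, stride=stride, downsample=downsample)

The input and output dimensions (channels and size) of an Identity Block are the same, so Identity Blocks can be chained directly; they are used to deepen the network.

# Identity Blocks
for i in range(1, block_num):
    block_list.append(block(self.inplane, plane, stride=1))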
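The bookkeeping that make_layer performs across the four stages can be traced without building any layers. This is a minimal sketch in plain Python that replays the downsample condition and the update of `inplane`; the `plan` list and its tuple layout are illustrative, not part of the model code.

```python
extention = 4           # same spelling as the class attribute in Bottleneck
layers = [3, 4, 6, 3]   # ResNet50 block counts
inplane = 64            # channels after the stem

# One tuple per stage:
# (input channels, output channels, stride, needs downsample, identity blocks)
plan = []
for plane, block_num, stride in zip([64, 128, 256, 512], layers, [1, 2, 2, 2]):
    out_channels = plane * extention
    needs_downsample = stride != 1 or inplane != out_channels
    plan.append((inplane, out_channels, stride, needs_downsample, block_num - 1))
    inplane = out_channels  # the next stage starts from the expanded width

for row in plan:
    print(row)
# (64, 256, 1, True, 2)
# (256, 512, 2, True, 3)
# (512, 1024, 2, True, 5)
# (1024, 2048, 2, True, 2)
```

Note that stage 1 needs a downsample module even though its stride is 1: the channel count still changes from 64 to 256, so the shortcut cannot be a plain identity.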