Using TensorRT (TensorFlow/Keras/PyTorch)

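This post records my experience using TensorRT. I tested TensorRT acceleration of TensorFlow, Keras, and PyTorch models, and also compared it against Tencent's Forward framework, although how to use Forward is not covered here.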

Environment 1:

(1) CUDA: 11.0

(2) cuDNN: 8.0.4

(3) TensorRT: 7.2.1.6

(4) PyCUDA: 2019.1.2

Environment 2:

(1) CUDA: 10.0

(2) cuDNN: 7.6.3

(3) TensorRT: 7.0.0.11

(4) PyCUDA: 2019.1.2

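I. Using TensorRT

(i) Accelerating a TensorFlow model with TensorRT

This follows the official TensorRT sample: a LeNet-5 model trained on the MNIST handwritten-digit dataset.

Overall idea: TensorFlow -> TensorRT (pb -> uff)

Steps: (1) download the MNIST dataset; (2) train the LeNet-5 model; (3) convert the saved model (Lenet5.pb) to a UFF model (Lenet5.uff).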

    # Download the MNIST dataset
    cd <TensorRT Path>/data/mnist
    python download_pgms.py

    # Train the LeNet-5 model
    cd <TensorRT Path>/samples/python/end_to_end_tensorflow_mnist/
    python model.py
    # This produces the model Lenet5.pb

    # Convert Lenet5.pb to Lenet5.uff (-o sets the output file name)
    convert-to-uff ./models/Lenet5.pb -o ./models/Lenet5.uff

Finally, the code below loads Lenet5.uff with TensorRT and runs accelerated inference.

    # -*- coding:UTF-8 -*-
    from random import randint
    import os
    import sys

    import numpy as np
    import pycuda.driver as cuda
    import pycuda.autoinit  # creates the CUDA context on import
    import tensorrt as trt
    from PIL import Image

    # Import <TensorRT Path>/samples/python/common.py
    sys.path.insert(1, os.path.join(sys.path[0], ".."))
    import common

    TRT_LOGGER = trt.Logger(trt.Logger.WARNING)


    class ModelData(object):
        MODEL_FILE = "Lenet5.uff"        # model file name (output of convert-to-uff)
        INPUT_NAME = "input_1"           # input layer name (printed by convert-to-uff)
        INPUT_SHAPE = (1, 28, 28)        # input shape (C, H, W)
        OUTPUT_NAME = "dense_1/Softmax"  # output layer name (printed by convert-to-uff)


    def build_engine(model_file):
        # For more information on TRT basics, refer to the introductory samples.
        with trt.Builder(TRT_LOGGER) as builder, \
                builder.create_network() as network, \
                trt.UffParser() as parser:
            builder.max_workspace_size = common.GiB(1)
            # Parse the UFF network
            parser.register_input(ModelData.INPUT_NAME, ModelData.INPUT_SHAPE)
            parser.register_output(ModelData.OUTPUT_NAME)
            parser.parse(model_file, network)
            # Build and return an engine.
            return builder.build_cuda_engine(network)


    # Loads a test case into the provided pagelocked_buffer.
    def load_normalized_test_case(data_paths, pagelocked_buffer, case_num=randint(0, 9)):
        test_case_path = os.path.join(data_paths, str(case_num) + ".pgm")
        # Flatten the image into a 1D array, normalize, and copy to pagelocked memory.
        img = np.array(Image.open(test_case_path)).ravel()
        np.copyto(pagelocked_buffer, 1.0 - img / 255.0)
        return case_num


    def main():
        data_paths = './data'
        model_path = os.environ.get("MODEL_PATH") or os.path.join(os.path.dirname(__file__), "models")
        model_file = os.path.join(model_path, ModelData.MODEL_FILE)

        with build_engine(model_file) as engine:
            # Allocate host/device buffers and a CUDA stream for the engine bindings
            inputs, outputs, bindings, stream = common.allocate_buffers(engine)
            with engine.create_execution_context() as context:
                case_num = load_normalized_test_case(data_paths, pagelocked_buffer=inputs[0].host)
                [output] = common.do_inference(context, bindings=bindings, inputs=inputs,
                                               outputs=outputs, stream=stream)
                pred = np.argmax(output)
                print("Test Case: " + str(case_num))
                print("Prediction: " + str(pred))


    if __name__ == '__main__':
        main()
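One detail of the script above is worth illustrating: `case_num=randint(0, 9)` is a default argument, and Python evaluates default values once, when the `def` statement runs, not on every call. The sample calls the function only once per process, so this is harmless there, but calling it in a loop would keep loading the same digit. The sketch below demonstrates the behaviour with two stand-in functions (`pick_case_fixed` and `pick_case_fresh` are hypothetical names, not part of the TensorRT sample).

```python
import random


def pick_case_fixed(case_num=random.randint(0, 9)):
    # The default value was drawn once, when this `def` statement executed.
    return case_num


def pick_case_fresh(case_num=None):
    # Draw a new digit on every call unless the caller supplies one.
    if case_num is None:
        case_num = random.randint(0, 9)
    return case_num


fixed_draws = {pick_case_fixed() for _ in range(100)}
fresh_draws = {pick_case_fresh() for _ in range(100)}

print("distinct digits with a default argument:", len(fixed_draws))  # always 1
print("distinct digits with a None sentinel:", len(fresh_draws))
```

If the test-case loader were reused for repeated inference, the `None` sentinel form would be the safer pattern.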

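(ii) Accelerating a Keras model with TensorRT

Overall idea: Keras -> ONNX -> TensorRT (h5 -> onnx -> engine)

The example is a DenseNet model for digit recognition. Note: my dynamic input (batch size, channels, height, width) is (1, 1, 32, -1), and the dynamic output (the recognition model has 19 classes) is (1, -1, 19).

First, the model to be converted must contain both the graph structure and the weights. Use the keras2onnx tool to save the Keras model as an ONNX model: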

    import keras
    import keras2onnx
    import onnx
    from tensorflow.keras.models import load_model

    # Load the full Keras model (graph and weights) and convert it to ONNX
    model = load_model('./models/densenet_num18.h5')
    onnx_model = keras2onnx.convert_keras(model, model.name)

    # Save the ONNX model to disk
    temp_model_file = './models/densenet_num18.onnx'
    onnx.save_model(onnx_model, temp_model_file)

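Next, there are three ways to turn the ONNX model into a TensorRT engine:

Method 1: use the third-party tool onnx2trt to convert the ONNX model into a TensorRT engine. (Not working for me yet because of the dynamic-input problem.)

Method 2: use trtexec, the tool bundled with TensorRT, to convert the ONNX model into an engine. (Works with fixed-size input.)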

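    cd <TensorRT Path>/bin

    # Use trtexec to convert the ONNX model into an engine that TensorRT can load
    ./trtexec \
        --onnx=./keras/models/densenet_num18.onnx \
        --shapes=the_input:1x32x148x1 \
        --workspace=4096 \
        --saveEngine=./keras/densenet_num18.engine

    # Handling dynamic input sizes:
    # the command above fixes the input size, so a separate engine has to be
    # saved for each batch_size. For truly dynamic input, replace --shapes with
    # --minShapes=, --optShapes= and --maxShapes=.
    # For FP16 (16-bit) inference, add --fp16.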
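The dynamic-shape variant mentioned in the comments can be sketched as a small helper that assembles the trtexec arguments. This is only an illustration: the input name `the_input` and the min/opt/max shapes are the ones used in the Python build script later in this article, the output engine path is made up, and it assumes the ONNX model was exported with a dynamic width in (batch, channels, height, width) layout. The function name `build_trtexec_cmd` is hypothetical.

```python
import shlex


def build_trtexec_cmd(onnx_path, engine_path, input_name,
                      min_shape, opt_shape, max_shape,
                      fp16=False, workspace_mb=4096):
    """Return the trtexec argument list for a dynamic-shape engine build."""
    def fmt(shape):
        # trtexec expects shapes written as name:d0xd1x...
        return f"{input_name}:" + "x".join(str(d) for d in shape)

    cmd = [
        "./trtexec",
        f"--onnx={onnx_path}",
        f"--minShapes={fmt(min_shape)}",
        f"--optShapes={fmt(opt_shape)}",
        f"--maxShapes={fmt(max_shape)}",
        f"--workspace={workspace_mb}",
        f"--saveEngine={engine_path}",
    ]
    if fp16:
        cmd.append("--fp16")
    return cmd


cmd = build_trtexec_cmd(
    onnx_path="./keras/models/densenet_num18.onnx",
    engine_path="./keras/densenet_num18_dynamic.engine",  # hypothetical file name
    input_name="the_input",
    min_shape=(1, 1, 32, 80),
    opt_shape=(1, 1, 32, 148),
    max_shape=(1, 1, 32, 250),
    fp16=True,
)
print(" ".join(shlex.quote(arg) for arg in cmd))
```

The printed line can be pasted into a shell from `<TensorRT Path>/bin`, or the list can be passed to `subprocess.run` directly.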

Finally, load the engine and run accelerated inference.

    import os
    import time

    import numpy as np
    import tensorrt as trt
    from PIL import Image
    import pycuda.driver as cuda
    import pycuda.autoinit  # creates the CUDA context on import


    class load_engine_inference(object):
        def __init__(self, file_path):
            self.engine = self.loadEngine2TensorRT(file_path)

        def loadEngine2TensorRT(self, filepath):
            G_LOGGER = trt.Logger(trt.Logger.WARNING)
            # Deserialize the engine
            with open(filepath, "rb") as f, trt.Runtime(G_LOGGER) as runtime:
                engine = runtime.deserialize_cuda_engine(f.read())
            return engine

        def do_inference(self, img_path, do_print=False):
            img = Image.open(img_path).convert('L')
            # The engine was built for a fixed 1x32x148x1 input, so the image is
            # assumed to be 32 pixels high and 148 pixels wide already.
            # The normalization mirrors preprocess_image in the Method 3 script.
            X = np.asarray(img, dtype=np.float32) / 255.0 - 0.5
            width = X.shape[1]
            input = np.ascontiguousarray(X.reshape([1, 32, width, 1]))
            output = np.empty((1, 18, 19), dtype=np.float32)

            # Create the execution context
            self.context = self.engine.create_execution_context()
            # Allocate device memory for the input and the output
            d_input = cuda.mem_alloc(1 * input.size * input.dtype.itemsize)
            d_output = cuda.mem_alloc(1 * output.size * output.dtype.itemsize)
            bindings = [int(d_input), int(d_output)]
            # CUDA stream used for the copies and the execution
            self.stream = cuda.Stream()

            # pred_img synchronizes the stream before returning, so the timing
            # covers host-to-device copy, execution, and device-to-host copy.
            start_time = time.time()
            self.pred_img(input, d_input, bindings, output, d_output)
            end_time = time.time()

            # Release the context
            del self.context
            return output, end_time - start_time

        def pred_img(self, input, d_input, bindings, output, d_output):
            # Copy the input data to the device
            cuda.memcpy_htod_async(d_input, input, self.stream)
            # Run the model (execute_async_v2 is the dedicated API for explicit-batch engines)
            self.context.execute_async(1, bindings, self.stream.handle, None)
            # Copy the prediction back from the device buffer
            cuda.memcpy_dtoh_async(output, d_output, self.stream)
            # Wait for all work queued on the stream to finish
            self.stream.synchronize()


    img_path = '../tf/num_1_true.bmp'
    engine_infer = load_engine_inference('./densenet_num18.engine')

    ## First inference
    res_1, use_time = engine_infer.do_inference(img_path)
    print('first inference time: ', np.round(use_time * 1000, 2), 'ms')
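The script above reports only the time of the first call, which often includes one-off warm-up costs. A common way to get steadier numbers is to run a few untimed warm-up calls and then average many timed calls. The helper below is a minimal sketch of that idea and is not the measurement code used for the results in this article; `benchmark` and `dummy_inference` are hypothetical names, and the dummy workload only exists so the sketch runs without a GPU.

```python
import statistics
import time


def benchmark(fn, warmup=10, runs=100):
    """Call fn() `warmup` times untimed, then return the mean and the
    standard deviation of `runs` timed calls, both in milliseconds."""
    for _ in range(warmup):
        fn()
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        fn()
        samples.append((time.perf_counter() - start) * 1000.0)
    return statistics.mean(samples), statistics.stdev(samples)


def dummy_inference():
    # Stand-in workload. In practice fn would wrap the real inference call,
    # which must block until the GPU work is finished (stream.synchronize()).
    return sum(i * i for i in range(1000))


mean_ms, std_ms = benchmark(dummy_inference, warmup=5, runs=50)
print(f"mean {mean_ms:.3f} ms, std {std_ms:.3f} ms over 50 runs")
```

With the class above, `fn` could be something like `lambda: engine_infer.do_inference(img_path)`, although that call also re-creates the context and buffers each time, so a tighter measurement would wrap only the copy and execute steps.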

Method 3: load the ONNX model and build the engine directly in code. (Works with dynamic input.)

    # -*- coding:UTF-8 -*-
    # @Time : 2021/5/24
    # @Author : favorxin
    # @Func : Inference code that accelerates an ONNX model with TensorRT
    import math
    import time

    import numpy as np
    import tensorrt as trt
    import pycuda.autoinit  # creates the CUDA context on import
    import pycuda.driver as cuda
    from PIL import Image

    ### Standard TensorRT definitions
    TRT_LOGGER = trt.Logger(trt.Logger.INFO)
    EXPLICIT_BATCH = 1 << int(trt.NetworkDefinitionCreationFlag.EXPLICIT_BATCH)


    ### Convert a size in GiB to bytes
    def GiB(val):
        return val * (1 << 30)


    ### Parse the ONNX model and build the engine
    def build_engine(onnx_path, using_half, engine_file, dynamic_input=True):
        trt.init_libnvinfer_plugins(None, '')
        with trt.Builder(TRT_LOGGER) as builder, \
                builder.create_network(EXPLICIT_BATCH) as network, \
                trt.OnnxParser(network, TRT_LOGGER) as parser:
            builder.max_batch_size = 1  # always 1 for explicit batch
            config = builder.create_builder_config()
            config.max_workspace_size = GiB(4)  # up to 4 GiB of GPU workspace while building
            if using_half:
                config.set_flag(trt.BuilderFlag.FP16)  # half precision (FP16)
            # Load the ONNX model and parse it in order to populate the TensorRT network.
            with open(onnx_path, 'rb') as model:
                if not parser.parse(model.read()):
                    print('ERROR: Failed to parse the ONNX file.')
                    for error in range(parser.num_errors):
                        print(parser.get_error(error))
                    return None
            ### Dynamic input needs three shapes: minimum, optimal, and maximum.
            ### Keep the input in (batch size, channels, height, width) order;
            ### channels-last did not work for me (dynamic input raised errors).
            if dynamic_input:
                profile = builder.create_optimization_profile()
                profile.set_shape("the_input", (1, 1, 32, 80), (1, 1, 32, 148), (1, 1, 32, 250))
                config.add_optimization_profile(profile)
            return builder.build_engine(network, config)


    ### Allocate host and device memory for the inputs and outputs
    def allocate_buffers(engine, is_explicit_batch=False, input_shape=None, output_shape=18):
        inputs = []
        outputs = []
        bindings = []

        class HostDeviceMem(object):
            def __init__(self, host_mem, device_mem):
                self.host = host_mem
                self.device = device_mem

            def __str__(self):
                return "Host:\n" + str(self.host) + "\nDevice:\n" + str(self.device)

            def __repr__(self):
                return self.__str__()

        for binding in engine:
            dims = list(engine.get_binding_shape(binding))
            ### Fill in the concrete sizes of the dynamic input and output dimensions.
            if len(dims) == 4 and dims[-1] == -1:
                assert input_shape is not None
                dims[-1] = input_shape
            elif len(dims) == 3 and dims[-2] == -1:
                assert output_shape is not None
                dims[-2] = output_shape
            size = trt.volume(dims) * engine.max_batch_size  # scale by the maximum batch size
            dtype = trt.nptype(engine.get_binding_dtype(binding))
            # Allocate page-locked host memory and matching device memory
            host_mem = cuda.pagelocked_empty(size, dtype)
            device_mem = cuda.mem_alloc(host_mem.nbytes)
            bindings.append(int(device_mem))
            if engine.binding_is_input(binding):
                inputs.append(HostDeviceMem(host_mem, device_mem))
            else:
                outputs.append(HostDeviceMem(host_mem, device_mem))
        return inputs, outputs, bindings


    ### Preprocess the input image; returns the processed image and its new width.
    def preprocess_image(imagepath):
        img = Image.open(imagepath)
        img = img.convert('L')
        width, height = img.size[0], img.size[1]
        scale = height * 1.0 / 32
        new_width = int(width / scale)
        # Image.LANCZOS is the same filter as the older alias Image.ANTIALIAS
        img = img.resize([new_width, 32], Image.LANCZOS)
        img = np.array(img).astype(np.float32) / 255.0 - 0.5
        X = img.reshape([1, 1, 32, new_width])
        return X, new_width


    ### Compute the output length from the input width. My model has dynamic input
    ### and dynamic output, so the output size is needed to allocate the output buffer.
    def compute_out_shape(input_shape):
        x2 = input_shape
        x2_ft = math.floor((x2 - 5 + 2 * 2) / 2) + 1
        for i in range(2):
            x2_ft = int(x2_ft / 2) if x2_ft % 2 == 0 else int((x2_ft - 1) / 2)
        return x2_ft


    ### Run inference with the engine
    def profile_trt(engine, imagepath, batch_size):
        assert engine is not None
        ### Determine the output size, then allocate memory for the input and output
        input_image, input_shape = preprocess_image(imagepath)
        output_shape = compute_out_shape(input_shape)
        segment_inputs, segment_outputs, segment_bindings = allocate_buffers(
            engine, True, input_shape, output_shape)
        stream = cuda.Stream()

        with engine.create_execution_context() as context:
            context.active_optimization_profile = 0
            origin_inputshape = list(context.get_binding_shape(0))
            # The image width is dynamic, so the last dimension is -1 here;
            # fix it to the width of the current image.
            if len(origin_inputshape) == 4 and origin_inputshape[-1] == -1:
                origin_inputshape[-1] = input_shape
                context.set_binding_shape(0, tuple(origin_inputshape))
            # The output is dynamic in its second-to-last dimension; TensorRT
            # infers it once the input shape has been set.

            segment_inputs[0].host = np.ascontiguousarray(input_image)
            start_time = time.time()
            for inp in segment_inputs:
                cuda.memcpy_htod_async(inp.device, inp.host, stream)
            context.execute_async(bindings=segment_bindings, stream_handle=stream.handle)
            for out in segment_outputs:
                cuda.memcpy_dtoh_async(out.host, out.device, stream)
            stream.synchronize()
            use_time = time.time() - start_time

            infer_out = [out.host for out in segment_outputs]
            results = infer_out[0]
        return results, use_time


    if __name__ == '__main__':
        onnx_path = './onnx_models/densenet_num18_rmnode1.onnx'
        usinghalf = True
        batch_size = 1
        imagepath = './data/num_5_true.bmp'
        engine_file = 'densenet_num18_t_dynamic.engine'
        init_engine = True
        load_engine = True

        ### Build the engine from the ONNX model. This step is slow, so the engine
        ### is normally saved to disk and reused for later inference.
        if init_engine:
            trt_engine = build_engine(onnx_path, usinghalf, engine_file, dynamic_input=True)
            print('engine built successfully!')
            with open(engine_file, "wb") as f:
                f.write(trt_engine.serialize())
            print('save engine successfully')

        ### Run inference with the saved engine. For routine inference, set
        ### init_engine to False because the engine is already on disk.
        if load_engine:
            trt.init_libnvinfer_plugins(None, '')
            with open(engine_file, "rb") as f, trt.Runtime(TRT_LOGGER) as runtime:
                trt_engine = runtime.deserialize_cuda_engine(f.read())
            trt_result, use_time = profile_trt(trt_engine, imagepath, batch_size)
            print(trt_result)
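The shape arithmetic in the script above can be checked without a GPU. The sketch below reuses the same formulas for the resized width and the output length and adds a bounds check against the optimization profile (80 to 250 pixels wide), since TensorRT rejects input shapes outside the profile range. The helper names `resized_width`, `output_length`, and `check_width` are hypothetical; only the arithmetic and the profile values come from the script.

```python
import math

# Width range taken from profile.set_shape in the build script
PROFILE_MIN_W, PROFILE_OPT_W, PROFILE_MAX_W = 80, 148, 250
TARGET_H = 32


def resized_width(width, height, target_h=TARGET_H):
    """Width after scaling the image to target_h, as in preprocess_image."""
    scale = height * 1.0 / target_h
    return int(width / scale)


def output_length(input_width):
    """Same arithmetic as compute_out_shape: one stride-2 step with
    kernel 5 and padding 2, followed by two halvings (rounded down)."""
    n = math.floor((input_width - 5 + 2 * 2) / 2) + 1
    for _ in range(2):
        n //= 2
    return n


def check_width(input_width):
    """Raise if the width falls outside the optimization profile."""
    if not PROFILE_MIN_W <= input_width <= PROFILE_MAX_W:
        raise ValueError(
            f"width {input_width} is outside the profile range "
            f"[{PROFILE_MIN_W}, {PROFILE_MAX_W}]"
        )
    return input_width


for w in (PROFILE_MIN_W, PROFILE_OPT_W, PROFILE_MAX_W):
    print(f"input width {w} -> output shape (1, {output_length(w)}, 19)")
# input width 80 -> output shape (1, 10, 19)
# input width 148 -> output shape (1, 18, 19)
# input width 250 -> output shape (1, 31, 19)

print(resized_width(296, 64))  # a 296x64 image is resized to width 148
```

The optimal width of 148 gives an output length of 18, which matches the fixed `(1, 18, 19)` output buffer used in the Method 2 inference script.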

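(iii) Accelerating a PyTorch model with TensorRT

Overall idea: PyTorch -> ONNX -> TensorRT (pth -> onnx -> engine)

The example is ResNet18, a binary image classifier, with input (1, 3, 224, 224) and output (1, 2).

First, use PyTorch's built-in ONNX exporter to convert the PyTorch model into an ONNX model: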

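    import torch

    resnet_model_path = './models/resnet_state.pth'
    model = ResNet()  # the model class definition is not shown in this post
    model.load_state_dict(torch.load(resnet_model_path))
    model.eval()
    model.cuda()

    ## Export to ONNX and save
    export_onnx_file = "./models/resnet18.onnx"
    torch.onnx.export(
        model,                                       # model (graph and weights) to export
        # Dummy input that fixes the input size and the size of every node in
        # the traced graph; it must live on the same device as the model.
        torch.randn(1, 3, 224, 224, device='cuda'),
        export_onnx_file,                            # output file name
        verbose=False,                               # whether to print the graph as text
        # Input node names; the extra names map to the learnable parameters
        # of each layer, which makes them easier to look up later.
        input_names=["input"] + ["params_%d" % i for i in range(120)],
        output_names=["output"],                     # output node names
        opset_version=10,                            # ONNX operator set version
        do_constant_folding=True,                    # fold constants during export
        # Dynamic axes: dimension 0 of "input" and "output" is variable
        # and is named batch_size.
        dynamic_axes={"input": {0: "batch_size"}, "output": {0: "batch_size"}},
    )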

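Then use trtexec, the tool bundled with TensorRT, to convert the ONNX model into an engine (the same procedure as for the Keras model).

    cd <TensorRT Path>/bin

    # Use trtexec to convert the ONNX model into an engine that TensorRT can load
    ./trtexec \
        --onnx=./torch/models/resnet18.onnx \
        --shapes=input:1x3x224x224 \
        --workspace=4096 \
        --saveEngine=./torch/resnet18.engine

The inference code is the same as the Keras inference code.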
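When reusing the Keras inference script for ResNet18, the parts that change are the input and output shapes, because that script hard-codes a `(1, 18, 19)` output buffer. As a rough illustration, the sketch below computes the buffer sizes that `cuda.mem_alloc` would need for both models, assuming float32 bindings (4 bytes per element). `buffer_nbytes` is a hypothetical helper, and `math.prod` requires Python 3.8 or newer.

```python
import math


def buffer_nbytes(shape, itemsize=4):
    """Bytes needed for a dense tensor; itemsize=4 corresponds to float32."""
    return math.prod(shape) * itemsize


# Shapes taken from the two examples in this article
shapes = {
    "densenet input": (1, 32, 148, 1),
    "densenet output": (1, 18, 19),
    "resnet18 input": (1, 3, 224, 224),
    "resnet18 output": (1, 2),
}

sizes = {name: buffer_nbytes(shape) for name, shape in shapes.items()}
for name, nbytes in sizes.items():
    print(f"{name}: {shapes[name]} -> {nbytes} bytes")
# densenet input: (1, 32, 148, 1) -> 18944 bytes
# densenet output: (1, 18, 19) -> 1368 bytes
# resnet18 input: (1, 3, 224, 224) -> 602112 bytes
# resnet18 output: (1, 2) -> 8 bytes
```

In the inference class this corresponds to reshaping the image to `(1, 3, 224, 224)` and creating the output array with `np.empty((1, 2), dtype=np.float32)`.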

II. Results

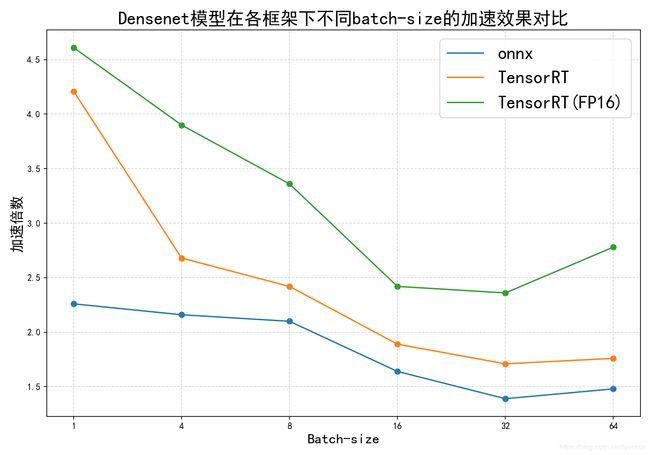

Keras: performance of the DenseNet recognition model on a 2080 Ti:

| Setting | Model | Time (ms) | Speedup | GPU memory (MB) |
|---|---|---|---|---|
| batch_size=1 | keras | 5.72 | 1 x | 2737 |
| batch_size=1 | onnx | 2.53 | 2.26 x | 716 |
| batch_size=1 | TensorRT | 1.36 | 4.21 x | 776 |
| batch_size=1 | TensorRT(FP16) | 1.24 | 4.61 x | 774 |
| batch_size=4 | keras | 5.73 | 1 x | 2847 |
| batch_size=4 | onnx | 2.65 | 2.16 x | 722 |
| batch_size=4 | TensorRT | 2.14 | 2.68 x | 798 |
| batch_size=4 | TensorRT(FP16) | 1.47 | 3.9 x | 620 |
| batch_size=8 | keras | 6.25 | 1 x | 2959 |
| batch_size=8 | onnx | 2.98 | 2.1 x | 732 |
| batch_size=8 | TensorRT | 2.58 | 2.42 x | 612 |
| batch_size=8 | TensorRT(FP16) | 1.86 | 3.36 x | 624 |
| batch_size=16 | keras | 6.26 | 1 x | 3179 |
| batch_size=16 | onnx | 3.81 | 1.64 x | 796 |
| batch_size=16 | TensorRT | 3.32 | 1.89 x | 824 |
| batch_size=16 | TensorRT(FP16) | 2.59 | 2.42 x | 630 |
| batch_size=32 | keras | 8.64 | 1 x | 3369 |
| batch_size=32 | onnx | 6.23 | 1.39 x | 908 |
| batch_size=32 | TensorRT | 5.06 | 1.71 x | 700 |
| batch_size=32 | TensorRT(FP16) | 3.66 | 2.36 x | 646 |
| batch_size=64 | keras | 16.43 | 1 x | 3949 |
| batch_size=64 | onnx | 11.1 | 1.48 x | 1164 |
| batch_size=64 | TensorRT | 9.36 | 1.76 x | 770 |
| batch_size=64 | TensorRT(FP16) | 5.9 | 2.78 x | 842 |
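The speedup column is the baseline framework's time divided by the accelerated time at the same batch size. The short sketch below reproduces it for the batch_size=1 rows of the DenseNet results; the `speedup` helper is a hypothetical name, and the timings are copied from the table.

```python
def speedup(baseline_ms, candidate_ms):
    """Ratio of baseline time to candidate time (greater than 1 means faster)."""
    return baseline_ms / candidate_ms


# DenseNet timings at batch_size=1, in milliseconds
latency_ms = {
    "keras": 5.72,
    "onnx": 2.53,
    "TensorRT": 1.36,
    "TensorRT(FP16)": 1.24,
}

ratios = {name: speedup(latency_ms["keras"], ms) for name, ms in latency_ms.items()}
for name, ratio in ratios.items():
    print(f"{name}: {ratio:.2f} x")
# keras: 1.00 x
# onnx: 2.26 x
# TensorRT: 4.21 x
# TensorRT(FP16): 4.61 x
```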

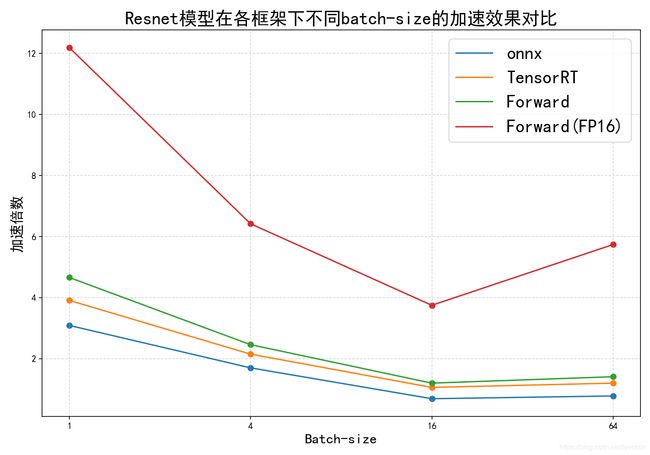

PyTorch: performance of the ResNet18 classification model on a 2080 Ti:

| Setting | Model | Time (ms) | Speedup | GPU memory (MB) |
|---|---|---|---|---|
| batch_size=1 | pytorch | 6.34 | 1 x | 927 |
| batch_size=1 | onnx | 2.05 | 3.09 x | 1035 |
| batch_size=1 | TensorRT | 1.62 | 3.91 x | 887 |
| batch_size=1 | Forward | 1.36 | 4.66 x | 2749 |
| batch_size=1 | Forward(FP16) | 0.52 | 12.19 x | 2749 |
| batch_size=4 | pytorch | 6.16 | 1 x | 1187 |
| batch_size=4 | onnx | 3.62 | 1.7 x | 1115 |
| batch_size=4 | TensorRT | 2.87 | 2.15 x | 1036 |
| batch_size=4 | Forward | 2.5 | 2.46 x | 2785 |
| batch_size=4 | Forward(FP16) | 0.96 | 6.42 x | 2785 |
| batch_size=16 | pytorch | 8.62 | 1 x | 2537 |
| batch_size=16 | onnx | 12.50 | 0.69 x | 1495 |
| batch_size=16 | TensorRT | 8.17 | 1.06 x | 910 |
| batch_size=16 | Forward | 7.16 | 1.2 x | 2877 |
| batch_size=16 | Forward(FP16) | 2.3 | 3.75 x | 2877 |
| batch_size=64 | pytorch | 38.04 | 1 x | 6537 |
| batch_size=64 | onnx | 48.80 | 0.78 x | 3071 |
| batch_size=64 | TensorRT | 31.61 | 1.2 x | 1421 |
| batch_size=64 | Forward | 26.99 | 1.41 x | 3881 |
| batch_size=64 | Forward(FP16) | 6.63 | 5.74 x | 3881 |

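III. Summary

For the recognition model, TensorRT gives a speedup of more than 4x at batch_size=1, and FP16 (16-bit) precision helps more noticeably at larger batch sizes. As the batch size grows, the TensorRT and ONNX speedups gradually fall to roughly 1.5x to 2x. The FP16 speedup also falls, to roughly 2.4x to 2.8x at batch sizes 16 to 64.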
