Building and Implementing a Softmax Regression Model (Fashion-MNIST Image Classification)

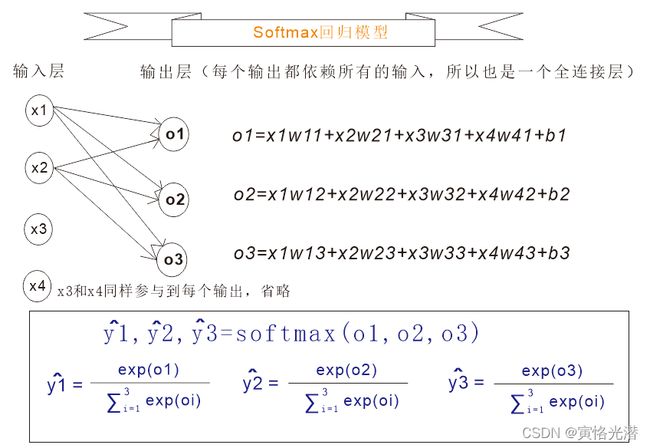

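In the earlier post on building and implementing a linear regression model, we saw that linear regression suits outputs that are continuous values. For discrete outputs (usually class labels), softmax regression is the usual choice. It was already used on the MNIST dataset in earlier posts and is well suited to classification problems. If you are interested, see these MNIST implementations: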

MNIST handwritten digit recognition (part 2): https://blog.csdn.net/weixin_41896770/article/details/119710429

Deep convolutional neural network (CNN), MNIST example: https://blog.csdn.net/weixin_41896770/article/details/122407817

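Those examples show the main difference from linear regression: the model produces several outputs instead of one, and the number of outputs equals the number of classes in the labels. Digit recognition, for instance, has 10 classes (0 to 9). The prediction is the index of the largest output (argmax). A prediction counts as correct when it matches the true class (the label); the number of correct predictions divided by the total number of predictions is the accuracy, which is used to evaluate the model.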
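
The argmax prediction and the accuracy computation described above can be sketched in plain Python. The helper names `argmax` and `accuracy` and the sample numbers are made up for illustration; they are not part of d2lzh or MXNet.

```python
def argmax(scores):
    """Return the index of the largest score in a list."""
    return max(range(len(scores)), key=lambda i: scores[i])


def accuracy(outputs, labels):
    """Fraction of samples whose argmax prediction equals the true label."""
    correct = sum(1 for out, y in zip(outputs, labels) if argmax(out) == y)
    return correct / len(labels)


# Hypothetical model outputs for 3 samples over 3 classes (made-up numbers).
outputs = [[0.1, 0.7, 0.2], [0.8, 0.1, 0.1], [0.3, 0.3, 0.4]]
labels = [1, 0, 1]

# Predictions are 1, 0, 2, so 2 of the 3 samples are classified correctly.
print(accuracy(outputs, labels))
```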

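For the loss function, classification does not need the outputs to match the label distribution as exactly as squared loss demands: the prediction is already right as long as the true class receives the largest predicted probability. The cross-entropy loss is therefore the usual choice:

    -np.sum(y*np.log(y_hat+delta))  # y: one-hot true label, y_hat: predicted probabilities, delta: a tiny value that keeps log from receiving 0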

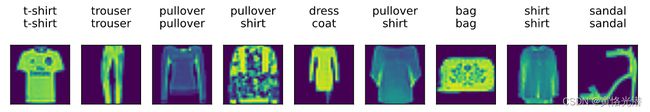
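
As a small worked example of the formula above, the sketch below evaluates the cross-entropy for a one-hot label in plain Python (no NumPy, to keep it dependency-free). The probabilities are made-up numbers and `delta=1e-7` is an assumed default, not a value taken from the original post.

```python
import math


def cross_entropy(y, y_hat, delta=1e-7):
    """Cross-entropy between a one-hot label y and predicted probabilities y_hat."""
    return -sum(t * math.log(p + delta) for t, p in zip(y, y_hat))


y = [0, 0, 1]            # the true class is index 2
good = [0.1, 0.1, 0.8]   # most probability mass on the true class
bad = [0.6, 0.3, 0.1]    # most probability mass on a wrong class

# Only the term of the true class survives, so the loss is -log(p_true + delta).
print(cross_entropy(y, good))  # small loss
print(cross_entropy(y, bad))   # larger loss

# delta keeps the loss finite even when the true class gets probability 0.
print(cross_entropy([1, 0], [0.0, 1.0]))
```

Because the label is one-hot, the loss depends only on the probability assigned to the true class, which is why the training code later in the post can pick that single probability directly.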

Let's get familiar with Fashion-MNIST (a dataset of 10 classes of clothing items), which is used for training later:

    import d2lzh as d2l
    from mxnet.gluon import data as gdata
    import sys, time

    # vision contains quite a few datasets; use dir() to list them
    mnist_train = gdata.vision.FashionMNIST(train=True)
    mnist_test = gdata.vision.FashionMNIST(train=False)
    features, labels = mnist_train[0:10]  # features and labels of the first 10 images
    print(len(mnist_train), features.shape, labels)  # 60000 (10, 28, 28, 1) [2 9 6 0 3 4 4 5 4 8]

    # Map numeric labels to text labels (the d2lzh package already provides this function)
    def get_fashion_mnist_labels(labels):
        text_labels = ['t-shirt', 'trouser', 'pullover', 'dress', 'coat',
                       'sandal', 'shirt', 'sneaker', 'bag', 'ankle boot']
        return [text_labels[int(i)] for i in labels]

    print(get_fashion_mnist_labels([3, 1]))  # ['dress', 'trouser']

    # Show the images together with their labels
    def show_fashion_mnist(images, labels):
        d2l.use_svg_display()  # render figures as SVG
        _, figs = d2l.plt.subplots(1, len(images), figsize=(15, 15))
        for f, img, lbl in zip(figs, images, labels):
            f.imshow(img.reshape(28, 28).asnumpy())
            f.set_title(lbl)
            f.axes.get_xaxis().set_visible(False)
            f.axes.get_yaxis().set_visible(False)

    show_fashion_mnist(features, get_fashion_mnist_labels(labels))

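    # Read the dataset in mini-batches
    batch_size = 1000
    # ToTensor converts the uint8 image to 32-bit floats and divides by 255, so pixel values lie in [0, 1].
    # It also moves the last (channel) dimension to the front: (28, 28, 1) becomes (1, 28, 28).
    transformer = gdata.vision.transforms.ToTensor()
    if sys.platform.startswith('win'):
        num_workers = 0  # extra worker processes speed up data loading, but are not supported on Windows yet
    else:
        num_workers = 4
    # transform_first applies transformer to the first element (the image) of each (image, label) sample
    train_iter = gdata.DataLoader(mnist_train.transform_first(transformer), batch_size, shuffle=True, num_workers=num_workers)
    # The test set is only used for evaluation, so it does not need to be shuffled
    test_iter = gdata.DataLoader(mnist_test.transform_first(transformer), batch_size, shuffle=False, num_workers=num_workers)

    start = time.time()
    for X, y in train_iter:
        continue
    print('One pass over the training set took %.2f s' % (time.time() - start))  # One pass over the training set took 2.90 s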
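
To make the mini-batch reading above more concrete, here is a minimal sketch of what a shuffling batch iterator does with sample indices. It is only an illustration in plain Python; `minibatches` and its `seed` parameter are hypothetical names, and this is not how Gluon's `DataLoader` is implemented internally.

```python
import random


def minibatches(n_samples, batch_size, shuffle=True, seed=0):
    """Yield lists of sample indices, batch_size at a time."""
    indices = list(range(n_samples))
    if shuffle:
        # Shuffle once per pass so each epoch sees the samples in a new order.
        random.Random(seed).shuffle(indices)
    for start in range(0, n_samples, batch_size):
        # The last batch may be smaller when batch_size does not divide n_samples.
        yield indices[start:start + batch_size]


batches = list(minibatches(10, 4))
print([len(b) for b in batches])  # [4, 4, 2]
```

With 60000 training images and a batch size of 1000, one pass of such an iterator would produce 60 batches.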

For more on drawing subplots, see: Python plotting (histograms, multiple subplots, 2D figures, 3D figures, and inset plots) https://blog.csdn.net/weixin_41896770/article/details/119798960

If you are not very familiar with zip, see the post Python基础知识汇总 (a summary of Python basics).

Building the Softmax Regression Model

    import d2lzh as d2l
    from mxnet import autograd, nd

    batch_size = 200
    train_iter, test_iter = d2l.load_data_fashion_mnist(batch_size)

    num_inputs = 28 * 28
    num_outputs = 10
    W = nd.random.normal(scale=0.01, shape=(num_inputs, num_outputs))  # weights of shape (784, 10)
    b = nd.zeros(num_outputs)
    # Attach gradients to the weights and the bias
    W.attach_grad()
    b.attach_grad()

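    # Subtract each row's maximum c before exponentiating to prevent overflow.
    # A per-row maximum is used because a single global maximum could make every
    # entry of a much smaller row underflow to 0, giving 0/0.
    def softmax(X):
        c = nd.max(X, axis=1, keepdims=True)
        X_exp = (X - c).exp()
        X_exp_sum = X_exp.sum(axis=1, keepdims=True)
        return X_exp / X_exp_sum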
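
Subtracting a constant from every entry of a row does not change the softmax result, because the factor exp(-c) cancels between numerator and denominator. The sketch below shows this for a single row in plain Python; `softmax_row` is a hypothetical helper, not the `softmax` function of this post.

```python
import math


def softmax_row(row):
    """Numerically stable softmax of one row of scores."""
    c = max(row)                           # largest score in the row
    exps = [math.exp(v - c) for v in row]  # every exponent is <= 0, so no overflow
    total = sum(exps)
    return [e / total for e in exps]


# math.exp(1000.0) alone would raise OverflowError; after the shift it is fine.
large = softmax_row([1000.0, 1001.0, 1002.0])
small = softmax_row([0.0, 1.0, 2.0])
print(large)
print(large == small)  # True: both rows reduce to the shifted scores -2, -1, 0
```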

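    # Define the model
    def net(X):
        return softmax(nd.dot(X.reshape((-1, num_inputs)), W) + b)

    # Define the cross-entropy loss
    def cross_entropy(y_hat, y):
        return -nd.pick(y_hat, y).log()

    # pick returns, for each sample, the predicted probability at the index given by that sample's label
    # print(nd.pick(nd.array([[0.2,0.3,0.5],[0.1,0.2,0.7]]), nd.array([0,2])))  # [0.2 0.7]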
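
What `nd.pick` does in the loss above can be mimicked with a short list comprehension. The `pick` function below is a hypothetical plain-Python stand-in for illustration only; it reuses the numbers from the commented example.

```python
import math


def pick(y_hat, y):
    """For each row, select the entry at the index given by the matching label."""
    return [row[int(label)] for row, label in zip(y_hat, y)]


y_hat = [[0.2, 0.3, 0.5], [0.1, 0.2, 0.7]]
y = [0, 2]

picked = pick(y_hat, y)
print(picked)  # [0.2, 0.7]

# The per-sample cross-entropy is the negative log of the picked probability.
losses = [-math.log(p) for p in picked]
print(losses)
```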

    # Evaluate the model's accuracy
    def evaluate_accuracy(data_iter, net):
        acc_sum, n = 0.0, 0
        for X, y in data_iter:
            y = y.astype('float32')
            equals = net(X).argmax(axis=1) == y  # NDArray with 1 where equal and 0 where not
            acc_sum += equals.sum().asscalar()
            n += y.size
        return acc_sum / n

    # evaluate_accuracy(test_iter, net)  # 0.0982: the model is randomly initialized, so this is close to 0.1 (10 classes)

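    # Train the model
    def train(net, train_iter, test_iter, loss, num_epochs, batch_size, params=None, lr=None, trainer=None):
        for epoch in range(num_epochs):
            train_l_sum, train_acc_sum, n = 0.0, 0.0, 0
            for X, y in train_iter:
                with autograd.record():
                    y_hat = net(X)
                    l = loss(y_hat, y).sum()
                l.backward()
                if trainer is None:
                    d2l.sgd(params, lr, batch_size)
                else:
                    trainer.step(batch_size)
                y = y.astype('float32')
                train_l_sum += l.asscalar()
                # Compare the predictions with the labels y (not with the constant 1)
                train_acc_sum += (y_hat.argmax(axis=1) == y).sum().asscalar()
                n += y.size
            test_acc = evaluate_accuracy(test_iter, net)
            print('epoch %d,loss %.4f,train acc %.3f,test acc %.3f' % (epoch + 1, train_l_sum / n, train_acc_sum / n, test_acc))

    train(net, train_iter, test_iter, cross_entropy, 5, batch_size, [W, b], lr=0.1)

Output:

    epoch 1,loss 0.7517,train acc 0.095,test acc 0.790
    epoch 2,loss 0.5542,train acc 0.097,test acc 0.826
    epoch 3,loss 0.5132,train acc 0.098,test acc 0.822
    epoch 4,loss 0.4924,train acc 0.098,test acc 0.835
    epoch 5,loss 0.4782,train acc 0.098,test acc 0.842

The train acc column in this recorded run stays near 0.1 because the run compared the predictions with the constant 1, (y_hat.argmax(axis=1)==1), instead of with y, so it only counted how often class 1 was predicted. With the comparison against y shown in the code above, train acc measures the real training accuracy; the Gluon run at the end of this post shows typical values.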

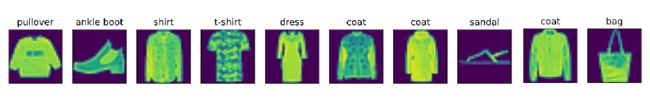
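
The training loop sums the loss over a batch and then hands `batch_size` to the optimizer. In d2lzh, `d2l.sgd` divides the accumulated gradient by the batch size before taking a step, which turns the summed gradient into an average. The sketch below reproduces that update rule on plain Python floats; the list-based `sgd` signature and the numbers are assumptions for illustration, not the d2lzh function itself.

```python
def sgd(params, grads, lr, batch_size):
    """In-place mini-batch SGD step: param -= lr * grad / batch_size."""
    for i in range(len(params)):
        params[i] -= lr * grads[i] / batch_size


# Hypothetical parameters and gradients summed over a batch of 10 samples.
params = [1.0, 2.0]
grads = [10.0, -20.0]
sgd(params, grads, lr=0.1, batch_size=10)

# Average gradients are 1.0 and -2.0, so the parameters move to about 0.9 and 2.2.
print(params)
```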

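Let's look at how the true and predicted labels line up with the images. In each title, the first line is the true label and the second line is the predicted label: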

    for X, y in test_iter:
        break
    true_labels = d2l.get_fashion_mnist_labels(y.asnumpy())
    pred_labels = d2l.get_fashion_mnist_labels(net(X).argmax(axis=1).asnumpy())
    titles = [true + '\n' + pred + '\n' for true, pred in zip(true_labels, pred_labels)]
    d2l.show_fashion_mnist(X[0:9], titles[0:9])

Concise Implementation with Gluon

    import d2lzh as d2l
    from mxnet import gluon, init
    from mxnet.gluon import loss as gloss, nn

    batch_size = 200
    train_iter, test_iter = d2l.load_data_fashion_mnist(batch_size)

    net = nn.Sequential()
    net.add(nn.Dense(10))  # fully connected layer with 10 outputs
    net.initialize(init.Normal(sigma=0.01))
    loss = gloss.SoftmaxCrossEntropyLoss()  # combines softmax and cross-entropy in one function, which is numerically more stable
    trainer = gluon.Trainer(net.collect_params(), 'sgd', {'learning_rate': 0.1})
    num_epochs = 5
    # train_ch3 is the train function defined earlier; in d2l it is named train_ch3
    d2l.train_ch3(net, train_iter, test_iter, loss, num_epochs, batch_size, None, None, trainer)

Output:

    epoch 1, loss 0.7508, train acc 0.757, test acc 0.804
    epoch 2, loss 0.5546, train acc 0.815, test acc 0.810
    epoch 3, loss 0.5139, train acc 0.828, test acc 0.834
    epoch 4, loss 0.4927, train acc 0.833, test acc 0.841
    epoch 5, loss 0.4770, train acc 0.838, test acc 0.843
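
Why a combined softmax and cross-entropy function is more stable can be seen in a small sketch. Computing the probabilities first and taking the log afterwards fails when a probability underflows to 0; working with log-softmax directly (the log-sum-exp trick) avoids that. This is one common way to fuse the two steps, shown in plain Python under that assumption; it is not claimed to be how `SoftmaxCrossEntropyLoss` is implemented inside Gluon, and the function names are hypothetical.

```python
import math


def log_softmax(logits):
    """log(softmax(logits)) computed without forming the probabilities."""
    c = max(logits)
    # log-sum-exp: shift by the maximum so that every exponent is <= 0
    lse = c + math.log(sum(math.exp(v - c) for v in logits))
    return [v - lse for v in logits]


def softmax_cross_entropy(logits, label):
    """Cross-entropy of raw scores (logits) against an integer class label."""
    return -log_softmax(logits)[label]


# The probability of class 1 is exp(-1000), which underflows to 0.0 as a float,
# so a separate log(probability) step would fail; the fused form stays finite.
logits = [0.0, -1000.0]
print(softmax_cross_entropy(logits, 1))  # 1000.0
```

Note that the fused loss takes the raw outputs of the `Dense` layer, which is why the Gluon network above has no explicit softmax layer.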