[2106] Video Super-Resolution Transformer

paper

code

mathematical reasoning mainly from this paper

Content

- Abstract
- Preliminary
  - video super-resolution (VSR)
  - transformer block
- Method
  - model architecture
  - spatial-temporal convolutional self-attention (STCSA)
    - drawbacks of FCSA
    - detailed structure in STCSA
    - why STCSA is suitable
  - spatial-temporal position encoding
  - bidirectional optical flow-based feed-forward (BOFF)
- Experiment
  - result on REDS
  - result on Vimeo-90K
  - result on Vid4
  - ablation study
    - optical flow
    - STCSA layer & BOFF layer
    - number of frames

Abstract

components in traditional Transformer design and their limitations

- fully-connected self-attention (FCSA) layer neglects local information in video

  ViTs split an image into several patches (tokens), which damages local spatial information because contents (e.g. lines, edges, shapes, objects) are divided across different tokens

- token-wise feed-forward layer misaligns features between video frames and ignores feature propagation across frames

  this layer processes each input token embedding independently, without any interaction across frames

main contributions of VSR-Transformer

- spatial-temporal convolutional self-attention (STCSA) layer: exploits locality and spatial-temporal information in the data through different layers

- bidirectional optical flow-based feed-forward (BOFF) layer: uses interaction across all frame embeddings for feature propagation and alignment

Preliminary

notation

a calligraphic letter $\mathcal{X}$: a data sequence

a calligraphic letter $\mathcal{D}$: a distribution

a bold upper case letter $\mathbf{X}$: a matrix

a bold lower case letter $\mathbf{x}$: a vector

a lower case letter $x$: an element of a matrix

$[T]$: a set $\{1, ..., T\}$

$\mathbf{1}\{\cdot\}$: an indicator function, where $\mathbf{1}\{A\}=1$ if $A$ is true and $\mathbf{1}\{A\}=0$ if $A$ is false

$\mathbb{E}_{\mathcal{D}}$: an empirical expectation with respect to distribution $\mathcal{D}$

definition 1 (function distance) given a function $f: \mathbb{R}^{d\times n}\rightarrow\mathbb{R}^{d\times n}$ and a target function $f^{\ast}: \mathbb{R}^{d\times n}\rightarrow\mathbb{R}^{d\times n}$, we define the distance between these 2 functions as

$$\mathcal{L}_{f^{\ast}, \mathcal{D}}(f):=\mathbb{E}_{\mathbf{X}\sim\mathcal{D}}[l(f(\mathbf{X}), f^{\ast}(\mathbf{X}))]$$

for ground truth $Y=f^{\ast}(\mathcal{D})$, the loss is denoted by $\mathcal{L}_\mathcal{D}(f)$

definition 2 ($k$-pattern function) a function $f: \mathcal{X}\rightarrow\mathcal{Y}$ is a $k$-pattern if for some $g: \{\pm1\}^k\rightarrow\mathcal{Y}$ and index $j^{\ast}$: $f(\mathbf{x})=g(x_{j^{\ast}, ..., j^{\ast}+k})$. we say that a function $h_{\mathbf{u}, \mathbf{W}}(\mathbf{x})=\sum_{j}\langle {\mathbf{u}}^{(j)}, {\mathbf{v}}_{\mathbf{W}}^{(j)}\rangle$ can learn a $k$-pattern function from a feature ${\mathbf{v}}_{\mathbf{W}}^{(j)}$ of data $\mathbf{x}$ with a layer ${\mathbf{u}}^{(j)}\in\mathbb{R}^q$ if, for $\epsilon>0$, we have

$$\mathcal{L}_{f^{\ast}, \mathcal{D}}(h_{\mathbf{u}, \mathbf{W}})\leq\epsilon$$

the feature ${\mathbf{v}}_{\mathbf{W}}^{(j)}$ is learned by a convolutional attention network or a fully connected attention network parameterized by $\mathbf{W}$

$\implies$ a function that captures the locality of data should be able to learn a $k$-pattern function

video super-resolution (VSR)

given a LR video sequence $\{V_1, ..., V_T\}\sim\mathcal{D}$, where $V_t\in\mathbb{R}^{3\times H\times W}$ is the $t$-th LR frame and $\mathcal{D}$ is a distribution of videos

extract features $\mathcal{X}=\{X_1, ..., X_T\}$ from LR video frames, where $X_t\in\mathbb{R}^{C\times H\times W}$ is the $t$-th feature

learn a non-linear mapping $F$ to reconstruct HR frames $\widehat{\mathcal{Y}}$ by utilizing spatial-temporal information across the sequence

$$\widehat{\mathcal{Y}}\triangleq(\widehat{Y}_1, ..., \widehat{Y}_T)=F(X_1, ..., X_T)$$

given ground-truth HR frames $\mathcal{Y}=\{Y_1, ..., Y_T\}$, where $Y_t$ is the $t$-th HR frame

minimize a loss function between the generated HR frame $\widehat{Y}_t$ and the ground-truth HR frame $Y_t$

$$\widehat{F}=\underset{F}{\arg\min}\,\mathcal{L}_\mathcal{D}(F)\triangleq\widehat{\mathbb{E}}_{\mathcal{D}, t\in[T]}[d(\widehat{Y}_t, Y_t)]$$

where $d(\cdot, \cdot)$ is a distance metric, such as L1-loss, L2-loss, or Charbonnier loss
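As a rough illustration of the distance metric $d(\cdot, \cdot)$, the sketch below implements L1, L2 and Charbonnier losses on flat lists of pixel values. The function names and the smoothing constant `eps=1e-3` are assumptions made for this example; the notes do not state the value used in the paper.

```python
import math

def l1_loss(pred, target):
    """Mean absolute error between two equally long sequences."""
    return sum(abs(p - t) for p, t in zip(pred, target)) / len(pred)

def l2_loss(pred, target):
    """Mean squared error between two equally long sequences."""
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)

def charbonnier_loss(pred, target, eps=1e-3):
    """Smooth L1-like loss: mean of sqrt((p - t)^2 + eps^2).

    eps is an assumed smoothing constant, not taken from the notes.
    """
    return sum(math.sqrt((p - t) ** 2 + eps ** 2)
               for p, t in zip(pred, target)) / len(pred)

# Example: compare the three metrics on a tiny "frame"
pred = [0.2, 0.5, 0.9]
target = [0.0, 0.5, 1.0]
print(l1_loss(pred, target), l2_loss(pred, target), charbonnier_loss(pred, target))
```

For large errors the Charbonnier loss behaves like L1, while near zero it stays differentiable thanks to `eps`.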

for VSR tasks, a sequence model can be used, such as an RNN, LSTM, or Transformer

note that the Transformer has gained particular interest since it avoids recursion and thus allows parallel computing in practice

transformer block

given an input feature $X\in\mathbb{R}^{d\times n}$ ($d$-dimensional embeddings of $n$ tokens)

a transformer block is a sequence-to-sequence function, mapping a sequence in $\mathbb{R}^{d\times n}$ to another sequence in $\mathbb{R}^{d\times n}$

it consists of 2 parts; one is a self-attention layer with a skip connection

$$f_1(X)=LN\left(X+\sum_{i=1}^hW_o^i(W_v^iX)\,SoftMax\left((W_k^iX)^T(W_q^iX)\right)\right)$$

where $W_o^i\in\mathbb{R}^{d\times m}$ is a linear layer, $W_v^i, W_k^i, W_q^i\in\mathbb{R}^{m\times d}$ are linear layers mapping the feature to value, key, and query, $h$ is the number of heads, and $m$ is the head size

the other is a token-wise feed-forward layer with a skip connection

$$f_2(X)=LN\left(f_1(X)+W_2\,ReLU(W_1f_1(X)+b_1\mathbf{1}_n^T)+b_2\mathbf{1}_n^T\right)$$

where $W_1\in\mathbb{R}^{r\times d}, W_2\in\mathbb{R}^{d\times r}$ are linear layers, $b_1\in\mathbb{R}^r, b_2\in\mathbb{R}^d$ are biases, and $r$ is the hidden layer size of the feed-forward layer
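The two formulas above can be traced with a minimal pure-Python sketch. It keeps the $d\times n$ layout (rows are features, columns are tokens). Two choices here are assumptions rather than details stated in the notes: the softmax is applied column-wise, and layer normalization is applied per token without learned scale or shift. All helper names are hypothetical.

```python
import math

def matmul(a, b):
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def transpose(a):
    return [list(col) for col in zip(*a)]

def add(a, b):
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]

def softmax_columns(a):
    # Assumed convention: every column is normalised to sum to 1
    cols = []
    for col in zip(*a):
        m = max(col)
        e = [math.exp(v - m) for v in col]
        s = sum(e)
        cols.append([v / s for v in e])
    return transpose(cols)

def layer_norm(x, eps=1e-5):
    # Normalise each token (column) over its d features; no learned affine
    cols = []
    for col in zip(*x):
        mu = sum(col) / len(col)
        var = sum((v - mu) ** 2 for v in col) / len(col)
        cols.append([(v - mu) / math.sqrt(var + eps) for v in col])
    return transpose(cols)

def self_attention_layer(x, heads):
    """f_1: heads is a list of (W_o, W_v, W_k, W_q); W_o is d x m, the rest m x d."""
    out = x
    for w_o, w_v, w_k, w_q in heads:
        scores = matmul(transpose(matmul(w_k, x)), matmul(w_q, x))  # n x n
        attended = matmul(matmul(w_v, x), softmax_columns(scores))  # m x n
        out = add(out, matmul(w_o, attended))                       # d x n
    return layer_norm(out)

def feed_forward_layer(y, w1, b1, w2, b2):
    """f_2 applied to y = f_1(X): the same 2-layer MLP for every token."""
    hidden = [[max(0.0, v + b) for v in row] for row, b in zip(matmul(w1, y), b1)]
    ff = [[v + b for v in row] for row, b in zip(matmul(w2, hidden), b2)]
    return layer_norm(add(y, ff))

# Example with d = 2 features, n = 3 tokens, one head of size m = 2
x = [[1.0, 2.0, 3.0], [0.0, 1.0, 0.0]]
eye = [[1.0, 0.0], [0.0, 1.0]]
y = self_attention_layer(x, [(eye, eye, eye, eye)])
z = feed_forward_layer(y, [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]], [0.0] * 3,
                       [[1.0, 0.0, 0.5], [0.0, 1.0, 0.5]], [0.0] * 2)
print(len(z), len(z[0]))  # shape stays d x n
```

Note that `feed_forward_layer` never mixes columns, which is exactly the token-wise behaviour the notes identify as a limitation for video.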

Method

model architecture

The framework of video super-resolution Transformer. Given a low-resolution (LR) video, we first use an extractor to capture features of the LR videos. Then, a spatial-temporal convolutional self-attention and an optical flow-based feed-forward network model a sequence of continuous representations. Note that these two layers both have skip connections. Last, the reconstruction network restores a high-resolution video from the representations and the up-sampling frames.

feature extractor: captures features from LR input

transformer: maps features to a sequence of continuous representations

reconstruction: restores HR videos from representations

loss function: Charbonnier loss

Network architecture of the feature extractor and reconstruction network.

T: number of frames, C: number of channels, H: image height, W: image width

I: number of input channels, O: number of output channels

CONV: convolution, with K: kernel size, S: stride, P: padding, G: groups

PixelShuffle: pixel shuffle with an upscale factor of 2

LeakyReLU: Leaky ReLU activation function with a negative slope of 0.01
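To make the two less familiar building blocks in this legend concrete, here is a small sketch of pixel shuffle and Leaky ReLU on nested Python lists. The channel ordering follows the common sub-pixel convolution layout (output pixel `(y*r + i, x*r + j)` of channel `c` comes from input channel `c*r*r + i*r + j`); treating that as the layout used in the reconstruction network is an assumption.

```python
def leaky_relu(v, slope=0.01):
    """Leaky ReLU with the negative slope given in the legend."""
    return v if v >= 0 else slope * v

def pixel_shuffle(x, r=2):
    """Rearrange a (C*r*r) x H x W tensor into C x (H*r) x (W*r)."""
    c_in, h, w = len(x), len(x[0]), len(x[0][0])
    assert c_in % (r * r) == 0, "channels must be divisible by r^2"
    c_out = c_in // (r * r)
    out = [[[0.0] * (w * r) for _ in range(h * r)] for _ in range(c_out)]
    for c in range(c_out):
        for i in range(r):
            for j in range(r):
                src = x[c * r * r + i * r + j]
                for y in range(h):
                    for col in range(w):
                        out[c][y * r + i][col * r + j] = src[y][col]
    return out

# Example: four 1x1 channels become one 2x2 channel
print(pixel_shuffle([[[0]], [[1]], [[2]], [[3]]], r=2))
```

With an upscale factor of 2, two such stages would give the $4\times$ enlargement evaluated in the experiments, assuming the network stacks them.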

spatial-temporal convolutional self-attention (STCSA)

drawbacks of FCSA

Q: can an FCSA layer learn $k$-patterns with gradient descent?

theorem 1 assume $m=1$ and $\vert u_i\vert\leq1$, that the weights are initialized from some permutation invariant distribution over $\mathbb{R}^n$, and that for all $\mathbf{x}$ we have $h_{\mathbf{u}, \mathbf{W}}^{FCSA}\in[-1, 1]$, which satisfies definition 2. then the following holds

$$\mathbb{E}_{\mathbf{W}\sim\mathcal{W}}\left\Vert\frac\partial{\partial\mathbf{W}}\mathcal{L}_{f, \mathcal{D}}(h_{\mathbf{u}, \mathbf{W}}^{FCSA})\right\Vert_2^2\leq qn\min\left\{ \dbinom{n-1}{k}^{-1}, \dbinom{n-1}{k-1}^{-1}\right\}$$

from theorem 1:

- the initial gradient is small if $k=\Omega(\log{n})$ and the fully connected attention layer is initialized from a permutation invariant distribution

- the fully connected attention layer results in vanishing gradients if $q$ is not large enough

- gradient descent will be “stuck” upon initialization, and is thus unable to learn a $k$-pattern function

$\implies$ the FCSA layer cannot use the spatial information of each frame, since local information is not encoded in the embeddings of all tokens
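To get a feel for how small the right-hand side of theorem 1 becomes, the sketch below simply evaluates $qn\min\{\binom{n-1}{k}^{-1}, \binom{n-1}{k-1}^{-1}\}$ for a few settings. The function name and the sample values of $n$, $k$, $q$ are illustrative assumptions, not numbers from the paper.

```python
from math import comb, log2

def fcsa_gradient_bound(n, k, q):
    """Right-hand side of theorem 1: q * n * min(1/C(n-1, k), 1/C(n-1, k-1))."""
    return q * n * min(1 / comb(n - 1, k), 1 / comb(n - 1, k - 1))

# Example: take k close to log2(n) and watch the bound as n grows
for n in (16, 64, 256, 1024):
    k = int(log2(n))
    print(n, k, fcsa_gradient_bound(n, k, q=64))
```

For a fixed width $q$, the printed bound drops rapidly with $n$, which is the sense in which gradient descent is "stuck" at initialization.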

detailed structure in STCSA

Illustration of the spatial-temporal convolutional self-attention. The unfold operation is to extract sliding local patches from a batched input feature map, while the fold operation is to combine an array of sliding local patches into a large feature map.

given feature maps of input video frames $X\in\mathbb{R}^{T\times C\times H\times W}$

step 1 capture spatial information of each frame in $X$

$$X\in\mathbb{R}^{T\times C\times H\times W}\xrightarrow{W_q, W_k, W_v}Q, K, V\in\mathbb{R}^{T\times C\times H\times W}$$

where $W_q, W_k, W_v$ are 3 independent conv layers

step 2 unfold features into sliding local $H_p\times W_p$-size patches in each frame, and reshape into query, key, and value matrices

$$Q, K, V\in\mathbb{R}^{T\times C\times H\times W}\xrightarrow{unfold}\mathbb{R}^{T\times CH_pW_p\times\frac{HW}{H_pW_p}}\xrightarrow{reshape}\mathbb{R}^{n\_heads\times\frac{CH_pW_p}{n\_heads}\times T\frac{HW}{H_pW_p}}$$

where $n\_patches=\frac{HW}{H_pW_p}$ is the number of patches in each frame, $dim=CH_pW_p$ is the dimension of each patch, and $n\_heads$ is the number of heads

step 3 calculate the similarity matrix and aggregate it with the value matrix to obtain the attention output

$$Attention(Q, K, V)=softmax\left(\frac{Q^TK}{\sqrt{d}}\right)V^T\in\mathbb{R}^{n\_heads\times T\frac{HW}{H_pW_p}\times\frac{CH_pW_p}{n\_heads}}$$

where $d=\frac{CH_pW_p}{n\_heads}$ is the hidden dimension

note that the similarity matrix $Q^TK\in\mathbb{R}^{n\_heads\times T\frac{HW}{H_pW_p}\times T\frac{HW}{H_pW_p}}$ relates all embedding tokens across all video frames

step 4 reshape the attention output, and fold the tensors of updated sliding local patches into features

$$Attention\in\mathbb{R}^{n\_heads\times T\frac{HW}{H_pW_p}\times\frac{CH_pW_p}{n\_heads}}\xrightarrow{reshape}\mathbb{R}^{T\times CH_pW_p\times\frac{HW}{H_pW_p}}\xrightarrow{fold}\mathbb{R}^{T\times C\times H\times W}$$

step 5 obtain the final features, and produce the output with a skip connection and a normalization

$$Attention\in\mathbb{R}^{T\times C\times H\times W}\xrightarrow{W_o}F\in\mathbb{R}^{T\times C\times H\times W}$$

$$f_1(X)=LN(X+F)\in\mathbb{R}^{T\times C\times H\times W}$$

where $W_o$ is a conv layer

steps 2 to 4 are inspired by COLA-Net

summarizing the steps above, STCSA is formulated as

$$f_1(X)=LN\left(X+\sum_{i=1}^hW_o^i\kappa_2\left(\underbrace{\kappa_1(W_v^iX)}_\text{v}\,softmax\left({\underbrace{\kappa_1(W_k^iX)}_\text{k}}^T\underbrace{\kappa_1(W_q^iX)}_\text{q}\right)\right)\right)$$

where $\kappa_1(\cdot), \kappa_2(\cdot)$ are the unfold and fold operations, and $h$ is the number of heads, which is set to $h=1$ for good performance
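The unfold ($\kappa_1$) and fold ($\kappa_2$) operations and the tensor shapes in the steps above can be checked with a small pure-Python sketch for a single frame. It assumes non-overlapping patches (stride equal to the patch size), which is what the patch count $\frac{HW}{H_pW_p}$ implies, and it orders each patch vector channel-first. The function names are hypothetical.

```python
def unfold(x, hp, wp):
    """kappa_1 for one frame: C x H x W -> (C*hp*wp) x n_patches (non-overlapping)."""
    c, h, w = len(x), len(x[0]), len(x[0][0])
    assert h % hp == 0 and w % wp == 0, "patch size must divide the frame size"
    cols = []
    for top in range(0, h, hp):
        for left in range(0, w, wp):
            cols.append([x[ch][top + i][left + j]
                         for ch in range(c) for i in range(hp) for j in range(wp)])
    # Transpose so rows index patch entries and columns index patches
    return [list(row) for row in zip(*cols)]

def fold(patches, c, h, w, hp, wp):
    """kappa_2 for one frame: inverse of unfold for non-overlapping patches."""
    out = [[[0.0] * w for _ in range(h)] for _ in range(c)]
    p = 0
    for top in range(0, h, hp):
        for left in range(0, w, wp):
            r = 0
            for ch in range(c):
                for i in range(hp):
                    for j in range(wp):
                        out[ch][top + i][left + j] = patches[r][p]
                        r += 1
            p += 1
    return out

def stcsa_shapes(t, c, h, w, hp, wp, n_heads):
    """Shape bookkeeping for steps 2 and 3."""
    n_patches = (h * w) // (hp * wp)
    dim = c * hp * wp
    d = dim // n_heads
    n_tokens = t * n_patches
    return {
        "unfolded": (t, dim, n_patches),
        "qkv": (n_heads, d, n_tokens),
        "similarity": (n_heads, n_tokens, n_tokens),  # Q^T K
        "attended": (n_heads, n_tokens, d),           # softmax(Q^T K / sqrt(d)) V^T
    }

# Example: one 4x4 single-channel frame split into 2x2 patches, then restored
frame = [[[r * 4 + col for col in range(4)] for r in range(4)]]
patches = unfold(frame, 2, 2)
assert fold(patches, 1, 4, 4, 2, 2) == frame
print(stcsa_shapes(t=5, c=64, h=64, w=64, hp=8, wp=8, n_heads=1))
```

Because the token axis has length $T\cdot\frac{HW}{H_pW_p}$, every patch attends to patches from all frames, while each token still holds a spatially contiguous block of pixels.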

why STCSA is suitable

Q: how does an STCSA layer learn $k$-patterns with gradient descent?

theorem 2 assume each element of the weights is initialized uniformly from $\{\pm\frac1k\}$. fix some $\delta>0$, some $k$-pattern $f$ and some distribution $\mathcal{D}$. then if $q>2^{k+3}\log(\frac{2^k}\delta)$, and letting $h_{\mathbf{u}^{(s)}, \mathbf{W}^{(s)}}^{STCSA}$ be a function satisfying definition 2, with probability at least $1-\delta$ over the initialization, when training a spatial-temporal convolutional self-attention layer using gradient descent with step size $\eta$, we have

$$\frac{1}{S}\sum_{s=1}^S\mathcal{L}_{f, \mathcal{D}}(h_{\mathbf{u}^{(s)}, \mathbf{W}^{(s)}}^{STCSA})\leq{\eta}^2S^2nk^{\frac52}2^{k+1}+\frac{k^22^{2k+1}}{q\eta S}+\eta nqk$$

from theorem 2:

- the loss $\mathcal{L}_{f, \mathcal{D}}(h_{\mathbf{u}^{(s)}, \mathbf{W}^{(s)}}^{STCSA})$ will be small within a finite number of optimization steps $S$, so the layer is able to learn a $k$-pattern function

$\implies$ an STCSA layer trained with gradient descent can capture the locality of each frame
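The quantities in theorem 2 can be evaluated directly to see how the three terms trade off. This sketch assumes the logarithm is natural (the notes do not say which base is meant), and the function names are hypothetical.

```python
import math

def min_width(k, delta):
    """Width threshold from theorem 2: q must exceed 2^(k+3) * log(2^k / delta)."""
    return 2 ** (k + 3) * math.log(2 ** k / delta)  # natural log is an assumption

def stcsa_loss_bound(n, k, q, eta, steps):
    """Right-hand side of theorem 2 for step size eta and S = steps iterations."""
    term_growth = eta ** 2 * steps ** 2 * n * k ** 2.5 * 2 ** (k + 1)
    term_decay = k ** 2 * 2 ** (2 * k + 1) / (q * eta * steps)
    term_width = eta * n * q * k
    return term_growth + term_decay + term_width

# Example: width needed for k = 3 with failure probability 0.05
print(min_width(k=3, delta=0.05))
```

The second term shrinks as $S$ grows while the first grows, so for a given $\eta$ the bound is smallest at an intermediate, finite number of steps.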

spatial-temporal position encoding

VSRT is permutation-invariant, and thus requires precise spatial-temporal position information

3D fixed position encoding: 2 spatial positions (horizontal, vertical) and 1 temporal position

$$PE(pos, i)=\begin{cases} \sin(pos\cdot{\alpha}_k) &\text{if } i=2k \\ \cos(pos\cdot{\alpha}_k) &\text{if } i=2k+1 \end{cases}$$

where ${\alpha}_k=1/1000^{2k/\frac{d}3}$, $k$ is an integer in $[0, \frac{d}6)$, $pos$ is the position in the corresponding dimension, and $d$ is the channel dimension size
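A minimal sketch of this 3D encoding follows. Each of the three axes gets $\frac{d}3$ channels, filled with interleaved sine/cosine pairs. The base defaults to 1000 as written in these notes (the standard sinusoidal encoding uses 10000, so it is left as a parameter), and the concatenation order (temporal, vertical, horizontal) is an assumption.

```python
import math

def axis_encoding(pos, d_axis, base=1000.0):
    """Sin/cos encoding of one coordinate using d_axis channels."""
    enc = []
    for k in range(d_axis // 2):
        alpha = 1.0 / base ** (2 * k / d_axis)
        enc.append(math.sin(pos * alpha))  # channel i = 2k
        enc.append(math.cos(pos * alpha))  # channel i = 2k + 1
    return enc

def position_encoding_3d(t, y, x, d, base=1000.0):
    """Concatenate temporal, vertical and horizontal encodings into d channels."""
    assert d % 6 == 0, "d must split into three axes of sin/cos pairs"
    d_axis = d // 3
    return (axis_encoding(t, d_axis, base)
            + axis_encoding(y, d_axis, base)
            + axis_encoding(x, d_axis, base))

# Example: encode frame 2, row 5, column 7 with d = 12 channels
print(position_encoding_3d(2, 5, 7, d=12))
```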

bidirectional optical flow-based feed-forward (BOFF)

Illustration of the bidirectional optical flow-based feed-forward layer. Given a video sequence, we first bidirectionally estimate the forward and backward optical flows and warp the feature maps with the corresponding optical flows. Then we learn a forward and backward propagation network to produce two sequences of features from concatenated warped features and LR frames. Last, we fuse these two feature sequences into one feature sequence.

given features $X\in\mathbb{R}^{T\times C\times H\times W}$ output by the STCSA layer

step 1 learn bidirectional optical flows between neighboring frames

$$\overleftarrow{O}_t=\begin{cases} spy(V_1, V_1) &\text{if } t=1 \\ spy(V_{t-1}, V_t) &\text{if } t\in(1, T] \end{cases}, \quad \overrightarrow{O}_t=\begin{cases} spy(V_{t+1}, V_t) &\text{if } t\in[1, T) \\ spy(V_T, V_T) &\text{if } t=T \end{cases}$$

where $\overleftarrow{O}, \overrightarrow{O}\in\mathbb{R}^{T\times2\times H\times W}$ are the backward and forward optical flows; $spy(\cdot, \cdot)$ is a flow estimator such as SPyNet, which is pre-trained and updated during training
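The case analysis above only decides which pair of frames is passed to the flow estimator at each time step. A short sketch of that indexing, using 1-based frame indices as in the formula (the function name is hypothetical):

```python
def flow_pairs(num_frames):
    """Return (backward, forward) lists of (source, target) frame indices.

    Entry t-1 of each list holds the arguments of spy(., V_t); boundary
    frames are paired with themselves, as in the formula.
    """
    frames = range(1, num_frames + 1)
    backward = [(max(t - 1, 1), t) for t in frames]
    forward = [(min(t + 1, num_frames), t) for t in frames]
    return backward, forward

# Example with T = 5 frames
backward, forward = flow_pairs(5)
print(backward)
print(forward)
```

Pairing a boundary frame with itself keeps both flow tensors at length $T$, which matches the stated shape $T\times2\times H\times W$.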

step 2 obtain bidirectional features along with backward and forward propagation

$$\overleftarrow{X}=warp(X, \overleftarrow{O}), \quad \overrightarrow{X}=warp(X, \overrightarrow{O})$$

where $\overleftarrow{X}, \overrightarrow{X}\in\mathbb{R}^{T\times C\times H\times W}$ are the backward and forward features
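The notes do not spell out $warp(\cdot, \cdot)$. A common choice is backward warping with bilinear sampling: each output pixel reads the feature map at its own position displaced by the flow. The sketch below does this for one channel on nested lists; the sampling convention, the `(dx, dy)` flow layout and the border clamping are all assumptions for illustration.

```python
import math

def warp(feature, flow):
    """Bilinear backward warp of an H x W map by a per-pixel (dx, dy) flow."""
    h, w = len(feature), len(feature[0])

    def at(y, x):
        # Clamp coordinates to the border (assumed padding behaviour)
        return feature[min(max(y, 0), h - 1)][min(max(x, 0), w - 1)]

    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            dx, dy = flow[y][x]
            sx, sy = x + dx, y + dy                 # sampling position
            x0, y0 = math.floor(sx), math.floor(sy)
            ax, ay = sx - x0, sy - y0               # interpolation weights
            top = (1 - ax) * at(y0, x0) + ax * at(y0, x0 + 1)
            bottom = (1 - ax) * at(y0 + 1, x0) + ax * at(y0 + 1, x0 + 1)
            out[y][x] = (1 - ay) * top + ay * bottom
    return out

# Example: shift a 2x3 feature map by half a pixel horizontally
feature = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]]
flow = [[(0.5, 0.0)] * 3 for _ in range(2)]
print(warp(feature, flow))
```

A zero flow returns the input unchanged, so the boundary cases $spy(V_1, V_1)$ and $spy(V_T, V_T)$ would leave the corresponding features roughly untouched if the estimated self-flow is near zero.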

step 3 aggregate frames and warped features, and feed them into 2-layer CNNs for backward and forward propagation

$$f_2(X)=LN\left(f_1(X)+fusion\left(\overleftarrow{W_1}ReLU(\overleftarrow{W_2}[V, \overleftarrow{X}])+\overrightarrow{W_1}ReLU(\overrightarrow{W_2}[V, \overrightarrow{X}])\right)\right)$$

where $[\cdot, \cdot]$ is an aggregation operator, and $\overleftarrow{W_1}, \overleftarrow{W_2}, \overrightarrow{W_1}, \overrightarrow{W_2}$ are the weights of the backward and forward networks

the 2-layer networks can be extended to multi-layer networks

$$f_2(X)=LN\left(f_1(X)+fusion\left(R_1(V, \overleftarrow{X})+R_2(V, \overrightarrow{X})\right)\right)$$

where $R_1, R_2$ are flexible networks

Experiment

dataset

| dataset | resolution | training set | testing set |
|---|---|---|---|
| REDS | $1280\times720$ | 266 clips | REDS4: 4 clips |
| Vimeo-90K | $448\times256$ | 64,612 clips | Vimeo-90K-T: 7,824 clips |
| Vid4 | $720\times480$ | - | 4 clips, each 34 frames |

experiment detail

- degradation: bicubic down-sampling (BI)

- input: $64\times64$-size, 5 or 7 frames

- data augmentation: random horizontal flipping, random $90^{\circ}$ rotation

- frame: normalized to $448\times256$-size

- optimizer: Adam with $\beta_1=0.9, \beta_2=0.99$, batch size 2 per GPU, 600K iterations

- learning rate: initial 2e-4, cosine decay to 1e-7
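The learning-rate setting in the list above can be written as a one-line schedule. This sketch assumes a single cosine cycle over all 600K iterations with no warm-up or restarts, which the notes do not specify; the function name is hypothetical.

```python
import math

def cosine_lr(step, total_steps=600_000, lr_max=2e-4, lr_min=1e-7):
    """Cosine decay from lr_max at step 0 to lr_min at total_steps."""
    progress = min(max(step / total_steps, 0.0), 1.0)
    return lr_min + 0.5 * (lr_max - lr_min) * (1 + math.cos(math.pi * progress))

# Example: learning rate at the start, middle and end of training
for step in (0, 300_000, 600_000):
    print(step, cosine_lr(step))
```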

result on REDS

Quantitative comparison (PSNR/SSIM) on REDS4 for $4\times$ VSR. The results are tested on RGB channels. Red and blue indicate the best and the second best performance, respectively. “$\dag$” means a method trained on 5 frames for a fair comparison.

Qualitative comparison on the REDS4 dataset for $4\times$ VSR. Zoom in for the best view.

key findings

- VSRT achieves the highest PSNR and comparable SSIM

- when trained with 5 frames, BasicVSR and IconVSR are worse than EDVR

  $\implies$ BasicVSR and IconVSR rely heavily on aggregating long-term sequence information

- 64-channel VSRT performs better than 128-channel EDVR-L

- VSRT is able to recover finer details and sharper edges

result on Vimeo-90K

Quantitative comparison (PSNR/SSIM) on Vimeo-90K-T for $4\times$ VSR. Red and blue indicate the best and the second best performance, respectively.

Qualitative comparison on Vimeo-90K-T for $4\times$ VSR. Zoom in for the best view.

key findings

- VSRT achieves the highest PSNR and SSIM

- the generalization ability of VSRT on Vid4 is better than EDVR but worse than BasicVSR and IconVSR

  $\impliedby$ BasicVSR and IconVSR are tested on all frames, while VSRT and EDVR are tested on 7 frames

  $\impliedby$ there is a distribution bias between Vimeo-90K-T and Vid4

- VSRT is able to generate sharp and realistic HR frames

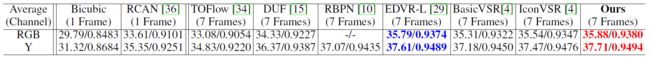

result on Vid4

Quantitative comparison (PSNR/SSIM) on Vid4 for 4x VSR. Red and blue indicate the best and the second best performance, respectively. “Y” denotes the evaluation on Y channels.

Quantitative comparison (PSNR/SSIM) on Vid4 for $4\times$ VSR. Red and blue indicate the best and the second best performance, respectively. “Y” denotes the evaluation on Y channels. “$\dag$” means a method trained and tested on 7 frames for a fair comparison.

Qualitative comparison on Vid4 for $4\times$ VSR. Zoom in for the best view.

ablation study

optical flow

w/o optical flow: replace SPyNet in BOFF layer with a stack of Residual ReLU networks

Ablation study on REDS for $4\times$ VSR. Here, “w/o” and “w/ optical flow” mean the VSR-Transformer without and with the optical flow, respectively. Zoom in for the best view.

optical flow is important in the BOFF layer and helps feature propagation and alignment

STCSA layer & BOFF layer

w/o STCSA: remove STCSA layer

w/o BOFF: replace BOFF layer with a stack of Residual ReLU networks

Ablation study on REDS for $4\times$ VSR. Here, “w/o STCSA” and “w/o BOFF” mean the VSR-Transformer without the spatial-temporal convolutional self-attention (STCSA) layer and bidirectional optical flow-based feed-forward (BOFF) layer, respectively.

the STCSA layer exploits the locality of data and fuses information among different frames

the BOFF layer helps to perform feature propagation and alignment

number of frames

w/ 3 frames: train model with 3 frames

training with more frames helps to restore missing information from neighboring frames

![[2106] Video Super-Resolution Transformer, image 1](http://img.e-com-net.com/image/info8/668403b979eb4f00a3e374ae44b9a631.jpg)

![[2106] Video Super-Resolution Transformer, image 2](http://img.e-com-net.com/image/info8/e48ae7c52a7443f0ab8e942dc3d11a19.jpg)

![[2106] Video Super-Resolution Transformer, image 3](http://img.e-com-net.com/image/info8/e315f51c98e5461fb7e3c78de479f4f8.jpg)

![[2106] Video Super-Resolution Transformer, image 4](http://img.e-com-net.com/image/info8/8d4a56c94b8f4b1bb5f6577d4cc9d9e8.jpg)

![[2106] Video Super-Resolution Transformer, image 5](http://img.e-com-net.com/image/info8/4a0972aab7904048a15fe06c718e4c5f.jpg)

![[2106] Video Super-Resolution Transformer, image 6](http://img.e-com-net.com/image/info8/81f703584b5e43a4810976d551af0f98.jpg)

![[2106] Video Super-Resolution Transformer, image 7](http://img.e-com-net.com/image/info8/68b78cf618be46529167163fdc2ca94f.jpg)

![[2106] Video Super-Resolution Transformer, image 8](http://img.e-com-net.com/image/info8/b69c050848964ca3a205d3519a2bfbab.jpg)

![[2106] Video Super-Resolution Transformer, image 9](http://img.e-com-net.com/image/info8/b287e882c2034e88b9ef1fb44515684c.jpg)

![[2106] Video Super-Resolution Transformer, image 10](http://img.e-com-net.com/image/info8/877b9679d34a4eaca5cb18d72490134b.jpg)