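[Deep Learning] A Collection of Learning Rate Schedules

Deep learning offers many learning rate schedules. This article collects 16 of them from PyTorch and PaddlePaddle, with example code and a plot of the resulting learning rate curve for each.

Contents

I. LambdaLR

II. MultiplicativeLR

III. StepLR

IV. MultiStepLR

V. ConstantLR

VI. LinearLR

VII. ExponentialLR

VIII. CosineAnnealingLR

IX. CosineAnnealingWarmRestarts

X. CyclicLR

XI. ReduceLROnPlateau

XII. InverseTimeDecay

XIII. PolynomialDecay

XIV. PiecewiseDecay

XV. NoamDecay

XVI. LinearWarmup

References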

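I. LambdaLR

LambdaLR takes a function (typically a lambda) at initialization. The learning rate at each epoch equals the initial learning rate multiplied by the function's return value for that epoch.

Code: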

import torch
import matplotlib.pyplot as plt

epoches = 100
learning_rate = 0.1

# Multiplier is 0 for epochs 0-29, 1 for epochs 30-59, and so on.
lambda1 = lambda epoch: epoch // 30
# Alternative multiplier (not used below): exponential decay.
lambda2 = lambda epoch: 0.95 ** epoch

model = [torch.nn.Parameter(torch.randn(2, 2, requires_grad=True))]
optimizer = torch.optim.SGD(model, learning_rate)
scheduler = torch.optim.lr_scheduler.LambdaLR(optimizer=optimizer, lr_lambda=[lambda1])

e = list(range(epoches))
lrs = []
for epoch in range(epoches):
    optimizer.step()
    scheduler.step()
    # get_last_lr() returns one value per parameter group; there is only one here.
    lrs.append(scheduler.get_last_lr()[0])

plt.plot(e, lrs)
plt.show()

Result:

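II. MultiplicativeLR

MultiplicativeLR also takes a function at initialization, but here the learning rate equals the previous step's learning rate multiplied by the function's return value.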
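The difference between the LambdaLR rule and the MultiplicativeLR rule is easy to miss, so here is a minimal plain-Python sketch of both. The helper names `lambda_lr` and `multiplicative_lr` are made up for illustration, and the sketch assumes the multiplicative factor is first applied at epoch 1.

```python
def lambda_lr(base_lr, fn, epoch):
    """LambdaLR-style rule: the factor always scales the initial learning rate."""
    return base_lr * fn(epoch)


def multiplicative_lr(base_lr, fn, epoch):
    """MultiplicativeLR-style rule: the factor scales the previous learning rate."""
    lr = base_lr
    for t in range(1, epoch + 1):
        lr *= fn(t)
    return lr


constant_factor = lambda epoch: 0.9

# With a constant factor, the first rule stays flat and the second keeps decaying.
print(lambda_lr(0.1, constant_factor, 3))          # 0.1 * 0.9
print(multiplicative_lr(0.1, constant_factor, 3))  # 0.1 * 0.9 ** 3
```

With a constant factor of 0.9, the multiplicative rule behaves like an exponential decay, which is why the two schedulers produce very different curves from the same function.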

Code:

import torch
import matplotlib.pyplot as plt

epoches = 100
learning_rate = 0.1

# Constant factor: the learning rate shrinks by 10% every epoch.
lambda1 = lambda epoch: 0.9

model = [torch.nn.Parameter(torch.randn(2, 2, requires_grad=True))]
optimizer = torch.optim.SGD(model, learning_rate)
scheduler = torch.optim.lr_scheduler.MultiplicativeLR(optimizer=optimizer, lr_lambda=[lambda1])

e = list(range(epoches))
lrs = []
for epoch in range(epoches):
    optimizer.step()
    scheduler.step()
    print(scheduler.get_last_lr())
    # Record the learning rate of the single parameter group.
    lrs.append(scheduler.get_last_lr()[0])

plt.plot(e, lrs)
plt.show()

Result:

III. StepLR

StepLR takes a decay interval step_size: every step_size epochs, the learning rate is multiplied by the decay factor gamma.
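The step rule above has a simple closed form, sketched here in plain Python. The function name `step_lr` is hypothetical; the values mirror the settings used in this section (step_size=10, gamma=0.9).

```python
def step_lr(base_lr, gamma, step_size, epoch):
    """Learning rate after `epoch` scheduler steps under a StepLR-style rule."""
    # One decay is applied for every full block of step_size epochs.
    return base_lr * gamma ** (epoch // step_size)


for epoch in (0, 9, 10, 25):
    print(epoch, step_lr(0.1, 0.9, 10, epoch))
```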

Code:

import torch
import matplotlib.pyplot as plt

epoches = 100
learning_rate = 0.1

model = [torch.nn.Parameter(torch.randn(2, 2, requires_grad=True))]
optimizer = torch.optim.SGD(model, learning_rate)
scheduler = torch.optim.lr_scheduler.StepLR(optimizer=optimizer, step_size=10, gamma=0.9)

e = list(range(epoches))
lrs = []
for epoch in range(epoches):
    optimizer.step()
    scheduler.step()
    # Record the learning rate of the single parameter group.
    lrs.append(scheduler.get_last_lr()[0])

plt.plot(e, lrs)
plt.show()

Result:

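IV. MultiStepLR

MultiStepLR takes a list of milestone epochs; each time a milestone is reached, the learning rate is multiplied by gamma. Unlike StepLR, whose decay interval is fixed, the decay points are chosen by the user.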
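As a rough illustration of the milestone rule, the learning rate can be computed by counting how many milestones have already been reached. This is a plain-Python sketch; `multi_step_lr` is a made-up helper name, and the values mirror this section's example (milestones 10, 50, 80 and gamma=0.9).

```python
from bisect import bisect_right


def multi_step_lr(base_lr, gamma, milestones, epoch):
    """Learning rate at `epoch` under a MultiStepLR-style rule."""
    # Number of milestones that are less than or equal to the current epoch.
    num_decays = bisect_right(sorted(milestones), epoch)
    return base_lr * gamma ** num_decays


for epoch in (9, 10, 50, 99):
    print(epoch, multi_step_lr(0.1, 0.9, [10, 50, 80], epoch))
```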

Code:

import torch
import matplotlib.pyplot as plt

epoches = 100
learning_rate = 0.1

model = [torch.nn.Parameter(torch.randn(2, 2, requires_grad=True))]
optimizer = torch.optim.SGD(model, learning_rate)
scheduler = torch.optim.lr_scheduler.MultiStepLR(optimizer=optimizer, milestones=[10, 50, 80], gamma=0.9)

e = list(range(epoches))
lrs = []
for epoch in range(epoches):
    optimizer.step()
    scheduler.step()
    # Record the learning rate of the single parameter group.
    lrs.append(scheduler.get_last_lr()[0])

plt.plot(e, lrs)
plt.show()

Result:

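V. ConstantLR

ConstantLR takes a scaling factor and a number of steps total_iters. Until total_iters is reached, the learning rate equals the initial learning rate multiplied by factor; after that it returns to the initial learning rate.

Code: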

import torch
import matplotlib.pyplot as plt

epoches = 100
learning_rate = 0.1

model = [torch.nn.Parameter(torch.randn(2, 2, requires_grad=True))]
optimizer = torch.optim.SGD(model, learning_rate)
scheduler = torch.optim.lr_scheduler.ConstantLR(optimizer=optimizer, factor=0.5, total_iters=10)

e = list(range(epoches))
lrs = []
for epoch in range(epoches):
    optimizer.step()
    scheduler.step()
    # Record the learning rate of the single parameter group.
    lrs.append(scheduler.get_last_lr()[0])

plt.plot(e, lrs)
plt.show()

Result:

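VI. LinearLR

LinearLR takes a number of steps total_iters and a range for the scaling factor (start_factor to end_factor). The factor changes linearly from start_factor to end_factor over total_iters steps, and the learning rate is the initial learning rate multiplied by the current factor.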
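The linear interpolation of the factor can be written out directly. The following is a plain-Python sketch under the assumption that the factor is held at end_factor once total_iters is reached; `linear_lr` is a hypothetical helper name, and the values mirror this section's example.

```python
def linear_lr(base_lr, start_factor, end_factor, total_iters, epoch):
    """Learning rate at `epoch` under a LinearLR-style rule."""
    # Fraction of the ramp completed, capped at 1 after total_iters.
    progress = min(epoch, total_iters) / total_iters
    factor = start_factor + (end_factor - start_factor) * progress
    return base_lr * factor


for epoch in (0, 10, 20, 50):
    print(epoch, linear_lr(0.1, 0.5, 1.0, 20, epoch))
```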

Code:

import torch
import matplotlib.pyplot as plt

epoches = 100
learning_rate = 0.1

model = [torch.nn.Parameter(torch.randn(2, 2, requires_grad=True))]
optimizer = torch.optim.SGD(model, learning_rate)
scheduler = torch.optim.lr_scheduler.LinearLR(optimizer=optimizer, start_factor=0.5, end_factor=1.0, total_iters=20)

e = list(range(epoches))
lrs = []
for epoch in range(epoches):
    optimizer.step()
    scheduler.step()
    print(scheduler.get_last_lr())
    # Record the learning rate of the single parameter group.
    lrs.append(scheduler.get_last_lr()[0])

plt.plot(e, lrs)
plt.show()

Result:

VII. ExponentialLR

ExponentialLR only takes the decay factor gamma; the learning rate is multiplied by gamma every epoch.

Code:

import torch
import matplotlib.pyplot as plt

epoches = 100
learning_rate = 0.1

model = [torch.nn.Parameter(torch.randn(2, 2, requires_grad=True))]
optimizer = torch.optim.SGD(model, learning_rate)
scheduler = torch.optim.lr_scheduler.ExponentialLR(optimizer=optimizer, gamma=0.9)

e = list(range(epoches))
lrs = []
for epoch in range(epoches):
    optimizer.step()
    scheduler.step()
    print(scheduler.get_last_lr())
    # Record the learning rate of the single parameter group.
    lrs.append(scheduler.get_last_lr()[0])

plt.plot(e, lrs)
plt.show()

Result:

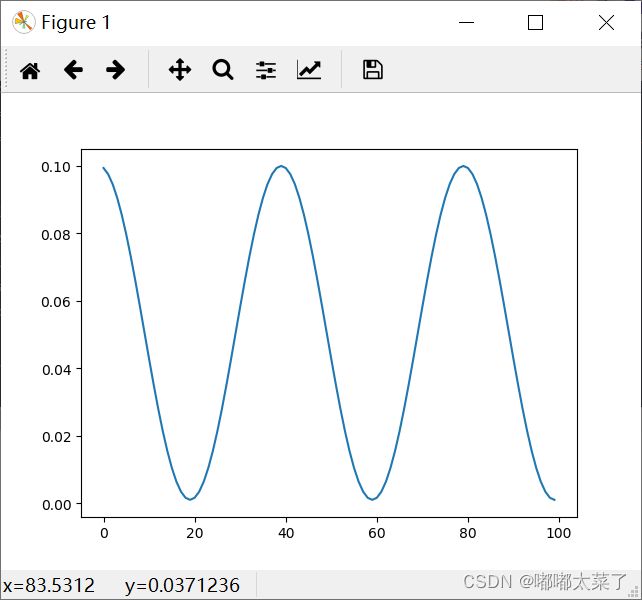

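VIII. CosineAnnealingLR

CosineAnnealingLR is the cosine annealing schedule: the learning rate follows a cosine curve from its initial value down to eta_min over T_max epochs, and climbs back up if training continues, so it varies periodically. A monotonically decaying schedule can leave the network stuck in a local optimum; the periodic rise in learning rate may help it escape.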
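To make the shape of the curve concrete, here is a plain-Python sketch of the closed-form cosine annealing expression. `cosine_annealing_lr` is a hypothetical helper, not the library's implementation; the values mirror this section's example (T_max=20, eta_min=0.001).

```python
import math


def cosine_annealing_lr(base_lr, eta_min, t_max, epoch):
    """Closed-form cosine annealing between base_lr and eta_min."""
    cosine = math.cos(math.pi * epoch / t_max)
    return eta_min + (base_lr - eta_min) * (1 + cosine) / 2


# Start, midpoint, minimum at T_max, and back at the maximum after 2 * T_max.
for epoch in (0, 10, 20, 40):
    print(epoch, cosine_annealing_lr(0.1, 0.001, 20, epoch))
```

The learning rate reaches eta_min at epoch T_max and returns to the initial value at 2 * T_max, which gives the periodic curve described above.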

Code:

import torch
import matplotlib.pyplot as plt

epoches = 100
learning_rate = 0.1

model = [torch.nn.Parameter(torch.randn(2, 2, requires_grad=True))]
optimizer = torch.optim.SGD(model, learning_rate)
scheduler = torch.optim.lr_scheduler.CosineAnnealingLR(optimizer=optimizer, T_max=20, eta_min=0.001)

e = list(range(epoches))
lrs = []
for epoch in range(epoches):
    optimizer.step()
    scheduler.step()
    print(scheduler.get_last_lr())
    # Record the learning rate of the single parameter group.
    lrs.append(scheduler.get_last_lr()[0])

plt.plot(e, lrs)
plt.show()

Result:

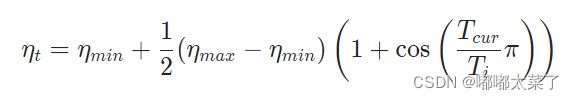

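IX. CosineAnnealingWarmRestarts

CosineAnnealingWarmRestarts differs from CosineAnnealingLR in that the learning rate jumps back up abruptly at each restart, and the length of the cosine decay cycle changes. It takes two cycle-related parameters, T_0 and T_mult: the first cycle lasts T_0 epochs, the second T_0*T_mult, the third T_0*T_mult*T_mult, and so on.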
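The growing cycle lengths determine where the restarts fall. As a small plain-Python sketch (the helper `restart_epochs` is made up for illustration), the restart points for this section's settings T_0=10 and T_mult=2 over 100 epochs can be listed like this:

```python
def restart_epochs(t_0, t_mult, total_epochs):
    """Epochs at which a new cosine cycle starts, for cycle lengths T_0, T_0*T_mult, ..."""
    restarts = []
    start, length = 0, t_0
    while start + length < total_epochs:
        start += length          # the current cycle ends here and the next one begins
        restarts.append(start)
        length *= t_mult         # each cycle is t_mult times longer than the last
    return restarts


print(restart_epochs(10, 2, 100))  # [10, 30, 70]
```

The cycles therefore last 10, 20, and 40 epochs, and the fourth cycle (80 epochs) is still in progress when training ends.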

Code:

import torch
import matplotlib.pyplot as plt

epoches = 100
learning_rate = 0.1

model = [torch.nn.Parameter(torch.randn(2, 2, requires_grad=True))]
optimizer = torch.optim.SGD(model, learning_rate)
scheduler = torch.optim.lr_scheduler.CosineAnnealingWarmRestarts(optimizer=optimizer, T_0=10, T_mult=2, eta_min=0.001)

e = list(range(epoches))
lrs = []
for epoch in range(epoches):
    optimizer.step()
    scheduler.step()
    print(scheduler.get_last_lr())
    # Record the learning rate of the single parameter group.
    lrs.append(scheduler.get_last_lr()[0])

plt.plot(e, lrs)
plt.show()

Result:

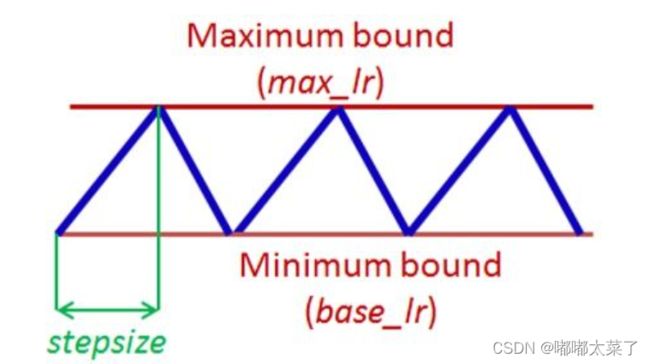

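X. CyclicLR

CyclicLR is the cyclical learning rate schedule: the learning rate cycles between base_lr and max_lr according to the configured step size. It should be stepped once per iteration (batch) rather than once per epoch. There are three modes:

triangular: triangular cycle with no amplitude scaling

triangular2: triangular cycle whose amplitude is halved each cycle

exp_range: triangular cycle whose amplitude is scaled by gamma**(cycle iterations) at each iteration (with gamma equal to 1 this is the same as triangular)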
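The three modes differ only in how the triangle's height is scaled. The following plain-Python sketch shows this for the symmetric case, assuming the rising and falling halves both last step_size iterations; `cyclic_lr` is a hypothetical helper, not the library's code.

```python
import math


def cyclic_lr(iteration, base_lr, max_lr, step_size, mode="triangular", gamma=1.0):
    """Cyclical learning rate with equal-length rising and falling halves."""
    cycle = math.floor(1 + iteration / (2 * step_size))   # 1-based cycle index
    x = abs(iteration / step_size - 2 * cycle + 1)         # 0 at the peak, 1 at the ends
    height = (max_lr - base_lr) * max(0.0, 1 - x)
    if mode == "triangular2":
        height /= 2 ** (cycle - 1)     # amplitude halves every cycle
    elif mode == "exp_range":
        height *= gamma ** iteration   # amplitude shrinks every iteration
    return base_lr + height


# Peak of the first and second cycle in each mode (step_size=100).
for mode in ("triangular", "triangular2", "exp_range"):
    print(mode, cyclic_lr(100, 0.001, 0.1, 100, mode, 0.99),
          cyclic_lr(300, 0.001, 0.1, 100, mode, 0.99))
```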

triangular code:

import torch
import matplotlib.pyplot as plt

iterations = 1000
learning_rate = 0.01

model = [torch.nn.Parameter(torch.randn(2, 2, requires_grad=True))]
optimizer = torch.optim.SGD(model, learning_rate)
scheduler = torch.optim.lr_scheduler.CyclicLR(
    optimizer=optimizer,
    base_lr=0.001,
    max_lr=0.1,
    step_size_up=100,
    step_size_down=None,
    mode='triangular',
    gamma=0.9,  # only used in 'exp_range' mode
    scale_fn=None,
)

e = list(range(iterations))
lrs = []
# CyclicLR is stepped once per iteration (batch).
for iteration in range(iterations):
    optimizer.step()
    scheduler.step()
    print(scheduler.get_last_lr())
    lrs.append(scheduler.get_last_lr()[0])

plt.plot(e, lrs)
plt.show()

triangular result:

triangular2 code:

import torch
import matplotlib.pyplot as plt

iterations = 1000
learning_rate = 0.01

model = [torch.nn.Parameter(torch.randn(2, 2, requires_grad=True))]
optimizer = torch.optim.SGD(model, learning_rate)
scheduler = torch.optim.lr_scheduler.CyclicLR(
    optimizer=optimizer,
    base_lr=0.001,
    max_lr=0.1,
    step_size_up=100,
    step_size_down=None,
    mode='triangular2',
    gamma=0.9,  # only used in 'exp_range' mode
    scale_fn=None,
)

e = list(range(iterations))
lrs = []
# CyclicLR is stepped once per iteration (batch).
for iteration in range(iterations):
    optimizer.step()
    scheduler.step()
    print(scheduler.get_last_lr())
    lrs.append(scheduler.get_last_lr()[0])

plt.plot(e, lrs)
plt.show()

triangular2 result:

exp_range code:

import torch
import matplotlib.pyplot as plt

iterations = 1000
learning_rate = 0.01

model = [torch.nn.Parameter(torch.randn(2, 2, requires_grad=True))]
optimizer = torch.optim.SGD(model, learning_rate)
scheduler = torch.optim.lr_scheduler.CyclicLR(
    optimizer=optimizer,
    base_lr=0.001,
    max_lr=0.1,
    step_size_up=100,
    step_size_down=None,
    mode='exp_range',
    gamma=0.99,  # amplitude is scaled by gamma**iteration
    scale_fn=None,
)

e = list(range(iterations))
lrs = []
# CyclicLR is stepped once per iteration (batch).
for iteration in range(iterations):
    optimizer.step()
    scheduler.step()
    print(scheduler.get_last_lr())
    lrs.append(scheduler.get_last_lr()[0])

plt.plot(e, lrs)
plt.show()

exp_range result:

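XI. ReduceLROnPlateau

A loss-adaptive learning rate decay schedule. By default, the learning rate is reduced when the loss stops decreasing. The idea is that once the model stops improving, reducing the learning rate by a factor of 2 to 10 often helps training.

If the loss has not decreased for more than patience epochs, the learning rate is reduced to learning_rate * factor.

In addition, after each reduction the scheduler enters a cooldown period of cooldown epochs, during which it neither monitors the loss nor reduces the learning rate. After the cooldown, it resumes monitoring the loss.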

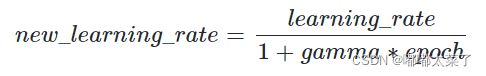
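This section has no example code, so the patience and cooldown logic described above is sketched here in plain Python. `PlateauSketch` is a hypothetical class written for illustration only; it is a simplification that treats any strict decrease in loss as an improvement and leaves out options that real schedulers offer, such as an improvement threshold or a lower bound on the learning rate.

```python
class PlateauSketch:
    """Simplified plateau rule: cut the learning rate when the loss stops improving."""

    def __init__(self, lr, factor=0.5, patience=2, cooldown=1):
        self.lr = lr
        self.factor = factor
        self.patience = patience
        self.cooldown = cooldown
        self.best = float("inf")
        self.bad_epochs = 0
        self.cooldown_left = 0

    def step(self, loss):
        if loss < self.best:
            self.best = loss
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
        if self.cooldown_left > 0:
            # During cooldown, stalled epochs are not counted.
            self.cooldown_left -= 1
            self.bad_epochs = 0
        if self.bad_epochs > self.patience:
            # The loss stalled for more than `patience` epochs: decay and cool down.
            self.lr *= self.factor
            self.cooldown_left = self.cooldown
            self.bad_epochs = 0
        return self.lr


scheduler = PlateauSketch(lr=0.1, factor=0.5, patience=2, cooldown=1)
losses = [1.0, 0.8, 0.8, 0.8, 0.8, 0.8, 0.8, 0.8, 0.8]
history = [scheduler.step(loss) for loss in losses]
print(history)
```

In this run the loss stalls at 0.8, so the learning rate is halved on the fifth step, held through one cooldown epoch, and halved again on the ninth step.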

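XII. InverseTimeDecay

InverseTimeDecay is the inverse-time decay schedule: the learning rate is inversely related to the number of decay steps, lr = learning_rate / (1 + gamma * epoch).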
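As a quick numerical check of the inverse-time rule, here is a plain-Python sketch; `inverse_time_decay` is a hypothetical helper, and the values mirror this section's example (learning_rate=0.1, gamma=0.1).

```python
def inverse_time_decay(learning_rate, gamma, epoch):
    """Inverse-time decay: the rate shrinks as 1 / (1 + gamma * epoch)."""
    return learning_rate / (1 + gamma * epoch)


for epoch in (0, 10, 90):
    print(epoch, inverse_time_decay(0.1, 0.1, epoch))
```

The rate halves after 10 epochs and falls to a tenth of its initial value after 90, so the decay is fast at first and then flattens out.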

Code:

import paddle
import matplotlib.pyplot as plt

epoches = 100
learning_rate = 0.1

linear = paddle.nn.Linear(10, 10)
scheduler = paddle.optimizer.lr.InverseTimeDecay(learning_rate=learning_rate, gamma=0.1, verbose=True)
sgd = paddle.optimizer.SGD(learning_rate=scheduler, parameters=linear.parameters())

x = list(range(epoches))
lrs = []
for epoch in range(epoches):
    scheduler.step()
    # Record the learning rate after this scheduler step.
    lrs.append(scheduler.get_lr())

plt.plot(x, lrs)
plt.show()

Result:

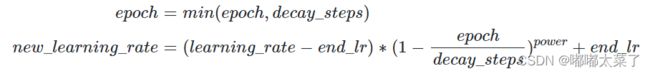

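XIII. PolynomialDecay

PolynomialDecay is the polynomial decay schedule. It has two modes: with cycle=False, the learning rate decays to its minimum and stays there; with cycle=True, the learning rate rises again after reaching the minimum and then decays once more (which may help escape local optima).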
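The two modes can be compared with a plain-Python sketch of the polynomial rule. `polynomial_decay` is a hypothetical helper; it assumes that in cycle mode the decay horizon is stretched to the next multiple of decay_steps, which is what makes the learning rate jump back up.

```python
import math


def polynomial_decay(base_lr, end_lr, decay_steps, power, epoch, cycle=False):
    """Polynomial decay from base_lr to end_lr, with an optional cycle mode."""
    if cycle:
        # Stretch the decay horizon to the next multiple of decay_steps.
        horizon = decay_steps * max(1, math.ceil(epoch / decay_steps))
    else:
        # Freeze at end_lr once decay_steps is reached.
        epoch = min(epoch, decay_steps)
        horizon = decay_steps
    return (base_lr - end_lr) * (1 - epoch / horizon) ** power + end_lr


# Without cycling the rate stays at end_lr; with cycling it rises again after epoch 30.
print(polynomial_decay(0.1, 0.0, 50, 2, 80, cycle=False))
print(polynomial_decay(0.1, 0.0, 30, 2, 30, cycle=True))
print(polynomial_decay(0.1, 0.0, 30, 2, 31, cycle=True))
```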

1. Learning rate does not rise again (cycle=False)

Code:

import paddle
import matplotlib.pyplot as plt

epoches = 100
learning_rate = 0.1

linear = paddle.nn.Linear(10, 10)
scheduler = paddle.optimizer.lr.PolynomialDecay(learning_rate=learning_rate, decay_steps=50, end_lr=0, power=2, cycle=False)
sgd = paddle.optimizer.SGD(learning_rate=scheduler, parameters=linear.parameters())

x = list(range(epoches))
lrs = []
for epoch in range(epoches):
    scheduler.step()
    # Record the learning rate after this scheduler step.
    lrs.append(scheduler.get_lr())

plt.plot(x, lrs)
plt.show()

Result:

2. Learning rate rises again (cycle=True)

Code:

import paddle
import matplotlib.pyplot as plt

epoches = 100
learning_rate = 0.1

linear = paddle.nn.Linear(10, 10)
scheduler = paddle.optimizer.lr.PolynomialDecay(learning_rate=learning_rate, decay_steps=30, end_lr=0, power=2, cycle=True)
sgd = paddle.optimizer.SGD(learning_rate=scheduler, parameters=linear.parameters())

x = list(range(epoches))
lrs = []
for epoch in range(epoches):
    scheduler.step()
    # Record the learning rate after this scheduler step.
    lrs.append(scheduler.get_lr())

plt.plot(x, lrs)
plt.show()

Result:

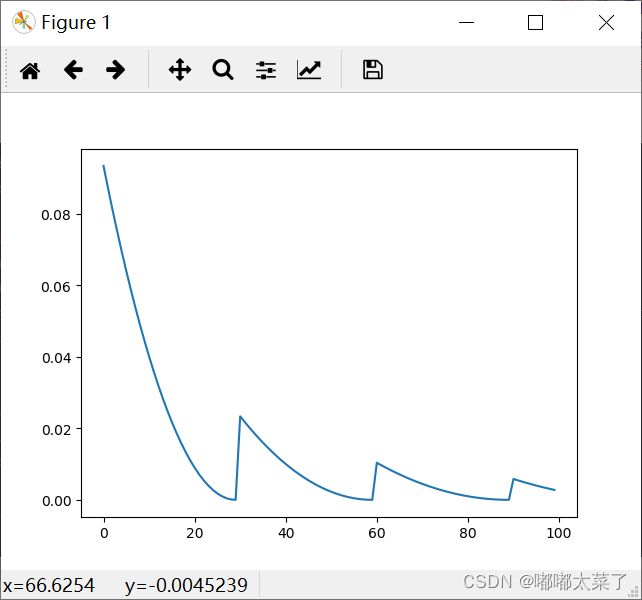

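XIV. PiecewiseDecay

PiecewiseDecay assigns a fixed learning rate to each range of epochs: boundaries lists the epochs at which the rate changes, and values lists the rate for each resulting interval.

Code: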

import paddle

import matplotlib.pyplot as plt

epoches = 100

linear = paddle.nn.Linear(10, 10)

scheduler = paddle.optimizer.lr.PiecewiseDecay(boundaries=[30, 60], values=[0.1, 0.05, 0.01])

sgd = paddle.optimizer.SGD(learning_rate=scheduler, parameters=linear.parameters())

x = [i for i in range(epoches)]

lrs = []

for epoch in range(epoches):

scheduler.step()

lrs.append(scheduler.get_lr())

plt.plot(x, lrs)

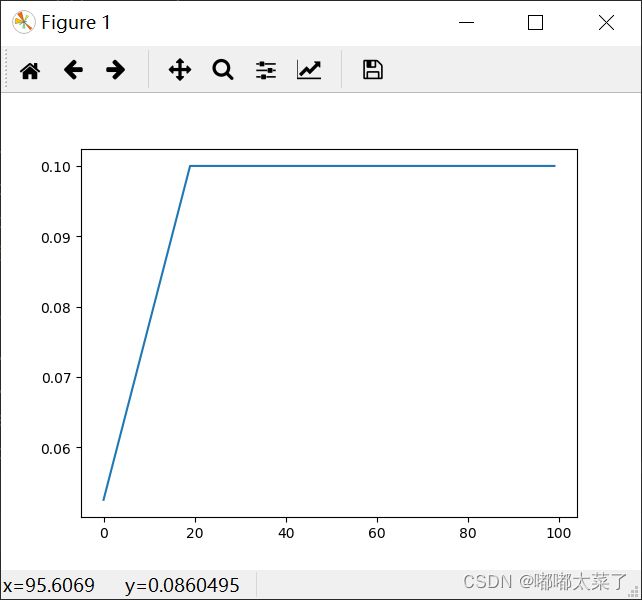

plt.show()

效果:

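XV. NoamDecay

The NoamDecay formula is as follows (d_model is the input or output dimension of the model):

lr = learning_rate * d_model^(-0.5) * min(epoch^(-0.5), epoch * warmup_steps^(-1.5))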
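To show how the Noam rule produces a warm-up followed by a decay, here is a plain-Python sketch. `noam_decay` is a hypothetical helper, and the epoch-0 case is handled explicitly as an assumption to avoid raising zero to a negative power; the values mirror this section's example (d_model=100, warmup_steps=20, learning_rate=0.1).

```python
def noam_decay(learning_rate, d_model, warmup_steps, epoch):
    """Noam schedule: linear warm-up, then decay proportional to epoch ** -0.5."""
    if epoch == 0:
        return 0.0  # the warm-up term epoch * warmup_steps ** -1.5 is zero here
    scale = min(epoch ** -0.5, epoch * warmup_steps ** -1.5)
    return learning_rate * d_model ** -0.5 * scale


# The rate rises until epoch == warmup_steps and falls afterwards.
for epoch in (1, 10, 20, 40, 80):
    print(epoch, noam_decay(0.1, 100, 20, epoch))
```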

Code:

import paddle
import matplotlib.pyplot as plt

epoches = 100

linear = paddle.nn.Linear(10, 10)
scheduler = paddle.optimizer.lr.NoamDecay(d_model=100, warmup_steps=20, learning_rate=0.1)
sgd = paddle.optimizer.SGD(learning_rate=scheduler, parameters=linear.parameters())

x = list(range(epoches))
lrs = []
for epoch in range(epoches):
    scheduler.step()
    # Record the learning rate after this scheduler step.
    lrs.append(scheduler.get_lr())

plt.plot(x, lrs)
plt.show()

Result:

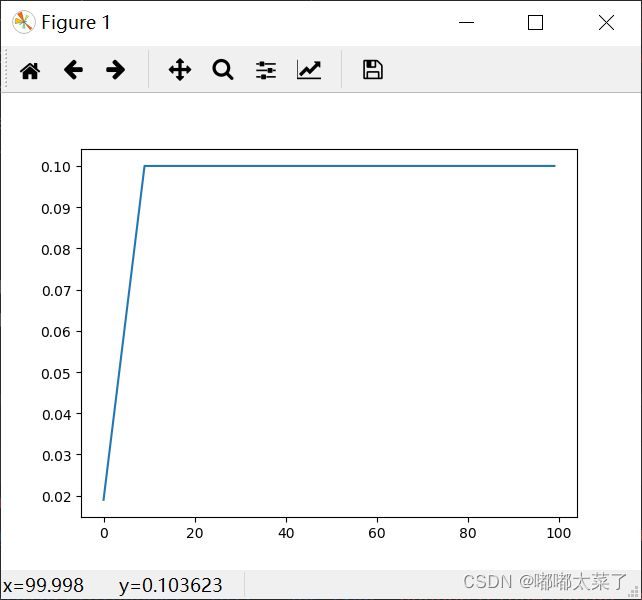

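XVI. LinearWarmup

LinearWarmup is a linear learning rate warm-up schedule: training starts at a low learning rate, which rises gradually to the configured value and then follows the regular decay schedule.

While the number of training steps is less than warmup_steps, the learning rate is updated as:

lr = start_lr + (end_lr - start_lr) * epoch / warmup_steps

Once the number of training steps is greater than or equal to warmup_steps, the learning rate is:

lr = learning_rate

where learning_rate is either a fixed value or the output of another learning rate scheduler.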
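The two phases can be sketched in plain Python as follows. `linear_warmup` is a hypothetical helper that assumes a fixed learning_rate after the warm-up (rather than a wrapped scheduler); the values mirror this section's example (warmup_steps=10, start_lr=0.01, end_lr=0.1).

```python
def linear_warmup(learning_rate, warmup_steps, start_lr, end_lr, epoch):
    """Linear warm-up from start_lr to end_lr, then a fixed learning rate."""
    if epoch < warmup_steps:
        # Warm-up phase: interpolate linearly between start_lr and end_lr.
        return start_lr + (end_lr - start_lr) * epoch / warmup_steps
    # After warm-up: use the configured learning rate.
    return learning_rate


for epoch in (0, 5, 10, 50):
    print(epoch, linear_warmup(0.1, 10, 0.01, 0.1, epoch))
```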

Code:

import paddle
import matplotlib.pyplot as plt

epoches = 100

linear = paddle.nn.Linear(10, 10)
scheduler = paddle.optimizer.lr.LinearWarmup(learning_rate=0.1, warmup_steps=10, start_lr=0.01, end_lr=0.1)
sgd = paddle.optimizer.SGD(learning_rate=scheduler, parameters=linear.parameters())

x = list(range(epoches))
lrs = []
for epoch in range(epoches):
    scheduler.step()
    # Record the learning rate after this scheduler step.
    lrs.append(scheduler.get_lr())

plt.plot(x, lrs)
plt.show()

Result:

References

torch.optim, PyTorch 1.11.0 documentation

API documentation, PaddlePaddle deep learning platform

All example code: example code for the learning rate schedules