This blog is probably going to be in terms of coding the most technically driven that I have written so far, and it will be about a project using Deep Learning Neural Networks to classify a set of structured X-rays images from pedriatic patients to identify whether or not, they have pneumonia. The Neural Network that I chose is the Convolutional Neural Network (CNN/ConvNet), mainly because it is known to perform really well with image classification.

该博客可能是到目前为止我编写的最技术驱动的代码,它将涉及一个使用深度学习神经网络对来自儿童患者的结构化X射线图像进行分类的项目,以确定是否是否患有肺炎。 我选择的神经网络是卷积神经网络(CNN / ConvNet),主要是因为它在图像分类方面表现非常好。

This project is not intended as a study of Pneumonia itself, but for detecting it from X-rays images by using Deep Learning Neural Networks. Therefore only a short explanation of what the illness is and why it is important in today’s world will follow.

该项目并非旨在研究肺炎本身,而是通过使用深度学习神经网络从X射线图像中检测出来。 因此,只有简短的解释是什么疾病以及为什么它在当今世界很重要。

So what is Pneumonia?

那么什么是肺炎?

Pneumonia is an infection of the lungs that may be caused by bacteria, viruses, or fungi. The infection causes the lung’s air sac, or alveoli, to become inflamed and fill up with fluid or pus. This progression then limits a person’s ability to take in oxygen. If there is a continuous deprivation of oxygen, many of the body’s organs can get damaged, causing kidney failure, heart failure, and other life threatening conditions.

肺炎是由细菌,病毒或真菌引起的肺部感染。 感染会使肺的气囊或肺泡发炎并充满液体或脓液。 然后,这种进展会限制人的摄氧能力。 如果持续缺氧,人体的许多器官都可能受损,从而导致肾功能衰竭,心力衰竭和其他威胁生命的状况。

The symptoms of pneumonia can range from mild to severe, and include cough, fever, chills, and trouble breathing (source)

肺炎的症状范围从轻到重,包括咳嗽,发烧,发冷和呼吸困难(来源)

Now, is there any relationship between Pneumonia and Covid-19?

现在,肺炎和Covid-19有什么关系?

According to the Center for Disease Control and Prevention (CDC), Covid-19 is a respiratory illness where a person can experience a dry cough, fever, muscle aches, and fatigue.

根据疾病控制与预防中心(CDC)的数据,Covid-19是一种呼吸系统疾病,患者可能会感到干咳,发烧,肌肉酸痛和疲劳。

This virus can progress through the respiratory tract and into a person’s lungs causing pneumonia. People with severe cases of pneumonia may have lungs that are so inflamed that they cannot take in enough oxygen or expel enough carbon dioxide.

该病毒可通过呼吸道进入人的肺部,引起肺炎。 患有严重肺炎的人的肺部可能发炎到无法吸收足够的氧气或排出足够的二氧化碳。

According to the World Health Organization (WHO), the most common diagnosis for severe COVID-19 is severe pneumonia. For people who do develop symptoms in the lungs, COVID-19 may be life threatening, therefore, the detection of Pneumonia through X-ray has increased its importance due to the worldwide spread of Covid-19 (source).

根据世界卫生组织(WHO)的资料,重症COVID-19的最常见诊断是重症肺炎。 对于人谁做开发肺部症状,COVID-19可危及生命,因此,肺炎通过X射线检测已增加,由于Covid-19(在世界范围内传播的重要性源)。

Now that we know what Pneumonia is, and why detecting it through X-rays is important, lets go into the dataset used and start analysing it before going into the modeling.

现在我们知道什么是肺炎,以及为什么通过X射线检测它很重要,让我们进入所使用的数据集并在进行建模之前开始对其进行分析。

Dataset

数据集

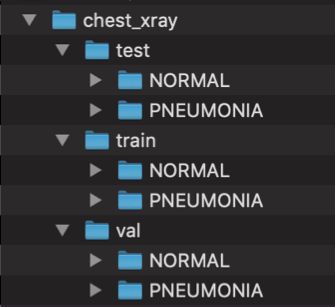

The dataset used comes from Kaggle and it consists of 5856 X-ray images contained in a hierarchical file structure which is already split into three subsets folders (test, train, and val), each of which containing sub-folders where the X-ray images have been already labeled as NORMAL or PNEUMONIA (Figure 1).

所使用的数据集来自Kaggle ,由5856张X射线图像组成,包含在一个分层文件结构中,该文件结构已经分为三个子文件夹(测试,训练和验证),每个子文件夹都包含子文件夹,其中X射线图像已被标记为NORMAL或PNEUMONIA(图1)。

Figure 1. Hierarchical file structure 图1.分层文件结构Because this is a typical nested file structure, the first thing to do is to link each one of those subset folders (test, train and val) and then their internal labelled X-ray images (NORMAL and PNEUMONIA). This will be done with the following code:

因为这是典型的嵌套文件结构,所以首先要做的是链接这些子文件夹(测试,训练和验证)中的每个文件夹,然后链接其内部标记的X射线图像(NORMAL和PNEUMONIA)。 这将通过以下代码完成:

# Directory paths:data_dir = "../chest_xray/"

train_dir = os.path.join(data_dir, "train/")

test_dir = os.path.join(data_dir, "test/")

val_dir = os.path.join(data_dir, "val/")# train folder dataos.listdir(train_dir)

train_normal = train_dir + 'NORMAL/'

train_pneumo = train_dir + 'PNEUMONIA/'# test folder dataos.listdir(test_dir)

test_normal = test_dir + 'NORMAL/'

test_pneumo = test_dir + 'PNEUMONIA/'# val folder dataos.listdir(val_dir)

val_normal = val_dir + 'NORMAL/'

val_pneumo = val_dir + 'PNEUMONIA/'Now, with access to all the data, I created a function that randomly selects a couple of images per subset and plots them. As an example of the output, Figure 2 shows a train subset pair.

现在,通过访问所有数据,我创建了一个函数,该函数可以为每个子集随机选择几个图像并将其绘制出来。 作为输出的示例,图2显示了一个火车子集对。

Figure 2. X-ray image randomly selected from the train subset 图2.从火车子集中随机选择的X射线图像The function is also display below:

该功能也显示在下面:

def X_ray_QC(path, set_normal, set_pneumo):

# X-Rays classified as 'NORMAL' or equal to 'No-Pneumonia'rand_norm = np.random.randint(0, len(os.listdir(path)))

norm_xray = os.listdir(set_normal)[rand_norm]

print('Normal xray file name:', norm_xray)# X-rays classified as 'PNEUMONIA' meaning that the patient has pneumoniarand_pneumo = np.random.randint(0, len(os.listdir(path)))

pneumo_xray = os.listdir(set_pneumo)[rand_pneumo]

print('Pneumo xray file name:', pneumo_xray)# Image loadingnorm_xray_address = set_normal + norm_xray

pneumo_xray_address = set_pneumo + pneumo_xraynormal_load = Image.open(norm_xray_address)

pneumonia_load = Image.open(pneumo_xray_address)# Plotting the X-ray files:figure = plt.figure(figsize=(14,6))ax1 = figure.add_subplot(1,2,1)

xray_plot = plt.imshow(normal_load, cmap='gray')

plt.rcParams["figure.facecolor"] = "lightblue"

ax1.set_title('NORMAL', fontsize=14)

ax1.axis('on')

ax2 = figure.add_subplot(1,2,2)

xray_plot = plt.imshow(pneumonia_load, cmap='gray')

ax2.set_title('PNEUMONIA', fontsize=14)

ax2.axis('on')

plt.show()Continuing with the hierarchical file structure, we already know that the dataset has been split into three subsets: train, test and val. This is great to save some time but we now need to know if the split has created a balanced distribution?

继续使用分层文件结构,我们已经知道数据集已分为三个子集:train,test和val。 这很节省时间,但是我们现在需要知道拆分是否创建了平衡的分配?

In order to answer that question I created another function that automatically creates dataframes with binary columns, where a 0 was assigned to the NORMAL cases, and a 1 to the PNEUMONIA cases, and then these are automatically plotted (Figure 3). Initially the val (validation) subset (validation subset) had only 16 X-rays images, so I modified it and added 100 X-rays from the test subset not to modify the train subset, as Deep Learning algorithms behave better with larger train dataset.

为了回答这个问题,我创建了另一个函数,该函数自动创建带有二进制列的数据帧,其中将0分配给NORMAL情况,将1分配给PNEUMONIA情况,然后自动绘制它们(图3)。 最初,val(验证)子集(验证子集)只有16张X射线图像,因此我对其进行了修改,并从测试子集中添加了100条X射线,以不修改火车子集,因为深度学习算法在较大的火车数据集下表现更好。

Figure 3. X-ray data distribution for data balance check 图3.用于数据平衡检查的X射线数据分布The final data split distributed the data with the following percentages:

最终数据拆分按以下百分比分配数据:

Train subset: 89.07%

火车子集:89.07%

Test subset: 8.95%

测试子集:8.95%

Val subset: 1.98%

Val子集:1.98%

Now, these distribution might suggest that the data is unbalanced, but again, because we are doing Deep Learning, I want to keep the largest possible training set, therefore I will proceed as it is.

现在,这些分布可能表明数据是不平衡的,但是再次,因为我们正在进行深度学习,所以我希望保留最大的训练集,因此我将按原样继续进行。

Data Scaling and Augmentation

数据扩展和扩充

Since neural networks receive inputs of the same size, all images need to be resized to a fixed size before inputting them to the CNN. The larger the fixed size, the less shrinking required. Less shrinking means less deformation of features and patterns inside the image.

由于神经网络接收相同大小的输入,因此在将所有图像输入到CNN之前,需要将所有图像调整为固定大小。 固定尺寸越大,所需的收缩越少。 较少的收缩意味着图像内部特征和图案的变形较小。

These X-rays are all in a black and white scale, so just for the sake of avoiding any image difference size issues, I have rescaled all images to 256 colors (0–255). There is always a second option which is to re-scale even further to [0,1] and could accelerate the model, but I didn’t go that route

这些X射线都是黑白的,因此,为了避免出现任何图像差异大小问题,我将所有图像重新缩放为256色(0–255)。 总有第二种选择,可以重新缩放到[0,1]并可以加速模型,但是我没有走这条路

As mentioned above, it is well known that the performance of Deep Learning Neural Networks often improves with the amount of data available, therefore increasing the number of data samples could result in a more skillful model. This can be achieved by doing “Data Augmentation”, which is a technique to artificially create new training data from existing training data. This is done by applying domain-specific techniques to examples from the training data that create new and different training examples by simply shifting, flipping, rotating, modifying brightness, and zooming the training examples. Both, re-scaling and augmentation were performed with the code below:

如上所述,众所周知,深度学习神经网络的性能通常会随着可用数据量的提高而提高,因此,增加数据样本的数量可能会导致模型更加熟练。 这可以通过执行“数据增强”来实现,这是一种从现有训练数据中人为创建新训练数据的技术。 这是通过将特定领域的技术应用于来自训练数据的示例来完成的,这些数据只需简单地移动,翻转,旋转,修改亮度和缩放训练示例即可创建新的不同训练示例。 重新缩放和扩充均使用以下代码执行:

rescale = 1./255

target_size = (150,150)

batch_size = 32

class_mode = "categorical"

#class_mode = 'binary'train_datagen = ImageDataGenerator(rescale=rescale,

shear_range=0.2,

zoom_range=0.2,

horizontal_flip=True)train_generator = train_datagen.flow_from_directory(train_dir,

target_size=target_size,

class_mode=class_mode,

batch_size=batch_size,

shuffle=True)validation_datagen = ImageDataGenerator(rescale=rescale)validation_generator = validation_datagen.flow_from_directory(val_dir,

target_size=target_size,

class_mode=class_mode,

batch_size=dir_file_count(val_dir),

shuffle=False)test_datagen = ImageDataGenerator(rescale=rescale)test_generator = test_datagen.flow_from_directory(test_dir,

target_size=target_size,

class_mode=class_mode,

batch_size=dir_file_count(test_dir),

shuffle=False)Model Architecture

模型架构

The next step is to define the architecture of the CNN model which is where it gets a bit more complicated. I tested a total of 15 different models/architectures which might sound like a lot, but I started from a very simple two layer one and with low epochs numbers to get results faster, and evolve from there into more complex ones by adding layers one at a time to test them. I will obviously just go over one of these models, which is the one that I consider to be the best, and for those interested on seeing the other architectures and results I will add my GitHub at the end of this blog. The final model architecture is displayed on Figure 4:

下一步是定义CNN模型的体系结构,这会使它变得更加复杂。 我总共测试了15种不同的模型/体系结构,听起来可能很多,但是我从一个非常简单的两层开始,并以较低的历元数开始,以更快地获得结果,然后通过在第一层增加一层来发展为更复杂的模型/体系结构。有时间测试它们。 显然,我将只介绍其中一种模型,这是我认为是最好的模型,对于那些对查看其他架构和结果感兴趣的人,我将在本文末尾添加我的GitHub。 最终的模型架构如图4所示:

model_15 = models.Sequential()# 1st Convolution block

model_15.add(layers.Conv2D(16, (3, 3), activation='relu', padding='same', input_shape=(150, 150, 3)))

model_15.add(layers.Conv2D(16, (3, 3), activation='relu'))

model_15.add(layers.MaxPooling2D((2, 2)))# 2nd Convolution block

model_15.add(layers.Conv2D(32, (3, 3), activation='relu', padding='same'))

model_15.add(layers.Conv2D(32, (3, 3), activation='relu'))

model_15.add(layers.MaxPooling2D((2, 2)))# 3rd Convolution block

model_15.add(layers.Conv2D(64, (3, 3), activation='relu', padding='same'))

model_15.add(layers.Conv2D(64, (3, 3), activation='relu'))

model_15.add(layers.MaxPooling2D((2, 2)))# 4th Convolution block

model_15.add(layers.Conv2D(96, (3, 3), dilation_rate=(2,2), activation='relu', padding='same'))

model_15.add(layers.Conv2D(96, (3, 3), activation='relu'))

model_15.add(layers.MaxPooling2D((2, 2)))# 5th Convolution block

model_15.add(layers.Conv2D(128, (3, 3), dilation_rate=(2, 2), activation='relu', padding='same'))

model_15.add(layers.Conv2D(128, (3, 3), activation='relu'))

model_15.add(layers.MaxPooling2D((2, 2)))# Flattened the layer

model_15.add(layers.Flatten())# Fully connected layers

model_15.add(layers.Dense(64, activation='relu'))

model_15.add(layers.Dropout(0.4))

model_15.add(layers.Dense(2, activation='softmax'))model_15.summary()I will not go over what each one of the layers of my model represent/mean to avoid making this an extremely long blog, so if interested just refer to my GitHub where you will find explanations of each one and good links to tutorials on how to manage them.

我不会讲解模型的每一层所代表的含义,以避免使它成为一个冗长的博客,因此,如果有兴趣,请参阅我的GitHub,您将在其中找到每一层的解释以及有关如何进行操作的教程的良好链接。管理他们。

The one thing I do want to explain is the usage of the Softmax activation instead of a Sigmoid, which is normally the choice for a binary classification, and that is what I have on my hands. So what I did was to convert my binary problem into a category one with two categories, NORMAL or PNEUMONIA, and therefore the behaviour of the Softmax became the same as a Sigmoid. Now why did I do that? because the validation accuracy was stagnated at 50% regardless of the model architecture that I used, therefore I used this workaround as a solution because a 50% accuracy means tossing a coin and that is not acceptable regardless of the accuracy obtained with your train subset which in my case was always above 95%, so most probably overfitting.

我想解释的一件事是使用Softmax激活而不是Sigmoid,这通常是二进制分类的选择,而这正是我的能力。 因此,我要做的就是将我的二元问题转换为一个具有两个类别的类别,即NORMAL或PNEUMONIA,因此Softmax的行为与Sigmoid相同。 现在为什么要这样做? 因为不管我使用哪种模型体系结构,验证精度都停滞在50%,所以我将这种解决方法用作解决方案,因为50%的精度意味着扔硬币,并且无论火车子集获得的精度如何,这都是不可接受的。就我而言,总是高于95%,因此很可能过度拟合。

Now to continue, below is the code for fitting/training the model that I used followed by the results of running it with the test subset

现在继续,下面是用于拟合/训练我使用的模型的代码,以及通过测试子集运行它的结果

optimizer=optimizers.Adam()

loss='categorical_crossentropy'

metrics=['accuracy']

epochs = 50

steps_per_epoch=100

validation_steps=50model.compile(optimizer, loss=loss, metrics=metrics)history = model_15.fit_generator(train_generator,

steps_per_epoch=steps_per_epoch,

epochs=epochs,

verbose=2,

validation_data=validation_generator,

validation_steps=validation_steps)In the code above there is a parameter called epoch. This is a term used in Machine Learning (ML) to indicate the number of passes of the entire training dataset the ML algorithm has completed. Because of time constraints I chose 50 epochs, which is probably the minimum for these type of models. Therefore you could expect a better behaved and skillful model by increasing this number to 100 or even 150 but you need the hardware power and the time. Still with the 50 epochs the model ended up with a train accuracy of 96.69% (most probably overfitted) and validation accuracy of 94.83%.

上面的代码中有一个称为epoch的参数。 这是机器学习(ML)中使用的一个术语,表示ML算法已完成的整个训练数据集的通过次数。 由于时间限制,我选择了50个时期,这可能是此类模型的最小值。 因此,您可以通过将这个数字增加到100甚至150,来期望一个更好的行为和熟练的模型,但是您需要硬件功能和时间。 仍然经过50个时期,该模型最终的训练精度为96.69%(很可能是过度拟合),验证精度为94.83%。

A simple way to understand how the model behaves with each epoch is to look at a plot of the train and validation subset loss and accuracy (Figure 4)

了解模型在每个时期的行为的简单方法是查看训练图和验证子集的损失和准确性(图4)。

Figure 4. Model loss and accuracy visualisation for train and validation data subsets 图4.训练和验证数据子集的模型损失和准确性可视化What the plots from Figure 4 is telling us is that for the train subset, with the increasing epochs, there is convergence happening as both blue curves get closer together, with a similar situation for the validation subset but not as smooth and fast as for the train subset, hence the need of increasing the epoch number to probably 100 as it seems that the validation curves start becoming less spiky towards the 40 epochs onwards.

图4的曲线告诉我们,对于训练子集,随着时间的增加,会发生收敛,因为两条蓝色曲线越来越靠近,对于验证子集来说情况相似,但不如验证子集平滑和快速。训练子集,因此需要将时期数增加到大约100,因为验证曲线似乎开始朝着40个时期开始变得不那么尖刻。

Now that I am happy with this model’s performance, the next step is to evaluate the model using the test subset. The code to do so is in the cell below with the outputs on the last three rows.

现在,我对该模型的性能感到满意,下一步是使用测试子集评估模型。 这样做的代码在下面的单元格中,最后三行的输出。

test_generator = test_datagen.flow_from_directory(test_dir,

target_size=target_size,

class_mode=class_mode,

batch_size=dir_file_count(test_dir),

shuffle=False)test_loss, test_acc = model_5.evaluate_generator(test_generator, steps=50)

print('test accuracy:', round(test_acc*100, 2)

print('test loss:' , round(test_loss))Found 524 images belonging to 2 classes.

test accuracy: 90.84

test loss: 0.3The test subset resulted with an accuracy of 90.84% which is quite good. However, as a performance measure, accuracy is inappropriate for imbalanced classification problems. The reason for this is that the overwhelming number of samples from the majority class, in this case the train subset, will overwhelm the number of examples in the minority class. This means that even unskillful models can achieve accuracy scores of 90 percent, or 99 percent, depending on how severe the class imbalance happens to be.

测试子集的准确性为90.84%,这是相当不错的。 但是,作为一种性能指标,准确性不适用于不平衡的分类问题。 这样做的原因是,多数类(在这种情况下是火车子集)中的绝大多数样本将使少数类中的样本数量过多。 这意味着即使不熟练的模型也可以达到90%或99%的准确率,这取决于班级失衡的严重程度。

An alternative to using classification accuracy is to use precision and recall metrics. For those not familiar with these metrics, here is a simple definition of what they are:

使用分类精度的另一种方法是使用精度和召回率指标。 对于不熟悉这些指标的人,这里是它们的简单定义:

Precision: quantifies the number of positive class predictions that actually belong to the positive class.

精度:量化实际上属于肯定类别的肯定类别预测的数量。

Recall: quantifies the number of positive class predictions made out of all positive examples in the dataset.

召回:量化从数据集中所有正面示例得出的正面类别预测的数量。

F-Measure: provides a single score that balances both the concerns of precision and recall in one number.

F量度:提供一个单一的评分,可以在一个数字中兼顾准确性和召回性。

If you are interested in knowing more about these metrics I recommend this link which has good explanations and a small project to run them.

如果您想了解更多有关这些指标的信息,建议您使用此链接,该链接有很好的解释和一个运行它们的小项目。

With those definitions now in hand, what I did was to calculate them automatically by using the scikit-learn ML library. The code follows below with the outputs for each in the last three rows.

现在有了这些定义,我要做的就是使用scikit-learn ML库自动计算它们。 代码如下,最后三行分别显示输出。

precision = precision_score(true_classes, predicted_classes)

recall = recall_score(true_classes, predicted_classes)

f1 = f1_score(true_classes, predicted_classes)print('Precision score:', round(precision*100, 2))

print('Recall score:', round(recall*100, 2))

print('F1 score:', round(f1*100, 2))Precision score: 87.05

Recall score: 85.0

F1 score: 86.01These three are better metrics for these type of imbalanced datasets. In this particular case the difference is not too big as the model ended up with an accuracy of 90.84%, and the Precision, Recall and F-score ended up being 87, 85, and 86% respectively. So not too far apart from each other making the model’s accuracy mode credible.

对于这类型的不平衡数据集,这三个是更好的指标。 在此特定情况下,差异不会太大,因为模型最终的准确度为90.84%,而Precision,Recall和F分数的最终结果分别为87%,85%和86%。 因此,彼此之间的距离不会太远,从而使模型的准确性模式可信。

So there we go, I have successfully finished my first CNN model for Pneumonia prediction using X-ray images with an accuracy of 90.84%

到此为止,我已经成功完成了第一个使用X射线图像进行肺炎预测的CNN模型,其准确度为90.84%

I hope those who made it this far have enjoyed the reading, and as promised above, here is the associated GitHub and my linkedin just in case anyone has questions or wants to discuss these results.

我希望到目前为止取得这些成绩的人喜欢阅读,并且如上所述,这里是相关的GitHub和我的Linkedin ,以防万一有人有疑问或想要讨论这些结果。

翻译自: https://medium.com/@jaherbas/pneumonia-detection-from-x-ray-images-using-deep-learning-neural-network-fa9b2feee206