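Over the past few days I have been studying SURF feature detection. Working straight from the videos and the book was heavy going, but I now basically understand it. A full write-up seems unnecessary, since the articles online all say much the same thing and my notes are in the book. Below are the articles I personally found fairly easy to understand. There are certainly many other good articles I have not seen.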

Reading just the first, introductory article is enough; the later parts are not explained very well: http://blog.csdn.net/jwh_bupt/article/details/7621681

Harris: http://www.cnblogs.com/ronny/p/4009425.html
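At the core of the Harris detector is a corner response computed from the structure tensor M, which is summed over a small window. Below is a minimal plain C++ sketch with no OpenCV. It assumes the gradients Ix and Iy of the window pixels have already been computed. The names `StructureTensor`, `buildStructureTensor` and `harrisResponse` are made up for illustration, and k = 0.04 is just a commonly used value.

```cpp
#include <cassert>
#include <vector>

// Structure tensor M = sum over the window of [Ix*Ix, Ix*Iy; Ix*Iy, Iy*Iy]
struct StructureTensor { double xx = 0, yy = 0, xy = 0; };

// Accumulate M from the gradients (Ix, Iy) of every pixel in the window
StructureTensor buildStructureTensor(const std::vector<double>& ix, const std::vector<double>& iy) {
    assert(ix.size() == iy.size());
    StructureTensor m;
    for (std::size_t k = 0; k < ix.size(); ++k) {
        m.xx += ix[k] * ix[k];
        m.yy += iy[k] * iy[k];
        m.xy += ix[k] * iy[k];
    }
    return m;
}

// Harris response R = det(M) - k * trace(M)^2 (k is a hypothetical default)
double harrisResponse(const StructureTensor& m, double k = 0.04) {
    double det = m.xx * m.yy - m.xy * m.xy;
    double trace = m.xx + m.yy;
    return det - k * trace * trace;
}
```

A large positive R means strong gradients in two directions (a corner). A negative R means one dominant direction (an edge). An R near zero means a flat region.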

Haar: http://blog.csdn.net/zouxy09/article/details/7929570/

Box Filters: http://blog.csdn.net/lanbing510/article/details/28696833
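Box filters are cheap because of the integral image. Once it is built, the sum over any axis-aligned rectangle takes four lookups, whatever the box size. SURF relies on this to evaluate its box-filter approximations at many scales. Below is a minimal stdlib-only sketch. `integralImage` and `boxSum` are illustrative names; OpenCV's own equivalent is `cv::integral`.

```cpp
#include <cassert>
#include <vector>

// Integral image with an extra leading row/column of zeros:
// ii[y][x] = sum of img[r][c] over all r < y and c < x
std::vector<std::vector<long long>> integralImage(const std::vector<std::vector<int>>& img) {
    std::size_t h = img.size(), w = h ? img[0].size() : 0;
    std::vector<std::vector<long long>> ii(h + 1, std::vector<long long>(w + 1, 0));
    for (std::size_t y = 0; y < h; ++y)
        for (std::size_t x = 0; x < w; ++x)
            ii[y + 1][x + 1] = img[y][x] + ii[y][x + 1] + ii[y + 1][x] - ii[y][x];
    return ii;
}

// Sum of the w x h box whose top-left pixel is (x0, y0): four lookups, O(1)
long long boxSum(const std::vector<std::vector<long long>>& ii, int x0, int y0, int w, int h) {
    assert(w >= 0 && h >= 0);
    return ii[y0 + h][x0 + w] - ii[y0][x0 + w] - ii[y0 + h][x0] + ii[y0][x0];
}
```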

SIFT: http://blog.csdn.net/abcjennifer/article/details/7639681/ http://blog.csdn.net/pi9nc/article/details/23302075

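Haar wavelet features: what they represent depends on the context. In SURF, for example, they are the horizontal and vertical responses dx and dy, but they can represent other things too. It depends on the Haar kernel being used.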
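In SURF the two Haar wavelet responses are simple differences over a square window. dx is the right half minus the left half, and dy is the bottom half minus the top half. The sketch below sums the halves directly for clarity, whereas SURF itself evaluates them with an integral image. The `Image` alias and the helper names are hypothetical.

```cpp
#include <cassert>
#include <vector>

using Image = std::vector<std::vector<int>>;

// Sum of img over columns [x0, x0 + w) and rows [y0, y0 + h)
int rectSum(const Image& img, int x0, int y0, int w, int h) {
    int sum = 0;
    for (int y = y0; y < y0 + h; ++y)
        for (int x = x0; x < x0 + w; ++x)
            sum += img[y][x];
    return sum;
}

struct HaarResponse { int dx, dy; };

// Haar responses of an s x s window (s even) with top-left pixel (x, y)
HaarResponse haarResponse(const Image& img, int x, int y, int s) {
    assert(s > 0 && s % 2 == 0);
    int half = s / 2;
    int dx = rectSum(img, x + half, y, half, s) - rectSum(img, x, y, half, s);  // right - left
    int dy = rectSum(img, x, y + half, s, half) - rectSum(img, x, y, s, half);  // bottom - top
    return {dx, dy};
}
```

A vertical edge gives a large dx and zero dy, and a horizontal edge gives the reverse. SURF builds its descriptor from sums of dx, dy, |dx| and |dy| over subregions around the keypoint.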

SURF: see Mao Xingyun's book; the articles online say much the same thing.
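SURF finds blobs where the determinant of the box-filter Hessian is large. The original SURF paper weights the mixed term by about 0.9 to compensate for the box approximation. The `hessianThreshold` argument of `SURF::create` used in the code later in this post is a threshold on this kind of response. The sketch below covers just that formula and assumes Dxx, Dyy and Dxy are already available. The function names are hypothetical, and the exact normalisation OpenCV applies is not reproduced.

```cpp
#include <cassert>
#include <cmath>

// Approximate Hessian determinant used by SURF, given the box-filter
// approximations Dxx, Dyy, Dxy of the second derivatives at one pixel and scale.
// The relative weight (about 0.9 in the SURF paper) compensates for the box approximation.
double surfHessianDet(double dxx, double dyy, double dxy, double weight = 0.9) {
    assert(weight > 0.0);
    const double wdxy = weight * dxy;
    return dxx * dyy - wdxy * wdxy;
}

// Blob candidate test: the response must exceed the threshold. A full detector
// would also require a local maximum over a 3x3x3 scale-space neighbourhood.
bool passesHessianThreshold(double dxx, double dyy, double dxy, double hessianThreshold) {
    return surfHessianDet(dxx, dyy, dxy) > hessianThreshold;
}
```

A negative determinant (Dxx and Dyy of opposite sign) is a saddle, not a blob. Raising the threshold therefore keeps fewer, stronger keypoints.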

ORB: http://www.aiuxian.com/article/p-1728722.html, which covers FAST keypoints and BRIEF descriptors.
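ORB describes each FAST keypoint with a BRIEF-style binary string. Each bit is one intensity comparison between a pair of pixels around the keypoint. Two descriptors are compared with the Hamming distance, the number of differing bits, which is why OpenCV matches them with `NORM_HAMMING`.

The sketch below is simplified: it works on a flattened patch and assumes the comparison pairs are given. Real BRIEF/ORB use a fixed pattern of 256 pairs on a smoothed patch, and ORB also rotates the pattern by the keypoint orientation. `briefBits` and `hammingDistance` are hypothetical names.

```cpp
#include <bitset>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <utility>
#include <vector>

// Bit k is 1 when patch[a] < patch[b] for the k-th pair (a, b); bits are packed 8 per byte
std::vector<std::uint8_t> briefBits(const std::vector<int>& patch,
                                    const std::vector<std::pair<int, int>>& pairs) {
    std::vector<std::uint8_t> desc((pairs.size() + 7) / 8, 0);
    for (std::size_t k = 0; k < pairs.size(); ++k) {
        assert(pairs[k].first < (int)patch.size() && pairs[k].second < (int)patch.size());
        if (patch[pairs[k].first] < patch[pairs[k].second])
            desc[k / 8] |= static_cast<std::uint8_t>(1u << (k % 8));
    }
    return desc;
}

// Number of differing bits between two descriptors of equal length
int hammingDistance(const std::vector<std::uint8_t>& a, const std::vector<std::uint8_t>& b) {
    assert(a.size() == b.size());
    int dist = 0;
    for (std::size_t i = 0; i < a.size(); ++i)
        dist += static_cast<int>(std::bitset<8>(a[i] ^ b[i]).count());
    return dist;
}
```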

HOG: this algorithm is comparatively easier to understand than the ones above.
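The heart of HOG is a per-cell histogram of gradient orientations, weighted by gradient magnitude. Dalal and Triggs use 9 unsigned bins over 0 to 180 degrees. Below is a simplified sketch for one cell. It uses centred [-1, 0, 1] differences and assigns each vote to a single bin. The original method interpolates votes between neighbouring bins and then normalises over blocks. `orientationHistogram` is a hypothetical name.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

using Image = std::vector<std::vector<double>>;

// Magnitude-weighted histogram of unsigned gradient orientations in [0, 180) degrees
// over the interior pixels of one cell (border pixels lack a centred difference).
std::vector<double> orientationHistogram(const Image& img, int bins = 9) {
    assert(bins > 0);
    const double pi = std::acos(-1.0);
    const double binWidth = 180.0 / bins;
    std::vector<double> hist(bins, 0.0);
    for (std::size_t y = 1; y + 1 < img.size(); ++y) {
        for (std::size_t x = 1; x + 1 < img[y].size(); ++x) {
            double gx = img[y][x + 1] - img[y][x - 1];
            double gy = img[y + 1][x] - img[y - 1][x];
            double mag = std::sqrt(gx * gx + gy * gy);
            if (mag == 0.0) continue;
            // atan2 gives (-180, 180]; fold opposite directions together into [0, 180)
            double angle = std::fmod(std::atan2(gy, gx) * 180.0 / pi + 180.0, 180.0);
            int bin = static_cast<int>(angle / binWidth);
            if (bin >= bins) bin = bins - 1;  // guard against rounding at the upper edge
            hist[bin] += mag;
        }
    }
    return hist;
}
```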

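LBP: http://blog.csdn.net/quincuntial/article/details/50541815 This algorithm can be implemented by hand, whereas I could hardly implement any of the ones above myself (they are much harder).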

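Bilinear interpolation: covered on Baidu Baike. First compute the coordinates of the sampling point with trigonometric functions, then compute its pixel value with bilinear interpolation.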
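The two steps can be sketched without OpenCV. The sketch uses the same four weights w1..w4 that the circular LBP code later in this post uses, for the top-left, top-right, bottom-left and bottom-right neighbours. It also follows that code's convention of x from cos and y from -sin. `Image`, `bilinear` and `circleOffset` are hypothetical names.

```cpp
#include <cassert>
#include <cmath>
#include <utility>
#include <vector>

using Image = std::vector<std::vector<double>>;

// Bilinear interpolation of img at real-valued (x = column, y = row)
double bilinear(const Image& img, double x, double y) {
    int fx = static_cast<int>(std::floor(x)), fy = static_cast<int>(std::floor(y));
    int cx = static_cast<int>(std::ceil(x)),  cy = static_cast<int>(std::ceil(y));
    assert(!img.empty() && fx >= 0 && fy >= 0);
    assert(cy < (int)img.size() && cx < (int)img[0].size());
    double dx = x - fx, dy = y - fy;
    double w1 = (1 - dx) * (1 - dy);  // top-left
    double w2 = dx * (1 - dy);        // top-right
    double w3 = (1 - dx) * dy;        // bottom-left
    double w4 = dx * dy;              // bottom-right
    return w1 * img[fy][fx] + w2 * img[fy][cx] + w3 * img[cy][fx] + w4 * img[cy][cx];
}

// Offset (x, y) of the n-th of `neighbors` sampling points on a circle of the given radius
std::pair<double, double> circleOffset(int n, int neighbors, double radius) {
    const double pi = std::acos(-1.0);
    double angle = 2.0 * pi * n / neighbors;
    return {radius * std::cos(angle), -radius * std::sin(angle)};
}
```

To sample the n-th neighbour of pixel (i, j), add the offset to (j, i) and call `bilinear` at that position.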

LBP operator

Classic LBP algorithm:

```cpp
#include <opencv2/opencv.hpp>
#include <iostream>

using namespace cv;
using namespace std;

int main(int argc, char** argv)
{
    Mat input_image = imread("1.jpg");
    if (input_image.empty()) {
        cout << "can't open image..." << endl;  // print before returning, otherwise it is never shown
        return -1;
    }
    cvtColor(input_image, input_image, COLOR_BGR2GRAY);

    // The 1-pixel border has no complete 3x3 neighbourhood, so the output is 2 pixels
    // smaller in each dimension. Size takes (width, height), i.e. (cols, rows).
    Mat output_image = Mat::zeros(Size(input_image.cols - 2, input_image.rows - 2), CV_8UC1);

    int width = input_image.cols;   // single channel after cvtColor
    int height = input_image.rows;
    for (int i = 1; i < height - 1; i++)
    {
        for (int j = 1; j < width - 1; j++)
        {
            uchar center = input_image.at<uchar>(i, j);
            uchar code = 0;
            // Each neighbour >= centre sets one bit; neighbours are read row by row
            // from the top-left (bit 7) to the bottom-right (bit 0).
            code |= (input_image.at<uchar>(i - 1, j - 1) >= center) << 7;
            code |= (input_image.at<uchar>(i - 1, j    ) >= center) << 6;
            code |= (input_image.at<uchar>(i - 1, j + 1) >= center) << 5;
            code |= (input_image.at<uchar>(i,     j - 1) >= center) << 4;
            code |= (input_image.at<uchar>(i,     j + 1) >= center) << 3;
            code |= (input_image.at<uchar>(i + 1, j - 1) >= center) << 2;
            code |= (input_image.at<uchar>(i + 1, j    ) >= center) << 1;
            code |= (input_image.at<uchar>(i + 1, j + 1) >= center) << 0;
            output_image.at<uchar>(i - 1, j - 1) = code;
        }
    }
    imshow("LineImage", input_image);
    imshow("output_image", output_image);

    waitKey(0);
    return 0;
}
```
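The bit layout can be checked without OpenCV by applying the same comparison to a single 3x3 patch. The stdlib-only sketch below mirrors the loop body above; `lbpCode` is a hypothetical helper. A neighbour equal to the centre counts as 1 because of the `>=`.

```cpp
#include <array>
#include <cassert>
#include <cstdint>

using Patch3x3 = std::array<std::array<int, 3>, 3>;

// Classic 8-neighbour LBP code of the centre pixel p[1][1]: neighbours are read
// row by row from the top-left (bit 7) to the bottom-right (bit 0), and each
// one that is >= the centre sets its bit.
std::uint8_t lbpCode(const Patch3x3& p) {
    const int center = p[1][1];
    const int rows[8] = {0, 0, 0, 1, 1, 2, 2, 2};
    const int cols[8] = {0, 1, 2, 0, 2, 0, 1, 2};
    std::uint8_t code = 0;
    for (int k = 0; k < 8; ++k)
        if (p[rows[k]][cols[k]] >= center)
            code |= static_cast<std::uint8_t>(1u << (7 - k));
    return code;
}
```

For the patch {{9, 1, 9}, {1, 5, 1}, {9, 1, 9}}, only the four corners are >= 5, giving bits 7, 5, 2 and 0, i.e. 165. A perfectly flat patch gives 255.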

CLBP (circular LBP):

```cpp
#include <opencv2/opencv.hpp>
#include <cmath>
#include <iostream>
#include <limits>

using namespace cv;
using namespace std;

const int neighbor = 8;  // number of sampling points on the circle (at most 8 for a CV_8UC1 output)
const int radius = 3;    // circle radius in pixels

int main(int argc, char** argv)
{
    Mat input_image = imread("1.jpg");
    if (input_image.empty()) {
        cout << "can't open image..." << endl;
        return -1;
    }
    cvtColor(input_image, input_image, COLOR_BGR2GRAY);

    // Pixels closer than `radius` to the border have no complete circle, so they are skipped
    Mat output_image = Mat::zeros(Size(input_image.cols - 2 * radius, input_image.rows - 2 * radius), CV_8UC1);
    int width = input_image.cols;
    int height = input_image.rows;

    for (int n = 0; n < neighbor; n++)
    {
        //----- Coordinates of the n-th point on the circle. Whether x uses cos and y uses sin
        //      (or the other way round) does not matter, as long as the bilinear
        //      interpolation below interprets them consistently.
        float x = static_cast<float>(radius) * cos(2.0 * CV_PI * n / static_cast<float>(neighbor));
        float y = static_cast<float>(-radius) * sin(2.0 * CV_PI * n / static_cast<float>(neighbor));  // the minus sign only changes the order of the points (the bit order)
        //----- Pixel value at that point, by bilinear interpolation
        int fx = static_cast<int>(floor(x));  // integer neighbours at or below (x, y)
        int fy = static_cast<int>(floor(y));
        int cx = static_cast<int>(ceil(x));   // integer neighbours at or above (x, y)
        int cy = static_cast<int>(ceil(y));

        float dx = x - fx;
        float dy = y - fy;
        // The weights depend only on the offset (x, y), so they are computed once per sampling point
        float w1 = (1 - dx) * (1 - dy);
        float w2 = dx * (1 - dy);
        float w3 = (1 - dx) * dy;
        float w4 = dx * dy;

        for (int i = radius; i < height - radius; i++)
        {
            for (int j = radius; j < width - radius; j++)
            {
                float p1 = input_image.at<uchar>(i + fy, j + fx);
                float p2 = input_image.at<uchar>(i + fy, j + cx);
                float p3 = input_image.at<uchar>(i + cy, j + fx);
                float p4 = input_image.at<uchar>(i + cy, j + cx);

                float Angle_data = p1 * w1 + p2 * w2 + p3 * w3 + p4 * w4;
                float center = input_image.at<uchar>(i, j);
                // Same rule as the classic LBP: the bit is set when the sampled value is >= the
                // centre; the epsilon absorbs rounding error from the interpolation.
                bool bit = (Angle_data > center) ||
                           (std::abs(Angle_data - center) < numeric_limits<float>::epsilon());
                output_image.at<uchar>(i - radius, j - radius) |= (bit << n);
            }
        }
    }

    imshow("LineImage", input_image);
    imshow("output_image", output_image);

    waitKey(0);
    return 0;
}
```

There is much more to LBP beyond this. I am not going that deep for now, just getting an overview, and will dig further when I need it.

SIFT operator

```cpp
#include <opencv2/opencv.hpp>
#include <opencv2/xfeatures2d.hpp>  // SURF lives in the opencv_contrib module xfeatures2d
#include <iostream>
#include <vector>

using namespace cv::xfeatures2d;
using namespace cv;
using namespace std;

void calSIFTFeatureAndCompare(Mat& src1, Mat& src2);

int main(int argc, char** argv)
{
    Mat input_image1 = imread("hand1.jpg");
    Mat input_image2 = imread("hand2.jpg");

    if (input_image1.empty() || input_image2.empty()) {  // fail if EITHER image is missing
        cout << "can't open image..." << endl;
        return -1;
    }
    calSIFTFeatureAndCompare(input_image1, input_image2);

    waitKey(0);
    return 0;
}
//------------- Keypoints: like the points found by Harris and similar detectors (with more constraints added) --------------//
//------------- Descriptors: describe each keypoint's neighbourhood (orientation, region) so keypoints can be compared across images --------------//
void calSIFTFeatureAndCompare(Mat& src1, Mat& src2)
{
    Mat grayMat1, grayMat2;
    cvtColor(src1, grayMat1, COLOR_BGR2GRAY);
    cvtColor(src2, grayMat2, COLOR_BGR2GRAY);
    normalize(grayMat1, grayMat1, 0, 255, NORM_MINMAX);
    normalize(grayMat2, grayMat2, 0, 255, NORM_MINMAX);
    /* OpenCV 2.4.x style; in 3.x these objects are created with ::create() instead:
    SiftFeatureDetector detector;       // computes keypoints
    SiftDescriptorExtractor extractor;  // computes descriptors
    vector<KeyPoint> keypoints1, keypoints2;
    detector.detect(grayMat1, keypoints1, Mat());
    detector.detect(grayMat2, keypoints2, Mat());
    Mat descriptors1, descriptors2;
    extractor.compute(grayMat1, keypoints1, descriptors1);
    extractor.compute(grayMat2, keypoints2, descriptors2);*/
    // `SURF* detector = SURF::create(100);` compiles but crashes, most likely because the
    // temporary Ptr<SURF> returned by create() is destroyed at the end of that statement,
    // which releases the detector and leaves the raw pointer dangling. Keep the Ptr.
    Ptr<SURF> detector = SURF::create(500);  // hessianThreshold: threshold on the Hessian-determinant response; higher keeps fewer keypoints
    vector<KeyPoint> keypoints1, keypoints2;
    Mat descriptors1, descriptors2;
    //--- detect keypoints and compute descriptors in one call
    detector->detectAndCompute(grayMat1, Mat(), keypoints1, descriptors1);
    detector->detectAndCompute(grayMat2, Mat(), keypoints2, descriptors2);
    //--- match descriptors
    vector<DMatch> matches;  // match results, a small struct much like KeyPoint
    BFMatcher matcher(NORM_L1);  // NORM_L1 or NORM_L2 (the default) for float descriptors such as SIFT/SURF; NORM_HAMMING for binary ones such as ORB
    matcher.match(descriptors1, descriptors2, matches);
    Mat resultMatch;
    drawMatches(grayMat1, keypoints1, grayMat2, keypoints2, matches, resultMatch);
    imshow("resultMatch", resultMatch);
}
```

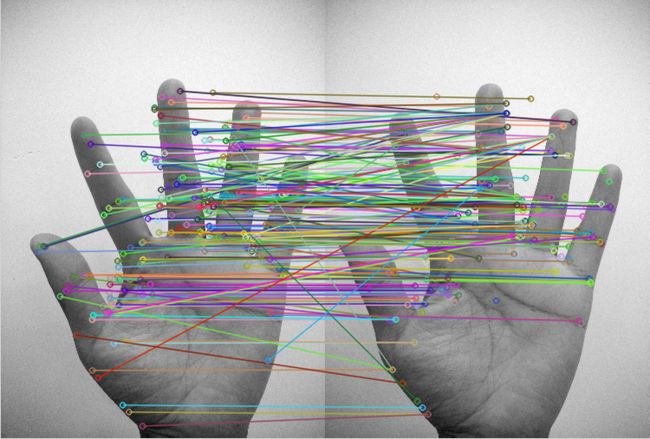
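`BFMatcher::match` above does an exhaustive nearest-neighbour search. For every descriptor of the first image it returns the descriptor of the second image with the smallest distance under the chosen norm. Below is a minimal sketch of that idea on plain float vectors, assuming an L2 or L1 distance. `Match` only mimics the `queryIdx`/`trainIdx`/`distance` fields of `cv::DMatch`, and the function names are hypothetical.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <limits>
#include <vector>

using Descriptor = std::vector<float>;

// Only the fields of cv::DMatch that this sketch needs
struct Match { int queryIdx; int trainIdx; double distance; };

// L2 (Euclidean) or L1 (sum of absolute differences) distance between two descriptors
double descriptorDistance(const Descriptor& a, const Descriptor& b, bool useL1) {
    assert(a.size() == b.size());
    double sum = 0.0;
    for (std::size_t i = 0; i < a.size(); ++i) {
        double d = static_cast<double>(a[i]) - b[i];
        sum += useL1 ? std::fabs(d) : d * d;
    }
    return useL1 ? sum : std::sqrt(sum);
}

// For each query descriptor, find the closest train descriptor by exhaustive search
std::vector<Match> bruteForceMatch(const std::vector<Descriptor>& query,
                                   const std::vector<Descriptor>& train, bool useL1 = false) {
    std::vector<Match> matches;
    for (int q = 0; q < static_cast<int>(query.size()); ++q) {
        Match best{q, -1, std::numeric_limits<double>::infinity()};
        for (int t = 0; t < static_cast<int>(train.size()); ++t) {
            double d = descriptorDistance(query[q], train[t], useL1);
            if (d < best.distance) best = {q, t, d};
        }
        matches.push_back(best);
    }
    return matches;
}
```

The cost is |query| x |train| distance computations. That cost is why the next example switches to FLANN, which answers the same query approximately with search trees.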

Matching and locating a patch in a real image

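Test: a small patch cropped from the original image is located again in the original image.

This example uses the SURF operator for detection and FLANN for matching.

The code: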

```cpp
#include <opencv2/opencv.hpp>
#include <opencv2/xfeatures2d.hpp>
#include <algorithm>
#include <iostream>
#include <vector>

using namespace cv::xfeatures2d;
using namespace cv;
using namespace std;

int main(int argc, char** argv)
{
    Mat input_image1 = imread("2.jpg");  // the small patch cropped from the original image
    Mat input_image2 = imread("1.jpg");  // the original image

    if (input_image1.empty() || input_image2.empty()) {  // fail if EITHER image is missing
        cout << "can't open image..." << endl;
        return -1;
    }
    //------------- keypoint and descriptor detection
    const int minHessian = 400;
    Ptr<SURF> detector = SURF::create(minHessian);
    vector<KeyPoint> srcKeyPoint, dstKeyPoint;
    Mat srcDescriptor, dstDescriptor;
    detector->detectAndCompute(input_image1, Mat(), srcKeyPoint, srcDescriptor);
    detector->detectAndCompute(input_image2, Mat(), dstKeyPoint, dstDescriptor);
    //------------ FLANN matching
    FlannBasedMatcher matcher;
    vector<DMatch> matches;
    matcher.match(srcDescriptor, dstDescriptor, matches);
    Mat matchImage;
    drawMatches(input_image1, srcKeyPoint, input_image2, dstKeyPoint, matches, matchImage);
    imshow("matchImage", matchImage);
    //------------ keep only the good matches
    double maxDistance = 0, minDistance = 10000;
    for (size_t i = 0; i < matches.size(); i++)
    {
        maxDistance = std::max(maxDistance, static_cast<double>(matches[i].distance));
        minDistance = std::min(minDistance, static_cast<double>(matches[i].distance));
    }
    cout << "max distance: " << maxDistance << ", min distance: " << minDistance << endl;
    // Keep matches closer than 3 * minDistance. The small heuristic floor avoids discarding
    // every match when minDistance is 0, which can happen when the patch is an exact crop.
    double goodThreshold = std::max(3 * minDistance, 0.02);
    vector<DMatch> bestMatches;
    for (size_t i = 0; i < matches.size(); i++)
    {
        if (matches[i].distance < goodThreshold)
        {
            bestMatches.push_back(matches[i]);
        }
    }
    Mat bestMatchImage;
    drawMatches(input_image1, srcKeyPoint, input_image2, dstKeyPoint, bestMatches, bestMatchImage);
    imshow("bestMatchImage", bestMatchImage);
    //----------- locate the four corners of the patch from bestMatches
    vector<Point2f> srcPoint;
    vector<Point2f> dstPoint;
    for (size_t i = 0; i < bestMatches.size(); i++)  // collect the matched coordinates
    {
        srcPoint.push_back(srcKeyPoint[bestMatches[i].queryIdx].pt);
        dstPoint.push_back(dstKeyPoint[bestMatches[i].trainIdx].pt);
    }
    if (srcPoint.size() < 4) {  // a homography needs at least 4 point pairs
        cout << "not enough good matches" << endl;
        return -1;
    }
    Mat H = findHomography(srcPoint, dstPoint, RANSAC);  // estimate the perspective mapping (homography H); RANSAC rejects outliers
    if (H.empty()) {
        cout << "homography estimation failed" << endl;
        return -1;
    }
    vector<Point2f> srcCorners(4);
    vector<Point2f> dstCorners(4);
    srcCorners[0] = Point2f(0, 0);
    srcCorners[1] = Point2f(static_cast<float>(input_image1.cols), 0);
    srcCorners[2] = Point2f(0, static_cast<float>(input_image1.rows));
    srcCorners[3] = Point2f(static_cast<float>(input_image1.cols), static_cast<float>(input_image1.rows));
    perspectiveTransform(srcCorners, dstCorners, H);  // map the patch corners into the original image
    line(input_image2, dstCorners[0], dstCorners[1], Scalar(255, 255, 0), 2);
    line(input_image2, dstCorners[0], dstCorners[2], Scalar(255, 255, 0), 2);
    line(input_image2, dstCorners[1], dstCorners[3], Scalar(255, 255, 0), 2);
    line(input_image2, dstCorners[2], dstCorners[3], Scalar(255, 255, 0), 2);
    imshow("result", input_image2);
    waitKey(0);
    return 0;
}
```
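`perspectiveTransform` maps each corner of the patch through the 3x3 homography H in homogeneous coordinates, then divides by the third component. Below is a stdlib-only sketch of that per-point computation. The `Homography`/`Pt` types and `projectPoint` are hypothetical names, not OpenCV API.

```cpp
#include <array>
#include <cassert>
#include <cmath>

using Homography = std::array<std::array<double, 3>, 3>;

struct Pt { double x, y; };

// [X, Y, W]^T = H * [x, y, 1]^T, and the projected point is (X / W, Y / W)
Pt projectPoint(const Homography& H, Pt p) {
    double X = H[0][0] * p.x + H[0][1] * p.y + H[0][2];
    double Y = H[1][0] * p.x + H[1][1] * p.y + H[1][2];
    double W = H[2][0] * p.x + H[2][1] * p.y + H[2][2];
    assert(std::fabs(W) > 1e-12);  // W == 0 would map the point to infinity
    return {X / W, Y / W};
}
```

A pure translation has a last row of (0, 0, 1), so W stays 1. A non-zero entry in the last row is what makes the projected rectangle a general quadrilateral, as in the outline drawn above.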