CNN Example: Handwritten Digit Recognition

1. Hyperparameter definitions

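import torch
import torchvision
from torch.utils.data import DataLoader

# Number of epochs (full passes over the training set)
n_epochs = 3
# Number of images per training batch
batch_size_train = 64
# Number of images per test batch
batch_size_test = 1000
# Learning rate
learning_rate = 0.01
# Momentum factor for SGD
momentum = 0.5
# Print training progress every log_interval batches
log_interval = 10
# Fix the random seed so that experiments are reproducible
# (on a GPU some cuDNN kernels are nondeterministic, so results may still differ slightly)
random_seed = 1
torch.manual_seed(random_seed)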
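These hyperparameters fix how many optimizer steps the run takes and how often it logs. The plain-Python sketch below does that arithmetic. It assumes the standard MNIST split of 60,000 training and 10,000 test images, which are the sizes that appear in the logs. It also assumes the DataLoader default `drop_last=False`, so the final partial batch of 32 images is kept.

```python
import math

# Values copied from the hyperparameter block; dataset sizes are the standard MNIST split
n_epochs = 3
batch_size_train = 64
batch_size_test = 1000
log_interval = 10
n_train, n_test = 60000, 10000

# With drop_last=False the last, smaller batch is kept, hence ceil
train_batches = math.ceil(n_train / batch_size_train)
test_batches = math.ceil(n_test / batch_size_test)

# train() prints whenever batch_idx % log_interval == 0
logged_batches = len(range(0, train_batches, log_interval))
# Sample index shown in the last log line of an epoch: batch_idx * batch size
last_logged_sample = (train_batches - 1) // log_interval * log_interval * batch_size_train

total_steps = n_epochs * train_batches
print(train_batches, test_batches, logged_batches, last_logged_sample, total_steps)
# 938 10 94 59520 2814
```

The value 59520 matches the last `Train Epoch` line of each epoch in the logs.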

2. Data loading and normalization

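Normalizing the images makes training easier, and TorchVision provides convenient image transforms for it. ToTensor() converts each image to a tensor with pixel values scaled to [0, 1]. Normalize((0.1307,), (0.3081,)) then subtracts 0.1307 and divides by 0.3081, the mean and standard deviation of the MNIST training-set pixels.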

# Load the training set
train_loader = torch.utils.data.DataLoader(
    torchvision.datasets.MNIST('./data/', train=True, download=True,
                               transform=torchvision.transforms.Compose([
                                   torchvision.transforms.ToTensor(),
                                   torchvision.transforms.Normalize(
                                       (0.1307,), (0.3081,))
                               ])),
    batch_size=batch_size_train, shuffle=True)

# Load the test set
test_loader = torch.utils.data.DataLoader(
    torchvision.datasets.MNIST('./data/', train=False, download=True,
                               transform=torchvision.transforms.Compose([
                                   torchvision.transforms.ToTensor(),
                                   torchvision.transforms.Normalize(
                                       (0.1307,), (0.3081,))
                               ])),
    batch_size=batch_size_test, shuffle=True)

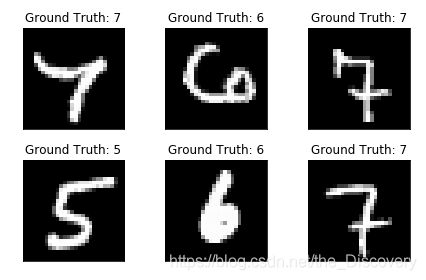
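The sketch below reproduces the two transforms on single 8-bit pixel values in plain Python. It shows what a black background pixel and a white stroke pixel become after normalization. It also shows how per-dataset mean/std statistics are computed, using a tiny made-up "image" rather than real MNIST data. The helper names are illustrative and are not TorchVision functions.

```python
import math

MEAN, STD = 0.1307, 0.3081  # values passed to transforms.Normalize above

def to_tensor_value(pixel):
    """Mimic ToTensor() for an 8-bit grayscale pixel: scale 0..255 to 0.0..1.0."""
    return pixel / 255.0

def normalize(value, mean=MEAN, std=STD):
    """Mimic Normalize(): subtract the mean, then divide by the standard deviation."""
    return (value - mean) / std

background = normalize(to_tensor_value(0))    # black background pixel
stroke = normalize(to_tensor_value(255))      # white stroke pixel
print(round(background, 4), round(stroke, 4))  # -0.4242 2.8215

def mean_std(pixels):
    """Population mean and standard deviation of a flat list of scaled pixels."""
    mu = sum(pixels) / len(pixels)
    var = sum((p - mu) ** 2 for p in pixels) / len(pixels)
    return mu, math.sqrt(var)

# Hypothetical 2x2 "image" (not MNIST data): normalizing with its own
# statistics gives values with mean 0 and standard deviation 1
toy = [to_tensor_value(p) for p in (0, 0, 128, 255)]
mu, sigma = mean_std(toy)
normalized = [normalize(p, mu, sigma) for p in toy]
print(mean_std(normalized))
```

The values 0.1307 and 0.3081 play the role of `mu` and `sigma` here, computed once over all MNIST training pixels. The same statistics are reused for the test set.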

Next, let's look at what the data looks like, using the test set as an example.

examples = enumerate(test_loader)
batch_idx, (example_data, example_targets) = next(examples)
# Target labels: digits from 0 to 9
print(example_targets)
# Batch shape: batch_size=1000, channels=1, height=width=28
print(example_data.shape)

tensor([7, 6, 7, 5, 6, 7, 8, 1, 1, 2, 4, 1, 0, 8, 4, 4, 4, 9, 8, 1, 3, 3, 8, 6,

2, 7, 5, 1, 6, 5, 6, 2, 9, 2, 8, 4, 9, 4, 8, 6, 7, 7, 9, 8, 4, 9, 5, 3,

1, 0, 9, 1, 7, 3, 7, 0, 9, 2, 5, 1, 8, 9, 3, 7, 8, 4, 1, 9, 0, 3, 1, 2,

3, 6, 2, 9, 9, 0, 3, 8, 3, 0, 8, 8, 5, 3, 8, 2, 8, 5, 5, 7, 1, 5, 5, 1,

0, 9, 7, 5, 2, 0, 7, 6, 1, 2, 2, 7, 5, 4, 7, 3, 0, 6, 7, 5, 1, 7, 6, 7,

2, 1, 9, 1, 9, 2, 7, 6, 8, 8, 8, 4, 6, 0, 0, 2, 3, 0, 1, 7, 8, 7, 4, 1,

3, 8, 3, 5, 5, 9, 6, 0, 5, 3, 3, 9, 4, 0, 1, 9, 9, 1, 5, 6, 2, 0, 4, 7,

3, 5, 8, 8, 2, 5, 9, 5, 0, 7, 8, 9, 3, 8, 5, 3, 2, 4, 4, 6, 3, 0, 8, 2,

7, 0, 5, 2, 0, 6, 2, 6, 3, 6, 6, 7, 9, 3, 4, 1, 6, 2, 8, 4, 7, 7, 2, 7,

4, 2, 4, 9, 7, 7, 5, 9, 1, 3, 0, 4, 4, 8, 9, 6, 6, 5, 3, 3, 2, 3, 9, 1,

1, 4, 4, 8, 1, 5, 1, 8, 8, 0, 7, 5, 8, 4, 0, 0, 0, 6, 3, 0, 9, 0, 6, 6,

9, 8, 1, 2, 3, 7, 6, 1, 5, 9, 3, 9, 3, 2, 5, 9, 9, 5, 4, 9, 3, 9, 6, 0,

3, 3, 8, 3, 1, 4, 1, 4, 7, 3, 1, 6, 8, 4, 7, 7, 3, 3, 6, 1, 3, 2, 3, 5,

9, 9, 9, 2, 9, 0, 2, 7, 0, 7, 5, 0, 2, 6, 7, 3, 7, 1, 4, 6, 4, 0, 0, 3,

2, 1, 9, 3, 5, 5, 1, 6, 4, 7, 4, 6, 4, 4, 9, 7, 4, 1, 5, 4, 8, 7, 5, 9,

2, 9, 4, 0, 8, 7, 3, 4, 2, 7, 9, 4, 4, 0, 1, 4, 1, 2, 5, 2, 8, 5, 3, 9,

1, 3, 5, 1, 9, 5, 3, 6, 8, 1, 7, 9, 9, 9, 9, 9, 2, 3, 5, 1, 4, 2, 3, 1,

1, 3, 8, 2, 8, 1, 9, 2, 9, 0, 7, 3, 5, 8, 3, 7, 8, 5, 6, 4, 1, 9, 7, 1,

7, 1, 1, 8, 6, 7, 5, 6, 7, 4, 9, 5, 8, 6, 5, 6, 8, 4, 1, 0, 9, 1, 4, 3,

5, 1, 8, 7, 5, 4, 6, 6, 0, 2, 4, 2, 9, 5, 9, 8, 1, 4, 8, 1, 1, 6, 7, 5,

9, 1, 1, 7, 8, 7, 5, 5, 2, 6, 5, 8, 1, 0, 7, 2, 2, 4, 3, 9, 7, 3, 5, 7,

6, 9, 5, 9, 6, 5, 7, 2, 3, 7, 2, 9, 7, 4, 8, 4, 9, 3, 8, 7, 5, 0, 0, 3,

4, 3, 3, 6, 0, 1, 7, 7, 4, 6, 3, 0, 8, 0, 9, 8, 2, 4, 2, 9, 4, 9, 9, 9,

7, 7, 6, 8, 2, 4, 9, 3, 0, 4, 4, 1, 5, 7, 7, 6, 9, 7, 0, 2, 4, 2, 1, 4,

7, 4, 5, 1, 4, 7, 3, 1, 7, 6, 9, 0, 0, 7, 3, 6, 3, 3, 6, 5, 8, 1, 7, 1,

6, 1, 2, 3, 1, 6, 8, 8, 7, 4, 3, 7, 7, 1, 8, 9, 2, 6, 6, 6, 2, 8, 8, 1,

6, 0, 3, 0, 5, 1, 3, 2, 4, 1, 5, 5, 7, 3, 5, 6, 2, 1, 8, 0, 2, 0, 8, 4,

4, 5, 0, 0, 1, 5, 0, 7, 4, 0, 9, 2, 5, 7, 4, 0, 3, 7, 0, 3, 5, 1, 0, 6,

4, 7, 6, 4, 7, 0, 0, 5, 8, 2, 0, 6, 2, 4, 2, 3, 2, 7, 7, 6, 9, 8, 5, 9,

7, 1, 3, 4, 3, 1, 8, 0, 3, 0, 7, 4, 9, 0, 8, 1, 5, 7, 3, 2, 2, 0, 7, 3,

1, 8, 8, 2, 2, 6, 2, 7, 6, 6, 9, 4, 9, 3, 7, 0, 4, 6, 1, 9, 7, 4, 4, 5,

8, 2, 3, 2, 4, 9, 1, 9, 6, 7, 1, 2, 1, 1, 2, 6, 9, 7, 1, 0, 1, 4, 2, 7,

7, 8, 3, 2, 8, 2, 7, 6, 1, 1, 9, 1, 0, 9, 1, 3, 9, 3, 7, 6, 5, 6, 2, 0,

0, 3, 9, 4, 7, 3, 2, 9, 0, 9, 5, 2, 2, 4, 1, 6, 3, 4, 0, 1, 6, 9, 1, 7,

0, 8, 0, 0, 9, 8, 5, 9, 4, 4, 7, 1, 9, 0, 0, 2, 4, 3, 5, 0, 4, 0, 1, 0,

5, 8, 1, 8, 3, 3, 2, 1, 2, 6, 8, 2, 5, 3, 7, 9, 3, 6, 2, 2, 6, 2, 7, 7,

6, 1, 8, 0, 3, 5, 7, 5, 0, 8, 6, 7, 2, 4, 1, 4, 3, 7, 7, 2, 9, 3, 5, 5,

9, 4, 8, 7, 6, 7, 4, 9, 2, 7, 7, 1, 0, 7, 2, 8, 0, 3, 5, 4, 5, 1, 5, 7,

6, 7, 3, 5, 3, 4, 5, 3, 4, 3, 2, 3, 1, 7, 4, 4, 8, 5, 5, 3, 2, 2, 9, 5,

8, 2, 0, 6, 0, 7, 9, 9, 6, 1, 6, 6, 2, 3, 7, 4, 7, 5, 2, 9, 4, 2, 9, 0,

8, 1, 7, 5, 5, 7, 0, 5, 2, 9, 5, 2, 3, 4, 6, 0, 0, 2, 9, 2, 0, 5, 4, 8,

9, 0, 9, 1, 3, 4, 1, 8, 0, 0, 4, 0, 8, 5, 9, 8])

torch.Size([1000, 1, 28, 28])

Now use matplotlib to display a few of the images.

import matplotlib.pyplot as plt

fig = plt.figure()
for i in range(6):
    plt.subplot(2, 3, i + 1)
    plt.tight_layout()
    plt.imshow(example_data[i][0], cmap='gray', interpolation='none')
    plt.title("Ground Truth: {}".format(example_targets[i]))
    plt.xticks([])
    plt.yticks([])
plt.show()

3. Building the convolutional neural network

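import torch.nn as nn
import torch.nn.functional as F

class Net(nn.Module):
    def __init__(self):
        super(Net, self).__init__()
        # 1 input channel, 10 output channels: 10 kernels of size 1x5x5
        self.conv1 = nn.Conv2d(1, 10, kernel_size=5)
        # 10 input channels, 20 output channels: 20 kernels of size 10x5x5
        self.conv2 = nn.Conv2d(10, 20, kernel_size=5)
        # Channel-wise dropout (not downsampling): during training it randomly zeroes
        # whole feature maps with probability 0.5; max pooling does the downsampling
        self.conv2_drop = nn.Dropout2d()
        # Fully connected layers; 320 = 20 channels x 4 x 4 after two conv + pool
        # stages (28 -> 24 -> 12 -> 8 -> 4)
        self.fc1 = nn.Linear(320, 50)
        self.fc2 = nn.Linear(50, 10)

    def forward(self, x):
        # Max pooling with a 2x2 window halves the height and width
        x = F.relu(F.max_pool2d(self.conv1(x), 2))
        x = F.relu(F.max_pool2d(self.conv2_drop(self.conv2(x)), 2))
        # Flatten [N, 20, 4, 4] into [N, 320]
        x = x.view(-1, 320)
        x = F.relu(self.fc1(x))
        # Standard dropout, active only in training mode
        x = F.dropout(x, training=self.training)
        x = self.fc2(x)
        # Log-probabilities over the 10 classes; omitting dim=1 triggers the
        # UserWarning seen in the training logs
        return F.log_softmax(x, dim=1)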
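The 320 input features of `fc1` come from the spatial arithmetic of the layers above. An unpadded 5x5 convolution with stride 1 shrinks each side by 4, and a 2x2 max pool halves it. The plain-Python sketch below traces a 28x28 input through the network. It also counts each layer's learnable parameters (weights plus one bias per output); the dropout layers have none. The helper names are illustrative, not PyTorch functions.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Output side length of a convolution (the nn.Conv2d formula with dilation=1)."""
    return (size + 2 * padding - kernel) // stride + 1

def pool_out(size, kernel=2):
    """Output side length of F.max_pool2d(x, kernel); stride defaults to the kernel size."""
    return (size - kernel) // kernel + 1

side = 28
side = conv_out(side, 5)   # conv1: 28 -> 24
side = pool_out(side)      # pool:  24 -> 12
side = conv_out(side, 5)   # conv2: 12 -> 8
side = pool_out(side)      # pool:   8 -> 4
flat_features = 20 * side * side  # conv2 produces 20 channels
print(side, flat_features)  # 4 320

def conv_params(in_ch, out_ch, k):
    return out_ch * in_ch * k * k + out_ch  # weights + biases

def linear_params(in_f, out_f):
    return in_f * out_f + out_f  # weights + biases

params = {
    "conv1": conv_params(1, 10, 5),
    "conv2": conv_params(10, 20, 5),
    "fc1": linear_params(flat_features, 50),
    "fc2": linear_params(50, 10),
}
print(params, sum(params.values()))
# {'conv1': 260, 'conv2': 5020, 'fc1': 16050, 'fc2': 510} 21840
```

In PyTorch itself, `sum(p.numel() for p in network.parameters())` should report the same total. Note that `fc1` holds most of the model's parameters.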

4. Initializing the network and optimizer

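import torch.optim as optim

# Use an NVIDIA GPU (CUDA) if one is available, otherwise the CPU
device = torch.device('cuda:0' if torch.cuda.is_available() else 'cpu')
# Move the model to the device before building the optimizer, as the PyTorch docs recommend
network = Net().to(device)
# SGD with momentum: each update also carries over a fraction (here 0.5) of the
# previous update, like a ball gathering speed downhill. This speeds up progress
# along consistent gradient directions, damps oscillations, and can help the
# optimizer roll past shallow local minima
optimizer = optim.SGD(network.parameters(), lr=learning_rate, momentum=momentum)
# Show the model structure
print(network)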

Net(

(conv1): Conv2d(1, 10, kernel_size=(5, 5), stride=(1, 1))

(conv2): Conv2d(10, 20, kernel_size=(5, 5), stride=(1, 1))

(conv2_drop): Dropout2d(p=0.5, inplace=False)

(fc1): Linear(in_features=320, out_features=50, bias=True)

(fc2): Linear(in_features=50, out_features=10, bias=True)

)
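With the default `dampening=0` and `nesterov=False`, PyTorch's SGD keeps a velocity buffer `v` for each parameter. Each step updates `v = momentum * v + grad` and then `p = p - lr * v`. The plain-Python sketch below applies that rule to the toy function f(x) = x², whose gradient is 2x, and compares it with plain SGD. The function, starting point, and learning rate are assumptions chosen only for illustration.

```python
def sgd(x0, lr, steps, momentum=0.0):
    """Minimize f(x) = x**2 with SGD; momentum=0.0 gives plain gradient descent."""
    x, velocity = x0, 0.0
    history = [x]
    for _ in range(steps):
        grad = 2 * x                           # derivative of x**2
        velocity = momentum * velocity + grad  # accumulate past gradients
        x = x - lr * velocity
        history.append(x)
    return history

plain = sgd(1.0, lr=0.1, steps=3)
with_momentum = sgd(1.0, lr=0.1, steps=3, momentum=0.5)
print([round(v, 3) for v in plain])          # [1.0, 0.8, 0.64, 0.512]
print([round(v, 3) for v in with_momentum])  # [1.0, 0.8, 0.54, 0.302]
```

The first step is identical, and the gap grows as the velocity builds up. With a larger learning rate or momentum, the same accumulation can overshoot the minimum and oscillate.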

5. Model training

# Record how the training loss decreases
train_losses = []
train_counter = []
# x-positions (number of training examples seen) at which the test loss is plotted
test_counter = [i * len(train_loader.dataset) for i in range(n_epochs + 1)]

def train(epoch):
    network.train()  # training mode: dropout is active
    for batch_idx, (data, target) in enumerate(train_loader):
        data = data.to(device)
        target = target.to(device)
        optimizer.zero_grad()
        output = network(data)
        loss = F.nll_loss(output, target)
        loss.backward()
        optimizer.step()
        if batch_idx % log_interval == 0:
            print('Train Epoch: {} [{}/{} ({:.0f}%)]\tLoss: {:.6f}'.format(
                epoch, batch_idx * len(data), len(train_loader.dataset),
                100. * batch_idx / len(train_loader), loss.item()))
            train_losses.append(loss.item())
            train_counter.append(
                (batch_idx * batch_size_train) + ((epoch - 1) * len(train_loader.dataset)))
            # Checkpoint the model and optimizer state
            torch.save(network.state_dict(), './model.pth')
            torch.save(optimizer.state_dict(), './optimizer.pth')
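The network returns log-probabilities from `log_softmax`. `F.nll_loss` takes the negative log-probability of the correct class and, with the default `reduction='mean'`, averages it over the batch. Together the two amount to cross-entropy loss. The plain-Python sketch below reimplements both for tiny inputs; the function bodies are illustrative, not PyTorch's code. It also suggests why the first logged loss is about 2.3: an untrained network scores all 10 classes roughly equally, and -ln(1/10) = ln 10 ≈ 2.3026.

```python
import math

def log_softmax(logits):
    """Numerically stable log-softmax over one row of class scores."""
    m = max(logits)
    log_sum = m + math.log(sum(math.exp(z - m) for z in logits))
    return [z - log_sum for z in logits]

def nll_loss(log_probs_batch, targets, reduction="mean"):
    """Negative log-likelihood of each target class, like F.nll_loss."""
    losses = [-row[t] for row, t in zip(log_probs_batch, targets)]
    total = sum(losses)
    return total / len(losses) if reduction == "mean" else total

# Equal scores for all 10 digits -> uniform prediction
uniform = log_softmax([0.0] * 10)
print(round(nll_loss([uniform], [7]), 4))  # 2.3026

# A confident, correct prediction for class 7 gives a much smaller loss
confident = log_softmax([0.0] * 7 + [5.0] + [0.0] * 2)
print(nll_loss([confident], [7]))
```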

train(1)

D:\Anaconda3\lib\site-packages\ipykernel_launcher.py:24: UserWarning: Implicit dimension choice for log_softmax has been deprecated. Change the call to include dim=X as an argument.

Train Epoch: 1 [0/60000 (0%)] Loss: 2.342775

Train Epoch: 1 [640/60000 (1%)] Loss: 2.301787

Train Epoch: 1 [1280/60000 (2%)] Loss: 2.273638

Train Epoch: 1 [1920/60000 (3%)] Loss: 2.215885

Train Epoch: 1 [2560/60000 (4%)] Loss: 2.219636

Train Epoch: 1 [3200/60000 (5%)] Loss: 2.182050

Train Epoch: 1 [3840/60000 (6%)] Loss: 2.154599

Train Epoch: 1 [4480/60000 (7%)] Loss: 2.109921

Train Epoch: 1 [5120/60000 (9%)] Loss: 2.040787

Train Epoch: 1 [5760/60000 (10%)] Loss: 1.918081

Train Epoch: 1 [6400/60000 (11%)] Loss: 1.916582

Train Epoch: 1 [7040/60000 (12%)] Loss: 1.752552

Train Epoch: 1 [7680/60000 (13%)] Loss: 1.654067

Train Epoch: 1 [8320/60000 (14%)] Loss: 1.799136

Train Epoch: 1 [8960/60000 (15%)] Loss: 1.734123

Train Epoch: 1 [9600/60000 (16%)] Loss: 1.306920

Train Epoch: 1 [10240/60000 (17%)] Loss: 1.465809

Train Epoch: 1 [10880/60000 (18%)] Loss: 1.182047

Train Epoch: 1 [11520/60000 (19%)] Loss: 1.411433

Train Epoch: 1 [12160/60000 (20%)] Loss: 1.196440

Train Epoch: 1 [12800/60000 (21%)] Loss: 1.151352

Train Epoch: 1 [13440/60000 (22%)] Loss: 0.999309

Train Epoch: 1 [14080/60000 (23%)] Loss: 1.099866

Train Epoch: 1 [14720/60000 (25%)] Loss: 1.343900

Train Epoch: 1 [15360/60000 (26%)] Loss: 1.035041

Train Epoch: 1 [16000/60000 (27%)] Loss: 1.200880

Train Epoch: 1 [16640/60000 (28%)] Loss: 1.073424

Train Epoch: 1 [17280/60000 (29%)] Loss: 0.992197

Train Epoch: 1 [17920/60000 (30%)] Loss: 0.889907

Train Epoch: 1 [18560/60000 (31%)] Loss: 0.791340

Train Epoch: 1 [19200/60000 (32%)] Loss: 0.929186

Train Epoch: 1 [19840/60000 (33%)] Loss: 0.779179

Train Epoch: 1 [20480/60000 (34%)] Loss: 0.822259

Train Epoch: 1 [21120/60000 (35%)] Loss: 0.800306

Train Epoch: 1 [21760/60000 (36%)] Loss: 1.106641

Train Epoch: 1 [22400/60000 (37%)] Loss: 0.866425

Train Epoch: 1 [23040/60000 (38%)] Loss: 0.680763

Train Epoch: 1 [23680/60000 (39%)] Loss: 0.738366

Train Epoch: 1 [24320/60000 (41%)] Loss: 0.724880

Train Epoch: 1 [24960/60000 (42%)] Loss: 0.657511

Train Epoch: 1 [25600/60000 (43%)] Loss: 0.858225

Train Epoch: 1 [26240/60000 (44%)] Loss: 0.733906

Train Epoch: 1 [26880/60000 (45%)] Loss: 0.921771

Train Epoch: 1 [27520/60000 (46%)] Loss: 0.743885

Train Epoch: 1 [28160/60000 (47%)] Loss: 0.652919

Train Epoch: 1 [28800/60000 (48%)] Loss: 0.791037

Train Epoch: 1 [29440/60000 (49%)] Loss: 0.587879

Train Epoch: 1 [30080/60000 (50%)] Loss: 0.465844

Train Epoch: 1 [30720/60000 (51%)] Loss: 0.340918

Train Epoch: 1 [31360/60000 (52%)] Loss: 0.679137

Train Epoch: 1 [32000/60000 (53%)] Loss: 0.695010

Train Epoch: 1 [32640/60000 (54%)] Loss: 0.616460

Train Epoch: 1 [33280/60000 (55%)] Loss: 0.592633

Train Epoch: 1 [33920/60000 (57%)] Loss: 0.617049

Train Epoch: 1 [34560/60000 (58%)] Loss: 0.550085

Train Epoch: 1 [35200/60000 (59%)] Loss: 0.801612

Train Epoch: 1 [35840/60000 (60%)] Loss: 0.454174

Train Epoch: 1 [36480/60000 (61%)] Loss: 0.588209

Train Epoch: 1 [37120/60000 (62%)] Loss: 0.671104

Train Epoch: 1 [37760/60000 (63%)] Loss: 0.798899

Train Epoch: 1 [38400/60000 (64%)] Loss: 0.637726

Train Epoch: 1 [39040/60000 (65%)] Loss: 0.656208

Train Epoch: 1 [39680/60000 (66%)] Loss: 0.542938

Train Epoch: 1 [40320/60000 (67%)] Loss: 0.541254

Train Epoch: 1 [40960/60000 (68%)] Loss: 0.551119

Train Epoch: 1 [41600/60000 (69%)] Loss: 0.646996

Train Epoch: 1 [42240/60000 (70%)] Loss: 0.639059

Train Epoch: 1 [42880/60000 (71%)] Loss: 0.523870

Train Epoch: 1 [43520/60000 (72%)] Loss: 0.537203

Train Epoch: 1 [44160/60000 (74%)] Loss: 0.279806

Train Epoch: 1 [44800/60000 (75%)] Loss: 0.609367

Train Epoch: 1 [45440/60000 (76%)] Loss: 0.662970

Train Epoch: 1 [46080/60000 (77%)] Loss: 0.637495

Train Epoch: 1 [46720/60000 (78%)] Loss: 0.405338

Train Epoch: 1 [47360/60000 (79%)] Loss: 0.496160

Train Epoch: 1 [48000/60000 (80%)] Loss: 0.428567

Train Epoch: 1 [48640/60000 (81%)] Loss: 0.475516

Train Epoch: 1 [49280/60000 (82%)] Loss: 0.443161

Train Epoch: 1 [49920/60000 (83%)] Loss: 0.474389

Train Epoch: 1 [50560/60000 (84%)] Loss: 0.480269

Train Epoch: 1 [51200/60000 (85%)] Loss: 0.431389

Train Epoch: 1 [51840/60000 (86%)] Loss: 0.422643

Train Epoch: 1 [52480/60000 (87%)] Loss: 0.510923

Train Epoch: 1 [53120/60000 (88%)] Loss: 0.335113

Train Epoch: 1 [53760/60000 (90%)] Loss: 0.722130

Train Epoch: 1 [54400/60000 (91%)] Loss: 0.362313

Train Epoch: 1 [55040/60000 (92%)] Loss: 0.456559

Train Epoch: 1 [55680/60000 (93%)] Loss: 0.491410

Train Epoch: 1 [56320/60000 (94%)] Loss: 0.517430

Train Epoch: 1 [56960/60000 (95%)] Loss: 0.417551

Train Epoch: 1 [57600/60000 (96%)] Loss: 0.554161

Train Epoch: 1 [58240/60000 (97%)] Loss: 0.481269

Train Epoch: 1 [58880/60000 (98%)] Loss: 0.512804

Train Epoch: 1 [59520/60000 (99%)] Loss: 0.526870
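`train()` calls `network.train()` and `test()` calls `network.eval()`, and this matters because of the two dropout layers. In training mode, `F.dropout` zeroes each activation with probability p (0.5 by default). It scales the survivors by 1/(1-p), so the expected value is unchanged. `nn.Dropout2d` does the same but drops whole channels (feature maps) at once. In eval mode both pass the input through unchanged. The plain-Python sketch below imitates that behaviour; the function names are hypothetical, and this is not PyTorch's implementation.

```python
import random

def dropout(values, p=0.5, training=True, rng=random):
    """Element-wise inverted dropout on a flat list of activations."""
    if not training:
        return list(values)  # eval mode: identity
    scale = 1.0 / (1.0 - p)
    return [0.0 if rng.random() < p else v * scale for v in values]

def dropout2d(channels, p=0.5, training=True, rng=random):
    """Channel-wise dropout: each channel (a list of values) is kept or zeroed as a whole."""
    if not training:
        return [list(ch) for ch in channels]
    scale = 1.0 / (1.0 - p)
    out = []
    for ch in channels:
        keep = rng.random() >= p
        out.append([v * scale if keep else 0.0 for v in ch])
    return out

rng = random.Random(0)
feature_maps = [[1.0, 2.0, 3.0, 4.0] for _ in range(6)]  # 6 toy channels
print(dropout2d(feature_maps, rng=rng))     # each channel all zeros or doubled
print(dropout([1.0] * 8, rng=rng))          # individual zeros and 2.0s
print(dropout([1.0] * 8, training=False))   # unchanged in eval mode
```

Because the surviving values are scaled up during training, no rescaling is needed at test time. This is why `network.eval()` is enough before evaluation.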

6. Testing

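# Average test loss from each call to test(); kept at module level so that
# the loss curve can be plotted at the end (a list local to test() would be lost)
test_losses = []

def test():
    network.eval()  # evaluation mode: dropout is disabled
    test_loss = 0
    correct = 0
    with torch.no_grad():  # no gradients are needed for evaluation
        for data, target in test_loader:
            data = data.to(device)
            target = target.to(device)
            output = network(data)
            # Sum the per-sample losses (size_average=False is deprecated)
            test_loss += F.nll_loss(output, target, reduction='sum').item()
            # The predicted class is the index of the largest log-probability
            pred = output.argmax(dim=1, keepdim=True)
            correct += pred.eq(target.view_as(pred)).sum().item()
    test_loss /= len(test_loader.dataset)
    test_losses.append(test_loss)
    print('\nTest set: Avg. loss: {:.4f}, Accuracy: {}/{} ({:.0f}%)\n'.format(
        test_loss, correct, len(test_loader.dataset),
        100. * correct / len(test_loader.dataset)))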
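`test()` sums the per-sample losses and divides once by the size of the whole test set. This gives the exact per-sample average even if the last batch were smaller, which averaging per-batch means would not. Accuracy comes from the arg-max over the class log-probabilities. The plain-Python sketch below repeats both steps on a hypothetical batch of three 3-class predictions. The numbers are made up and are not model output.

```python
import math

def argmax(row):
    """Index of the largest value in a row."""
    return max(range(len(row)), key=row.__getitem__)

def evaluate(batches):
    """batches: list of (log_prob_rows, targets). Returns (avg_loss, correct, total)."""
    loss_sum, correct, total = 0.0, 0, 0
    for rows, targets in batches:
        for row, t in zip(rows, targets):
            loss_sum += -row[t]                # like reduction='sum'
            correct += int(argmax(row) == t)
            total += 1
    return loss_sum / total, correct, total

# Hypothetical log-probabilities; each row's probabilities sum to 1
rows = [
    [math.log(0.7), math.log(0.2), math.log(0.1)],
    [math.log(0.1), math.log(0.8), math.log(0.1)],
    [math.log(0.5), math.log(0.3), math.log(0.2)],
]
targets = [0, 1, 2]  # the third prediction (class 0) is wrong
avg_loss, correct, total = evaluate([(rows, targets)])
print(correct, total)                            # 2 3
print("{:.0f}%".format(100.0 * correct / total))  # 67%
```

The `{:.0f}` format rounds to a whole percent, so 9881/10000 (98.81%) is printed as "99%".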

test()

D:\Anaconda3\lib\site-packages\ipykernel_launcher.py:24: UserWarning: Implicit dimension choice for log_softmax has been deprecated. Change the call to include dim=X as an argument.

Test set: Avg. loss: 0.0402, Accuracy: 9881/10000 (99%)

for epoch in range(1, n_epochs + 1):
    train(epoch)
    test()

D:\Anaconda3\lib\site-packages\ipykernel_launcher.py:24: UserWarning: Implicit dimension choice for log_softmax has been deprecated. Change the call to include dim=X as an argument.

D:\Anaconda3\lib\site-packages\torch\nn\_reduction.py:43: UserWarning: size_average and reduce args will be deprecated, please use reduction='sum' instead.

warnings.warn(warning.format(ret))

Train Epoch: 1 [0/60000 (0%)] Loss: 0.156632

Train Epoch: 1 [640/60000 (1%)] Loss: 0.192664

Train Epoch: 1 [1280/60000 (2%)] Loss: 0.151135

Train Epoch: 1 [1920/60000 (3%)] Loss: 0.103477

Train Epoch: 1 [2560/60000 (4%)] Loss: 0.064784

Train Epoch: 1 [3200/60000 (5%)] Loss: 0.051481

Train Epoch: 1 [3840/60000 (6%)] Loss: 0.441348

Train Epoch: 1 [4480/60000 (7%)] Loss: 0.104150

Train Epoch: 1 [5120/60000 (9%)] Loss: 0.179621

Train Epoch: 1 [5760/60000 (10%)] Loss: 0.041205

Train Epoch: 1 [6400/60000 (11%)] Loss: 0.194008

Train Epoch: 1 [7040/60000 (12%)] Loss: 0.157087

Train Epoch: 1 [7680/60000 (13%)] Loss: 0.124243

Train Epoch: 1 [8320/60000 (14%)] Loss: 0.157707

Train Epoch: 1 [8960/60000 (15%)] Loss: 0.246198

Train Epoch: 1 [9600/60000 (16%)] Loss: 0.069816

Train Epoch: 1 [10240/60000 (17%)] Loss: 0.108673

Train Epoch: 1 [10880/60000 (18%)] Loss: 0.057313

Train Epoch: 1 [11520/60000 (19%)] Loss: 0.048674

Train Epoch: 1 [12160/60000 (20%)] Loss: 0.088004

Train Epoch: 1 [12800/60000 (21%)] Loss: 0.306724

Train Epoch: 1 [13440/60000 (22%)] Loss: 0.038037

Train Epoch: 1 [14080/60000 (23%)] Loss: 0.065409

Train Epoch: 1 [14720/60000 (25%)] Loss: 0.220334

Train Epoch: 1 [15360/60000 (26%)] Loss: 0.275643

Train Epoch: 1 [16000/60000 (27%)] Loss: 0.151600

Train Epoch: 1 [16640/60000 (28%)] Loss: 0.081870

Train Epoch: 1 [17280/60000 (29%)] Loss: 0.322228

Train Epoch: 1 [17920/60000 (30%)] Loss: 0.075777

Train Epoch: 1 [18560/60000 (31%)] Loss: 0.071830

Train Epoch: 1 [19200/60000 (32%)] Loss: 0.154053

Train Epoch: 1 [19840/60000 (33%)] Loss: 0.268226

Train Epoch: 1 [20480/60000 (34%)] Loss: 0.277857

Train Epoch: 1 [21120/60000 (35%)] Loss: 0.128731

Train Epoch: 1 [21760/60000 (36%)] Loss: 0.216666

Train Epoch: 1 [22400/60000 (37%)] Loss: 0.143428

Train Epoch: 1 [23040/60000 (38%)] Loss: 0.049380

Train Epoch: 1 [23680/60000 (39%)] Loss: 0.108051

Train Epoch: 1 [24320/60000 (41%)] Loss: 0.126978

Train Epoch: 1 [24960/60000 (42%)] Loss: 0.054452

Train Epoch: 1 [25600/60000 (43%)] Loss: 0.232893

Train Epoch: 1 [26240/60000 (44%)] Loss: 0.189157

Train Epoch: 1 [26880/60000 (45%)] Loss: 0.118713

Train Epoch: 1 [27520/60000 (46%)] Loss: 0.127154

Train Epoch: 1 [28160/60000 (47%)] Loss: 0.042029

Train Epoch: 1 [28800/60000 (48%)] Loss: 0.067848

Train Epoch: 1 [29440/60000 (49%)] Loss: 0.080663

Train Epoch: 1 [30080/60000 (50%)] Loss: 0.194574

Train Epoch: 1 [30720/60000 (51%)] Loss: 0.085501

Train Epoch: 1 [31360/60000 (52%)] Loss: 0.049935

Train Epoch: 1 [32000/60000 (53%)] Loss: 0.132505

Train Epoch: 1 [32640/60000 (54%)] Loss: 0.227582

Train Epoch: 1 [33280/60000 (55%)] Loss: 0.019724

Train Epoch: 1 [33920/60000 (57%)] Loss: 0.154131

Train Epoch: 1 [34560/60000 (58%)] Loss: 0.304377

Train Epoch: 1 [35200/60000 (59%)] Loss: 0.073455

Train Epoch: 1 [35840/60000 (60%)] Loss: 0.053076

Train Epoch: 1 [36480/60000 (61%)] Loss: 0.099523

Train Epoch: 1 [37120/60000 (62%)] Loss: 0.116375

Train Epoch: 1 [37760/60000 (63%)] Loss: 0.215245

Train Epoch: 1 [38400/60000 (64%)] Loss: 0.146011

Train Epoch: 1 [39040/60000 (65%)] Loss: 0.121127

Train Epoch: 1 [39680/60000 (66%)] Loss: 0.156879

Train Epoch: 1 [40320/60000 (67%)] Loss: 0.047265

Train Epoch: 1 [40960/60000 (68%)] Loss: 0.139341

Train Epoch: 1 [41600/60000 (69%)] Loss: 0.156220

Train Epoch: 1 [42240/60000 (70%)] Loss: 0.116193

Train Epoch: 1 [42880/60000 (71%)] Loss: 0.095791

Train Epoch: 1 [43520/60000 (72%)] Loss: 0.189572

Train Epoch: 1 [44160/60000 (74%)] Loss: 0.074131

Train Epoch: 1 [44800/60000 (75%)] Loss: 0.101932

Train Epoch: 1 [45440/60000 (76%)] Loss: 0.123666

Train Epoch: 1 [46080/60000 (77%)] Loss: 0.153217

Train Epoch: 1 [46720/60000 (78%)] Loss: 0.077803

Train Epoch: 1 [47360/60000 (79%)] Loss: 0.283785

Train Epoch: 1 [48000/60000 (80%)] Loss: 0.212793

Train Epoch: 1 [48640/60000 (81%)] Loss: 0.142060

Train Epoch: 1 [49280/60000 (82%)] Loss: 0.102382

Train Epoch: 1 [49920/60000 (83%)] Loss: 0.221080

Train Epoch: 1 [50560/60000 (84%)] Loss: 0.038571

Train Epoch: 1 [51200/60000 (85%)] Loss: 0.123979

Train Epoch: 1 [51840/60000 (86%)] Loss: 0.146767

Train Epoch: 1 [52480/60000 (87%)] Loss: 0.436692

Train Epoch: 1 [53120/60000 (88%)] Loss: 0.078703

Train Epoch: 1 [53760/60000 (90%)] Loss: 0.083898

Train Epoch: 1 [54400/60000 (91%)] Loss: 0.133949

Train Epoch: 1 [55040/60000 (92%)] Loss: 0.102200

Train Epoch: 1 [55680/60000 (93%)] Loss: 0.133427

Train Epoch: 1 [56320/60000 (94%)] Loss: 0.077202

Train Epoch: 1 [56960/60000 (95%)] Loss: 0.156647

Train Epoch: 1 [57600/60000 (96%)] Loss: 0.037719

Train Epoch: 1 [58240/60000 (97%)] Loss: 0.102780

Train Epoch: 1 [58880/60000 (98%)] Loss: 0.052420

Train Epoch: 1 [59520/60000 (99%)] Loss: 0.076627

Test set: Avg. loss: 0.0421, Accuracy: 9875/10000 (99%)

Train Epoch: 2 [0/60000 (0%)] Loss: 0.081168

Train Epoch: 2 [640/60000 (1%)] Loss: 0.224670

Train Epoch: 2 [1280/60000 (2%)] Loss: 0.129191

Train Epoch: 2 [1920/60000 (3%)] Loss: 0.247358

Train Epoch: 2 [2560/60000 (4%)] Loss: 0.036171

Train Epoch: 2 [3200/60000 (5%)] Loss: 0.077806

Train Epoch: 2 [3840/60000 (6%)] Loss: 0.133592

Train Epoch: 2 [4480/60000 (7%)] Loss: 0.103453

Train Epoch: 2 [5120/60000 (9%)] Loss: 0.102922

Train Epoch: 2 [5760/60000 (10%)] Loss: 0.060962

Train Epoch: 2 [6400/60000 (11%)] Loss: 0.131838

Train Epoch: 2 [7040/60000 (12%)] Loss: 0.189249

Train Epoch: 2 [7680/60000 (13%)] Loss: 0.191448

Train Epoch: 2 [8320/60000 (14%)] Loss: 0.190570

Train Epoch: 2 [8960/60000 (15%)] Loss: 0.259936

Train Epoch: 2 [9600/60000 (16%)] Loss: 0.118716

Train Epoch: 2 [10240/60000 (17%)] Loss: 0.028625

Train Epoch: 2 [10880/60000 (18%)] Loss: 0.044575

Train Epoch: 2 [11520/60000 (19%)] Loss: 0.112197

Train Epoch: 2 [12160/60000 (20%)] Loss: 0.143760

Train Epoch: 2 [12800/60000 (21%)] Loss: 0.213565

Train Epoch: 2 [13440/60000 (22%)] Loss: 0.067672

Train Epoch: 2 [14080/60000 (23%)] Loss: 0.075030

Train Epoch: 2 [14720/60000 (25%)] Loss: 0.173301

Train Epoch: 2 [15360/60000 (26%)] Loss: 0.243791

Train Epoch: 2 [16000/60000 (27%)] Loss: 0.509218

Train Epoch: 2 [16640/60000 (28%)] Loss: 0.065029

Train Epoch: 2 [17280/60000 (29%)] Loss: 0.064137

Train Epoch: 2 [17920/60000 (30%)] Loss: 0.219690

Train Epoch: 2 [18560/60000 (31%)] Loss: 0.068412

Train Epoch: 2 [19200/60000 (32%)] Loss: 0.084879

Train Epoch: 2 [19840/60000 (33%)] Loss: 0.118277

Train Epoch: 2 [20480/60000 (34%)] Loss: 0.178690

Train Epoch: 2 [21120/60000 (35%)] Loss: 0.138507

Train Epoch: 2 [21760/60000 (36%)] Loss: 0.283177

Train Epoch: 2 [22400/60000 (37%)] Loss: 0.111385

Train Epoch: 2 [23040/60000 (38%)] Loss: 0.200256

Train Epoch: 2 [23680/60000 (39%)] Loss: 0.091059

Train Epoch: 2 [24320/60000 (41%)] Loss: 0.039519

Train Epoch: 2 [24960/60000 (42%)] Loss: 0.117363

Train Epoch: 2 [25600/60000 (43%)] Loss: 0.123654

Train Epoch: 2 [26240/60000 (44%)] Loss: 0.224314

Train Epoch: 2 [26880/60000 (45%)] Loss: 0.280589

Train Epoch: 2 [27520/60000 (46%)] Loss: 0.052264

Train Epoch: 2 [28160/60000 (47%)] Loss: 0.063365

Train Epoch: 2 [28800/60000 (48%)] Loss: 0.064455

Train Epoch: 2 [29440/60000 (49%)] Loss: 0.081827

Train Epoch: 2 [30080/60000 (50%)] Loss: 0.089505

Train Epoch: 2 [30720/60000 (51%)] Loss: 0.081288

Train Epoch: 2 [31360/60000 (52%)] Loss: 0.160924

Train Epoch: 2 [32000/60000 (53%)] Loss: 0.204062

Train Epoch: 2 [32640/60000 (54%)] Loss: 0.033370

Train Epoch: 2 [33280/60000 (55%)] Loss: 0.150454

Train Epoch: 2 [33920/60000 (57%)] Loss: 0.092165

Train Epoch: 2 [34560/60000 (58%)] Loss: 0.132951

Train Epoch: 2 [35200/60000 (59%)] Loss: 0.111180

Train Epoch: 2 [35840/60000 (60%)] Loss: 0.170439

Train Epoch: 2 [36480/60000 (61%)] Loss: 0.272381

Train Epoch: 2 [37120/60000 (62%)] Loss: 0.047448

Train Epoch: 2 [37760/60000 (63%)] Loss: 0.205407

Train Epoch: 2 [38400/60000 (64%)] Loss: 0.135557

Train Epoch: 2 [39040/60000 (65%)] Loss: 0.101824

Train Epoch: 2 [39680/60000 (66%)] Loss: 0.044439

Train Epoch: 2 [40320/60000 (67%)] Loss: 0.086475

Train Epoch: 2 [40960/60000 (68%)] Loss: 0.093398

Train Epoch: 2 [41600/60000 (69%)] Loss: 0.139246

Train Epoch: 2 [42240/60000 (70%)] Loss: 0.146613

Train Epoch: 2 [42880/60000 (71%)] Loss: 0.128355

Train Epoch: 2 [43520/60000 (72%)] Loss: 0.061965

Train Epoch: 2 [44160/60000 (74%)] Loss: 0.112888

Train Epoch: 2 [44800/60000 (75%)] Loss: 0.081551

Train Epoch: 2 [45440/60000 (76%)] Loss: 0.170145

Train Epoch: 2 [46080/60000 (77%)] Loss: 0.131782

Train Epoch: 2 [46720/60000 (78%)] Loss: 0.167966

Train Epoch: 2 [47360/60000 (79%)] Loss: 0.054064

Train Epoch: 2 [48000/60000 (80%)] Loss: 0.133177

Train Epoch: 2 [48640/60000 (81%)] Loss: 0.089363

Train Epoch: 2 [49280/60000 (82%)] Loss: 0.097963

Train Epoch: 2 [49920/60000 (83%)] Loss: 0.077683

Train Epoch: 2 [50560/60000 (84%)] Loss: 0.175217

Train Epoch: 2 [51200/60000 (85%)] Loss: 0.126265

Train Epoch: 2 [51840/60000 (86%)] Loss: 0.213446

Train Epoch: 2 [52480/60000 (87%)] Loss: 0.071028

Train Epoch: 2 [53120/60000 (88%)] Loss: 0.045520

Train Epoch: 2 [53760/60000 (90%)] Loss: 0.155648

Train Epoch: 2 [54400/60000 (91%)] Loss: 0.065158

Train Epoch: 2 [55040/60000 (92%)] Loss: 0.145879

Train Epoch: 2 [55680/60000 (93%)] Loss: 0.167453

Train Epoch: 2 [56320/60000 (94%)] Loss: 0.084260

Train Epoch: 2 [56960/60000 (95%)] Loss: 0.325450

Train Epoch: 2 [57600/60000 (96%)] Loss: 0.186134

Train Epoch: 2 [58240/60000 (97%)] Loss: 0.028011

Train Epoch: 2 [58880/60000 (98%)] Loss: 0.058806

Train Epoch: 2 [59520/60000 (99%)] Loss: 0.163296

Test set: Avg. loss: 0.0399, Accuracy: 9878/10000 (99%)

Train Epoch: 3 [0/60000 (0%)] Loss: 0.102998

Train Epoch: 3 [640/60000 (1%)] Loss: 0.066535

Train Epoch: 3 [1280/60000 (2%)] Loss: 0.051959

Train Epoch: 3 [1920/60000 (3%)] Loss: 0.107484

Train Epoch: 3 [2560/60000 (4%)] Loss: 0.087987

Train Epoch: 3 [3200/60000 (5%)] Loss: 0.134019

Train Epoch: 3 [3840/60000 (6%)] Loss: 0.144111

Train Epoch: 3 [4480/60000 (7%)] Loss: 0.198046

Train Epoch: 3 [5120/60000 (9%)] Loss: 0.049358

Train Epoch: 3 [5760/60000 (10%)] Loss: 0.067649

Train Epoch: 3 [6400/60000 (11%)] Loss: 0.177920

Train Epoch: 3 [7040/60000 (12%)] Loss: 0.334545

Train Epoch: 3 [7680/60000 (13%)] Loss: 0.124170

Train Epoch: 3 [8320/60000 (14%)] Loss: 0.152111

Train Epoch: 3 [8960/60000 (15%)] Loss: 0.061891

Train Epoch: 3 [9600/60000 (16%)] Loss: 0.096038

Train Epoch: 3 [10240/60000 (17%)] Loss: 0.128670

Train Epoch: 3 [10880/60000 (18%)] Loss: 0.141426

Train Epoch: 3 [11520/60000 (19%)] Loss: 0.098369

Train Epoch: 3 [12160/60000 (20%)] Loss: 0.076490

Train Epoch: 3 [12800/60000 (21%)] Loss: 0.024070

Train Epoch: 3 [13440/60000 (22%)] Loss: 0.062902

Train Epoch: 3 [14080/60000 (23%)] Loss: 0.111864

Train Epoch: 3 [14720/60000 (25%)] Loss: 0.041554

Train Epoch: 3 [15360/60000 (26%)] Loss: 0.176218

Train Epoch: 3 [16000/60000 (27%)] Loss: 0.091784

Train Epoch: 3 [16640/60000 (28%)] Loss: 0.099284

Train Epoch: 3 [17280/60000 (29%)] Loss: 0.045067

Train Epoch: 3 [17920/60000 (30%)] Loss: 0.163887

Train Epoch: 3 [18560/60000 (31%)] Loss: 0.371201

Train Epoch: 3 [19200/60000 (32%)] Loss: 0.132180

Train Epoch: 3 [19840/60000 (33%)] Loss: 0.298130

Train Epoch: 3 [20480/60000 (34%)] Loss: 0.188392

Train Epoch: 3 [21120/60000 (35%)] Loss: 0.256692

Train Epoch: 3 [21760/60000 (36%)] Loss: 0.333582

Train Epoch: 3 [22400/60000 (37%)] Loss: 0.103843

Train Epoch: 3 [23040/60000 (38%)] Loss: 0.054006

Train Epoch: 3 [23680/60000 (39%)] Loss: 0.174218

Train Epoch: 3 [24320/60000 (41%)] Loss: 0.109797

Train Epoch: 3 [24960/60000 (42%)] Loss: 0.135606

Train Epoch: 3 [25600/60000 (43%)] Loss: 0.208556

Train Epoch: 3 [26240/60000 (44%)] Loss: 0.188399

Train Epoch: 3 [26880/60000 (45%)] Loss: 0.216521

Train Epoch: 3 [27520/60000 (46%)] Loss: 0.240275

Train Epoch: 3 [28160/60000 (47%)] Loss: 0.046752

Train Epoch: 3 [28800/60000 (48%)] Loss: 0.098208

Train Epoch: 3 [29440/60000 (49%)] Loss: 0.145031

Train Epoch: 3 [30080/60000 (50%)] Loss: 0.075472

Train Epoch: 3 [30720/60000 (51%)] Loss: 0.060327

Train Epoch: 3 [31360/60000 (52%)] Loss: 0.147099

Train Epoch: 3 [32000/60000 (53%)] Loss: 0.071874

Train Epoch: 3 [32640/60000 (54%)] Loss: 0.103185

Train Epoch: 3 [33280/60000 (55%)] Loss: 0.042416

Train Epoch: 3 [33920/60000 (57%)] Loss: 0.128803

Train Epoch: 3 [34560/60000 (58%)] Loss: 0.070104

Train Epoch: 3 [35200/60000 (59%)] Loss: 0.185671

Train Epoch: 3 [35840/60000 (60%)] Loss: 0.109853

Train Epoch: 3 [36480/60000 (61%)] Loss: 0.301363

Train Epoch: 3 [37120/60000 (62%)] Loss: 0.012276

Train Epoch: 3 [37760/60000 (63%)] Loss: 0.137707

Train Epoch: 3 [38400/60000 (64%)] Loss: 0.111371

Train Epoch: 3 [39040/60000 (65%)] Loss: 0.192704

Train Epoch: 3 [39680/60000 (66%)] Loss: 0.118988

Train Epoch: 3 [40320/60000 (67%)] Loss: 0.056389

Train Epoch: 3 [40960/60000 (68%)] Loss: 0.069326

Train Epoch: 3 [41600/60000 (69%)] Loss: 0.137394

Train Epoch: 3 [42240/60000 (70%)] Loss: 0.113170

Train Epoch: 3 [42880/60000 (71%)] Loss: 0.024602

Train Epoch: 3 [43520/60000 (72%)] Loss: 0.108824

Train Epoch: 3 [44160/60000 (74%)] Loss: 0.196626

Train Epoch: 3 [44800/60000 (75%)] Loss: 0.149237

Train Epoch: 3 [45440/60000 (76%)] Loss: 0.099488

Train Epoch: 3 [46080/60000 (77%)] Loss: 0.262205

Train Epoch: 3 [46720/60000 (78%)] Loss: 0.107861

Train Epoch: 3 [47360/60000 (79%)] Loss: 0.081145

Train Epoch: 3 [48000/60000 (80%)] Loss: 0.044981

Train Epoch: 3 [48640/60000 (81%)] Loss: 0.092366

Train Epoch: 3 [49280/60000 (82%)] Loss: 0.110018

Train Epoch: 3 [49920/60000 (83%)] Loss: 0.104542

Train Epoch: 3 [50560/60000 (84%)] Loss: 0.183242

Train Epoch: 3 [51200/60000 (85%)] Loss: 0.166517

Train Epoch: 3 [51840/60000 (86%)] Loss: 0.169112

Train Epoch: 3 [52480/60000 (87%)] Loss: 0.097010

Train Epoch: 3 [53120/60000 (88%)] Loss: 0.096146

Train Epoch: 3 [53760/60000 (90%)] Loss: 0.085258

Train Epoch: 3 [54400/60000 (91%)] Loss: 0.321822

Train Epoch: 3 [55040/60000 (92%)] Loss: 0.215173

Train Epoch: 3 [55680/60000 (93%)] Loss: 0.158387

Train Epoch: 3 [56320/60000 (94%)] Loss: 0.048139

Train Epoch: 3 [56960/60000 (95%)] Loss: 0.051018

Train Epoch: 3 [57600/60000 (96%)] Loss: 0.141063

Train Epoch: 3 [58240/60000 (97%)] Loss: 0.069343

Train Epoch: 3 [58880/60000 (98%)] Loss: 0.113296

Train Epoch: 3 [59520/60000 (99%)] Loss: 0.032219

Test set: Avg. loss: 0.0402, Accuracy: 9881/10000 (99%)

7. Model performance evaluation

import matplotlib.pyplot as plt

fig = plt.figure()
plt.plot(train_counter, train_losses, color='blue')
plt.scatter(test_counter, test_losses, color='red')
plt.legend(['Train Loss', 'Test Loss'], loc='upper right')
plt.xlabel('number of training examples seen')
plt.ylabel('negative log likelihood loss')
plt.show()