Machine Learning -- Text Analysis (jieba) (9)

1. jieba

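jieba offers three segmentation modes (`sentence` is the string to segment):

```python
jieba.cut(sentence, cut_all=False)  # precise mode: suited to text analysis (modeling)
jieba.cut(sentence, cut_all=True)   # full mode: emits every word it can find; fast, but does not resolve ambiguity
jieba.cut_for_search(sentence)      # search-engine mode: precise mode plus re-splitting of long words, for better recall in search indexing
```

The `cut` functions return a generator.

```python
jieba.lcut(sentence, cut_all=False)  # precise mode
jieba.lcut(sentence, cut_all=True)   # full mode
jieba.lcut_for_search(sentence)      # search-engine mode
```

The `lcut` functions return a list.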

2. Exercises

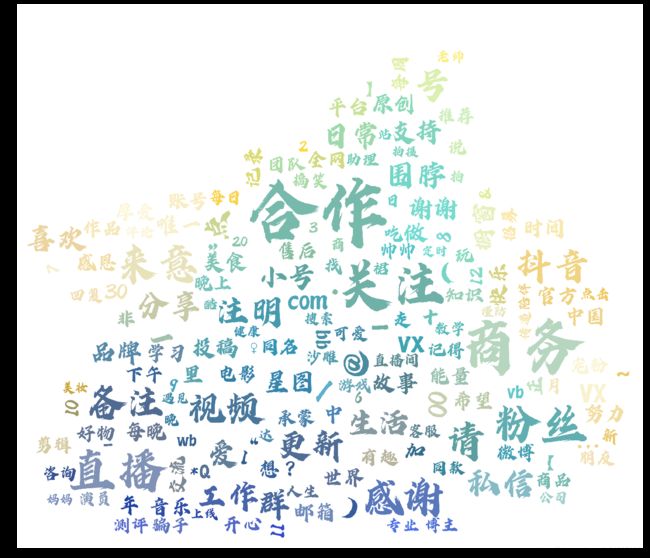

2.1 Making a word cloud

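```python
import wordcloud
import jieba
import numpy as np
import pandas as pd
from PIL import Image
import matplotlib.pyplot as plt

douyin = pd.read_csv('douyin.csv')

# Load the stopword list (one word per line, GBK-encoded).
# on_bad_lines='skip' replaces error_bad_lines=False, which pandas 2.0 removed.
stopwords = pd.read_csv('stopwords.txt', encoding='gbk', header=None,
                        on_bad_lines='skip')[0].values.tolist()
# Extra symbols and emoji that the stopword file does not cover
stopwords1 = ['️', '!', '—', '❤', ',', '•']
```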

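```python
words_kept = []
for signature in douyin['signature'].values:
    # Missing signatures are NaN (a float): skip them
    if not isinstance(signature, str):
        continue
    for word in jieba.lcut(signature, cut_all=False):
        # Drop stopwords, extra symbols and whitespace-only tokens
        if word in stopwords or word in stopwords1 or not word.strip():
            continue
        words_kept.append(word)

# Word frequencies, most frequent first (top 300 words)
importance_words = pd.Series(words_kept).value_counts().head(300)
```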

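```python
bgimg = np.array(Image.open('bgimg.png'))
# Colour each word with the colour of the background image underneath it
genclr = wordcloud.ImageColorGenerator(bgimg)

wc = wordcloud.WordCloud(font_path='./FZZJ-LongYTJW.TTF',  # font (must contain Chinese glyphs)
                         background_color="white",         # background colour
                         max_words=200,                    # maximum number of words shown
                         max_font_size=300,                # largest font size
                         min_font_size=5,                  # smallest font size
                         random_state=42,                  # fixed seed for a reproducible layout
                         mask=bgimg,                       # shape mask: pure-white pixels stay empty
                         color_func=genclr)

# Lay out the words, sized by the word -> frequency mapping in importance_words
wc.generate_from_frequencies(importance_words.to_dict())

plt.figure(figsize=(24, 24))
plt.imshow(wc)
plt.axis('off')
plt.show()
```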
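The heart of this pipeline, dropping stopwords and counting what is left, can be seen without jieba, pandas or wordcloud. The sketch below uses `collections.Counter` in place of `value_counts`; the already-segmented signatures and the stopword sets are made-up sample data standing in for `douyin.csv` and `stopwords.txt`.

```python
from collections import Counter

# Hypothetical signatures, already segmented (as jieba.lcut would return them)
segmented = [
    ["我", "爱", "生活", "❤"],
    ["热爱", "生活", "!", " "],
    ["生活", "就是", "旅行"],
]
stopwords = {"我", "就是"}        # stand-in for the contents of stopwords.txt
extra_symbols = {"❤", "!", "•"}  # stand-in for stopwords1

counts = Counter(
    word
    for words in segmented
    for word in words
    # keep non-blank words that are neither stopwords nor extra symbols
    if word.strip() and word not in stopwords and word not in extra_symbols
)
top = counts.most_common(300)  # same role as value_counts().head(300)
print(top)
```

`dict(top)` is a word-to-frequency mapping of the kind `generate_from_frequencies` accepts.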

2.2 Converting a text collection into term-frequency vectors

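```python
# By default CountVectorizer extracts tokens of two or more word characters
# (token_pattern=r"(?u)\b\w\w+\b"), so words must be separated by spaces or punctuation.
# English text can be converted directly;
# Chinese text must be segmented first (e.g. with jieba) and the words joined with spaces.
from pandas import DataFrame
from sklearn.feature_extraction.text import CountVectorizer

# The corpus is a flat list: one string per document
statements = [
    'hello world hello world',
    'good morning hello',
    'good good hello world',
    'morning hello good hello',
]

# step1. Build the term-frequency vectorizer
cv = CountVectorizer()
# step2. Learn the vocabulary and count words: returns a sparse matrix (documents x vocabulary)
matrix = cv.fit_transform(statements)
# step3. Convert to a dense array: one term-frequency vector per document
count_vector = matrix.toarray()
# step4. The vocabulary learned by cv, in column order
# (get_feature_names() was removed in scikit-learn 1.2)
columns = cv.get_feature_names_out()
# step5. Combine into a table
train = DataFrame(data=count_vector, columns=columns)
```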
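To see what `CountVectorizer` does with its defaults, here is a hand-rolled bag-of-words in plain Python. It is an illustrative sketch, not scikit-learn's code: it mimics only lower-casing, the default token pattern and the alphabetically sorted vocabulary.

```python
import re
from collections import Counter

def count_vectorize(docs, token_pattern=r"(?u)\b\w\w+\b"):
    """Minimal bag-of-words mimicking CountVectorizer's default tokenisation."""
    tokenized = [re.findall(token_pattern, doc.lower()) for doc in docs]
    vocab = sorted({tok for toks in tokenized for tok in toks})  # column order
    rows = []
    for toks in tokenized:
        counts = Counter(toks)
        rows.append([counts[word] for word in vocab])  # 0 for absent words
    return vocab, rows

statements = [
    'hello world hello world',
    'good morning hello',
    'good good hello world',
    'morning hello good hello',
]
vocab, rows = count_vectorize(statements)
print(vocab)  # ['good', 'hello', 'morning', 'world']
for row in rows:
    print(row)
```

Each row is the term-frequency vector of one statement, e.g. `[0, 2, 0, 2]` for `'hello world hello world'`, matching the `train` table above.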

2.3 Converting Chinese text into term-frequency vectors

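```python
import jieba
from pandas import DataFrame
from sklearn.feature_extraction.text import CountVectorizer

statements = [
    "桃花坞里桃花庵",
    "桃花庵里桃花仙",
    "桃花仙人种桃树",
    "再买桃花换酒钱",
    "酒醒只在花前坐",
    "酒醉还来花下眠",
]

# Treat 只, 在 and 还来 as stopwords
stop_words = ['只', '在', '还来']

# Make jieba keep 花前坐 as one word
# (load_userdict expects a dictionary file; add_word registers a single word)
jieba.add_word('花前坐')

# step1: segment each line and join the remaining words with spaces
for index, statement in enumerate(statements):
    words = [word for word in jieba.lcut(statement) if word not in stop_words]
    statements[index] = " ".join(words)

# step2: term-frequency vectors
# Note: the default token_pattern drops one-character tokens;
# pass token_pattern=r"(?u)\b\w+\b" to keep them.
cv = CountVectorizer()
matrix = cv.fit_transform(statements)
DataFrame(data=matrix.toarray(), columns=cv.get_feature_names_out())
```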
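Single-character Chinese words are common, and the default token pattern silently discards them. The sketch below applies both patterns with Python's `re` module to a hypothetical segmented line; passing the second pattern as `CountVectorizer(token_pattern=...)` keeps the one-character words.

```python
import re

default_pattern = r"(?u)\b\w\w+\b"  # CountVectorizer / TfidfVectorizer default
keep_single = r"(?u)\b\w+\b"        # also keeps one-character words

segmented = "桃花坞 里 桃花庵"       # hypothetical jieba output joined with spaces
print(re.findall(default_pattern, segmented))  # ['桃花坞', '桃花庵']
print(re.findall(keep_single, segmented))      # ['桃花坞', '里', '桃花庵']
```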

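```python
# TF-IDF (term frequency-inverse document frequency)
# Weighs how informative each word is: when several words in a text have the same count,
# words that occur in fewer documents get a higher weight.
from sklearn.feature_extraction.text import TfidfVectorizer

tfidf_vc = TfidfVectorizer()
m = tfidf_vc.fit_transform(statements)
# m.toarray()
DataFrame(data=m.toarray(), columns=tfidf_vc.get_feature_names_out())
```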
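With its default settings (`smooth_idf=True`, `norm='l2'`, `sublinear_tf=False`), scikit-learn's `TfidfVectorizer` computes idf(t) = ln((1 + n) / (1 + df(t))) + 1, where n is the number of documents and df(t) is the number of documents containing t, multiplies each raw count by it, and scales every row to unit length. The sketch below re-implements that formula in plain Python on made-up, pre-tokenized documents so the numbers can be checked by hand; it is an illustration, not scikit-learn's code.

```python
import math
from collections import Counter

def tfidf(docs):
    """TF-IDF with scikit-learn's default formula on already-tokenized documents."""
    n = len(docs)
    vocab = sorted({w for doc in docs for w in doc})
    # df: number of documents each word occurs in
    df = {w: sum(1 for doc in docs if w in doc) for w in vocab}
    # smoothed idf: ln((1 + n) / (1 + df)) + 1
    idf = {w: math.log((1 + n) / (1 + df[w])) + 1 for w in vocab}
    rows = []
    for doc in docs:
        counts = Counter(doc)
        row = [counts[w] * idf[w] for w in vocab]  # raw count * idf
        norm = math.sqrt(sum(v * v for v in row))  # L2 normalisation
        rows.append([v / norm if norm else 0.0 for v in row])
    return vocab, rows

docs = [["apple", "banana"], ["apple", "cherry"]]
vocab, rows = tfidf(docs)
print(vocab)                           # ['apple', 'banana', 'cherry']
print([round(v, 4) for v in rows[0]])  # [0.5797, 0.8148, 0.0]
```

`apple` and `banana` both occur once in the first document, but `apple` appears in every document (idf = 1) while `banana` gets ln(3/2) + 1 ≈ 1.405, so after normalisation the rarer word carries more weight.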

2.4 Spam detection

```python
import re
import numpy as np
import pandas as pd
import matplotlib.pyplot as plt
import seaborn as sns
%matplotlib inline
from sklearn.feature_extraction.text import CountVectorizer, TfidfVectorizer

# Load the dataset: tab-separated, column 0 = label (ham/spam), column 1 = message
SMS = pd.read_table('SMSSpamCollection', header=None)

# Split into the document collection and the labels
text = SMS.iloc[:, 1]
target = SMS.iloc[:, 0]

tf = TfidfVectorizer()
matrix = tf.fit_transform(text)

# Dense values of the TF-IDF matrix
matrix.toarray()

# The matrix's features (the vocabulary)
tf.get_feature_names_out()
```

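```python
# Remove words that contain a digit anywhere (phone numbers, prices, codes, ...)
# Returns True if x contains no digit
def not_numeric(x):
    return re.search(r'\d', x) is None

def process_statements(text):
    # Clean every message first by deleting unwanted words (stopword-style filtering)
    for index, statement in text.items():
        # Chinese would need jieba segmentation; English is split on spaces
        word_list = statement.split(" ")
        good_statement = ""
        for word in word_list:
            # True: the word has no digits, keep it
            if not_numeric(word):
                good_statement += word + " "
        # Overwrite the original message in the Series
        text.loc[index] = good_statement
```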
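The same filtering rule fits in one expression. This sketch applies it to a single made-up message; note that it removes any word containing a digit, such as `2nd`, not only words that start with one.

```python
import re

def drop_words_with_digits(statement):
    """Keep only the space-separated words that contain no digit."""
    return " ".join(word for word in statement.split(" ") if re.search(r"\d", word) is None)

msg = "Call 09061701461 now to claim your 2nd prize"  # hypothetical SMS
print(drop_words_with_digits(msg))  # Call now to claim your prize
```

Unlike the loop version, joining with `" ".join` leaves no trailing space; this makes no difference to `TfidfVectorizer`.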

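```python
# Split first, then preprocess: this mirrors real use, where new messages are unseen at training time
from sklearn.model_selection import train_test_split

# Default split (75% training, 25% test); without random_state the split changes between runs
X_train_text, X_test_text, y_train, y_test = train_test_split(text, target)

# Remove digit-containing words from the training set
process_statements(X_train_text)

# TF-IDF transform
tf = TfidfVectorizer()
# After fit, the vocabulary and each word's inverse document frequency are fixed
tf.fit(X_train_text)
matrix = tf.transform(X_train_text)
X_train = matrix.toarray()
X_train = pd.DataFrame(data=X_train, columns=tf.get_feature_names_out())
```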

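```python
from sklearn.naive_bayes import MultinomialNB

# Multinomial naive Bayes
mnb = MultinomialNB()
mnb.fit(X_train, y_train)

# Important: transform the test set with the tf fitted on the training set.
# X_test_text is not digit-filtered; tokens missing from the training vocabulary are ignored by transform.
X_test = tf.transform(X_test_text)
X_test = pd.DataFrame(data=X_test.toarray(), columns=tf.get_feature_names_out())
# Accuracy on the test set
mnb.score(X_test, y_test)
```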
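Multinomial naive Bayes picks the class c that maximises log P(c) + Σ x_w · log θ_cw, where θ_cw = (N_cw + α) / (N_c + α·|V|) is the smoothed share of word w among all word occurrences in class c (scikit-learn's default is α = 1). The from-scratch sketch below uses raw word counts on made-up toy messages; scikit-learn's `MultinomialNB` applies the same formula to the TF-IDF values, treating them as fractional counts.

```python
import math
from collections import Counter, defaultdict

def train_mnb(docs, labels, alpha=1.0):
    """Fit multinomial naive Bayes with additive (Laplace) smoothing on tokenized docs."""
    vocab = {w for doc in docs for w in doc}
    class_counts = Counter(labels)
    word_counts = defaultdict(Counter)  # class -> word -> count
    for doc, label in zip(docs, labels):
        word_counts[label].update(doc)
    log_prior = {c: math.log(k / len(labels)) for c, k in class_counts.items()}
    log_lik = {}
    for c in class_counts:
        total = sum(word_counts[c].values()) + alpha * len(vocab)
        log_lik[c] = {w: math.log((word_counts[c][w] + alpha) / total) for w in vocab}
    return vocab, log_prior, log_lik

def predict_mnb(model, doc):
    vocab, log_prior, log_lik = model
    scores = {
        # each occurrence adds log(theta); words outside the vocabulary are ignored
        c: log_prior[c] + sum(log_lik[c][w] for w in doc if w in vocab)
        for c in log_prior
    }
    return max(scores, key=scores.get)

docs = [["free", "prize", "now"], ["win", "free", "cash"],
        ["lunch", "today"], ["see", "you", "at", "lunch"]]
labels = ["spam", "spam", "ham", "ham"]
model = train_mnb(docs, labels)
print(predict_mnb(model, ["claim", "free", "prize"]))  # spam
print(predict_mnb(model, ["lunch", "tomorrow"]))       # ham
```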

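```python
# Bernoulli naive Bayes
from sklearn.naive_bayes import BernoulliNB

# BernoulliNB binarizes features (binarize=0.0 by default), so it only uses
# whether a word occurs, not its TF-IDF weight
bnb = BernoulliNB()
bnb.fit(X_train, y_train)
bnb.score(X_test, y_test)
```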
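The rule of transforming the test set with the training `tf` object is exactly what a scikit-learn `Pipeline` enforces: `fit` fits the vectorizer and the classifier on training text only, and `predict`/`score` reuse the fitted vocabulary and idf values. A minimal sketch with made-up toy messages standing in for the SMS data; it also passes the sparse TF-IDF matrix straight to `MultinomialNB`, which accepts sparse input, rather than converting it to a dense DataFrame.

```python
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.naive_bayes import MultinomialNB
from sklearn.pipeline import make_pipeline

# Hypothetical toy messages standing in for the SMS data
train_text = [
    "win a free prize now",
    "free cash prize claim now",
    "call now to win cash",
    "are we meeting for lunch today",
    "see you at lunch tomorrow",
    "can you send me the report today",
]
train_labels = ["spam", "spam", "spam", "ham", "ham", "ham"]

# Vectorizer and classifier are fitted together on the training text only
model = make_pipeline(TfidfVectorizer(), MultinomialNB())
model.fit(train_text, train_labels)

print(model.predict(["claim your free prize", "lunch today?"]))  # ['spam' 'ham']
```

With the real data, `model.fit(X_train_text, y_train)` followed by `model.score(X_test_text, y_test)` replaces the manual fit/transform steps.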