Training MMDetection on your own data, using Deformable DETR as the example: annotate with labelme, convert to COCO format, then train, test, and analyze

Contents

1. Annotating with labelme

2. Converting labelme annotations to COCO format

The code:

The resulting COCO format:

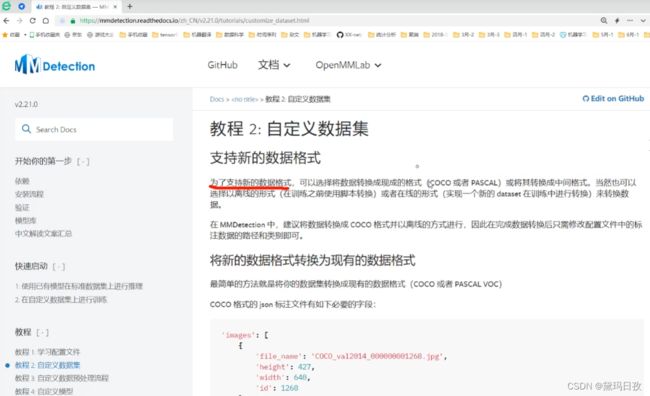

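3. Training MMDetection on your own data, using Deformable DETR as the example

(0) First generate one complete config file; making all changes in a single file is more convenient

(1) In mmdet/datasets/coco.py, change the contents of class CocoDataset to your own (classes and colour palette only)

(2) In mmdet/core/evaluation/classnames.py, change the contents of function coco_classes to your own

(3) The complete config file: set the number of classes to your own and update the data paths

(4) For other problems, search the Q&A section of the official site

4. Model training with train.py (optionally visualize the annotations first with browse_dataset.py)

5. Demo with image_demo.py

6. Model testing with test.py

7. Visual analysis modules: confusion_matrix.py, analyze_results.py, analyze_logs.py, etc.

See the official documentation for the other tools

8. Parameter count and computation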

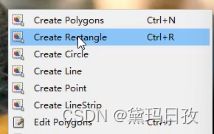

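1. Annotating with labelme

Install labelme:

1. Create a virtual environment with Anaconda

conda create -n labelme python=3.6

conda activate labelme

2. Install the required packages

pip install pyqt5

pip install labelme

3. Start labelme and annotate

With the labelme environment active, type labelme in the cmd window to launch the graphical annotation tool.

Draw each box from its top-left corner to its bottom-right corner and give it a class name; one image can hold several boxes. Save when done, preferably keeping the default file name.

This produces files in the following format: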
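For orientation, each labelme annotation file is a JSON document roughly like the one built below. This is a minimal sketch, assuming rectangle shapes and the field names written by labelme (`shapes`, `label`, `points`, `shape_type`, `imagePath`, `imageData`, `imageHeight`, `imageWidth`); the version string, the file name `19.jpg`, all coordinates, and the helper `summarize_labelme` are made up for illustration.

```python
import json

# A hand-written stand-in for one labelme file (all values are illustrative).
example = {
    "version": "5.0.1",
    "flags": {},
    "shapes": [
        {"label": "mask", "points": [[120.0, 80.0], [200.0, 150.0]],
         "group_id": None, "shape_type": "rectangle", "flags": {}},
        {"label": "person", "points": [[60.0, 40.0], [300.0, 460.0]],
         "group_id": None, "shape_type": "rectangle", "flags": {}},
    ],
    "imagePath": "19.jpg",
    "imageData": None,  # labelme normally stores the base64-encoded image here
    "imageHeight": 480,
    "imageWidth": 640,
}


def summarize_labelme(record):
    """Return the image name and a list of (label, number of points)."""
    shapes = [(s["label"], len(s["points"])) for s in record["shapes"]]
    return record["imagePath"], shapes


# Round-trip through JSON text, as if the record had been read from disk.
name, shapes = summarize_labelme(json.loads(json.dumps(example)))
print(name, shapes)
```

A rectangle is stored as two corner points. The conversion script in the next section reads `imageData`, so keep labelme's default behaviour of embedding the image in the JSON file.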

2. Converting labelme annotations to COCO format

The code:

D:\Code\mmdetection-master\mmdet\data\json2coco.py

The main things to change are the classes, the input and output paths, and the fraction of data held out for validation:

classname_to_id = {
    "mask": 0,  # change to your own classes
    "person": 1
}

labelme_path = "./labelme-data/maskdataset"

saved_coco_path = "./labelme-data/coco-format"

train_path, val_path = train_test_split(json_list_path, test_size=0.1, train_size=0.9)

The full script:

# D:\Code\mmdetection-master\mmdet\data\json2coco.py
import glob
import json
import os

import cv2
import numpy as np
from labelme import utils
from sklearn.model_selection import train_test_split

np.random.seed(41)

classname_to_id = {
    "mask": 0,  # change to your own classes
    "person": 1
}


class Lableme2CoCo:

    def __init__(self):
        self.images = []
        self.annotations = []
        self.categories = []
        self.img_id = 0
        self.ann_id = 0

    def save_coco_json(self, instance, save_path):
        # indent=2 gives a more readable file
        with open(save_path, 'w', encoding='utf-8') as f:
            json.dump(instance, f, ensure_ascii=False, indent=2)

    # Build the COCO dict from a list of labelme json files
    def to_coco(self, json_path_list):
        self._init_categories()
        for json_path in json_path_list:
            obj = self.read_jsonfile(json_path)
            self.images.append(self._image(obj, json_path))
            shapes = obj['shapes']
            for shape in shapes:
                annotation = self._annotation(shape)
                self.annotations.append(annotation)
                self.ann_id += 1
            self.img_id += 1
        instance = {}
        instance['info'] = 'spytensor created'
        instance['license'] = ['license']
        instance['images'] = self.images
        instance['annotations'] = self.annotations
        instance['categories'] = self.categories
        return instance

    # Build the categories
    def _init_categories(self):
        for k, v in classname_to_id.items():
            category = {}
            category['id'] = v
            category['name'] = k
            self.categories.append(category)

    # Build the COCO "image" entry
    def _image(self, obj, path):
        image = {}
        # Needs the image data that labelme embeds in the json file
        img_x = utils.img_b64_to_arr(obj['imageData'])
        h, w = img_x.shape[:2]  # [:2] also works for grayscale images
        image['height'] = h
        image['width'] = w
        image['id'] = self.img_id
        image['file_name'] = os.path.basename(path).replace(".json", ".jpg")
        return image

    # Build the COCO "annotation" entry
    def _annotation(self, shape):
        label = shape['label']
        points = shape['points']
        x, y, w, h = self._get_box(points)
        annotation = {}
        annotation['id'] = self.ann_id
        annotation['image_id'] = self.img_id
        annotation['category_id'] = int(classname_to_id[label])
        annotation['segmentation'] = [np.asarray(points).flatten().tolist()]
        annotation['bbox'] = [x, y, w, h]
        annotation['iscrowd'] = 0
        # Box area instead of the constant 1.0, so that COCO's
        # small/medium/large metrics are meaningful
        annotation['area'] = w * h
        return annotation

    # Read a json file and return the parsed object
    def read_jsonfile(self, path):
        with open(path, "r", encoding='utf-8') as f:
            return json.load(f)

    # COCO bbox format: [x1, y1, w, h]
    def _get_box(self, points):
        min_x = min_y = np.inf
        max_x = max_y = 0
        for x, y in points:
            min_x = min(min_x, x)
            min_y = min(min_y, y)
            max_x = max(max_x, x)
            max_y = max(max_y, y)
        return [min_x, min_y, max_x - min_x, max_y - min_y]


def export_images(json_paths, out_dir):
    # Write the .jpg that sits next to each json file into out_dir
    for file in json_paths:
        img_name = os.path.splitext(file)[0] + '.jpg'
        temp_img = cv2.imread(img_name)
        if temp_img is None:
            print('Wrong Image:', img_name)
            continue
        out_name = os.path.join(out_dir, os.path.basename(img_name))
        cv2.imwrite(out_name, temp_img)
        print(img_name + ' -->', out_name)


# If training fails with "Index put requires the source and destination dtypes
# match, got Long for the destination and Int for the source",
# see: https://github.com/open-mmlab/mmdetection/issues/6706
if __name__ == '__main__':
    labelme_path = "./labelme-data/maskdataset"
    saved_coco_path = "./labelme-data/coco-format"
    print('reading...')
    # Create the output folders. "coco" is appended directly to
    # saved_coco_path, so the output root is ./labelme-data/coco-formatcoco
    os.makedirs("%scoco/annotations/" % saved_coco_path, exist_ok=True)
    os.makedirs("%scoco/images/train2017" % saved_coco_path, exist_ok=True)
    os.makedirs("%scoco/images/val2017" % saved_coco_path, exist_ok=True)
    # Collect all json files under labelme_path
    print(labelme_path + "/*.json")
    json_list_path = glob.glob(labelme_path + "/*.json")
    print('json_list_path: ', len(json_list_path))
    # Split the data; the images go to train2017 and val2017 below
    train_path, val_path = train_test_split(json_list_path, test_size=0.1, train_size=0.9)
    print("train_n:", len(train_path), 'val_n:', len(val_path))

    # Convert the training set to COCO json
    l2c_train = Lableme2CoCo()
    train_instance = l2c_train.to_coco(train_path)
    l2c_train.save_coco_json(train_instance, '%scoco/annotations/instances_train2017.json' % saved_coco_path)
    export_images(train_path, "%scoco/images/train2017" % saved_coco_path)

    # Convert the validation set to COCO json
    export_images(val_path, "%scoco/images/val2017" % saved_coco_path)
    l2c_val = Lableme2CoCo()
    val_instance = l2c_val.to_coco(val_path)
    l2c_val.save_coco_json(val_instance, '%scoco/annotations/instances_val2017.json' % saved_coco_path)
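The box conversion done by `_get_box` can be checked in isolation. The sketch below is a standalone restatement, not part of the script (the function name `points_to_coco_bbox` is hypothetical): it takes labelme corner points in any order and returns COCO's `[x, y, width, height]`.

```python
def points_to_coco_bbox(points):
    """Convert a list of [x, y] points to a COCO box [x_min, y_min, w, h]."""
    xs = [p[0] for p in points]
    ys = [p[1] for p in points]
    x_min, y_min = min(xs), min(ys)
    return [x_min, y_min, max(xs) - x_min, max(ys) - y_min]


# Corners given bottom-right first; the result is the same box either way.
box = points_to_coco_bbox([[200.0, 150.0], [120.0, 80.0]])
print(box)  # [120.0, 80.0, 80.0, 70.0]
```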

The resulting COCO-format data looks as follows:
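Before training, it can help to confirm that a generated annotation file is internally consistent. The following is a minimal sketch under the assumption that the file has the three top-level lists `images`, `annotations`, and `categories` produced by the script above; the helper name `check_coco` and the tiny in-memory dataset are illustrative only.

```python
from collections import Counter


def check_coco(coco):
    """Count boxes per category name; raise ValueError on inconsistent data."""
    image_ids = {img["id"] for img in coco["images"]}
    names = {cat["id"]: cat["name"] for cat in coco["categories"]}
    counts = Counter()
    for ann in coco["annotations"]:
        if ann["image_id"] not in image_ids:
            raise ValueError("annotation %s: unknown image_id" % ann["id"])
        if ann["category_id"] not in names:
            raise ValueError("annotation %s: unknown category_id" % ann["id"])
        _, _, w, h = ann["bbox"]
        if w <= 0 or h <= 0:
            raise ValueError("annotation %s: empty box" % ann["id"])
        counts[names[ann["category_id"]]] += 1
    return dict(counts)


# A tiny stand-in for instances_train2017.json.
tiny = {
    "images": [{"id": 0, "file_name": "19.jpg", "height": 480, "width": 640}],
    "categories": [{"id": 0, "name": "mask"}, {"id": 1, "name": "person"}],
    "annotations": [
        {"id": 0, "image_id": 0, "category_id": 0, "bbox": [120.0, 80.0, 80.0, 70.0]},
        {"id": 1, "image_id": 0, "category_id": 1, "bbox": [60.0, 40.0, 240.0, 420.0]},
    ],
}
result = check_coco(tiny)
print(result)
```

For a real file, load it with `json.load` and pass the resulting dict to the function.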

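3. Training MMDetection on your own data, using Deformable DETR as the example

Deformable DETR: MMCV needs to be version 1.4.2. The network uses a lot of GPU memory and trains slowly.

(0) First generate one complete config file; making all changes in a single file is more convenient. For the method, see the CSDN blog post by 黛玛日孜, "open-mmlab: installing mmclassification and using your own dataset on Windows".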
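One possible way to produce such a flattened config, which may differ from the method in the referenced post, is to let MMCV resolve the `_base_` inheritance and write the result back out. This is a sketch assuming MMCV 1.x, where `mmcv.Config.fromfile` and `Config.dump` are available; the wrapper name `dump_full_config` is hypothetical.

```python
def dump_full_config(src, dst):
    """Load config `src`, resolve its `_base_` files, and write one flat file."""
    from mmcv import Config  # imported lazily; assumes MMCV 1.x is installed

    cfg = Config.fromfile(src)
    cfg.dump(dst)
    return dst


# Example call (paths relative to the mmdetection repository root):
# dump_full_config(
#     "configs/deformable_detr/deformable_detr_r50_16x2_50e_coco.py",
#     "configs/deformable_detr/my_deformable_detr_r50_16x2_50e_coco.py",
# )
```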

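(1) In mmdet/datasets/coco.py, change the contents of class CocoDataset to your own; only the classes and the colour palette need to change:

CLASSES = ('mask', 'person')

PALETTE = [(220, 20, 60), (119, 11, 32)]

(2) In mmdet/core/evaluation/classnames.py, change the return value of function coco_classes to your own:

return ['mask', 'person']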
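As an alternative to editing the library source, mmdetection 2.x dataset configs accept a `classes` entry. The fragment below is a sketch of that option, not what this post does; it would need to be merged into the `data` section of the complete config, and whether it fully replaces steps (1) and (2) in a given version should be verified.

```python
# Config fragment in mmdetection 2.x style; merge into the full config file.
classes = ('mask', 'person')

data = dict(
    train=dict(type='CocoDataset', classes=classes),
    val=dict(type='CocoDataset', classes=classes),
    test=dict(type='CocoDataset', classes=classes),
)
```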

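(3) The complete config file. In the config under configs, set the number of classes to your own and update the data paths,

for example in D:\Code\mmdetection-master\configs\deformable_detr\my_deformable_detr_r50_16x2_50e_coco.py:

# set num_classes to your own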

# D:\Code\mmdetection-master\configs\deformable_detr\my_deformable_detr_r50_16x2_50e_coco.py
dataset_type = 'CocoDataset'  # not read; the absolute paths set further down are used
data_root = 'data/coco/'  # not read; the absolute paths set further down are used
# In mmdet/core/evaluation/classnames.py, change coco_classes to your own classes
# In mmdet/datasets/coco.py, change CocoDataset to your own classes
# Set num_classes to your own
img_norm_cfg = dict(
    mean=[123.675, 116.28, 103.53], std=[58.395, 57.12, 57.375], to_rgb=True)
train_pipeline = [
    dict(type='LoadImageFromFile'),
    dict(type='LoadAnnotations', with_bbox=True),
    dict(type='RandomFlip', flip_ratio=0.5),
    dict(
        type='AutoAugment',  # automatic augmentation: one of the policies below is picked at random
        policies=[
            [{
                'type': 'Resize',
                'img_scale': [(480, 1333), (512, 1333), (544, 1333), (576, 1333),
                              (608, 1333), (640, 1333), (672, 1333), (704, 1333),
                              (736, 1333), (768, 1333), (800, 1333)],
                'multiscale_mode': 'value',  # multi-scale training: pick one of the scales above at random
                # True: scale h and w by the same factor so the image fits the
                # chosen scale (aspect ratio kept); False: resize directly to it
                'keep_ratio': True
            }],
            [{
                'type': 'Resize',
                'img_scale': [(400, 4200), (500, 4200), (600, 4200)],
                'multiscale_mode': 'value',
                'keep_ratio': True
            }, {
                'type': 'RandomCrop',
                'crop_type': 'absolute_range',
                'crop_size': (384, 600),
                'allow_negative_crop': True
            }, {
                'type': 'Resize',
                'img_scale': [(480, 1333), (512, 1333), (544, 1333),
                              (576, 1333), (608, 1333), (640, 1333),
                              (672, 1333), (704, 1333), (736, 1333),
                              (768, 1333), (800, 1333)],
                'multiscale_mode': 'value',
                # lets this second Resize overwrite the scale set by the first
                # one; without it an error is raised
                'override': True,
                'keep_ratio': True
            }]
        ]),
    dict(
        type='Normalize',
        mean=[123.675, 116.28, 103.53],
        std=[58.395, 57.12, 57.375],
        to_rgb=True),
    dict(type='Pad', size_divisor=1),
    dict(type='DefaultFormatBundle'),
    dict(type='Collect', keys=['img', 'gt_bboxes', 'gt_labels'])
]

test_pipeline = [
    dict(type='LoadImageFromFile'),
    dict(
        type='MultiScaleFlipAug',
        img_scale=(1333, 800),  # a mid-range value of the training scales tends to work well
        flip=False,
        transforms=[
            dict(type='Resize', keep_ratio=True),
            dict(type='RandomFlip'),
            dict(
                type='Normalize',
                mean=[123.675, 116.28, 103.53],
                std=[58.395, 57.12, 57.375],
                to_rgb=True),
            dict(type='Pad', size_divisor=1),
            dict(type='ImageToTensor', keys=['img']),
            dict(type='Collect', keys=['img'])
        ])
]

data = dict(
    samples_per_gpu=1,  # use 1 with 8 GB of GPU memory or less
    workers_per_gpu=1,
    train=dict(
        type='CocoDataset',
        # on Windows, prefer absolute paths written with doubled backslashes
        ann_file='D:\\Code\\mmdetection-master\\mmdet\\data\\labelme-data\\coco-formatcoco\\annotations\\instances_train2017.json',
        img_prefix='D:\\Code\\mmdetection-master\\mmdet\\data\\labelme-data\\coco-formatcoco\\images\\train2017',
        pipeline=[
            dict(type='LoadImageFromFile'),
            dict(type='LoadAnnotations', with_bbox=True),
            dict(type='RandomFlip', flip_ratio=0.5),
            dict(
                type='AutoAugment',
                policies=[
                    [{
                        'type': 'Resize',
                        'img_scale': [(480, 1333), (512, 1333), (544, 1333),
                                      (576, 1333), (608, 1333), (640, 1333),
                                      (672, 1333), (704, 1333), (736, 1333),
                                      (768, 1333), (800, 1333)],
                        'multiscale_mode': 'value',
                        'keep_ratio': True
                    }],
                    [{
                        'type': 'Resize',
                        'img_scale': [(400, 4200), (500, 4200), (600, 4200)],
                        'multiscale_mode': 'value',
                        'keep_ratio': True
                    }, {
                        'type': 'RandomCrop',
                        'crop_type': 'absolute_range',
                        'crop_size': (384, 600),
                        'allow_negative_crop': True
                    }, {
                        'type': 'Resize',
                        'img_scale': [(480, 1333), (512, 1333),
                                      (544, 1333), (576, 1333),
                                      (608, 1333), (640, 1333),
                                      (672, 1333), (704, 1333),
                                      (736, 1333), (768, 1333),
                                      (800, 1333)],
                        'multiscale_mode': 'value',
                        'override': True,
                        'keep_ratio': True
                    }]
                ]),
            dict(
                type='Normalize',
                mean=[123.675, 116.28, 103.53],
                std=[58.395, 57.12, 57.375],
                to_rgb=True),
            dict(type='Pad', size_divisor=1),
            dict(type='DefaultFormatBundle'),
            dict(type='Collect', keys=['img', 'gt_bboxes', 'gt_labels'])
        ],
        filter_empty_gt=False),
    val=dict(
        type='CocoDataset',
        ann_file='D:\\Code\\mmdetection-master\\mmdet\\data\\labelme-data\\coco-formatcoco\\annotations\\instances_val2017.json',
        img_prefix='D:\\Code\\mmdetection-master\\mmdet\\data\\labelme-data\\coco-formatcoco\\images\\val2017',
        pipeline=[
            dict(type='LoadImageFromFile'),
            dict(
                type='MultiScaleFlipAug',
                img_scale=(1333, 800),
                flip=False,
                transforms=[
                    dict(type='Resize', keep_ratio=True),
                    dict(type='RandomFlip'),
                    dict(
                        type='Normalize',
                        mean=[123.675, 116.28, 103.53],
                        std=[58.395, 57.12, 57.375],
                        to_rgb=True),
                    dict(type='Pad', size_divisor=1),
                    dict(type='ImageToTensor', keys=['img']),
                    dict(type='Collect', keys=['img'])
                ])
        ]),
    test=dict(
        type='CocoDataset',
        ann_file='D:\\Code\\mmdetection-master\\mmdet\\data\\labelme-data\\coco-formatcoco\\annotations\\instances_val2017.json',
        img_prefix='D:\\Code\\mmdetection-master\\mmdet\\data\\labelme-data\\coco-formatcoco\\images\\val2017',
        pipeline=[
            dict(type='LoadImageFromFile'),
            dict(
                type='MultiScaleFlipAug',
                img_scale=(1333, 800),
                flip=False,
                transforms=[
                    dict(type='Resize', keep_ratio=True),
                    dict(type='RandomFlip'),
                    dict(
                        type='Normalize',
                        mean=[123.675, 116.28, 103.53],
                        std=[58.395, 57.12, 57.375],
                        to_rgb=True),
                    dict(type='Pad', size_divisor=1),
                    dict(type='ImageToTensor', keys=['img']),
                    dict(type='Collect', keys=['img'])
                ])
        ]))

evaluation = dict(interval=10, metric='bbox')  # evaluate every 10 epochs
checkpoint_config = dict(interval=50)  # save a checkpoint every 50 epochs
log_config = dict(interval=10, hooks=[dict(type='TextLoggerHook')])  # print a log line every 10 iterations
custom_hooks = [dict(type='NumClassCheckHook')]
dist_params = dict(backend='nccl')
log_level = 'INFO'
load_from = './work_dirs/deformable_detr_r50_16x2_50e_coco/deformable_detr_r50_16x2_50e_coco_20210419_220030-a12b9512.pth'
# pretrained checkpoint, from https://github.com/open-mmlab/mmdetection/tree/master/configs/deformable_detr
resume_from = None
workflow = [('train', 1)]
opencv_num_threads = 0
mp_start_method = 'fork'

model = dict(
    type='DeformableDETR',
    backbone=dict(
        type='ResNet',
        depth=50,
        num_stages=4,
        out_indices=(1, 2, 3),
        frozen_stages=1,
        norm_cfg=dict(type='BN', requires_grad=False),
        norm_eval=True,
        style='pytorch',
        init_cfg=dict(type='Pretrained', checkpoint='torchvision://resnet50')),
    neck=dict(
        type='ChannelMapper',
        in_channels=[512, 1024, 2048],
        kernel_size=1,
        out_channels=256,
        act_cfg=None,
        norm_cfg=dict(type='GN', num_groups=32),
        num_outs=4),
    bbox_head=dict(
        type='DeformableDETRHead',
        num_query=300,
        num_classes=2,
        in_channels=2048,
        sync_cls_avg_factor=True,
        as_two_stage=False,
        transformer=dict(
            type='DeformableDetrTransformer',
            encoder=dict(
                type='DetrTransformerEncoder',
                num_layers=6,
                transformerlayers=dict(
                    type='BaseTransformerLayer',
                    attn_cfgs=dict(
                        type='MultiScaleDeformableAttention', embed_dims=256),
                    feedforward_channels=1024,
                    ffn_dropout=0.1,
                    operation_order=('self_attn', 'norm', 'ffn', 'norm'))),
            decoder=dict(
                type='DeformableDetrTransformerDecoder',
                num_layers=6,
                return_intermediate=True,
                transformerlayers=dict(
                    type='DetrTransformerDecoderLayer',
                    attn_cfgs=[
                        dict(
                            type='MultiheadAttention',
                            embed_dims=256,
                            num_heads=8,
                            dropout=0.1),
                        dict(
                            type='MultiScaleDeformableAttention',
                            embed_dims=256)
                    ],
                    feedforward_channels=1024,
                    ffn_dropout=0.1,
                    operation_order=('self_attn', 'norm', 'cross_attn', 'norm',
                                     'ffn', 'norm')))),
        positional_encoding=dict(
            type='SinePositionalEncoding',
            num_feats=128,
            normalize=True,
            offset=-0.5),
        loss_cls=dict(
            type='FocalLoss',
            use_sigmoid=True,
            gamma=2.0,
            alpha=0.25,
            loss_weight=2.0),
        loss_bbox=dict(type='L1Loss', loss_weight=5.0),
        loss_iou=dict(type='GIoULoss', loss_weight=2.0)),
    train_cfg=dict(
        assigner=dict(
            type='HungarianAssigner',
            cls_cost=dict(type='FocalLossCost', weight=2.0),
            reg_cost=dict(type='BBoxL1Cost', weight=5.0, box_format='xywh'),
            iou_cost=dict(type='IoUCost', iou_mode='giou', weight=2.0))),
    test_cfg=dict(max_per_img=100))

optimizer = dict(
    type='AdamW',
    lr=0.0002,
    weight_decay=0.0001,
    paramwise_cfg=dict(
        custom_keys=dict(
            backbone=dict(lr_mult=0.1),
            sampling_offsets=dict(lr_mult=0.1),
            reference_points=dict(lr_mult=0.1))))
optimizer_config = dict(grad_clip=dict(max_norm=0.1, norm_type=2))
lr_config = dict(policy='step', step=[40])
runner = dict(type='EpochBasedRunner', max_epochs=50)
work_dir = './work_dirs/deformable_detr_r50_16x2_50e_coco'
auto_resume = False
gpu_ids = [0]
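Two pieces of arithmetic are implied by the optimizer settings above. The sketch below illustrates them under stated assumptions: the step policy multiplies the learning rate by a factor `gamma` at each listed epoch (0.1 is assumed here as the default), and the `16x2` in the config name is read as 16 GPUs with 2 images each, that is, a reference batch size of 32. The linear scaling shown is a common heuristic rather than something this config applies by itself, and both function names are hypothetical.

```python
def step_lr(base_lr, epoch, steps=(40,), gamma=0.1):
    """Learning rate in `epoch` (0-based) when it drops by `gamma` at each step."""
    drops = sum(1 for s in steps if epoch >= s)
    return base_lr * gamma ** drops


def linear_scaled_lr(base_lr, base_batch, batch):
    """Scale the learning rate in proportion to the total batch size."""
    return base_lr * batch / base_batch


print(step_lr(2e-4, 39))              # before the step at epoch 40
print(step_lr(2e-4, 40))              # after the step
print(linear_scaled_lr(2e-4, 32, 1))  # 1 GPU x 1 image instead of 16 x 2
```

With `samples_per_gpu=1` on a single GPU the effective batch is 32 times smaller than the reference setting, so if training is unstable, a smaller `lr` than 0.0002 is one thing to try.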

(4) For other problems, search the Q&A (issues) section of the official site.

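4. Model training with train.py (optionally visualize the annotations first with browse_dataset.py)

Optionally, first check that the data is annotated correctly. The annotation visualization script is D:\Code\mmdetection-master\tools\misc\browse_dataset.py, with the following arguments:

D:\\Code\\mmdetection-master\\configs\\deformable_detr\\my_deformable_detr_r50_16x2_50e_coco.py

--output-dir D:\\Code\\mmdetection-master\\mmdet\\data\\labelme-data\\coco-formatcoco\\sinpoec-label ## output directory

Then train with D:\Code\mmdetection-master\tools\train.py, passing the following config file as the argument. Remember to use a pretrained model.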

D:\Code\mmdetection-master\configs\deformable_detr\my_deformable_detr_r50_16x2_50e_coco.py

5. Demo with image_demo.py
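For reference, the demo script in mmdetection 2.x is a thin wrapper around three functions in `mmdet.apis`. The following is a minimal sketch of the same flow, assuming mmdetection 2.x is installed; the wrapper name `run_demo` and its default values are hypothetical.

```python
def run_demo(config, checkpoint, image, score_thr=0.3, device="cuda:0"):
    """Build the detector, run it on one image, and display the result."""
    # Imported lazily so the module can be loaded without mmdetection present.
    from mmdet.apis import (inference_detector, init_detector,
                            show_result_pyplot)

    model = init_detector(config, checkpoint, device=device)
    result = inference_detector(model, image)
    show_result_pyplot(model, image, result, score_thr=score_thr)
    return result
```

It takes the same three inputs as the script: the config file, the trained checkpoint, and an image path.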

D:\Code\mmdetection-master\demo\image_demo.py

Arguments:

D:\\Code\\mmdetection-master\\mmdet\\data\\labelme-data\\coco-formatcoco\\images\\val2017\\19.jpg

D:\\Code\\mmdetection-master\\configs\\deformable_detr\\my_deformable_detr_r50_16x2_50e_coco.py

D:\\Code\\mmdetection-master\\tools\\work_dirs\\deformable_detr_r50_16x2_50e_coco\\latest.pth

6. Model testing with test.py

D:\Code\mmdetection-master\tools\test.py

Arguments:

D:\\Code\\mmdetection-master\\configs\\deformable_detr\\my_deformable_detr_r50_16x2_50e_coco.py

D:\\Code\\mmdetection-master\\tools\\work_dirs\\deformable_detr_r50_16x2_50e_coco\\latest.pth

--eval bbox ## choose according to the data format: "bbox", "segm", "proposal" for COCO, and "mAP", "recall" for PASCAL VOC

--out ./work_dirs/deformable_detr_r50_16x2_50e_coco/test.pkl ## needed later for the analysis tools

--show

7. Visual analysis modules: confusion_matrix.py, analyze_results.py, analyze_logs.py, etc.

D:\Code\mmdetection-master\tools\analysis_tools\analyze_logs.py

D:\Code\mmdetection-master\tools\analysis_tools\analyze_results.py

D:\Code\mmdetection-master\tools\analysis_tools\confusion_matrix.py

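Taking confusion_matrix.py as the example (it handles multiple classes), the arguments are:

D:\\Code\\mmdetection-master\\configs\\deformable_detr\\my_deformable_detr_r50_16x2_50e_coco.py

../work_dirs/deformable_detr_r50_16x2_50e_coco/test.pkl

../work_dirs/deformable_detr_r50_16x2_50e_coco/ ## directory where the confusion matrix is saved

--show

See the official documentation for the other tools.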
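To make the log analysis concrete: in mmdetection 2.x, training writes a `.log.json` file to the work directory with one JSON record per line, and analyze_logs.py works from that file. The sketch below shows the kind of aggregation involved, using made-up records; the helper name is hypothetical, and the keys `mode`, `epoch`, and `loss` are assumed to match what the text logger writes.

```python
import json
from collections import defaultdict


def mean_train_loss_per_epoch(lines):
    """Average the `loss` value of all training records in each epoch."""
    sums = defaultdict(float)
    counts = defaultdict(int)
    for line in lines:
        line = line.strip()
        if not line:
            continue
        rec = json.loads(line)
        # Skip the environment header and validation records.
        if rec.get("mode") != "train" or "epoch" not in rec or "loss" not in rec:
            continue
        sums[rec["epoch"]] += rec["loss"]
        counts[rec["epoch"]] += 1
    return {epoch: sums[epoch] / counts[epoch] for epoch in sorted(sums)}


# Made-up lines in the shape of a .log.json file.
sample = [
    '{"env_info": "...", "seed": 0}',
    '{"mode": "train", "epoch": 1, "iter": 10, "lr": 0.0002, "loss": 4.0}',
    '{"mode": "train", "epoch": 1, "iter": 20, "lr": 0.0002, "loss": 2.0}',
    '{"mode": "val", "epoch": 1, "iter": 5, "bbox_mAP": 0.1}',
    '{"mode": "train", "epoch": 2, "iter": 10, "lr": 0.0002, "loss": 1.5}',
]
losses = mean_train_loss_per_epoch(sample)
print(losses)  # {1: 3.0, 2: 1.5}
```

For a real run, pass an open file object for the log instead of the sample list.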

8. Parameter count and computation

D:\Code\mmdetection-master\tools\analysis_tools\get_flops.py

Arguments:

D:\\Code\\mmdetection-master\\configs\\deformable_detr\\my_deformable_detr_r50_16x2_50e_coco.py

--shape 1280 800
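The script reports totals for the whole model. As a rough illustration of where such numbers come from, the sketch below counts the weights and multiply-accumulate operations of a single convolution. It assumes a 1x1 convolution from 512 to 256 channels without bias (like the first neck input in the config above) applied to a 100 x 160 feature map, which is what a 1280 x 800 input gives at stride 8 whichever of the two values is the height. The function names are hypothetical, and the tool's own counting conventions may differ.

```python
def conv2d_params(c_in, c_out, k, bias=True):
    """Number of learnable weights in a k x k convolution."""
    return c_in * c_out * k * k + (c_out if bias else 0)


def conv2d_macs(c_in, c_out, k, h_out, w_out):
    """Multiply-accumulate operations for one forward pass of the layer."""
    return c_in * c_out * k * k * h_out * w_out


params = conv2d_params(512, 256, 1, bias=False)
macs = conv2d_macs(512, 256, 1, 100, 160)
print(params, macs)  # 131072 2097152000
```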