tf.data.Dataset.from_tensor_slices()

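`tf.data.Dataset.from_tensor_slices(tensors, name=None)` takes `tensors`, slices it along its first dimension, and returns a dataset whose elements are those slices. If `tensors` is a tuple or dict, each component is sliced along its own first dimension, so all components must have the same size in that dimension.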

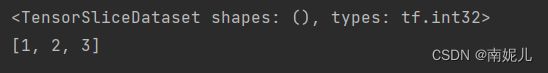
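Besides plain lists, `tensors` can be a tuple. This is the usual way to pair features with labels, and the MNIST example later in this post passes `(x, y)` like this.

The sketch below uses made-up arrays. The names `features`, `labels` and `pairs` are illustrative and do not come from the original. Element i of the dataset is `(features[i], labels[i])`, and components whose first dimensions disagree are rejected when the dataset is built. The exact exception type is an assumption, so the sketch catches both `ValueError` and `tf.errors.InvalidArgumentError`.

```python
import numpy as np
import tensorflow as tf

# Hypothetical toy data: 3 examples with 2 features each, and 3 integer labels.
features = np.array([[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]], dtype=np.float32)
labels = np.array([0, 1, 0], dtype=np.int64)

# A tuple is sliced component-wise along the first dimension,
# so element i is the pair (features[i], labels[i]).
pairs = tf.data.Dataset.from_tensor_slices((features, labels))
for x, y in pairs.as_numpy_iterator():
    print(x, y)

# Components whose first dimensions differ (3 vs 2) are rejected up front.
rejected = False
try:
    tf.data.Dataset.from_tensor_slices((features, labels[:2]))
except (ValueError, tf.errors.InvalidArgumentError) as err:
    rejected = True
    print("rejected:", err)
```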

```python
# Slicing a 1D tensor produces scalar tensor elements.
import tensorflow as tf

dataset = tf.data.Dataset.from_tensor_slices([1, 2, 3])
print(dataset)
print(list(dataset.as_numpy_iterator()))
```

import tensorflow as tf

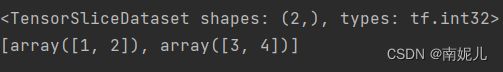

# Slicing a 2D tensor produces 1D tensor elements.

dataset = tf.data.Dataset.from_tensor_slices([[1, 2], [3, 4]])

print(dataset)

print(list(dataset.as_numpy_iterator()))import tensorflow as tf
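Slicing along the first dimension is what separates this function from `tf.data.Dataset.from_tensors`, which keeps the whole input as a single element. Here is a quick comparison on the same 2D list (the variable names are illustrative):

```python
import tensorflow as tf

matrix = [[1, 2], [3, 4]]

# from_tensor_slices: one element per row, i.e. 2 elements of shape (2,)
sliced = tf.data.Dataset.from_tensor_slices(matrix)
# from_tensors: the whole matrix becomes 1 element of shape (2, 2)
whole = tf.data.Dataset.from_tensors(matrix)

print(sliced.element_spec)
print(whole.element_spec)
print(len(list(sliced)), len(list(whole)))
```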

```python
import tensorflow as tf

# Dictionary structure is also preserved.
dataset = tf.data.Dataset.from_tensor_slices({"a": [1, 2], "b": [3, 4]})
print(dataset)
print(list(dataset.as_numpy_iterator()))
```

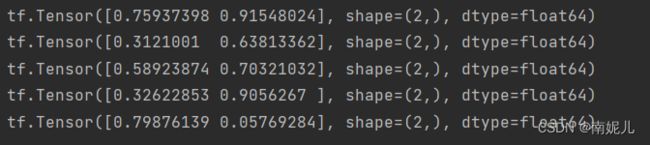

import numpy as np

x = np.random.uniform(size=(5, 2))

#print(x)

dataset = tf.data.Dataset.from_tensor_slices(x)

for ele in dataset:

print(ele)import tensorflow as tf
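With NumPy input the dtype carries over. `np.random.uniform` returns float64, so the elements above are float64 tensors. TensorFlow models typically compute in float32 (`tf.keras.backend.floatx()` defaults to `'float32'`).

Assuming a float32 pipeline is what you want, this sketch casts the array before slicing:

```python
import numpy as np
import tensorflow as tf

x = np.random.uniform(size=(5, 2))          # float64 by default
ds64 = tf.data.Dataset.from_tensor_slices(x)
print(ds64.element_spec)                    # float64 elements of shape (2,)

# Casting the NumPy array first yields float32 elements instead.
ds32 = tf.data.Dataset.from_tensor_slices(x.astype(np.float32))
print(ds32.element_spec)                    # float32 elements of shape (2,)
```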

from tensorflow import keras

import numpy as np

import matplotlib.pyplot as plt

def load_dataset():

# Step0 准备数据集, 可以是自己动手丰衣足食, 也可以从 tf.keras.datasets 加载需要的数据集(获取到的是numpy数据)

# 这里以 mnist 为例

(x, y), (x_test, y_test) = keras.datasets.mnist.load_data()

# Step1 使用 tf.data.Dataset.from_tensor_slices 进行加载

db_train = tf.data.Dataset.from_tensor_slices((x,y))

db_test = tf.data.Dataset.from_tensor_slices((x_test, y_test))

# Step2 打乱数据

db_train.shuffle(1000)

db_test.shuffle(1000)

# Step3 预处理 (预处理函数在下面)

db_train.map(preprocess)

db_test.map(preprocess)

# Step4 设置 batch size 一次喂入64个数据

db_train.batch(64)

db_test.batch(64)

# Step5 设置迭代次数(迭代2次) test数据集不需要emmm

db_train.repeat(2)

return db_train, db_test

def preprocess(labels, images):

'''

最简单的预处理函数:

转numpy为Tensor、分类问题需要处理label为one_hot编码、处理训练数据

'''

# 把numpy数据转为Tensor

labels = tf.cast(labels, dtype=tf.int32)

# labels 转为one_hot编码

labels = tf.one_hot(labels, depth=10)

# 顺手归一化

images = tf.cast(images, dtype=tf.float32) / 255

return labels, images

train,test=load_dataset()

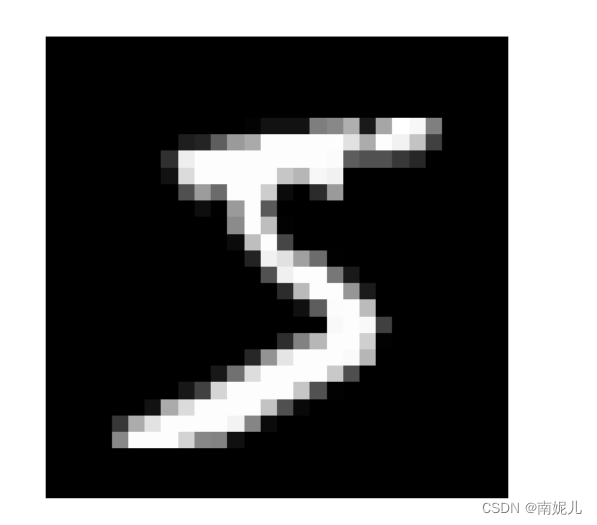

for image in train:

print(type(image))

image=np.array(image)

# print(image)

print(image[0].shape)

plt.axis('off')

plt.imshow(image[0],cmap='gray')

plt.show()

breakimport tensorflow as tf
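The five steps only take effect if each result is assigned. `shuffle`, `map`, `batch` and `repeat` each return a new dataset and leave the one they are called on unchanged.

The sketch below checks this on a small synthetic stand-in for MNIST made of random uint8 images, so it runs without a download. The names `images`, `labels` and `to_float_onehot` are hypothetical. It also confirms the batch shapes that the chained pipeline produces.

```python
import numpy as np
import tensorflow as tf

# Synthetic stand-in for MNIST: 200 uint8 "images" of 28x28 and labels 0-9.
rng = np.random.default_rng(0)
images = rng.integers(0, 256, size=(200, 28, 28), dtype=np.uint8)
labels = rng.integers(0, 10, size=(200,), dtype=np.uint8)

ds = tf.data.Dataset.from_tensor_slices((images, labels))

# Calling a transformation without assigning the result leaves ds unchanged.
ds.batch(64)
print(ds.element_spec[0].shape)   # still (28, 28): not batched

def to_float_onehot(x, y):
    # Hypothetical preprocessing mirroring the post: scale to [0, 1], one-hot labels.
    return tf.cast(x, tf.float32) / 255.0, tf.one_hot(tf.cast(y, tf.int32), depth=10)

# Chaining and assigning applies every step: shuffle -> map -> batch -> repeat.
train = (ds.shuffle(1000)
           .map(to_float_onehot)
           .batch(64)
           .repeat(2))

x_batch, y_batch = next(iter(train))
print(x_batch.shape, y_batch.shape)   # (64, 28, 28) (64, 10)
```

With 200 examples and a batch size of 64, each epoch yields four batches, and the last one holds only 8 examples. `repeat(2)` therefore gives eight batches in total. Pass `drop_remainder=True` to `batch` if every batch must be full.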

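```python
import tensorflow as tf
from tensorflow import keras
import numpy as np
import matplotlib.pyplot as plt


def load_dataset():
    # Step 0: prepare the data. You can build your own dataset, or load one from
    # tf.keras.datasets (which returns NumPy arrays). MNIST is used here.
    (x, y), (x_test, y_test) = keras.datasets.mnist.load_data()

    # Step 1: wrap the arrays with tf.data.Dataset.from_tensor_slices.
    db_train = tf.data.Dataset.from_tensor_slices((x, y))
    db_test = tf.data.Dataset.from_tensor_slices((x_test, y_test))

    # Each transformation returns a new dataset, so every result must be assigned.
    # Step 2: shuffle.
    db_train = db_train.shuffle(1000)
    db_test = db_test.shuffle(1000)

    # Step 3: preprocess (preprocess is defined below).
    db_train = db_train.map(preprocess)
    db_test = db_test.map(preprocess)

    # Step 4: batch, feeding 64 examples at a time.
    db_train = db_train.batch(64)
    db_test = db_test.batch(64)

    # Step 5: repeat the training data for 2 epochs; the test set does not need this.
    db_train = db_train.repeat(2)

    return db_train, db_test


def preprocess(images, labels):
    '''
    A minimal preprocessing function: cast the data, one-hot encode the labels
    (needed for classification), and normalize the images.
    Each element is an (image, label) pair, so the image comes first.
    '''
    # Cast the uint8 labels to int32 for tf.one_hot.
    labels = tf.cast(labels, dtype=tf.int32)
    # One-hot encode the labels.
    labels = tf.one_hot(labels, depth=10)
    # Normalize pixel values to [0, 1] while we're at it.
    images = tf.cast(images, dtype=tf.float32) / 255
    return images, labels


train, test = load_dataset()

# as_numpy_iterator() yields each batch as NumPy arrays: (64, 28, 28) and (64, 10).
for images, labels in train.as_numpy_iterator():
    print(images[0])
    image = np.array(images[0])
    print(image.shape)
    plt.imshow(image, cmap='gray')
    plt.show()
    break
```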
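The two loops differ only in what they yield. Iterating a dataset directly gives `tf.Tensor` objects, while `as_numpy_iterator()` converts every component of each element to NumPy. Here is a small check on toy data (the names are illustrative):

```python
import numpy as np
import tensorflow as tf

ds = tf.data.Dataset.from_tensor_slices((np.zeros((4, 2), np.float32), np.arange(4)))

x_t, y_t = next(iter(ds))                  # tf.Tensor objects
x_n, y_n = next(ds.as_numpy_iterator())    # NumPy values

print(type(x_t).__name__, type(x_n).__name__)
```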

Reference: Loading a dataset in five steps with tf.data.Dataset.from_tensor_slices (rainweic's blog, CSDN)