【Ubuntu机器学习实战】MMdetection训练自己的数据集并预测(使用mask_rcnn_r50_fpn_1x_coco完美走个流程)

1:使用Jupyter Notebook并切换到Pytorch内核

Ubuntu/Pytorch/MMdetection......等等可自行查找资料配置好,此处仅展示数据集方面所需要的库

!pip install pycocotools -i https://pypi.tuna.tsinghua.edu.cn/simple

!pip install labelme -i https://pypi.tuna.tsinghua.edu.cn/simple

!pip install imgviz -i https://pypi.tuna.tsinghua.edu.cn/simple

!pip install pyqt5 -i https://pypi.tuna.tsinghua.edu.cn/simple2:准备数据集

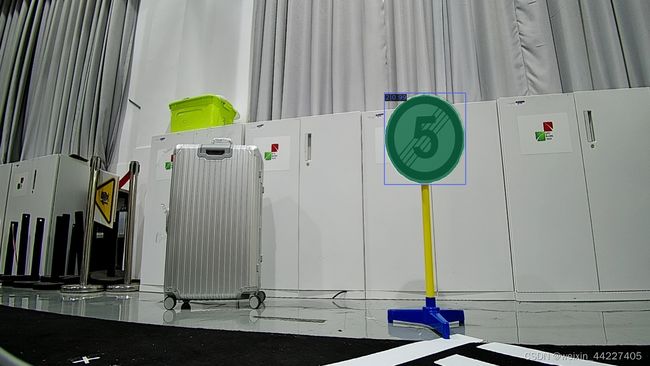

2-1:使用labelme标注数据集

打开终端输入labelme,点击Create Polygons描边并设置编号,保存时直接确定即可,不用改路径

2-2:转换数据集

2-2-1:创建数据文件夹

建议创建一个data文件夹,内容包含:

1:labels文件夹:即使用labelme标注好的文件,一张图片对应一个json文件

2:labelme2coco.py:用于转换coco数据集

3:labels.txt:用于在转换时对应标注名称

#labelme2coco.py的源码

import argparse

import collections

import datetime

import glob

import json

import os

import os.path as osp

import sys

import uuid

import imgviz

import numpy as np

import labelme

try:

import pycocotools.mask

except ImportError:

print("Please install pycocotools:\n\n pip install pycocotools\n")

sys.exit(1)

def main():

parser = argparse.ArgumentParser(

formatter_class=argparse.ArgumentDefaultsHelpFormatter

)

parser.add_argument("input_dir", help="input annotated directory")

parser.add_argument("output_dir", help="output dataset directory")

parser.add_argument("--labels", help="labels file", required=True)

parser.add_argument(

"--noviz", help="no visualization", action="store_true"

)

args = parser.parse_args()

if osp.exists(args.output_dir):

print("Output directory already exists:", args.output_dir)

sys.exit(1)

os.makedirs(args.output_dir)

os.makedirs(osp.join(args.output_dir, "JPEGImages"))

if not args.noviz:

os.makedirs(osp.join(args.output_dir, "Visualization"))

print("Creating dataset:", args.output_dir)

now = datetime.datetime.now()

data = dict(

info=dict(

description=None,

url=None,

version=None,

year=now.year,

contributor=None,

date_created=now.strftime("%Y-%m-%d %H:%M:%S.%f"),

),

licenses=[dict(url=None, id=0, name=None,)],

images=[

# license, url, file_name, height, width, date_captured, id

],

type="instances",

annotations=[

# segmentation, area, iscrowd, image_id, bbox, category_id, id

],

categories=[

# supercategory, id, name

],

)

class_name_to_id = {}

for i, line in enumerate(open(args.labels).readlines()):

class_id = i - 1 # starts with -1

class_name = line.strip()

if class_id == -1:

assert class_name == "__ignore__"

continue

class_name_to_id[class_name] = class_id

data["categories"].append(

dict(supercategory=None, id=class_id, name=class_name,)

)

out_ann_file = osp.join(args.output_dir, "annotations.json")

label_files = glob.glob(osp.join(args.input_dir, "*.json"))

for image_id, filename in enumerate(label_files):

print("Generating dataset from:", filename)

label_file = labelme.LabelFile(filename=filename)

base = osp.splitext(osp.basename(filename))[0]

out_img_file = osp.join(args.output_dir, "JPEGImages", base + ".jpg")

img = labelme.utils.img_data_to_arr(label_file.imageData)

imgviz.io.imsave(out_img_file, img)

data["images"].append(

dict(

license=0,

url=None,

file_name=osp.relpath(out_img_file, osp.dirname(out_ann_file)),

height=img.shape[0],

width=img.shape[1],

date_captured=None,

id=image_id,

)

)

masks = {} # for area

segmentations = collections.defaultdict(list) # for segmentation

for shape in label_file.shapes:

points = shape["points"]

label = shape["label"]

group_id = shape.get("group_id")

shape_type = shape.get("shape_type", "polygon")

mask = labelme.utils.shape_to_mask(

img.shape[:2], points, shape_type

)

if group_id is None:

group_id = uuid.uuid1()

instance = (label, group_id)

if instance in masks:

masks[instance] = masks[instance] | mask

else:

masks[instance] = mask

if shape_type == "rectangle":

(x1, y1), (x2, y2) = points

x1, x2 = sorted([x1, x2])

y1, y2 = sorted([y1, y2])

points = [x1, y1, x2, y1, x2, y2, x1, y2]

if shape_type == "circle":

(x1, y1), (x2, y2) = points

r = np.linalg.norm([x2 - x1, y2 - y1])

# r(1-cos(a/2)) N>pi/arccos(1-x/r)

# x: tolerance of the gap between the arc and the line segment

n_points_circle = max(int(np.pi / np.arccos(1 - 1 / r)), 12)

i = np.arange(n_points_circle)

x = x1 + r * np.sin(2 * np.pi / n_points_circle * i)

y = y1 + r * np.cos(2 * np.pi / n_points_circle * i)

points = np.stack((x, y), axis=1).flatten().tolist()

else:

points = np.asarray(points).flatten().tolist()

segmentations[instance].append(points)

segmentations = dict(segmentations)

for instance, mask in masks.items():

cls_name, group_id = instance

if cls_name not in class_name_to_id:

continue

cls_id = class_name_to_id[cls_name]

mask = np.asfortranarray(mask.astype(np.uint8))

mask = pycocotools.mask.encode(mask)

area = float(pycocotools.mask.area(mask))

bbox = pycocotools.mask.toBbox(mask).flatten().tolist()

data["annotations"].append(

dict(

id=len(data["annotations"]),

image_id=image_id,

category_id=cls_id,

segmentation=segmentations[instance],

area=area,

bbox=bbox,

iscrowd=0,

)

)

if not args.noviz:

viz = img

if masks:

labels, captions, masks = zip(

*[

(class_name_to_id[cnm], cnm, msk)

for (cnm, gid), msk in masks.items()

if cnm in class_name_to_id

]

)

viz = imgviz.instances2rgb(

image=img,

labels=labels,

masks=masks,

captions=captions,

font_size=15,

line_width=2,

)

out_viz_file = osp.join(

args.output_dir, "Visualization", base + ".jpg"

)

imgviz.io.imsave(out_viz_file, viz)

with open(out_ann_file, "w") as f:

json.dump(data, f)

if __name__ == "__main__":

main() #labels.txt源码(示例):

__ignore__

_background_

1

2

3

4

5

6 2-2-2:修改labels.txt对应所标注的名称

!gedit labels.txt2-2-3:转换coco数据集

!python labelme2coco.py --labels labels.txt labels coco #自行命名存放coco数据集的文件夹,如此处为coco转换成功后会有JEPGImage,Visualization和annotations.json三个文件

2-2-4:修改configs中的coco数据集设置

!gedit /home/jyt/mmdetection/configs/_base_/datasets/coco_instance.py# dataset settings

dataset_type = 'CocoDataset'

data_root = '/500/data/coco/'#请修改为自己创建的路径!

img_norm_cfg = dict(

mean=[123.675, 116.28, 103.53], std=[58.395, 57.12, 57.375], to_rgb=True)

train_pipeline = [

dict(type='LoadImageFromFile'),

dict(type='LoadAnnotations', with_bbox=True, with_mask=True),

dict(type='Resize', img_scale=(1333, 800), keep_ratio=True),

dict(type='RandomFlip', flip_ratio=0.5),

dict(type='Normalize', **img_norm_cfg),

dict(type='Pad', size_divisor=32),

dict(type='DefaultFormatBundle'),

dict(type='Collect', keys=['img', 'gt_bboxes', 'gt_labels', 'gt_masks']),

]

test_pipeline = [

dict(type='LoadImageFromFile'),

dict(

type='MultiScaleFlipAug',

img_scale=(1333, 800),

flip=False,

transforms=[

dict(type='Resize', keep_ratio=True),

dict(type='RandomFlip'),

dict(type='Normalize', **img_norm_cfg),

dict(type='Pad', size_divisor=32),

dict(type='ImageToTensor', keys=['img']),

dict(type='Collect', keys=['img']),

])

]

data = dict(

samples_per_gpu=2,

workers_per_gpu=2,

train=dict(

type=dataset_type,

ann_file=data_root + 'annotations.json',

img_prefix=data_root,

pipeline=train_pipeline),

val=dict(

type=dataset_type,

ann_file=data_root + 'annotations.json',

img_prefix=data_root,

pipeline=test_pipeline),

test=dict(

type=dataset_type,

ann_file=data_root + 'annotations.json',

img_prefix=data_root,

pipeline=test_pipeline))

evaluation = dict(metric=['bbox', 'segm'])2-2-5:修改mmdet中的coco数据集设置

!gedit /home/jyt/mmdetection/mmdet/datasets/coco.py将CLASSES=(...)改为自己数据集的编号,如CLASSES=('1', '2', '3', '4', '5', '6')

!gedit /home/jyt/mmdetection/mmdet/core/evaluation/class_names.py将coco_classes中的return[...]改为自己数据集的编号,如return['1', '2', '3', '4', '5', '6']

2-2-6:修改模型中的coco数据集设置

!gedit /home/jyt/mmdetection/configs/_base_/models/mask_rcnn_r50_fpn.py将第47行和第66行的num_classes修改为数据集编号总数,如num_classes=6

3:训练模型

可自行查找资料修改训练参数,如调整schedule_1x中的学习率和max_epochs

请自行修改--work-dir后的模型保存路径(建议放空间多的盘)

!python /home/jyt/mmdetection/tools/train.py home/jyt/mmdetection/configs/mask_rcnn/mask_rcnn_r50_fpn_1x_coco.py --work-dir /500/checkpoints/注:若提示有些库没有安装,请仔细检查环境配置!

本人遇到的问题:

1:在Jupyter notebook自己敲import mmcv和import torch等正常,但跑train.py异常

2:训练时loss突然爆炸级增长,出现NaN,训练中断

解决方案:

1:自行在终端激活pytorch环境运行此代码(有时Jupyter notebook不认我的环境就很怪)

2:降低学习率,如0.001

4:模型预测

from mmdet.apis import init_detector, inference_detector

config_file = '/home/jyt/mmdetection/configs/mask_rcnn/mask_rcnn_r50_fpn_1x_coco.py'

checkpoint_file = '/home/jyt/mmdetection/checkpoints/latest.pth'

device = 'cpu'#也可以是cuda:0,亲测cpu更快

# 初始化检测器

model = init_detector(config_file, checkpoint_file, device=device)

# 推理演示图像

img = '/500/data/labels/1.jpg'

result = inference_detector(model, img)

model.show_result(img, result, out_file="/500/data/predictions/1_result.jpg")恭喜你,成功了!

#写在最后:

深圳准高二学生,物化生选手,喜欢计算机和动漫钢琴。

Peter_Jiang的个人空间_哔哩哔哩_Bilibili(业余自学弹着玩的哈)

暑假参加了一个AI模型识别比赛,云服务器的钱用完了,自己想多了解点MMdetection,于是转战本地,遇到了很多问题(头皮发麻),在CSDN大佬的帮助下才顺利进行,同时也了解下CSDN这个平台~Nice!

所以自己也学着发文章来帮助下像我一样的初学者,希望能够帮到你!

有不足之处也请指出和谅解。学业繁重,先溜了~