【深度学习】PyTorch深度学习实践 - Lecture_11_Advanced_CNN

文章目录

- 一、GoogLe Net

-

- 1.1 网络结构

- 1.2 Inception Model

- 1.3 1×1 Convolution

- 1.4 PyTorch-GPU 实现 GoogLe Net

- 二、Residual Net

-

- 2.1 梯度消失和梯度爆炸

- 2.2 残差模块

- 2.3 PyTorch-GPU 实现 Simple Residual Net

-

- 2.3.1 整体结构

- 2.3.2 残差块对象

- 2.3.3 残差网络对象

- 2.3.4 完整代码

- 三、课后作业

-

- 3.1 实现不同结构的残差块

-

- 3.1.1 Original

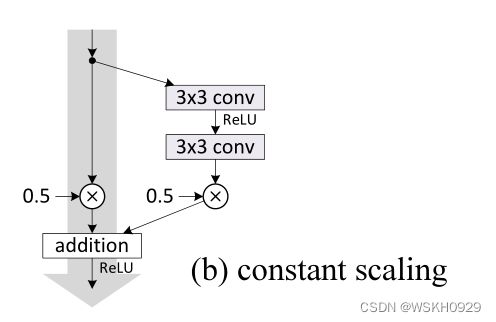

- 3.1.2 Constant Scaling

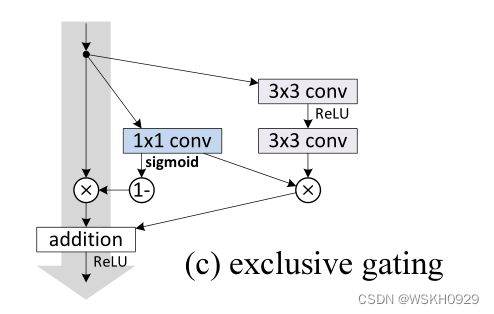

- 3.1.3 Exclusive Gating

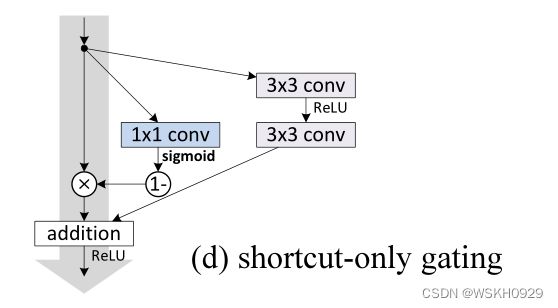

- 3.1.4 Shortcut-Only Gating

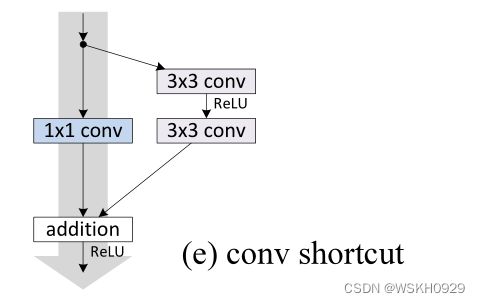

- 3.1.5 Conv Shortcut

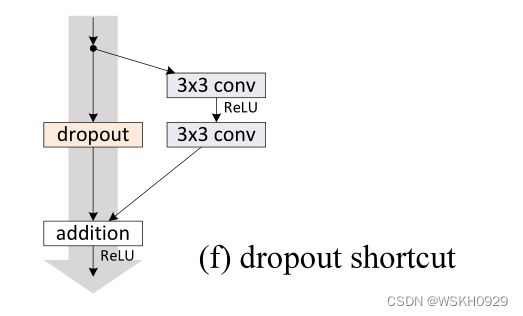

- 3.1.6 Dropout Shortcut

- 3.2 尝试实现 Densely Net

- 四、后期学习

在CNN基础篇中介绍的CNN都是串行结构,而在实际应用中,还存在很多具有更复杂结构的CNN,例如存在分支、存在回溯等等,下面对一些具有复杂结构的CNN进行介绍。

一、GoogLe Net

1.1 网络结构

1.2 Inception Model

1.3 1×1 Convolution

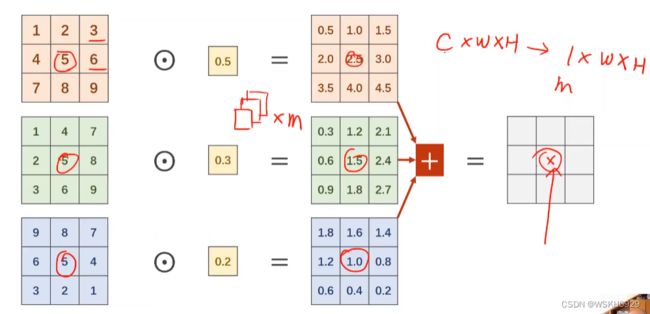

什么是1×1的卷积核,有什么用?可以参考这篇文章 1*1卷积核的作用

总结一下:

- 跨通道的特征整合

- 特征通道的升维和降维

- 减少卷积核参数(简化模型)

由下图可以看到,没有加1×1卷积核的网络结构将192×28×28的数据转化为32×28×28的数据的运算量为120422400,加了1×1卷积核的网络结构,同样是将192×28×28的数据转化为32×28×28的数据,运算量减少到了12433648,直接把运算量降低了一个数量级!!!

(原理:通过1×1的卷积核首先对原始数据进行降维,即减少通道数)

1.4 PyTorch-GPU 实现 GoogLe Net

Inception Net类的实现:

整体网络对象:

完整代码:

import torch

import torch.nn as nn

from torchvision import transforms

from torchvision import datasets

from torch.utils.data import DataLoader

import torch.optim as optim

import torch.nn.functional as F

class InceptionA(nn.Module):

def __init__(self, in_channels):

super(InceptionA, self).__init__()

self.branch1x1 = nn.Conv2d(in_channels, 16, kernel_size=(1, 1))

self.branch5x5_1 = nn.Conv2d(in_channels, 16, kernel_size=(1, 1))

self.branch5x5_2 = nn.Conv2d(16, 24, kernel_size=(5, 5), padding=(2, 2))

self.branch3x3_1 = nn.Conv2d(in_channels, 16, kernel_size=(1, 1))

self.branch3x3_2 = nn.Conv2d(16, 24, kernel_size=(3, 3), padding=(1, 1))

self.branch3x3_3 = nn.Conv2d(24, 24, kernel_size=(3, 3), padding=(1, 1))

self.branch_pool = nn.Conv2d(in_channels, 24, kernel_size=(1, 1))

def forward(self, x):

branch1x1 = self.branch1x1(x)

branch5x5 = self.branch5x5_1(x)

branch5x5 = self.branch5x5_2(branch5x5)

branch3x3 = self.branch3x3_1(x)

branch3x3 = self.branch3x3_2(branch3x3)

branch3x3 = self.branch3x3_3(branch3x3)

branch_pool = F.avg_pool2d(x, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

branch_pool = self.branch_pool(branch_pool)

outputs = [branch1x1, branch5x5, branch3x3, branch_pool]

return torch.cat(outputs, dim=1)

class GoogLeNet(torch.nn.Module):

def __init__(self):

super(GoogLeNet, self).__init__()

self.conv1 = nn.Conv2d(1, 10, kernel_size=(5, 5))

self.conv2 = nn.Conv2d(88, 20, kernel_size=(5, 5))

self.incep1 = InceptionA(in_channels=10)

self.incep2 = InceptionA(in_channels=20)

self.mp = nn.MaxPool2d(kernel_size=(2, 2))

self.fc = nn.Linear(1408, 10)

def forward(self, x):

in_size = x.size(0)

x = torch.relu(self.mp(self.conv1(x)))

x = self.incep1(x)

x = torch.relu(self.mp(self.conv2(x)))

x = self.incep2(x)

x = x.view(in_size, -1)

x = self.fc(x)

return x

# 单次训练函数

def train(epoch, criterion):

running_loss = 0.0

for batch_idx, data in enumerate(train_loader, 0):

inputs, target = data

# 将inputs, target转移到Gpu或者Cpu上

inputs, target = inputs.to(device), target.to(device)

optimizer.zero_grad()

outputs = model(inputs)

loss = criterion(outputs, target)

loss.backward()

optimizer.step()

running_loss += loss.item()

if batch_idx % 300 == 299:

print('[%d, %5d] loss: %.3f' % (epoch + 1, batch_idx + 1, running_loss / 300))

running_loss = 0.0

# 单次测试函数

def ttt():

correct = 0.0

total = 0.0

with torch.no_grad():

for data in test_loader:

images, labels = data

# 将images, labels转移到Gpu或者Cpu上

images, labels = images.to(device), labels.to(device)

outputs = model(images)

_, predicted = torch.max(outputs.data, dim=1)

total += labels.size(0)

correct += (predicted == labels).sum().item()

print('Accuracy on test set: %d %% [%d/%d]' % (100 * correct / total, correct, total))

if __name__ == '__main__':

batch_size = 64

transform = transforms.Compose([transforms.ToTensor(), transforms.Normalize((0.1307,), (0.3081,))])

train_dataset = datasets.MNIST(root='../dataset/', train=True, download=True, transform=transform)

train_loader = DataLoader(train_dataset, shuffle=True, batch_size=batch_size)

test_dataset = datasets.MNIST(root='../dataset/', train=False, download=True, transform=transform)

test_loader = DataLoader(test_dataset, shuffle=False, batch_size=batch_size)

device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

# 声明模型

model = GoogLeNet()

# 将模型转移道Gpu或者Cpu上

model.to(device)

# 定义损失函数

criterion = torch.nn.CrossEntropyLoss()

# 定义优化器

optimizer = optim.SGD(model.parameters(), lr=0.01, momentum=0.5)

for epoch in range(10):

train(epoch, criterion)

ttt()

输出:

[1, 300] loss: 0.980

[1, 600] loss: 0.199

[1, 900] loss: 0.137

Accuracy on test set: 95 % [9581/10000]

[2, 300] loss: 0.104

[2, 600] loss: 0.090

[2, 900] loss: 0.092

Accuracy on test set: 97 % [9791/10000]

[3, 300] loss: 0.081

[3, 600] loss: 0.065

[3, 900] loss: 0.069

Accuracy on test set: 98 % [9806/10000]

[4, 300] loss: 0.068

[4, 600] loss: 0.058

[4, 900] loss: 0.057

Accuracy on test set: 98 % [9843/10000]

[5, 300] loss: 0.048

[5, 600] loss: 0.056

[5, 900] loss: 0.053

Accuracy on test set: 98 % [9869/10000]

[6, 300] loss: 0.049

[6, 600] loss: 0.043

[6, 900] loss: 0.048

Accuracy on test set: 98 % [9856/10000]

[7, 300] loss: 0.043

[7, 600] loss: 0.045

[7, 900] loss: 0.040

Accuracy on test set: 98 % [9871/10000]

[8, 300] loss: 0.035

[8, 600] loss: 0.042

[8, 900] loss: 0.040

Accuracy on test set: 98 % [9881/10000]

[9, 300] loss: 0.035

[9, 600] loss: 0.036

[9, 900] loss: 0.038

Accuracy on test set: 98 % [9884/10000]

[10, 300] loss: 0.034

[10, 600] loss: 0.032

[10, 900] loss: 0.036

Accuracy on test set: 99 % [9900/10000]

二、Residual Net

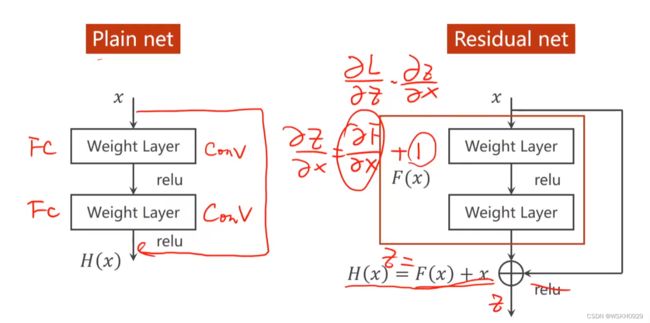

2.1 梯度消失和梯度爆炸

随着网络层数的增加,反向传播的过程也会变长,如果梯度小于1,在漫长的反向传播过程中,梯度不断连乘,最终在网络的入口附近会得到非常小、接近于0的数,使得网络入口附近的节点没有办法得到更新,我们称之为梯度消失或者梯度弥散。

同理,如果梯度大于1,在漫长的反向传播过程中,梯度不断连乘,最终在网络的入口附近会得到非常大的数,使得网络入口附近的节点得到“不切实际的更新”,我们称之为梯度爆炸。

梯度消失和梯度爆炸现象的出现,意味着加深网络层数,不能很好地提高模型的识别准确度,甚至还会导致网络识别能力下降

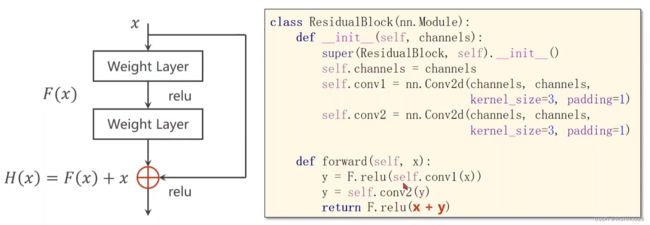

2.2 残差模块

为了缓解梯度消失、梯度爆炸的问题,残差网络(Residual Net)引入了特有的残差模块。

残差模块中,输入会和输出建立一个“跳跃连接”,有了跳连接之后,原本的输出就变为了

H ( x ) = F ( x ) + x H(x)=F(x)+x H(x)=F(x)+x 这样对其求偏导,当F对x的偏导值较小时,就可以将梯度稳定在1附近,缓解了梯度消失和梯度爆炸的现象

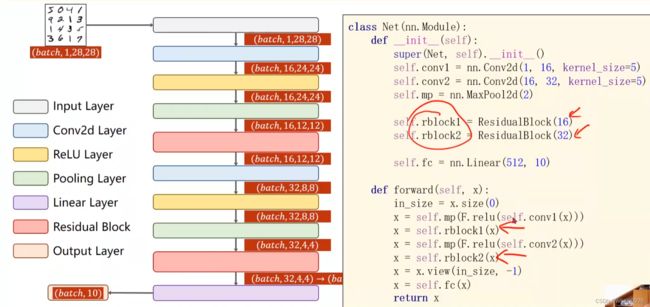

2.3 PyTorch-GPU 实现 Simple Residual Net

2.3.1 整体结构

2.3.2 残差块对象

2.3.3 残差网络对象

2.3.4 完整代码

import torch

import torch.nn as nn

from torchvision import transforms

from torchvision import datasets

from torch.utils.data import DataLoader

import torch.optim as optim

import torch.nn.functional as F

class ResidualBlock(nn.Module):

def __init__(self, in_channels):

super(ResidualBlock, self).__init__()

self.in_channels = in_channels

self.conv1 = nn.Conv2d(in_channels,in_channels,kernel_size=(3,3),padding=(1,1))

self.conv2 = nn.Conv2d(in_channels,in_channels,kernel_size=(3,3),padding=(1,1))

def forward(self, x):

y = torch.relu(self.conv1(x))

y = self.conv2(x)

return torch.relu(x+y)

class ResidualNet(torch.nn.Module):

def __init__(self):

super(ResidualNet, self).__init__()

self.conv1 = nn.Conv2d(1,16,kernel_size=(5,5))

self.conv2 = nn.Conv2d(16,32,kernel_size=(5,5))

self.mp = nn.MaxPool2d(kernel_size=(2,2))

self.res_block1 = ResidualBlock(16)

self.res_block2 = ResidualBlock(32)

self.fc = nn.Linear(512,10)

def forward(self, x):

in_size = x.size(0)

x = self.res_block1(self.mp(torch.relu(self.conv1(x))))

x = self.res_block2(self.mp(torch.relu(self.conv2(x))))

x = x.view(in_size,-1)

x = self.fc(x)

return x

# 单次训练函数

def train(epoch, criterion):

running_loss = 0.0

for batch_idx, data in enumerate(train_loader, 0):

inputs, target = data

# 将inputs, target转移到Gpu或者Cpu上

inputs, target = inputs.to(device), target.to(device)

optimizer.zero_grad()

outputs = model(inputs)

loss = criterion(outputs, target)

loss.backward()

optimizer.step()

running_loss += loss.item()

if batch_idx % 300 == 299:

print('[%d, %5d] loss: %.3f' % (epoch + 1, batch_idx + 1, running_loss / 300))

running_loss = 0.0

# 单次测试函数

def ttt():

correct = 0.0

total = 0.0

with torch.no_grad():

for data in test_loader:

images, labels = data

# 将images, labels转移到Gpu或者Cpu上

images, labels = images.to(device), labels.to(device)

outputs = model(images)

_, predicted = torch.max(outputs.data, dim=1)

total += labels.size(0)

correct += (predicted == labels).sum().item()

print('Accuracy on test set: %d %% [%d/%d]' % (100 * correct / total, correct, total))

if __name__ == '__main__':

batch_size = 64

transform = transforms.Compose([transforms.ToTensor(), transforms.Normalize((0.1307,), (0.3081,))])

train_dataset = datasets.MNIST(root='../dataset/', train=True, download=True, transform=transform)

train_loader = DataLoader(train_dataset, shuffle=True, batch_size=batch_size)

test_dataset = datasets.MNIST(root='../dataset/', train=False, download=True, transform=transform)

test_loader = DataLoader(test_dataset, shuffle=False, batch_size=batch_size)

device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

# 声明模型

model = ResidualNet()

# 将模型转移道Gpu或者Cpu上

model.to(device)

# 定义损失函数

criterion = torch.nn.CrossEntropyLoss()

# 定义优化器

optimizer = optim.SGD(model.parameters(), lr=0.01, momentum=0.5)

for epoch in range(10):

train(epoch, criterion)

ttt()

输出:

[1, 300] loss: 0.471

[1, 600] loss: 0.143

[1, 900] loss: 0.104

Accuracy on test set: 97 % [9732/10000]

[2, 300] loss: 0.084

[2, 600] loss: 0.071

[2, 900] loss: 0.067

Accuracy on test set: 98 % [9833/10000]

[3, 300] loss: 0.055

[3, 600] loss: 0.054

[3, 900] loss: 0.058

Accuracy on test set: 98 % [9880/10000]

[4, 300] loss: 0.045

[4, 600] loss: 0.042

[4, 900] loss: 0.048

Accuracy on test set: 98 % [9860/10000]

[5, 300] loss: 0.038

[5, 600] loss: 0.039

[5, 900] loss: 0.037

Accuracy on test set: 99 % [9900/10000]

[6, 300] loss: 0.030

[6, 600] loss: 0.035

[6, 900] loss: 0.034

Accuracy on test set: 98 % [9884/10000]

[7, 300] loss: 0.027

[7, 600] loss: 0.028

[7, 900] loss: 0.034

Accuracy on test set: 99 % [9901/10000]

[8, 300] loss: 0.029

[8, 600] loss: 0.025

[8, 900] loss: 0.023

Accuracy on test set: 99 % [9900/10000]

[9, 300] loss: 0.021

[9, 600] loss: 0.021

[9, 900] loss: 0.027

Accuracy on test set: 98 % [9887/10000]

[10, 300] loss: 0.019

[10, 600] loss: 0.023

[10, 900] loss: 0.021

Accuracy on test set: 98 % [9886/10000]

三、课后作业

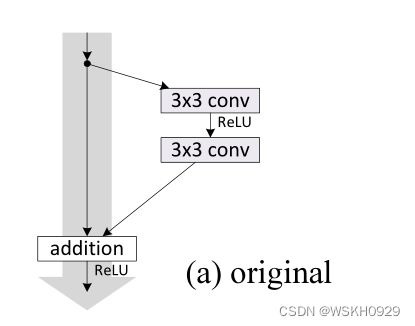

3.1 实现不同结构的残差块

参考论文2016-《Identity Mappings in Deep Residual Networks》,复现论文中提出的几种残差块

3.1.1 Original

class OriginalResidualBlock(nn.Module):

def __init__(self, in_channels):

super(OriginalResidualBlock, self).__init__()

self.in_channels = in_channels

self.conv1 = nn.Conv2d(in_channels,in_channels,kernel_size=(3,3),padding=(1,1))

self.conv2 = nn.Conv2d(in_channels,in_channels,kernel_size=(3,3),padding=(1,1))

def forward(self, x):

y = torch.relu(self.conv1(x))

y = self.conv2(x)

return torch.relu(x+y)

3.1.2 Constant Scaling

class ConstantScalingResidualBlock(nn.Module):

def __init__(self, in_channels):

super(ConstantScalingResidualBlock, self).__init__()

self.in_channels = in_channels

self.conv1 = nn.Conv2d(in_channels, in_channels, kernel_size=(3, 3), padding=(1, 1))

self.conv2 = nn.Conv2d(in_channels, in_channels, kernel_size=(3, 3), padding=(1, 1))

def forward(self, x):

y = torch.relu(self.conv1(x))

y = self.conv2(x)

return torch.relu(0.5 * (x + y))

3.1.3 Exclusive Gating

class ExclusiveGatingResidualBlock(nn.Module):

def __init__(self, in_channels):

super(ExclusiveGatingResidualBlock, self).__init__()

self.in_channels = in_channels

self.conv3x3_1 = nn.Conv2d(in_channels, in_channels, kernel_size=(3, 3), padding=(1, 1))

self.conv3x3_2 = nn.Conv2d(in_channels, in_channels, kernel_size=(3, 3), padding=(1, 1))

self.conv1x1 = nn.Conv2d(in_channels, in_channels, kernel_size=(1, 1))

def forward(self, x):

y1 = self.conv1x1(x) * self.conv3x3_2(torch.relu(self.conv3x3_1(x)))

y2 = (1 - torch.sigmoid(self.conv1x1(x))) * x

return torch.relu(y1 + y2)

3.1.4 Shortcut-Only Gating

class ShortcutOnlyGatingResidualBlock(nn.Module):

def __init__(self, in_channels):

super(ShortcutOnlyGatingResidualBlock, self).__init__()

self.in_channels = in_channels

self.conv3x3_1 = nn.Conv2d(in_channels, in_channels, kernel_size=(3, 3), padding=(1, 1))

self.conv3x3_2 = nn.Conv2d(in_channels, in_channels, kernel_size=(3, 3), padding=(1, 1))

self.conv1x1 = nn.Conv2d(in_channels, in_channels, kernel_size=(1, 1))

def forward(self, x):

y1 = self.conv3x3_2(torch.relu(self.conv3x3_1(x)))

y2 = (1 - torch.sigmoid(self.conv1x1(x))) * x

return torch.relu(y1 + y2)

3.1.5 Conv Shortcut

class ConvShortcutResidualBlock(nn.Module):

def __init__(self, in_channels):

super(ConvShortcutResidualBlock, self).__init__()

self.in_channels = in_channels

self.conv3x3_1 = nn.Conv2d(in_channels, in_channels, kernel_size=(3, 3), padding=(1, 1))

self.conv3x3_2 = nn.Conv2d(in_channels, in_channels, kernel_size=(3, 3), padding=(1, 1))

self.conv1x1 = nn.Conv2d(in_channels, in_channels, kernel_size=(1, 1))

def forward(self, x):

y1 = self.conv3x3_2(torch.relu(self.conv3x3_1(x)))

y2 = self.conv1x1(x)

return torch.relu(y1 + y2)

3.1.6 Dropout Shortcut

class DropoutShortcutResidualBlock(nn.Module):

def __init__(self, in_channels):

super(DropoutShortcutResidualBlock, self).__init__()

self.in_channels = in_channels

self.conv3x3_1 = nn.Conv2d(in_channels, in_channels, kernel_size=(3, 3), padding=(1, 1))

self.conv3x3_2 = nn.Conv2d(in_channels, in_channels, kernel_size=(3, 3), padding=(1, 1))

self.dropout = nn.Dropout(0.5) # 以0.5的概率失活

def forward(self, x):

y1 = self.conv3x3_2(torch.relu(self.conv3x3_1(x)))

y2 = self.dropout(x)

return torch.relu(y1 + y2)

3.2 尝试实现 Densely Net

参考论文《Densely Connected Convolutional Networks》

【论文阅读及复现】(2017)Densely Connected Convolutional Networks + Pytorch代码实现