MMDetection Environment Setup and Training on a Custom VOC Dataset

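Contents

- MMDetection environment installation
  - Install the required environment and libraries
- Run inference with a pretrained model
- Train on a custom VOC dataset with MMDetection
  - 1. Create the dataset directory
  - 2. Edit voc0712.py
  - 3. Edit voc.py
  - 4. Edit class_names.py
  - 5. Edit the config file faster_rcnn_r50_fpn_1x_coco.py
  - 6. Edit faster_rcnn_r50_fpn.py
  - 7. Start training
  - 8. Predict with the trained model
- Meaning of the config file name fields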

MMDetection environment installation

Requirements

- Linux or macOS

- Python 3.6+

- PyTorch 1.3+

- CUDA 9.2+ (If you build PyTorch from source, CUDA 9.0 is also compatible)

- GCC 5+

- MMCV

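Linux is strongly recommended. Windows setups run into many pitfalls, and working around them is tedious and time-consuming.

Install the required environment and libraries

Official installation guide: https://mmdetection.readthedocs.io/en/latest/get_started.html#prepare-environment

MMCV documentation: https://mmcv.readthedocs.io/en/latest/build.html

- Create a new environment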

conda create -n open-mmlab python=3.7 -y

conda activate open-mmlab # or: source activate open-mmlab

- Install PyTorch 1.7.1

conda install pytorch==1.7.1 torchvision==0.8.2 torchaudio==0.7.2 cudatoolkit=10.1 -c pytorch

- Recommended: install MMDetection with MIM

pip install openmim

mim install mmdet

MIM automatically downloads OpenMMLab projects and their requirements.

- Install OpenCV

pip install opencv-python

- Install MMCV

Installing the prebuilt package is recommended:

pip install mmcv-full -f https://download.openmmlab.com/mmcv/dist/{cu_version}/{torch_version}/index.html

For example, with CUDA 10.1 and PyTorch 1.7.1 the command is:

pip install mmcv-full -f https://download.openmmlab.com/mmcv/dist/cu101/torch1.7.1/index.html

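If that does not work, try the command below, which pins the version. I needed 1.3.7, the latest release at the time of writing; pick the version you need, which you can look up at https://github.com/open-mmlab/mmcv. Note that its index URL uses torch1.7.0: prebuilt mmcv-full packages are compiled against PyTorch 1.x.0 and usually also work with 1.x.1.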

pip install mmcv-full==1.3.7 -f https://download.openmmlab.com/mmcv/dist/cu101/torch1.7.0/index.html


Finally, run:

git clone https://github.com/open-mmlab/mmdetection.git

cd mmdetection

pip install -v -e .
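Before running the demo, it can help to confirm that the packages installed above import cleanly. The following is a minimal sketch, not part of MMDetection: `report_versions` is a hypothetical helper name, and it only assumes that each package exposes a `__version__` attribute.

```python
import importlib


def report_versions(names=('torch', 'torchvision', 'mmcv', 'mmdet')):
    """Return {package name: version string}, or None if it is not installed."""
    versions = {}
    for name in names:
        try:
            module = importlib.import_module(name)
            versions[name] = getattr(module, '__version__', 'unknown')
        except ImportError:
            # The package is not installed in the active environment.
            versions[name] = None
        except Exception as exc:
            # The package is installed but failed to import, for example
            # because of a version mismatch between packages.
            versions[name] = f'import failed: {exc}'
    return versions


if __name__ == '__main__':
    for name, version in report_versions().items():
        print(f'{name}: {version}')
```

A `None` entry means the package is missing from the active conda environment; an `import failed` entry usually points at mismatched versions.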

Once the environment is installed, try running a demo:

from mmdet.apis import init_detector, inference_detector

config_file = 'configs/faster_rcnn/faster_rcnn_r50_fpn_1x_coco.py'

# download the checkpoint from model zoo and put it in `checkpoints/`

# url: https://download.openmmlab.com/mmdetection/v2.0/faster_rcnn/faster_rcnn_r50_fpn_1x_coco/faster_rcnn_r50_fpn_1x_coco_20200130-047c8118.pth

checkpoint_file = 'checkpoints/faster_rcnn_r50_fpn_1x_coco_20200130-047c8118.pth'

device = 'cuda:0'

# init a detector

model = init_detector(config_file, checkpoint_file, device=device)

# inference the demo image

inference_detector(model, 'demo/demo.jpg')

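Run inference with a pretrained model

Inference means using an already trained model to detect the objects in an image. In MMDetection, the model is defined in a config file, and the trained parameters are stored in a checkpoint file.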

from mmdet.apis import init_detector, inference_detector

import mmcv

# Specify the path to model config and checkpoint file

config_file = 'configs/faster_rcnn/faster_rcnn_r50_fpn_1x_coco.py'

checkpoint_file = 'checkpoints/faster_rcnn_r50_fpn_1x_coco_20200130-047c8118.pth'

# build the model from a config file and a checkpoint file

model = init_detector(config_file, checkpoint_file, device='cuda:0')

# test a single image and show the results

img = 'test.jpg' # or img = mmcv.imread(img), which will only load it once

result = inference_detector(model, img)

# visualize the results in a new window

model.show_result(img, result)

# or save the visualization results to image files

model.show_result(img, result, out_file='result.jpg')

# test a video and show the results

video = mmcv.VideoReader('video.mp4')

for frame in video:
    result = inference_detector(model, frame)
    model.show_result(frame, result, wait_time=1)
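To use the detections in your own code rather than only visualizing them, you need to read `result` directly. The sketch below assumes the MMDetection 2.x layout for box-only detectors such as Faster R-CNN: one entry per class, each holding rows of `[x1, y1, x2, y2, score]`. `filter_detections`, the class names, and the 0.3 threshold are illustrative choices, and plain lists stand in for the arrays a real model returns.

```python
def filter_detections(result, class_names, score_thr=0.3):
    """Flatten a per-class result into (label, score, box) tuples above a threshold."""
    kept = []
    for class_id, boxes in enumerate(result):
        for x1, y1, x2, y2, score in boxes:
            if score >= score_thr:
                box = (float(x1), float(y1), float(x2), float(y2))
                kept.append((class_names[class_id], float(score), box))
    return kept


# Stand-in for the output of inference_detector on a two-class model.
fake_result = [
    [[10, 20, 110, 220, 0.92], [5, 5, 50, 50, 0.12]],  # class 0: two candidate boxes
    [],                                                 # class 1: nothing detected
]
detections = filter_detections(fake_result, ('cat', 'dog'), score_thr=0.3)
for label, score, box in detections:
    print(label, score, box)
```

Models that also predict masks return a different structure, so check the type of `result` before reusing this.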

Train on a custom VOC dataset with MMDetection

1. Create the dataset directory

mmdetection
├── mmdet
├── tools
├── configs
├── data                        # create data, VOCdevkit, VOC2007, Annotations, JPEGImages, ImageSets and Main by hand
│   ├── VOCdevkit
│   │   ├── VOC2007
│   │   │   ├── Annotations     # XML files for the images listed in test.txt and trainval.txt
│   │   │   ├── JPEGImages      # images listed in test.txt and trainval.txt
│   │   │   ├── ImageSets
│   │   │   │   ├── Main
│   │   │   │   │   ├── test.txt
│   │   │   │   │   ├── trainval.txt
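The two split files list one image ID per line (the file name without its extension). If you do not have them yet, they can be generated from the annotation folder. This is a minimal sketch under stated assumptions: `write_voc_splits` is a hypothetical helper, the 80/20 split and the fixed seed are arbitrary, and the demo runs in a temporary directory instead of a real `data/VOCdevkit/VOC2007`.

```python
import random
import tempfile
from pathlib import Path


def write_voc_splits(voc_root, test_ratio=0.2, seed=0):
    """Write ImageSets/Main/trainval.txt and test.txt from the XML files in Annotations."""
    voc_root = Path(voc_root)
    ids = sorted(p.stem for p in (voc_root / 'Annotations').glob('*.xml'))
    random.Random(seed).shuffle(ids)  # fixed seed keeps the split reproducible
    n_test = int(len(ids) * test_ratio)
    splits = {'test': sorted(ids[:n_test]), 'trainval': sorted(ids[n_test:])}

    main_dir = voc_root / 'ImageSets' / 'Main'
    main_dir.mkdir(parents=True, exist_ok=True)
    for name, split_ids in splits.items():
        text = ''.join(f'{image_id}\n' for image_id in split_ids)
        (main_dir / f'{name}.txt').write_text(text)
    return splits


# Demo on a throwaway directory holding ten empty annotation files.
with tempfile.TemporaryDirectory() as tmp:
    annotations = Path(tmp) / 'Annotations'
    annotations.mkdir()
    for i in range(10):
        (annotations / f'{i:06d}.xml').write_text('<annotation/>')
    splits = write_voc_splits(tmp)
    trainval_lines = (Path(tmp) / 'ImageSets' / 'Main' / 'trainval.txt').read_text().split()
```

On a real dataset you would call `write_voc_splits('data/VOCdevkit/VOC2007')` from the mmdetection root.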

2. Edit voc0712.py

Run cd /mmdetection/configs/_base_/datasets, then open voc0712.py.

In the data settings, delete or comment out the VOC2012 paths and the VOC2012 variables, then save the file.

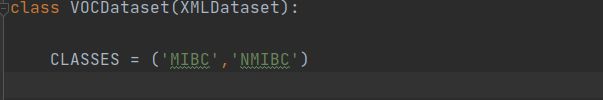
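For readers without the screenshot, the edited `data` block looks roughly like the sketch below. It assumes the layout of voc0712.py in MMDetection 2.x, so your copy may differ; the two empty pipeline lists are placeholders for the `train_pipeline` and `test_pipeline` that the real file already defines above `data`.

```python
dataset_type = 'VOCDataset'
data_root = 'data/VOCdevkit/'

# Placeholders only: keep the pipelines that voc0712.py already defines.
train_pipeline = []
test_pipeline = []

data = dict(
    samples_per_gpu=2,
    workers_per_gpu=2,
    train=dict(
        type='RepeatDataset',
        times=3,
        dataset=dict(
            type=dataset_type,
            # The VOC2012 trainval.txt entry has been removed from this list.
            ann_file=[data_root + 'VOC2007/ImageSets/Main/trainval.txt'],
            # The data_root + 'VOC2012/' entry has been removed from this list.
            img_prefix=[data_root + 'VOC2007/'],
            pipeline=train_pipeline)),
    val=dict(
        type=dataset_type,
        ann_file=data_root + 'VOC2007/ImageSets/Main/test.txt',
        img_prefix=data_root + 'VOC2007/',
        pipeline=test_pipeline),
    test=dict(
        type=dataset_type,
        ann_file=data_root + 'VOC2007/ImageSets/Main/test.txt',
        img_prefix=data_root + 'VOC2007/',
        pipeline=test_pipeline))
```

The `ann_file` and `img_prefix` lists must stay the same length, since each annotation list is paired with the image prefix at the same position.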

3. Edit voc.py

Run cd /mmdetection/mmdet/datasets, then open voc.py.

CLASSES holds the VOC class labels; replace it with the class labels of your own dataset.
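One Python detail is easy to get wrong when the dataset has a single class: a one-element tuple needs a trailing comma, otherwise the parentheses are ignored and the value is a plain string. The labels below are hypothetical stand-ins for your own.

```python
# Two hypothetical classes; the names must match the <name> tags in your XML files.
CLASSES = ('cat', 'dog')

# A single class still has to be a tuple, which requires the trailing comma.
SINGLE_CLASS = ('cat',)

# Without the comma this is just the string 'cat', not a tuple of labels.
NOT_A_TUPLE = ('cat')

print(type(SINGLE_CLASS).__name__, len(SINGLE_CLASS))
print(type(NOT_A_TUPLE).__name__, len(NOT_A_TUPLE))
```

Code that iterates over the string version sees the characters 'c', 'a', 't' as three separate class names.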

4. Edit class_names.py

Run cd /mmdetection/mmdet/core/evaluation, then open class_names.py.

Change the labels returned by voc_classes() to the labels of your own dataset, then save and exit.

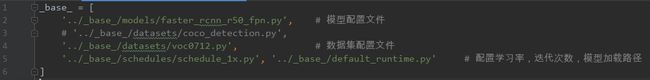
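As a rough illustration of the edit, the function ends up returning a list of your labels. The sketch assumes `voc_classes()` returns a plain list, as in MMDetection 2.x, and reuses the hypothetical labels 'cat' and 'dog'; `CLASSES` is repeated here only so the consistency check runs on its own.

```python
def voc_classes():
    """Class names used when evaluating VOC-style datasets (hypothetical labels)."""
    return ['cat', 'dog']


# The same labels, in the same order, as CLASSES in mmdet/datasets/voc.py.
CLASSES = ('cat', 'dog')

# Catch a mismatch between the two files early.
assert tuple(voc_classes()) == CLASSES, 'voc.py and class_names.py disagree'
```

Keeping the order identical in both files avoids evaluation results that are reported under the wrong label.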

5. Edit the config file faster_rcnn_r50_fpn_1x_coco.py

This walkthrough trains a Faster R-CNN model. Run cd mmdetection/configs/faster_rcnn, then open faster_rcnn_r50_fpn_1x_coco.py.

Replace the original coco_detection.py entry with voc0712.py.

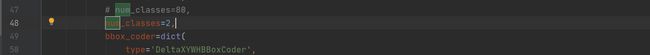

6. Edit faster_rcnn_r50_fpn.py

Run cd /mmdetection/configs/_base_/models.

Open faster_rcnn_r50_fpn.py and set num_classes to the number of classes in your dataset. Do not add 1 for the background class; MMDetection 2.x no longer counts it.
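The fragment below shows where `num_classes` sits, assuming the MMDetection 2.x Faster R-CNN config in which it belongs to `model.roi_head.bbox_head`. It is not a complete model definition: every other key of the real file is omitted, and the labels are hypothetical.

```python
# Hypothetical labels; use the same tuple as CLASSES in mmdet/datasets/voc.py.
CLASSES = ('cat', 'dog')

# Only the nesting that leads to num_classes is shown here.
model = dict(
    roi_head=dict(
        bbox_head=dict(
            # Number of foreground classes only, with no +1 for background.
            num_classes=len(CLASSES))))

print(model['roi_head']['bbox_head']['num_classes'])
```

Deriving the value from `len(CLASSES)` is only for illustration; in the config file itself you would write the number directly.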

7. Start training

Train on a specific GPU:

CUDA_VISIBLE_DEVICES=0 python3 ./tools/train.py ./configs/faster_rcnn/faster_rcnn_r50_fpn_1x_coco.py

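8. Predict with the trained model

tools/test.py runs the trained model on the images listed in test.txt; the --show flag displays the predictions:

python tools/test.py work_dirs/{auto-generated training dir}/{config file.py} work_dirs/{auto-generated training dir}/{epoch_12.pth} --show

For example, if the config file is named faster_rcnn_r50_fpn_1x_voc.py: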

python tools/test.py work_dirs/faster_rcnn_r50_fpn_1x_voc/faster_rcnn_r50_fpn_1x_voc.py work_dirs/faster_rcnn_r50_fpn_1x_voc/epoch_12.pth --show

Meaning of the config file name fields

Config file names are built from the fields below. Fields in {} are required and fields in [] are optional.

- {model}: the model type, e.g. faster_rcnn, mask_rcnn, etc.

- [model setting]: specific settings for some models, e.g. without_semantic for htc, moment for reppoints, etc.

- {backbone}: the backbone type, e.g. r50 (ResNet-50), x101 (ResNeXt-101). This is the feature extraction network.

- {neck}: the neck type, e.g. fpn, pafpn, nasfpn, c4.

- [norm_setting]: bn (Batch Normalization) is used unless specified otherwise. Other norm layer types are gn (Group Normalization) and syncbn (Synchronized Batch Normalization). gn-head/gn-neck means GN is applied only to the head/neck, while gn-all means GN is applied to the whole model, i.e. the backbone, neck and head.

- [misc]: miscellaneous model settings or plugins, e.g. dconv, gcb, attention, albu, mstrain.

- [gpu x batch_per_gpu]: the number of GPUs and the batch size per GPU. The default is 8x2 (8 GPUs with 2 samples each, for a total batch size of 16).

- {schedule}: the training schedule, e.g. 1x, 2x, 20e. 1x and 2x mean 12 and 24 epochs respectively. 20e is used for cascade models and means 20 epochs. For 1x/2x, the learning rate is divided by 10 at the 8th/16th and 11th/22nd epochs. For 20e, it is divided by 10 at the 16th and 19th epochs.

- {dataset}: the dataset, e.g. coco, cityscapes, voc_0712, wider_face.
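The naming scheme and the schedule arithmetic above can be sketched in a few lines. All three helpers are hypothetical and not part of MMDetection. `split_config_name` only handles names made of the five required fields. `lr_at_epoch` assumes a base learning rate of 0.02 and counts the epochs already completed. `scale_lr` applies the linear scaling rule relative to the default 8x2 setup, which is an assumption you should check against your own config.

```python
def split_config_name(name):
    """Split a config name that contains only the five required fields."""
    model, backbone, neck, schedule, dataset = name.rsplit('_', 4)
    return dict(model=model, backbone=backbone, neck=neck,
                schedule=schedule, dataset=dataset)


def lr_at_epoch(completed_epochs, base_lr=0.02, steps=(8, 11), gamma=0.1):
    """Learning rate under step decay once `completed_epochs` epochs have finished."""
    num_decays = sum(1 for step in steps if completed_epochs >= step)
    return base_lr * gamma ** num_decays


def scale_lr(num_gpus, samples_per_gpu, ref_lr=0.02, ref_batch=16):
    """Scale the learning rate in proportion to the total batch size."""
    return ref_lr * (num_gpus * samples_per_gpu) / ref_batch


fields = split_config_name('faster_rcnn_r50_fpn_1x_coco')
print(fields)

# 1x schedule: the rate drops after epoch 8 and again after epoch 11.
for epochs_done in (0, 8, 11):
    print(epochs_done, lr_at_epoch(epochs_done))

# Single-GPU training with 2 samples per GPU, as in step 7.
print(scale_lr(num_gpus=1, samples_per_gpu=2))
```

For the 2x schedule you would pass `steps=(16, 22)`, and for 20e `steps=(16, 19)`.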