Code Implementations of TextRNN, TextLSTM, and Bi-LSTM Recurrent Neural Networks

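A recurrent neural network (RNN) is a class of neural networks designed for sequence data. It can handle tasks such as predicting the next word or filling in a missing word (cloze).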
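To make "processing sequence data" concrete, here is a minimal sketch of the recurrence that a single-layer `nn.RNN` applies at every time step: `h_t = tanh(W_ih x_t + b_ih + W_hh h_(t-1) + b_hh)`. The tensor sizes are arbitrary values chosen only for illustration, and the hand-written loop is meant to show the mechanism, not to replace the library call.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
rnn = nn.RNN(input_size=4, hidden_size=3)  # sizes are illustrative assumptions

x = torch.randn(5, 2, 4)  # [n_step, batch_size, input_size]

with torch.no_grad():
    # Hand-written recurrence, reusing the layer's own weights.
    h = torch.zeros(2, 3)  # initial hidden state: [batch_size, hidden_size]
    manual_steps = []
    for x_t in x:  # iterate over time steps
        h = torch.tanh(
            x_t @ rnn.weight_ih_l0.T + rnn.bias_ih_l0
            + h @ rnn.weight_hh_l0.T + rnn.bias_hh_l0
        )
        manual_steps.append(h)
    manual_outputs = torch.stack(manual_steps)  # [n_step, batch_size, hidden_size]

    # Library call; the initial hidden state defaults to zeros.
    outputs, h_n = rnn(x)

matches = torch.allclose(manual_outputs, outputs, atol=1e-5)
print("manual loop matches nn.RNN:", matches)
```

The same hidden state `h` is carried from one step to the next, which is what lets the network condition each prediction on the words it has already seen.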

The sections below give complete code for TextRNN, TextLSTM, and Bi-LSTM.

1. TextRNN

import numpy as np
import torch
import torch.nn as nn
import torch.optim as optim


class TextRNN(nn.Module):
    def __init__(self, dict_size, n_hidden):
        super(TextRNN, self).__init__()
        # input_size: length of each one-hot vector (vocabulary size); hidden_size: hidden-state dimension
        self.rnn = nn.RNN(input_size=dict_size, hidden_size=n_hidden)
        self.W = nn.Linear(n_hidden, dict_size, bias=False)
        self.b = nn.Parameter(torch.ones([dict_size]))

    def forward(self, hidden, X):
        # nn.RNN defaults to batch_first=False, so move the time axis to the front.
        # (With batch_first=True, X could stay as [batch_size, n_step, dict_size].)
        X = X.transpose(0, 1)  # X: [n_step, batch_size, dict_size]
        outputs, hidden = self.rnn(X, hidden)
        # outputs: [n_step, batch_size, num_directions(=1) * n_hidden]
        # hidden:  [num_layers(=1) * num_directions(=1), batch_size, n_hidden]
        outputs = outputs[-1]  # last time step: [batch_size, num_directions(=1) * n_hidden]
        outputs = self.W(outputs) + self.b  # logits: [batch_size, dict_size]
        return outputs

def make_batch(sentences, word_dict, dict_size):
    input_batch = []
    target_batch = []
    for sen in sentences:
        words = sen.split()
        input_ids = [word_dict[n] for n in words[:-1]]  # every word except the last is input
        target = word_dict[words[-1]]  # the last word is the prediction target
        input_batch.append(np.eye(dict_size)[input_ids])  # one-hot encode each input word
        target_batch.append(target)
    return input_batch, target_batch

if __name__ == '__main__':
    n_step = 2  # number of time steps (input words per sentence)
    n_hidden = 5  # hidden-state dimension
    sentences = ["i like dog", "i love coffee", "i hate milk"]

    word_list = " ".join(sentences).split()
    word_list = list(set(word_list))
    word_dict = {w: i for i, w in enumerate(word_list)}
    number_dict = {i: w for i, w in enumerate(word_list)}
    dict_size = len(word_dict)
    batch_size = len(sentences)

    model = TextRNN(dict_size, n_hidden)
    criterion = nn.CrossEntropyLoss()  # cross-entropy loss
    optimizer = optim.Adam(model.parameters(), lr=0.001)  # optimizer

    input_batch, target_batch = make_batch(sentences, word_dict, dict_size)
    # Stack into one ndarray first; building a tensor from a list of arrays is slow.
    input_batch = torch.tensor(np.array(input_batch), dtype=torch.float32)  # [3, 2, 7]
    target_batch = torch.LongTensor(target_batch)  # [3]

    # Training

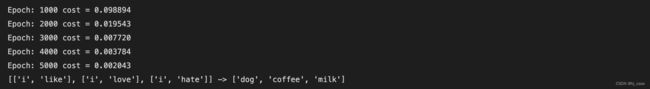

    for epoch in range(5000):
        optimizer.zero_grad()
        # hidden: [num_layers * num_directions, batch_size, hidden_size]
        hidden = torch.zeros(1, batch_size, n_hidden)
        # input_batch: [batch_size, n_step, dict_size]
        output = model(hidden, input_batch)
        # output: [batch_size, dict_size]; target_batch: [batch_size] (LongTensor of class indices, not one-hot)
        loss = criterion(output, target_batch)
        if (epoch + 1) % 1000 == 0:
            print('Epoch:', '%04d' % (epoch + 1), 'cost =', '{:.6f}'.format(loss.item()))
        loss.backward()
        optimizer.step()

    # Prediction
    inputs = [sen.split()[:2] for sen in sentences]
    hidden = torch.zeros(1, batch_size, n_hidden)
    with torch.no_grad():
        predict = model(hidden, input_batch).argmax(dim=1)  # [batch_size]
    print(inputs, '->', [number_dict[n.item()] for n in predict])
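The `make_batch` function above builds one-hot vectors by indexing an identity matrix with a list of word indices. The short sketch below isolates that trick and compares it with `torch.nn.functional.one_hot`, which is one possible alternative. The vocabulary size and the indices are made-up values for illustration only.

```python
import numpy as np
import torch
import torch.nn.functional as F

dict_size = 7      # assumed vocabulary size, for illustration only
indices = [3, 0]   # hypothetical word indices of a two-word input

# Row i of the identity matrix is the one-hot vector for index i,
# so fancy indexing with a list selects one row per word.
one_hot_np = np.eye(dict_size)[indices]  # shape: (2, 7), dtype float64

# Equivalent encoding built directly in PyTorch.
one_hot_torch = F.one_hot(torch.tensor(indices), num_classes=dict_size).float()

same = torch.equal(torch.from_numpy(one_hot_np).float(), one_hot_torch)
print(one_hot_np.shape, same)
```

Either form yields a `[n_step, dict_size]` matrix per sentence; stacking these over sentences gives the `[batch_size, n_step, dict_size]` input the model expects.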

2. TextLSTM

import numpy as np
import torch
import torch.nn as nn
import torch.optim as optim


class TextLSTM(nn.Module):
    def __init__(self, class_size, n_hidden):
        super(TextLSTM, self).__init__()
        self.n_hidden = n_hidden
        # input_size: length of each one-hot vector (number of classes); hidden_size: hidden-state dimension
        self.lstm = nn.LSTM(input_size=class_size, hidden_size=n_hidden)
        self.W = nn.Linear(n_hidden, class_size, bias=False)
        self.b = nn.Parameter(torch.ones([class_size]))

    def forward(self, X):
        inputs = X.transpose(0, 1)  # [n_step, batch_size, class_size]
        batch_size = X.size(0)
        # Initial states: [num_layers(=1) * num_directions(=1), batch_size, n_hidden]
        hidden_state = torch.zeros(1, batch_size, self.n_hidden)
        cell_state = torch.zeros(1, batch_size, self.n_hidden)
        outputs, (_, _) = self.lstm(inputs, (hidden_state, cell_state))
        outputs = outputs[-1]  # last time step: [batch_size, n_hidden]
        logits = self.W(outputs) + self.b  # [batch_size, class_size]
        return logits

def make_batch(seq_data, word_dict, class_size):
    input_batch, target_batch = [], []
    for seq in seq_data:
        input_ids = [word_dict[n] for n in seq[:-1]]  # every character except the last is input
        target = word_dict[seq[-1]]  # the last character is the prediction target
        input_batch.append(np.eye(class_size)[input_ids])  # one-hot encode each input character
        target_batch.append(target)
    return input_batch, target_batch

if __name__ == '__main__':
    n_step = 3  # sequence length (input characters per word)
    n_hidden = 128  # hidden-state dimension

    char_arr = [c for c in 'abcdefghijklmnopqrstuvwxyz']
    word_dict = {n: i for i, n in enumerate(char_arr)}
    number_dict = {i: w for i, w in enumerate(char_arr)}
    class_size = len(word_dict)  # vocabulary size (26 letters)

    seq_data = ['make', 'need', 'coal', 'word', 'love', 'hate', 'live', 'home', 'hash', 'star']

    model = TextLSTM(class_size, n_hidden)
    criterion = nn.CrossEntropyLoss()
    optimizer = optim.Adam(model.parameters(), lr=0.001)

    input_batch, target_batch = make_batch(seq_data, word_dict, class_size)
    # Stack into one ndarray first; building a tensor from a list of arrays is slow.
    input_batch = torch.tensor(np.array(input_batch), dtype=torch.float32)  # [10, 3, 26]
    target_batch = torch.LongTensor(target_batch)  # [10]

    # Training

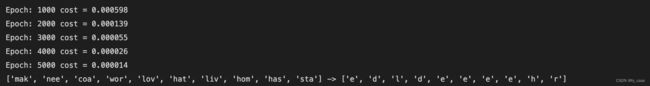

    for epoch in range(3000):
        optimizer.zero_grad()
        output = model(input_batch)  # [batch_size, class_size]
        loss = criterion(output, target_batch)
        if (epoch + 1) % 100 == 0:
            print('Epoch:', '%04d' % (epoch + 1), 'cost =', '{:.6f}'.format(loss.item()))
        loss.backward()
        optimizer.step()

    # Prediction
    inputs = [sen[:3] for sen in seq_data]
    with torch.no_grad():
        predict = model(input_batch).argmax(dim=1)  # [batch_size]
    print(inputs, '->', [number_dict[n.item()] for n in predict])
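`TextLSTM.forward` above creates zero-filled hidden and cell states explicitly. As a small check of what that means, the sketch below shows that `nn.LSTM` already defaults to zero initial states when none are passed, and that for a single-layer, unidirectional LSTM the last time step of `outputs` equals the final hidden state. The hidden size and the random input are assumptions chosen for illustration.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
n_hidden = 8  # illustrative hidden size, smaller than the 128 used above
lstm = nn.LSTM(input_size=26, hidden_size=n_hidden)

x = torch.randn(3, 10, 26)  # [n_step, batch_size, class_size]
h0 = torch.zeros(1, 10, n_hidden)  # [num_layers * num_directions, batch_size, n_hidden]
c0 = torch.zeros(1, 10, n_hidden)

with torch.no_grad():
    out_explicit, (h_n, c_n) = lstm(x, (h0, c0))  # explicit zero states
    out_default, _ = lstm(x)                      # states omitted

same_outputs = torch.allclose(out_explicit, out_default)
last_step_is_h_n = torch.allclose(out_explicit[-1], h_n[0])
print(same_outputs, last_step_is_h_n)
```

Passing the states explicitly, as the class above does, is still useful when the initial state should be something other than zeros, for example when carrying state across consecutive chunks of a long sequence.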

3. Bi-LSTM

import numpy as np
import torch
import torch.nn as nn
import torch.optim as optim


class BiLSTM(nn.Module):
    def __init__(self, dict_size, n_hidden):
        super(BiLSTM, self).__init__()
        self.n_hidden = n_hidden
        self.lstm = nn.LSTM(input_size=dict_size, hidden_size=n_hidden, bidirectional=True)
        # The forward and backward hidden states are concatenated, hence n_hidden * 2.
        self.W = nn.Linear(n_hidden * 2, dict_size, bias=False)
        self.b = nn.Parameter(torch.ones([dict_size]))

    def forward(self, X):
        inputs = X.transpose(0, 1)  # [sequence_len, batch_size, dict_size]
        batch_size = X.size(0)
        # Initial states: [num_layers(=1) * num_directions(=2), batch_size, n_hidden]
        hidden_state = torch.zeros(1 * 2, batch_size, self.n_hidden)
        cell_state = torch.zeros(1 * 2, batch_size, self.n_hidden)
        outputs, (_, _) = self.lstm(inputs, (hidden_state, cell_state))
        outputs = outputs[-1]  # last time step: [batch_size, n_hidden * 2]
        logits = self.W(outputs) + self.b  # [batch_size, dict_size]
        return logits

def make_batch(sentence, word_dict, dict_size, max_len):
    # The sentence is now an explicit argument instead of an implicit global.
    input_batch = []
    target_batch = []
    words = sentence.split()
    for i, word in enumerate(words[:-1]):
        input_ids = [word_dict[n] for n in words[:(i + 1)]]  # prefix of i + 1 words
        # Pad to max_len with index 0. Note that 0 is also the index of a real word here.
        input_ids = input_ids + [0] * (max_len - len(input_ids))
        target = word_dict[words[i + 1]]  # the word that follows the prefix
        input_batch.append(np.eye(dict_size)[input_ids])  # one-hot encode each position
        target_batch.append(target)
    return input_batch, target_batch


if __name__ == '__main__':
    n_hidden = 5  # hidden-state dimension

    sentence = (
        'Lorem ipsum dolor sit amet consectetur adipisicing elit '
        'sed do eiusmod tempor incididunt ut labore et dolore magna '
        'aliqua Ut enim ad minim veniam quis nostrud exercitation'
    )

    # Build the vocabulary once so both dictionaries share the same ordering.
    word_list = list(set(sentence.split()))
    word_dict = {w: i for i, w in enumerate(word_list)}
    number_dict = {i: w for i, w in enumerate(word_list)}
    dict_size = len(word_dict)
    max_len = len(sentence.split())

    model = BiLSTM(dict_size, n_hidden)
    criterion = nn.CrossEntropyLoss()
    optimizer = optim.Adam(model.parameters(), lr=0.001)

    input_batch, target_batch = make_batch(sentence, word_dict, dict_size, max_len)
    # Stack into one ndarray first; building a tensor from a list of arrays is slow.
    input_batch = torch.tensor(np.array(input_batch), dtype=torch.float32)
    target_batch = torch.LongTensor(target_batch)

    # Training
    for epoch in range(10000):
        optimizer.zero_grad()
        output = model(input_batch)  # [batch_size, dict_size]
        loss = criterion(output, target_batch)
        if (epoch + 1) % 1000 == 0:
            print('Epoch:', '%04d' % (epoch + 1), 'cost =', '{:.6f}'.format(loss.item()))
        loss.backward()
        optimizer.step()

    # Prediction
    with torch.no_grad():
        predict = model(input_batch).argmax(dim=1)  # [batch_size]
    print(sentence)
    print([number_dict[n.item()] for n in predict])
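The Bi-LSTM above classifies from `outputs[-1]`, the last time step. With `bidirectional=True`, that slice holds the forward direction's final state in its first `n_hidden` columns, while its remaining columns hold the backward direction's state after it has read only the last input. The backward direction's final state sits at `outputs[0]` instead. The sketch below checks this layout on random data. The sizes are illustrative assumptions, and combining both final states at the end is shown only as one possible alternative, not as what the code above does.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
n_hidden = 4  # illustrative size
lstm = nn.LSTM(input_size=6, hidden_size=n_hidden, bidirectional=True)

x = torch.randn(5, 2, 6)  # [sequence_len, batch_size, input_size]

with torch.no_grad():
    outputs, (h_n, c_n) = lstm(x)  # outputs: [5, 2, n_hidden * 2], h_n: [2, 2, n_hidden]

forward_final = outputs[-1, :, :n_hidden]   # forward direction after the whole sequence
backward_final = outputs[0, :, n_hidden:]   # backward direction after the whole sequence

forward_ok = torch.allclose(forward_final, h_n[0])
backward_ok = torch.allclose(backward_final, h_n[1])

# One possible alternative representation: concatenate both final states.
both_final = torch.cat([h_n[0], h_n[1]], dim=1)  # [batch_size, n_hidden * 2]
print(forward_ok, backward_ok, both_final.shape)
```

Because `both_final` has the same `[batch_size, n_hidden * 2]` shape as `outputs[-1]`, it could be fed to the same `nn.Linear(n_hidden * 2, dict_size)` layer without other changes.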