[Machine Learning] Common Metrics for Image Semantic Segmentation: Dice Coefficient, Sensitivity, Specificity, IoU, and Python Implementations

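Contents

- Background
- 1. Dice Coefficient and IoU
- 2. Sensitivity (= Recall), Specificity, and Precision (= PPV)
  - 2.1 Sensitivity (Recall) and Specificity
  - 2.2 Relationship between Sensitivity and Specificity
  - 2.3 Relationship between Recall and Precision
- 3. F1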

Background

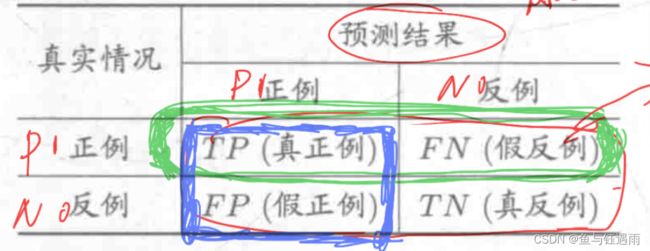

首先,对于像素点,我们要知道,当预测的像素点类别和其真实类别不同或者相同时,我们可以用混淆矩阵来表示,如下图:
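The four cells can also be counted directly from a predicted mask and a label mask. Below is a minimal NumPy sketch that assumes binary masks with foreground = 1. The helper `confusion_counts` and the toy masks are hypothetical and exist only for illustration:

```python
import numpy as np

def confusion_counts(pred, gt):
    """Count TP, FP, FN, TN between two binary masks of the same shape."""
    pred = np.asarray(pred, dtype=bool)
    gt = np.asarray(gt, dtype=bool)
    tp = int(np.sum(pred & gt))    # predicted foreground, truly foreground
    fp = int(np.sum(pred & ~gt))   # predicted foreground, truly background
    fn = int(np.sum(~pred & gt))   # predicted background, truly foreground
    tn = int(np.sum(~pred & ~gt))  # predicted background, truly background
    return tp, fp, fn, tn

# Hypothetical 3x3 prediction and label
pred = np.array([[1, 1, 0],
                 [0, 1, 0],
                 [0, 0, 0]])
gt = np.array([[1, 0, 0],
               [0, 1, 1],
               [0, 0, 0]])
print(confusion_counts(pred, gt))  # (2, 1, 1, 5)
```

The four counts always add up to the number of pixels, because every pixel lands in exactly one cell.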

1. Dice Coefficient and IoU


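Most Dice code circulating online is written for batch_size=1. When it is given a batch with batch_size>1, it does not score each sample separately. Instead, it pools every pixel in the batch into a single number. Below I collect both the popular version and my modified version.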

The Dice coefficient is computed as:

$$\mathrm{Dice}=\frac{2\times TP}{(TP+FN)+(TP+FP)}$$
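TP+FN counts every pixel labelled as foreground, and TP+FP counts every pixel predicted as foreground. So the formula can be rewritten in terms of the two masks. Here $P$ is the set of predicted foreground pixels and $G$ is the labelled foreground; this notation is introduced only for illustration:

$$\mathrm{Dice}=\frac{2\,|P\cap G|}{|P|+|G|},\qquad |P\cap G|=TP,\quad |P|=TP+FP,\quad |G|=TP+FN$$

For binary masks, $|P|$ and $|G|$ are simply the mask sums. That is why the code divides by `output.sum() + target.sum()`.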

import torch

def dice_coef(output, target):  # batch_size=1
    smooth = 1e-5
    # Turn the logits into a binary mask that matches the label format
    output = (torch.sigmoid(output) > 0.5).float()
    intersection = (output * target).sum()  # = TP
    # dice = 2*TP / ((TP+FN) + (TP+FP)); the trailing backslash continues the statement
    return (2. * intersection + smooth) / \
           (output.sum() + target.sum() + smooth)

import torch

def dice_coef(output, target):  # batch_size > 1
    smooth = 1e-5
    # Binarize the probabilities so they match the label format
    output = (torch.sigmoid(output) > 0.5).float().cpu().numpy()
    target = target.detach().cpu().numpy()
    dice = 0.
    if len(output) > 1:  # more than one sample: accumulate Dice sample by sample
        for i in range(len(output)):
            intersection = (output[i] * target[i]).sum()
            dice += (2. * intersection + smooth) / (output[i].sum() + target[i].sum() + smooth)
    else:
        intersection = (output * target).sum()  # a single number, = TP
        dice = (2. * intersection + smooth) / (output.sum() + target.sum() + smooth)
    return dice  # sum of per-sample Dice; divide by the batch size for the mean
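The two versions compute different things on a batch, and a NumPy sketch on a hypothetical batch of two 2x2 masks makes this concrete:

- **batch_size=1 function:** fed the whole batch, it pools all pixels into one score, shown as `pooled` below.
- **batch_size>1 function:** it accumulates the per-sample scores, shown as `per_sample` below. It returns their sum, so divide by the batch size to get the mean.

The helper `dice` is illustrative only:

```python
import numpy as np

def dice(pred, gt, smooth=1e-5):
    """Dice over every pixel in pred/gt (binary arrays of the same shape)."""
    inter = np.sum(pred * gt)
    return float((2 * inter + smooth) / (np.sum(pred) + np.sum(gt) + smooth))

# Hypothetical batch of two 2x2 binary masks, shape (batch, H, W)
pred = np.array([[[1, 1], [1, 1]],   # sample 0: matches its label exactly
                 [[0, 0], [0, 0]]])  # sample 1: misses the only foreground pixel
gt = np.array([[[1, 1], [1, 1]],
               [[0, 1], [0, 0]]])

pooled = dice(pred, gt)                              # all 8 pixels pooled: ~0.889
per_sample = [dice(p, g) for p, g in zip(pred, gt)]  # ~[1.0, 0.0]
mean_dice = sum(per_sample) / len(per_sample)        # ~0.5

print(f"pooled={pooled:.3f}, mean={mean_dice:.3f}")
```

The pooled score lets the large, correct sample dominate. The per-sample mean gives the failed sample equal weight.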

IoU is computed as:

$$\mathrm{IoU}=\frac{TP}{TP+FN+FP}$$
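Combining the two formulas gives $\mathrm{Dice}=2\,\mathrm{IoU}/(1+\mathrm{IoU})$. So for a single pair of masks, Dice is never smaller than IoU, and both rank predictions in the same order. Below is a NumPy check on random masks; the data are hypothetical and no smoothing is applied:

```python
import numpy as np

rng = np.random.default_rng(0)
pred = rng.random((64, 64)) > 0.5  # hypothetical random binary prediction
gt = rng.random((64, 64)) > 0.5    # hypothetical random binary label

tp = np.sum(pred & gt)
fp = np.sum(pred & ~gt)
fn = np.sum(~pred & gt)

dice = 2 * tp / (2 * tp + fp + fn)
iou = tp / (tp + fp + fn)

# Dice is a monotonic function of IoU: Dice = 2*IoU / (1 + IoU)
print(dice, 2 * iou / (1 + iou))
```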

import torch

# batch_size = 1
def iou_score(output, target):
    smooth = 1e-5
    if torch.is_tensor(output):
        output = torch.sigmoid(output).detach().cpu().numpy()
    if torch.is_tensor(target):
        target = target.detach().cpu().numpy()
    output_ = output > 0.5  # binarize the probabilities
    target_ = target > 0.5
    intersection = (output_ & target_).sum()  # TP
    union = (output_ | target_).sum()         # TP + FP + FN
    return (intersection + smooth) / (union + smooth)

import torch

# batch_size > 1
def iou_score(output, target):
    smooth = 1e-5
    if torch.is_tensor(output):
        output = torch.sigmoid(output).detach().cpu().numpy()
    if torch.is_tensor(target):
        target = target.detach().cpu().numpy()
    output_ = output > 0.5
    target_ = target > 0.5
    iou = 0.
    if len(output) > 1:  # more than one sample: accumulate IoU sample by sample
        for i in range(len(output)):
            intersection = (output_[i] & target_[i]).sum()
            union = (output_[i] | target_[i]).sum()
            iou += (intersection + smooth) / (union + smooth)
    else:
        intersection = (output_ & target_).sum()
        union = (output_ | target_).sum()
        iou = (intersection + smooth) / (union + smooth)
    return iou  # sum of per-sample IoU; divide by the batch size for the mean

2. Sensitivity (= Recall), Specificity, and Precision (= PPV)

2.1 Sensitivity (Recall) and Specificity

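Sensitivity (recall) is the fraction of truly positive pixels that the model predicts as positive:

$$SE=\frac{TP}{TP+FN}$$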

import torch

def get_sensitivity(output, gt):  # sensitivity: SE = TP/(TP+FN)
    # output: probabilities (already passed through sigmoid); gt: binary label
    SE = 0.
    output = output > 0.5
    gt = gt > 0.5
    TP = output & gt   # predicted 1 and labelled 1
    FN = ~output & gt  # predicted 0 but labelled 1
    # Improvement for batch_num > 1: accumulate sample by sample
    if len(output) > 1:
        for i in range(len(output)):
            SE += float(torch.sum(TP[i])) / (float(torch.sum(TP[i] | FN[i])) + 1e-6)
    else:
        SE = float(torch.sum(TP)) / (float(torch.sum(TP | FN)) + 1e-6)  # the original single-line version
    return SE  # sum of SE over all samples in the batch
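The loop in `get_sensitivity` returns the sum of per-sample sensitivities, so the caller has to divide by the batch size. Below is a vectorized PyTorch sketch that returns one value per sample instead. Like `get_sensitivity`, it assumes `prob` already holds probabilities, not logits. The name `sensitivity_per_sample` is hypothetical:

```python
import torch

def sensitivity_per_sample(prob, gt, threshold=0.5, eps=1e-6):
    """Per-sample sensitivity TP / (TP + FN) for a batch of shape (N, ...)."""
    pred = prob > threshold
    gt = gt > threshold
    dims = tuple(range(1, pred.dim()))  # reduce over every axis except the batch axis
    tp = (pred & gt).sum(dim=dims).float()
    fn = (~pred & gt).sum(dim=dims).float()
    return tp / (tp + fn + eps)

# Hypothetical batch of two 2x2 probability maps and labels
prob = torch.tensor([[[0.9, 0.2], [0.8, 0.1]],
                     [[0.3, 0.7], [0.4, 0.6]]])
gt = torch.tensor([[[1., 0.], [1., 0.]],
                   [[1., 1.], [0., 0.]]])
se = sensitivity_per_sample(prob, gt)
print(se, se.mean())  # per-sample values ~[1.0, 0.5], mean ~0.75
```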

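Specificity is the fraction of truly negative (background) pixels that the model predicts as negative:

$$SP=\frac{TN}{TN+FP}$$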

import torch

def get_specificity(SR, GT, threshold=0.5):  # specificity: SP = TN/(FP+TN)
    SR = SR > threshold  # boolean masks
    GT = GT > threshold
    SP = 0.
    TN = ~SR & ~GT  # TN: True Negative, predicted 0 and labelled 0
    FP = SR & ~GT   # FP: False Positive, predicted 1 but labelled 0
    # Improvement for batch_num > 1: accumulate sample by sample
    if len(SR) > 1:
        for i in range(len(SR)):
            SP += float(torch.sum(TN[i])) / (float(torch.sum(TN[i] | FP[i])) + 1e-6)
    else:
        SP = float(torch.sum(TN)) / (float(torch.sum(TN | FP)) + 1e-6)  # the original single-line version
    return SP  # sum of SP over all samples in the batch

These two metrics are common in medical imaging, whereas machine learning more commonly reports recall and precision.
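When reading specificity on segmentation masks, keep in mind that background pixels usually far outnumber foreground pixels. TN is therefore large, and specificity can stay close to 1 even for a poor prediction. Below is a NumPy sketch on a hypothetical 100x100 image with a 10x10 lesion; the helper name and the data are illustrative only:

```python
import numpy as np

def sensitivity_specificity(pred, gt):
    """Return (TP/(TP+FN), TN/(TN+FP)) for two binary masks."""
    pred = np.asarray(pred, dtype=bool)
    gt = np.asarray(gt, dtype=bool)
    tp = np.sum(pred & gt)
    fn = np.sum(~pred & gt)
    tn = np.sum(~pred & ~gt)
    fp = np.sum(pred & ~gt)
    return tp / (tp + fn), tn / (tn + fp)

# Hypothetical label: a 10x10 lesion in one corner of a 100x100 image
gt = np.zeros((100, 100), dtype=bool)
gt[:10, :10] = True
# A poor prediction: only a quarter of the lesion plus 20 false-positive pixels
pred = np.zeros((100, 100), dtype=bool)
pred[:5, :5] = True
pred[50, 50:70] = True

se, sp = sensitivity_specificity(pred, gt)
print(se, sp)  # sensitivity 0.25, specificity 9880/9900 (about 0.998)
```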

2.2 Relationship between Sensitivity and Specificity

(Omitted for now.)

2.3 Relationship between Recall and Precision

PPV (positive predictive value) is the same quantity as precision:

$$PPV=\frac{TP}{TP+FP}$$

import torch

def ppv(output, target):  # positive predictive value = precision: pr = TP/(TP+FP)
    smooth = 1e-5
    if torch.is_tensor(output):
        output = torch.sigmoid(output).detach().cpu().numpy()
    if torch.is_tensor(target):
        target = target.detach().cpu().numpy()
    # Binarize as in dice_coef / iou_score, so that output.sum() counts TP + FP
    output = (output > 0.5).astype(float)
    precision = 0.
    if len(output) > 1:  # more than one sample: accumulate sample by sample
        for i in range(len(output)):
            intersection = (output[i] * target[i]).sum()
            precision += (intersection + smooth) / (output[i].sum() + smooth)
    else:
        intersection = (output * target).sum()  # a single number, = TP
        precision = (intersection + smooth) / (output.sum() + smooth)
    return precision  # sum of per-sample precision over the batch
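The code above only covers PPV. To show how recall and precision interact, the sketch below thresholds hypothetical pixel probabilities at three levels. Raising the threshold shrinks the set of predicted positives, so recall can only stay the same or drop. Precision usually rises but is not guaranteed to. The helper `precision_recall` and the data are illustrative only:

```python
import numpy as np

def precision_recall(prob, gt, threshold):
    """Precision TP/(TP+FP) and recall TP/(TP+FN) after thresholding prob."""
    pred = prob > threshold
    gt = gt.astype(bool)
    tp = np.sum(pred & gt)
    fp = np.sum(pred & ~gt)
    fn = np.sum(~pred & gt)
    precision = tp / (tp + fp) if tp + fp > 0 else 0.0
    recall = tp / (tp + fn) if tp + fn > 0 else 0.0
    return precision, recall

# Hypothetical pixel probabilities (sorted) and their labels
prob = np.array([0.95, 0.8, 0.6, 0.45, 0.35, 0.2, 0.1, 0.05])
gt = np.array([1, 1, 0, 1, 0, 1, 0, 0])

for t in (0.3, 0.5, 0.7):
    p, r = precision_recall(prob, gt, t)
    print(f"threshold={t}: precision={p:.3f}, recall={r:.3f}")
# threshold=0.3: precision=0.600, recall=0.750
# threshold=0.5: precision=0.667, recall=0.500
# threshold=0.7: precision=1.000, recall=0.500
```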

3. F1

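import torch

def get_F1(output, gt):
    # Assumes output holds raw logits: ppv applies the sigmoid itself,
    # while get_sensitivity thresholds its input at 0.5 directly.
    # For batch_size > 1 both helpers return sums over the batch, so pass a batch of size 1.
    se = get_sensitivity(torch.sigmoid(output), gt)
    pc = ppv(output, gt)  # precision, defined in section 2.3
    f1 = 2 * se * pc / (se + pc + 1e-6)
    return f1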
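For binary masks, F1 computed from pooled counts is algebraically the same as Dice. Substituting $PC=TP/(TP+FP)$ and $SE=TP/(TP+FN)$ into $2\cdot SE\cdot PC/(SE+PC)$ gives $2TP/(2TP+FP+FN)$. Below is a NumPy check on hypothetical random masks without smoothing terms. The functions above add small smoothing constants and sum per sample, so their outputs can differ slightly from this identity:

```python
import numpy as np

rng = np.random.default_rng(42)
pred = rng.random((2, 32, 32)) > 0.6  # hypothetical binary predictions
gt = rng.random((2, 32, 32)) > 0.5    # hypothetical binary labels

tp = np.sum(pred & gt)
fp = np.sum(pred & ~gt)
fn = np.sum(~pred & gt)

precision = tp / (tp + fp)
recall = tp / (tp + fn)
f1 = 2 * precision * recall / (precision + recall)
dice = 2 * tp / (2 * tp + fp + fn)
print(f1, dice)  # equal up to floating-point rounding
```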