Image quality metrics (Python)

A consolidated set of notes on image quality metrics:

1. Information entropy

Code:

import math

import cv2
import numpy as np

# Reference results: 'img/1-3.jpg' = 6.0404; out2.jpg = 7.0361; result2 = 7.1585
image = cv2.imread('img/result2.jpg', 0)  # 0 = read as grayscale
img = np.array(image)

# Count how often each of the 256 grey levels occurs
tmp = [0] * 256
k = 0
for i in range(len(img)):
    for j in range(len(img[i])):
        val = img[i][j]
        tmp[val] = tmp[val] + 1
        k = k + 1

# Turn the counts into probabilities
for i in range(len(tmp)):
    tmp[i] = float(tmp[i] / k)

# Shannon entropy in bits; empty bins contribute nothing
res = 0.0
for i in range(len(tmp)):
    if tmp[i] != 0:
        res = res - tmp[i] * math.log2(tmp[i])

print(res)
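The pixel-by-pixel loop above can be slow on large images. As a rough sketch, the same histogram-based entropy can be computed with vectorised NumPy calls. The function name `image_entropy` and the synthetic test arrays are illustrative assumptions, not part of the original notes.

```python
import numpy as np

def image_entropy(gray):
    """Shannon entropy (in bits) of an 8-bit grayscale image stored as a uint8 array."""
    # Histogram over the 256 possible grey levels
    counts = np.bincount(gray.ravel(), minlength=256)
    p = counts / counts.sum()
    # Drop empty bins: the term 0 * log(0) is taken to be 0
    p = p[p > 0]
    return float(-np.sum(p * np.log2(p)))

# Synthetic checks (hypothetical data): a constant image carries no information,
# while an image that uses all 256 levels equally often reaches the 8-bit maximum.
flat = np.full((16, 16), 128, dtype=np.uint8)
uniform = np.arange(256, dtype=np.uint8).reshape(16, 16)
entropy_flat = image_entropy(flat)
entropy_uniform = image_entropy(uniform)
```

For a real file, the array returned by `cv2.imread(path, 0)` can be passed in directly.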

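2. Mean and standard deviation

The mean reflects the brightness of an image: the larger the mean, the brighter the image. A brighter image is not automatically a better one, though; that depends on the application.

The standard deviation is used to assess contrast: a larger value means the grey levels are spread over a wider range, so details stand out more clearly. That makes it a useful quality metric.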
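To make the two quantities concrete, here is a small sketch on synthetic data. The helper name `brightness_and_contrast` and both test arrays are assumptions made for illustration only.

```python
import numpy as np

def brightness_and_contrast(gray):
    """Return (mean, standard deviation) of a grayscale image as Python floats."""
    g = gray.astype(np.float64)  # avoid integer rounding and overflow
    return float(g.mean()), float(g.std())

# A uniformly dark patch: low brightness, no contrast at all
dark_flat = np.full((4, 4), 50, dtype=np.uint8)
# A black/white checkerboard: medium brightness, maximal contrast
checker = (np.indices((4, 4)).sum(axis=0) % 2 * 255).astype(np.uint8)

flat_mean, flat_std = brightness_and_contrast(dark_flat)
checker_mean, checker_std = brightness_and_contrast(checker)
```

The checkerboard has a much larger standard deviation than the flat patch, which matches the reading of the standard deviation as a contrast measure.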

Code:

import cv2
import numpy as np

img = cv2.imread('img/1-3.jpg')
# Scale the pixel values to [0, 1] as float32
img = img.astype(np.float32) / 255
# cv2.imshow expects BGR channel order, so the image is shown as read.
# Convert with cv2.cvtColor(img, cv2.COLOR_BGR2RGB) only when displaying with plt.imshow.
cv2.imshow('img', img)

# Copy the data of img into mean_img with img.copy()
mean_img = img.copy()
# .mean() gives the mean of mean_img
print(mean_img.mean())
# mean_img -= mean_img.mean() is equivalent to mean_img = mean_img - mean_img.mean()
# Subtracting the mean yields a zero-mean array
mean_img -= mean_img.mean()
# Show the image
cv2.imshow('mean_img', mean_img)

std_img = mean_img.copy()
# Print the standard deviation of std_img
print(std_img.std())
# std_img /= std_img.std() is equivalent to std_img = std_img / std_img.std()
# Dividing by the standard deviation yields a unit-variance array
std_img /= std_img.std()
# Show the image
cv2.imshow('std_img', std_img)

cv2.waitKey(0)
cv2.destroyAllWindows()

3. Signal-to-noise ratio (peak signal-to-noise ratio)

Reference: CSDN blog post by 彩色海绵, "PSNR峰值信噪比(python代码实现+SSIM+MSIM)".

Code:

import cv2 as cv
import math
import numpy as np

def psnr1(img1, img2):
    # Compute the MSE. Dividing by 1.0 converts to float first,
    # because subtracting uint8 arrays directly would wrap around.
    mse = np.mean((img1 / 1.0 - img2 / 1.0) ** 2)
    # (Nearly) identical images: return a fixed cap instead of dividing by zero
    if mse < 1e-10:
        return 100
    # Compute the PSNR for a peak value of 255
    return 20 * math.log10(255 / math.sqrt(mse))

def psnr2(img1, img2):  # Second approach: normalise to [0, 1]
    mse = np.mean((img1 / 255.0 - img2 / 255.0) ** 2)
    if mse < 1e-10:
        return 100
    PIXEL_MAX = 1
    return 20 * math.log10(PIXEL_MAX / math.sqrt(mse))

imag1 = cv.imread("./img/22.jpg")
print(imag1.shape)
imag2 = cv.imread("./img/222.jpg")
print(imag2.shape)

# If the sizes differ, resize one image to match the other
# (reshape only works when the total number of elements stays the same)
# imag2 = cv.resize(imag2, (imag1.shape[1], imag1.shape[0]))
# print(imag2.shape)

res1 = psnr1(imag1, imag2)
print("res1:", res1)
res2 = psnr2(imag1, imag2)
print("res2:", res2)
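The two functions above differ only in scaling, so they should return the same value. The sketch below checks this on synthetic arrays using the equivalent form PSNR = 10 * log10(MAX^2 / MSE). The function name `psnr`, the `max_val` parameter, and the test data are illustrative assumptions. Unlike the code above, it returns infinity rather than 100 for identical images.

```python
import math
import numpy as np

def psnr(img1, img2, max_val=255.0):
    """PSNR in dB between two same-sized arrays, for a given peak value."""
    # Cast to float first: uint8 subtraction would wrap around silently
    a = img1.astype(np.float64)
    b = img2.astype(np.float64)
    mse = np.mean((a - b) ** 2)
    if mse == 0:
        return math.inf  # identical images
    return 10 * math.log10(max_val ** 2 / mse)

# Hypothetical test data: every pixel differs by 16, so the MSE is 16**2 = 256
ref = np.zeros((8, 8), dtype=np.uint8)
shifted = np.full((8, 8), 16, dtype=np.uint8)

value_255 = psnr(ref, shifted)                                   # peak value 255
value_norm = psnr(ref / 255.0, shifted / 255.0, max_val=1.0)     # peak value 1
```

Both calls give the same result (about 24.05 dB here), since normalising the pixels and the peak value by the same factor leaves the ratio unchanged.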

#tensorflow框架里有直接关于psnr计算的函数,直接调用就行了:(更推荐)以下代码

'''

#注意:计算PSNR的时候必须满足两张图像的size要完全一样!

#compute PSNR with tensorflow

import tensorflow as tf

def read_img(path):

return tf.image.decode_image(tf.read_file(path))

def psnr(tf_img1, tf_img2):

return tf.image.psnr(tf_img1, tf_img2, max_val=255)

def _main():

t1 = read_img('t1.jpg')

t2 = read_img('t2.jpg')

with tf.Session() as sess:

sess.run(tf.global_variables_initializer())

y = sess.run(psnr(t1, t2))

print(y)

if __name__ == '__main__':

_main()

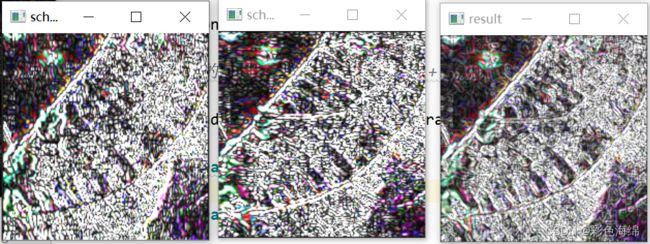

'''四、平均梯度

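Average gradient (mean gradient): grey levels differ markedly on either side of an edge or hatching line in an image, i.e. the grey-level rate of change there is large, and the size of that rate of change can be used to express image sharpness. It reflects how quickly contrast changes across fine details, that is, the rate of density change along the different directions of the image, and so characterises the relative sharpness of the image.

The average gradient is therefore a measure of image sharpness (definition): it reflects how well the image renders contrast in fine detail. It is built on the image gradient, defined as follows.

Image gradient: G(x,y) = dx i + dy j, where i and j in this expression are the unit vectors along the two axes;

dx(i,j) = I(i+1,j) - I(i,j);

dy(i,j) = I(i,j+1) - I(i,j);

Here I is the pixel value (e.g. the RGB value) and (i,j) are the pixel coordinates.

The image gradient is also commonly computed with central differences:

dx(i,j) = [I(i+1,j) - I(i-1,j)]/2;

dy(i,j) = [I(i,j+1) - I(i,j-1)]/2;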
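As an illustration of the central-difference form, NumPy's `np.gradient` uses central differences at interior points and one-sided differences at the borders. The sketch below compares it with the formula written out by hand on a synthetic ramp image. The ramp and the variable names are assumptions chosen so that the expected slopes are known in advance.

```python
import numpy as np

# Synthetic ramp I(i, j) = 3*i + 2*j: the slope is 3 along axis 0 and 2 along axis 1
i, j = np.indices((5, 6))
ramp = (3 * i + 2 * j).astype(np.float64)

# np.gradient returns one array per axis
d_axis0, d_axis1 = np.gradient(ramp)

# The central difference written out by hand, valid for interior rows only:
# dx(i, j) = [I(i+1, j) - I(i-1, j)] / 2
manual_axis0 = (ramp[2:, :] - ramp[:-2, :]) / 2
```

On real images the two border rows and columns are where the hand-written formula and `np.gradient` differ, because the neighbours needed for a central difference do not exist there.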

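Image edges are generally found by applying a gradient operation to the image.

The above is only the simple definition of the gradient; many more elaborate gradient formulas exist.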
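The text does not spell out how the per-pixel gradients are averaged. One commonly used definition takes the mean of sqrt((dx^2 + dy^2) / 2) over all pixels where both forward differences exist. The sketch below assumes that definition; the function name `average_gradient` and the test arrays are hypothetical.

```python
import numpy as np

def average_gradient(gray):
    """Average gradient of a 2-D grayscale image, using forward differences.

    Assumed definition: mean over pixels of sqrt((dx^2 + dy^2) / 2).
    """
    g = gray.astype(np.float64)
    dx = g[1:, :-1] - g[:-1, :-1]   # I(i+1, j) - I(i, j)
    dy = g[:-1, 1:] - g[:-1, :-1]   # I(i, j+1) - I(i, j)
    return float(np.mean(np.sqrt((dx ** 2 + dy ** 2) / 2.0)))

# Synthetic checks: a flat image has no gradient,
# and a ramp with slopes 3 and 2 gives sqrt((9 + 4) / 2) at every pixel.
flat = np.full((6, 6), 100, dtype=np.uint8)
i, j = np.indices((6, 6))
ramp = (3 * i + 2 * j).astype(np.uint8)

ag_flat = average_gradient(flat)
ag_ramp = average_gradient(ramp)
```

A larger value indicates faster grey-level changes on average, which is the sense in which the metric is read as sharpness.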

Reference: CSDN blog post by 刘子晞, "python opencv学习(六)图像梯度计算".

Code:

import cv2 as cv
import numpy as np

'''Image gradients (built from the partial derivatives in the x and y directions)
come as first derivatives (Sobel operator) and second derivatives (Laplace operator).
They are used to find image edges: maxima of the first derivative, zero crossings of the second.
In an image a first partial derivative becomes a first difference, which is then expressed
as an operator (i.e. a set of weights) multiplied with the pixel values and summed;
the second derivative works the same way.
'''

def sobel_demo(image):
    grad_x = cv.Sobel(image, cv.CV_32F, 1, 0)  # Scharr gives more pronounced edges
    grad_y = cv.Sobel(image, cv.CV_32F, 0, 1)
    gradx = cv.convertScaleAbs(grad_x)  # the result has positive and negative values, so take the absolute value
    grady = cv.convertScaleAbs(grad_y)
    # Weighted sum of the two images: dst = src1*alpha + src2*beta + gamma
    gradxy = cv.addWeighted(gradx, 0.5, grady, 0.5, 0)
    cv.imshow("gradx", gradx)
    cv.imshow("grady", grady)
    cv.imshow("gradient", gradxy)

def laplace_demo(image):  # second derivative, thinner edges
    dst = cv.Laplacian(image, cv.CV_32F)
    lpls = cv.convertScaleAbs(dst)
    cv.imshow("laplace_demo", lpls)

def custom_laplace(image):
    # The 4-neighbour kernel [[0, 1, 0], [1, -4, 1], [0, 1, 0]] matches laplace_demo() above
    # (cv.Laplacian with its default ksize=1); the 8-neighbour kernel used here gives a stronger response.
    kernel = np.array([[1, 1, 1], [1, -8, 1], [1, 1, 1]])
    dst = cv.filter2D(image, cv.CV_32F, kernel=kernel)
    lpls = cv.convertScaleAbs(dst)
    cv.imshow("custom_laplace", lpls)

def Scharr(img):
    scharrx = cv.Scharr(img, cv.CV_64F, dx=1, dy=0)
    scharrx = cv.convertScaleAbs(scharrx)
    scharry = cv.Scharr(img, cv.CV_64F, dx=0, dy=1)
    scharry = cv.convertScaleAbs(scharry)
    result = cv.addWeighted(scharrx, 0.5, scharry, 0.5, 0)
    cv.imshow("scharrx", scharrx)
    cv.imshow("scharry", scharry)
    cv.imshow("result", result)

src = cv.imread("img/result2.jpg")
cv.imshow("original", src)
sobel_demo(src)
laplace_demo(src)
# custom_laplace(src)
Scharr(src)

cv.waitKey(0)  # waitKey(n) waits for a key press or n ms; 0 means wait for a key press only
cv.destroyAllWindows()  # close all windows

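5. SSIM

The structural similarity index (SSIM index) is a metric for the similarity of two digital images. Structural similarity measures the correlation between neighbouring pixels, and that correlation reflects the structural information of objects in the real scene. A metric for image distortion therefore has to take structural distortion into account.

Reference: CSDN blog post by 恒友成, "图像相似性评价指标SSIM/PSNR".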

import numpy
from scipy import signal
from scipy import ndimage
import cv2

def fspecial_gauss(size, sigma):
    """Return a normalised 2-D Gaussian window of the given size and sigma."""
    x, y = numpy.mgrid[-size//2 + 1:size//2 + 1, -size//2 + 1:size//2 + 1]
    g = numpy.exp(-((x**2 + y**2)/(2.0*sigma**2)))
    return g/g.sum()

def ssim(img1, img2, cs_map=False):
    """Return the SSIM map of two grayscale images (and the contrast-structure map if cs_map is True)."""
    img1 = img1.astype(numpy.float64)
    img2 = img2.astype(numpy.float64)
    size = 11
    sigma = 1.5
    window = fspecial_gauss(size, sigma)
    K1 = 0.01
    K2 = 0.03
    L = 255  # dynamic range of the pixel values (8-bit image)
    C1 = (K1*L)**2
    C2 = (K2*L)**2
    # Local means, variances and covariance under the Gaussian window
    mu1 = signal.fftconvolve(window, img1, mode='valid')
    mu2 = signal.fftconvolve(window, img2, mode='valid')
    mu1_sq = mu1*mu1
    mu2_sq = mu2*mu2
    mu1_mu2 = mu1*mu2
    sigma1_sq = signal.fftconvolve(window, img1*img1, mode='valid') - mu1_sq
    sigma2_sq = signal.fftconvolve(window, img2*img2, mode='valid') - mu2_sq
    sigma12 = signal.fftconvolve(window, img1*img2, mode='valid') - mu1_mu2
    if cs_map:
        return (((2*mu1_mu2 + C1)*(2*sigma12 + C2))/((mu1_sq + mu2_sq + C1)*
                    (sigma1_sq + sigma2_sq + C2)),
                (2.0*sigma12 + C2)/(sigma1_sq + sigma2_sq + C2))
    else:
        return ((2*mu1_mu2 + C1)*(2*sigma12 + C2))/((mu1_sq + mu2_sq + C1)*
                    (sigma1_sq + sigma2_sq + C2))

def mssim(img1, img2):
    """
    Multi-scale SSIM.
    refer to https://github.com/mubeta06/python/tree/master/signal_processing/sp
    """
    level = 5
    weight = numpy.array([0.0448, 0.2856, 0.3001, 0.2363, 0.1333])
    downsample_filter = numpy.ones((2, 2))/4.0
    im1 = img1.astype(numpy.float64)
    im2 = img2.astype(numpy.float64)
    mssim_vals = numpy.array([])
    mcs = numpy.array([])
    # Each level halves the image, so it must still be at least 11x11 after four halvings
    for l in range(level):
        ssim_map, cs_map = ssim(im1, im2, cs_map=True)
        mssim_vals = numpy.append(mssim_vals, ssim_map.mean())
        mcs = numpy.append(mcs, cs_map.mean())
        filtered_im1 = ndimage.convolve(im1, downsample_filter,
                                        mode='reflect')
        filtered_im2 = ndimage.convolve(im2, downsample_filter,
                                        mode='reflect')
        im1 = filtered_im1[::2, ::2]
        im2 = filtered_im2[::2, ::2]
    return (numpy.prod(mcs[0:level-1]**weight[0:level-1])*
            (mssim_vals[level-1]**weight[level-1]))

img = cv2.imread("img/1-3.jpg", 0)
print(img.shape)
noise_img = cv2.imread("img/result2.jpg", 0)

ssim_val = ssim(img, noise_img)
mssim_val = mssim(img, noise_img)
print(f"ssim_val: {ssim_val.mean()}")
print(f"mssim_val: {mssim_val}")
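The `ssim()` function evaluates one expression per sliding Gaussian window. To see what that expression does, the sketch below applies it once to the whole image, using global means, variances and covariance instead of windowed ones. This is a simplification for illustration, not the standard windowed SSIM. The name `global_ssim` and the test arrays are assumptions.

```python
import numpy as np

def global_ssim(img1, img2, L=255, K1=0.01, K2=0.03):
    """Single-window SSIM: the SSIM expression evaluated on whole-image statistics."""
    x = img1.astype(np.float64)
    y = img2.astype(np.float64)
    C1 = (K1 * L) ** 2
    C2 = (K2 * L) ** 2
    mu_x, mu_y = x.mean(), y.mean()
    var_x, var_y = x.var(), y.var()
    cov_xy = ((x - mu_x) * (y - mu_y)).mean()
    return float(((2 * mu_x * mu_y + C1) * (2 * cov_xy + C2)) /
                 ((mu_x ** 2 + mu_y ** 2 + C1) * (var_x + var_y + C2)))

# Hypothetical test data: a grey ramp and its photographic negative
ramp = np.arange(256, dtype=np.uint8).reshape(16, 16)
negative = 255 - ramp

same = global_ssim(ramp, ramp)          # identical images
inverted = global_ssim(ramp, negative)  # structure is exactly reversed
```

Identical images score 1, while the negative scores below zero because its covariance with the original is negative. This is the structural term that PSNR does not capture.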