I3D Finetune

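Background

The scarcity of videos in existing action classification datasets (UCF-101 and HMDB-51) has made it hard to identify a good video architecture, because most methods reach similar performance on these small benchmarks. The paper re-evaluates state-of-the-art architectures on the Kinetics human action dataset. Kinetics has two orders of magnitude more data: 400 human action classes with more than 400 clips each, all collected from realistic, challenging YouTube videos. The authors analyze how existing architectures perform on action classification on this dataset, and how much pre-training on Kinetics improves results on the smaller datasets.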

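The paper also introduces the Two-Stream Inflated 3D ConvNet (I3D), which is built by inflating a 2D ConvNet: the filters and pooling kernels of a deep image classification network are expanded to 3D. This lets the network learn good spatio-temporal feature extractors from video while reusing successful ImageNet architecture designs and even their parameters. After pre-training on Kinetics, I3D models improve considerably on action classification, reaching 80.7% accuracy on HMDB-51 and 98.0% on UCF-101.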
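To make the inflation idea concrete, here is a minimal NumPy sketch. It follows the bootstrapping described in the I3D paper: a 2D kernel is repeated along a new time axis and divided by the number of repeats, so that a video made of identical frames produces the same response as the original image filter. The function names and the kernel layout (height, width, in-channels, out-channels) are illustrative assumptions, not code taken from any of the repositories listed in this post.

```python
import numpy as np


def inflate_2d_kernel(kernel_2d: np.ndarray, time_dim: int) -> np.ndarray:
    """Inflate a (kh, kw, c_in, c_out) kernel to (t, kh, kw, c_in, c_out)."""
    # Repeat the 2D weights along a new leading time axis ...
    kernel_3d = np.repeat(kernel_2d[np.newaxis, ...], time_dim, axis=0)
    # ... and rescale by 1/t so the summed response is unchanged.
    return kernel_3d / time_dim


def response_2d(patch: np.ndarray, kernel_2d: np.ndarray) -> np.ndarray:
    """Filter response at one location: patch is (kh, kw, c_in)."""
    return np.tensordot(patch, kernel_2d, axes=([0, 1, 2], [0, 1, 2]))


def response_3d(clip_patch: np.ndarray, kernel_3d: np.ndarray) -> np.ndarray:
    """Filter response at one location: clip_patch is (t, kh, kw, c_in)."""
    return np.tensordot(clip_patch, kernel_3d, axes=([0, 1, 2, 3], [0, 1, 2, 3]))
```

Stacking the same image patch `t` times and passing it through the inflated kernel should give the same output as the 2D kernel applied to the single patch, which is the property that makes ImageNet weights a sensible starting point.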

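For details, see the earlier post: a walkthrough of the paper "Quo Vadis, Action Recognition? A New Model and the Kinetics Dataset" (Two-Stream I3D).

This post covers fine-tuning I3D on UCF-101.

There are plenty of related projects; here are a few: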

1.https://github.com/LossNAN/I3D-Tensorflow

2.https://github.com/piergiaj/pytorch-i3d

3.https://github.com/USTC-Video-Understanding/I3D_Finetune

4.https://github.com/hassony2/kinetics_i3d_pytorch

All of this code is based on DeepMind's Kinetics-I3D, including the PyTorch versions of their models.

The steps are described below.

# How to run this demo (training and testing): follow these steps

1. Clone this repo: https://github.com/LossNAN/I3D-Tensorflow

2. Download the Kinetics-pretrained I3D models

To fine-tune the I3D network on UCF101, first download the Kinetics-pretrained I3D models provided by DeepMind:

https://github.com/deepmind/kinetics-i3d/tree/master/data

Specifically, clone the kinetics-i3d repo and copy its data/checkpoints folder into the I3D-Tensorflow repo:

git clone https://github.com/deepmind/kinetics-i3d

cp -r kinetics-i3d/data/checkpoints I3D-Tensorflow/

Note: if the model files are already in I3D-Tensorflow/checkpoints, you don't need to download them again.
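Before training, it can be worth confirming that the checkpoints were actually copied. The sketch below only checks that each expected sub-folder exists and is non-empty. The default folder names `rgb_imagenet` and `flow_imagenet` are an assumption about how the kinetics-i3d checkpoints are laid out, and `missing_checkpoints` is a hypothetical helper, so adjust both to whatever your copy contains.

```python
import os


def missing_checkpoints(checkpoint_root, names=("rgb_imagenet", "flow_imagenet")):
    """Return the names whose folder is absent or empty under checkpoint_root.

    The default names are an assumption about the checkpoint layout.
    """
    missing = []
    for name in names:
        folder = os.path.join(checkpoint_root, name)
        if not os.path.isdir(folder) or not os.listdir(folder):
            missing.append(name)
    return missing
```

For example, `missing_checkpoints("I3D-Tensorflow/checkpoints")` returning an empty list suggests that both streams have something to restore from.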

3. Data processing

1> Download the UCF101 and HMDB51 datasets yourself.

2> Extract RGB and flow frames with denseFlow_GPU (or https://github.com/wanglimin/dense_flow).

This gives you RGB frames plus x_flow and y_flow frames in each video's folder. Then run

python3 split_flow.py

to get files such as:

~PATH/UCF-101/ApplyEyeMakeup/v_ApplyEyeMakeup_g01_c01/i for all rgb frames

~PATH/UCF-101/ApplyEyeMakeup/v_ApplyEyeMakeup_g01_c01/x for all x_flow frames

~PATH/UCF-101/ApplyEyeMakeup/v_ApplyEyeMakeup_g01_c01/y for all y_flow frames

3> Convert the image folders into lists for training and testing

cd ./list/ucf_list/

bash ./convert_images_to_list.sh ~PATH/UCF-101 4

This produces train.list and test.list for your own dataset, each line holding a video folder and its class index,

such as: ~PATH/UCF-101/ApplyEyeMakeup/v_ApplyEyeMakeup_g01_c01 0
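The list format above (a video folder followed by an integer class label) is easy to reproduce in Python if the shell script does not suit your setup. The sketch below assigns labels by sorted class-folder order and sends every `factor`-th video to the test list. That split rule is an assumption about what the trailing `4` means, not a description of `convert_images_to_list.sh`, and `build_lists` is a hypothetical name.

```python
import os


def build_lists(dataset_root, factor=4):
    """Return (train_lines, test_lines), each line being '<video_dir> <label>'.

    Assumption: every `factor`-th video goes to the test list.
    """
    train, test = [], []
    classes = sorted(
        d for d in os.listdir(dataset_root)
        if os.path.isdir(os.path.join(dataset_root, d))
    )
    count = 0
    for label, class_name in enumerate(classes):
        class_dir = os.path.join(dataset_root, class_name)
        for video in sorted(os.listdir(class_dir)):
            video_dir = os.path.join(class_dir, video)
            if not os.path.isdir(video_dir):
                continue  # only frame folders count as videos
            count += 1
            line = "%s %d" % (video_dir, label)
            (test if count % factor == 0 else train).append(line)
    return train, test
```

Writing the two returned lists to train.list and test.list, one entry per line, gives files in the same shape as the example entry above.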

4. Train on your own dataset (UCF101 as an example)

1> If you get path errors, adjust the paths in the scripts to match your setup.

cd ./experiments/ucf-101

python3 train_ucf_rgb.py

python3 train_ucf_flow.py

test_flow.list 2004 /home/gavin/Dataset/UCF-101_Flow/PlayingDaf/v_PlayingDaf_g17_c03 59

Results:

1. flow: 0.913642

2. rgb: TOP_1_ACC in test: 0.949649

3. rgb + flow: TOP_1_ACC in test: 0.969555
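The rgb + flow number comes from combining the predictions of the two streams at test time. A minimal sketch of one common way to do this is shown below: average the softmax probabilities of the two streams and take the arg-max. Whether the repo averages probabilities or sums logits is not stated here, so treat the fusion rule and the function names as assumptions.

```python
import numpy as np


def softmax(logits: np.ndarray) -> np.ndarray:
    """Row-wise softmax over (num_samples, num_classes) logits."""
    shifted = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    exp = np.exp(shifted)
    return exp / exp.sum(axis=1, keepdims=True)


def fuse_two_stream(rgb_logits: np.ndarray, flow_logits: np.ndarray) -> np.ndarray:
    """Average the per-class probabilities of the RGB and flow streams."""
    return (softmax(rgb_logits) + softmax(flow_logits)) / 2.0


def top1_accuracy(probs: np.ndarray, labels: np.ndarray) -> float:
    """Fraction of samples whose highest-scoring class equals the label."""
    return float(np.mean(probs.argmax(axis=1) == labels))
```

Fusion helps when the two streams make different mistakes: a confident correct prediction from one stream can outweigh a weak wrong prediction from the other.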

https://github.com/Gavin666Github/dense_flow

The extraction script, extract_flow_rgb.py, is as follows:

import os
import shutil
import subprocess

root_path = "/home/gavin/Dataset/actiondata"   # or "/home/gavin/Downloads/UCF-101"
flow_path = "/home/gavin/Dataset/action_flow"  # or "/home/gavin/Dataset/UCF-101_Flow"

# Example denseFlow invocation:
# ./denseFlow -f /home/gavin/Downloads/UCF-101/ApplyEyeMakeup/v_ApplyEyeMakeup_g01_c01.avi
#   -x /home/gavin/Dataset/UCF-101_Flow/ApplyEyeMakeup/v_ApplyEyeMakeup_g01_c01/x
#   -y /home/gavin/Dataset/UCF-101_Flow/ApplyEyeMakeup/v_ApplyEyeMakeup_g01_c01/y
#   -i /home/gavin/Dataset/UCF-101_Flow/ApplyEyeMakeup/v_ApplyEyeMakeup_g01_c01/i -b 20


def extract_flow_rgb(root_dirs):
    """Run denseFlow on every video file under root_dirs/<class>/."""
    for class_name in os.listdir(root_dirs):  # ApplyEyeMakeup, ...
        video_dir = os.path.join(root_dirs, class_name)  # root_path/ApplyEyeMakeup
        flow_dir = os.path.join(flow_path, class_name)   # flow_path/ApplyEyeMakeup
        for video in os.listdir(video_dir):  # v_ApplyEyeMakeup_g01_c01.avi, ...
            video_name, ext = os.path.splitext(video)
            if not ext:
                continue  # skip entries that are not files with an extension
            tmp_flow_dir = os.path.join(flow_dir, video_name)
            tmp_file = os.path.join(video_dir, video)
            print(tmp_file)  # path/UCF-101/ApplyEyeMakeup/v_ApplyEyeMakeup_g01_c01.avi
            os.makedirs(tmp_flow_dir, exist_ok=True)
            # denseFlow treats these as output prefixes for the i, x and y frames.
            i_dir = os.path.join(tmp_flow_dir, 'i')
            x_dir = os.path.join(tmp_flow_dir, 'x')
            y_dir = os.path.join(tmp_flow_dir, 'y')
            # Passing a list avoids shell quoting problems with unusual paths.
            # Run this script from the directory that contains the denseFlow binary.
            subprocess.run(['./denseFlow', '-f', tmp_file, '-x', x_dir,
                            '-y', y_dir, '-i', i_dir, '-b', '20'])
            print("extract rgb and flow from %s done." % video)


def splitflow(root_dirs):
    """Sort each video's extracted frames into i/, x/ and y/ subfolders."""
    for class_name in os.listdir(root_dirs):
        class_dir = os.path.join(root_dirs, class_name)
        for video in os.listdir(class_dir):
            video_path = os.path.join(class_dir, video)
            print(video_path)
            image_list = os.listdir(video_path)  # list before creating subfolders
            for sub in ('i', 'x', 'y'):
                os.makedirs(os.path.join(video_path, sub), exist_ok=True)
            for image in image_list:
                parts = image.split('_')
                if len(parts) > 1:
                    # The prefix before the first underscore names the target folder.
                    shutil.move(os.path.join(video_path, image),
                                os.path.join(video_path, parts[0]))


if __name__ == '__main__':
    # extract_flow_rgb(root_path)
    splitflow(flow_path)

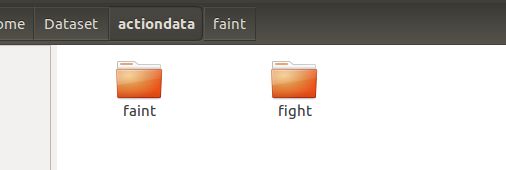

Custom actions are handled the same way. For example, here we train on two action classes.

Each class folder contains the individual videos.

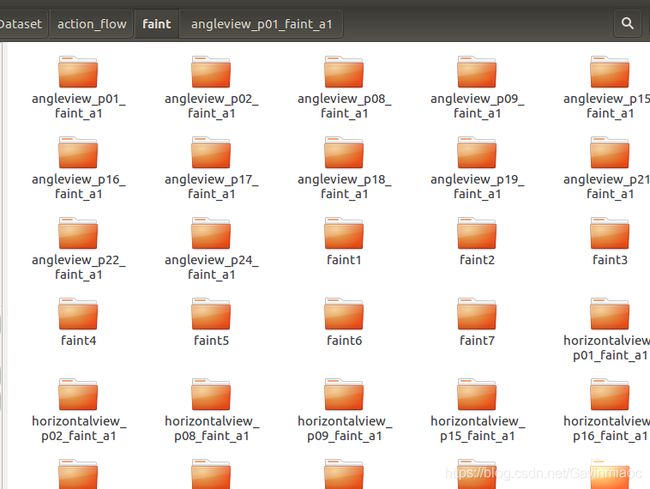

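The extracted RGB and flow frames are stored under action_flow.

Opening one of the folders:

And one level deeper:

These screenshots show the sorted i, x, and y image folders.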
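Instead of inspecting folders by eye, the layout can be checked with a few lines of Python. The sketch below counts the files in the i, x, and y subfolders of one video folder and reports whether RGB frames exist and the two flow directions have the same number of frames. It does not require the RGB and flow counts to match, since flow extraction may yield a different number of frames than RGB; `check_video_folder` is a hypothetical helper name.

```python
import os


def check_video_folder(video_dir):
    """Return (ok, counts) for the i/x/y subfolders of one video folder."""
    counts = {}
    for sub in ("i", "x", "y"):
        sub_dir = os.path.join(video_dir, sub)
        # A missing subfolder counts as zero frames.
        counts[sub] = len(os.listdir(sub_dir)) if os.path.isdir(sub_dir) else 0
    ok = counts["i"] > 0 and counts["x"] > 0 and counts["x"] == counts["y"]
    return ok, counts
```

Running this over every folder named in train.list and test.list before training is a cheap way to catch videos whose extraction failed partway through.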

Training can now begin.

References:

1.https://www.jianshu.com/p/0c26a33cefd0

2.https://www.jianshu.com/p/71d35fda32d3

3.github:https://github.com/LossNAN/I3D-Tensorflow