Python+OpenCV: Understanding the k-Nearest Neighbour (kNN) Algorithm

Theory

kNN is one of the simplest classification algorithms available for supervised learning.

The idea is to search for the closest match(es) of the test data in the feature space.

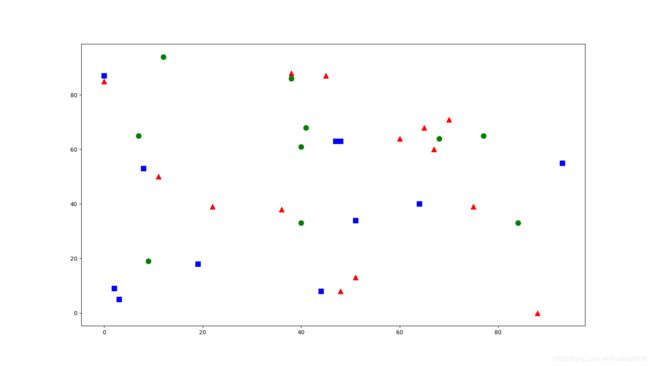

We will look into it with the image above.

In the image, there are two families: Blue Squares and Red Triangles. We refer to each family as a Class.

Their houses are shown in their town map which we call the Feature Space.

You can consider a feature space as a space where all data are projected.

For example, consider a 2D coordinate space. Each datum has two features, an x coordinate and a y coordinate.

You can represent this datum in your 2D coordinate space, right? Now imagine that there are three features: you will need a 3D space.

Now consider N features: you need an N-dimensional space, right? This N-dimensional space is the feature space of the data. Our image is the 2D case, with two features.
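As a small illustration of that idea, here is a minimal sketch in which each datum is simply a tuple with one entry per feature. The points are made-up values (not taken from the image), and `math.dist` from the standard library (Python 3.8+) measures the Euclidean distance whatever the number of features.

```python
import math

# Hypothetical data: one tuple entry per feature.
point_2d = (3.0, 4.0)                  # two features: x and y
point_3d = (1.0, 2.0, 2.0)             # three features
point_5d = (1.0, 1.0, 1.0, 1.0, 0.0)   # five features


def distance_from_origin(point):
    """Euclidean distance between `point` and the origin of its feature space."""
    origin = (0.0,) * len(point)
    return math.dist(origin, point)


for p in (point_2d, point_3d, point_5d):
    print(len(p), "features, distance from origin:", distance_from_origin(p))
```

The same distance function works in every case, which is why kNN does not care how many features a datum has.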

Now consider what happens if a new member comes into the town and creates a new home, which is shown as the green circle.

He should be added to one of these Blue or Red families (or classes). We call that process Classification.

How exactly should this new member be classified? Since we are dealing with kNN, let us apply the algorithm.

One simple method is to check who his nearest neighbour is. From the image, it is clear that it is a member of the Red Triangle family. So he is classified as a Red Triangle.

This method is simply called Nearest Neighbour classification, because classification depends only on the nearest neighbour.
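Nearest Neighbour classification can be sketched in a few lines of plain Python. The house coordinates below are hypothetical (they are not read off the image), and the helper name `nearest_neighbour` is only for illustration.

```python
import math

# Hypothetical houses: ((x, y) position, family label). Made-up values.
houses = [
    ((1.0, 1.0), "Blue"),
    ((2.0, 4.0), "Blue"),
    ((5.0, 5.0), "Red"),
    ((6.0, 3.0), "Red"),
]


def nearest_neighbour(train, point):
    """Return the label of the single training sample closest to `point`."""
    _, label = min(train, key=lambda item: math.dist(item[0], point))
    return label


print(nearest_neighbour(houses, (4.5, 4.0)))
```

The new-comer takes the label of whichever house is closest, and nothing else is consulted.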

But there is a problem with this approach! Red Triangle may be the nearest neighbour, but what if there are also a lot of Blue Squares nearby?

Then Blue Squares have more strength in that locality than Red Triangles, so just checking the nearest one is not sufficient. Instead we may want to check the k nearest neighbours.

Then whichever family is the majority amongst them, the new guy should belong to that family.

In our image, let's take k=3, i.e. consider the 3 nearest neighbours.

The new member has two Red neighbours and one Blue neighbour (there are two Blues equidistant, but since k=3, we can take only one of them), so again he should be added to the Red family.

But what if we take k=7? Then he has 5 Blue neighbours and 2 Red neighbours and should be added to the Blue family. The result will vary with the selected value of k.

Note that if k is not an odd number, we can get a tie, as would happen in the above case with k=4.

We would see that our new member has 2 Red and 2 Blue neighbours as his four nearest neighbours and we would need to choose a method for breaking the tie to perform classification.

So to reiterate, this method is called k-Nearest Neighbour since classification depends on the k nearest neighbours.
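The majority vote can be sketched as follows. The town layout is hypothetical: it is arranged so that the vote counts match the ones described for the image (k=3 gives Red, k=4 ties, k=7 gives Blue), but the coordinates themselves are made up. Returning `None` on a tie is just one possible convention, chosen here for illustration.

```python
import math
from collections import Counter

# Hypothetical town: two Reds close to the new-comer, five Blues a bit
# farther out, and one distant Red. Made-up coordinates.
town = [
    ((1.0, 0.0), "Red"), ((0.0, 2.0), "Red"),
    ((3.0, 0.0), "Blue"), ((0.0, -4.0), "Blue"), ((-5.0, 0.0), "Blue"),
    ((0.0, 6.0), "Blue"), ((7.0, 0.0), "Blue"),
    ((10.0, 10.0), "Red"),
]
newcomer = (0.0, 0.0)


def knn_votes(train, point, k):
    """Count the labels of the k training samples closest to `point`."""
    by_distance = sorted(train, key=lambda item: math.dist(item[0], point))
    return Counter(label for _, label in by_distance[:k])


def knn_classify(train, point, k):
    """Return the majority label, or None when the top labels tie."""
    ranked = knn_votes(train, point, k).most_common()
    if len(ranked) > 1 and ranked[0][1] == ranked[1][1]:
        return None  # tie: the caller has to choose a tie-breaking rule
    return ranked[0][0]


for k in (3, 4, 7):
    print(k, dict(knn_votes(town, newcomer, k)), knn_classify(town, newcomer, k))
```

Running it for several values of k makes the dependence on k visible: the same new-comer changes family as the neighbourhood grows.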

Again, in kNN, it is true we are considering k neighbours, but we are giving equal importance to all, right? Is this justified?

For example, take the tied case of k=4. As we can see, the 2 Red neighbours are actually closer to the new member than the other 2 Blue neighbours, so he is more eligible to be added to the Red family.

How do we mathematically explain that? We give some weights to each neighbour depending on their distance to the new-comer: those who are nearer to him get higher weights, while those that are farther away get lower weights.

Then we add the total weights of each family separately and classify the new-comer as part of whichever family received higher total weights. This is called modified kNN or weighted kNN.
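Here is a minimal sketch of weighted kNN. The text does not say which weight function to use, so the sketch assumes a weight of 1/distance, which is one common choice. The coordinates are hypothetical, and the small `eps` term is only there to avoid dividing by zero when the new-comer sits exactly on an existing house.

```python
import math
from collections import defaultdict

# Hypothetical town (made-up coordinates): with k=4 the plain vote ties
# at 2 Red vs 2 Blue, but the Reds are closer to the new-comer.
town = [
    ((1.0, 0.0), "Red"), ((0.0, 2.0), "Red"),
    ((3.0, 0.0), "Blue"), ((0.0, -4.0), "Blue"),
    ((-5.0, 0.0), "Blue"), ((0.0, 6.0), "Blue"),
]
newcomer = (0.0, 0.0)


def weighted_knn_classify(train, point, k, eps=1e-9):
    """Sum 1/distance weights per label over the k nearest samples.

    Returns (winning label, dict of total weight per label).
    The 1/distance weighting is an assumption made for this sketch.
    """
    by_distance = sorted(train, key=lambda item: math.dist(item[0], point))
    totals = defaultdict(float)
    for position, label in by_distance[:k]:
        totals[label] += 1.0 / (math.dist(position, point) + eps)
    return max(totals, key=totals.get), dict(totals)


label, totals = weighted_knn_classify(town, newcomer, k=4)
print(label, totals)
```

With these numbers the two Reds contribute 1/1 + 1/2 and the two Blues only 1/3 + 1/4, so the tie from the plain vote is resolved in favour of Red.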

So what are some important things you see here?

- Because we have to check the distance from the new-comer to all the existing houses to find the nearest neighbour(s), we need information about all of the houses in town. If there are plenty of houses and families, this takes a lot of memory, and also more time for calculation.

- There is almost zero time for any kind of "training" or preparation. Our "learning" involves only memorizing (storing) the data, before testing and classifying.
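Both points show up clearly in a toy implementation. The class below is a hypothetical sketch (the name `LazyKNN` and its methods are invented for illustration and are not OpenCV's API): `train` only stores the samples, while `predict` computes one distance per stored sample on every query.

```python
import math
from collections import Counter


class LazyKNN:
    """Hypothetical minimal kNN classifier: 'training' only stores the data."""

    def __init__(self, k):
        self.k = k
        self.samples = []

    def train(self, points, labels):
        # No model is fitted: every sample is kept in memory as-is.
        self.samples = list(zip(points, labels))

    def predict(self, point):
        # All the work happens at query time: one distance per stored sample.
        nearest = sorted(self.samples, key=lambda s: math.dist(s[0], point))
        votes = Counter(label for _, label in nearest[: self.k])
        return votes.most_common(1)[0][0]


model = LazyKNN(k=3)
model.train(
    [(0, 0), (0, 1), (1, 0), (5, 5), (5, 6), (6, 5)],  # made-up positions
    ["Blue", "Blue", "Blue", "Red", "Red", "Red"],
)
print(model.predict((0.5, 0.5)), model.predict((5.5, 5.5)))
```

Memory grows with the number of stored samples, and so does the cost of each prediction, which is the trade-off described in the list above.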

Now let's see this algorithm at work in OpenCV.

kNN in OpenCV

    import cv2 as lmc_cv  # assumed: the original calls OpenCV through the alias lmc_cv
    import numpy as np
    from matplotlib import pyplot


    # Understanding the k-Nearest Neighbour (kNN) algorithm
    def lmc_cv_knn():
        """Demonstrate the k-Nearest Neighbour (kNN) algorithm with OpenCV."""
        # Feature set containing (x, y) values of 25 known/training data
        train_data = np.random.randint(0, 100, (25, 2)).astype(np.float32)
        # Label each one either Red or Blue with numbers 0 and 1
        responses = np.random.randint(0, 2, (25, 1)).astype(np.float32)
        # Select the Red samples (label 0) for plotting
        red = train_data[responses.ravel() == 0]
        # Select the Blue samples (label 1) for plotting
        blue = train_data[responses.ravel() == 1]
        # The new-comers are marked in green.
        # If you have multiple new-comers (test data), you can just pass them as an array.
        # Corresponding results are also obtained as arrays.
        newcomer = np.random.randint(0, 100, (10, 2)).astype(np.float32)

        knn = lmc_cv.ml.KNearest_create()
        knn.train(train_data, lmc_cv.ml.ROW_SAMPLE, responses)
        # Classify every new-comer from its k=3 nearest neighbours
        ret, results, neighbours, dist = knn.findNearest(newcomer, 3)
        print("result: {}\n".format(results))
        print("neighbours: {}\n".format(neighbours))
        print("distance: {}\n".format(dist))

        pyplot.figure('k-Nearest Neighbour (kNN) algorithm', figsize=(16, 9))
        pyplot.scatter(red[:, 0], red[:, 1], s=80, c='r', marker='^')
        pyplot.scatter(blue[:, 0], blue[:, 1], s=80, c='b', marker='s')
        pyplot.scatter(newcomer[:, 0], newcomer[:, 1], s=80, c='g', marker='o')
        pyplot.savefig('01.png')
        pyplot.show()


    lmc_cv_knn()

A sample run prints the following (the data are random, so the values differ from run to run):

    result:

    [[0.]
     [0.]
     [0.]
     [1.]
     [0.]
     [1.]
     [0.]
     [1.]
     [1.]
     [0.]]

    neighbours:
    [[0. 1. 0.]
     [1. 0. 0.]
     [1. 0. 0.]
     [1. 1. 0.]
     [0. 0. 1.]
     [0. 1. 1.]
     [0. 0. 0.]
     [1. 1. 1.]
     [1. 1. 0.]
     [0. 0. 0.]]

    distance:
    [[ 41. 122. 360.]
     [145. 241. 449.]
     [193. 225. 712.]
     [ 53.  68. 409.]
     [  4.  50. 610.]
     [117. 449. 565.]
     [ 17.  25.  53.]
     [101. 149. 232.]
     [ 61.  74. 377.]
     [ 85. 125. 153.]]
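To see how each entry of the result follows from the matching row of neighbours, the sketch below copies the values from the sample output above (written as integers for brevity) and redoes the majority vote by hand. It uses only the standard library, so it runs without OpenCV.

```python
from collections import Counter

# Labels of the 3 nearest neighbours of each new-comer, copied from the
# sample output (0 = Red, 1 = Blue).
neighbours = [
    [0, 1, 0], [1, 0, 0], [1, 0, 0], [1, 1, 0], [0, 0, 1],
    [0, 1, 1], [0, 0, 0], [1, 1, 1], [1, 1, 0], [0, 0, 0],
]
# Predicted label of each new-comer, copied from the sample output.
results = [0, 0, 0, 1, 0, 1, 0, 1, 1, 0]

# Majority vote per row; with k=3 and two classes there can be no tie.
predicted = [Counter(row).most_common(1)[0][0] for row in neighbours]

print(predicted == results)
```

Every row agrees with the reported result, for example the fourth new-comer has neighbours 1, 1, 0 and is therefore labelled 1 (Blue).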