On Getting the DynaSLAM Source Code Running (OpenCV 3.x Edition)

Alright, here we go again with another log of getting someone's source code to run ← ←

I. Basic Environment

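According to the author's README, Python 2.7 is required. Everything I installed afterwards (TensorFlow and so on) went into a virtual environment created with Anaconda. This write-up is for OpenCV 3.x! The 3.x-specific changes are in Part IV; everything before that applies to both versions.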

II. Meeting the Requirements for Building ORB-SLAM2

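Since this code is built on top of ORB-SLAM2, its prerequisites have to be met first: a C++11 or C++0x Compiler, Pangolin, OpenCV and Eigen3. If you don't know how to install them, a quick search will turn up plenty of guides. When DynaSLAM first came out it only supported OpenCV 2.4.11, but in 2019 a kind contributor submitted code that supports OpenCV 3.x; more on that below. I installed OpenCV 3.4.5 myself.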

III. Other Libraries to Install Before Building DynaSLAM

Following the project's README:

1. Install Boost

sudo apt-get install libboost-all-dev

2. Download the DynaSLAM source and add the h5 file

git clone https://github.com/BertaBescos/DynaSLAM.git

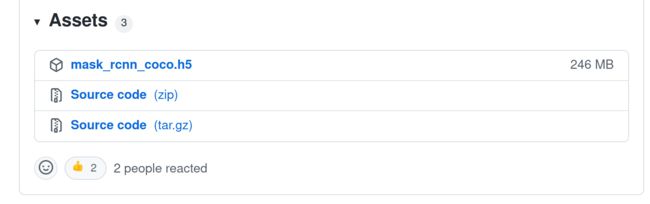

Then download the h5 file from https://github.com/matterport/Mask_RCNN/releases and save it under DynaSLAM/src/python/.
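A quick way to confirm the download actually worked (rather than, say, saving an HTML error page) is to look at the first bytes of the file: HDF5 files normally begin with a fixed 8-byte signature. Below is a minimal sketch of that check. The default path `src/python/mask_rcnn_coco.h5` is my assumption about the filename, so pass your own path as the first argument if yours differs.

```python
# Sanity-check the downloaded Mask R-CNN weights (sketch; runs on Python 2.7 and 3).
# Assumption: the weights are saved as src/python/mask_rcnn_coco.h5 (hypothetical default).
import os
import sys

# HDF5 files without a user block start with this 8-byte signature.
HDF5_SIGNATURE = b"\x89HDF\r\n\x1a\n"


def looks_like_hdf5(path):
    """Return True if `path` exists and begins with the HDF5 signature."""
    if not os.path.isfile(path):
        return False
    with open(path, "rb") as f:
        return f.read(len(HDF5_SIGNATURE)) == HDF5_SIGNATURE


if __name__ == "__main__":
    weights = sys.argv[1] if len(sys.argv) > 1 else "src/python/mask_rcnn_coco.h5"
    if looks_like_hdf5(weights):
        print("OK: %s looks like an HDF5 file" % weights)
    else:
        print("Problem: %s is missing or is not an HDF5 file" % weights)
```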

3. Python environment

First create and activate a new virtual environment in Anaconda, then install TensorFlow and Keras in it, in that order.

conda create -n MaskRCNN python=2.7

conda activate MaskRCNN

pip install tensorflow==1.14.0 # or: pip install tensorflow-gpu==1.14.0

pip install keras==2.0.9
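Since everything hinges on these exact versions, it can help to confirm them from inside the env before running Check.py. Here is a small sketch that compares what it can import against the pins above; the function names and messages are my own, and it is written to run under both Python 2.7 and 3.

```python
# Check that the MaskRCNN conda env matches the versions pinned above (sketch).
# Run it with the env's interpreter, e.g.: python check_env.py  (hypothetical name)
import sys

# Versions pinned by the install commands in this post.
EXPECTED = {"tensorflow": "1.14.0", "keras": "2.0.9"}


def version_problems(python_version, installed):
    """Return a list of human-readable problems (empty list means all good).

    python_version: (major, minor, ...) of the interpreter.
    installed: dict mapping package name -> version string, or None if missing.
    """
    problems = []
    major_minor = tuple(python_version[:2])
    if major_minor != (2, 7):
        problems.append("Python %d.%d found, 2.7 required" % major_minor)
    for name in sorted(EXPECTED):
        got = installed.get(name)
        if got is None:
            problems.append("%s is not installed" % name)
        elif got != EXPECTED[name]:
            problems.append("%s %s found, %s expected" % (name, got, EXPECTED[name]))
    return problems


def installed_versions():
    """Import each pinned package and read its __version__ (None if unavailable)."""
    found = {}
    for name in EXPECTED:
        try:
            module = __import__(name)
            found[name] = getattr(module, "__version__", None)
        except ImportError:
            found[name] = None
    return found


if __name__ == "__main__":
    issues = version_problems(sys.version_info, installed_versions())
    print("\n".join(issues) if issues else "Environment matches the pinned versions.")
```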

With the steps above done, the Python environment is more or less ready. You can test it like this:

cd DynaSLAM

python src/python/Check.py

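If it prints Mask R-CNN is correctly working, you can move on to the next step. Of course, things rarely go that smoothly, haha, so let's fix the problems one by one. I ran into two: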

3.1 scikit-image is not installed

sudo pip install scikit-image

3.2 pycocotools errors

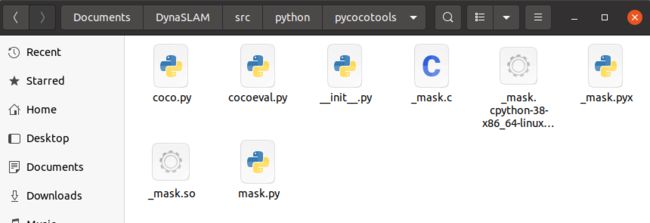

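Note! You must build and install it with Python 2.7, the same interpreter everything else here uses! Otherwise Check.py fails with an error saying it cannot find _mask, because a Python 3 build does not produce the _mask.so file that Python 2.7 looks for.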
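The underlying reason is that `build_ext` names a compiled module after the interpreter that builds it. This sketch prints the filename `_mask` would get under whichever Python runs it: under Python 2.7 on Linux that should be `_mask.so`, while Python 3 inserts an ABI tag such as `cpython-36m-x86_64-linux-gnu`, so Python 2.7 will not pick the file up.

```python
# Print the filename a compiled extension such as _mask gets under the
# interpreter running this script (sketch; runs on Python 2.7 and 3).
import sysconfig


def extension_filename(module_name):
    """Return the filename `setup.py build_ext` gives a C extension here."""
    # Python 3 defines EXT_SUFFIX (e.g. ".cpython-36m-x86_64-linux-gnu.so");
    # Python 2.7 has no EXT_SUFFIX and falls back to SO (".so" on Linux).
    suffix = sysconfig.get_config_var("EXT_SUFFIX") or sysconfig.get_config_var("SO")
    return module_name + suffix


if __name__ == "__main__":
    print(extension_filename("_mask"))
```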

git clone https://github.com/waleedka/coco

cd coco/PythonAPI

python setup.py build_ext install

Once those commands finish, copy the entire pycocotools folder into src/python/.
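Before going back to Check.py, a quick import test can show which of the problems above is still there. Below is a minimal sketch; the module list is my assumption (the two errors I hit plus TensorFlow and Keras). Save it in DynaSLAM/src/python and run it from there, so that the copied pycocotools folder is on the import path.

```python
# Check that the modules Mask R-CNN needs can be imported (sketch, Python 2.7/3).
import importlib

# Assumed module list, based on the errors described above.
REQUIRED = ["skimage", "pycocotools._mask", "tensorflow", "keras"]


def missing_modules(names):
    """Return (name, error message) for every module in `names` that fails to import."""
    missing = []
    for name in names:
        try:
            importlib.import_module(name)
        except Exception as exc:  # ImportError, or a broken binary extension
            missing.append((name, str(exc)))
    return missing


if __name__ == "__main__":
    problems = missing_modules(REQUIRED)
    for name, err in problems:
        print("cannot import %s: %s" % (name, err))
    if not problems:
        print("all required modules import cleanly")
```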

IV. Modifying Parts of the DynaSLAM Source

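Big thanks here to this young lady (?) for the patch. The original is here: Pushyami_dev. If you want to see exactly which lines were added and removed, have a look there.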

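The submitted code mainly adapts DynaSLAM to OpenCV 3. On top of it, remove -march=native from CMakeLists.txt (otherwise you get a Segmentation fault), and remember to remove it from /Thirdparty/DBoW2 as well. The changes are concentrated in the files below; you can simply copy each one wholesale over the original in your folder, but remember to change the OpenCV 3.x version to match your own.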

- CMakeLists.txt

- Thirdparty/DBoW2/CMakeLists.txt

- include/Conversion.h

- src/Conversion.cc
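If you would rather patch the two CMake files than copy them, the CMake-level edits (dropping `-march=native` and setting your OpenCV 3.x version in `find_package(OpenCV 3.4 QUIET)`) are plain text substitutions. The sketch below does only those two edits; it does not touch Conversion.h or Conversion.cc, and the script name and argument order are my own invention.

```python
# Apply the two CMakeLists.txt edits described above (sketch, Python 2.7/3).
# Usage (hypothetical script name):
#   python patch_cmake.py 3.4.5 CMakeLists.txt Thirdparty/DBoW2/CMakeLists.txt
import io
import re
import sys


def strip_march_native(text):
    """Remove every -march=native flag together with the whitespace before it."""
    return re.sub(r"[ \t]*-march=native", "", text)


def set_opencv3_version(text, version):
    """Point find_package(OpenCV 3.x QUIET) at the major.minor of `version`.

    The OpenCV 2.4 fallback lines are left untouched.
    """
    major_minor = ".".join(version.split(".")[:2])
    return re.sub(r"find_package\(OpenCV 3\.\d+(\.\d+)? QUIET\)",
                  "find_package(OpenCV %s QUIET)" % major_minor, text)


if __name__ == "__main__" and len(sys.argv) > 2:
    for path in sys.argv[2:]:
        with io.open(path, encoding="utf-8") as f:
            text = f.read()
        text = set_opencv3_version(strip_march_native(text), sys.argv[1])
        with io.open(path, "w", encoding="utf-8") as f:
            f.write(text)
        print("patched %s" % path)
```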

1. CMakeLists.txt

cmake_minimum_required(VERSION 2.8)

project(DynaSLAM)

IF(NOT CMAKE_BUILD_TYPE)

SET(CMAKE_BUILD_TYPE Release)

# SET(CMAKE_BUILD_TYPE Debug)

ENDIF()

MESSAGE("Build type: " ${CMAKE_BUILD_TYPE})

#set(CMAKE_C_FLAGS "${CMAKE_C_FLAGS} -Wall -O3 -march=native ")

#set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} -Wall -O3 -march=native")

set(CMAKE_C_FLAGS "${CMAKE_C_FLAGS} -Wall -O3 ")

set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} -Wall -O3 ")

# This is required if opencv is built from source locally

#SET(OpenCV_DIR "~/opencv/build")

# set(CMAKE_C_FLAGS "${CMAKE_C_FLAGS} -Wall -O0 -march=native ")

# set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} -Wall -O0 -march=native")

# Check C++11 or C++0x support

include(CheckCXXCompilerFlag)

CHECK_CXX_COMPILER_FLAG("-std=c++11" COMPILER_SUPPORTS_CXX11)

CHECK_CXX_COMPILER_FLAG("-std=c++0x" COMPILER_SUPPORTS_CXX0X)

if(COMPILER_SUPPORTS_CXX11)

set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} -std=c++11")

add_definitions(-DCOMPILEDWITHC11)

message(STATUS "Using flag -std=c++11.")

elseif(COMPILER_SUPPORTS_CXX0X)

set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} -std=c++0x")

add_definitions(-DCOMPILEDWITHC0X)

message(STATUS "Using flag -std=c++0x.")

else()

message(FATAL_ERROR "The compiler ${CMAKE_CXX_COMPILER} has no C++11 support. Please use a different C++ compiler.")

endif()

LIST(APPEND CMAKE_MODULE_PATH ${PROJECT_SOURCE_DIR}/cmake_modules)

set(Python_ADDITIONAL_VERSIONS "2.7")

#This is to avoid detecting python 3

find_package(PythonLibs 2.7 EXACT REQUIRED)

if (NOT PythonLibs_FOUND)

message(FATAL_ERROR "PYTHON LIBS not found.")

else()

message("PYTHON LIBS were found!")

message("PYTHON LIBS DIRECTORY: " ${PYTHON_LIBRARY} ${PYTHON_INCLUDE_DIRS})

endif()

message("OpenCV_DIR: " ${OpenCV_DIR})

find_package(OpenCV 3.4 QUIET)

if(NOT OpenCV_FOUND)

find_package(OpenCV 2.4 QUIET)

if(NOT OpenCV_FOUND)

message(FATAL_ERROR "OpenCV > 2.4.x not found.")

endif()

endif()

find_package(Qt5Widgets REQUIRED)

find_package(Qt5Concurrent REQUIRED)

find_package(Qt5OpenGL REQUIRED)

find_package(Qt5Test REQUIRED)

find_package(Boost REQUIRED COMPONENTS thread)

if(Boost_FOUND)

message("Boost was found!")

message("Boost Headers DIRECTORY: " ${Boost_INCLUDE_DIRS})

message("Boost LIBS DIRECTORY: " ${Boost_LIBRARY_DIRS})

message("Found Libraries: " ${Boost_LIBRARIES})

endif()

find_package(Eigen3 3.1.0 REQUIRED)

find_package(Pangolin REQUIRED)

set(PYTHON_INCLUDE_DIRS ${PYTHON_INCLUDE_DIRS} /usr/local/lib/python2.7/dist-packages/numpy/core/include/numpy)

include_directories(

${PROJECT_SOURCE_DIR}

${PROJECT_SOURCE_DIR}/include

${EIGEN3_INCLUDE_DIR}

${Pangolin_INCLUDE_DIRS}

${PYTHON_INCLUDE_DIRS}

/usr/include/python2.7/

#/usr/lib/python2.7/dist-packages/numpy/core/include/numpy/

${Boost_INCLUDE_DIRS}

)

message("PROJECT_SOURCE_DIR: " ${PROJECT_SOURCE_DIR})

set(CMAKE_LIBRARY_OUTPUT_DIRECTORY ${PROJECT_SOURCE_DIR}/lib)

add_library(${PROJECT_NAME} SHARED

src/System.cc

src/Tracking.cc

src/LocalMapping.cc

src/LoopClosing.cc

src/ORBextractor.cc

src/ORBmatcher.cc

src/FrameDrawer.cc

src/Converter.cc

src/MapPoint.cc

src/KeyFrame.cc

src/Map.cc

src/MapDrawer.cc

src/Optimizer.cc

src/PnPsolver.cc

src/Frame.cc

src/KeyFrameDatabase.cc

src/Sim3Solver.cc

src/Initializer.cc

src/Viewer.cc

src/Conversion.cc

src/MaskNet.cc

src/Geometry.cc

)

target_link_libraries(${PROJECT_NAME}

${OpenCV_LIBS}

${EIGEN3_LIBS}

${Pangolin_LIBRARIES}

${PROJECT_SOURCE_DIR}/Thirdparty/DBoW2/lib/libDBoW2.so

${PROJECT_SOURCE_DIR}/Thirdparty/g2o/lib/libg2o.so

/usr/lib/x86_64-linux-gnu/libpython2.7.so

${Boost_LIBRARIES}

)

# Build examples

set(CMAKE_RUNTIME_OUTPUT_DIRECTORY ${PROJECT_SOURCE_DIR}/Examples/RGB-D)

add_executable(rgbd_tum

Examples/RGB-D/rgbd_tum.cc)

target_link_libraries(rgbd_tum ${PROJECT_NAME})

set(CMAKE_RUNTIME_OUTPUT_DIRECTORY ${PROJECT_SOURCE_DIR}/Examples/Stereo)

add_executable(stereo_kitti

Examples/Stereo/stereo_kitti.cc)

target_link_libraries(stereo_kitti ${PROJECT_NAME})

set(CMAKE_RUNTIME_OUTPUT_DIRECTORY ${PROJECT_SOURCE_DIR}/Examples/Monocular)

add_executable(mono_tum

Examples/Monocular/mono_tum.cc)

target_link_libraries(mono_tum ${PROJECT_NAME})
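Note that this CMakeLists.txt hard-codes two Python paths: the numpy headers (`/usr/local/lib/python2.7/dist-packages/numpy/core/include/numpy`) and `/usr/lib/x86_64-linux-gnu/libpython2.7.so`. If numpy or libpython live somewhere else on your machine, those lines need editing. This sketch prints where they are for whichever interpreter runs it. `libpython_path()` is only a best guess built from `sysconfig`, since some distributions put the library in a multiarch directory instead.

```python
# Print the paths the hard-coded entries in CMakeLists.txt should match
# for the interpreter running this script (sketch, Python 2.7/3).
import os
import sysconfig


def cmake_numpy_include(numpy_include_root):
    """The CMakeLists.txt appends '/numpy' to numpy's include root; mirror that."""
    return os.path.join(numpy_include_root, "numpy")


def libpython_path():
    """Best guess at this interpreter's libpython (None if sysconfig doesn't say)."""
    libdir = sysconfig.get_config_var("LIBDIR")
    ldlib = sysconfig.get_config_var("LDLIBRARY")
    if not libdir or not ldlib:
        return None
    return os.path.join(libdir, ldlib)


if __name__ == "__main__":
    try:
        import numpy
        print("numpy headers: %s" % cmake_numpy_include(numpy.get_include()))
    except ImportError:
        print("numpy is not installed for this interpreter")
    print("libpython: %s" % libpython_path())
```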

2. Thirdparty/DBoW2/CMakeLists.txt

cmake_minimum_required(VERSION 2.8)

project(DBoW2)

#set(CMAKE_C_FLAGS "${CMAKE_C_FLAGS} -Wall -O3 -march=native ")

#set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} -Wall -O3 -march=native")

set(CMAKE_C_FLAGS "${CMAKE_C_FLAGS} -Wall -O3 ")

set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} -Wall -O3 ")

set(HDRS_DBOW2

DBoW2/BowVector.h

DBoW2/FORB.h

DBoW2/FClass.h

DBoW2/FeatureVector.h

DBoW2/ScoringObject.h

DBoW2/TemplatedVocabulary.h)

set(SRCS_DBOW2

DBoW2/BowVector.cpp

DBoW2/FORB.cpp

DBoW2/FeatureVector.cpp

DBoW2/ScoringObject.cpp)

set(HDRS_DUTILS

DUtils/Random.h

DUtils/Timestamp.h)

set(SRCS_DUTILS

DUtils/Random.cpp

DUtils/Timestamp.cpp)

find_package(OpenCV 3.4 QUIET)

if(NOT OpenCV_FOUND)

find_package(OpenCV 2.4.3 QUIET)

if(NOT OpenCV_FOUND)

message(FATAL_ERROR "OpenCV > 2.4.3 not found.")

endif()

endif()

set(LIBRARY_OUTPUT_PATH ${PROJECT_SOURCE_DIR}/lib)

include_directories(${OpenCV_INCLUDE_DIRS})

add_library(DBoW2 SHARED ${SRCS_DBOW2} ${SRCS_DUTILS})

target_link_libraries(DBoW2 ${OpenCV_LIBS})

3. include/Conversion.h

/**

* This file is part of DynaSLAM.

* Copyright (C) 2018 Berta Bescos <bbescos at unizar dot es> (University of Zaragoza)

* For more information see <https://github.com/bertabescos/DynaSLAM>.

*

*/

#ifndef CONVERSION_H_

#define CONVERSION_H_

#include

4. src/Conversion.cc

/**

* This file is part of DynaSLAM.

* Copyright (C) 2018 Berta Bescos <bbescos at unizar dot es> (University of Zaragoza)

* For more information see <https://github.com/bertabescos/DynaSLAM>.

*

*/

#include "Conversion.h"

#include

V. Building and Running

Build the DynaSLAM source:

cd DynaSLAM

chmod +x build.sh

./build.sh

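If you run it without the last two arguments, Mask R-CNN is not used and the run is essentially ORB-SLAM2.

If you want the Mask R-CNN segmentation but don't want to save the masks, pass no_save as PATH_MASK; otherwise pass the path of a folder to store the masks in.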

./Examples/RGB-D/rgbd_tum Vocabulary/ORBvoc.txt Examples/RGB-D/TUM3.yaml /XXX/tum_dataset/ /XXX/tum_dataset/associations.txt masks/ output/
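To make the optional-argument rules concrete, here is a hypothetical helper that assembles the command line. It assumes the arguments are purely positional, so PATH_MASK may be given without an output folder but an output folder cannot be given without PATH_MASK. The function name is mine, not part of DynaSLAM.

```python
# Hypothetical helper that builds the rgbd_tum command line (sketch, Python 2.7/3).
# Assumption: arguments are positional, so output_dir requires mask_dir.


def rgbd_tum_args(vocab, settings, sequence, associations,
                  mask_dir=None, output_dir=None):
    """Return the argv list for ./Examples/RGB-D/rgbd_tum.

    mask_dir=None       -> last two arguments omitted, Mask R-CNN not used
    mask_dir="no_save"  -> Mask R-CNN is used but masks are not saved
    mask_dir="<folder>" -> masks are saved into that folder
    """
    if output_dir is not None and mask_dir is None:
        raise ValueError("output_dir requires mask_dir (arguments are positional)")
    argv = ["./Examples/RGB-D/rgbd_tum", vocab, settings, sequence, associations]
    if mask_dir is not None:
        argv.append(mask_dir)
    if output_dir is not None:
        argv.append(output_dir)
    return argv


if __name__ == "__main__":
    print(" ".join(rgbd_tum_args("Vocabulary/ORBvoc.txt", "Examples/RGB-D/TUM3.yaml",
                                 "/XXX/tum_dataset/", "/XXX/tum_dataset/associations.txt",
                                 "masks/", "output/")))
```

Passing the resulting list to `subprocess.call` runs the same command as the line above.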

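If, once it's running, Light Track keeps failing and the system never initializes, increase the number of feature points in the ORB parameters of the settings file; people on GitHub usually raise it to 3000 and that does the trick.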
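In ORB-SLAM2-style settings files such as TUM3.yaml, the feature count is the `ORBextractor.nFeatures` entry (I'm assuming your yaml uses the same key). Here is a small sketch that rewrites it in place. It uses a regex rather than a YAML parser because OpenCV-style files start with `%YAML:1.0`, which general-purpose YAML loaders may reject.

```python
# Set ORBextractor.nFeatures in a settings file (sketch, Python 2.7/3).
# Usage (hypothetical script name):
#   python set_features.py Examples/RGB-D/TUM3.yaml 3000
import io
import re
import sys


def set_orb_features(text, n):
    """Replace the value of ORBextractor.nFeatures with n; fail if the key is absent."""
    new_text, count = re.subn(r"(ORBextractor\.nFeatures:\s*)\d+",
                              lambda m: m.group(1) + str(n), text)
    if count == 0:
        raise ValueError("ORBextractor.nFeatures not found")
    return new_text


if __name__ == "__main__" and len(sys.argv) == 3:
    path, n = sys.argv[1], int(sys.argv[2])
    with io.open(path, encoding="utf-8") as f:
        text = f.read()
    with io.open(path, "w", encoding="utf-8") as f:
        f.write(set_orb_features(text, n))
    print("set ORBextractor.nFeatures to %d in %s" % (n, path))
```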

The end, throw the confetti~