Installing k8s from binaries


- **Kubernetes Overview**
  - 1. What is Kubernetes

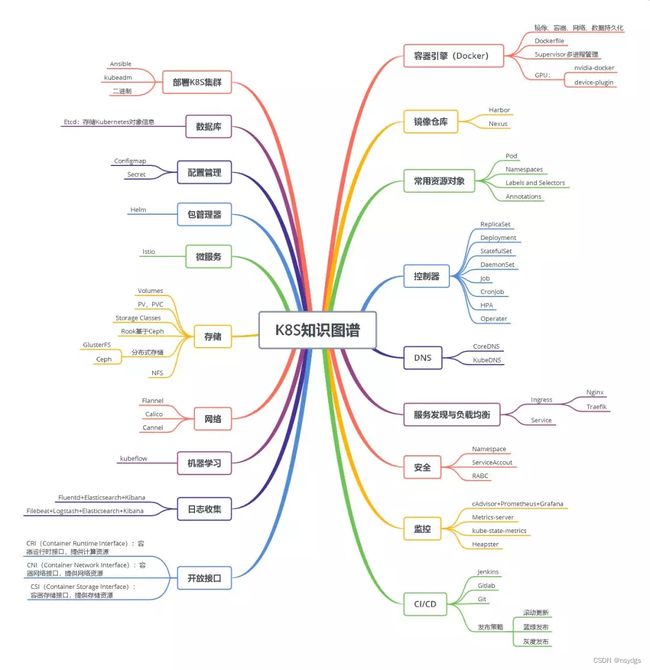

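    - K8S knowledge map
  - 2. Kubernetes features
  - 3. Kubernetes cluster architecture and components
    - Master components
      - **kube-apiserver**
      - **kube-controller-manager**
      - **kube-scheduler**
      - **etcd**
    - Node components
      - **kubelet**
      - **kube-proxy**
      - **docker or rocket**
  - 4. Kubernetes core concepts
    - Pod
    - Controllers
    - Service
    - Label
    - Namespaces
    - Annotations
- 01. System initialization and global variables
  - Cluster machines
  - Hostnames
  - Add the docker account
  - Passwordless SSH login to the other nodes
  - Update the PATH variable
  - Install dependencies
  - Disable the firewall
  - Disable the swap partition
  - Disable SELinux
  - Tune resource limits
  - Load kernel modules
  - Tune kernel parameters
  - Set the system time zone
  - Disable unrelated services
  - Configure rsyslogd and systemd journald
  - Create the environment variable file on master-1
  - Create directories
  - Upgrade the kernel
  - Distribute the cluster configuration script
- 02. Create the CA certificate and key
  - Install the cfssl toolkit
  - Create the root certificate (CA)
    - Create the configuration file
    - Create the certificate signing request file
    - Generate the CA certificate and private key
  - Issue the etcd certificate
  - Issue the apiserver certificate
  - Issue the controller-manager certificate
  - Issue the scheduler certificate
  - Issue the kubectl (admin) certificate
  - Issue the kube-proxy certificate
  - Configure the flannel certificate
  - Distribute the certificate files
  - References
- 03. Deploy the kubectl command-line tool
  - Download and distribute the kubectl binary
  - Create the kubeconfig file
  - Distribute the kubeconfig file
  - Enhance output with kubecolor
- 04. Deploy the etcd cluster
  - Download and distribute the etcd binaries
  - Create the etcd systemd unit template file
  - Create and distribute the etcd systemd unit file for each node
  - Start the etcd service
  - Check the startup result
  - Verify the service status
  - View the current leader
  - Troubleshooting
- 05. Deploy the flannel network
  - Download and distribute the flanneld & CNI plugin binaries
  - Create and distribute the flannel configuration file
  - Create and distribute the CNI network plugin configuration file
  - Configure the flannel kubeconfig
  - Create flannel.conf
  - Distribute flannel.conf
  - Create the flanneld systemd unit file
  - Substitute variables
  - Start the flanneld service
  - Check the startup result
  - Write the cluster Pod network into etcd
  - Check the Pod subnets assigned to each flanneld
  - Check the flannel network on each node
  - Verify that the nodes can reach each other over the Pod network
- 06-0. kube-apiserver high availability with an nginx proxy
  - kube-apiserver HA based on an nginx proxy
  - Install keepalived
  - Download and compile nginx
  - Verify the compiled nginx
  - Install and deploy nginx
  - Configure the systemd unit file and start the service
  - Check the kube-nginx service status
- 06-1. Deploy the master nodes
  - Install and configure kube-nginx
  - Download the latest binaries
- 06-2. Deploy a highly available kube-apiserver cluster
  - Preparation
  - Create the encryption configuration file
  - Create the audit policy file
  - Create the token authentication file
  - Create the kube-apiserver systemd unit template file
  - Create and distribute the kube-apiserver systemd unit file for each node
  - Start the kube-apiserver service
  - Check the kube-apiserver status
  - Print the data kube-apiserver writes to etcd
  - Check cluster information
  - Check the ports kube-apiserver listens on
  - Grant kube-apiserver access to the kubelet API
- 06-3. Deploy a highly available kube-controller-manager cluster
  - Preparation
  - Create and distribute the kubeconfig file
  - Create the kube-controller-manager systemd unit template file
  - Create and distribute the kube-controller-manager systemd unit file for each node
  - Start the kube-controller-manager service
  - Check the service status
  - View the exported metrics
  - kube-controller-manager permissions
  - View the current leader
  - Test kube-controller-manager cluster high availability
  - References
- 06-4. Deploy a highly available kube-scheduler cluster
  - Preparation
  - Create and distribute the kubeconfig file
  - Create the kube-scheduler systemd unit template file
  - Start the kube-scheduler service
  - Check the service status
  - View the exported metrics
  - View the current leader
  - Test kube-scheduler cluster high availability
- 07-0. Deploy the worker nodes
  - Install and configure flanneld
  - Install and configure kube-nginx
  - Install dependencies
- 07-1. Deploy the docker component
  - Install dependencies
  - Download and distribute the docker binaries
  - Create and distribute the systemd unit file
  - Configure and distribute the docker configuration file
  - Start the docker service
  - Check the service status
  - Check the docker0 bridge
  - View docker status information
- 07-2. Deploy the kubelet component
  - Download and distribute the kubelet binary
  - Install dependencies
  - Create the kubelet bootstrap kubeconfig file
  - Distribute the kubelet bootstrap kubeconfig file
  - Create the ClusterRoleBinding
  - Create and distribute the kubelet systemd unit file
  - Start the kubelet service
  - Bootstrap Token Auth and granting permissions
  - Start the kubelet service
  - Automatically approve CSR requests
  - Check kubelet
  - Manually approve the server cert CSR
  - API endpoints provided by kubelet
  - kubelet API authentication and authorization
    - Certificate authentication and authorization
    - Bearer token authentication and authorization
    - cadvisor and metrics
  - References
- 07-3. Deploy the kube-proxy component
  - Download and distribute the kube-proxy binary
  - Install dependencies
  - Distribute certificates
  - Create and distribute the kubeconfig file
  - Create the kube-proxy configuration file
  - Create and distribute the kube-proxy systemd unit file
  - Start the kube-proxy service
  - Check the startup result
  - View the listening ports
  - View the ipvs routing rules
- 08. Verify cluster functionality
  - Check node status
  - Create the test file
  - Check Pod IP connectivity across nodes
  - Check Service IP and port reachability
- 09-0. Deploy cluster add-ons
  - Configure taints
  - Configure role labels
- 09-1. Deploy the coredns add-on
  - Modify the configuration file
  - Create coredns
  - Check coredns functionality
  - References
- 09-2. Deploy the dashboard add-on
  - Modify the configuration file
  - Apply all definition files
  - View the assigned NodePort
  - View the command-line flags dashboard supports
  - Access the dashboard
  - Create the token and kubeconfig file for logging in to the Dashboard
    - Create the login token
    - Use kubectl proxy
    - Create a KubeConfig file that uses the token
  - References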

Kubernetes Overview

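1. What is Kubernetes

• Kubernetes is a container cluster management system open-sourced by Google in 2014, abbreviated as K8S.

• K8S is used to deploy, scale and manage containerized applications.

• K8S provides a range of features including container orchestration, resource scheduling, elastic scaling, deployment management and service discovery.

• The goal of Kubernetes is to make deploying containerized applications simple and efficient.

Official website: http://www.kubernetes.io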

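K8S knowledge map

2. Kubernetes features

- Self-healing

Restarts failed containers when a node fails, and replaces and redeploys them to keep the desired number of replicas; kills containers that fail health checks and does not send client requests to them until they are ready, so online services are not interrupted.

- Elastic scaling

Scales application instances up and down quickly, via commands, the UI, or automatically based on CPU usage, keeping the application highly available at peak concurrency and reclaiming resources off-peak so that services run at minimal cost.

- Automated rollouts and rollbacks

K8S updates applications with a rolling-update strategy, replacing Pods a few at a time instead of deleting all Pods at once; if a problem occurs during the update, the change can be rolled back, so the upgrade does not affect the business.

- Service discovery and load balancing

K8S provides a single access point (an internal IP address and a DNS name) for a group of containers and load-balances across all of them, so users do not need to deal with container IPs.

- Secret and configuration management

Manages secret data and application configuration without exposing sensitive data in images, improving the security of sensitive data. Commonly used configuration can also be stored in K8S for applications to consume.

- Storage orchestration

Mounts external storage systems, whether local storage, public cloud storage (such as AWS) or network storage (such as NFS, GlusterFS, Ceph), as part of the cluster's resources, greatly increasing storage flexibility.

- Batch processing

Provides one-off jobs and scheduled jobs for batch data processing and analysis scenarios.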

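3. Kubernetes cluster architecture and components

…, so the swap partition must be disabled on every machine. Also comment out the corresponding entry in /etc/fstab so that swap is not mounted automatically at boot: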

swapoff -a

sed -i '/ swap / s/^\(.*\)$/#\1/g' /etc/fstab
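To preview what the sed expression does before it touches the real /etc/fstab, you can wrap it in a function and run it against a throwaway file. This is a minimal sketch: the function name comment_out_swap and the sample fstab entries are made up for illustration.

```shell
#!/usr/bin/env bash
# Hypothetical helper: comment out every line containing " swap " in the
# given file, using the same sed expression as above.
# Try it on a copy first:  cp /etc/fstab /tmp/fstab.test && comment_out_swap /tmp/fstab.test
comment_out_swap() {
  sed -i '/ swap / s/^\(.*\)$/#\1/g' "$1"
}

# Demo on a temporary file with made-up entries
demo=$(mktemp)
printf '%s\n' \
  '/dev/mapper/centos-root /    xfs   defaults 0 0' \
  '/dev/mapper/centos-swap swap swap  defaults 0 0' > "$demo"
comment_out_swap "$demo"
cat "$demo"    # the swap line now starts with '#', the root line is unchanged
rm -f "$demo"
```

The expression is not idempotent: running it a second time prefixes another `#`, which is harmless but worth knowing if the initialization script is re-run.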

Disable SELinux

Disable SELinux; otherwise K8S may fail with Permission denied when mounting directories later:

setenforce 0

sed -i 's/^SELINUX=.*/SELINUX=disabled/' /etc/selinux/config

Tune resource limits

cat >> /etc/security/limits.conf <<EOF

* soft nproc 65535

* hard nproc 65535

* soft nofile 65535

* hard nofile 65535

* soft memlock unlimited

* hard memlock unlimited

EOF

Load kernel modules

cat > /etc/sysconfig/modules/ipvs.modules <<EOF

#!/bin/bash

modprobe -- ip_vs

modprobe -- ip_vs_rr

modprobe -- ip_vs_wrr

modprobe -- ip_vs_sh

modprobe -- nf_conntrack_ipv4 # merged into nf_conntrack since kernel 4.19; an error here can be ignored

modprobe -- overlay

modprobe -- br_netfilter

EOF

chmod 755 /etc/sysconfig/modules/ipvs.modules

bash /etc/sysconfig/modules/ipvs.modules

lsmod | grep -e ip_vs -e nf_conntrack

Tune kernel parameters

cat > kubernetes.conf <<EOF

net.bridge.bridge-nf-call-iptables=1

net.bridge.bridge-nf-call-ip6tables=1

net.ipv4.ip_forward=1

net.ipv4.tcp_tw_recycle=0

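# avoid swap; only allow it when the system is about to run out of memory (OOM)

vm.swappiness=0

# do not check whether physical memory is sufficient

vm.overcommit_memory=1

# do not panic on OOM; let the OOM killer handle it

vm.panic_on_oom=0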

fs.inotify.max_user_instances=8192

fs.inotify.max_user_watches=1048576

fs.file-max=52706963

fs.nr_open=52706963

net.ipv6.conf.all.disable_ipv6=1

net.netfilter.nf_conntrack_max=2310720

EOF

cp kubernetes.conf /etc/sysctl.d/kubernetes.conf

sysctl -p /etc/sysctl.d/kubernetes.conf

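- tcp_tw_recycle must be disabled, otherwise it conflicts with NAT and breaks service connectivity (the parameter was removed in kernel 4.12, so on newer kernels sysctl reports an error for that line, which can be ignored);

- IPv6 is disabled to avoid triggering a docker bug;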
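sysctl only treats lines whose first non-blank character is `#` or `;` as comments, so a trailing `# ...` after `key=value` becomes part of the value and the setting fails to load. A small check like the one below can flag such lines before running `sysctl -p`; the function name find_inline_comments is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print (with line numbers) every "key=value # comment"
# line in a sysctl-style file. Blank lines and whole-line comments are skipped;
# a '#' appearing after the '=' is reported. Exits non-zero if nothing is found.
find_inline_comments() {
  grep -nE '^[[:space:]]*[^#;[:space:]][^=]*=[^#]*#' "$1"
}

# Usage before loading the file:
#   find_inline_comments /etc/sysctl.d/kubernetes.conf && echo "move these comments to their own lines"
```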

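Set the system time zone

# Set the system TimeZone

timedatectl set-timezone Asia/Shanghai

# Keep the hardware clock (RTC) in UTC

timedatectl set-local-rtc 0

# Restart services that depend on the system time

systemctl restart rsyslog

systemctl restart crond

Disable unrelated services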

systemctl stop postfix && systemctl disable postfix

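Configure rsyslogd and systemd journald

systemd's journald is the default logging tool on CentOS 7; it records the logs of the whole system, the kernel and every service unit.

Compared with rsyslog, journald has the following advantages:

- It can log to memory or to the file system (by default to memory, under /run/log/journal);

- It can cap the disk space it uses and guarantee free disk space;

- It can limit log file size and retention time;

By default journald also forwards logs to rsyslog, so each log is written more than once, /var/log/messages fills up with unrelated entries that make later troubleshooting harder, and system performance suffers.

mkdir -p /var/log/journal # directory for persistent logs

mkdir -p /etc/systemd/journald.conf.d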

cat > /etc/systemd/journald.conf.d/99-prophet.conf <<EOF

[Journal]

# Persist logs to disk

Storage=persistent

# Compress archived logs

Compress=yes

SyncIntervalSec=5m

RateLimitInterval=30s

RateLimitBurst=1000

# Use at most 10G of disk space

SystemMaxUse=10G

# Maximum size of a single log file: 200M

SystemMaxFileSize=200M

# Keep logs for 2 weeks

MaxRetentionSec=2week

# Do not forward logs to syslog

ForwardToSyslog=no

EOF

systemctl restart systemd-journald

Create the environment variable file on master-1

mkdir -p /app/k8s/bin

cat > /app/k8s/bin/environment.sh <<EOF

#!/bin/bash

# Encryption key required by EncryptionConfig (evaluated once, when this file is written)

export ENCRYPTION_KEY=$(head -c 32 /dev/urandom | base64)

# IPs of all machines in the cluster

export NODE_IPS=(192.168.3.141 192.168.3.142 192.168.3.143 192.168.3.144 192.168.3.145 192.168.3.146 192.168.3.147 192.168.3.148)

# Hostnames corresponding to the IPs above (same order)

export NODE_NAMES=(ka-1 ka-2 ka-3 k8master-1 k8master-2 k8master-3 k8worker-1 k8worker-2)

# etcd cluster client endpoints

export ETCD_ENDPOINTS="https://192.168.3.144:2379,https://192.168.3.145:2379,https://192.168.3.146:2379"

# IPs and ports used for communication between etcd members

export ETCD_NODES="k8master-1=https://192.168.3.144:2380,k8master-2=https://192.168.3.145:2380,k8master-3=https://192.168.3.146:2380"

# etcd IPs

export ETCD_IPS=(192.168.3.144 192.168.3.145 192.168.3.146)

export API_IPS=(192.168.3.144 192.168.3.145 192.168.3.146)

export CTL_IPS=(192.168.3.144 192.168.3.145 192.168.3.146)

export WORK_IPS=(192.168.3.141 192.168.3.142 192.168.3.143 192.168.3.147 192.168.3.148)

export KA_IPS=(192.168.3.141 192.168.3.142 192.168.3.143)

export VIP_IP="192.168.3.140"

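# Address and port of the kube-apiserver reverse proxy (kube-nginx)

export KUBE_APISERVER="https://192.168.3.140:8443"

# Name of the network interface used between nodes

export IFACE="ens192"

# etcd data directory

export ETCD_DATA_DIR="/app/k8s/etcd/data"

# etcd WAL directory; preferably an SSD partition, or at least a different partition from ETCD_DATA_DIR

export ETCD_WAL_DIR="/app/k8s/etcd/wal"

# Data directory for the k8s components

export K8S_DIR="/app/k8s/k8s"

# docker data directory

export DOCKER_DIR="/app/k8s/docker"

## The following parameters usually do not need to be changed

# Token used for TLS Bootstrapping; can be generated with: head -c 16 /dev/urandom | od -An -t x | tr -d ' '

export BOOTSTRAP_TOKEN="41f7e4ba8b7be874fcff18bf5cf41a7c"

# Preferably use currently unused ranges for the Service CIDR and the Pod CIDR

# Service CIDR: not routable before deployment, routable inside the cluster afterwards (ensured by kube-proxy)

export SERVICE_CIDR="10.254.0.0/16"

# Pod CIDR, /16 recommended: not routable before deployment, routable inside the cluster afterwards (ensured by flanneld)

export CLUSTER_CIDR="172.1.0.0/16"

# Service port range (NodePort Range)

export NODE_PORT_RANGE="30000-32767"

# flanneld network configuration prefix

export FLANNEL_ETCD_PREFIX="/kubernetes/network"

# IP of the kubernetes Service (usually the first IP in SERVICE_CIDR)

export CLUSTER_KUBERNETES_SVC_IP="10.254.0.1"

# Cluster DNS Service IP (pre-allocated from SERVICE_CIDR)

export CLUSTER_DNS_SVC_IP="10.254.0.2"

# Cluster DNS domain (no trailing dot)

export CLUSTER_DNS_DOMAIN="cluster.local"

# Add the binary directory /app/k8s/bin to PATH (escaped so PATH expands when the file is sourced)

export PATH=/app/k8s/bin:\$PATH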

EOF
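The two random values in environment.sh come from /dev/urandom: the bootstrap token is 16 bytes printed as hex, and the encryption key is 32 bytes encoded as base64. The sketch below wraps the same commands in functions and shows what to expect from each; the function names are illustrative only.

```shell
#!/usr/bin/env bash
# Sketch: generate the random values environment.sh relies on.
gen_bootstrap_token() {
  # 16 random bytes dumped as hex words by od; drop spaces/newline -> 32 hex chars
  head -c 16 /dev/urandom | od -An -t x | tr -d ' \n'
}

gen_encryption_key() {
  # 32 random bytes, base64-encoded -> 44 characters including '=' padding
  head -c 32 /dev/urandom | base64
}

token=$(gen_bootstrap_token)
key=$(gen_encryption_key)
echo "BOOTSTRAP_TOKEN=${token}"
echo "decoded key length: $(printf '%s' "$key" | base64 -d | wc -c) bytes"
```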

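Create directories

Create the directories:

mkdir -p /app/k8s/{bin,work} /etc/{kubernetes,etcd}/cert

Upgrade the kernel

Upgrade the kernel; for details see:

https://gitee.com/lenovux/k8s/blob/master/B.centos7%E5%86%85%E6%A0%B8%E5%8D%87%E7%BA%A7.md

Distribute the cluster configuration script

All environment variables used later are defined in [environment.sh] in the file A.实用脚本.md; adjust them to your own machines and network. Then copy the file to the /app/k8s/bin directory on all nodes.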
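Before copying environment.sh to every node, it can help to check that the arrays are consistent, since later loops index NODE_IPS and NODE_NAMES in parallel. This is a minimal sketch; check_env is a hypothetical helper, not part of the original scripts.

```shell
#!/usr/bin/env bash
# Hypothetical helper: sanity-check the arrays and token from environment.sh.
# Usage on k8master-1:  source /app/k8s/bin/environment.sh && check_env
check_env() {
  local rc=0 ip node found
  # NODE_IPS[i] and NODE_NAMES[i] are used in pairs, so the lengths must match
  if [ "${#NODE_IPS[@]}" -ne "${#NODE_NAMES[@]}" ]; then
    echo "NODE_IPS has ${#NODE_IPS[@]} entries, NODE_NAMES has ${#NODE_NAMES[@]}"
    rc=1
  fi
  # every etcd and worker IP should also be a member of NODE_IPS
  for ip in "${ETCD_IPS[@]}" "${WORK_IPS[@]}"; do
    found=0
    for node in "${NODE_IPS[@]}"; do
      if [ "$node" = "$ip" ]; then found=1; fi
    done
    if [ "$found" -eq 0 ]; then
      echo "$ip is not listed in NODE_IPS"
      rc=1
    fi
  done
  # the bootstrap token is expected to be 32 lowercase hex characters
  if ! [[ "$BOOTSTRAP_TOKEN" =~ ^[0-9a-f]{32}$ ]]; then
    echo "BOOTSTRAP_TOKEN is not 32 hex characters"
    rc=1
  fi
  return "$rc"
}
```

With the values shown in environment.sh (8 IPs, 8 names, all etcd and worker IPs listed) the check passes silently.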

tags: TLS, CA, x509

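02. Create the CA certificate and key

For security, the kubernetes components use x509 certificates to encrypt and authenticate their communication.

The CA (Certificate Authority) is a self-signed root certificate used to sign all other certificates created later.

This document uses CloudFlare's PKI toolkit cfssl to create all certificates.

Note: unless otherwise specified, all operations in this document are performed on the k8master-1 node, which then distributes files and runs commands remotely.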

Install the cfssl toolkit

sudo mkdir -p /app/k8s/cert && cd /app/k8s

wget https://pkg.cfssl.org/R1.2/cfssl_linux-amd64

mv cfssl_linux-amd64 /app/k8s/bin/cfssl

wget https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64

mv cfssljson_linux-amd64 /app/k8s/bin/cfssljson

wget https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64

mv cfssl-certinfo_linux-amd64 /app/k8s/bin/cfssl-certinfo

chmod +x /app/k8s/bin/*

export PATH=/app/k8s/bin:$PATH

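Create the root certificate (CA)

The CA certificate is shared by all nodes in the cluster. Only one CA certificate needs to be created; all certificates created later are signed by it.

Create the configuration file

The CA configuration file defines the usage scenarios (profiles) and specific parameters (usages, expiry, server auth, client auth, encryption, etc.) of the root certificate; a specific profile is selected later when signing other certificates.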

cd /app/k8s/work

cat > ca-config.json <<EOF

{

"signing": {

"default": {

"expiry": "87600h"

},

"profiles": {

"kubernetes": {

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

],

"expiry": "87600h"

}

}

}

}

EOF

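- signing: the key of a certificate issued with this profile may be used for digital signatures (the CA=TRUE flag in the generated ca.pem comes from creating it with -initca, not from this usage);

- server auth: certificates issued with this profile can be presented by a server, and clients use the CA to verify them;

- client auth: certificates issued with this profile can be presented by a client, and servers use the CA to verify them;

Create the certificate signing request file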

cd /app/k8s/work

cat > ca-csr.json <<EOF

{

"CN": "kubernetes",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "hangzhou",

"L": "hangzhou",

"O": "k8s",

"OU": "CMCC"

}

],

"ca": {

"expiry": "876000h"

}

}

EOF

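- CN: Common Name. kube-apiserver extracts this field from the certificate as the request's user name (User Name); browsers use it to verify whether a website is legitimate;

- O: Organization. kube-apiserver extracts this field as the group (Group) the requesting user belongs to;

- kube-apiserver uses the extracted User and Group as the user identity for RBAC authorization;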
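To make the CN/O mapping concrete, the sketch below takes a certificate subject and prints the user and groups kube-apiserver would derive from it. It assumes the subject is in RFC 2253 form, as printed by something like `openssl x509 -in admin.pem -noout -subject -nameopt RFC2253` (the exact prefix may vary between OpenSSL versions), and that no value contains an escaped comma; subject_to_identity is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: CN -> user name, every O -> group.
subject_to_identity() {
  local subject="${1#subject=}" user="" rdn
  local -a groups=() rdns=()
  subject="${subject# }"                  # tolerate "subject= ..." spacing
  IFS=',' read -r -a rdns <<< "$subject"  # split into CN=..., O=..., ...
  for rdn in "${rdns[@]}"; do
    case "$rdn" in
      CN=*) user="${rdn#CN=}" ;;
      O=*)  groups+=("${rdn#O=}") ;;      # OU=... does not match this pattern
    esac
  done
  echo "user=${user} groups=${groups[*]}"
}

subject_to_identity "subject=CN=admin,OU=4Paradigm,O=system:masters,L=BeiJing,ST=BeiJing,C=CN"
```

For the admin certificate this prints `user=admin groups=system:masters`, which is why the admin client gets cluster-admin rights through the group rather than the user name.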

Generate the CA certificate and private key

cd /app/k8s/work

cfssl gencert -initca ca-csr.json | cfssljson -bare ca

ls ca*

Issue the etcd certificate

1. Create the CSR file

cd /app/k8s/work

cat > etcd-csr.json <<EOF

{

"CN": "etcd",

"hosts": [

"127.0.0.1",

"192.168.3.144",

"192.168.3.145",

"192.168.3.146",

"192.168.3.140"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "hangzhou",

"L": "hangzhou",

"O": "k8s",

"OU": "CMCC"

}

]

}

EOF

2. Issue the certificate

cd /app/k8s/work

cfssl gencert -ca=/app/k8s/work/ca.pem \

-ca-key=/app/k8s/work/ca-key.pem \

-config=/app/k8s/work/ca-config.json \

-profile=kubernetes etcd-csr.json | cfssljson -bare etcd

ls etcd*pem

Issue the apiserver certificate

1. Create the CSR file

source /app/k8s/bin/environment.sh

cat > kubernetes-csr.json <<EOF

{

"CN": "kubernetes",

"hosts": [

"127.0.0.1",

"192.168.3.144",

"192.168.3.145",

"192.168.3.146",

"192.168.3.140",

"${CLUSTER_KUBERNETES_SVC_IP}",

"kubernetes",

"kubernetes.default",

"kubernetes.default.svc",

"kubernetes.default.svc.cluster",

"kubernetes.default.svc.cluster.local."

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "zhejiang",

"L": "hangzhou",

"O": "k8s",

"OU": "CMCC"

}

]

}

EOF

2. Issue the certificate

cfssl gencert -ca=/app/k8s/work/ca.pem \

-ca-key=/app/k8s/work/ca-key.pem \

-config=/app/k8s/work/ca-config.json \

-profile=kubernetes kubernetes-csr.json | cfssljson -bare kubernetes

ls kubernetes*pem

Issue the controller-manager certificate

1. Create the CSR configuration file

cd /app/k8s/work

cat > kube-controller-manager-csr.json <<EOF

{

"CN": "system:kube-controller-manager",

"key": {

"algo": "rsa",

"size": 2048

},

"hosts": [

"127.0.0.1",

"192.168.3.144",

"192.168.3.145",

"192.168.3.146",

"192.168.3.140"

],

"names": [

{

"C": "CN",

"ST": "zhejiang",

"L": "hangzhou",

"O": "system:kube-controller-manager",

"OU": "CMCC"

}

]

}

EOF

2. Issue the certificate

cd /app/k8s/work

cfssl gencert -ca=/app/k8s/work/ca.pem \

-ca-key=/app/k8s/work/ca-key.pem \

-config=/app/k8s/work/ca-config.json \

-profile=kubernetes kube-controller-manager-csr.json | cfssljson -bare kube-controller-manager

ls kube-controller-manager*pem

Issue the scheduler certificate

1. Create the CSR configuration file

cd /app/k8s/work

cat > kube-scheduler-csr.json <<EOF

{

"CN": "system:kube-scheduler",

"hosts": [

"127.0.0.1",

"192.168.3.146",

"192.168.3.145",

"192.168.3.144",

"192.168.3.140"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "zhejiang",

"L": "hangzhou",

"O": "system:kube-scheduler",

"OU": "CMCC"

}

]

}

EOF

2. Issue the certificate

cd /app/k8s/work

cfssl gencert -ca=/app/k8s/work/ca.pem \

-ca-key=/app/k8s/work/ca-key.pem \

-config=/app/k8s/work/ca-config.json \

-profile=kubernetes kube-scheduler-csr.json | cfssljson -bare kube-scheduler

ls kube-scheduler*pem

Issue the kubectl (admin) certificate

1. Create the CSR configuration file

cd /app/k8s/work

cat > admin-csr.json <<EOF

{

"CN": "admin",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "BeiJing",

"L": "BeiJing",

"O": "system:masters",

"OU": "4Paradigm"

}

]

}

EOF

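This binds a Group rather than a User, specified through O: the ClusterRoleBinding cluster-admin predefined by kube-apiserver binds the group system:masters to the ClusterRole cluster-admin. To inspect it after deployment, run: kubectl get clusterrolebinding cluster-admin -o yaml

2. Issue the certificate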

cd /app/k8s/work

cfssl gencert -ca=/app/k8s/work/ca.pem \

-ca-key=/app/k8s/work/ca-key.pem \

-config=/app/k8s/work/ca-config.json \

-profile=kubernetes admin-csr.json | cfssljson -bare admin

ls admin*

Issue the kube-proxy certificate

1. Create the CSR configuration file

cd /app/k8s/work

cat > kube-proxy-csr.json <<EOF

{

"CN": "system:kube-proxy",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "zhejiang",

"L": "hangzhou",

"O": "k8s",

"OU": "CMCC"

}

]

}

EOF

2. Issue the certificate

cd /app/k8s/work

cfssl gencert -ca=/app/k8s/work/ca.pem \

-ca-key=/app/k8s/work/ca-key.pem \

-config=/app/k8s/work/ca-config.json \

-profile=kubernetes kube-proxy-csr.json | cfssljson -bare kube-proxy

ls kube-proxy*

Configure the flannel certificate

1. Create the CSR configuration file

cd /app/k8s/work

cat > flanneld-csr.json <<EOF

{

"CN": "flanneld",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "zhejiang",

"L": "hangzhou",

"O": "k8s",

"OU": "CMCC"

}

]

}

EOF

2. Issue the certificate

cfssl gencert -ca=/app/k8s/work/ca.pem \

-ca-key=/app/k8s/work/ca-key.pem \

-config=/app/k8s/work/ca-config.json \

-profile=kubernetes flanneld-csr.json | cfssljson -bare flanneld

ls flanneld*pem

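Distribute the certificate files

Copy the generated CA certificate, key and configuration file to /etc/kubernetes/cert on all nodes, and the flanneld certificate to /etc/flanneld/cert: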

cd /app/k8s/work

source /app/k8s/bin/environment.sh

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh root@${node_ip} "mkdir -p /etc/kubernetes/cert /etc/flanneld/cert"

scp ca*.pem ca-config.json root@${node_ip}:/etc/kubernetes/cert

scp flanneld*pem root@${node_ip}:/etc/flanneld/cert

done

References

- Types of CA certificates: https://github.com/kubernetes-incubator/apiserver-builder/blob/master/docs/concepts/auth.md

tags: kubectl

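03. Deploy the kubectl command-line tool

This document describes how to install and configure kubectl, the command-line management tool for the kubernetes cluster.

By default kubectl reads the kube-apiserver address and authentication information from ~/.kube/config; if this is not configured, kubectl commands may fail with an error such as: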

$ kubectl get pods

The connection to the server localhost:8080 was refused - did you specify the right host or port?

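Notes:

- Unless otherwise specified, all operations in this document are performed on the k8master-1 node, which then distributes files and runs commands remotely;

- This only needs to be done once: the generated kubeconfig file is generic and can be copied to any machine that needs to run kubectl, saved as ~/.kube/config;

Download and distribute the kubectl binary

Download: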

cd /app/k8s/work

curl -LO https://dl.k8s.io/release/v1.22.0/bin/linux/amd64/kubectl

Distribute it to all nodes that use kubectl:

cd /app/k8s/work

source /app/k8s/bin/environment.sh

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

scp kubectl root@${node_ip}:/app/k8s/bin/

ssh root@${node_ip} "chmod +x /app/k8s/bin/*"

done

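Create the kubeconfig file

kubeconfig is kubectl's configuration file and contains everything needed to access the apiserver, such as the apiserver address, the CA certificate and the client's own certificate;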

cd /app/k8s/work

source /app/k8s/bin/environment.sh

# Set the cluster parameters

kubectl config set-cluster kubernetes \

--certificate-authority=/app/k8s/work/ca.pem \

--embed-certs=true \

--server=${KUBE_APISERVER} \

--kubeconfig=kubectl.kubeconfig

# Set the client authentication parameters

kubectl config set-credentials admin \

--client-certificate=/app/k8s/work/admin.pem \

--client-key=/app/k8s/work/admin-key.pem \

--embed-certs=true \

--kubeconfig=kubectl.kubeconfig

# Set the context parameters

kubectl config set-context kubernetes \

--cluster=kubernetes \

--user=admin \

--kubeconfig=kubectl.kubeconfig

# Set the default context

kubectl config use-context kubernetes --kubeconfig=kubectl.kubeconfig

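- --certificate-authority: the root certificate used to verify the kube-apiserver certificate;

- --client-certificate, --client-key: the admin certificate and private key generated earlier, used when connecting to kube-apiserver;

- --embed-certs=true: embeds the contents of ca.pem and admin.pem into the generated kubectl.kubeconfig (without it only the certificate file paths are written, so copying the kubeconfig to other machines would also require copying the certificate files separately, which is inconvenient);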
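With --embed-certs=true the kubeconfig contains the `*-data` fields (`certificate-authority-data`, `client-certificate-data`, `client-key-data`); without it, it contains the path fields (`certificate-authority`, `client-certificate`, `client-key`). A quick check like the sketch below tells which kind a given file is before you copy it to another machine; kubeconfig_cert_mode is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: report whether a kubeconfig embeds its certificates
# ("embedded"), references certificate files ("file-paths"), or neither ("none").
kubeconfig_cert_mode() {
  local file="$1"
  # path fields end right at the colon; the embedded fields end in "-data:"
  if grep -qE '^[[:space:]]*(certificate-authority|client-certificate|client-key):' "$file"; then
    echo "file-paths"
  elif grep -qE '^[[:space:]]*(certificate-authority-data|client-certificate-data|client-key-data):' "$file"; then
    echo "embedded"
  else
    echo "none"
  fi
}

# Usage:
#   kubeconfig_cert_mode /app/k8s/work/kubectl.kubeconfig   # expected: embedded
```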

Distribute the kubeconfig file

Distribute it to all nodes that run kubectl:

cd /app/k8s/work

source /app/k8s/bin/environment.sh

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh root@${node_ip} "mkdir -p ~/.kube"

scp kubectl.kubeconfig root@${node_ip}:~/.kube/config

done

- The file is saved as ~/.kube/config;

Enhance output with kubecolor

wget https://github.com/dty1er/kubecolor/releases/download/v0.0.20/kubecolor_0.0.20_Linux_x86_64.tar.gz

tar -zvxf kubecolor_0.0.20_Linux_x86_64.tar.gz

cp kubecolor /app/k8s/bin/

chmod +x /app/k8s/bin/*

echo 'command -v kubecolor >/dev/null 2>&1 && alias k="kubecolor"' >> ~/.bashrc

echo 'source <(kubectl completion bash)' >> ~/.bashrc

echo 'complete -o default -F __start_kubectl k' >> ~/.bashrc

source ~/.bashrc

kubecolor get pods

tags: etcd

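04. Deploy the etcd cluster

etcd is a Raft-based distributed key-value store developed by CoreOS, commonly used for service discovery, shared configuration and concurrency control (such as leader election and distributed locks). kubernetes stores all of its runtime data in etcd.

This document describes the steps to deploy a three-node highly available etcd cluster:

- Download and distribute the etcd binaries;

- Create x509 certificates for the etcd nodes, used to encrypt traffic between clients (such as etcdctl) and the etcd cluster, and between etcd members;

- Create the etcd systemd unit file and configure the service parameters;

- Check the cluster status;

The names and IPs of the etcd nodes are:

- 192.168.3.144 k8master-1

- 192.168.3.145 k8master-2

- 192.168.3.146 k8master-3

Note: unless otherwise specified, all operations in this document are performed on the k8master-1 node, which then distributes files and runs commands remotely.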

Download and distribute the etcd binaries

Download the release package (v3.5.0 here) from the etcd release page:

cd /app/k8s/work

wget https://github.com/etcd-io/etcd/releases/download/v3.5.0/etcd-v3.5.0-linux-amd64.tar.gz

tar -zxvf etcd-v3.5.0-linux-amd64.tar.gz

Distribute the binaries to all etcd nodes:

cd /app/k8s/work

source /app/k8s/bin/environment.sh

for node_ip in ${ETCD_IPS[@]}

do

echo ">>> ${node_ip}"

scp etcd-v3.5.0-linux-amd64/etcd* root@${node_ip}:/app/k8s/bin

ssh root@${node_ip} "chmod +x /app/k8s/bin/*"

done

Distribute the generated certificate and private key to each etcd node:

cd /app/k8s/work

source /app/k8s/bin/environment.sh

for node_ip in ${ETCD_IPS[@]}

do

echo ">>> ${node_ip}"

ssh root@${node_ip} "mkdir -p /etc/etcd/cert"

scp etcd*.pem root@${node_ip}:/etc/etcd/cert/

done

Create the etcd systemd unit template file

cd /app/k8s/work

source /app/k8s/bin/environment.sh

cat > etcd.service.template <<EOF

[Unit]

Description=Etcd Server

After=network.target

After=network-online.target

Wants=network-online.target

Documentation=https://github.com/coreos

[Service]

Type=notify

WorkingDirectory=${ETCD_DATA_DIR}

ExecStart=/app/k8s/bin/etcd \\

--data-dir=${ETCD_DATA_DIR} \\

--wal-dir=${ETCD_WAL_DIR} \\

--name=##NODE_NAME## \\

--cert-file=/etc/etcd/cert/etcd.pem \\

--key-file=/etc/etcd/cert/etcd-key.pem \\

--trusted-ca-file=/etc/kubernetes/cert/ca.pem \\

--peer-cert-file=/etc/etcd/cert/etcd.pem \\

--peer-key-file=/etc/etcd/cert/etcd-key.pem \\

--peer-trusted-ca-file=/etc/kubernetes/cert/ca.pem \\

--peer-client-cert-auth \\

--client-cert-auth \\

--listen-peer-urls=https://##NODE_IP##:2380 \\

--initial-advertise-peer-urls=https://##NODE_IP##:2380 \\

--listen-client-urls=https://##NODE_IP##:2379,http://127.0.0.1:2379 \\

--advertise-client-urls=https://##NODE_IP##:2379 \\

--initial-cluster-token=etcd-cluster-0 \\

--initial-cluster=${ETCD_NODES} \\

--initial-cluster-state=new \\

--auto-compaction-mode=periodic \\

--auto-compaction-retention=1 \\

--max-request-bytes=33554432 \\

--quota-backend-bytes=6442450944 \\

--heartbeat-interval=250 \\

--election-timeout=2000 \\

--enable-v2=true

Restart=on-failure

RestartSec=5

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

EOF

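- WorkingDirectory, --data-dir: set the working directory and data directory to ${ETCD_DATA_DIR}; this directory must be created before the service starts;

- --wal-dir: the WAL directory; for better performance, use an SSD or a different disk from --data-dir;

- --name: the node name; when --initial-cluster-state is new, the value of --name must appear in the --initial-cluster list;

- --cert-file, --key-file: the certificate and private key the etcd server uses when communicating with clients;

- --trusted-ca-file: the CA certificate that signed the client certificates, used to verify them;

- --peer-cert-file, --peer-key-file: the certificate and private key etcd uses when communicating with its peers;

- --peer-trusted-ca-file: the CA certificate that signed the peer certificates, used to verify them;

Or, with the values filled in (this example is for k8master-1), the unit file looks like this: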

[Unit]

Description=Etcd Server

After=network.target

After=network-online.target

Wants=network-online.target

Documentation=https://github.com/coreos

[Service]

Type=notify

WorkingDirectory=/app/k8s/etcd/data

ExecStart=/app/k8s/bin/etcd \

--data-dir=/app/k8s/etcd/data \

--wal-dir=/app/k8s/etcd/wal \

--name=k8master-1 \

--cert-file=/etc/etcd/cert/etcd.pem \

--key-file=/etc/etcd/cert/etcd-key.pem \

--trusted-ca-file=/etc/kubernetes/cert/ca.pem \

--peer-cert-file=/etc/etcd/cert/etcd.pem \

--peer-key-file=/etc/etcd/cert/etcd-key.pem \

--peer-trusted-ca-file=/etc/kubernetes/cert/ca.pem \

--peer-client-cert-auth \

--client-cert-auth \

--listen-peer-urls=https://192.168.3.144:2380 \

--initial-advertise-peer-urls=https://192.168.3.144:2380 \

--listen-client-urls=https://192.168.3.144:2379,http://127.0.0.1:2379 \

--advertise-client-urls=https://192.168.3.144:2379 \

--initial-cluster-token=etcd-cluster-0 \

--initial-cluster=k8master-1=https://192.168.3.144:2380,k8master-2=https://192.168.3.145:2380,k8master-3=https://192.168.3.146:2380 \

--initial-cluster-state=new \

--auto-compaction-mode=periodic \

--auto-compaction-retention=1 \

--max-request-bytes=33554432 \

--quota-backend-bytes=6442450944 \

--heartbeat-interval=250 \

--election-timeout=2000 \

--enable-v2=true

Restart=on-failure

RestartSec=5

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

Create and distribute the etcd systemd unit file for each node

Substitute the variables in the template to create a systemd unit file for each node:

cd /app/k8s/work

source /app/k8s/bin/environment.sh

for (( i=0; i < ${#NODE_IPS[@]}; i++ ))

do

sed -e "s/##NODE_NAME##/${NODE_NAMES[i]}/" -e "s/##NODE_IP##/${NODE_IPS[i]}/" etcd.service.template > etcd-${NODE_IPS[i]}.service

done

ls *.service

- NODE_NAMES and NODE_IPS are bash arrays of the same length, holding the node names and the corresponding IPs;
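The rendering step can be wrapped in a function that derives the loop bound from the array itself, so adding or removing a node never leaves a stale hard-coded count. This is a sketch under the assumption that NODE_NAMES[i] is the hostname of NODE_IPS[i]; render_units is an illustrative name, and it adds the sed `g` flag so several placeholders on one line would all be replaced.

```shell
#!/usr/bin/env bash
# Sketch: render one unit file per node from a template.
render_units() {
  local template="$1" outdir="$2" i
  for (( i=0; i < ${#NODE_IPS[@]}; i++ )); do
    sed -e "s/##NODE_NAME##/${NODE_NAMES[i]}/g" \
        -e "s/##NODE_IP##/${NODE_IPS[i]}/g" \
        "$template" > "${outdir}/etcd-${NODE_IPS[i]}.service"
  done
}

# Usage on k8master-1:
#   source /app/k8s/bin/environment.sh
#   render_units etcd.service.template /app/k8s/work
```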

Distribute the generated systemd unit files:

cd /app/k8s/work

source /app/k8s/bin/environment.sh

for node_ip in ${ETCD_IPS[@]}

do

echo ">>> ${node_ip}"

scp etcd-${node_ip}.service root@${node_ip}:/etc/systemd/system/etcd.service

done

- The file is renamed to etcd.service;

Start the etcd service

cd /app/k8s/work

source /app/k8s/bin/environment.sh

for node_ip in ${ETCD_IPS[@]}

do

echo ">>> ${node_ip}"

ssh root@${node_ip} "mkdir -p ${ETCD_DATA_DIR} ${ETCD_WAL_DIR}"

ssh root@${node_ip} "systemctl daemon-reload && systemctl enable etcd && systemctl restart etcd " &

done

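- The etcd data directory (also the working directory) and WAL directory must be created first;

- On first start, each etcd process waits for the other members to join the cluster, so systemctl start etcd may hang for a while; this is normal;

Check the startup result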

cd /app/k8s/work

source /app/k8s/bin/environment.sh

for node_ip in ${ETCD_IPS[@]}

do

echo ">>> ${node_ip}"

ssh root@${node_ip} "systemctl status etcd|grep Active"

done

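Make sure the state is active (running); otherwise check the logs to find the cause:

journalctl -u etcd

Verify the service status

After the etcd cluster is deployed, run the following on any etcd node: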

cd /app/k8s/work

source /app/k8s/bin/environment.sh

for node_ip in ${ETCD_IPS[@]}

do

echo ">>> ${node_ip}"

ETCDCTL_API=3 /app/k8s/bin/etcdctl \

--endpoints=https://${node_ip}:2379 \

--cacert=/etc/kubernetes/cert/ca.pem \

--cert=/etc/etcd/cert/etcd.pem \

--key=/etc/etcd/cert/etcd-key.pem endpoint health

done

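Expected output (the IPs below come from a different environment; yours will show the etcd node IPs 192.168.3.144-146):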

>>> 172.27.137.240

https://172.27.137.240:2379 is healthy: successfully committed proposal: took = 2.756451ms

>>> 172.27.137.239

https://172.27.137.239:2379 is healthy: successfully committed proposal: took = 2.025018ms

>>> 172.27.137.238

https://172.27.137.238:2379 is healthy: successfully committed proposal: took = 2.335097ms

If every endpoint reports healthy, the cluster is working properly.
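For scripted checks, the health output can be reduced to a single pass/fail. The sketch below reads `etcdctl endpoint health` output on stdin and succeeds only if every endpoint line says "is healthy"; all_endpoints_healthy is a hypothetical helper, and it assumes unhealthy endpoints may be reported on stderr, hence the `2>&1` in the usage line.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed only if all reported endpoints are healthy.
all_endpoints_healthy() {
  local out total healthy
  out=$(cat)
  total=$(grep -c '^https://' <<< "$out")                  # one line per endpoint
  healthy=$(grep -c '^https://.* is healthy' <<< "$out")   # " is unhealthy" does not match
  echo "${healthy}/${total} endpoints healthy"
  [ "$total" -gt 0 ] && [ "$healthy" -eq "$total" ]
}

# Usage (checks all members at once):
#   ETCDCTL_API=3 /app/k8s/bin/etcdctl --endpoints=${ETCD_ENDPOINTS} \
#     --cacert=/etc/kubernetes/cert/ca.pem --cert=/etc/etcd/cert/etcd.pem \
#     --key=/etc/etcd/cert/etcd-key.pem endpoint health 2>&1 | all_endpoints_healthy
```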

View the current leader

source /app/k8s/bin/environment.sh

ETCDCTL_API=3 /app/k8s/bin/etcdctl \

-w table --cacert=/etc/kubernetes/cert/ca.pem \

--cert=/etc/etcd/cert/etcd.pem \

--key=/etc/etcd/cert/etcd-key.pem \

--endpoints=${ETCD_ENDPOINTS} endpoint status

Output:

+----------------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+
|          ENDPOINT          |        ID        | VERSION | DB SIZE | IS LEADER | IS LEARNER | RAFT TERM | RAFT INDEX | RAFT APPLIED INDEX | ERRORS |
+----------------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+
| https://192.168.3.144:2379 | 4c3f96aeaf516ead |   3.5.0 |   29 kB |     false |      false |        16 |         44 |                 44 |        |
| https://192.168.3.145:2379 | 166a0a39c02deebc |   3.5.0 |   20 kB |     false |      false |        16 |         44 |                 44 |        |
| https://192.168.3.146:2379 | 4591e7e99dfd9a5e |   3.5.0 |   20 kB |      true |      false |        16 |         44 |                 44 |        |
+----------------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+

- As shown, the current leader is 192.168.3.146.
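When the leader needs to be found from a script rather than by eye, the table can be filtered with awk. This sketch assumes the etcd 3.5 column layout shown above, where IS LEADER is the fifth column (field 6 when splitting on `|`); etcd_leader is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the ENDPOINT of the row whose IS LEADER is "true"
# in `etcdctl -w table endpoint status` output read from stdin.
etcd_leader() {
  awk -F'|' '$2 ~ /https?:\/\// {
    gsub(/ /, "", $2); gsub(/ /, "", $6)   # trim padding from ENDPOINT and IS LEADER
    if ($6 == "true") print $2
  }'
}

# Usage:
#   ETCDCTL_API=3 /app/k8s/bin/etcdctl -w table --cacert=/etc/kubernetes/cert/ca.pem \
#     --cert=/etc/etcd/cert/etcd.pem --key=/etc/etcd/cert/etcd-key.pem \
#     --endpoints=${ETCD_ENDPOINTS} endpoint status | etcd_leader
```

More than one line of output corresponds to the two-leader situation described under Troubleshooting.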

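Troubleshooting

If two endpoints show true under IS LEADER and the logs contain:

request cluster ID mismatch

delete the following (this wipes the etcd data, so only do it on a freshly deployed cluster):

/app/k8s/etcd/data/*

/app/k8s/etcd/wal/*

then restart the service.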

tags: flanneld

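05. Deploy the flannel network

kubernetes requires all nodes in the cluster (including master nodes) to be able to reach each other over the Pod network. flannel uses vxlan to create an interconnected Pod network across the nodes, using UDP port 8472 (this port must be opened wherever traffic is filtered, e.g. on public clouds such as AWS).

When flanneld starts for the first time, it reads the configured Pod network from etcd, allocates an unused subnet to the local node, and then creates the flannel.1 network interface (the name may differ, e.g. flannel1).

flannel writes the Pod subnet assigned to it into the /app/flannel/docker file; docker later uses the environment variables in this file to configure the docker0 bridge, and thus assigns IPs from this subnet to all Pod containers on the node.

Note: unless otherwise specified, all operations in this document are performed on the k8master-1 node, which then distributes files and runs commands remotely.

Download and distribute the flanneld & CNI plugin binaries

Download the release package (v0.14.0 here) from the flannel release page: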

cd /app/k8s/work

mkdir flannel

wget https://github.com/coreos/flannel/releases/download/v0.14.0/flannel-v0.14.0-linux-amd64.tar.gz

tar -xzvf flannel-v0.14.0-linux-amd64.tar.gz -C flannel

cd /app/k8s/work

mkdir cni

wget https://github.com/containernetworking/plugins/releases/download/v0.9.1/cni-plugins-linux-amd64-v0.9.1.tgz

tar -xvf cni-plugins-linux-amd64-v0.9.1.tgz -C cni

Distribute the flanneld binaries to all nodes in WORK_IPS:

cd /app/k8s/work

source /app/k8s/bin/environment.sh

for node_ip in ${WORK_IPS[@]}

do

echo ">>> ${node_ip}"

scp flannel/{flanneld,mk-docker-opts.sh} root@${node_ip}:/app/k8s/bin/

ssh root@${node_ip} "chmod +x /app/k8s/bin/*"

done

Distribute the CNI plugin binaries to the worker nodes (WORK_IPS):

cd /app/k8s/work

source /app/k8s/bin/environment.sh

for node_ip in ${WORK_IPS[@]}

do

echo ">>> ${node_ip}"

ssh root@${node_ip} "mkdir -p /app/k8s/bin/cni"

scp cni/* root@${node_ip}:/app/k8s/bin/cni

ssh root@${node_ip} "chmod +x /app/k8s/bin/cni/*"

done

Create and distribute the flannel configuration file

- Create the configuration file

cd /app/k8s/work

source /app/k8s/bin/environment.sh

cat > net-conf.json <<EOF

{

"Network": "172.1.0.0/16",

"Backend": {

"Type": "vxlan",

"DirectRouting": true

}

}

EOF

- Distribute the configuration file

cd /app/k8s/work

source /app/k8s/bin/environment.sh

for node_ip in ${WORK_IPS[@]}

do

echo ">>> ${node_ip}"

ssh root@${node_ip} "mkdir -p /etc/kube-flannel/"

scp net-conf.json root@${node_ip}:/etc/kube-flannel/

done

Create and distribute the CNI network plugin configuration file

- Create the configuration file

cd /app/k8s/work

source /app/k8s/bin/environment.sh

cat > 10-flannel.conflist <<EOF

{

"name": "cbr0",

"cniVersion": "0.3.1",

"plugins": [

{

"type": "flannel",

"delegate": {

"hairpinMode": true,

"isDefaultGateway": true

}

},

{

"type": "portmap",

"capabilities": {

"portMappings": true

}

}

]

}

EOF

- Distribute the configuration file

cd /app/k8s/work

source /app/k8s/bin/environment.sh

for node_ip in ${WORK_IPS[@]}

do

echo ">>> ${node_ip}"

ssh root@${node_ip} "mkdir -p /etc/cni/net.d/"

scp 10-flannel.conflist root@${node_ip}:/etc/cni/net.d/

done

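Configure the flannel kubeconfig

First create the RBAC objects (a ClusterRole, a ClusterRoleBinding and the ServiceAccount flannel in kube-system) whose token the flannel kubeconfig will use: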

cat <<EOF | kubectl apply -f -

kind: ClusterRole

apiVersion: rbac.authorization.k8s.io/v1

metadata:

name: flannel

rules:

- apiGroups: ['extensions']

resources: ['podsecuritypolicies']

verbs: ['use']

resourceNames: ['psp.flannel.unprivileged']

- apiGroups:

- ""

resources:

- pods

verbs:

- get

- apiGroups:

- ""

resources:

- nodes

verbs:

- list

- watch

- apiGroups:

- ""

resources:

- nodes/status

verbs:

- patch

---

kind: ClusterRoleBinding

apiVersion: rbac.authorization.k8s.io/v1

metadata:

name: flannel

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: flannel

subjects:

- kind: ServiceAccount

name: flannel

namespace: kube-system

---

apiVersion: v1

kind: ServiceAccount

metadata:

name: flannel

namespace: kube-system

EOF

Create flannel.conf

cd /app/k8s/work

source /app/k8s/bin/environment.sh

kubectl config set-cluster kubernetes --kubeconfig=flannel.conf --embed-certs --server=https://192.168.3.140:8443 --certificate-authority=/app/k8s/work/ca.pem

kubectl config set-credentials flannel --kubeconfig=flannel.conf --token=$(kubectl get sa -n kube-system flannel -o jsonpath={.secrets[0].name} | xargs kubectl get secret -n kube-system -o jsonpath={.data.token} | base64 -d)

kubectl config set-context kubernetes --kubeconfig=flannel.conf --user=flannel --cluster=kubernetes

kubectl config use-context kubernetes --kubeconfig=flannel.conf

Distribute flannel.conf

cd /app/k8s/work

source /app/k8s/bin/environment.sh

for node_ip in ${WORK_IPS[@]}

do

echo ">>> ${node_ip}"

scp flannel.conf root@${node_ip}:/etc/kubernetes/

done

Create the flanneld systemd unit file

cd /app/k8s/work

source /app/k8s/bin/environment.sh

cat > flanneld.service.template <<EOF

[Unit]

Description=Flanneld

After=network.target

After=network-online.target

Wants=network-online.target

After=etcd.service

[Service]

Type=notify

Environment=NODE_NAME=##NODE_IP##

ExecStart=/app/k8s/bin/flanneld \\

--iface=ens192 \\

--ip-masq \\

--kube-subnet-mgr=true \\

--kubeconfig-file=/etc/kubernetes/flannel.conf

Restart=always

RestartSec=5

StartLimitInterval=0

[Install]

WantedBy=multi-user.target

EOF

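- The mk-docker-opts.sh script writes the Pod subnet assigned to flanneld into the /app/flannel/docker file; when docker starts later, it uses the environment variables in this file to configure the docker0 bridge;

- flanneld communicates with other nodes through the interface of the system default route; on nodes with several network interfaces (e.g. internal and public), use the --iface parameter to choose the interface;

- flanneld needs root privileges to run;

- --ip-masq: flanneld sets up SNAT rules for traffic leaving the Pod network, and sets the --ip-masq variable it passes to Docker (in the /app/flannel/docker file) to false, so Docker no longer creates its own SNAT rules;

When Docker's --ip-masq is true, the SNAT rule it creates is rather blunt: it SNATs every request from local Pods that goes to a non-docker0 interface, so requests to Pods on other nodes get the IP of the flannel.1 interface as their source, and the destination Pod cannot see the real source Pod IP.

The SNAT rules flanneld creates are gentler: only requests to destinations outside the Pod network are SNATed.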
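The difference between the two behaviours comes down to a CIDR membership test on the destination. The sketch below models only the flanneld rule as described above (SNAT when the destination is outside the Pod network); it is a simplification for illustration, not flanneld's actual iptables rule set, and ip_to_int, in_cidr and would_snat are hypothetical helpers.

```shell
#!/usr/bin/env bash
# Convert a dotted IPv4 address to a 32-bit integer.
ip_to_int() {
  local IFS=. a b c d
  read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# in_cidr IP NET/LEN: succeed if IP lies inside NET/LEN.
in_cidr() {
  local ip net len mask
  ip=$(ip_to_int "$1")
  net=$(ip_to_int "${2%/*}")
  len=${2#*/}
  mask=$(( len == 0 ? 0 : (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
  (( (ip & mask) == (net & mask) ))
}

# would_snat DST POD_CIDR: simplified model of the flanneld rule,
# i.e. masquerade only when the destination is outside the Pod network.
would_snat() {
  ! in_cidr "$1" "$2"
}

# Example with CLUSTER_CIDR=172.1.0.0/16:
#   would_snat 172.1.30.5 172.1.0.0/16 -> fails (Pod-to-Pod keeps its source IP)
#   would_snat 8.8.8.8    172.1.0.0/16 -> succeeds (traffic leaving the Pod network is SNATed)
```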

Substitute variables

Substitute the variables in the template to generate a systemd unit file for each node:

cd /app/k8s/work

source /app/k8s/bin/environment.sh

for (( i=0; i < ${#WORK_IPS[@]}; i++ ))

do

sed -e "s/##NODE_IP##/${WORK_IPS[i]}/" flanneld.service.template > flanneld-${WORK_IPS[i]}.service

done

ls flanneld-*.service

Distribute the generated systemd unit files:

cd /app/k8s/work

source /app/k8s/bin/environment.sh

for node_ip in ${WORK_IPS[@]}

do

echo ">>> ${node_ip}"

scp flanneld-${node_ip}.service root@${node_ip}:/etc/systemd/system/flanneld.service

done

- The file is renamed to flanneld.service;

Start the flanneld service

source /app/k8s/bin/environment.sh

for node_ip in ${WORK_IPS[@]}

do

echo ">>> ${node_ip}"

ssh root@${node_ip} "systemctl daemon-reload && systemctl enable flanneld && systemctl restart flanneld"

done

Check the startup result

source /app/k8s/bin/environment.sh

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh root@${node_ip} "systemctl status flanneld|grep Active"

done

Make sure the state is active (running); otherwise check the logs to find the cause:

journalctl -u flanneld

Write the cluster Pod network configuration into etcd

source /app/k8s/bin/environment.sh

ETCDCTL_API=2 etcdctl \

--endpoints=${ETCD_ENDPOINTS} \

--ca-file=/etc/kubernetes/cert/ca.pem \

--cert-file=/etc/flanneld/cert/flanneld.pem \

--key-file=/etc/flanneld/cert/flanneld-key.pem \

set ${FLANNEL_ETCD_PREFIX}/config '{"Network":"'${CLUSTER_CIDR}'", "SubnetLen": 24, "Backend": {"Type": "vxlan"}}'
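SubnetLen trades off the number of node subnets against their size. Both values follow from simple powers of two. The bash sketch below computes them for CLUSTER_CIDR=172.1.0.0/16, the Network value shown in the output below. It also rebuilds the JSON document the etcdctl command writes. The function names are hypothetical helpers, not flannel tooling.

```shell
#!/usr/bin/env bash
# Sketch: how Network and SubnetLen in the flannel config determine the
# number of per-node subnets and the size of each one.

CLUSTER_CIDR=172.1.0.0/16   # assumed to match environment.sh

# Number of /SubnetLen blocks that fit into the Network prefix.
subnet_count() {
  local cidr=$1 subnet_len=$2
  local prefix=${cidr#*/}
  echo $(( 1 << (subnet_len - prefix) ))
}

# Total addresses in one node subnet (before reserving network,
# gateway and broadcast addresses).
addresses_per_subnet() {
  echo $(( 1 << (32 - $1) ))
}

# Build the same JSON value that the etcdctl "set" command stores.
flannel_config() {
  printf '{"Network":"%s", "SubnetLen": %d, "Backend": {"Type": "vxlan"}}\n' \
    "$CLUSTER_CIDR" "$1"
}
```

With SubnetLen 24 the /16 splits into 256 node subnets of 256 addresses each. With SubnetLen 21 you would get only 32 subnets, but each would hold 2048 addresses.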

Check the Pod subnets allocated to each flanneld

View the cluster Pod network (/16):

source /app/k8s/bin/environment.sh

ETCDCTL_API=2 etcdctl \

--endpoints=${ETCD_ENDPOINTS} \

--ca-file=/etc/kubernetes/cert/ca.pem \

--cert-file=/etc/flanneld/cert/flanneld.pem \

--key-file=/etc/flanneld/cert/flanneld-key.pem \

get ${FLANNEL_ETCD_PREFIX}/config

Output:

{"Network":"172.1.0.0/16", "SubnetLen": 24, "Backend": {"Type": "vxlan"}}

List the allocated Pod subnets (/24):

source /app/k8s/bin/environment.sh

ETCDCTL_API=2 etcdctl \

--endpoints=${ETCD_ENDPOINTS} \

--ca-file=/etc/kubernetes/cert/ca.pem \

--cert-file=/etc/flanneld/cert/flanneld.pem \

--key-file=/etc/flanneld/cert/flanneld-key.pem \

ls ${FLANNEL_ETCD_PREFIX}/subnets

Output (depends on your deployment):

/kubernetes/network/subnets/172.1.99.0-24

/kubernetes/network/subnets/172.1.30.0-24

/kubernetes/network/subnets/172.1.14.0-24

/kubernetes/network/subnets/172.1.38.0-24

/kubernetes/network/subnets/172.1.12.0-24

/kubernetes/network/subnets/172.1.52.0-24

/kubernetes/network/subnets/172.1.2.0-24

/kubernetes/network/subnets/172.1.24.0-24
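Each lease key encodes its subnet with a dash in place of the slash. The small bash sketch below converts such keys back to CIDR notation using only parameter expansion. `key_to_cidr` and `keys_to_cidrs` are hypothetical helper names.

```shell
#!/usr/bin/env bash
# Sketch: turn a flannel lease key such as
# /kubernetes/network/subnets/172.1.99.0-24 into 172.1.99.0/24.

key_to_cidr() {
  local name=${1##*/}            # strip the prefix -> 172.1.99.0-24
  echo "${name%-*}/${name##*-}"  # "172.1.99.0" + "/" + "24"
}

# Convert a listing read from stdin (one key per line), skipping blanks.
keys_to_cidrs() {
  local key
  while IFS= read -r key; do
    if [[ -n $key ]]; then
      key_to_cidr "$key"
    fi
  done
}
```

Piping the output of the `ls ${FLANNEL_ETCD_PREFIX}/subnets` command above into `keys_to_cidrs` would print one CIDR per line.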

View the node IP and flannel interface address for a given Pod subnet:

source /app/k8s/bin/environment.sh

ETCDCTL_API=2 etcdctl \

--endpoints=${ETCD_ENDPOINTS} \

--ca-file=/etc/kubernetes/cert/ca.pem \

--cert-file=/etc/flanneld/cert/flanneld.pem \

--key-file=/etc/flanneld/cert/flanneld-key.pem \

get ${FLANNEL_ETCD_PREFIX}/subnets/172.1.99.0-24

Output (depends on your deployment):

{"PublicIP":"192.168.3.143","BackendType":"vxlan","BackendData":{"VNI":1,"VtepMAC":"f6:89:30:ef:45:04"}}

- 172.1.99.0/24 is allocated to node ka-3 (192.168.3.143);

- VtepMAC is the MAC address of the flannel.1 interface on node ka-3;
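To read these two fields in a script without jq, a sed expression is enough for the compact JSON shape shown above. This is a sketch under that assumption. `lease_field` is a hypothetical helper, and the sed pattern would need adjusting if the JSON were pretty-printed.

```shell
#!/usr/bin/env bash
# Sketch: extract a string field (e.g. PublicIP, VtepMAC) from a flannel
# lease value. Assumes compact JSON with "key":"value" pairs on one line.

# $1 = field name; the lease JSON is read from stdin.
lease_field() {
  sed -n "s/.*\"$1\":\"\([^\"]*\)\".*/\1/p"
}

lease='{"PublicIP":"192.168.3.143","BackendType":"vxlan","BackendData":{"VNI":1,"VtepMAC":"f6:89:30:ef:45:04"}}'
```

Here `lease_field PublicIP <<< "$lease"` gives the node address and `lease_field VtepMAC <<< "$lease"` gives the flannel.1 MAC.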

Check the flannel network information on a node

[root@ka-3 ~]# ip add

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

2: ens192: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000

link/ether 00:50:56:80:99:bd brd ff:ff:ff:ff:ff:ff

inet 192.168.3.143/24 brd 192.168.3.255 scope global noprefixroute ens192

valid_lft forever preferred_lft forever

3: flannel.1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UNKNOWN group default

link/ether f6:89:30:ef:45:04 brd ff:ff:ff:ff:ff:ff

inet 172.1.99.0/32 brd 172.1.99.0 scope global flannel.1

valid_lft forever preferred_lft forever

- The address of the flannel.1 interface is the first IP (.0) of the allocated Pod subnet, with a /32 prefix;
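The ".0" address is the subnet's network address: the IP with all host bits cleared. The bash sketch below computes it from any address inside the lease. The helper names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: compute the network address of IP/prefix, which is the address
# flanneld puts on flannel.1 (as a /32) for the node's lease.

ip_to_int() {
  local IFS=.
  local a b c d
  read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

int_to_ip() {
  local n=$1
  echo "$(( (n >> 24) & 255 )).$(( (n >> 16) & 255 )).$(( (n >> 8) & 255 )).$(( n & 255 ))"
}

# network_address 172.1.99.37/24 prints 172.1.99.0
network_address() {
  local ip=${1%/*} prefix=${1#*/}
  local mask=$(( prefix == 0 ? 0 : (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  int_to_ip $(( $(ip_to_int "$ip") & mask ))
}
```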

[root@ka-3 ~]# ip route show |grep flannel.1

172.1.2.0/24 via 172.1.2.0 dev flannel.1 onlink

172.1.12.0/24 via 172.1.12.0 dev flannel.1 onlink

172.1.14.0/24 via 172.1.14.0 dev flannel.1 onlink

172.1.24.0/24 via 172.1.24.0 dev flannel.1 onlink

172.1.30.0/24 via 172.1.30.0 dev flannel.1 onlink

172.1.38.0/24 via 172.1.38.0 dev flannel.1 onlink

172.1.52.0/24 via 172.1.52.0 dev flannel.1 onlink

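- All requests to Pod subnets on other nodes are forwarded to the flannel.1 interface;

- flanneld uses the subnet information in etcd, such as ${FLANNEL_ETCD_PREFIX}/subnets/172.1.99.0-24, to decide which node's interconnect IP a request is sent to;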
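The forwarding decision can be pictured as a table lookup from a Pod subnet to the node that owns it. The bash sketch below models that lookup with an associative array. The array is filled with the per-node flannel.1 addresses listed later in this section. It assumes every lease is a /24, as in this cluster. `LEASE_OWNER` and `owner_of` are hypothetical names; in practice the kernel routes and flanneld's lease store do this work.

```shell
#!/usr/bin/env bash
# Sketch of the forwarding decision: map a destination Pod IP to the node
# (PublicIP) owning its /24 lease. Requires bash 4+ (associative arrays).
# In a live cluster flanneld keeps this mapping in its lease store.

declare -A LEASE_OWNER=(
  [172.1.2.0]=192.168.3.141
  [172.1.24.0]=192.168.3.142
  [172.1.99.0]=192.168.3.143
  [172.1.12.0]=192.168.3.144
  [172.1.52.0]=192.168.3.145
  [172.1.30.0]=192.168.3.146
  [172.1.14.0]=192.168.3.147
  [172.1.38.0]=192.168.3.148
)

# Assumes /24 leases: the subnet key is the first three octets plus .0.
owner_of() {
  local subnet=${1%.*}.0   # 172.1.99.5 -> 172.1.99.0
  if [[ -n ${LEASE_OWNER[$subnet]:-} ]]; then
    echo "${LEASE_OWNER[$subnet]}"
  else
    echo "no lease for $1" >&2
    return 1
  fi
}
```

For instance, a packet to Pod 172.1.99.5 would be encapsulated and sent to 192.168.3.143, the node shown holding the 172.1.99.0-24 lease.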

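Verify that all nodes can reach each other over the Pod network

After deploying flannel on each node, check that a flannel interface has been created (the name may be flannel0, flannel.0, flannel.1, etc.):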

source /app/k8s/bin/environment.sh

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh ${node_ip} "/usr/sbin/ip addr show flannel.1|grep -w inet"

done

Output:

>>> 192.168.3.141

inet 172.1.2.0/32 brd 172.1.2.0 scope global flannel.1

>>> 192.168.3.142

inet 172.1.24.0/32 brd 172.1.24.0 scope global flannel.1

>>> 192.168.3.143

inet 172.1.99.0/32 brd 172.1.99.0 scope global flannel.1

>>> 192.168.3.144

inet 172.1.12.0/32 brd 172.1.12.0 scope global flannel.1

>>> 192.168.3.145

inet 172.1.52.0/32 brd 172.1.52.0 scope global flannel.1

>>> 192.168.3.146

inet 172.1.30.0/32 brd 172.1.30.0 scope global flannel.1

>>> 192.168.3.147

inet 172.1.14.0/32 brd 172.1.14.0 scope global flannel.1

>>> 192.168.3.148

inet 172.1.38.0/32 brd 172.1.38.0 scope global flannel.1

On each node, ping every flannel interface IP to make sure it is reachable:

source /app/k8s/bin/environment.sh

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh ${node_ip} "ping -c 1 172.1.2.0"

ssh ${node_ip} "ping -c 1 172.1.24.0"

ssh ${node_ip} "ping -c 1 172.1.99.0"

done
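The loop above pings only three of the eight flannel.1 addresses. A more complete check first collects every address from the `ip addr show flannel.1` output gathered earlier. The bash sketch below splits this into a parsing function that can be tested offline and a pinging function that needs the real network. Both function names are hypothetical, and `ping -W` is taken in seconds as in Linux iputils.

```shell
#!/usr/bin/env bash
# Sketch: collect all flannel.1 addresses instead of hard-coding three,
# then ping each one.

# Read "inet A.B.C.D/32 ..." lines on stdin; print A.B.C.D, one per line.
flannel_ips() {
  awk '$1 == "inet" { split($2, a, "/"); print a[1] }'
}

# Ping each address once; report unreachable ones and return non-zero
# if any failed. Needs network access, so it is not exercised offline.
ping_all() {
  local ip rc=0
  for ip in "$@"; do
    ping -c 1 -W 2 "$ip" > /dev/null 2>&1 || { echo "unreachable: $ip"; rc=1; }
  done
  return "$rc"
}
```

On a node, the output of the earlier `ip addr show flannel.1` loop could be piped through `flannel_ips` and the resulting list passed to `ping_all`.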

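06-0 kube-apiserver high availability with an nginx proxy

This document describes how to use nginx's layer-4 transparent proxying so that K8S nodes (master and worker nodes) can reach kube-apiserver in a highly available way.

Note: unless stated otherwise, all operations in this document are performed on the k8master-1 node, which then distributes files and runs commands remotely.

kube-apiserver high-availability design based on an nginx proxy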

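- kube-controller-manager and kube-scheduler on the control-plane nodes run as multiple instances, so they stay available as long as one instance is healthy;

- Pods inside the cluster reach kube-apiserver through the K8S service name kubernetes. Its ClusterIP is load-balanced by kube-proxy across the endpoints of all kube-apiserver instances, so this path is also highly available;

- An nginx process runs on each keepalived node (ka-1 to ka-3) with multiple apiserver instances as its backends. nginx takes failed backends out of rotation (max_fails/fail_timeout) and load-balances across the rest;

- kubelet, kube-proxy, controller-manager and scheduler reach kube-apiserver through nginx on the keepalived VIP (listening on 192.168.3.140:8443), which makes kube-apiserver highly available;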

Install keepalived

On KA-1, KA-2 and KA-3:

yum install keepalived -y

Configure keepalived on ka-1:

cat > /etc/keepalived/keepalived.conf << EOF

global_defs {

notification_email {

[email protected]

[email protected]

[email protected]

}

notification_email_from [email protected]

smtp_server 127.0.0.1

smtp_connect_timeout 30

router_id LVS_DEVEL

vrrp_skip_check_adv_addr

vrrp_garp_interval 0

vrrp_gna_interval 0

}

vrrp_instance VI_1 {

state BACKUP

interface ens192

virtual_router_id 51

priority 100

advert_int 1

nopreempt

unicast_src_ip 192.168.3.141

unicast_peer {

192.168.3.142

192.168.3.143

}

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.3.140/24

}

}

EOF

Configure keepalived on ka-2:

cat > /etc/keepalived/keepalived.conf << EOF

global_defs {

notification_email {

[email protected]

[email protected]

[email protected]

}

notification_email_from [email protected]

smtp_server 127.0.0.1

smtp_connect_timeout 30

router_id LVS_DEVEL

vrrp_skip_check_adv_addr

vrrp_garp_interval 0

vrrp_gna_interval 0

}

vrrp_instance VI_1 {

state BACKUP

interface ens192

virtual_router_id 51

priority 90

advert_int 1

nopreempt

unicast_src_ip 192.168.3.142

unicast_peer {

192.168.3.141

192.168.3.143

}

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.3.140/24

}

}

EOF

Configure keepalived on ka-3:

cat > /etc/keepalived/keepalived.conf << EOF

global_defs {

notification_email {

[email protected]

[email protected]

[email protected]

}

notification_email_from [email protected]

smtp_server 127.0.0.1

smtp_connect_timeout 30

router_id LVS_DEVEL

vrrp_skip_check_adv_addr

vrrp_garp_interval 0

vrrp_gna_interval 0

}

vrrp_instance VI_1 {

state BACKUP

interface ens192

virtual_router_id 51

priority 60

advert_int 1

nopreempt

unicast_src_ip 192.168.3.143

unicast_peer {

192.168.3.141

192.168.3.142

}

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.3.140/24

}

}

EOF
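The three configurations above differ only in priority, unicast_src_ip and the unicast_peer list. Generating them from one template keeps the peer lists consistent. The bash sketch below renders the vrrp_instance block for one node. global_defs is omitted, and `KA_NODES`, `KA_PRIORITIES` and `render_vrrp` are hypothetical names whose values are copied from the configs above.

```shell
#!/usr/bin/env bash
# Sketch: render the vrrp_instance block of keepalived.conf for one node.
# global_defs is left out here for brevity.

KA_NODES=(192.168.3.141 192.168.3.142 192.168.3.143)  # ka-1, ka-2, ka-3
KA_PRIORITIES=(100 90 60)                              # same order as KA_NODES
VIP=192.168.3.140/24

# $1 = index into KA_NODES (0 for ka-1, 1 for ka-2, 2 for ka-3)
render_vrrp() {
  local idx=$1 self=${KA_NODES[$1]} peer
  cat <<EOF
vrrp_instance VI_1 {
    state BACKUP
    interface ens192
    virtual_router_id 51
    priority ${KA_PRIORITIES[$idx]}
    advert_int 1
    nopreempt
    unicast_src_ip ${self}
    unicast_peer {
EOF
  # Every KA node except this one is a unicast peer.
  for peer in "${KA_NODES[@]}"; do
    if [[ $peer != "$self" ]]; then
      echo "        ${peer}"
    fi
  done
  cat <<EOF
    }
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        ${VIP}
    }
}
EOF
}
```

For example, `render_vrrp 1` prints the block for ka-2 (priority 90, peers .141 and .143). However the files are produced, keepalived still has to be started on each KA node, for example with `systemctl enable keepalived && systemctl restart keepalived`.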

Download and build nginx

Download the source:

cd /app/k8s/work

wget http://nginx.org/download/nginx-1.15.3.tar.gz

tar -xzvf nginx-1.15.3.tar.gz

Install the build toolchain:

yum -y install gcc gcc-c++ autoconf automake make

Configure the build options:

cd /app/k8s/work/nginx-1.15.3

mkdir nginx-prefix

./configure --with-stream --without-http --prefix=$(pwd)/nginx-prefix --without-http_uwsgi_module --without-http_scgi_module --without-http_fastcgi_module

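- --with-stream: enables layer-4 transparent forwarding (TCP proxy);

- --without-xxx: disables all other features, so the resulting dynamically linked binary has minimal dependencies;

Output: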

Configuration summary

+ PCRE library is not used

+ OpenSSL library is not used

+ zlib library is not used

nginx path prefix: "/root/tmp/nginx-1.15.3/nginx-prefix"

nginx binary file: "/root/tmp/nginx-1.15.3/nginx-prefix/sbin/nginx"

nginx modules path: "/root/tmp/nginx-1.15.3/nginx-prefix/modules"

nginx configuration prefix: "/root/tmp/nginx-1.15.3/nginx-prefix/conf"

nginx configuration file: "/root/tmp/nginx-1.15.3/nginx-prefix/conf/nginx.conf"

nginx pid file: "/root/tmp/nginx-1.15.3/nginx-prefix/logs/nginx.pid"

nginx error log file: "/root/tmp/nginx-1.15.3/nginx-prefix/logs/error.log"

nginx http access log file: "/root/tmp/nginx-1.15.3/nginx-prefix/logs/access.log"

nginx http client request body temporary files: "client_body_temp"

nginx http proxy temporary files: "proxy_temp"

Build and install:

cd /app/k8s/work/nginx-1.15.3

make && make install

Verify the built nginx

cd /app/k8s/work/nginx-1.15.3

./nginx-prefix/sbin/nginx -v

Output:

nginx version: nginx/1.15.3

List the libraries nginx is dynamically linked against:

$ ldd ./nginx-prefix/sbin/nginx

Output:

linux-vdso.so.1 => (0x00007ffc945e7000)

libdl.so.2 => /lib64/libdl.so.2 (0x00007f4385072000)

libpthread.so.0 => /lib64/libpthread.so.0 (0x00007f4384e56000)

libc.so.6 => /lib64/libc.so.6 (0x00007f4384a89000)

/lib64/ld-linux-x86-64.so.2 (0x00007f4385276000)

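- Because only layer-4 transparent forwarding is enabled, the binary depends on nothing beyond core OS libraries such as libc (no libz, libssl, etc.), which makes it easy to deploy on different OS versions;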
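To check this property automatically, for example after rebuilding with different options, you can filter the `ldd` output against an allow-list. In the bash sketch below, the allow-list is simply the set of libraries shown above. That list is an assumption for this build, not an nginx requirement, and `unexpected_libs` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Sketch: flag any shared library outside an allow-list of core glibc
# libraries. The allow-list mirrors the ldd output shown above.

ALLOWED='^(linux-vdso\.so\.1|libdl\.so\.2|libpthread\.so\.0|libc\.so\.6|/lib64/ld-linux-x86-64\.so\.2)$'

# Read ldd output on stdin; print each library name not in the allow-list.
unexpected_libs() {
  awk '{ print $1 }' | grep -Ev "$ALLOWED" || true
}
```

Running `ldd ./nginx-prefix/sbin/nginx | unexpected_libs` should print nothing for the build above. A line such as `libssl.so.10` would indicate that an extra module slipped in.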

Install and deploy nginx

Create the directory structure:

cd /app/k8s/work

source /app/k8s/bin/environment.sh

for node_ip in ${KA_IPS[@]}

do

echo ">>> ${node_ip}"

ssh root@${node_ip} "mkdir -p /app/k8s/kube-nginx/{conf,logs,sbin}"

done

Copy the binary:

cd /app/k8s/work

source /app/k8s/bin/environment.sh

for node_ip in ${KA_IPS[@]}

do

echo ">>> ${node_ip}"

ssh root@${node_ip} "mkdir -p /app/k8s/kube-nginx/{conf,logs,sbin}"

scp /app/k8s/work/nginx-1.15.3/nginx-prefix/sbin/nginx root@${node_ip}:/app/k8s/kube-nginx/sbin/kube-nginx

ssh root@${node_ip} "chmod a+x /app/k8s/kube-nginx/sbin/*"

done

- The binary is renamed to kube-nginx;

Configure nginx to enable layer-4 transparent forwarding:

cd /app/k8s/work

cat > kube-nginx.conf << \EOF

worker_processes 1;

events {

worker_connections 1024;

}

stream {

upstream backend {

hash $remote_addr consistent;

server 192.168.3.144:6443 max_fails=3 fail_timeout=30s;

server 192.168.3.145:6443 max_fails=3 fail_timeout=30s;

server 192.168.3.146:6443 max_fails=3 fail_timeout=30s;

}

server {

listen 192.168.3.140:8443;

proxy_connect_timeout 1s;

proxy_pass backend;

}

}

EOF

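- Replace the server list in backend to match the actual kube-apiserver instances of your cluster;

- nginx listens on the VIP 192.168.3.140, which keepalived assigns to only one KA node at a time. On the other KA nodes the bind fails unless net.ipv4.ip_nonlocal_bind=1 is set (or nginx listens on 0.0.0.0:8443 instead);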
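One way to keep the backend list in sync with the cluster is to generate the `server` lines from the same array used elsewhere for the apiserver nodes. The bash sketch below assumes that API_IPS holds the kube-apiserver IPs, as the distribution loops in 06-2 imply. The three addresses are copied from the config above, and `upstream_servers` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Sketch: generate the upstream "server" lines for kube-nginx.conf from
# an array of kube-apiserver addresses (assumed to mirror API_IPS).

API_IPS=(192.168.3.144 192.168.3.145 192.168.3.146)

# Print one nginx stream "server" directive per apiserver address.
upstream_servers() {
  local ip
  for ip in "$@"; do
    printf '        server %s:6443 max_fails=3 fail_timeout=30s;\n' "$ip"
  done
}

upstream_servers "${API_IPS[@]}"
```

You would paste the printed lines into the `upstream backend { ... }` block, or splice them into the heredoc with `$(upstream_servers "${API_IPS[@]}")`.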

Distribute the configuration file:

cd /app/k8s/work

source /app/k8s/bin/environment.sh

for node_ip in ${KA_IPS[@]}

do

echo ">>> ${node_ip}"

scp kube-nginx.conf root@${node_ip}:/app/k8s/kube-nginx/conf/kube-nginx.conf

done

Configure the systemd unit file and start the service

Configure the kube-nginx systemd unit file:

cd /app/k8s/work

cat > kube-nginx.service <<EOF

[Unit]

Description=kube-apiserver nginx proxy

After=network.target

After=network-online.target

Wants=network-online.target

[Service]

Type=forking

ExecStartPre=/app/k8s/kube-nginx/sbin/kube-nginx -c /app/k8s/kube-nginx/conf/kube-nginx.conf -p /app/k8s/kube-nginx -t

ExecStart=/app/k8s/kube-nginx/sbin/kube-nginx -c /app/k8s/kube-nginx/conf/kube-nginx.conf -p /app/k8s/kube-nginx

ExecReload=/app/k8s/kube-nginx/sbin/kube-nginx -c /app/k8s/kube-nginx/conf/kube-nginx.conf -p /app/k8s/kube-nginx -s reload

PrivateTmp=true

Restart=always

RestartSec=5

StartLimitInterval=0

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

EOF

Distribute the systemd unit file:

cd /app/k8s/work

source /app/k8s/bin/environment.sh

for node_ip in ${KA_IPS[@]}

do

echo ">>> ${node_ip}"

scp kube-nginx.service root@${node_ip}:/etc/systemd/system/

done

Start the kube-nginx service:

cd /app/k8s/work

source /app/k8s/bin/environment.sh

for node_ip in ${KA_IPS[@]}

do

echo ">>> ${node_ip}"

ssh root@${node_ip} "systemctl daemon-reload && systemctl enable kube-nginx && systemctl restart kube-nginx"

done

Check the kube-nginx service status

cd /app/k8s/work

source /app/k8s/bin/environment.sh

for node_ip in ${KA_IPS[@]}

do

echo ">>> ${node_ip}"

ssh root@${node_ip} "systemctl status kube-nginx |grep 'Active:'"

done

Make sure the state is active (running); otherwise check the logs to find the cause:

journalctl -u kube-nginx


06-1. Deploy the master nodes

The kubernetes master nodes run the following components:

- kube-apiserver

- kube-scheduler

- kube-controller-manager

- kube-nginx

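kube-apiserver, kube-scheduler and kube-controller-manager all run in multi-instance mode:

- kube-scheduler and kube-controller-manager automatically elect a leader instance while the other instances stay blocked. When the leader fails, a new leader is elected, which keeps the service available;

- kube-apiserver is stateless and is accessed through the kube-nginx proxy, which keeps the service available;

Note: unless stated otherwise, all operations in this document are performed on the k8master-1 node, which then distributes files and runs commands remotely.

Install and configure kube-nginx

See 06-0.apiserver高可用之nginx代理.md

Download the latest binaries

Download the binary tar file from the CHANGELOG page and extract it: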

cd /app/k8s/work

wget https://dl.k8s.io/v1.22.1/kubernetes-server-linux-amd64.tar.gz

tar -xzvf kubernetes-server-linux-amd64.tar.gz

cd kubernetes

tar -xzvf kubernetes-src.tar.gz

Copy the binaries to all master nodes:

cd /app/k8s/work

source /app/k8s/bin/environment.sh

for node_ip in ${ETCD_IPS[@]}

do

echo ">>> ${node_ip}"

scp kubernetes/server/bin/{apiextensions-apiserver,cloud-controller-manager,kube-apiserver,kube-controller-manager,kube-proxy,kube-scheduler,kubeadm,kubectl,kubelet,mounter} root@${node_ip}:/app/k8s/bin/

ssh root@${node_ip} "chmod +x /app/k8s/bin/*"

done


06-2. Deploy a highly available kube-apiserver cluster


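This document describes how to deploy a three-instance kube-apiserver cluster. The instances are accessed through the kube-nginx proxy, which keeps the service available.

Note: unless stated otherwise, all operations in this document are performed on the k8master-1 node, which then distributes files and runs commands remotely.

Preparation

To download the latest binaries, see 06-1.部署master节点.md. Installing and configuring flanneld is covered in 05.部署 flannel 网络.

Copy the generated certificates and private keys to all master nodes: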

cd /app/k8s/work

source /app/k8s/bin/environment.sh

for node_ip in ${API_IPS[@]}

do

echo ">>> ${node_ip}"

ssh root@${node_ip} "mkdir -p /etc/kubernetes/cert"

scp api*.pem root@${node_ip}:/etc/kubernetes/cert/

done

Create the encryption configuration file

cd /app/k8s/work

source /app/k8s/bin/environment.sh

cat > encryption-config.yaml <<EOF

kind: EncryptionConfig

apiVersion: v1

resources:

- resources:

- secrets

providers:

- aescbc:

keys:

- name: key1

secret: ${ENCRYPTION_KEY}

- identity: {}

EOF
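The template reads ${ENCRYPTION_KEY}, which is assumed to be defined in environment.sh. For the aescbc provider, the Kubernetes encryption-at-rest documentation suggests a random 32-byte key, base64-encoded. The bash sketch below shows one way to generate such a value and to check it before it goes into the config. The function names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: generate a value suitable for ENCRYPTION_KEY (32 random bytes,
# base64-encoded) and verify it decodes back to exactly 32 bytes.

gen_encryption_key() {
  head -c 32 /dev/urandom | base64
}

# Succeed only if $1 is base64 that decodes to exactly 32 bytes.
check_encryption_key() {
  local n
  n=$(printf '%s' "$1" | base64 -d 2>/dev/null | wc -c)
  [[ $n -eq 32 ]]
}

ENCRYPTION_KEY=$(gen_encryption_key)
```

The key must stay the same across all master nodes and across restarts. Otherwise kube-apiserver cannot decrypt Secrets that were written with the old key. Generate it once, store it in environment.sh, and reuse it.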

Copy the encryption configuration file to /etc/kubernetes on the master nodes:

cd /app/k8s/work

source /app/k8s/bin/environment.sh

for node_ip in ${API_IPS[@]}

do

echo ">>> ${node_ip}"

scp encryption-config.yaml root@${node_ip}:/etc/kubernetes/

done

Create the audit policy file

cd /app/k8s/work

source /app/k8s/bin/environment.sh

cat > audit-policy.yaml <<EOF

apiVersion: audit.k8s.io/v1

kind: Policy

rules:

# Don't log some high-volume, low-risk requests.

- level: None

users: ["system:kube-proxy"]

verbs: ["watch"]

resources:

- group: "" # core

resources: ["endpoints", "services", "services/status"]

- level: None

# Ingress controller reads 'configmaps/ingress-uid' through the unsecured port.

# TODO(#46983): Change this to the ingress controller service account.

users: ["system:unsecured"]

namespaces: ["kube-system"]

verbs: ["get"]

resources:

- group: "" # core

resources: ["configmaps"]

- level: None

users: ["kubelet"] # legacy kubelet identity

verbs: ["get"]

resources:

- group: "" # core

resources: ["nodes", "nodes/status"]

- level: None

userGroups: ["system:nodes"]

verbs: ["get"]

resources:

- group: "" # core

resources: ["nodes", "nodes/status"]

- level: None

users:

- system:kube-controller-manager

- system:kube-scheduler

- system:serviceaccount:kube-system:endpoint-controller

verbs: ["get", "update"]

namespaces: ["kube-system"]

resources:

- group: "" # core

resources: ["endpoints"]

- level: None

users: ["system:apiserver"]

verbs: ["get"]

resources:

- group: "" # core

resources: ["namespaces", "namespaces/status", "namespaces/finalize"]

- level: None

users: ["cluster-autoscaler"]

verbs: ["get", "update"]

namespaces: ["kube-system"]

resources:

- group: "" # core

resources: ["configmaps", "endpoints"]

# Don't log HPA fetching metrics.

- level: None

users:

- system:kube-controller-manager

verbs: ["get", "list"]

resources:

- group: "metrics.k8s.io"

# Don't log these read-only URLs.

- level: None

nonResourceURLs:

- /healthz*

- /version

- /swagger*

# Don't log events requests because of performance impact.

- level: None

resources:

- group: "" # core

resources: ["events"]

# node and pod status calls from nodes are high-volume and can be large, don't log responses for expected updates from nodes

- level: Request

users: ["kubelet", "system:node-problem-detector", "system:serviceaccount:kube-system:node-problem-detector"]

verbs: ["update","patch"]

resources:

- group: "" # core

resources: ["nodes/status", "pods/status"]

omitStages:

- "RequestReceived"

- level: Request

userGroups: ["system:nodes"]

verbs: ["update","patch"]

resources:

- group: "" # core

resources: ["nodes/status", "pods/status"]

omitStages:

- "RequestReceived"

# deletecollection calls can be large, don't log responses for expected namespace deletions

- level: Request

users: ["system:serviceaccount:kube-system:namespace-controller"]

verbs: ["deletecollection"]

omitStages:

- "RequestReceived"

# Secrets, ConfigMaps, TokenRequest and TokenReviews can contain sensitive & binary data,

# so only log at the Metadata level.

- level: Metadata

resources:

- group: "" # core

resources: ["secrets", "configmaps", "serviceaccounts/token"]

- group: authentication.k8s.io

resources: ["tokenreviews"]

omitStages:

- "RequestReceived"

# Get responses can be large; skip them.

- level: Request

verbs: ["get", "list", "watch"]

resources:

- group: "" # core

- group: "admissionregistration.k8s.io"

- group: "apiextensions.k8s.io"

- group: "apiregistration.k8s.io"

- group: "apps"

- group: "authentication.k8s.io"

- group: "authorization.k8s.io"

- group: "autoscaling"

- group: "batch"

- group: "certificates.k8s.io"

- group: "extensions"

- group: "metrics.k8s.io"

- group: "networking.k8s.io"

- group: "node.k8s.io"

- group: "policy"

- group: "rbac.authorization.k8s.io"

- group: "scheduling.k8s.io"

- group: "storage.k8s.io"

omitStages:

- "RequestReceived"

# Default level for known APIs

- level: RequestResponse

resources:

- group: "" # core

- group: "admissionregistration.k8s.io"

- group: "apiextensions.k8s.io"

- group: "apiregistration.k8s.io"

- group: "apps"

- group: "authentication.k8s.io"

- group: "authorization.k8s.io"

- group: "autoscaling"

- group: "batch"

- group: "certificates.k8s.io"

- group: "extensions"

- group: "metrics.k8s.io"

- group: "networking.k8s.io"

- group: "node.k8s.io"

- group: "policy"

- group: "rbac.authorization.k8s.io"

- group: "scheduling.k8s.io"

- group: "storage.k8s.io"

omitStages:

- "RequestReceived"

# Default level for all other requests.

- level: Metadata

omitStages:

- "RequestReceived"

EOF

Distribute the audit policy file:

cd /app/k8s/work

source /app/k8s/bin/environment.sh

for node_ip in ${API_IPS[@]}

do

echo ">>> ${node_ip}"

scp audit-policy.yaml root@${node_ip}:/etc/kubernetes/audit-policy.yaml

done

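Create the token authentication file

When kubelet starts, it sends a CSR to apiserver. Here we create a token with limited permissions that kubelet uses to request a certificate from apiserver.

Each line of the file has the format: token,user,uid,"group1,group2,group3"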

echo "$(head -c 16 /dev/urandom | od -An -t x | tr -d ' '),kubelet-bootstrap,10001,\"system:bootstrappers\"" > /etc/kubernetes/token.csv
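Nested double quotes in that `echo` are easy to get wrong, so check the resulting line before distributing it. The bash sketch below builds the line with `printf` and validates it with a regular expression. The regex reflects how the line is built here (32 hex characters from 16 random bytes), not a format rule published by Kubernetes. `make_token_line` and `valid_token_line` are hypothetical helpers.

```shell
#!/usr/bin/env bash
# Sketch: build a token.csv line in the token,user,uid,"group" format
# and sanity-check it. The regex mirrors how the line is generated here.

make_token_line() {
  local token
  token=$(head -c 16 /dev/urandom | od -An -t x | tr -d ' \n')
  printf '%s,kubelet-bootstrap,10001,"system:bootstrappers"\n' "$token"
}

# Keep the regex in a variable so bash does not reinterpret the quotes.
TOKEN_LINE_RE='^[0-9a-f]{32},[^,]+,[0-9]+,"[^"]+"$'

valid_token_line() {
  [[ $1 =~ $TOKEN_LINE_RE ]]
}
```

For example, `make_token_line > /etc/kubernetes/token.csv` followed by `valid_token_line "$(cat /etc/kubernetes/token.csv)"` confirms the file before it is copied to the other masters.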

Distribute the token file:

cd /app/k8s/work

source /app/k8s/bin/environment.sh

for node_ip in ${API_IPS[@]}

do

echo ">>> ${node_ip}"

scp /etc/kubernetes/token.csv root@${node_ip}:/etc/kubernetes/

done

Create the kube-apiserver systemd unit template file

cd /app/k8s/work

source /app/k8s/bin/environment.sh

cat > kube-apiserver.service.template <<EOF

[Unit]

Description=Kubernetes API Server

Documentation=https://github.com/GoogleCloudPlatform/kubernetes

After=network.target

[Service]

ExecStart=/app/k8s/bin/kube-apiserver \\

--advertise-address=##NODE_IP## \\

--allow-privileged=true \\

--apiserver-count=3 \\

--audit-log-maxage=15 \\

--audit-log-maxsize=100 \\

--audit-log-maxbackup=5 \\

--audit-log-path=/var/log/kube-apiserver-audit.log \\

--audit-policy-file=/etc/kubernetes/audit-policy.yaml \\

--authorization-mode=Node,RBAC \\

--bind-address=##NODE_IP## \\

--client-ca-file=/etc/kubernetes/cert/ca.pem \\

--delete-collection-workers=2 \\

--enable-admission-plugins=NodeRestriction \\

--enable-bootstrap-token-auth \\

--token-auth-file=/etc/kubernetes/token.csv \\

--encryption-provider-config=/etc/kubernetes/encryption-config.yaml \\

--etcd-cafile=/etc/kubernetes/cert/ca.pem \\

--etcd-certfile=/etc/etcd/cert/etcd.pem \\

--etcd-keyfile=/etc/etcd/cert/etcd-key.pem \\

--etcd-servers=https://192.168.3.144:2379,https://192.168.3.145:2379,https://192.168.3.146:2379 \\

--event-ttl=72h \\

--feature-gates=EphemeralContainers=true \\

--kubelet-certificate-authority=/etc/kubernetes/cert/ca.pem \\

--kubelet-client-certificate=/etc/kubernetes/cert/apiserver.pem \\

--kubelet-client-key=/etc/kubernetes/cert/apiserver-key.pem \\

--logtostderr=false \\

--log-file=/var/log/kube-apiserver.log \\

--log-file-max-size=1 \\

--proxy-client-cert-file=/etc/kubernetes/cert/apiserver.pem \\

--proxy-client-key-file=/etc/kubernetes/cert/apiserver-key.pem \\

--requestheader-client-ca-file=/etc/kubernetes/cert/ca.pem \\

--requestheader-allowed-names="aggregator" \\

--requestheader-username-headers="X-Remote-User" \\

--requestheader-group-headers="X-Remote-Group" \\

--requestheader-extra-headers-prefix="X-Remote-Extra-" \\

--runtime-config=api/all=true \\

--secure-port=6443 \\

--service-account-issuer=https://kubernetes.default.svc.cluster.local \\

--service-account-key-file=/etc/kubernetes/cert/apiserver.pem \\

--service-account-signing-key-file=/etc/kubernetes/cert/apiserver-key.pem \\

--service-cluster-ip-range=10.254.0.0/16 \\

--service-node-port-range=30000-32767 \\

--tls-cert-file=/etc/kubernetes/cert/apiserver.pem \\

--tls-private-key-file=/etc/kubernetes/cert/apiserver-key.pem

Restart=on-failure

RestartSec=10

Type=notify

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

EOF

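Parameter reference:

- https://kubernetes.io/zh/docs/reference/command-line-tools-reference/kube-apiserver/

Create and distribute the kube-apiserver systemd unit file for each node

Substitute the variables in the template file to generate a systemd unit file for each node: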

cd /app/k8s/work

source /app/k8s/bin/environment.sh

for (( i=3; i < 7; i++ ))

do

sed -e "s/##NODE_NAME##/${NODE_NAMES[i]}/" -e "s/##NODE_IP##/${NODE_IPS[i]}/" kube-apiserver.service.template > kube-apiserver-${NODE_IPS[i]}.service

done

ls kube-apiserver*.service

- NODE_NAMES and NODE_IPS are bash arrays of the same length, holding the node names and the corresponding IPs;
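Because the sed loop pairs NODE_NAMES[i] with NODE_IPS[i] by index, a length mismatch silently produces wrong unit files. The bash sketch below adds a quick guard before rendering. The arrays are hypothetical stand-ins for those in environment.sh (only k8master-1 is named in this document), and `check_node_arrays` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Sketch: verify NODE_NAMES and NODE_IPS line up before rendering units,
# then print the name/IP pairs the sed loop will use.

NODE_NAMES=(k8master-1 k8master-2 k8master-3)               # hypothetical
NODE_IPS=(192.168.3.144 192.168.3.145 192.168.3.146)        # hypothetical

check_node_arrays() {
  if (( ${#NODE_NAMES[@]} != ${#NODE_IPS[@]} )); then
    echo "NODE_NAMES has ${#NODE_NAMES[@]} entries, NODE_IPS has ${#NODE_IPS[@]}" >&2
    return 1
  fi
  local i
  for i in "${!NODE_IPS[@]}"; do
    printf '%s %s\n' "${NODE_NAMES[i]}" "${NODE_IPS[i]}"
  done
}
```

Running `check_node_arrays || exit 1` at the top of the rendering script stops before any mismatched files are written.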

Distribute the generated systemd unit files:

cd /app/k8s/work

source /app/k8s/bin/environment.sh

for node_ip in ${API_IPS[@]}

do

echo ">>> ${node_ip}"

scp kube-apiserver-${node_ip}.service root@${node_ip}:/etc/systemd/system/kube-apiserver.service

done

- The file is renamed to kube-apiserver.service on the target node;

Start the kube-apiserver service

source /app/k8s/bin/environment.sh

for node_ip in ${API_IPS[@]}

do

echo ">>> ${node_ip}"

ssh root@${node_ip} "systemctl daemon-reload && systemctl enable kube-apiserver && systemctl restart kube-apiserver"

done

- The working directory must be created before the service is started;

Check the kube-apiserver status

source /app/k8s/bin/environment.sh

for node_ip in ${API_IPS[@]}

do

echo ">>> ${node_ip}"

ssh root@${node_ip} "systemctl status kube-apiserver |grep 'Active:'"

done

Make sure the state is active (running); otherwise check the logs to find the cause:

journalctl -u kube-apiserver

Print the data kube-apiserver has written to etcd

source /app/k8s/bin/environment.sh

ETCDCTL_API=3 etcdctl \

--endpoints=${ETCD_ENDPOINTS} \

--cacert=/app/k8s/work/ca.pem \

--cert=/app/k8s/work/etcd.pem \

--key=/app/k8s/work/etcd-key.pem \

get /registry/ --prefix --keys-only

Check the cluster information

$ kubectl cluster-info

Kubernetes master is running at https://192.168.3.140:8443

To further debug and diagnose cluster problems, use 'kubectl cluster-info dump'.

$ kubectl get all --all-namespaces

NAMESPACE NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

default service/kubernetes ClusterIP 10.254.0.1 <none> 443/TCP 12m

$ kubectl get componentstatuses

NAME STATUS MESSAGE ERROR

controller-manager Unhealthy Get http://127.0.0.1:10252/healthz: dial tcp 127.0.0.1:10252: connect: connection refused

scheduler Unhealthy Get http://127.0.0.1:10251/healthz: dial tcp 127.0.0.1:10251: connect: connection refused

etcd-0 Healthy {"health":"true"}

etcd-2 Healthy {"health":"true"}

etcd-1 Healthy {"health":"true"}

- If a kubectl command prints the following error, the ~/.kube/config file in use is wrong; switch to the correct account and run the command again: The connection to the server localhost:8080 was refused - did you specify the right host or port?

- When you run kubectl get componentstatuses, apiserver sends the health checks to 127.0.0.1 by default. When controller-manager and scheduler run in cluster mode, they may be on a different machine from that kube-apiserver. They are then reported as Unhealthy even though they actually work fine.

Check the ports kube-apiserver listens on

$ sudo netstat -lnpt|grep kube

tcp 0 0 192.168.3.144:6443 0.0.0.0:* LISTEN 101442/kube-apiserv

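- 6443: the secure port that accepts https requests; every request is authenticated and authorized;

- Because the insecure port is disabled, nothing listens on 8080;

Grant kube-apiserver access to the kubelet API

When running kubectl exec, run, logs and similar commands, apiserver forwards the request to kubelet's https port. The RBAC rule below grants the user name (CN: kubernetes) of the client certificate apiserver uses for kubelet (apiserver.pem) access to the kubelet API: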

kubectl create clusterrolebinding kube-apiserver:kubelet-apis --clusterrole=system:kubelet-api-admin --user kubernetes


06-3. Deploy a highly available kube-controller-manager cluster


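This document describes how to deploy a highly available kube-controller-manager cluster.

The cluster has 3 nodes. After startup, the nodes elect a leader through competitive election, and the other nodes stay blocked. When the leader becomes unavailable, the blocked nodes hold a new election to pick a new leader, which keeps the service available.

To secure communication, this document first generates an x509 certificate and private key. kube-controller-manager uses this certificate in the following two cases:

- communicating with kube-apiserver's secure port;

- serving Prometheus-format metrics on the secure port (https, 10257);

Note: unless stated otherwise, all operations in this document are performed on the k8master-1 node, which then distributes files and runs commands remotely.

Preparation

To download the latest binaries, see 06-1.部署master节点.md. Installing and configuring flanneld is covered in 05.部署 flannel 网络.

Distribute the generated certificates and private keys to all master nodes: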

cd /app/k8s/work

source /app/k8s/bin/environment.sh

for node_ip in ${CTL_IPS[@]}

do

echo ">>> ${node_ip}"

scp kube-controller-manager*.pem root@${node_ip}:/etc/kubernetes/cert/

done

Create and distribute the kubeconfig file

kube-controller-manager uses a kubeconfig file to access apiserver. The file contains the apiserver address, the embedded CA certificate and the kube-controller-manager certificate:

cd /app/k8s/work

source /app/k8s/bin/environment.sh

kubectl config set-cluster kubernetes \

--certificate-authority=/app/k8s/work/ca.pem \

--embed-certs=true \

--server=${KUBE_APISERVER} \

--kubeconfig=kube-controller-manager.kubeconfig

kubectl config set-credentials system:kube-controller-manager \

--client-certificate=kube-controller-manager.pem \

--client-key=kube-controller-manager-key.pem \

--embed-certs=true \

--kubeconfig=kube-controller-manager.kubeconfig

kubectl config set-context system:kube-controller-manager \

--cluster=kubernetes \

--user=system:kube-controller-manager \

--kubeconfig=kube-controller-manager.kubeconfig

kubectl config use-context system:kube-controller-manager --kubeconfig=kube-controller-manager.kubeconfig

Distribute the kubeconfig to all master nodes:

cd /app/k8s/work

source /app/k8s/bin/environment.sh

for node_ip in ${ETCD_IPS[@]}

do

echo ">>> ${node_ip}"

scp kube-controller-manager.kubeconfig root@${node_ip}:/etc/kubernetes/

done

Create the kube-controller-manager systemd unit template file

cd /app/k8s/work

source /app/k8s/bin/environment.sh

cat > kube-controller-manager.service.template <<EOF

[Unit]

Description=Kubernetes Controller Manager

Documentation=https://github.com/GoogleCloudPlatform/kubernetes

[Service]

ExecStart=/app/k8s/bin/kube-controller-manager \\

--allocate-node-cidrs=true \\

--cluster-cidr=172.1.0.0/16 \\

--service-cluster-ip-range=10.254.0.0/16 \\

--node-cidr-mask-size=24 \\

--authentication-kubeconfig=/etc/kubernetes/kube-controller-manager.kubeconfig \\

--authorization-kubeconfig=/etc/kubernetes/kube-controller-manager.kubeconfig \\

--bind-address=##NODE_IP## \\

--client-ca-file=/etc/kubernetes/cert/ca.pem \\

--cluster-name=kubernetes \\

--cluster-signing-cert-file=/etc/kubernetes/cert/ca.pem \\

--cluster-signing-key-file=/etc/kubernetes/cert/ca-key.pem \\

--cluster-signing-duration=87600h \\

--concurrent-deployment-syncs=10 \\

--controllers=*,bootstrapsigner,tokencleaner \\

--horizontal-pod-autoscaler-initial-readiness-delay=30s \\

--horizontal-pod-autoscaler-sync-period=10s \\

--kube-api-burst=100 \\

--kube-api-qps=100 \\

--kubeconfig=/etc/kubernetes/kube-controller-manager.kubeconfig \\

--leader-elect=true \\

--logtostderr=false \\

--log-file=/var/log/kube-controller-manager.log \\

--log-file-max-size=100 \\

--pod-eviction-timeout=1m \\

--root-ca-file=/etc/kubernetes/cert/ca.pem \\

--secure-port=10257 \\

--service-account-private-key-file=/etc/kubernetes/cert/apiserver-key.pem \\

--tls-cert-file=/etc/kubernetes/cert/kube-controller-manager.pem \\

--tls-private-key-file=/etc/kubernetes/cert/kube-controller-manager-key.pem \\

--use-service-account-credentials=true

Restart=on-failure

RestartSec=10

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

EOF

Create and distribute the kube-controller-manager systemd unit file for each node

Substitute the variables in the template file to create a systemd unit file for each node:

cd /app/k8s/work

source /app/k8s/bin/environment.sh

for (( i=3; i < 7; i++ ))

do

sed -e "s/##NODE_NAME##/${NODE_NAMES[i]}/" -e "s/##NODE_IP##/${NODE_IPS[i]}/" kube-controller-manager.service.template > kube-controller-manager-${NODE_IPS[i]}.service

done

ls kube-controller-manager*.service

- NODE_NAMES and NODE_IPS are bash arrays of the same length, holding the node names and the corresponding IPs;

Distribute to all master nodes:

cd /app/k8s/work

source /app/k8s/bin/environment.sh

for node_ip in ${CTL_IPS[@]}

do

echo ">>> ${node_ip}"

scp kube-controller-manager-${node_ip}.service root@${node_ip}:/etc/systemd/system/kube-controller-manager.service

done

- The file is renamed to kube-controller-manager.service on the target node;

Start the kube-controller-manager service

source /app/k8s/bin/environment.sh

for node_ip in ${CTL_IPS[@]}

do

echo ">>> ${node_ip}"

ssh root@${node_ip} "systemctl daemon-reload && systemctl enable kube-controller-manager && systemctl restart kube-controller-manager"

done

- The working directory must be created before the service is started;

Check the service status

source /app/k8s/bin/environment.sh

for node_ip in ${CTL_IPS[@]}

do

echo ">>> ${node_ip}"

ssh root@${node_ip} "systemctl status kube-controller-manager|grep Active"

done

Make sure the state is active (running); otherwise check the logs to find the cause:

journalctl -u kube-controller-manager

kube-controller-manager listens on port 10257 for https requests:

$ sudo netstat -lnpt | grep kube-cont

tcp 0 0 192.168.3.144:10257 0.0.0.0:* LISTEN 108977/kube-control

View the exported metrics

Note: run the following command on a kube-controller-manager node.

$ curl -s --cacert /app/k8s/work/ca.pem --cert /app/k8s/work/admin.pem --key /app/k8s/work/admin-key.pem https://192.168.3.144:10257/metrics |head

# HELP ClusterRoleAggregator_adds (Deprecated) Total number of adds handled by workqueue: ClusterRoleAggregator

# TYPE ClusterRoleAggregator_adds counter

ClusterRoleAggregator_adds 3

# HELP ClusterRoleAggregator_depth (Deprecated) Current depth of workqueue: ClusterRoleAggregator

# TYPE ClusterRoleAggregator_depth gauge

ClusterRoleAggregator_depth 0

# HELP ClusterRoleAggregator_longest_running_processor_microseconds (Deprecated) How many microseconds has the longest running processor for ClusterRoleAggregator been running.

# TYPE ClusterRoleAggregator_longest_running_processor_microseconds gauge

ClusterRoleAggregator_longest_running_processor_microseconds 0

# HELP ClusterRoleAggregator_queue_latency (Deprecated) How long an item stays in workqueueClusterRoleAggregator before being requested.

kube-controller-manager permissions

ClusterRole system:kube-controller-manager has very limited permissions: it can only create resource objects such as secret and serviceaccount. Each controller's permissions are split out into ClusterRole system:controller:XXX:

$ kubectl describe clusterrole system:kube-controller-manager

Name: system:kube-controller-manager

Labels: kubernetes.io/bootstrapping=rbac-defaults

Annotations: rbac.authorization.kubernetes.io/autoupdate: true

PolicyRule:

Resources Non-Resource URLs Resource Names Verbs

--------- ----------------- -------------- -----

secrets [] [] [create delete get update]

endpoints [] [] [create get update]

serviceaccounts [] [] [create get update]

events [] [] [create patch update]

tokenreviews.authentication.k8s.io [] [] [create]

subjectaccessreviews.authorization.k8s.io [] [] [create]

configmaps [] [] [get]

namespaces [] [] [get]

*.* [] [] [list watch]

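Add the --use-service-account-credentials=true flag to the kube-controller-manager command line. With this flag, the main controller creates a ServiceAccount XXX-controller in the kube-system namespace for each controller and runs that controller with the ServiceAccount's credentials. The built-in ClusterRoleBinding system:controller:XXX then grants each XXX-controller ServiceAccount the permissions of the corresponding ClusterRole system:controller:XXX.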
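You can check whether these bindings are in effect with `kubectl auth can-i` and impersonation (`--as`). This is a sketch that assumes the admin kubeconfig created earlier, whose user belongs to system:masters and can therefore impersonate other subjects. The wrapper name check_rbac is hypothetical:

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helper): ask the API server whether SUBJECT may perform
# each "verb resource" pair, impersonating SUBJECT with --as.
check_rbac() {
  local subject="$1"; shift
  local rule verb resource answer rc=0
  for rule in "$@"; do
    read -r verb resource <<< "${rule}"
    # can-i prints "yes" or "no" and exits non-zero on "no", hence `|| true`.
    answer=$(kubectl auth can-i "${verb}" "${resource}" --as="${subject}" 2>/dev/null) || true
    echo "${subject} ${verb} ${resource}: ${answer:-error}"
    [[ "${answer}" == "yes" ]] || rc=1
  done
  return "${rc}"
}
```

Example, using rules that appear in the ClusterRoles shown in this section:
check_rbac system:kube-controller-manager "create secrets" "get configmaps"
check_rbac system:serviceaccount:kube-system:deployment-controller "create replicasets.apps" "update deployments.apps"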

$ kubectl get clusterrole|grep controller

system:controller:attachdetach-controller 51m

system:controller:certificate-controller 51m

system:controller:clusterrole-aggregation-controller 51m

system:controller:cronjob-controller 51m

system:controller:daemon-set-controller 51m

system:controller:deployment-controller 51m

system:controller:disruption-controller 51m

system:controller:endpoint-controller 51m

system:controller:expand-controller 51m

system:controller:generic-garbage-collector 51m

system:controller:horizontal-pod-autoscaler 51m

system:controller:job-controller 51m

system:controller:namespace-controller 51m

system:controller:node-controller 51m

system:controller:persistent-volume-binder 51m

system:controller:pod-garbage-collector 51m

system:controller:pv-protection-controller 51m

system:controller:pvc-protection-controller 51m

system:controller:replicaset-controller 51m

system:controller:replication-controller 51m

system:controller:resourcequota-controller 51m

system:controller:route-controller 51m

system:controller:service-account-controller 51m

system:controller:service-controller 51m

system:controller:statefulset-controller 51m

system:controller:ttl-controller 51m

system:kube-controller-manager 51m

Take the deployment controller as an example:

$ kubectl describe clusterrole system:controller:deployment-controller

Name: system:controller:deployment-controller

Labels: kubernetes.io/bootstrapping=rbac-defaults

Annotations: rbac.authorization.kubernetes.io/autoupdate: true

PolicyRule:

Resources Non-Resource URLs Resource Names Verbs

--------- ----------------- -------------- -----

replicasets.apps [] [] [create delete get list patch update watch]

replicasets.extensions [] [] [create delete get list patch update watch]

events [] [] [create patch update]

pods [] [] [get list update watch]

deployments.apps [] [] [get list update watch]

deployments.extensions [] [] [get list update watch]

deployments.apps/finalizers [] [] [update]

deployments.apps/status [] [] [update]

deployments.extensions/finalizers [] [] [update]

deployments.extensions/status [] [] [update]

View the current leader

$ kubectl get lease -n kube-system kube-controller-manager

NAME HOLDER AGE

kube-controller-manager k8master-2_152485e6-c38b-4ef1-9f24-12388ca93634 97m

The current leader is the k8master-2 node.
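The holder identity has the form <hostname>_<uuid>, so a script can extract the node name from it. This is a sketch. The helper names leader_from_identity and leader_node are hypothetical, and it reads the holder from the Lease's spec.holderIdentity field:

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helpers): print the node holding a component's lease.
# Strips everything from the first "_" onward; hostnames cannot contain "_".
leader_from_identity() {
  local identity="$1"
  echo "${identity%%_*}"
}

leader_node() {
  local component="$1"
  leader_from_identity "$(kubectl get lease -n kube-system "${component}" \
    -o jsonpath='{.spec.holderIdentity}')"
}
```

For the lease above, `leader_node kube-controller-manager` should print k8master-2.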

Test the high availability of the kube-controller-manager cluster

Stop the kube-controller-manager service on one or two nodes, then check the logs of the remaining nodes to see whether one of them has acquired leadership.
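The failover check can also be automated. Record the current lease holder, stop the service on that node, then poll the lease until a different holder appears. This is a sketch under the same ssh and kubeconfig assumptions as above. The name wait_for_new_leader is hypothetical, and the 60-second default timeout and 2-second poll interval are arbitrary choices:

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helper): poll COMPONENT's lease in kube-system until its
# holder identity differs from OLD, or give up after TIMEOUT seconds.
wait_for_new_leader() {
  local component="$1" old="$2" timeout="${3:-60}"
  local waited=0 holder
  while (( waited < timeout )); do
    holder=$(kubectl get lease -n kube-system "${component}" \
      -o jsonpath='{.spec.holderIdentity}' 2>/dev/null) || holder=""
    if [[ -n "${holder}" && "${holder}" != "${old}" ]]; then
      echo "new leader: ${holder%%_*}"
      return 0
    fi
    sleep 2
    (( waited += 2 ))
  done
  echo "leader still ${old%%_*} after ${timeout}s" >&2
  return 1
}
```

Example, where <leader-ip> is a placeholder for the current leader's address:
old=$(kubectl get lease -n kube-system kube-controller-manager -o jsonpath='{.spec.holderIdentity}')
ssh root@<leader-ip> "systemctl stop kube-controller-manager"
wait_for_new_leader kube-controller-manager "$old"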

References

- On controller permissions and the use-service-account-credentials flag: https://github.com/kubernetes/kubernetes/issues/48208

- kubelet authentication and authorization: https://kubernetes.io/docs/admin/kubelet-authentication-authorization/#kubelet-authorization

tags: master, kube-scheduler

06-4. Deploy a highly available kube-scheduler cluster

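- 06-4. Deploy a highly available kube-scheduler cluster

- Preparation

- Create the kube-scheduler certificate and private key

- Create and distribute the kubeconfig file

- Create the kube-scheduler configuration file

- Create the kube-scheduler systemd unit template file

- Create and distribute the kube-scheduler systemd unit file for each node

- Start the kube-scheduler service

- Check the service status

- View the exported metrics

- View the current leader

- Test the high availability of the kube-scheduler cluster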

This document describes the steps for deploying a highly available kube-scheduler cluster.