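Hung-yi Lee's "Machine Learning" PaddlePaddle Training Camp (Part 9): Convolutional Neural Networks (with Homework: Food Classification)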


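- I. Convolutional Neural Networks

- II. Homework: Food Classification

  - 2.1 Dataset overview

  - 2.2 Importing packages

  - 2.3 Defining the dataset

  - 2.4 Building the model

  - 2.5 Training

  - 2.6 Testing

  - 2.7 Predicting a single image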

I. Convolutional Neural Networks

| Video | PPT |
|---|---|
| Summary 1 (lecture content) | ⭐ Summary 2 (with extended material: AlexNet, GoogLeNet and ResNet models with source code) |

II. Homework: Food Classification

| Video walkthrough (starts at 25:00) |
|---|

2.1 Dataset overview

This homework uses the food-11 dataset, which has 11 classes:

Bread, Dairy product, Dessert, Egg, Fried food, Meat, Noodles/Pasta, Rice, Seafood, Soup, and Vegetable/Fruit.

- Training set: 9,866 images

- Validation set: 3,430 images

- Testing set: 3,347 images

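After downloading and unzipping the archive you get three folders: training, validation and testing.

Images in training and validation are named [class]_[index].jpg; for example, 3_100.jpg is an image of class 3 (the index itself carries no meaning).

Dataset download: https://aistudio.baidu.com/aistudio/datasetdetail/76103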
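A minimal sketch of recovering the label from a training or validation file name, in plain Python. `parse_label` is a hypothetical helper, not part of the homework code, and the file name `10_7.jpg` is made up for illustration; the range check assumes the 11 classes are numbered 0 to 10, which matches how the class-name list in 2.7 is indexed.

```python
import os


def parse_label(filename: str) -> int:
    """Return the class index encoded in a '[class]_[index].jpg' file name."""
    base = os.path.basename(filename)     # drop any directory part
    prefix, sep, _ = base.partition("_")  # '3_100.jpg' -> ('3', '_', '100.jpg')
    if not sep:
        raise ValueError(f"no '[class]_' prefix in {filename!r}")
    label = int(prefix)
    if not 0 <= label <= 10:              # assumes food-11 classes are numbered 0..10
        raise ValueError(f"class {label} is out of range for food-11")
    return label


print(parse_label("3_100.jpg"))                       # 3
print(parse_label("work/food-11/training/10_7.jpg"))  # 10
```

Test images carry no class prefix, so a name such as `0001.jpg` raises `ValueError` here; that is why only the training and validation sets can provide labels.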

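2.2 Importing packages

```python
# Import the required packages
import os
import time

import cv2
import numpy as np
import paddle
from paddle.io import Dataset, DataLoader
from paddle.nn import Sequential, Conv2D, BatchNorm2D, ReLU, MaxPool2D, Linear
from paddle.vision.transforms import Compose, Transpose, RandomRotation, RandomHorizontalFlip, Normalize, Resize

# Use the first GPU if Paddle was built with CUDA, otherwise fall back to the CPU.
# (Paddle 2.x runs in dynamic-graph mode by default, so disable_static() is not needed.)
paddle.set_device('gpu:0' if paddle.device.is_compiled_with_cuda() else 'cpu')
```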

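2.3 Defining the dataset

In Paddle, the `Dataset` and `DataLoader` classes from `paddle.io` can be used to "wrap" the data, which makes the later training and prediction code simpler.

A `Dataset` subclass must override two methods, `__len__` and `__getitem__`:

- `__len__` must return the size of the dataset.

- `__getitem__` defines what the dataset returns when it is indexed as `dataset[idx]`.

We never call these two methods ourselves, but `DataLoader` calls them while iterating over the `Dataset`; if they are missing, an error is raised at run time.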
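To see why a loader needs exactly these two methods, here is a framework-free sketch. `ToyDataset` and `iterate_batches` are hypothetical names; the loop is only a rough imitation of a non-shuffling loader (no tensor conversion, no worker processes), not Paddle's actual implementation.

```python
class ToyDataset:
    """Framework-free stand-in for a map-style dataset: only __len__ and __getitem__."""

    def __init__(self, filenames):
        self.filenames = sorted(filenames)

    def __len__(self):
        return len(self.filenames)

    def __getitem__(self, idx):
        name = self.filenames[idx]
        return name, int(name.split("_")[0])  # (sample, label)


def iterate_batches(dataset, batch_size, drop_last=False):
    """Yield lists of samples in index order, the way a non-shuffling loader would."""
    batch = []
    for i in range(len(dataset)):   # relies on __len__
        batch.append(dataset[i])    # relies on __getitem__
        if len(batch) == batch_size:
            yield batch
            batch = []
    if batch and not drop_last:     # leftover partial batch
        yield batch


ds = ToyDataset(["0_1.jpg", "2_5.jpg", "1_3.jpg", "4_9.jpg", "3_2.jpg"])
for batch in iterate_batches(ds, batch_size=2):
    print([label for _, label in batch])  # prints [0, 1], then [2, 3], then [4]
```

Without `__len__` the loader could not know how many indices to draw, and without `__getitem__` it could not fetch a sample for each index.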

```python
class FoodDataset(Dataset):
    def __init__(self, image_path, image_size=(128, 128), mode='train'):
        super().__init__()
        self.image_path = image_path
        self.image_file_list = sorted(os.listdir(image_path))
        self.mode = mode  # 'train', 'val' or 'test'
        # Data augmentation is applied during training only
        self.train_transforms = Compose([
            Resize(size=image_size),
            RandomHorizontalFlip(),
            RandomRotation(15),
            Transpose(),                       # HWC -> CHW
            Normalize(mean=127.5, std=127.5),  # [0, 255] -> [-1, 1]
        ])
        # No augmentation for validation and testing
        self.test_transforms = Compose([
            Resize(size=image_size),
            Transpose(),
            Normalize(mean=127.5, std=127.5),
        ])

    def __len__(self):
        return len(self.image_file_list)

    def __getitem__(self, idx):
        file_name = self.image_file_list[idx]
        img = cv2.imread(os.path.join(self.image_path, file_name))  # BGR, HWC, uint8
        if self.mode == 'train':
            img = self.train_transforms(img)
        else:
            img = self.test_transforms(img)
        if self.mode == 'test':
            return img  # test images have no label in their file name
        label = int(file_name.split("_")[0])  # '[class]_[index].jpg'
        return img, label


batch_size = 128

traindataset = FoodDataset('work/food-11/training', mode='train')
valdataset = FoodDataset('work/food-11/validation', mode='val')  # labels, but no augmentation

# DataLoader returns an iterable that goes over the given dataset once per epoch,
# in the order provided by its batch sampler (shuffled for training)
train_loader = DataLoader(traindataset, batch_size=batch_size, shuffle=True, drop_last=True)
# drop_last=False so that every validation image is evaluated
val_loader = DataLoader(valdataset, batch_size=batch_size, shuffle=False, drop_last=False)
```
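With `drop_last=True`, the last incomplete batch of each epoch is discarded. The plain-Python arithmetic below (`loader_stats` is a hypothetical helper) uses the split sizes from 2.1 and `batch_size = 128` to show how many images that skips per pass. For shuffled training data this hardly matters, since a different handful is skipped each epoch, but for validation it means some images are never scored, and for testing it means some images never get a prediction.

```python
import math


def loader_stats(num_samples: int, batch_size: int, drop_last: bool):
    """Return (number of batches, number of samples actually seen) for one epoch."""
    if drop_last:
        num_batches = num_samples // batch_size
        seen = num_batches * batch_size
    else:
        num_batches = math.ceil(num_samples / batch_size)
        seen = num_samples
    return num_batches, seen


for name, n in [("training", 9866), ("validation", 3430), ("testing", 3347)]:
    batches, seen = loader_stats(n, 128, drop_last=True)
    print(f"{name}: {batches} batches, {n - seen} images skipped with drop_last=True")
```

This prints 77 batches with 10 images skipped for training, 26 batches with 102 skipped for validation, and 26 batches with 19 skipped for testing.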

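2.4 Building the model

Convolutional neural networks commonly use "Conv + BN + activation + pooling" as a basic block. Several such blocks are stacked to extract features, and Linear (fully connected) layers at the end turn those features into a classification.

⭐ Number of channels of each convolution kernel = number of channels of the convolution's input

⭐ Number of channels of the convolution's output = number of convolution kernels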
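The two ⭐ rules, together with the usual output-size formula `out = (size + 2 * padding - kernel) // stride + 1`, are enough to reproduce the shape comments and the Conv2D parameter counts of the model that follows. A plain-Python sketch with hypothetical helper names, assuming 3×3 convolutions with stride 1 and padding 1 followed by 2×2 max pooling with stride 2, as in this homework's model:

```python
def conv_out(size, kernel, stride, padding):
    """Output height/width of a convolution or pooling window: floor((size + 2p - k) / s) + 1."""
    return (size + 2 * padding - kernel) // stride + 1


def conv_params(c_in, c_out, kernel):
    """c_out kernels, each of shape (c_in, kernel, kernel), plus one bias per kernel."""
    return c_out * (c_in * kernel * kernel) + c_out


channels = [3, 64, 128, 256, 512, 512]  # channel counts used by the model in this section
size = 128                              # input images are 128 x 128
for c_in, c_out in zip(channels, channels[1:]):
    params = conv_params(c_in, c_out, 3)
    size = conv_out(size, kernel=3, stride=1, padding=1)  # Conv2D(c_in, c_out, 3, 1, 1) keeps the size
    size = conv_out(size, kernel=2, stride=2, padding=0)  # MaxPool2D(2, 2, 0) halves it
    print(f"Conv2D({c_in}, {c_out}): {params:,} params, then [{c_out}, {size}, {size}] after pooling")

print("features entering the first Linear layer:", channels[-1] * size * size)  # 512 * 4 * 4 = 8192
```

After five blocks the 128×128 input has shrunk to 4×4, which is where `512*4*4` in the first `Linear` layer comes from.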

```python
class Classifier(paddle.nn.Layer):
    def __init__(self):
        super(Classifier, self).__init__()
        # Input shape: [3, 128, 128]
        self.cnn = Sequential(
            # Conv2D(in_channels, out_channels, kernel_size, stride, padding)
            Conv2D(3, 64, 3, 1, 1),     # [64, 128, 128]
            # BatchNorm2D(num_features): normalizes each channel with the mean and variance
            # of the current batch (in eval mode, running averages are used instead)
            BatchNorm2D(64),
            ReLU(),                     # activation function
            # MaxPool2D(kernel_size, stride, padding)
            MaxPool2D(2, 2, 0),         # [64, 64, 64]  pooling halves the height and width

            Conv2D(64, 128, 3, 1, 1),   # [128, 64, 64]
            BatchNorm2D(128),
            ReLU(),
            MaxPool2D(2, 2, 0),         # [128, 32, 32]

            Conv2D(128, 256, 3, 1, 1),  # [256, 32, 32]
            BatchNorm2D(256),
            ReLU(),
            MaxPool2D(2, 2, 0),         # [256, 16, 16]

            Conv2D(256, 512, 3, 1, 1),  # [512, 16, 16]
            BatchNorm2D(512),
            ReLU(),
            MaxPool2D(2, 2, 0),         # [512, 8, 8]

            Conv2D(512, 512, 3, 1, 1),  # [512, 8, 8]
            BatchNorm2D(512),
            ReLU(),
            MaxPool2D(2, 2, 0),         # [512, 4, 4]
        )
        # Fully connected layers
        self.fc = Sequential(
            Linear(512 * 4 * 4, 1024),
            ReLU(),
            Linear(1024, 512),
            ReLU(),
            Linear(512, 11),            # one raw score (logit) per class
        )

    # Chain the convolutional part and the fully connected part into the complete network
    def forward(self, x):
        x = self.cnn(x)
        x = x.flatten(start_axis=1)  # [N, 512, 4, 4] -> [N, 8192]
        x = self.fc(x)
        return x
```

Inspect the model structure:

```python
my_model = paddle.Model(Classifier())
my_model.summary((-1, 3, 128, 128))
```

Running this prints:

```text
---------------------------------------------------------------------------
 Layer (type)     Input Shape           Output Shape          Param #
===========================================================================
 Conv2D-11        [[1, 3, 128, 128]]    [1, 64, 128, 128]     1,792
 BatchNorm2D-11   [[1, 64, 128, 128]]   [1, 64, 128, 128]     256
 ReLU-15          [[1, 64, 128, 128]]   [1, 64, 128, 128]     0
 MaxPool2D-11     [[1, 64, 128, 128]]   [1, 64, 64, 64]       0
 Conv2D-12        [[1, 64, 64, 64]]     [1, 128, 64, 64]      73,856
 BatchNorm2D-12   [[1, 128, 64, 64]]    [1, 128, 64, 64]      512
 ReLU-16          [[1, 128, 64, 64]]    [1, 128, 64, 64]      0
 MaxPool2D-12     [[1, 128, 64, 64]]    [1, 128, 32, 32]      0
 Conv2D-13        [[1, 128, 32, 32]]    [1, 256, 32, 32]      295,168
 BatchNorm2D-13   [[1, 256, 32, 32]]    [1, 256, 32, 32]      1,024
 ReLU-17          [[1, 256, 32, 32]]    [1, 256, 32, 32]      0
 MaxPool2D-13     [[1, 256, 32, 32]]    [1, 256, 16, 16]      0
 Conv2D-14        [[1, 256, 16, 16]]    [1, 512, 16, 16]      1,180,160
 BatchNorm2D-14   [[1, 512, 16, 16]]    [1, 512, 16, 16]      2,048
 ReLU-18          [[1, 512, 16, 16]]    [1, 512, 16, 16]      0
 MaxPool2D-14     [[1, 512, 16, 16]]    [1, 512, 8, 8]        0
 Conv2D-15        [[1, 512, 8, 8]]      [1, 512, 8, 8]        2,359,808
 BatchNorm2D-15   [[1, 512, 8, 8]]      [1, 512, 8, 8]        2,048
 ReLU-19          [[1, 512, 8, 8]]      [1, 512, 8, 8]        0
 MaxPool2D-15     [[1, 512, 8, 8]]      [1, 512, 4, 4]        0
 Linear-7         [[1, 8192]]           [1, 1024]             8,389,632
 ReLU-20          [[1, 1024]]           [1, 1024]             0
 Linear-8         [[1, 1024]]           [1, 512]              524,800
 ReLU-21          [[1, 512]]            [1, 512]              0
 Linear-9         [[1, 512]]            [1, 11]               5,643
===========================================================================
Total params: 12,836,747
Trainable params: 12,830,859
Non-trainable params: 5,888
---------------------------------------------------------------------------
Input size (MB): 0.19
Forward/backward pass size (MB): 49.59
Params size (MB): 48.97
Estimated Total Size (MB): 98.74
---------------------------------------------------------------------------

{'total_params': 12836747, 'trainable_params': 12830859}
```
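The totals in this summary can be checked by hand. In the plain-Python sketch below, each Conv2D has `c_out * c_in * 3 * 3` weights plus `c_out` biases, each BatchNorm2D stores 4 values per channel (scale, shift, running mean, running variance), and each Linear has `n_in * n_out` weights plus `n_out` biases. The Non-trainable figure reported above, 5,888, happens to equal the BatchNorm2D total computed here; the last line converts the parameter count to float32 megabytes for comparison with `Params size (MB)`.

```python
conv_layers = [(3, 64), (64, 128), (128, 256), (256, 512), (512, 512)]  # (c_in, c_out), 3x3 kernels
linear_layers = [(512 * 4 * 4, 1024), (1024, 512), (512, 11)]          # (n_in, n_out)

conv_total = sum(c_out * c_in * 3 * 3 + c_out for c_in, c_out in conv_layers)
bn_total = sum(4 * c_out for _, c_out in conv_layers)  # 4 stored values per channel
fc_total = sum(n_in * n_out + n_out for n_in, n_out in linear_layers)
total = conv_total + bn_total + fc_total

print(f"Conv2D: {conv_total:,}  BatchNorm2D: {bn_total:,}  Linear: {fc_total:,}")
print(f"total: {total:,}")                            # 12,836,747
print(f"as float32: {total * 4 / 1024 ** 2:.2f} MB")  # 48.97
```

Almost two thirds of the parameters sit in the first `Linear` layer (8,192 × 1,024 weights), which is typical for a CNN that flattens a feature map straight into a wide fully connected layer.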

2.5 Training

Train on the training set and use the validation set to look for good hyperparameters.
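The model's last `Linear` layer outputs 11 raw scores (logits) per image, and `CrossEntropyLoss` by default applies softmax to them itself, takes the negative log-probability of the true class, and averages over the batch. A plain-Python sketch of the per-sample value (the function name and the 3-class example are illustrative only):

```python
import math


def cross_entropy(logits, label):
    """Softmax cross-entropy for one sample: -log(softmax(logits)[label])."""
    m = max(logits)  # subtract the max before exp() for numerical stability
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[label]


logits = [2.0, 0.5, -1.0]        # illustrative scores for 3 classes
print(cross_entropy(logits, 0))  # small loss: class 0 has the highest score
print(cross_entropy(logits, 2))  # large loss: class 2 has the lowest score
# With equal scores over n classes the loss is log(n); for food-11, log(11) is about 2.398
print(cross_entropy([0.0] * 11, 3), math.log(11))
```

The last line is a handy sanity check: a freshly initialized 11-class classifier whose scores are all roughly equal should start with a loss near 2.4.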

```python
epoch_num = 30         # number of epochs
learning_rate = 0.001  # learning rate

model = Classifier()
# Classification task, so use cross-entropy loss
loss = paddle.nn.CrossEntropyLoss()
# Adam optimizer
optimizer = paddle.optimizer.Adam(learning_rate=learning_rate, parameters=model.parameters())

print('start training...')
for epoch in range(epoch_num):
    epoch_start_time = time.time()
    train_acc, train_loss, train_count = 0.0, 0.0, 0
    val_acc, val_loss, val_count = 0.0, 0.0, 0

    # Training
    model.train()
    for img, label in train_loader:
        pred = model(img)
        step_loss = loss(pred, label)
        step_loss.backward()
        optimizer.step()        # update the parameters once per batch
        optimizer.clear_grad()  # then reset the gradients for the next batch

        n = label.shape[0]
        train_acc += np.sum(np.argmax(pred.numpy(), axis=1) == label.numpy())
        train_loss += float(step_loss) * n  # the loss is a batch mean; weight it by batch size
        train_count += n

    # Validation (no gradients needed)
    model.eval()
    with paddle.no_grad():
        for img, label in val_loader:
            pred = model(img)
            step_loss = loss(pred, label)
            n = label.shape[0]
            val_acc += np.sum(np.argmax(pred.numpy(), axis=1) == label.numpy())
            val_loss += float(step_loss) * n
            val_count += n

    # Print the results of this epoch (per-image averages)
    print('[%03d/%03d] %2.2f sec(s) Train Acc: %3.6f Loss: %3.6f | Val Acc: %3.6f Loss: %3.6f' % (
        epoch + 1, epoch_num, time.time() - epoch_start_time,
        train_acc / train_count, train_loss / train_count,
        val_acc / val_count, val_loss / val_count))
```
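`CrossEntropyLoss` returns the mean over a batch, so adding up the batch means and dividing by the number of images does not give a per-image loss, and with `drop_last=True` fewer images are seen than `len(dataset)`. One way to keep epoch averages honest is to weight each batch by its size and divide by the number of images actually processed. `EpochMeter` below is a hypothetical, framework-free helper sketching that bookkeeping; in a Paddle loop you could feed it `pred.numpy()`, `label.numpy()` and the batch loss as a Python float.

```python
def argmax(row):
    """Index of the largest score; ties go to the first index, as with np.argmax."""
    return max(range(len(row)), key=lambda i: row[i])


class EpochMeter:
    """Accumulates per-batch results so epoch averages are per image, not per batch."""

    def __init__(self):
        self.correct = 0
        self.samples = 0
        self.loss_sum = 0.0

    def update(self, logits, labels, batch_mean_loss):
        self.correct += sum(int(argmax(row) == y) for row, y in zip(logits, labels))
        self.samples += len(labels)
        self.loss_sum += batch_mean_loss * len(labels)  # undo the batch mean

    @property
    def accuracy(self):
        return self.correct / self.samples

    @property
    def loss(self):
        return self.loss_sum / self.samples


meter = EpochMeter()
meter.update([[0.1, 2.0], [1.5, 0.2]], [1, 1], batch_mean_loss=0.5)  # 1 of 2 correct
meter.update([[3.0, 0.0]], [0], batch_mean_loss=0.1)                 # smaller last batch, correct
print(meter.accuracy, meter.loss)
```

Here the accuracy is 2/3 and the loss is (0.5 × 2 + 0.1 × 1) / 3, so the small last batch counts for exactly one image rather than for a whole batch.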

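2.6 Testing

Build a data loader for the test set:

```python
batch_size = 128
testdataset = FoodDataset('work/food-11/testing', mode='test')
# drop_last must be False, otherwise the last partial batch of test images gets no prediction
test_loader = DataLoader(testdataset, batch_size=batch_size, shuffle=False, drop_last=False)
```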

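Predict every image in the test set and collect the results in a list named `prediction`:

```python
prediction = list()
model.eval()
with paddle.no_grad():
    for batch in test_loader:
        # The test dataset returns only an image per sample, so a batch may arrive as a one-element list
        img = batch[0] if isinstance(batch, (list, tuple)) else batch
        pred = model(img)
        test_label = np.argmax(pred.numpy(), axis=1)
        prediction.extend(int(y) for y in test_label)
```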

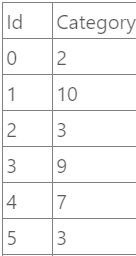

Write the results to a CSV file:

```python
with open('work/predict.csv', 'w') as f:
    f.write('Id,Category\n')
    for i, y in enumerate(prediction):
        f.write('{},{}\n'.format(i, y))
```
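The same file can be written with the standard `csv` module, which takes care of quoting and line endings. The sketch below writes to an in-memory buffer so it runs without the dataset; `write_predictions` is a hypothetical helper and the predictions are made up. It assumes, as in the code above, that `Id` is the image's position in the sorted list of test file names; for a real file you would open it with `open('work/predict.csv', 'w', newline='')`.

```python
import csv
import io


def write_predictions(predictions, f):
    """Write an 'Id,Category' header, then one row per test image in prediction order."""
    writer = csv.writer(f, lineterminator="\n")
    writer.writerow(["Id", "Category"])
    for i, y in enumerate(predictions):
        writer.writerow([i, int(y)])  # int() also accepts NumPy integer types


buf = io.StringIO()                 # in-memory stand-in for work/predict.csv
write_predictions([3, 0, 10], buf)  # illustrative predictions
print(buf.getvalue())
```

Before submitting, it is worth checking that the number of data rows equals the number of test images (3,347).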

2.7 Predicting a single image

Preparation:

```python
import cv2
import matplotlib.pyplot as plt

# Store the class names in label order so that predictions can be printed by name
label_class = ["Bread", "Dairy product", "Dessert", "Egg", "Fried food", "Meat", "Noodles/Pasta", "Rice", "Seafood", "Soup", "Vegetable/Fruit"]

# Load the image with cv2, just as during training, so the channel order (BGR) matches
img = cv2.imread('work/food-11/testing/0001.jpg')
```

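Preprocess the image the same way as during training, but without data augmentation:

```python
img_1 = Compose([
    Resize(size=(128, 128)),           # resize to the training input size
    Transpose(),                       # HWC -> CHW
    Normalize(mean=127.5, std=127.5),  # [0, 255] -> [-1, 1]
])(img)
```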
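What `Transpose()` and `Normalize(mean=127.5, std=127.5)` do to the pixel values can be checked without Paddle. This sketch uses hypothetical helper names and a tiny nested-list image in place of a real 128×128 array: `Normalize` maps each value x to (x - 127.5) / 127.5, so 0 to 255 becomes -1 to 1, and `Transpose()` (default order (2, 0, 1)) turns height × width × channels into channels × height × width.

```python
def normalize(x, mean=127.5, std=127.5):
    """(x - mean) / std for one value, as Normalize(mean=127.5, std=127.5) does per pixel."""
    return (x - mean) / std


def hwc_to_chw(img):
    """Reorder a nested-list image from [H][W][C] to [C][H][W], like Transpose()."""
    h, w, c = len(img), len(img[0]), len(img[0][0])
    return [[[img[y][x][ch] for x in range(w)] for y in range(h)] for ch in range(c)]


print(normalize(0), normalize(127.5), normalize(255))  # -1.0 0.0 1.0

tiny = [[[1, 2, 3], [4, 5, 6]]]  # H=1, W=2, C=3: two pixels with 3 channels each
print(hwc_to_chw(tiny))          # [[[1, 4]], [[2, 5]], [[3, 6]]]
```

Any mismatch here between training and prediction (for example, a different mean or skipping the transpose) would feed the model inputs it never saw during training.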

Convert the image into the layout the model expects, (N, C, H, W):

```python
img_1 = paddle.to_tensor(img_1)                        # convert the array to a tensor
img_1 = paddle.reshape(img_1, shape=(1, 3, 128, 128))  # add a batch dimension of size 1
```

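Run the prediction:

```python
model.eval()  # BatchNorm uses its running statistics in eval mode
with paddle.no_grad():
    output = model(img_1)
label = np.argmax(output.numpy(), axis=1)
```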

Show the image and the predicted class:

```python
img = cv2.cvtColor(img, cv2.COLOR_BGR2RGB)  # cv2 loads color images as BGR, so convert to RGB before displaying
plt.imshow(img)
plt.show()
print("Prediction:", label_class[label[0]])
```
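For reference, the last two steps reduce to simple index operations. This plain-Python sketch (helper names are hypothetical) reverses the channel order of each pixel, which is what `cv2.COLOR_BGR2RGB` amounts to for a 3-channel image, and maps a row of 11 scores to a class name via argmax, as `label_class[label[0]]` does.

```python
label_class = ["Bread", "Dairy product", "Dessert", "Egg", "Fried food", "Meat",
               "Noodles/Pasta", "Rice", "Seafood", "Soup", "Vegetable/Fruit"]


def bgr_to_rgb(rows):
    """Reverse the channel order of every pixel: [B, G, R] -> [R, G, B]."""
    return [[pixel[::-1] for pixel in row] for row in rows]


def predicted_name(scores):
    """Map one row of 11 class scores to a class name via argmax."""
    best = max(range(len(scores)), key=lambda i: scores[i])
    return label_class[best]


print(bgr_to_rgb([[[255, 0, 0], [0, 0, 255]]]))       # [[[0, 0, 255], [255, 0, 0]]]
print(predicted_name([0.1] * 7 + [2.5] + [0.0] * 3))  # Rice
```

Skipping the conversion does not change the prediction (the model was trained on BGR input), but it makes `plt.imshow` display red and blue swapped.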