【语义分割】利用U-Net网络对遥感影像道路信息分割提取

一、论文阅读

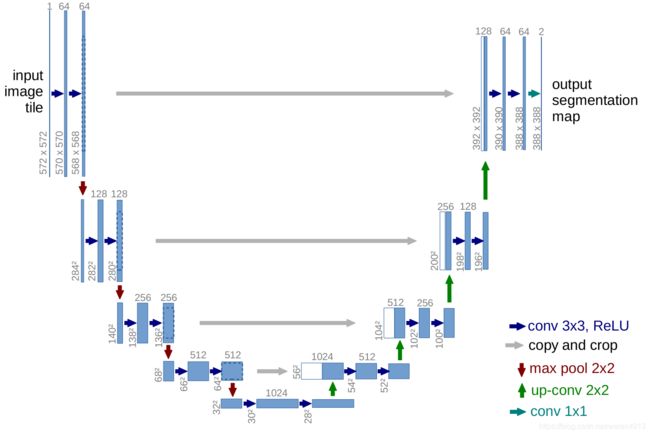

原始论文是《U-Net: Convolutional Networks for Biomedical Image Segmentation》地址:https://arxiv.org/abs/1505.04597。其网络结构主要是以“U”型编码器-解码器构成了下采样-上采样两部分功能结构。下采样采用典型的卷积网络架构,就采样结构结果而言,每层的Max-Pooling采样减小了图像尺寸,但是成倍增加了channels,具体每层卷积操作可以看代码或者详读论文。上采用过程中对下采样的结果进行Conv-Transpose反卷积过程,直到恢复网络结构,网络结构如图1.1:

二、代码实现

代码分成了三个py文件,分别为数据预处理模块dataset.py,网络模型实现模块unet.py以及main.py。

# dataset.py

from torch.utils.data import Dataset

import PIL.Image as Image

import os

def make_dataset(root):

imgs=[]

n=len(os.listdir(root))//2

for i in range(n):

img=os.path.join(root,"%03d.png"%i)

mask=os.path.join(root,"%03d_mask.png"%i)

imgs.append((img,mask))

return imgs

class LiverDataset(Dataset):

def __init__(self, root, transform=None, target_transform=None):

imgs = make_dataset(root)

self.imgs = imgs

self.transform = transform

self.target_transform = target_transform

def __getitem__(self, index):

x_path, y_path = self.imgs[index]

img_x = Image.open(x_path)

img_y = Image.open(y_path)

if self.transform is not None:

img_x = self.transform(img_x)

if self.target_transform is not None:

img_y = self.target_transform(img_y)

return img_x, img_y

def __len__(self):

return len(self.imgs)

dataset.py中有两个功能函数,make_dataset模块是将样本以及样本标签导入。LiverDataset模块是为了做DataLoader而准备。

# unet.py

import torch

from torch import nn

class DoubleConv(nn.Module):

def __init__(self, in_ch, out_ch):

super(DoubleConv, self).__init__()

self.conv = nn.Sequential(

nn.Conv2d(in_ch, out_ch, 3, padding=1),

nn.BatchNorm2d(out_ch),

nn.ReLU(inplace=True),

nn.Conv2d(out_ch, out_ch, 3, padding=1),

nn.BatchNorm2d(out_ch),

nn.ReLU(inplace=True)

)

def forward(self, input):

return self.conv(input)

class Unet(nn.Module):

def __init__(self,in_ch,out_ch):

super(Unet, self).__init__()

self.conv1 = DoubleConv(in_ch, 64)

self.pool1 = nn.MaxPool2d(2)

self.conv2 = DoubleConv(64, 128)

self.pool2 = nn.MaxPool2d(2)

self.conv3 = DoubleConv(128, 256)

self.pool3 = nn.MaxPool2d(2)

self.conv4 = DoubleConv(256, 512)

self.pool4 = nn.MaxPool2d(2)

self.conv5 = DoubleConv(512, 1024)

self.up6 = nn.ConvTranspose2d(1024, 512, 2, stride=2)

self.conv6 = DoubleConv(1024, 512)

self.up7 = nn.ConvTranspose2d(512, 256, 2, stride=2)

self.conv7 = DoubleConv(512, 256)

self.up8 = nn.ConvTranspose2d(256, 128, 2, stride=2)

self.conv8 = DoubleConv(256, 128)

self.up9 = nn.ConvTranspose2d(128, 64, 2, stride=2)

self.conv9 = DoubleConv(128, 64)

self.conv10 = nn.Conv2d(64,out_ch, 1)

def forward(self,x):

c1=self.conv1(x)

p1=self.pool1(c1)

c2=self.conv2(p1)

p2=self.pool2(c2)

c3=self.conv3(p2)

p3=self.pool3(c3)

c4=self.conv4(p3)

p4=self.pool4(c4)

c5=self.conv5(p4)

up_6= self.up6(c5)

merge6 = torch.cat([up_6, c4], dim=1)

c6=self.conv6(merge6)

up_7=self.up7(c6)

merge7 = torch.cat([up_7, c3], dim=1)

c7=self.conv7(merge7)

up_8=self.up8(c7)

merge8 = torch.cat([up_8, c2], dim=1)

c8=self.conv8(merge8)

up_9=self.up9(c8)

merge9=torch.cat([up_9,c1],dim=1)

c9=self.conv9(merge9)

c10=self.conv10(c9)

return c10# main.py

import torch

import argparse

from torch.utils.data import DataLoader

from torch import nn, optim

from torchvision.transforms import transforms

from unet import Unet

from dataset import LiverDataset

from torch.autograd import Variable

import torch.nn.functional as F

import numpy as np

import cv2

import os

from tensorboardX import SummaryWriter

# 是否使用cuda

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

x_transforms = transforms.Compose([

transforms.ToTensor(),

transforms.Normalize([0.5, 0.5, 0.5], [0.5, 0.5, 0.5])

])

# mask只需要转换为tensor

y_transforms = transforms.ToTensor()

def train_model(model, criterion, optimizer, dataload, num_epochs=3):

writer = SummaryWriter(r'model_record')

for epoch in range(num_epochs):

print('Epoch {}/{}'.format(epoch, num_epochs - 1))

print('-' * 10)

dt_size = len(dataload.dataset)

epoch_loss = 0

step = 0

for x, y in dataload:

step += 1

inputs = x.to(device)

labels = y.to(device)

# zero the parameter gradients

optimizer.zero_grad()

# forward

outputs = model(inputs)

loss = criterion(outputs, labels)

loss.backward()

optimizer.step()

epoch_loss += loss.item()

writer.add_scalar('train loss', loss.item(), global_step=step+epoch*200)

print("%d/%d,train_loss:%0.3f" % (step, (dt_size - 1) // dataload.batch_size + 1, loss.item()))

print("epoch %d loss:%0.3f" % (epoch, epoch_loss/step))

torch.save(model.state_dict(), 'weights_%d.pth' % epoch)

return model

#训练模型

def train():

model = Unet(3, 1).to(device)

batch_size = 2

criterion = nn.BCEWithLogitsLoss()

optimizer = optim.Adam(model.parameters())

liver_dataset = LiverDataset(r'data_road\train',transform=x_transforms,target_transform=y_transforms)

dataloaders = DataLoader(liver_dataset, batch_size=batch_size, shuffle=True, num_workers=4)

train_model(model, criterion, optimizer, dataloaders)

#显示模型的输出结果

def test():

model = Unet(3, 1)

model.load_state_dict(torch.load(r'weights_5.pth',map_location=lambda storage, loc: storage.cuda(0)))

# model.load_state_dict(torch.load(r'u_net_liver\weights_4.pth'))

liver_dataset = LiverDataset(r'data_road\val1', transform=x_transforms,target_transform=y_transforms)

dataloaders = DataLoader(liver_dataset, batch_size=1)

model.eval()

with torch.no_grad():

all_IoU = 0.0

record = 0

for x, tagart in dataloaders:

y=model(x)

img_y=torch.squeeze(y).numpy()

img_tagart = torch.squeeze(tagart).numpy()

img_y[img_y > 0.3] = 255

img_y[img_y <= 0.3] = 0

# print([x for x in img_y if x in img_tagart])

all = 0.0

inte = 0.0

for x1 in range(0,512):

for x2 in range(0,512):

if img_y[x1,x2] == 255 and img_tagart[x1,x2] == 1:

all=all+1

if img_y[x1,x2] == 255 or img_tagart[x1,x2] == 1:

inte=inte+1

all_IoU =all_IoU+ all/inte

pathname = "%03d_predict.png"%record

cv2.imwrite(os.path.join(r'data_road/result1',pathname),img_y)

record=record+1

print(all*1.0/inte)

print(all_IoU/20.0)

if __name__ == '__main__':

train()

test()

main.py函数比较杂,其中我将训练和测试函数都写在了一起,在train时单独运行train()将test()屏蔽即刻。采用的Loss函数是nn.BCEWithLogitsLoss(),这里有兴趣可以将其变换为其他的loss看看结果。值得注意的一点是这里有一个阈值0.3,对应的代码是img_y[img_y > 0.3] = 255和img_y[img_y <= 0.3] = 0,这里需要对每个不同情况自己去定义自己的分割阈值去确定。optimizer选取的是Adam。

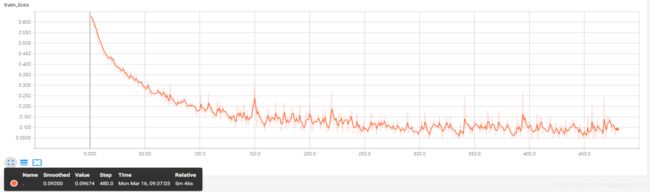

数据集采用的是Massachusetts road,数据地址为:Road and Building Detection Datasets,这里可以用简单爬虫批量下载,若有需求,可以让我在下面评论贴出该数据集的网盘地址。还有一个细节(坑)就是,unet结构是需要512或者1024等大小的16整数倍的image sizes。所以这里需要对下载的数据集(images和labels)进行批量重采样,采样用最邻近和双线性内插均可,没有太大影响,然而我是将数据集裁剪为了512*512,because of graphics memory。最后贴出我的训练结果。

三、结果讨论

首先,我的迭代次数不太多,也没有采用动态学习率策略,并且massachusetts road数据集也有很多坑(需要很多预处理,去除损坏样本,谁用谁知道),所以最后的分割效果一般,仅仅是跑通网络。结果见图3.1,3.2和3.3。

3.1 Training Loss

3.1 Training Loss  3.2 Mean IoU 3.3 从左到右分别是原始图像-真值-提取结果

3.2 Mean IoU 3.3 从左到右分别是原始图像-真值-提取结果

讨论:本文简要地用U-Net网络跑了一下遥感影像道路信息分割提取这个方面的研究,效果达到预期但是没有想象突出,原因有以下两点,1、原始数据集Massachusetts roads样本有部分有较大的偏差,真值存在错误致使训练错误。2、Loss设计不合理,具体可以见有关遥感领域分割信息提取Loss设计相关论文,本人也在学习阶段。

欢迎大家留言讨论。