[20] Deep Learning PyTorch - Hook Functions and the CAM Algorithm

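0. Previous Posts

[1] Deep Learning PyTorch - Tensor Definition and Tensor Creation

[2] Deep Learning PyTorch - Tensor Operations: Concatenation, Splitting, Indexing and Transformation

[3] Deep Learning PyTorch - Tensor Math Operations

[4] Deep Learning PyTorch - Linear Regression

[5] Deep Learning PyTorch - Computational Graphs and the Dynamic Graph Mechanism

[6] Deep Learning PyTorch - autograd and Logistic Regression

[7] Deep Learning PyTorch - DataLoader and Dataset (with an RMB Banknote Binary Classification Project)

[8] Deep Learning PyTorch - Image Preprocessing with transforms

[9] Deep Learning PyTorch - Image Augmentation with transforms (Cropping, Flipping, Rotation)

[10] Deep Learning PyTorch - transforms Image Operations and Custom Methods

[11] Deep Learning PyTorch - Model Creation and nn.Module

[12] Deep Learning PyTorch - Model Containers and Building AlexNet

[13] Deep Learning PyTorch - Convolution Layers (1D/2D/3D Convolution, nn.Conv2d, Transposed Convolution nn.ConvTranspose)

[14] Deep Learning PyTorch - Pooling, Linear and Activation Layers

[15] Deep Learning PyTorch - Weight Initialization

[16] Deep Learning PyTorch - 18 Loss Functions

[17] Deep Learning PyTorch - Optimizers

[18] Deep Learning PyTorch - Learning Rate Scheduling Strategies

[19] Deep Learning PyTorch - The TensorBoard Visualization Tool

[20] Deep Learning PyTorch - Hook Functions and the CAM Algorithm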

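Deep Learning PyTorch - Hook Functions and the CAM Algorithm

- 0. Previous Posts

- 1. Hook Function Concepts

- 2. Hook Functions and Feature Map Extraction

  - 2.1 Tensor.register_hook

  - 2.2 register_forward_hook

  - 2.3 register_forward_pre_hook

  - 2.4 register_backward_hook

- 3. The CAM Algorithm

- 4. Complete Code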

1. Hook Function Concepts
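As a minimal sketch of the concept (assuming PyTorch 1.8 or later, where `register_full_backward_hook` is available), the snippet below attaches one hook of each kind to a small `nn.Linear` layer and records when each one fires. The layer and the `calls` list are illustrative only; the point is that hooks are called at fixed points of the forward and backward passes without any change to the module's `forward()`.

```python
import torch
import torch.nn as nn

calls = []  # records which hook fired, in order

layer = nn.Linear(3, 2)

# runs before layer.forward(); receives the positional inputs as a tuple
layer.register_forward_pre_hook(lambda m, inp: calls.append("forward_pre"))
# runs after layer.forward(); receives the inputs and the output
layer.register_forward_hook(lambda m, inp, out: calls.append("forward"))
# runs during backward() once the gradients w.r.t. the module's inputs are known
layer.register_full_backward_hook(lambda m, g_in, g_out: calls.append("backward"))

x = torch.randn(4, 3, requires_grad=True)
out = layer(x)
# tensor hook: runs when the gradient of `out` is computed
out.register_hook(lambda g: calls.append("tensor"))
out.sum().backward()

print(calls)
```

The two forward-pass entries always come first (`forward_pre`, then `forward`); the tensor hook and the module backward hook are only triggered later, by `backward()`. All hooks here return `None`, so nothing is modified.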

2. Hook Functions and Feature Map Extraction

2.1 Tensor.register_hook

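Tensor.register_hook(hook) registers a hook on a tensor; it is called as hook(grad) every time the gradient of that tensor is computed during backpropagation. If the hook returns None, the gradient is left unchanged; if it returns a tensor, that tensor replaces the original gradient. The hook should not modify grad in place.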

代码示例:

# ----------------------------------- 1 tensor hook 1 -----------------------------------

flag = 0

# flag = 1

if flag:

w = torch.tensor([1.], requires_grad=True)

x = torch.tensor([2.], requires_grad=True)

a = torch.add(w, x)

b = torch.add(w, 1)

y = torch.mul(a, b)

a_grad = list()

def grad_hook(grad):

a_grad.append(grad)

handle = a.register_hook(grad_hook) #注册到对应的张量上

y.backward()

# 查看梯度

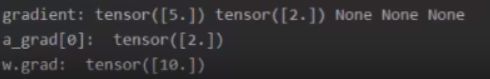

print("gradient:", w.grad, x.grad, a.grad, b.grad, y.grad)

print("a_grad[0]: ", a_grad[0])

handle.remove()
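For comparison, here is a minimal sketch of the two usual ways to read the gradient of a non-leaf tensor: a hook that copies it out during `backward()`, or `Tensor.retain_grad()`, which asks autograd to populate `.grad` on that tensor. It assumes the same graph y = (w + x) * (w + 1) as above; the `captured` dict and `save_grad` function are illustrative names.

```python
import torch

w = torch.tensor([1.], requires_grad=True)
x = torch.tensor([2.], requires_grad=True)
a = torch.add(w, x)  # non-leaf
b = torch.add(w, 1)  # non-leaf
y = torch.mul(a, b)

captured = {}

# option 1: a hook that stores a copy of the gradient and returns None (gradient unchanged)
def save_grad(grad):
    captured["a"] = grad.clone()

handle = a.register_hook(save_grad)
# option 2: keep .grad on the non-leaf tensor b
b.retain_grad()

y.backward()
handle.remove()

print(captured["a"])  # tensor([2.]): dy/da = b
print(b.grad)         # tensor([3.]): dy/db = a
```

The hook is the more flexible option because it can also modify the gradient; `retain_grad()` only keeps it.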

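```python
import torch

# ----------------------------------- 2 tensor hook 2 -----------------------------------
flag = 0
# flag = 1
if flag:
    w = torch.tensor([1.], requires_grad=True)
    x = torch.tensor([2.], requires_grad=True)
    a = torch.add(w, x)
    b = torch.add(w, 1)
    y = torch.mul(a, b)

    def grad_hook(grad):
        return grad * 2  # the returned tensor replaces the original gradient

    handle = w.register_hook(grad_hook)
    y.backward(retain_graph=True)  # keep the graph for the second backward pass below

    # check the gradient
    print("w.grad: ", w.grad)  # tensor([10.]): dy/dw = 5, doubled by the hook
    handle.remove()

    #######################
    w.grad.zero_()  # .grad accumulates across backward passes, so reset it first

    def grad_hook(grad):
        grad = grad * 2
        return grad * 3

    handle = w.register_hook(grad_hook)
    y.backward()

    # check the gradient
    print("w.grad: ", w.grad)  # tensor([30.]): the original gradient 5, doubled, then tripled
    handle.remove()
```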
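When several hooks are registered on the same tensor, each one receives the gradient returned by the previous one (returning `None` means "unchanged"), so the doubling and tripling above can also be written as two small hooks. A sketch assuming the same `w`, `x` graph; it builds a fresh graph because the graph is freed after a backward pass unless `retain_graph=True` is passed.

```python
import torch

w = torch.tensor([1.], requires_grad=True)
x = torch.tensor([2.], requires_grad=True)
y = torch.mul(torch.add(w, x), torch.add(w, 1))  # dy/dw = (w + 1) + (w + x) = 5

h1 = w.register_hook(lambda grad: grad * 2)  # 5 -> 10
h2 = w.register_hook(lambda grad: grad * 3)  # 10 -> 30
y.backward()
print(w.grad)  # tensor([30.])

h1.remove()
h2.remove()
```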

Example from the official documentation:

```python
import torch

v = torch.tensor([0., 0., 0.], requires_grad=True)
h = v.register_hook(lambda grad: grad * 2)  # double the gradient
v.backward(torch.tensor([1., 2., 3.]))
print(v.grad)  # tensor([2., 4., 6.])
h.remove()  # removes the hook
```
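The same mechanism is handy for per-tensor gradient clipping. A minimal sketch, assuming the goal is to clamp each gradient element to [-1, 1] during backpropagation (one possible use, not something the official example shows):

```python
import torch

v = torch.tensor([1., 2., 3.], requires_grad=True)
# clamp every gradient element of v to [-1, 1] before it is accumulated into v.grad
h = v.register_hook(lambda grad: grad.clamp(min=-1.0, max=1.0))

loss = (10 * v).sum()  # the raw gradient would be [10., 10., 10.]
loss.backward()
print(v.grad)  # tensor([1., 1., 1.])
h.remove()
```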

2.2 register_forward_hook

```python
import torch
import torch.nn as nn

flag = 1
if flag:

    class Net(nn.Module):
        def __init__(self):
            super(Net, self).__init__()
            self.conv1 = nn.Conv2d(1, 2, 3)
            self.pool1 = nn.MaxPool2d(2, 2)

        def forward(self, x):
            x = self.conv1(x)
            x = self.pool1(x)
            return x

    # called after conv1.forward(): store its input and its output (the feature map)
    def forward_hook(module, data_input, data_output):
        fmap_block.append(data_output)
        input_block.append(data_input)

    # called before conv1.forward(): data_input is a tuple of the positional inputs
    def forward_pre_hook(module, data_input):
        print("forward_pre_hook input:{}".format(data_input))

    # called during backpropagation with the gradients w.r.t. the module's inputs and outputs
    def backward_hook(module, grad_input, grad_output):
        print("backward hook input:{}".format(grad_input))
        print("backward hook output:{}".format(grad_output))

    # initialize the network: kernel 0 all ones, kernel 1 all twos, zero bias
    net = Net()
    with torch.no_grad():
        net.conv1.weight[0].fill_(1)
        net.conv1.weight[1].fill_(2)
        net.conv1.bias.zero_()

    # register hooks
    fmap_block = list()
    input_block = list()
    net.conv1.register_forward_hook(forward_hook)
    net.conv1.register_forward_pre_hook(forward_pre_hook)
    # register_backward_hook is deprecated in recent PyTorch releases in favor of register_full_backward_hook
    net.conv1.register_backward_hook(backward_hook)

    # inference
    fake_img = torch.ones((1, 1, 4, 4))  # batch size * channel * H * W
    output = net(fake_img)

    loss_fnc = nn.L1Loss()
    target = torch.randn_like(output)
    loss = loss_fnc(output, target)  # L1Loss expects (input, target)
    loss.backward()

    # inspect the results (conv1 output: channel 0 is all 9s, channel 1 is all 18s)
    print("output shape: {}\noutput value: {}\n".format(output.shape, output))
    print("feature maps shape: {}\nfeature maps value: {}\n".format(fmap_block[0].shape, fmap_block[0]))
    print("input shape: {}\ninput value: {}".format(input_block[0][0].shape, input_block[0]))
```
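The hooks above are never removed, so they keep firing on every later call to `net`. A minimal sketch of a reusable pattern, assuming you want the output of every `nn.Conv2d` layer keyed by its module name (unique, unlike keys derived from weight shapes): keep the handles returned by `register_forward_hook` and remove them once the maps are collected. `capture_conv_outputs` is a hypothetical helper, not a PyTorch API.

```python
import torch
import torch.nn as nn

def capture_conv_outputs(model, inputs):
    """Run model once and return {module name: output} for every nn.Conv2d (hypothetical helper)."""
    fmaps, handles = {}, []
    for name, module in model.named_modules():
        if isinstance(module, nn.Conv2d):
            # bind the current name through a default argument so each hook writes to its own key
            def hook(m, inp, out, name=name):
                fmaps[name] = out.detach()
            handles.append(module.register_forward_hook(hook))
    try:
        with torch.no_grad():
            model(inputs)
    finally:
        for h in handles:
            h.remove()  # the hooks no longer fire on later forward passes
    return fmaps

# two convolutions with identical weight shapes would collide if keyed by weight.shape
model = nn.Sequential(nn.Conv2d(2, 2, 3, padding=1), nn.ReLU(), nn.Conv2d(2, 2, 3, padding=1))
fmaps = capture_conv_outputs(model, torch.ones(1, 2, 8, 8))
print({k: tuple(v.shape) for k, v in fmaps.items()})  # {'0': (1, 2, 8, 8), '2': (1, 2, 8, 8)}
```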

2.3 register_forward_pre_hook
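`register_forward_pre_hook(hook)` registers a function that runs before `forward()` with the signature `hook(module, args)`, where `args` is a tuple of the positional inputs. If the hook returns a value, it replaces the input (a single non-tuple value is wrapped into a tuple). A minimal sketch, assuming we want to scale a layer's input by 2 without touching the layer itself (`nn.Identity` stands in for any module):

```python
import torch
import torch.nn as nn

layer = nn.Identity()

def double_input(module, args):
    # args is a tuple of positional inputs; the returned value replaces them
    return args[0] * 2

handle = layer.register_forward_pre_hook(double_input)
out_hooked = layer(torch.ones(3))
print(out_hooked)  # tensor([2., 2., 2.])

handle.remove()
out_plain = layer(torch.ones(3))
print(out_plain)  # tensor([1., 1., 1.])
```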

2.4 register_backward_hook
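`Module.register_backward_hook` is deprecated in recent PyTorch releases in favor of `register_full_backward_hook(hook)`, whose hook has the signature `hook(module, grad_input, grad_output)`: `grad_output` holds the gradients w.r.t. the module's outputs and `grad_input` those w.r.t. its positional inputs. A minimal sketch with a bias-free `nn.Linear` layer, assuming PyTorch 1.8 or later:

```python
import torch
import torch.nn as nn

layer = nn.Linear(3, 2, bias=False)
grads = {}

def backward_hook(module, grad_input, grad_output):
    # both arguments are tuples of tensors (None for inputs that do not require grad)
    grads["in"] = grad_input[0].clone()
    grads["out"] = grad_output[0].clone()

handle = layer.register_full_backward_hook(backward_hook)

x = torch.randn(1, 3, requires_grad=True)
layer(x).sum().backward()
handle.remove()

# d(sum)/d(output) is all ones, and the gradient w.r.t. the input is ones @ W
print(grads["out"])                                            # tensor([[1., 1.]])
print(torch.allclose(grads["in"], torch.ones(1, 2) @ layer.weight))  # True
print(torch.allclose(grads["in"], x.grad))                     # True
```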

3. The CAM Algorithm
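CAM (Class Activation Mapping) applies to networks whose last convolutional feature maps f_k are global-average-pooled and fed to a linear classifier; the map for class c is M_c(x, y) = Σ_k w_k^c · f_k(x, y), where w_k^c is the classifier weight connecting channel k to class c. A minimal sketch of that computation with a forward hook, assuming a small randomly initialized toy network (`TinyCAMNet` is hypothetical and stands in for a real backbone such as a ResNet):

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

class TinyCAMNet(nn.Module):
    """Hypothetical toy model: conv features -> global average pooling -> linear classifier."""
    def __init__(self, num_classes=3):
        super().__init__()
        self.features = nn.Sequential(nn.Conv2d(3, 8, 3, padding=1), nn.ReLU())
        self.gap = nn.AdaptiveAvgPool2d(1)
        self.fc = nn.Linear(8, num_classes)

    def forward(self, x):
        x = self.gap(self.features(x)).flatten(1)
        return self.fc(x)

torch.manual_seed(0)
model = TinyCAMNet().eval()

fmaps = []
# capture the last convolutional feature maps f_k (output of the features block)
handle = model.features.register_forward_hook(lambda m, i, o: fmaps.append(o.detach()))

img = torch.randn(1, 3, 16, 16)
with torch.no_grad():
    logits = model(img)
handle.remove()

c = logits.argmax(dim=1).item()          # explain the predicted class
f = fmaps[0][0]                          # (K, H, W)
w_c = model.fc.weight[c].detach()        # (K,)
cam = torch.einsum("k,khw->hw", w_c, f)  # M_c(x, y) = sum_k w_k^c * f_k(x, y)

# upsample to the input size and min-max normalize to [0, 1] for overlaying on the image
cam_up = F.interpolate(cam[None, None], size=img.shape[-2:], mode="bilinear", align_corners=False)[0, 0]
cam_up = (cam_up - cam_up.min()) / (cam_up.max() - cam_up.min() + 1e-8)
print(cam.shape, cam_up.shape)
```

Because pooling and the classifier are both linear, the mean of M_c over all positions plus the bias b_c reproduces the logit for class c, which is why CAM needs this GAP + linear head. Grad-CAM removes that requirement by weighting the maps with gradients, which can be collected with the backward hooks from section 2.4.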

4. Complete Code

hook_methods.py

```python
# -*- coding:utf-8 -*-
"""
@file name  : hook_methods.py
@brief      : hook functions in PyTorch
"""
import torch
import torch.nn as nn
from tools.common_tools import set_seed  # helper from the author's project

set_seed(1)  # set the random seed

# ----------------------------------- 1 tensor hook 1 -----------------------------------
flag = 0
# flag = 1
if flag:
    w = torch.tensor([1.], requires_grad=True)
    x = torch.tensor([2.], requires_grad=True)
    a = torch.add(w, x)
    b = torch.add(w, 1)
    y = torch.mul(a, b)

    a_grad = list()

    def grad_hook(grad):
        a_grad.append(grad)  # returning None leaves the gradient unchanged

    handle = a.register_hook(grad_hook)  # register the hook on the corresponding tensor
    y.backward()

    # check the gradients (a, b and y are non-leaf tensors, so their .grad is None)
    print("gradient:", w.grad, x.grad, a.grad, b.grad, y.grad)
    print("a_grad[0]: ", a_grad[0])
    handle.remove()

# ----------------------------------- 2 tensor hook 2 -----------------------------------
flag = 0
# flag = 1
if flag:
    w = torch.tensor([1.], requires_grad=True)
    x = torch.tensor([2.], requires_grad=True)
    a = torch.add(w, x)
    b = torch.add(w, 1)
    y = torch.mul(a, b)

    def grad_hook(grad):
        return grad * 2  # the returned tensor replaces the original gradient

    handle = w.register_hook(grad_hook)
    y.backward(retain_graph=True)  # keep the graph for the second backward pass below

    # check the gradient
    print("w.grad: ", w.grad)  # tensor([10.]): dy/dw = 5, doubled by the hook
    handle.remove()

    #######################
    w.grad.zero_()  # .grad accumulates across backward passes, so reset it first

    def grad_hook(grad):
        grad = grad * 2
        return grad * 3

    handle = w.register_hook(grad_hook)
    y.backward()

    # check the gradient
    print("w.grad: ", w.grad)  # tensor([30.]): the original gradient 5, doubled, then tripled
    handle.remove()

# ----------------------------------- 3 Module.register_forward_hook and pre hook -----------------------------------
# flag = 0
flag = 1
if flag:

    class Net(nn.Module):
        def __init__(self):
            super(Net, self).__init__()
            self.conv1 = nn.Conv2d(1, 2, 3)
            self.pool1 = nn.MaxPool2d(2, 2)

        def forward(self, x):
            x = self.conv1(x)
            x = self.pool1(x)
            return x

    def forward_hook(module, data_input, data_output):
        fmap_block.append(data_output)
        input_block.append(data_input)

    def forward_pre_hook(module, data_input):
        print("forward_pre_hook input:{}".format(data_input))

    def backward_hook(module, grad_input, grad_output):
        print("backward hook input:{}".format(grad_input))
        print("backward hook output:{}".format(grad_output))

    # initialize the network
    net = Net()
    with torch.no_grad():
        net.conv1.weight[0].fill_(1)
        net.conv1.weight[1].fill_(2)
        net.conv1.bias.zero_()

    # register hooks
    fmap_block = list()
    input_block = list()
    net.conv1.register_forward_hook(forward_hook)
    net.conv1.register_forward_pre_hook(forward_pre_hook)
    # register_backward_hook is deprecated in recent PyTorch releases in favor of register_full_backward_hook
    net.conv1.register_backward_hook(backward_hook)

    # inference
    fake_img = torch.ones((1, 1, 4, 4))  # batch size * channel * H * W
    output = net(fake_img)

    loss_fnc = nn.L1Loss()
    target = torch.randn_like(output)
    loss = loss_fnc(output, target)  # L1Loss expects (input, target)
    loss.backward()

    # inspect the results
    print("output shape: {}\noutput value: {}\n".format(output.shape, output))
    print("feature maps shape: {}\nfeature maps value: {}\n".format(fmap_block[0].shape, fmap_block[0]))
    print("input shape: {}\ninput value: {}".format(input_block[0][0].shape, input_block[0]))
```

hook_fmap_vis.py

```python
# -*- coding:utf-8 -*-
"""
@file name  : hook_fmap_vis.py
@brief      : visualize feature maps with hook functions
"""
import torch
import torch.nn as nn
import numpy as np
from PIL import Image
import torchvision.transforms as transforms
import torchvision.utils as vutils
from torch.utils.tensorboard import SummaryWriter
from tools.common_tools import set_seed  # helper from the author's project
import torchvision.models as models

set_seed(1)  # set the random seed

# ----------------------------------- feature map visualization -----------------------------------
# flag = 0
flag = 1
if flag:
    writer = SummaryWriter(comment='test_your_comment', filename_suffix="_test_your_filename_suffix")

    # data
    path_img = "./lena.png"  # your path to image
    normMean = [0.49139968, 0.48215827, 0.44653124]
    normStd = [0.24703233, 0.24348505, 0.26158768]

    norm_transform = transforms.Normalize(normMean, normStd)
    img_transforms = transforms.Compose([
        transforms.Resize((224, 224)),
        transforms.ToTensor(),
        norm_transform
    ])

    img_pil = Image.open(path_img).convert('RGB')
    if img_transforms is not None:
        img_tensor = img_transforms(img_pil)
    img_tensor.unsqueeze_(0)  # chw --> bchw

    # model: pretrained AlexNet (torchvision >= 0.13; older versions use models.alexnet(pretrained=True))
    alexnet = models.alexnet(weights=models.AlexNet_Weights.IMAGENET1K_V1)

    # register a forward hook on every convolution layer
    fmap_dict = dict()
    for name, sub_module in alexnet.named_modules():
        if isinstance(sub_module, nn.Conv2d):
            key_name = str(sub_module.weight.shape)
            fmap_dict.setdefault(key_name, list())

            def hook_func(m, i, o):  # module, input, output
                # AlexNet's ReLU(inplace=True) after each conv later overwrites o in place,
                # so the stored map ends up holding the post-ReLU values
                key_name = str(m.weight.shape)
                fmap_dict[key_name].append(o)

            sub_module.register_forward_hook(hook_func)

    # forward (no gradients are needed just to look at feature maps)
    with torch.no_grad():
        output = alexnet(img_tensor)

    # add image
    for layer_name, fmap_list in fmap_dict.items():
        fmap = fmap_list[0]
        fmap = fmap.transpose(0, 1)  # (1, C, H, W) -> (C, 1, H, W): one grayscale image per channel
        nrow = int(np.sqrt(fmap.shape[0]))
        fmap_grid = vutils.make_grid(fmap, normalize=True, scale_each=True, nrow=nrow)
        writer.add_image('feature map in {}'.format(layer_name), fmap_grid, global_step=322)

    writer.close()
```

![[20] Deep Learning PyTorch - Hook Functions and the CAM Algorithm, Figure 1](http://img.e-com-net.com/image/info8/ee597e5629d74c51bed8d59e515832a8.jpg)

![[20] Deep Learning PyTorch - Hook Functions and the CAM Algorithm, Figure 2](http://img.e-com-net.com/image/info8/eb5ea468be8c4d57ad63240b2bde33eb.jpg)

![[20] Deep Learning PyTorch - Hook Functions and the CAM Algorithm, Figure 3](http://img.e-com-net.com/image/info8/61614fda025d47df8417beda71274abd.jpg)

![[20] Deep Learning PyTorch - Hook Functions and the CAM Algorithm, Figure 4](http://img.e-com-net.com/image/info8/1024855ce1cd4cdda3ae1ad2690fc9ca.jpg)

![[20] Deep Learning PyTorch - Hook Functions and the CAM Algorithm, Figure 5](http://img.e-com-net.com/image/info8/a3cbabdb52fb4a20adf1a71ad1c1a9bd.jpg)

![[20] Deep Learning PyTorch - Hook Functions and the CAM Algorithm, Figure 6](http://img.e-com-net.com/image/info8/33477308505a42c5af04ea896fce37cb.jpg)

![[20] Deep Learning PyTorch - Hook Functions and the CAM Algorithm, Figure 7](http://img.e-com-net.com/image/info8/f3291cad0b8f45eb934bdadfea213d86.jpg)

![[20] Deep Learning PyTorch - Hook Functions and the CAM Algorithm, Figure 8](http://img.e-com-net.com/image/info8/1e5e7a29e2e34ff1868510b2af188493.jpg)

![[20] Deep Learning PyTorch - Hook Functions and the CAM Algorithm, Figure 9](http://img.e-com-net.com/image/info8/b2531864213f4818835e2dccff768503.jpg)

![[20] Deep Learning PyTorch - Hook Functions and the CAM Algorithm, Figure 10](http://img.e-com-net.com/image/info8/d96487e16ee747a3b8be6a41b7e0fcbc.jpg)

![[20] Deep Learning PyTorch - Hook Functions and the CAM Algorithm, Figure 11](http://img.e-com-net.com/image/info8/9e84ff81ccc848eab7fe9161290b8443.jpg)

![[20] Deep Learning PyTorch - Hook Functions and the CAM Algorithm, Figure 12](http://img.e-com-net.com/image/info8/5884ac23ba124b34b4a1cc7cadb12f0a.jpg)
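The feature map sizes that appear in TensorBoard follow from the convolution/pooling output-size formula H_out = floor((H_in + 2p - k) / s) + 1. A sketch that rebuilds AlexNet's `features` block with random weights (assuming the layer configuration used by torchvision's `alexnet`, so no download is needed) and uses forward hooks to check the formula for a 224×224 input; `conv_out` and `make_hook` are illustrative helpers.

```python
import torch
import torch.nn as nn

def conv_out(size, k, s=1, p=0):
    """Output size of a convolution or pooling layer along one spatial dimension."""
    return (size + 2 * p - k) // s + 1

# same layer configuration as torchvision's AlexNet `features` block (random weights)
features = nn.Sequential(
    nn.Conv2d(3, 64, kernel_size=11, stride=4, padding=2), nn.ReLU(inplace=True),
    nn.MaxPool2d(kernel_size=3, stride=2),
    nn.Conv2d(64, 192, kernel_size=5, padding=2), nn.ReLU(inplace=True),
    nn.MaxPool2d(kernel_size=3, stride=2),
    nn.Conv2d(192, 384, kernel_size=3, padding=1), nn.ReLU(inplace=True),
    nn.Conv2d(384, 256, kernel_size=3, padding=1), nn.ReLU(inplace=True),
    nn.Conv2d(256, 256, kernel_size=3, padding=1), nn.ReLU(inplace=True),
    nn.MaxPool2d(kernel_size=3, stride=2),
)

shapes = {}

def make_hook(name):
    def hook(module, inp, out):
        shapes[name] = tuple(out.shape[1:])  # (C, H, W) of this conv layer's output
    return hook

for name, m in features.named_children():
    if isinstance(m, nn.Conv2d):
        m.register_forward_hook(make_hook(name))

with torch.no_grad():
    features(torch.randn(1, 3, 224, 224))

print(shapes)
# {'0': (64, 55, 55), '3': (192, 27, 27), '6': (384, 13, 13), '8': (256, 13, 13), '10': (256, 13, 13)}
print(conv_out(224, 11, 4, 2), conv_out(55, 3, 2), conv_out(27, 3, 2))  # 55 27 13
```

For the first layer, for example, (224 + 2·2 - 11) // 4 + 1 = 55, and with 64 channels `nrow = int(np.sqrt(64))` lays the maps out as an 8×8 grid.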