PyTorch lr_scheduler: usage and visualization of each scheduler


Reference blogs

- https://www.pythonheidong.com/blog/article/511529/e49e08939f608d9736fe/

- https://blog.csdn.net/xiaotuzigaga/article/details/87879198

- https://blog.csdn.net/baoxin1100/article/details/107446538

A few schedulers I did not know well before and rarely used

- CyclicLR

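I have never used this scheduler, but I expect it to work well and will try it later. Its mode argument takes three values. "triangular" keeps the amplitude constant across cycles. "triangular2" halves the amplitude after every cycle, i.e. a scale factor of 0.5. "exp_range" lets you choose the decay yourself through the "gamma" argument: the amplitude is scaled by gamma**iteration, so the decay is applied at every iteration rather than once per cycle.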

import torch
import matplotlib.pyplot as plt

lr = 0.001
epochs = 10
iters = 4
cyc_epoch = 1

# mode can be triangular, triangular2, or exp_range
# Triangular schedule: 0.0001 is the minimum lr (base_lr), 0.001 is the maximum lr (max_lr),
# and cyc_epoch*iters is step_size_up, the number of iterations in the rising half of a cycle;
# the falling half defaults to the same length, so a full cycle takes 2*cyc_epoch*iters iterations
scheduler_cyc0 = torch.optim.lr_scheduler.CyclicLR(torch.optim.SGD([torch.ones(1)], lr), 0.0001, 0.001, cyc_epoch*iters, mode='triangular')
scheduler_cyc1 = torch.optim.lr_scheduler.CyclicLR(torch.optim.SGD([torch.ones(1)], lr), 0.0001, 0.001, cyc_epoch*iters, mode='triangular2')
scheduler_cyc2 = torch.optim.lr_scheduler.CyclicLR(torch.optim.SGD([torch.ones(1)], lr), 0.0001, 0.001, cyc_epoch*iters, mode='exp_range', gamma=0.8)

triangular = []
triangular2 = []
exp_range = []

for epoch in range(epochs):
    for i in range(iters):
        # get_last_lr() returns a list with one lr per parameter group, e.g. [0.000325]
        triangular += scheduler_cyc0.get_last_lr()  # for lists, += and append behave differently
        triangular2 += scheduler_cyc1.get_last_lr()
        exp_range += scheduler_cyc2.get_last_lr()
        for scheduler in (scheduler_cyc0, scheduler_cyc1, scheduler_cyc2):
            scheduler.optimizer.step()  # step the optimizer first to avoid the call-order warning
            scheduler.step()

print(triangular)
print(triangular2)
print(exp_range)

x = list(range(len(triangular)))
plt.figure(figsize=(12,7))
plt.plot(x, triangular, "r")
plt.plot(x, triangular2, "g--")
plt.plot(x, exp_range, "b-.")
plt.legend(['triangular','triangular2','exp_range'], fontsize=20)
plt.xlabel('iters', size=15)
plt.ylabel('lr', size=15)
# plt.savefig("CyclicLR.png")
plt.show()

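A small detail worth pointing out here, which I had noticed before:

a, b = [1], [2]
a.append(b)  # a is now [1, [2]]: append adds b as a single nested element

a, b = [1], [2]
a += b  # a is now [1, 2]: += extends a with the elements of b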

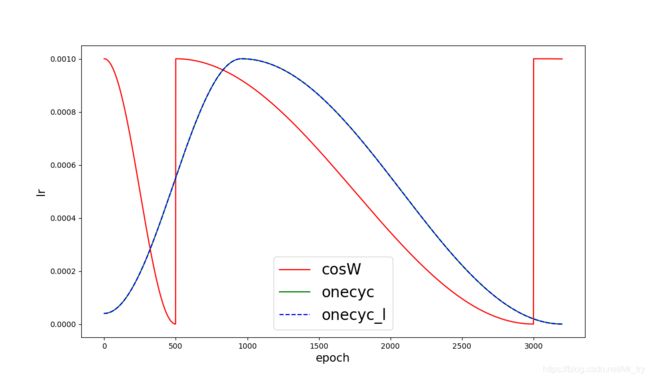
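The three modes can also be reproduced without PyTorch. The following is a minimal sketch of the cyclical schedule as described in the PyTorch documentation for CyclicLR, assuming the rising and falling halves have the same length; the function name `cyclic_lr` and its argument names are hypothetical and chosen only for illustration.

```python
import math

def cyclic_lr(it, base_lr, max_lr, step_size, mode="triangular", gamma=1.0):
    """Learning rate at iteration `it` for a symmetric triangular cycle.

    `step_size` is the number of iterations in half a cycle.
    """
    cycle = math.floor(1 + it / (2 * step_size))      # 1-based cycle index
    x = abs(it / step_size - 2 * cycle + 1)           # 1 at the cycle ends, 0 at the peak
    height = (max_lr - base_lr) * max(0.0, 1.0 - x)   # unscaled triangle
    if mode == "triangular":
        scale = 1.0                                   # constant amplitude
    elif mode == "triangular2":
        scale = 1.0 / (2 ** (cycle - 1))              # amplitude halves every cycle
    elif mode == "exp_range":
        scale = gamma ** it                           # amplitude decays every iteration
    else:
        raise ValueError(f"unknown mode: {mode}")
    return base_lr + height * scale

# Peaks of the first three cycles with base_lr=1e-4, max_lr=1e-3, step_size=4
peaks_tri = [cyclic_lr(it, 1e-4, 1e-3, 4, "triangular") for it in (4, 12, 20)]
peaks_tri2 = [cyclic_lr(it, 1e-4, 1e-3, 4, "triangular2") for it in (4, 12, 20)]
print(peaks_tri)
print(peaks_tri2)
```

With "triangular" every peak reaches max_lr, while with "triangular2" the distance between the peak and base_lr is halved from one cycle to the next.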

- CosineAnnealingWarmRestarts and OneCycleLR

lr = 0.001
epochs = 100
iters = 32

# T_0 is the length of the first cosine-annealing cycle and T_mult is the factor by which each following cycle grows.
# For example, T_0=5, T_mult=5 gives cycle lengths 5, 25, 125, so the restarts happen at [5, 5+25, 5+25+125].
scheduler_cosW = torch.optim.lr_scheduler.CosineAnnealingWarmRestarts(torch.optim.SGD([torch.ones(1)], lr), T_0=500, T_mult=5)

# The lr starts at initial_lr = max_lr/div_factor and, over pct_start*total_steps steps (total_steps = epochs*steps_per_epoch),
# rises to max_lr along a cosine curve (linear is also available),
# then anneals along a cosine curve (or linearly) down to min_lr = initial_lr/final_div_factor
scheduler_onecyc = torch.optim.lr_scheduler.OneCycleLR(torch.optim.SGD([torch.ones(1)], lr), max_lr=lr, steps_per_epoch=iters, epochs=epochs, anneal_strategy='cos')
scheduler_onecyc_linear = torch.optim.lr_scheduler.OneCycleLR(torch.optim.SGD([torch.ones(1)], lr), max_lr=lr, steps_per_epoch=iters, epochs=epochs, anneal_strategy='linear')
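How T_0 and T_mult determine the restart positions can be checked with a few lines of plain Python. This is only a sketch of the arithmetic; the helper name `restart_points` is hypothetical and not part of PyTorch.

```python
def restart_points(T_0, T_mult, n_cycles):
    """Iteration indices at which the first `n_cycles` cosine cycles end."""
    points, length, end = [], T_0, 0
    for _ in range(n_cycles):
        end += length        # this cycle ends (and the next one restarts) here
        points.append(end)
        length *= T_mult     # every following cycle is T_mult times longer
    return points

print(restart_points(5, 5, 3))    # [5, 30, 155]
print(restart_points(500, 5, 3))  # [500, 3000, 15500]
```

With T_0=500, T_mult=5 and epochs*iters = 3200 steps in total, two restarts (at steps 500 and 3000) fall inside the training run.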

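As the plot shows, OneCycleLR is probably what we usually mean by warmup. In my plot the anneal_strategy argument seemed to make no difference, with both curves looking like cosine annealing; according to the PyTorch documentation, 'linear' should produce linear ramps, so this observation deserves a re-check rather than being taken as the scheduler's behavior. I think OneCycleLR can be used directly from now on. Other schedulers can also be given a warmup phase with a small modification.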
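One way to check the effect of anneal_strategy without relying on a plot is to compare the two schedules numerically. The sketch below assumes a dummy one-parameter SGD optimizer and a short run of 100 steps; the helper name `one_cycle_lrs` is hypothetical.

```python
import torch
from torch.optim.lr_scheduler import OneCycleLR

def one_cycle_lrs(anneal_strategy, max_lr=1e-3, total_steps=100):
    """Collect the lr used at every step of one OneCycleLR run."""
    param = torch.zeros(1, requires_grad=True)       # dummy parameter
    optimizer = torch.optim.SGD([param], lr=max_lr)
    scheduler = OneCycleLR(optimizer, max_lr=max_lr, total_steps=total_steps,
                           anneal_strategy=anneal_strategy)
    lrs = []
    for step in range(total_steps):
        lrs.append(optimizer.param_groups[0]["lr"])
        if step < total_steps - 1:                   # stay within total_steps
            optimizer.step()
            scheduler.step()
    return lrs

cos_lrs = one_cycle_lrs("cos")
linear_lrs = one_cycle_lrs("linear")
max_gap = max(abs(a - b) for a, b in zip(cos_lrs, linear_lrs))
print(f"largest difference between the two schedules: {max_gap:.2e}")
```

If the printed gap is clearly above zero, the two strategies do produce different curves and an overlap in a plot comes from somewhere else, for example from plotting the same list twice.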

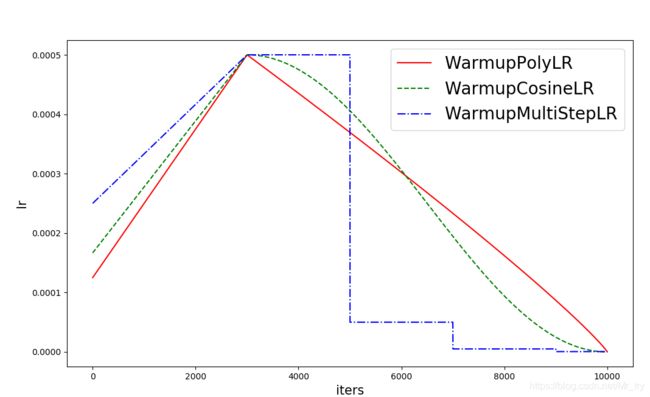

- Warmup schedulers, adapted from https://github.com/xiaoyufenfei/Efficient-Segmentation-Networks/blob/master/utils/scheduler/lr_scheduler.py

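import math
from bisect import bisect_right

from torch.optim.lr_scheduler import MultiStepLR, _LRScheduler

class WarmupMultiStepLR(MultiStepLR):
    r"""MultiStepLR with a linear warmup phase.

    Note: max_iter = epochs * steps_per_epoch

    Args:
        optimizer (Optimizer): Wrapped optimizer.
        max_iter (int): The total number of steps.
        milestones (list): List of iter indices. Must be increasing.
        gamma (float): Multiplicative factor of learning rate decay. Default: 0.1.
        pct_start (float): The percentage of the cycle (in number of steps) spent
            increasing the learning rate.
            Default: 0.3
        warmup_factor (float): Fraction of the base lr used at step 0; the lr then
            grows linearly to the base lr during warmup. Default: 0.5.
        last_epoch (int): The index of last epoch. Default: -1.
    """

    def __init__(self, optimizer, max_iter, milestones, gamma=0.1, pct_start=0.3, warmup_factor=1.0 / 2,
                 last_epoch=-1):
        # These attributes must exist before super().__init__(), which already calls get_lr()
        self.warmup_factor = warmup_factor
        self.warmup_iters = int(pct_start * max_iter)
        self.sorted_milestones = sorted(milestones)
        super().__init__(optimizer, milestones, gamma, last_epoch)

    def get_lr(self):
        if self.last_epoch <= self.warmup_iters:
            # Linear interpolation of the factor from warmup_factor (alpha=0) to 1 (alpha=1)
            alpha = self.last_epoch / self.warmup_iters
            warmup_factor = self.warmup_factor * (1 - alpha) + alpha
            return [lr * warmup_factor for lr in self.base_lrs]
        else:
            # Closed form: multiply by gamma once for every milestone already passed.
            # Unlike MultiStepLR.get_lr(), this does not depend on the optimizer's current lr.
            n_passed = bisect_right(self.sorted_milestones, self.last_epoch)
            return [lr * self.gamma ** n_passed for lr in self.base_lrs]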

class WarmupCosineLR(_LRScheduler):
    def __init__(self, optimizer, max_iter, pct_start=0.3, warmup_factor=1.0 / 3,
                 eta_min=0, last_epoch=-1):
        self.warmup_factor = warmup_factor
        self.warmup_iters = int(pct_start * max_iter)
        self.max_iter, self.eta_min = max_iter, eta_min
        super().__init__(optimizer, last_epoch)  # forward last_epoch instead of dropping it

    def get_lr(self):
        if self.last_epoch <= self.warmup_iters:
            # Linear warmup from warmup_factor * base_lr to base_lr
            alpha = self.last_epoch / self.warmup_iters
            warmup_factor = self.warmup_factor * (1 - alpha) + alpha
            return [lr * warmup_factor for lr in self.base_lrs]
        else:
            # Cosine annealing from base_lr down to eta_min over the remaining iterations
            return [self.eta_min + (base_lr - self.eta_min) *
                    (1 + math.cos(
                        math.pi * (self.last_epoch - self.warmup_iters) / (self.max_iter - self.warmup_iters))) / 2
                    for base_lr in self.base_lrs]

class WarmupPolyLR(_LRScheduler):
    def __init__(self, optimizer, T_max, pct_start=0.3, warmup_factor=1.0 / 4,
                 eta_min=0, power=0.9):
        self.warmup_factor = warmup_factor
        self.warmup_iters = int(pct_start * T_max)
        self.power = power
        self.T_max, self.eta_min = T_max, eta_min
        super().__init__(optimizer)

    def get_lr(self):
        if self.last_epoch <= self.warmup_iters:
            # Linear warmup from warmup_factor * base_lr to base_lr
            alpha = self.last_epoch / self.warmup_iters
            warmup_factor = self.warmup_factor * (1 - alpha) + alpha
            return [lr * warmup_factor for lr in self.base_lrs]
        else:
            # Polynomial decay from base_lr down to eta_min over the remaining iterations
            return [self.eta_min + (base_lr - self.eta_min) *
                    math.pow(1 - (self.last_epoch - self.warmup_iters) / (self.T_max - self.warmup_iters),
                             self.power) for base_lr in self.base_lrs]

if __name__ == '__main__':
    import matplotlib.pyplot as plt
    import torch

    max_iter = 10000
    lr = 5e-4

    def make_optimizer():
        # Each scheduler gets its own optimizer; with a shared one they would overwrite each other's lr
        return torch.optim.SGD([torch.ones(1)], lr)

    scheduler_WP = WarmupPolyLR(make_optimizer(), T_max=max_iter)
    scheduler_WS = WarmupCosineLR(make_optimizer(), max_iter)
    scheduler_WM = WarmupMultiStepLR(make_optimizer(), max_iter, [5000, 7000, 9000])
    schedulers = [scheduler_WP, scheduler_WS, scheduler_WM]

    lrs_wp = []
    lrs_ws = []
    lrs_wm = []

    for cur_iter in range(max_iter):
        # get_last_lr() returns the lr currently in use, one entry per parameter group
        lrs_wp += scheduler_WP.get_last_lr()
        lrs_ws += scheduler_WS.get_last_lr()
        lrs_wm += scheduler_WM.get_last_lr()
        for scheduler in schedulers:
            scheduler.optimizer.step()  # optimizer first, then scheduler
            scheduler.step()

    x = list(range(len(lrs_wm)))
    plt.figure(figsize=(12,7))
    plt.plot(x, lrs_wp, "r",
             x, lrs_ws, "g--",
             x, lrs_wm, "b-.")
    plt.legend(['WarmupPolyLR','WarmupCosineLR','WarmupMultiStepLR'], fontsize=20)
    plt.xlabel('iters', size=15)
    plt.ylabel('lr', size=15)
    # plt.savefig("WarmupLR.png")
    plt.show()
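A warmup phase can also be attached without subclassing, by passing a multiplier function to the built-in LambdaLR. The following is a minimal sketch that assumes the same linear-warmup-then-cosine shape as WarmupCosineLR with eta_min=0; the helper name `warmup_cosine_factor` and the short run of 100 steps are choices made only for illustration.

```python
import math
import torch
from torch.optim.lr_scheduler import LambdaLR

def warmup_cosine_factor(step, max_iter, pct_start=0.3, warmup_factor=1.0 / 3):
    """Multiplier applied to the base lr at `step`: linear warmup, then cosine decay."""
    warmup_iters = int(pct_start * max_iter)
    if step < warmup_iters:
        alpha = step / warmup_iters                  # goes from 0 to 1 during warmup
        return warmup_factor * (1 - alpha) + alpha
    progress = (step - warmup_iters) / max(1, max_iter - warmup_iters)
    return 0.5 * (1 + math.cos(math.pi * min(1.0, progress)))

max_iter = 100
base_lr = 5e-4
optimizer = torch.optim.SGD([torch.zeros(1, requires_grad=True)], lr=base_lr)
scheduler = LambdaLR(optimizer, lr_lambda=lambda step: warmup_cosine_factor(step, max_iter))

lrs = []
for _ in range(max_iter):
    lrs.append(scheduler.get_last_lr()[0])  # lr in use for this step
    optimizer.step()
    scheduler.step()
```

LambdaLR multiplies the optimizer's initial lr by the returned factor, so the function only has to describe the shape of the curve, not the absolute values.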

- other

class MyLRScheduler(object):
    '''
    Class that defines a cyclic learning rate that decays the learning rate linearly till the end of a cycle and then
    restarts at the maximum value. After ep_cycle epochs the learning rate decays linearly toward zero.
    '''
    def __init__(self, initial=0.1, cycle_len=5, ep_cycle=50, ep_max=100):
        super(MyLRScheduler, self).__init__()
        self.min_lr = initial  # minimum learning rate
        self.m = cycle_len
        self.ep_cycle = ep_cycle
        self.ep_max = ep_max
        self.poly_start = initial
        self.step = initial / self.ep_cycle  # per-epoch decrement used after ep_cycle
        print('Using Cyclic LR Scheduler with warm restarts and poly step '
              + str(self.step))

    def get_lr(self, epoch):
        if epoch == 0:
            current_lr = self.min_lr
        elif 0 < epoch and epoch <= self.ep_cycle:
            # Inside a cycle the lr drops from min_lr * m by min_lr per epoch, then restarts
            counter = (epoch - 1) % self.m
            current_lr = round((self.min_lr * self.m) - (counter * self.min_lr), 5)
        else:
            current_lr = round(self.poly_start - (epoch - self.ep_cycle) * self.step, 8)
            # current_lr = round(self.poly_start * (1 - (epoch-self.ep_cycle) / (self.ep_max-self.ep_cycle)) ** 0.9, 8)
        return current_lr

class WarmupPoly(object):
    '''
    Class that defines a polynomial warmup followed by a polynomial decay: the learning rate rises to init_lr during
    the first warmup_ratio * total_ep epochs and then decays toward zero over the remaining epochs.
    '''
    def __init__(self, init_lr, total_ep, warmup_ratio=0.05, poly_pow=0.98):
        super(WarmupPoly, self).__init__()
        self.init_lr = init_lr
        self.total_ep = total_ep
        self.warmup_ep = int(warmup_ratio * total_ep)
        print("warmup until " + str(self.warmup_ep))
        self.poly_pow = poly_pow

    def get_lr(self, epoch):
        if epoch < self.warmup_ep:
            # Warmup: the lr reaches init_lr at the last warmup epoch
            curr_lr = self.init_lr * pow((epoch + 1) / self.warmup_ep, self.poly_pow)
        else:
            # Decay: polynomial decay over the epochs that remain after warmup
            curr_lr = self.init_lr * pow(1 - (epoch - self.warmup_ep) / (self.total_ep - self.warmup_ep), self.poly_pow)
        return curr_lr

if __name__ == '__main__':
    import matplotlib.pyplot as plt

    max_epochs = 300
    lrSched = MyLRScheduler(initial=0.0001, cycle_len=10, ep_cycle=150, ep_max=300)
    lrSched1 = WarmupPoly(1e-3, max_epochs, poly_pow=0.95)

    x = []
    y = []
    y1 = []
    for i in range(max_epochs):
        x.append(i)
        y.append(lrSched.get_lr(i))
        y1.append(lrSched1.get_lr(i))

    print(y[0], y[-1])    # 0.0001 6.7e-07
    print(y1[0], y1[-1])  # 7.633317097623927e-05 4.654760957913004e-06

    plt.figure(figsize=(12,7))
    plt.plot(x, y, "r",
             x, y1, "b-.")
    plt.legend(['cyclic', 'WarmupPoly'], fontsize=20)
    plt.xlabel('epochs', size=15)  # these schedulers are indexed by epoch
    plt.ylabel('lr', size=15)
    plt.savefig("MyLRScheduler.png")
    plt.show()
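Schedulers written as plain objects like the two above do not touch the optimizer themselves, so the value returned by get_lr(epoch) has to be written into the optimizer by hand. A minimal sketch of that step follows; `LinearDecay` is a hypothetical stand-in for any object exposing get_lr(epoch), and `set_lr` is a hypothetical helper.

```python
import torch

class LinearDecay:
    """Hypothetical stand-in for a scheduler object that exposes get_lr(epoch)."""
    def __init__(self, init_lr, total_ep):
        self.init_lr, self.total_ep = init_lr, total_ep

    def get_lr(self, epoch):
        return self.init_lr * (1 - epoch / self.total_ep)

def set_lr(optimizer, lr):
    """Write `lr` into every parameter group of the optimizer."""
    for group in optimizer.param_groups:
        group["lr"] = lr

optimizer = torch.optim.SGD([torch.zeros(1, requires_grad=True)], lr=0.1)
sched = LinearDecay(init_lr=0.1, total_ep=10)

used = []
for epoch in range(10):
    set_lr(optimizer, sched.get_lr(epoch))       # update the lr at the start of the epoch
    used.append(optimizer.param_groups[0]["lr"])
    # ... run one epoch of training with optimizer.step() here ...
```

The same loop works with MyLRScheduler or WarmupPoly in place of LinearDecay, since only the get_lr(epoch) method is used.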

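- I also noticed that SWA (stochastic weight averaging) now appears at the end of the optim section of the PyTorch docs; I will try it on a real task later.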
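The SWA utilities live in torch.optim.swa_utils. Below is a minimal sketch of how they are typically wired together, assuming a toy linear model, random data, and arbitrary values for swa_start, swa_lr and anneal_epochs; none of these choices come from a real task.

```python
import torch
from torch import nn
from torch.optim.swa_utils import AveragedModel, SWALR

torch.manual_seed(0)
model = nn.Linear(4, 1)
optimizer = torch.optim.SGD(model.parameters(), lr=0.1)
swa_model = AveragedModel(model)                 # keeps a running average of the weights
swa_scheduler = SWALR(optimizer, swa_lr=0.01, anneal_epochs=2)
swa_start = 5                                    # assumed epoch at which averaging begins

x = torch.randn(32, 4)                           # toy inputs
y = x.sum(dim=1, keepdim=True)                   # toy targets

for epoch in range(10):
    optimizer.zero_grad()
    loss = nn.functional.mse_loss(model(x), y)
    loss.backward()
    optimizer.step()
    if epoch >= swa_start:
        swa_model.update_parameters(model)       # fold the current weights into the average
        swa_scheduler.step()                     # anneal the lr toward swa_lr

final_lr = optimizer.param_groups[0]["lr"]
prediction = swa_model(x)                        # the averaged model is used for evaluation
```

For models with batch normalization layers, the documentation also provides torch.optim.swa_utils.update_bn to recompute the statistics of the averaged model after training.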