HDFS的Shell操作和API操作

文章目录

-

- HDFS的Shell操作

-

- 1.1 基本语法

- 1.2 命令大全

- 1.3 常用命令实操

-

- 1.3.1 准备工作

- 1.3.2 上传

- 1.3.3 下载

- 1.3.4 HDFS直接操作

- 第二章 HDFS客户端操作

-

- 2.1 准备Windows关于Hadoop的开发环境

- 2.2 HDFS的API操作

-

- 2.2.1 HDFS文件上传(测试参数优先级)

- 2.2.2 HDFS文件下载

- 2.2.3 HDFS删除文件和目录

- 2.2.4 HDFS文件更名和移动

- 2.2.5 HDFS文件详情查看

- 2.2.6 HDFS文件和文件夹判断

HDFS的Shell操作

1.1 基本语法

hadoop fs 具体命令 OR hdfs dfs 具体命令

两个是完全相同的。

1.2 命令大全

[

atbigdata@hadoop102 hadoop-3.1.3]$ bin/hadoop fs

[-appendToFile <localsrc> ... <dst>]

[-cat [-ignoreCrc] <src> ...]

[-checksum <src> ...]

[-chgrp [-R] GROUP PATH...]

[-chmod [-R] <MODE[,MODE]... | OCTALMODE> PATH...]

[-chown [-R] [OWNER][:[GROUP]] PATH...]

[-copyFromLocal [-f] [-p] <localsrc> ... <dst>]

[-copyToLocal [-p] [-ignoreCrc] [-crc] <src> ... <localdst>]

[-count [-q] <path> ...]

[-cp [-f] [-p] <src> ... <dst>]

[-createSnapshot <snapshotDir> [<snapshotName>]]

[-deleteSnapshot <snapshotDir> <snapshotName>]

[-df [-h] [<path> ...]]

[-du [-s] [-h] <path> ...]

[-expunge]

[-get [-p] [-ignoreCrc] [-crc] <src> ... <localdst>]

[-getfacl [-R] <path>]

[-getmerge [-nl] <src> <localdst>]

[-help [cmd ...]]

[-ls [-d] [-h] [-R] [<path> ...]]

[-mkdir [-p] <path> ...]

[-moveFromLocal <localsrc> ... <dst>]

[-moveToLocal <src> <localdst>]

[-mv <src> ... <dst>]

[-put [-f] [-p] <localsrc> ... <dst>]

[-renameSnapshot <snapshotDir> <oldName> <newName>]

[-rm [-f] [-r|-R] [-skipTrash] <src> ...]

[-rmdir [--ignore-fail-on-non-empty] <dir> ...]

[-setfacl [-R] [{-b|-k} {-m|-x <acl_spec>} <path>]|[--set <acl_spec> <path>]]

[-setrep [-R] [-w] <rep> <path> ...]

[-stat [format] <path> ...]

[-tail [-f] <file>]

[-test -[defsz] <path>]

[-text [-ignoreCrc] <src> ...]

[-touchz <path> ...]

[-usage [cmd ...]]

1.3 常用命令实操

1.3.1 准备工作

1)启动Hadoop集群(方便后续的测试)

[atbigdata@hadoop102 hadoop-3.1.3]$ sbin/start-dfs.sh

[atbigdata@hadoop103 hadoop-3.1.3]$ sbin/start-yarn.sh

2)-help:输出这个命令参数

[atbigdata@hadoop102 hadoop-3.1.3]$ hadoop fs -help rm

1.3.2 上传

1)-moveFromLocal:从本地剪切粘贴到HDFS

[atbigdata@hadoop102 hadoop-3.1.3]$ touch kongming.txt

[atbigdata@hadoop102 hadoop-3.1.3]$ hadoop fs -moveFromLocal ./kongming.txt /sanguo/shuguo

2)-copyFromLocal:从本地文件系统中拷贝文件到HDFS路径去

[atbigdata@hadoop102 hadoop-3.1.3]$ hadoop fs -copyFromLocal README.txt /

3)-appendToFile:追加一个文件到已经存在的文件末尾

[atbigdata@hadoop102 hadoop-3.1.3]$ touch liubei.txt

[atbigdata@hadoop102 hadoop-3.1.3]$ vi liubei.txt

输入

san gu mao lu

[atbigdata@hadoop102 hadoop-3.1.3]$ hadoop fs -appendToFile liubei.txt /sanguo/shuguo/kongming.txt

4)-put:等同于copyFromLocal

[atbigdata@hadoop102 hadoop-3.1.3]$ hadoop fs -put ./liubei.txt /user/atbigdata/test/

1.3.3 下载

1)-copyToLocal:从HDFS拷贝到本地

[atbigdata@hadoop102 hadoop-3.1.3]$ hadoop fs -copyToLocal /sanguo/shuguo/kongming.txt ./

2)-get:等同于copyToLocal,就是从HDFS下载文件到本地

[atbigdata@hadoop102 hadoop-3.1.3]$ hadoop fs -get /sanguo/shuguo/kongming.txt ./

3)-getmerge:合并下载多个文件,比如HDFS的目录 /user/atbigdata/test下有多个文件:log.1, log.2,log.3,…

[atbigdata@hadoop102 hadoop-3.1.3]$ hadoop fs -getmerge /user/atbigdata/test/* ./zaiyiqi.txt

1.3.4 HDFS直接操作

1)-ls: 显示目录信息

```scala

[atbigdata@hadoop102 hadoop-3.1.3]$ hadoop fs -ls /

2)-mkdir:在HDFS上创建目录

[atbigdata@hadoop102 hadoop-3.1.3]$ hadoop fs -mkdir -p /sanguo/shuguo

3)-cat:显示文件内容

```scala

[atbigdata@hadoop102 hadoop-3.1.3]$ hadoop fs -cat /sanguo/shuguo/kongming.txt

4)-chgrp 、-chmod、-chown:Linux文件系统中的用法一样,修改文件所属权限

[atbigdata@hadoop102 hadoop-3.1.3]$ hadoop fs -chmod 666 /sanguo/shuguo/kongming.txt

[atbigdata@hadoop102 hadoop-3.1.3]$ hadoop fs -chown atbigdata:atbigdata /sanguo/shuguo/kongming.txt

5)-cp :从HDFS的一个路径拷贝到HDFS的另一个路径

[atbigdata@hadoop102 hadoop-3.1.3]$ hadoop fs -cp /sanguo/shuguo/kongming.txt /zhuge.txt

6)-mv:在HDFS目录中移动文件

[atbigdata@hadoop102 hadoop-3.1.3]$ hadoop fs -mv /zhuge.txt /sanguo/shuguo/

7)-tail:显示一个文件的末尾1kb的数据

[atbigdata@hadoop102 hadoop-3.1.3]$ hadoop fs -tail /sanguo/shuguo/kongming.txt

8)-rm:删除文件或文件夹

[atbigdata@hadoop102 hadoop-3.1.3]$ hadoop fs -rm /user/atbigdata/test/jinlian2.txt

9)-rmdir:删除空目录

[atbigdata@hadoop102 hadoop-3.1.3]$ hadoop fs -mkdir /test

[atbigdata@hadoop102 hadoop-3.1.3]$ hadoop fs -rmdir /test

10)-du统计文件夹的大小信息

[atbigdata@hadoop102 hadoop-3.1.3]$ hadoop fs -du -s -h /user/atbigdata/test

2.7 K /user/atbigdata/test

[atbigdata@hadoop102 hadoop-3.1.3]$ hadoop fs -du -h /user/atbigdata/test

1.3 K /user/atbigdata/test/README.txt

15 /user/atbigdata/test/jinlian.txt

1.4 K /user/atbigdata/test/zaiyiqi.txt

11)-setrep:设置HDFS中文件的副本数量

[atbigdata@hadoop102 hadoop-3.1.3]$ hadoop fs -setrep 10 /sanguo/shuguo/kongming.txt

这里设置的副本数只是记录在NameNode的元数据中,是否真的会有这么多副本,还得看DataNode的数量。因为目前只有3台设备,最多也就3个副本,只有节点数的增加到10台时,副本数才能达到10。

第二章 HDFS客户端操作

2.1 准备Windows关于Hadoop的开发环境

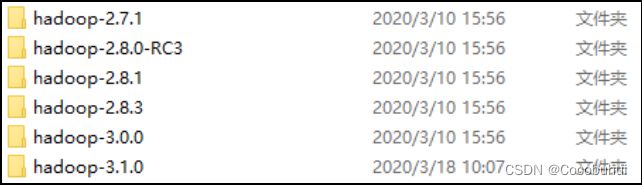

选择Hadoop-3.1.0,拷贝到其他地方(比如d:)。

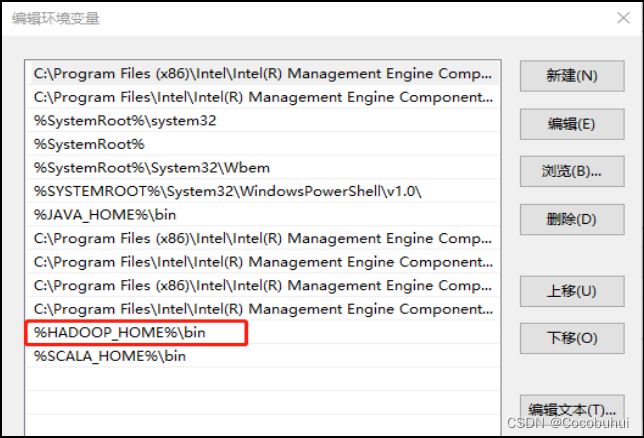

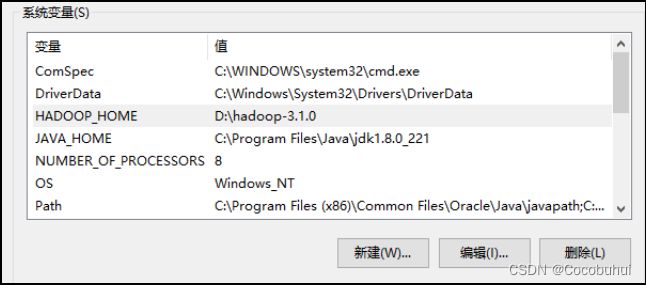

2)配置HADOOP_HOME环境变量。

4)创建一个Maven工程HdfsClientDemo,并导入相应的依赖坐标+日志添加

<dependencies>

<dependency>

<groupId>junit</groupId>

<artifactId>junit</artifactId>

<version>4.12</version>

</dependency>

<dependency>

<groupId>org.apache.logging.log4j</groupId>

<artifactId>log4j-slf4j-impl</artifactId>

<version>2.12.0</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-client</artifactId>

<version>3.1.3</version>

</dependency>

</dependencies>

在项目的src/main/resources目录下,新建一个文件,命名为“log4j2.xml”,在文件中填入

<?xml version="1.0" encoding="UTF-8"?>

<Configuration status="error" strict="true" name="XMLConfig">

<Appenders>

<!-- 类型名为Console,名称为必须属性 -->

<Appender type="Console" name="STDOUT">

<!-- 布局为PatternLayout的方式,

输出样式为[INFO] [2018-01-22 17:34:01][org.test.Console]I'm here -->

<Layout type="PatternLayout"

pattern="[%p] [%d{yyyy-MM-dd HH:mm:ss}][%c{10}]%m%n" />

</Appender>

</Appenders>

<Loggers>

<!-- 可加性为false -->

<Logger name="test" level="info" additivity="false">

<AppenderRef ref="STDOUT" />

</Logger>

<!-- root loggerConfig设置 -->

<Root level="info">

<AppenderRef ref="STDOUT" />

</Root>

</Loggers>

</Configuration>

5)创建包名:com.atbigdata.hdfs

6)创建HdfsClient类

public class HdfsClient{

@Test

public void testMkdirs() throws IOException, InterruptedException, URISyntaxException{

// 1 获取文件系统

Configuration configuration = new Configuration();

// 配置在集群上运行

// configuration.set("fs.defaultFS", "hdfs://hadoop102:9820");

// FileSystem fs = FileSystem.get(configuration);

FileSystem fs = FileSystem.get(new URI("hdfs://hadoop102:9820"), configuration, "atbigdata");

// 2 创建目录

fs.mkdirs(new Path("/1108/daxian/banzhang"));

// 3 关闭资源

fs.close();

}

}

7)执行程序

运行时需要配置用户名称

客户端去操作HDFS时,是有一个用户身份的。默认情况下,HDFS客户端API会从JVM中获取一个参数来作为自己的用户身份:-DHADOOP_USER_NAME=atbigdata,atbigdata为用户名称。

2.2 HDFS的API操作

2.2.1 HDFS文件上传(测试参数优先级)

1)编写源代码

@Test

public void testCopyFromLocalFile() throws IOException, InterruptedException, URISyntaxException {

// 1 获取文件系统

Configuration configuration = new Configuration();

configuration.set("dfs.replication", "2");

FileSystem fs = FileSystem.get(new URI("hdfs://hadoop102:8020"), configuration, "atbigdata");

// 2 上传文件

fs.copyFromLocalFile(new Path("e:/banzhang.txt"), new Path("/banzhang.txt"));

// 3 关闭资源

fs.close();

System.out.println("over");

}

2)将hdfs-site.xml拷贝到项目的根目录下

<?xml version="1.0" encoding="UTF-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<configuration>

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

</configuration>

3)参数优先级

参数优先级排序:(1)客户端代码中设置的值 >(2)ClassPath下的用户自定义配置文件 >(3)然后是服务器的自定义配置(xxx-site.xml) >(4)服务器的默认配置(xxx-default.xml)

2.2.2 HDFS文件下载

@Test

public void testCopyToLocalFile() throws IOException, InterruptedException, URISyntaxException{

// 1 获取文件系统

Configuration configuration = new Configuration();

FileSystem fs = FileSystem.get(new URI("hdfs://hadoop102:9820"), configuration, "atbigdata");

// 2 执行下载操作

// boolean delSrc 指是否将原文件删除

// Path src 指要下载的文件路径

// Path dst 指将文件下载到的路径

// boolean useRawLocalFileSystem 是否开启文件校验

fs.copyToLocalFile(false, new Path("/banzhang.txt"), new Path("e:/banhua.txt"), true);

// 3 关闭资源

fs.close();

}

2.2.3 HDFS删除文件和目录

@Test

public void testDelete() throws IOException, InterruptedException, URISyntaxException{

// 1 获取文件系统

Configuration configuration = new Configuration();

FileSystem fs = FileSystem.get(new URI("hdfs://hadoop102:9820"), configuration, "atbigdata");

// 2 执行删除

fs.delete(new Path("/0508/"), true);

// 3 关闭资源

fs.close();

}

2.2.4 HDFS文件更名和移动

@Test

public void testRename() throws IOException, InterruptedException, URISyntaxException{

// 1 获取文件系统

Configuration configuration = new Configuration();

FileSystem fs = FileSystem.get(new URI("hdfs://hadoop102:9820"), configuration, "atbigdata");

// 2 修改文件名称

fs.rename(new Path("/banzhang.txt"), new Path("/banhua.txt"));

// 3 关闭资源

fs.close();

}

2.2.5 HDFS文件详情查看

查看文件名称、权限、长度、块信息

@Test

public void testListFiles() throws IOException, InterruptedException, URISyntaxException{

// 1获取文件系统

Configuration configuration = new Configuration();

FileSystem fs = FileSystem.get(new URI("hdfs://hadoop102:9820"), configuration, "atbigdata");

// 2 获取文件详情

RemoteIterator<LocatedFileStatus> listFiles = fs.listFiles(new Path("/"), true);

while(listFiles.hasNext()){

LocatedFileStatus status = listFiles.next();

// 输出详情

// 文件名称

System.out.println(status.getPath().getName());

// 长度

System.out.println(status.getLen());

// 权限

System.out.println(status.getPermission());

// 分组

System.out.println(status.getGroup());

// 获取存储的块信息

BlockLocation[] blockLocations = status.getBlockLocations();

for (BlockLocation blockLocation : blockLocations) {

// 获取块存储的主机节点

String[] hosts = blockLocation.getHosts();

for (String host : hosts) {

System.out.println(host);

}

}

System.out.println("-----------班长的分割线----------");

}

// 3 关闭资源

fs.close();

}

2.2.6 HDFS文件和文件夹判断

@Test

public void testListStatus() throws IOException, InterruptedException, URISyntaxException{

// 1 获取文件配置信息

Configuration configuration = new Configuration();

FileSystem fs = FileSystem.get(new URI("hdfs://hadoop102:9820"), configuration, "atbigdata");

// 2 判断是文件还是文件夹

FileStatus[] listStatus = fs.listStatus(new Path("/"));

for (FileStatus fileStatus : listStatus) {

// 如果是文件

if (fileStatus.isFile()) {

System.out.println("f:"+fileStatus.getPath().getName());

}else {

System.out.println("d:"+fileStatus.getPath().getName());

}

}

// 3 关闭资源

fs.close();

}